If your AI chatbot doesn't reflect what's changed on the web, users notice.

From pricing updates to policy changes and product docs, websites evolve constantly - and if your retrieval or chatbot index doesn’t keep up, answers drift, hallucinations increase, and trust erodes.

In this guide, we’ll show how to use Crawler APIs to continuously monitor website changes and keep AI chatbots and RAG systems fresh - without brittle cron scripts, manual URL lists, or expensive re-scrapes.

Key Takeaways

Use a Crawler API to discover URLs, detect content changes, and trigger targeted re-indexing for AI chatbots and RAG systems.

- Prefer Crawler API for domain-wide discovery and scheduled monitoring

- Detect changes via normalized content hashing (markdown/clean HTML) instead of raw HTML

- Use webhooks to stream page events to a queue for diffing and re-embedding

- Limit scope with depth rules, sitemaps, robots, and domain allowlists

- Re-index selectively: only re-embed changed chunks to control costs

- Add a Scraper API for high-priority pages that need near-real-time checks

What is a Crawler API?

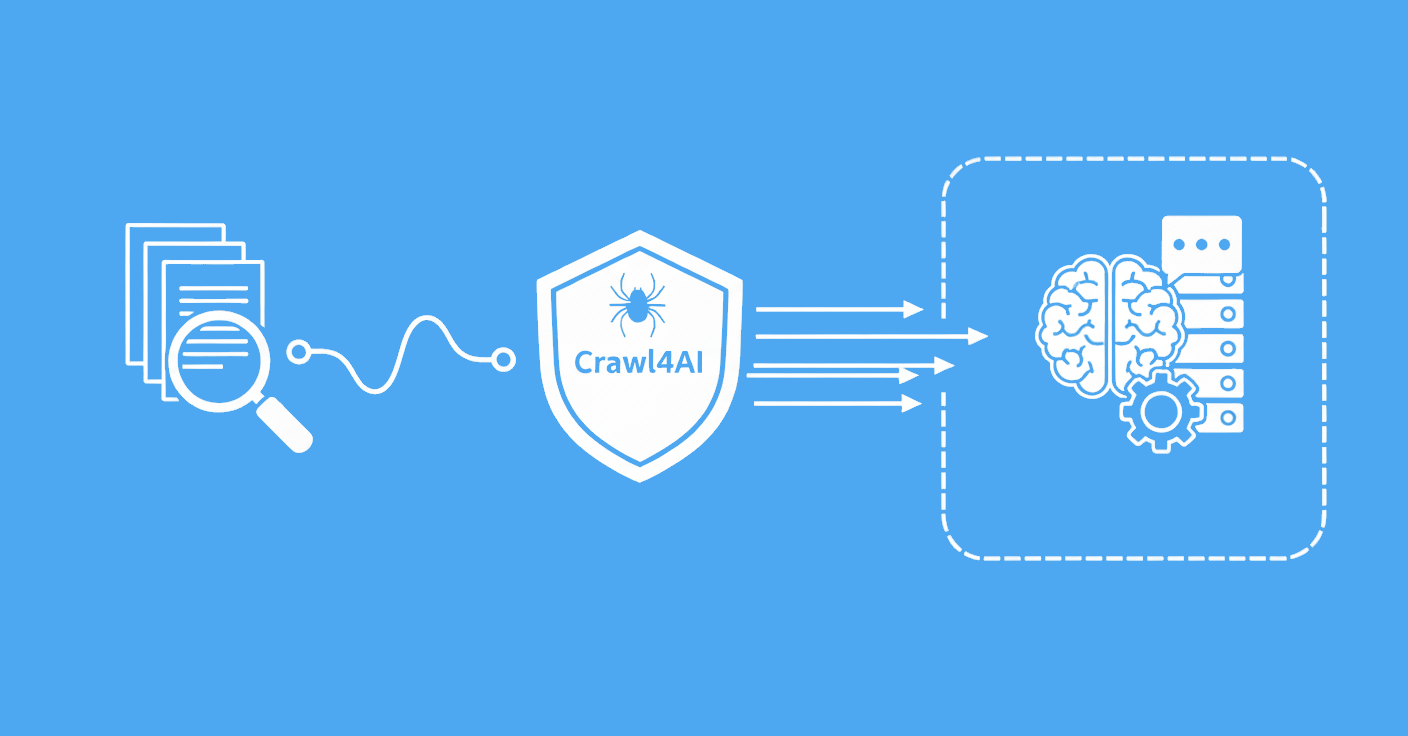

A Crawler API automates the process of discovering and visiting multiple pages across a website, eliminating the need for manually maintained URL lists.

Instead of hard-coding URLs, you provide seed starting points and configure rules - such as depth limits, allowed domains, and politeness settings - and the crawler automatically follows links, extracts content, and returns structured results with metadata.

Think of it as an intelligent bot that respects the site's policies while systematically gathering information at scale.

Use a Crawler API when you need broad, ongoing coverage of a site or documentation section without the maintenance burden of manual URL management. The market offers many crawler solutions, each with different trade-offs in cost, speed, infrastructure complexity, and ease of integration.

Why monitor website changes for AI chatbots?

Chatbots and RAG (Retrieval-Augmented Generation) systems are only as good as the freshness of their underlying knowledge. The web is constantly evolving - documentation gets updated, pricing changes, policies shift, and new features launch.

If your chatbot's index doesn't keep pace with these changes, it becomes a liability: outdated answers erode user trust, increase hallucinations, and ultimately damage your product's credibility.

Continuous monitoring bridges this gap by automatically detecting what's changed and triggering intelligent re-indexing.

Key benefits of automated monitoring:

- Accuracy at scale: Reduce drift between source pages and model responses. Keep answers synchronized with reality rather than months-old cached versions

- Cost efficiency: Re-embed only the chunks that actually changed, not entire pages. Embedding costs then scale with the amount of changed content rather than with the size of the site

- Speed to update: React in near real-time with webhook-driven pipelines instead of hourly batch jobs. Critical changes propagate to your chatbot in minutes, not hours

- Observability: Track change frequency and content health across all your knowledge sources. Spot stale sources before they impact user experience

Automated monitoring transforms the relationship between your knowledge sources and your AI system. Instead of deploying a chatbot and hoping it stays relevant, you gain visibility into what's changing and respond intelligently.

Crawler API vs Scraper API for Monitoring

Both Crawler and Scraper APIs support website change monitoring, but they address different needs. Selecting the right tool for each job improves coverage, cost control, and responsiveness.

Scraper API is suitable for targeted, high-frequency monitoring of specific, stable URLs. Use it when you need:

- Frequent checks for a small set of critical pages (pricing, policies, key documents)

- Precise control over request timing, headers, and custom parsing

- Per-request reliability and latency measurements

- Screenshots or element-level selection to detect visual changes

Typical uses include tracking competitor prices, monitoring a particular policy page, or capturing error messages on key flows.

Crawler API is designed for broader discovery and periodic monitoring. Use it when you need:

- Automatic URL discovery within a site or section without manual lists

- Scheduled scans (daily or weekly) rather than minute-level polling

- Scope management (depth, domain, sitemaps) to keep crawls focused

- Polite behavior that respects server load and robots.txt

Typical uses include monitoring documentation sites for updates, watching a product directory, or keeping a knowledge base current.

Many teams combine both approaches: use a Crawler API for wide coverage and discovery, then pair it with a Scraper API for a small set of URLs that require near-real-time checks. This pairing balances coverage, cost, and latency.

Effective Change Detection Strategies

Accurate change detection depends on comparing the right content. Raw HTML tends to be noisy: navigation elements, timestamps, ads, and cookie banners can all trigger false positives. Focusing on normalized, semantic content helps capture meaningful changes while filtering out presentation noise.

The following strategies reduce false positives and improve detection accuracy:

HTTP cache headers (ETag / Last-Modified)

Use server-provided cache hints to skip unchanged resources. When a page returns the same ETag or an unchanged Last-Modified timestamp, there is no need to re-process it. This approach reduces crawl load and speeds up detection on sites with good caching practices.
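As a rough illustration of the idea above, the sketch below sends the stored validators back to the server as `If-None-Match` and `If-Modified-Since` headers and treats a `304 Not Modified` response as "unchanged". It uses only the Python standard library; the `CacheEntry` record and the function names are hypothetical, and a real pipeline would persist the validators next to its content hashes.

```python
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Optional


@dataclass
class CacheEntry:
    """Validators saved from the previous fetch of a URL (hypothetical record)."""
    etag: Optional[str] = None
    last_modified: Optional[str] = None


def conditional_headers(entry: CacheEntry) -> dict:
    """Build request headers that let the server answer 304 Not Modified."""
    headers = {}
    if entry.etag:
        headers["If-None-Match"] = entry.etag
    if entry.last_modified:
        headers["If-Modified-Since"] = entry.last_modified
    return headers


def fetch_if_changed(url: str, entry: CacheEntry, timeout: float = 10.0):
    """Return (body, new_entry) if the page changed, or (None, entry) on a 304."""
    request = urllib.request.Request(url, headers=conditional_headers(entry))
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            new_entry = CacheEntry(
                etag=response.headers.get("ETag"),
                last_modified=response.headers.get("Last-Modified"),
            )
            return response.read(), new_entry
    except urllib.error.HTTPError as err:
        if err.code == 304:  # unchanged: skip hashing and re-embedding
            return None, entry
        raise
```

Not every server sends reliable validators, so this check works best as a cheap first filter in front of content hashing rather than as a replacement for it.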

Sitemap-based discovery

Parse sitemap.xml and check the lastmod field to identify recently updated pages. Prioritizing these pages over unchanged ones minimizes unnecessary processing. Combining sitemap signals with content hashing provides more reliable detection.
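A minimal sketch of this filter, assuming a standard `urlset` sitemap and a stored timestamp for the last crawl, might look like the following. The helper names and the sample sitemap are made up for illustration. Entries with a missing or unparseable `lastmod` are kept, so they still go through content hashing.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_lastmod(value: str) -> datetime:
    """Parse a date-only or full ISO timestamp into an aware UTC datetime."""
    parsed = datetime.fromisoformat(value.strip().replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


def recently_modified(sitemap_xml: str, since: datetime) -> list[str]:
    """Return URLs whose <lastmod> is newer than `since`."""
    root = ET.fromstring(sitemap_xml)
    urls = []
    for entry in root.findall("sm:url", SITEMAP_NS):
        loc = entry.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        lastmod = entry.findtext("sm:lastmod", default="", namespaces=SITEMAP_NS)
        if not loc:
            continue
        try:
            changed = parse_lastmod(lastmod) > since
        except ValueError:
            changed = True  # missing or unparseable lastmod: let hashing decide
        if changed:
            urls.append(loc)
    return urls


# Hypothetical sitemap: /a changed after the last crawl, /b did not, /c has no lastmod.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://docs.example.com/a</loc><lastmod>2024-05-02</lastmod></url>
  <url><loc>https://docs.example.com/b</loc><lastmod>2024-04-01T08:00:00Z</lastmod></url>
  <url><loc>https://docs.example.com/c</loc></url>
</urlset>"""

last_crawl = datetime(2024, 5, 1, tzinfo=timezone.utc)
print(recently_modified(SAMPLE, last_crawl))
# → ['https://docs.example.com/a', 'https://docs.example.com/c']
```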

Blocklists and allowlists

Filter out query parameters, breadcrumb variations, and paths that carry no semantic value. Regex patterns or path filters can restrict crawls to relevant sections (e.g., /docs/, /blog/, /pricing/) while ignoring noise like /search?q=, /login, or session IDs.
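One possible way to express such rules is a small canonicalization function applied to every discovered URL before it is crawled or hashed. The host allowlist, path patterns, and parameter names below are illustrative assumptions, not a recommended default set.

```python
import re
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

ALLOWED_HOSTS = {"docs.example.com"}  # hypothetical domain allowlist
ALLOW_PATHS = re.compile(r"^/(docs|blog|pricing)(/|$)")
BLOCK_PATHS = re.compile(r"^/(search|login)(/|$)")
DROP_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sid"}


def canonicalize(url: str) -> Optional[str]:
    """Return a canonical URL if it is in scope, or None if it should be skipped."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    path = parts.path or "/"
    if host not in ALLOWED_HOSTS:
        return None
    if BLOCK_PATHS.match(path) or not ALLOW_PATHS.match(path):
        return None
    # Drop tracking/session parameters and sort the rest so equivalent URLs collapse.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in DROP_PARAMS
    )
    # Strip the fragment and any trailing slash (except on the root path).
    path = path.rstrip("/") or "/"
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, urlencode(query), ""))


print(canonicalize("https://docs.example.com/docs/intro/?utm_source=x&page=2#top"))
# → https://docs.example.com/docs/intro?page=2
print(canonicalize("https://docs.example.com/search?q=foo"))
# → None
```

Storing fingerprints under the canonical URL keeps one page from being tracked several times under slightly different addresses.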

Combining these techniques produces a detection system that identifies real changes without excessive false positives.

Building a Change Monitoring Pipeline

A practical monitoring pipeline connects the Crawler API to your chatbot's knowledge base through a series of steps. The goal is to detect changes early, process them efficiently, and update only what has changed.

Pipeline overview:

- Schedule crawl jobs: Run crawls on a regular cadence (daily or hourly) with depth, rate, and domain limits to control scope and cost.

- Receive page events: Each crawled page triggers a webhook containing content (markdown, clean HTML) and metadata such as URL and timestamp.

- Compute content hashes: A background worker normalizes the content and generates a hash. Comparing this hash to the previously stored value reveals whether the page changed.

- Diff and re-chunk: When a change is detected, the worker generates a minimal diff and splits the updated content into chunks.

- Re-embed selectively: Only the affected chunks are sent to the embedding model and upserted into the vector store.

- Keep indexes fresh: The chatbot retrieves up-to-date chunks without requiring a full re-index.

This pipeline ensures that your chatbot stays synchronized with source content while minimizing compute and embedding costs. By processing only what has changed, you avoid the overhead of full re-crawls and keep response latency predictable.
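The "diff and re-chunk" and "re-embed selectively" steps can be sketched with content-addressed chunk IDs. This is one simple approach under stated assumptions: chunks are split on blank lines, and a chunk's ID is the SHA-256 of its text. The function names are hypothetical, and the embedding and vector-store calls are left out because they depend on your stack.

```python
import hashlib


def split_chunks(markdown: str) -> list[str]:
    """Split on blank lines so each paragraph or block becomes one chunk."""
    return [block.strip() for block in markdown.split("\n\n") if block.strip()]


def chunk_id(text: str) -> str:
    """Content-addressed ID: identical text always maps to the same ID."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(old_markdown: str, new_markdown: str):
    """Return (chunks to embed and upsert, stale chunk IDs to delete)."""
    old_ids = {chunk_id(chunk) for chunk in split_chunks(old_markdown)}
    new_chunks = {chunk_id(chunk): chunk for chunk in split_chunks(new_markdown)}
    to_embed = [text for cid, text in new_chunks.items() if cid not in old_ids]
    to_delete = sorted(old_ids - set(new_chunks))
    return to_embed, to_delete


old_page = "# Pricing\n\nPro plan: $20/month\n\nContact sales for enterprise."
new_page = "# Pricing\n\nPro plan: $25/month\n\nContact sales for enterprise."

to_embed, to_delete = plan_reindex(old_page, new_page)
print(to_embed)        # → ['Pro plan: $25/month']
print(len(to_delete))  # → 1
```

Only one of the three chunks is sent to the embedding model here, and the single stale chunk ID is removed from the vector store.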

Monitoring with Scrapfly Crawler API

Scrapfly's managed Crawler API handles proxies, anti-bot protection, and content extraction, so you can focus on change detection rather than infrastructure.

Scrapfly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without being blocked!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

Check out Scrapfly's web scraping API for all the details.

The following example demonstrates a basic monitoring workflow using the Scrapfly Crawler API.

```python
import hashlib

from scrapfly import ScrapflyClient, CrawlConfig

scrapfly = ScrapflyClient(key="YOUR_SCRAPFLY_KEY")

# Placeholder fingerprint store; swap in a database or key-value store in production.
_fingerprints: dict[str, str] = {}


def load_previous_fp(url: str) -> str | None:
    return _fingerprints.get(url)


def save_fp(url: str, fp: str) -> None:
    _fingerprints[url] = fp


def content_fingerprint(item: dict) -> str:
    """Stable hash from normalized content formats."""
    # Prefer markdown; fall back to clean_html or text
    body = (
        item.get("markdown")
        or item.get("clean_html")
        or item.get("text")
        or ""
    )
    # Strip whitespace and drop blank lines so layout-only edits don't change the hash
    normalized = "\n".join(
        line.strip() for line in body.splitlines() if line.strip()
    )
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# 1) Start a crawl job
crawl = scrapfly.crawl(CrawlConfig(
    url="https://docs.example.com",
    page_limit=200,  # safety cap
    max_depth=3,  # stay within docs tree
    content_formats=["markdown", "clean_html", "text"],
    asp=True,  # Anti Scraping Protection
    delay=1.0,  # politeness
))
print("Started crawl:", crawl.crawl_uuid)

# 2) Poll for status (or use webhooks in production)
status = scrapfly.get_crawl_status(crawl.crawl_uuid)
print(f"Status: {status.status}, pages: {status.pages_scraped}")

# 3) Retrieve result pages and compute fingerprints
results = scrapfly.get_crawl_results(crawl.crawl_uuid)

changes = []
for item in results.items:
    url = item.url
    fp = content_fingerprint(item.content)
    # Compare with the persisted fingerprint; a new or different hash means a change
    old = load_previous_fp(url)
    if old != fp:
        save_fp(url, fp)
        changes.append(url)

print(f"Detected {len(changes)} changed pages")
```

In production environments, webhooks are generally preferred over polling. Streaming page events to a message queue (such as SQS, Pub/Sub, or RabbitMQ) allows parallel workers to process changes as they arrive, improving throughput and reducing latency.
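The sketch below shows the general shape of that hand-off using only the standard library. The payload fields (`url`, `markdown`) are an assumption for illustration, not a documented webhook schema, and the in-memory `queue.Queue` and dictionary stand in for a real message queue and fingerprint store.

```python
import hashlib
import json
import queue

page_events = queue.Queue()        # stand-in for SQS, Pub/Sub, or RabbitMQ
fingerprints: dict[str, str] = {}  # stand-in for a database or KV store


def receive_webhook(raw_body: bytes) -> int:
    """Validate a webhook body, enqueue it, and return the HTTP status to send back."""
    try:
        event = json.loads(raw_body)
    except ValueError:  # covers invalid JSON and invalid UTF-8
        return 400
    if not isinstance(event, dict) or "url" not in event or "markdown" not in event:
        return 400
    page_events.put(event)  # no heavy work here; acknowledge quickly
    return 202


def process_pending() -> list[str]:
    """Drain the queue and return the URLs whose content hash changed."""
    changed = []
    while True:
        try:
            event = page_events.get_nowait()
        except queue.Empty:
            break
        digest = hashlib.sha256(event["markdown"].encode("utf-8")).hexdigest()
        if fingerprints.get(event["url"]) != digest:
            fingerprints[event["url"]] = digest
            changed.append(event["url"])  # hand off to diffing and re-embedding
    return changed


body = json.dumps({"url": "https://docs.example.com/a", "markdown": "Hello"}).encode()
print(receive_webhook(body))  # → 202
print(process_pending())      # → ['https://docs.example.com/a']
print(receive_webhook(body))  # → 202
print(process_pending())      # → []
```

Keeping the receiver this thin means a slow embedding call never delays the acknowledgement, and the worker side can be scaled out independently.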

When to Use Scraper API Instead

While the Crawler API handles broad discovery and scheduled monitoring, some scenarios call for a Scraper API instead. Consider using a Scraper API when:

- High-frequency checks are required: Monitoring a small set of URLs every few minutes rather than on a daily or weekly schedule.

- Custom parsing or element targeting is needed: Extracting specific elements, running custom scripts, or applying fine-grained request configuration.

- Visual change detection matters: Capturing screenshots to identify layout or design changes that text-based diffing would miss.

For most monitoring setups, combining both tools provides the best balance: use the Crawler API for wide coverage and the Scraper API for latency-sensitive, high-priority pages.

FAQ

Here are answers to common questions about using Crawler APIs for monitoring website changes:

How often should I crawl my sources?

It depends on update frequency and tolerance for staleness. High-change sites (pricing, documentation) benefit from daily or hourly crawls. Static content can use weekly schedules. Use sitemap lastmod values, Last-Modified headers, and ETags to avoid re-crawling unchanged pages and control costs.

Should I use Crawler API or Scraper API for monitoring?

Use Crawler API for broad, ongoing site coverage with automatic URL discovery and politeness controls. Use Scraper API for known, critical pages that need high-frequency, real-time checks. Most teams combine both: Crawler for wide coverage, Scraper for latency-sensitive pages.

What if websites block my crawler?

Respect robots.txt, use appropriate User-Agent headers, implement politeness delays between requests, and honor rate-limit headers. If blocked despite compliance, use residential proxies or managed crawling services. Always prioritize ethical crawling over speed.
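If you run any fetches yourself, Python's standard `urllib.robotparser` can check these rules before a request goes out. The snippet below parses an inline robots.txt so it runs without network access; the user agent name and the rules are made up for illustration. Against a live site you would call `set_url()` and `read()` instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-monitor-bot"  # hypothetical crawler name

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url: str) -> bool:
    """True if robots.txt permits this user agent to fetch the URL."""
    return parser.can_fetch(USER_AGENT, url)


def polite_delay(default: float = 1.0) -> float:
    """Seconds to wait between requests: the site's Crawl-delay, else a default."""
    delay = parser.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default


print(allowed("https://docs.example.com/docs/intro"))   # → True
print(allowed("https://docs.example.com/admin/users"))  # → False
print(polite_delay())                                   # → 2.0
```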

Summary

In this guide, we explored how Crawler APIs enable automated website change monitoring for AI chatbots. By continuously detecting content updates and triggering selective re-indexing, you can keep your chatbot's knowledge fresh without expensive full re-crawls or manual URL management.

The result is a monitoring system that scales with your knowledge base, responds to changes in near real time, and keeps your AI chatbot trustworthy and accurate. Your users will notice the difference.