Your competitor just dropped their prices by 15%. By the time you notice while manually checking their site, you've already lost dozens of sales.

Price monitoring shouldn't work this way. Modern e-commerce moves too fast for manual checks or weekly CSV downloads. You need automated systems that track competitor prices across thousands of products and alert you the moment something changes. While you can build a basic price tracker from scratch, scaling to enterprise-level monitoring requires different tools.

The problem? Most e-commerce sites are built to stop exactly this kind of monitoring. Cloudflare protection, dynamic pricing that varies by location, JavaScript-heavy product pages, and aggressive rate limiting make price scraping incredibly difficult. That's where the Crawler API approach changes everything.

Key Takeaways

Build production-ready competitor price monitoring systems with automated crawling, anti-bot bypass, and real-time alerts for e-commerce intelligence.

- Use Crawler API to recursively discover and monitor competitor product catalogs automatically

- Bypass Cloudflare and bot detection on major e-commerce sites with ASP configuration

- Handle dynamic pricing across multiple regions using proxy pool routing

- Process thousands of products with URL filtering and extraction rules at scale

- Build data pipelines that normalize prices and trigger alerts when competitors change pricing

- Integrate with repricing tools to automatically adjust your prices based on market conditions

Why Price Monitoring Actually Matters

Price is still the top factor in purchase decisions. Studies show that 60% of online shoppers compare prices before buying, and 87% will leave your site if they find a better deal elsewhere.

But here's what most merchants miss: competitor pricing changes throughout the day. That Amazon listing you checked this morning? Different price at noon. That direct competitor running a flash sale? You didn't even know about it.

Manual price checking scales terribly. Even with a small catalog of 500 products across 3 competitors, you're looking at 1,500 URLs to monitor. Do this daily and you've created a full-time job that still misses most price changes.

Automated price monitoring solves three business problems:

Margin protection: Know immediately when competitors undercut you, so you can decide whether to match, wait, or highlight other value propositions.

Revenue optimization: Identify pricing opportunities where you're significantly cheaper than competitors. You might be leaving money on the table.

Market intelligence: Track competitor promotional calendars, seasonal pricing patterns, and inventory levels to inform your own strategy.

The ROI is straightforward. If price monitoring helps you avoid losing 50 sales per month at $80 average order value, that's $4,000 in revenue saved each month (50 × $80). Most automated monitoring systems cost a fraction of that.

For manufacturers and brands distributing through retailers, MAP (Minimum Advertised Price) monitoring ensures resellers comply with pricing agreements. For retailers, competitor monitoring focuses on staying competitive in your market segment.

The Real Challenges Nobody Talks About

Building a price monitoring system sounds simple until you actually try it. Here's what breaks most scrapers:

Anti-Bot Protection on E-commerce Sites

Major retailers and marketplaces don't want you monitoring their prices. They use sophisticated protection:

- Cloudflare on virtually every major e-commerce site

- PerimeterX and DataDome on enterprise retailers

- Custom fingerprinting that detects automated browsers

- Rate limiting that blocks IPs after 10-20 requests

Your standard Python scraper with requests and BeautifulSoup? Blocked before you scrape the first product page. Check out our complete guide on avoiding web scraping blocks to understand these protection mechanisms.

Scale and Resource Management

Monitoring 1,000 products across 5 competitors means 5,000 URLs to check. Do this daily and you're making 150,000 requests per month. Traditional approaches run into walls:

- Single-threaded scrapers take hours to complete

- Running headless browsers for everything drains server resources

- Managing proxy rotation and failure retries adds complexity

- Storing and versioning price history requires database design
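The request volume above is plain multiplication, so it is worth estimating before you commit to a schedule. Here is a minimal sketch; the function name and parameters are illustrative and not part of any API:

```python
def monthly_requests(products: int, competitors: int, checks_per_day: int, days: int = 30) -> int:
    """Estimate how many page requests a monitoring schedule generates per month."""
    urls = products * competitors
    return urls * checks_per_day * days

# 1,000 products across 5 competitors, checked once a day
daily = monthly_requests(1000, 5, checks_per_day=1)

# The same catalog checked every hour
hourly = monthly_requests(1000, 5, checks_per_day=24)

print(f"Daily checks: {daily:,} requests/month")
print(f"Hourly checks: {hourly:,} requests/month")
```

Moving the whole catalog from daily to hourly checks multiplies the volume by 24, which is why check frequency is usually decided per product rather than globally.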

Data Freshness vs Cost

Real-time monitoring sounds great until you see the infrastructure bill. Running browsers 24/7 to catch price changes costs serious money. You need a strategy that balances:

- How often to check prices (hourly? daily? on-demand?)

- Which products need frequent monitoring vs periodic checks

- When to use expensive residential proxies vs standard datacenter IPs

Most tutorials ignore these tradeoffs and show you how to scrape 10 products. That doesn't help when you need to scale to thousands.

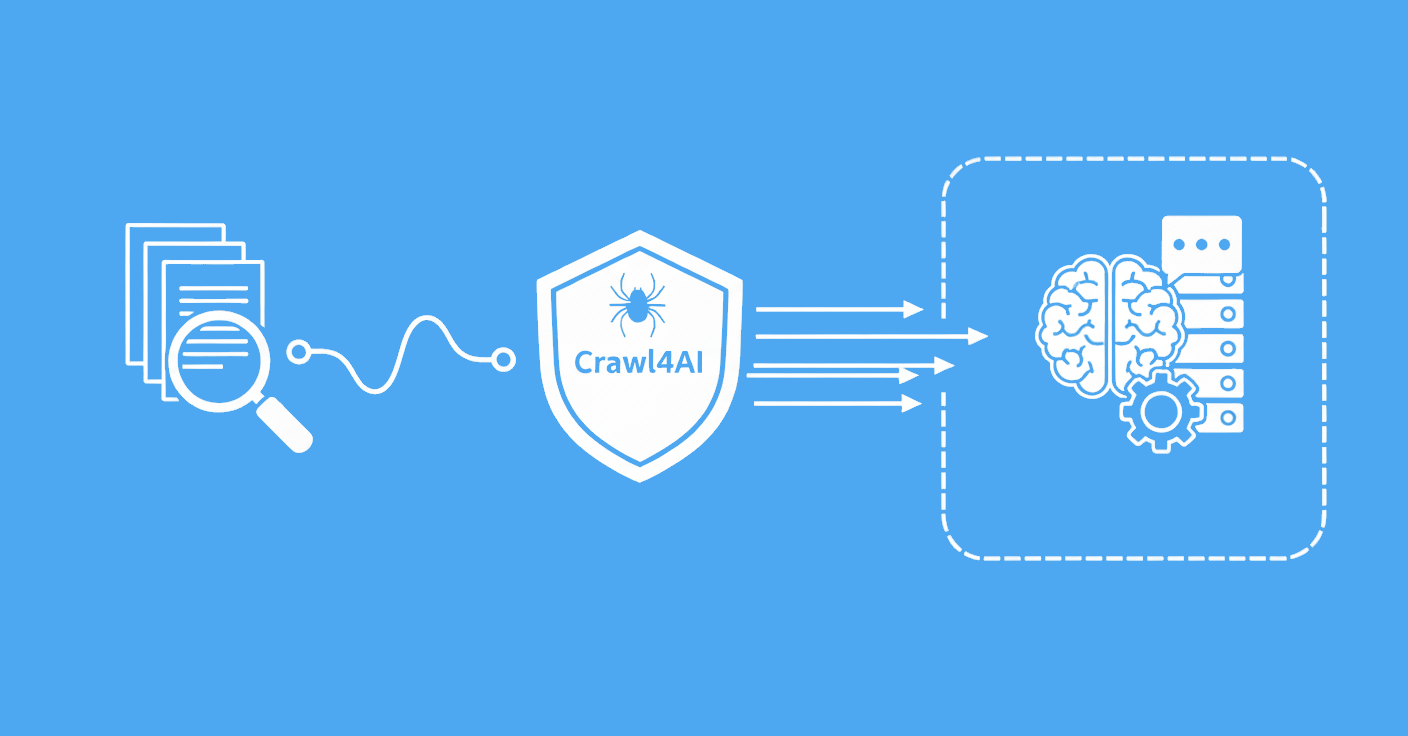

Crawler API Architecture for Price Monitoring

Instead of managing URLs manually, the Crawler API discovers and monitors product catalogs automatically. Here's how it works for price monitoring:

Basic Setup

Start with a simple crawler configuration pointed at a competitor's product category:

import requests

API_KEY = "YOUR_API_KEY"
BASE_URL = "https://api.scrapfly.io"

# Start monitoring a competitor's product category
response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://competitor.com/products/electronics",
        "page_limit": 500,  # Monitor up to 500 products
        "max_depth": 2,  # Category page + product pages
        "include_only_paths": ["/products/*"],  # Stay in product section
        "content_formats": ["markdown", "page_metadata"],
        "asp": True,  # Enable anti-scraping protection
        "cache": True,  # Use cache to reduce costs
        "cache_ttl": 3600,  # Cache valid for 1 hour
    },
)

crawler_data = response.json()
crawler_uuid = crawler_data["uuid"]
print(f"Started crawler: {crawler_uuid}")

This single call sets up recursive crawling that:

- Discovers all product links from the category page automatically

- Follows pagination to find more products

- Respects depth limits to avoid crawling unrelated pages

- Caches results to avoid re-scraping pages that were fetched within the cache TTL

Extracting Price Data

Product pages need structured extraction. Use extraction rules to pull prices, names, and availability:

# Configure extraction for product pages
response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://competitor.com/products/electronics",
        "page_limit": 500,
        "asp": True,
        "content_formats": ["extracted_data"],
        "extraction_rules": {
            "/products/*": {
                "type": "prompt",
                "value": """Extract product information as JSON with these fields:
- product_name: full product name
- current_price: current price as number (no currency symbols)
- original_price: original/list price if shown, else null
- currency: currency code (USD, EUR, etc)
- availability: in_stock, out_of_stock, or low_stock
- sku: product SKU or ID if visible
""",
            }
        },
    },
)

crawler_uuid = response.json()["uuid"]

The prompt-based extraction handles variations in HTML structure across different competitor sites. No need to write site-specific parsing logic.

Filtering What Gets Monitored

Product catalogs have noise like clearance items, bundles, and accessories you don't compete with. Filter them out:

response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://competitor.com/products",
        "page_limit": 1000,
        "include_only_paths": [
            "/products/laptops/*",
            "/products/monitors/*",
            "/products/keyboards/*",
        ],
        "exclude_paths": [
            "*/bundles/*",  # Skip bundle deals
            "*/clearance/*",  # Skip clearance items
            "*/refurbished/*",  # Skip refurb products
        ],
        "asp": True,
    },
)

This keeps your monitoring focused on actual competing products rather than wasting credits on irrelevant pages.
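Before spending credits, it can help to preview which URLs a set of patterns would keep. The sketch below approximates the filtering locally with Python's shell-style globs. It assumes the patterns behave like simple wildcards on the URL path; the crawler's real matching rules may differ, so treat it as a sanity check rather than a guarantee. The function name and sample URLs are hypothetical.

```python
from fnmatch import fnmatchcase
from urllib.parse import urlparse

def would_crawl(url, include_only_paths, exclude_paths):
    """Approximate include/exclude path filtering with shell-style wildcards."""
    path = urlparse(url).path
    # Exclusions win over inclusions
    if any(fnmatchcase(path, pattern) for pattern in exclude_paths):
        return False
    return any(fnmatchcase(path, pattern) for pattern in include_only_paths)

include = ["/products/laptops/*", "/products/monitors/*", "/products/keyboards/*"]
exclude = ["*/bundles/*", "*/clearance/*", "*/refurbished/*"]

sample_urls = [
    "https://competitor.com/products/laptops/ultrabook-14",
    "https://competitor.com/products/laptops/bundles/ultrabook-plus-bag",
    "https://competitor.com/products/headphones/studio-pro",
]

kept = [url for url in sample_urls if would_crawl(url, include, exclude)]
print(kept)
```

Only the first URL survives: the second sits under a bundles path, and the third is outside the three included categories.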

Handling Product Catalogs at Scale

Monitoring thousands of products requires different strategies than scraping a few dozen URLs.

Parallel Monitoring Across Competitors

Track multiple competitors simultaneously by launching parallel crawlers:

import asyncio

import requests

API_KEY = "YOUR_API_KEY"
BASE_URL = "https://api.scrapfly.io"

competitors = [
    "https://competitor-a.com/electronics",
    "https://competitor-b.com/tech-products",
    "https://competitor-c.com/shop/electronics",
]

async def monitor_competitor(url):
    """Monitor a single competitor's catalog"""
    # requests is blocking, so each call runs in a worker thread
    # (asyncio.to_thread, Python 3.9+) to keep the other crawlers moving

    # Start the crawler
    response = await asyncio.to_thread(
        requests.post,
        f"{BASE_URL}/crawl",
        params={"key": API_KEY},
        json={
            "url": url,
            "page_limit": 500,
            "asp": True,
            "content_formats": ["extracted_data"],
            "extraction_rules": {
                "/products/*": {"type": "prompt", "value": "..."}
            },
        },
    )
    crawler_uuid = response.json()["uuid"]

    # Poll for completion
    while True:
        status_response = await asyncio.to_thread(
            requests.get,
            f"{BASE_URL}/crawl/{crawler_uuid}/status",
            params={"key": API_KEY},
        )
        status = status_response.json()

        if status["is_finished"]:
            if status["is_success"]:
                # Retrieve results
                contents_response = await asyncio.to_thread(
                    requests.get,
                    f"{BASE_URL}/crawl/{crawler_uuid}/contents",
                    params={"key": API_KEY, "format": "extracted_data"},
                )
                return contents_response.json()
            else:
                raise Exception(f"Crawl failed: {status['stop_reason']}")

        await asyncio.sleep(10)

async def monitor_all_competitors():
    """Monitor all competitors in parallel"""
    tasks = [monitor_competitor(url) for url in competitors]
    results = await asyncio.gather(*tasks)

    # Combine results from all competitors
    all_products = []
    for pages in results:
        all_products.extend(pages)
    return all_products

# Run monitoring
results = asyncio.run(monitor_all_competitors())
print(f"Monitored {len(results)} products across {len(competitors)} competitors")

This approach monitors multiple competitors simultaneously without waiting for each to finish sequentially.

Incremental Updates vs Full Crawls

Don't re-scrape everything on every run. Use caching to skip pages that were fetched recently and process only the fresh results:

import time

# Daily full crawl with 24-hour cache
response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://competitor.com/products",
        "cache": True,
        "cache_ttl": 86400,  # 24 hours
        "asp": True,
        "content_formats": ["extracted_data"],
    },
)
crawler_uuid = response.json()["uuid"]

# Wait for crawl to complete (poll status)
while True:
    status = requests.get(
        f"{BASE_URL}/crawl/{crawler_uuid}/status",
        params={"key": API_KEY},
    ).json()
    if status["is_finished"]:
        break
    time.sleep(10)

# Get results
pages_response = requests.get(
    f"{BASE_URL}/crawl/{crawler_uuid}/contents",
    params={"key": API_KEY, "format": "extracted_data"},
)
pages = pages_response.json()

for page in pages:
    if page.get("cache_status") == "MISS":
        # This is fresh data - price may have changed
        # (process_price_update is your own handler)
        process_price_update(page)
    else:
        # Served from cache - not re-scraped, so there is nothing new to process
        continue

Cache hits are free since you only pay for fresh scrapes. This dramatically reduces costs for monitoring stable products.

Handling Out-of-Stock Items

Products go out of stock. Don't waste credits checking dead URLs:

import time
from datetime import datetime, timedelta

def monitor_with_availability_tracking(product_urls):
    """Track products but skip those out of stock for >7 days"""
    # Load out-of-stock tracking from database
    oos_products = load_oos_products()

    # Filter out products that have been OOS for over a week
    active_urls = [
        url for url in product_urls
        if url not in oos_products or
        (datetime.now() - oos_products[url]) < timedelta(days=7)
    ]
    if not active_urls:
        return  # Nothing left to monitor

    # Monitor only active products
    # Note: the crawl is seeded from the first active URL, so this assumes
    # the remaining active URLs are discoverable from that page
    response = requests.post(
        f"{BASE_URL}/crawl",
        params={"key": API_KEY},
        json={
            "url": active_urls[0],
            "page_limit": len(active_urls),
            "asp": True,
            "content_formats": ["extracted_data"],
        },
    )
    crawler_uuid = response.json()["uuid"]

    # Wait for completion and get results
    while True:
        status = requests.get(
            f"{BASE_URL}/crawl/{crawler_uuid}/status",
            params={"key": API_KEY},
        ).json()
        if status["is_finished"]:
            break
        time.sleep(10)

    # Update OOS tracking
    pages = requests.get(
        f"{BASE_URL}/crawl/{crawler_uuid}/contents",
        params={"key": API_KEY, "format": "extracted_data"},
    ).json()

    for page in pages:
        if page.get("extracted_data", {}).get("availability") == "out_of_stock":
            mark_product_oos(page["url"])

This avoids burning credits on products that have been unavailable for days or weeks.
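The `load_oos_products` and `mark_product_oos` helpers are left to you. One possible shape is sketched below with an in-memory dictionary standing in for the database. Everything here is an assumption for illustration, including `mark_product_in_stock` and `filter_active_urls`, which do not appear in the example above.

```python
from datetime import datetime, timedelta

# Hypothetical store: URL -> time the product was first seen out of stock.
# A real deployment would back this with a database table.
_oos_first_seen = {}

def mark_product_oos(url, first_seen=None):
    """Record when a product was first seen out of stock (keeps the earliest time)."""
    _oos_first_seen.setdefault(url, first_seen or datetime.now())

def mark_product_in_stock(url):
    """Forget a product once it is available again."""
    _oos_first_seen.pop(url, None)

def load_oos_products():
    """Return a copy of the out-of-stock tracking data."""
    return dict(_oos_first_seen)

def filter_active_urls(product_urls, oos_products, now=None, max_age=timedelta(days=7)):
    """Keep URLs that are in stock or have been out of stock for less than max_age."""
    now = now or datetime.now()
    return [
        url for url in product_urls
        if url not in oos_products or (now - oos_products[url]) < max_age
    ]

now = datetime(2024, 1, 15)
mark_product_oos("https://competitor.com/products/a", now - timedelta(days=10))
mark_product_oos("https://competitor.com/products/b", now - timedelta(days=2))

active = filter_active_urls(
    [
        "https://competitor.com/products/a",
        "https://competitor.com/products/b",
        "https://competitor.com/products/c",
    ],
    load_oos_products(),
    now=now,
)
print(active)
```

Using `setdefault` keeps the first out-of-stock timestamp. Overwriting it on every crawl would reset the seven-day clock and the product would never be skipped.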

Anti-Bot Configuration for E-commerce Sites

E-commerce sites are the most aggressive about blocking scrapers. Here's how to handle it.

ASP for Major Retailers

Major retailers like Amazon, Walmart, Target, and Best Buy all use heavy protection. Enable ASP:

response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://www.amazon.com/s?k=laptop",
        "asp": True,  # Required for Amazon, Walmart, etc
        "country": "US",
        "rendering_delay": 2000,  # Enable JS rendering with 2s wait
    },
)

ASP handles:

- Cloudflare challenges automatically

- Browser fingerprinting that detects automation

- Cookie and session management

- JavaScript anti-bot checks

Without ASP, you'll get blocked on the first request.

Geographic Pricing Variations

Prices vary by location. Track pricing across multiple regions:

import time

# Monitor US and UK pricing separately
us_response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://global-retailer.com/products",
        "country": "US",
        "asp": True,
        "content_formats": ["extracted_data"],
    },
)

uk_response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://global-retailer.com/products",
        "country": "GB",
        "asp": True,
        "content_formats": ["extracted_data"],
    },
)

us_crawler_uuid = us_response.json()["uuid"]
uk_crawler_uuid = uk_response.json()["uuid"]

# Wait for both crawls to complete
for uuid in [us_crawler_uuid, uk_crawler_uuid]:
    while True:
        status = requests.get(
            f"{BASE_URL}/crawl/{uuid}/status",
            params={"key": API_KEY},
        ).json()
        if status["is_finished"]:
            break
        time.sleep(10)

# Compare regional pricing
us_prices = requests.get(
    f"{BASE_URL}/crawl/{us_crawler_uuid}/contents",
    params={"key": API_KEY, "format": "extracted_data"},
).json()

uk_prices = requests.get(
    f"{BASE_URL}/crawl/{uk_crawler_uuid}/contents",
    params={"key": API_KEY, "format": "extracted_data"},
).json()

# Identify pricing arbitrage opportunities
# (field names follow the extraction prompt shown earlier; convert both
# prices to a common currency before comparing them)
for us_page in us_prices:
    uk_page = find_matching_product(us_page, uk_prices)  # your own matching helper
    if not uk_page:
        continue
    us_price = us_page["extracted_data"]["current_price"]
    uk_price = uk_page["extracted_data"]["current_price"]
    # Flag products priced more than 20% higher in the UK than in the US
    if uk_price > us_price * 1.2:
        print(f"Arbitrage opportunity: {us_page['extracted_data']['product_name']}")

This reveals regional pricing strategies and potential market opportunities.
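The comparison relies on a `find_matching_product` helper and on prices being in the same currency. Below is a minimal sketch of both. It assumes each page carries an `extracted_data` dictionary with `sku`, `current_price`, and `currency` fields, as in the extraction prompt earlier. The `to_usd` function and the fixed exchange rate are placeholders, not real market data.

```python
def find_matching_product(product, candidates):
    """Return the candidate page with the same SKU, or None if there is no match."""
    sku = product.get("extracted_data", {}).get("sku")
    if not sku:
        return None
    for candidate in candidates:
        if candidate.get("extracted_data", {}).get("sku") == sku:
            return candidate
    return None

# Illustrative fixed rates; a real system would load current rates from an FX source
RATES_TO_USD = {"USD": 1.0, "GBP": 1.25}

def to_usd(amount, currency, rates=RATES_TO_USD):
    """Convert an amount to USD using the supplied rate table."""
    return amount * rates[currency]

us_page = {"extracted_data": {"sku": "LAP-1", "current_price": 1000.0, "currency": "USD"}}
uk_pages = [
    {"extracted_data": {"sku": "MON-7", "current_price": 300.0, "currency": "GBP"}},
    {"extracted_data": {"sku": "LAP-1", "current_price": 1000.0, "currency": "GBP"}},
]

match = find_matching_product(us_page, uk_pages)
uk_price_usd = to_usd(
    match["extracted_data"]["current_price"],
    match["extracted_data"]["currency"],
)
print(f"US: 1000.00 USD, UK: {uk_price_usd:.2f} USD")
```

Matching on SKU only works when both regional storefronts expose the same identifier. If they don't, you would need a fuzzier match on product name or model number.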

Handling Dynamic Product Pages

Modern e-commerce sites load prices with JavaScript. Enable rendering:

response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://js-heavy-retailer.com/products",
        "rendering_delay": 2000,  # Wait 2 seconds for JS to load prices
        "asp": True,
    },
)

The crawler waits for JavaScript to execute before extracting content. No more missing prices because they loaded after HTML parsing.

Data Pipeline: Crawl → Normalize → Alert

Raw price data needs processing before it becomes actionable intelligence.

Price Normalization

Prices come in different formats across competitors:

import re

def normalize_price(price_str, currency):
    """Convert various price formats to a standard float"""
    # Remove currency symbols and text
    # (str() covers prices that were already extracted as numbers)
    cleaned = re.sub(r'[^\d.,]', '', str(price_str))

    # Handle different decimal separators
    if ',' in cleaned and '.' in cleaned:
        # EU format: 1.234,56 -> 1234.56
        if cleaned.rfind(',') > cleaned.rfind('.'):
            cleaned = cleaned.replace('.', '').replace(',', '.')
        # US format: 1,234.56 -> 1234.56
        else:
            cleaned = cleaned.replace(',', '')
    elif ',' in cleaned:
        # Could be decimal (EU) or thousands (US)
        # Assume decimal if 2 digits after comma
        if len(cleaned.split(',')[1]) == 2:
            cleaned = cleaned.replace(',', '.')
        else:
            cleaned = cleaned.replace(',', '')

    return float(cleaned)

# Process crawler results
pages = requests.get(
    f"{BASE_URL}/crawl/{crawler_uuid}/contents",
    params={"key": API_KEY, "format": "extracted_data"},
).json()

for page in pages:
    raw_price = page["extracted_data"]["current_price"]
    currency = page["extracted_data"]["currency"]
    normalized = normalize_price(raw_price, currency)
    page["extracted_data"]["normalized_price"] = normalized

This handles "$1,234.56", "€1.234,56", "1234.56 USD" and other variations.
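Floats can pick up rounding error once you start computing percentages on prices, so some teams prefer `Decimal` for money. The variant below is a sketch of the same separator-guessing idea returning a `Decimal`. The name `parse_price` is hypothetical, and the rule for a lone comma (two trailing digits means a decimal mark) is a heuristic, not a standard.

```python
import re
from decimal import Decimal

def parse_price(text):
    """Parse a price string into a Decimal, guessing the decimal separator."""
    cleaned = re.sub(r"[^\d.,]", "", str(text))
    last_comma, last_dot = cleaned.rfind(","), cleaned.rfind(".")

    if last_comma != -1 and last_dot != -1:
        # Whichever separator appears last is treated as the decimal mark
        if last_comma > last_dot:
            cleaned = cleaned.replace(".", "").replace(",", ".")
        else:
            cleaned = cleaned.replace(",", "")
    elif last_comma != -1:
        # A lone comma followed by exactly two digits is treated as a decimal mark
        head, _, tail = cleaned.rpartition(",")
        if len(tail) == 2:
            cleaned = f"{head.replace(',', '')}.{tail}"
        else:
            cleaned = cleaned.replace(",", "")

    return Decimal(cleaned)

for raw in ["$1,234.56", "€1.234,56", "1234.56 USD", "19,99"]:
    print(raw, "->", parse_price(raw))
```

Some inputs stay ambiguous: "1.234" could be one thousand two hundred thirty-four in EU notation, but this sketch reads it as 1.234. Using the extracted currency or the site's locale to break such ties is a reasonable next step.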

Detecting Price Changes

Compare current prices against historical data:

from datetime import datetime

def detect_price_changes(current_prices, historical_prices):
    """Identify products with price changes

    current_prices: list of flat product dicts (sku, product_name,
    normalized_price, competitor_name)
    historical_prices: dict mapping sku -> {"price": last known price}
    """
    changes = []

    for product in current_prices:
        product_id = product["sku"]
        current_price = product["normalized_price"]

        # Look up historical price
        historical = historical_prices.get(product_id)
        if not historical:
            continue

        previous_price = historical["price"]
        if not previous_price:
            continue  # Avoid dividing by zero on missing or zero prices

        change_pct = ((current_price - previous_price) / previous_price) * 100

        # Flag significant changes (>5%)
        if abs(change_pct) > 5:
            changes.append({
                "product_id": product_id,
                "product_name": product["product_name"],
                "previous_price": previous_price,
                "current_price": current_price,
                "change_pct": change_pct,
                "competitor": product["competitor_name"],
                "timestamp": datetime.now(),
            })

    return changes

This identifies which products need attention rather than overwhelming you with unchanged data.
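The `historical_prices` lookup has to come from somewhere. As a rough sketch, here is one way to keep price history in SQLite and build the `{sku: {"price": ...}}` mapping that `detect_price_changes` expects. The table layout and function names are assumptions for illustration; a multi-competitor setup would likely key on SKU and competitor together.

```python
import sqlite3
from datetime import datetime, timezone

def init_db(conn):
    """Create the price history table if it does not exist yet."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS price_history (
               sku TEXT NOT NULL,
               competitor TEXT NOT NULL,
               price REAL NOT NULL,
               observed_at TEXT NOT NULL
           )"""
    )

def record_price(conn, sku, competitor, price, observed_at=None):
    """Append one observation; timestamps are ISO 8601 strings so they sort as text."""
    observed_at = observed_at or datetime.now(timezone.utc).isoformat()
    conn.execute(
        "INSERT INTO price_history (sku, competitor, price, observed_at) VALUES (?, ?, ?, ?)",
        (sku, competitor, price, observed_at),
    )

def latest_prices(conn):
    """Return {sku: {"price": latest_price}} from the most recent row per SKU."""
    rows = conn.execute(
        """SELECT sku, price FROM price_history AS p
           WHERE observed_at = (
               SELECT MAX(observed_at) FROM price_history WHERE sku = p.sku
           )"""
    ).fetchall()
    return {sku: {"price": price} for sku, price in rows}

conn = sqlite3.connect(":memory:")
init_db(conn)
record_price(conn, "LAP-1", "competitor-a", 999.0, "2024-01-01T00:00:00+00:00")
record_price(conn, "LAP-1", "competitor-a", 899.0, "2024-01-02T00:00:00+00:00")
record_price(conn, "MON-7", "competitor-a", 249.0, "2024-01-02T00:00:00+00:00")

history = latest_prices(conn)
print(history)
```

Appending rows instead of updating in place keeps the full history, which is what lets you analyze promotional calendars and seasonal patterns later.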

Alert Configuration

Different price changes need different responses:

def categorize_alerts(price_changes):
    """Group changes by severity"""
    critical = []  # Competitor is more than 10% cheaper than us
    warning = []  # Competitor is 5-10% cheaper than us
    info = []  # Other changes worth noting

    for change in price_changes:
        our_price = get_our_price(change["product_id"])
        competitor_price = change["current_price"]

        # Positive diff_pct means the competitor is cheaper than us
        diff_pct = ((our_price - competitor_price) / our_price) * 100

        if diff_pct > 10:
            critical.append(change)
        elif diff_pct > 5:
            warning.append(change)
        else:
            info.append(change)

    return critical, warning, info

# Send alerts based on severity
critical, warning, info = categorize_alerts(price_changes)

if critical:
    send_slack_alert(critical, channel="#pricing-critical")

if warning:
    send_email_digest(warning)

if info:
    log_to_dashboard(info)

This prevents alert fatigue by routing different severities to appropriate channels.
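How the alert is delivered is up to you. As one possibility, the sketch below formats changes into a Slack message and posts it to an incoming webhook, which accepts a JSON body with a `text` field. Note the signature differs from the `send_slack_alert(critical, channel=...)` call above: an incoming webhook is tied to a channel when it is created, so this version takes a `webhook_url` that you supply instead. The helper names are hypothetical.

```python
import json
import urllib.request

def format_change(change):
    """Render one price change as a single readable line."""
    direction = "dropped" if change["change_pct"] < 0 else "rose"
    return (
        f"{change['competitor']}: {change['product_name']} {direction} "
        f"{abs(change['change_pct']):.1f}% "
        f"({change['previous_price']:.2f} -> {change['current_price']:.2f})"
    )

def build_slack_payload(changes):
    """Build the JSON body for a Slack incoming webhook."""
    lines = [format_change(change) for change in changes]
    return {"text": "Competitor price alerts:\n" + "\n".join(lines)}

def send_slack_alert(changes, webhook_url):
    """POST the alert to a Slack incoming webhook (webhook_url is yours to provide)."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(build_slack_payload(changes)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status

sample = {
    "competitor": "competitor-a",
    "product_name": "Laptop X",
    "previous_price": 100.0,
    "current_price": 90.0,
    "change_pct": -10.0,
}
print(format_change(sample))
```

Keeping the formatting separate from the network call makes the message easy to test and reuse for the email digest.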

Integration with Repricing Tools

Price monitoring becomes powerful when connected to automated repricing.

Webhook-Based Repricing

Use webhooks to trigger repricing immediately when competitor prices change:

# Configure crawler with webhook
response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://competitor.com/products",
        "webhook_name": "price-monitoring-webhook",
        "webhook_events": ["crawler_finished"],
        "asp": True,
        "content_formats": ["extracted_data"],
    },
)
crawler_uuid = response.json()["uuid"]
print(f"Started crawler: {crawler_uuid}")

# Webhook handler (Flask example)
from flask import Flask, request

app = Flask(__name__)

@app.post('/webhooks/price-monitor')
def handle_price_update():
    """Process price updates and trigger repricing"""
    data = request.json
    crawler_uuid = data["payload"]["crawler_uuid"]

    # Fetch results
    pages_response = requests.get(
        f"{BASE_URL}/crawl/{crawler_uuid}/contents",
        params={"key": API_KEY, "format": "extracted_data"},
    )
    pages = pages_response.json()

    # Detect changes
    changes = detect_price_changes(pages, load_historical_prices())

    # Trigger repricing for affected products
    for change in changes:
        if should_reprice(change):
            update_our_price(
                product_id=change["product_id"],
                new_price=calculate_competitive_price(change),
            )

    return {"status": "ok"}

This creates a real-time repricing loop: competitor changes price → crawler detects it → webhook fires → your price updates automatically.
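The handler calls `should_reprice` without defining it. A simple gate might combine a minimum change size with a cooldown so two automated repricers don't chase each other in a loop. The sketch below is one hypothetical version. The 5% threshold and 6-hour cooldown are arbitrary starting points, and `last_repriced_at` is assumed to come from your own records.

```python
from datetime import datetime, timedelta

def should_reprice(change, last_repriced_at=None, now=None,
                   min_change_pct=5.0, cooldown=timedelta(hours=6)):
    """Decide whether a competitor price change warrants repricing."""
    now = now or datetime.now()

    # Ignore small moves that are likely noise
    if abs(change["change_pct"]) < min_change_pct:
        return False

    # Skip products we repriced recently to avoid price oscillation
    if last_repriced_at is not None and now - last_repriced_at < cooldown:
        return False

    return True

now = datetime(2024, 1, 1, 12, 0)
print(should_reprice({"change_pct": -8.0}, now=now))
print(should_reprice({"change_pct": -8.0}, last_repriced_at=now - timedelta(hours=1), now=now))
```

With the defaults, `should_reprice(change)` still works as a one-argument call, so it fits the handler above unchanged.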

Rule-Based Repricing Strategies

Different products need different repricing logic:

def calculate_competitive_price(price_change):
    """Determine new price based on product strategy"""
    product = load_product(price_change["product_id"])
    competitor_price = price_change["current_price"]

    # Loss leader - always be cheapest
    if product["strategy"] == "loss_leader":
        return competitor_price * 0.95  # Undercut by 5%

    # Premium positioning - never go below the floor price
    elif product["strategy"] == "premium":
        # Slight undercut, but hold the line at the floor
        return max(product["floor_price"], competitor_price * 0.98)

    # Margin protection - maintain a minimum markup over cost
    elif product["strategy"] == "margin_protect":
        min_price = product["cost"] * 1.25  # 25% minimum markup over cost
        # Match closely, but don't go below the markup floor
        return max(min_price, competitor_price * 0.99)

    # Market follower - match competitor pricing
    else:
        return competitor_price

This ensures repricing decisions align with your overall business strategy.

Export to Existing Tools

Most businesses already have repricing tools. Export data in compatible formats:

import csv

def export_for_repricer(price_changes):
    """Export price data for external repricing tools"""
    # Format for common repricing tools
    with open('price_updates.csv', 'w', newline='') as f:
        writer = csv.DictWriter(f, fieldnames=[
            'sku', 'competitor_name', 'competitor_price',
            'our_price', 'recommended_price', 'timestamp'
        ])
        writer.writeheader()

        for change in price_changes:
            our_price = get_our_price(change["product_id"])
            writer.writerow({
                'sku': change["product_id"],
                'competitor_name': change["competitor"],
                'competitor_price': change["current_price"],
                'our_price': our_price,
                'recommended_price': calculate_competitive_price(change),
                'timestamp': change["timestamp"],
            })

    # Upload to repricing tool via API or FTP
    upload_to_repricer('price_updates.csv')

This bridges automated monitoring with existing repricing workflows.

Production Deployment Considerations

Moving from prototype to production means handling failures and controlling costs.

Cost Management

Price monitoring costs add up. Control spending:

import time

# Set budget limits per crawl
response = requests.post(
    f"{BASE_URL}/crawl",
    params={"key": API_KEY},
    json={
        "url": "https://competitor.com/products",
        "page_limit": 1000,
        "max_api_credit": 5000,  # Stop at 5000 credits
        "max_duration": 1800,  # Stop after 30 minutes
    },
)
crawler_uuid = response.json()["uuid"]

# Wait for completion and monitor credit usage
while True:
    status = requests.get(
        f"{BASE_URL}/crawl/{crawler_uuid}/status",
        params={"key": API_KEY},
    ).json()
    if status["is_finished"]:
        break
    time.sleep(10)

# Check final stats
state = status["state"]
print(f"Credits used: {state['api_credit_used']}")
print(f"Pages crawled: {state['urls_visited']}")
if state["urls_visited"]:  # Avoid dividing by zero when nothing was crawled
    print(f"Cost per page: {state['api_credit_used'] / state['urls_visited']}")

This prevents runaway costs from large crawls or unexpected site structures.

Scheduling Strategy

Balance freshness needs with costs:

import time

import schedule

# monitor_products and the *_skus lists are your own function and product lists

# High-value products - check hourly
schedule.every().hour.do(
    monitor_products,
    product_list=high_value_skus,
    cache_ttl=3600,
)

# Standard products - check daily
schedule.every().day.at("02:00").do(
    monitor_products,
    product_list=standard_skus,
    cache_ttl=86400,
)

# Low-value products - check weekly
schedule.every().monday.at("03:00").do(
    monitor_products,
    product_list=low_value_skus,
    cache_ttl=604800,
)

while True:
    schedule.run_pending()
    time.sleep(60)

This allocates monitoring resources based on business impact.
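To see what tiering buys you, a quick back-of-the-envelope estimate helps. The sketch below assumes roughly one credit per page, which is the ballpark used in the FAQ further down and will vary with ASP, rendering, and proxy choice. The tier sizes are made up for illustration.

```python
def estimate_monthly_credits(tiers, credits_per_page=1):
    """Sum credits over tiers given as (product_count, checks_per_month) pairs."""
    return sum(count * checks * credits_per_page for count, checks in tiers)

HOURLY = 24 * 30  # checks per month
DAILY = 30
WEEKLY = 4

tiered = estimate_monthly_credits([
    (50, HOURLY),   # high-value products
    (800, DAILY),   # standard products
    (150, WEEKLY),  # low-value products
])

everything_hourly = estimate_monthly_credits([(1000, HOURLY)])

print(f"Tiered schedule: {tiered:,} credits/month")
print(f"All products hourly: {everything_hourly:,} credits/month")
```

In this example the tiered schedule uses less than a tenth of the credits that hourly checks on the full catalog would, while still catching fast changes on the products that matter most.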

Error Handling and Retries

Crawls fail. Handle errors gracefully:

import time

def monitor_with_retry(url, max_retries=3):
    """Monitor with automatic retries on failure"""
    for attempt in range(max_retries):
        try:
            # Start crawler
            response = requests.post(
                f"{BASE_URL}/crawl",
                params={"key": API_KEY},
                json={
                    "url": url,
                    "asp": True,
                    "page_limit": 500,
                    "content_formats": ["extracted_data"],
                },
            )
            crawler_uuid = response.json()["uuid"]

            # Wait for completion
            while True:
                status = requests.get(
                    f"{BASE_URL}/crawl/{crawler_uuid}/status",
                    params={"key": API_KEY},
                ).json()
                if status["is_finished"]:
                    break
                time.sleep(10)

            # Check success
            if status["is_success"]:
                contents = requests.get(
                    f"{BASE_URL}/crawl/{crawler_uuid}/contents",
                    params={"key": API_KEY, "format": "extracted_data"},
                ).json()
                return contents
            else:
                raise Exception(f"Crawl failed: {status['stop_reason']}")

        except Exception as e:
            print(f"Attempt {attempt + 1} failed: {e}")
            if attempt < max_retries - 1:
                time.sleep(60 * 2 ** attempt)  # Exponential backoff: 60s, 120s, ...
            else:
                # Log to monitoring system
                log_critical_failure(url, e)
                raise

This ensures temporary failures don't break your monitoring pipeline.
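If several crawls need the same treatment, the retry logic can be pulled into a reusable helper. The sketch below is one way to do that, with a little random jitter added so parallel jobs don't all retry at the same instant. The helper name and the injectable `sleep` parameter are illustrative choices, not part of any library.

```python
import random
import time

def retry_with_backoff(func, max_retries=3, base_delay=60.0, sleep=time.sleep):
    """Call func(); on failure wait base_delay * 2**attempt plus jitter, then retry."""
    for attempt in range(max_retries):
        try:
            return func()
        except Exception:
            if attempt == max_retries - 1:
                raise  # Out of retries: surface the last error
            delay = base_delay * (2 ** attempt)
            # Up to 10% jitter spreads out retries from parallel jobs
            sleep(delay + random.uniform(0, delay * 0.1))

# Demo with a function that fails twice, then succeeds.
# The sleep is replaced with list.append so the demo runs instantly.
attempts = {"count": 0}

def flaky():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("temporary failure")
    return "ok"

delays = []
result = retry_with_backoff(flaky, base_delay=1.0, sleep=delays.append)
print(result, delays)
```

Passing `sleep` as a parameter keeps the helper testable: in production it defaults to `time.sleep`, and in tests you can record the delays instead of waiting.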

FAQs

How often should I monitor competitor prices?

It depends on your industry. Fast-moving electronics might need hourly checks. Furniture or appliances can work with daily monitoring. Start daily and adjust based on how frequently competitors change prices.

Will this get me blocked or banned?

No, when done correctly. The Crawler API uses residential proxies and ASP to look like regular shoppers. You're accessing public data the same way a customer would. Respect robots.txt and avoid hammering sites with unreasonable request rates.

How many competitors should I monitor?

Start with your top 3-5 direct competitors. Monitoring 20 competitors sounds good but creates overwhelming amounts of data. Focus on the competitors who actually affect your sales.

What if a competitor uses dynamic pricing?

Dynamic pricing that changes by user or session is tricky. Use consistent proxy locations (same city/country) and avoid cookies between requests. This gives you a baseline "anonymous shopper" price that's comparable over time.

Can I monitor marketplaces like Amazon or eBay?

Yes, but marketplace monitoring is more complex. You're often tracking multiple sellers for the same product. Focus on the "Buy Box" winner or lowest price rather than trying to track every seller. Check out our guides on scraping Amazon and scraping eBay for marketplace-specific techniques.

How much does price monitoring cost?

Using Crawler API, monitoring 1,000 products daily costs around 30,000 API credits per month (1,000 products × 30 days × ~1 credit per product with caching). At Scrapfly's pricing, that's roughly $50-75/month. Compare that to lost sales from being undercut by competitors.

Should I match every price drop?

No. Use rules-based repricing that considers your margins, positioning, and product strategy. Some products are loss leaders worth matching. Others are premium products where matching cheap competitors hurts your brand.

How do I handle products that change URLs?

Track products by SKU or unique identifier rather than URL. When a URL changes, your system can match the SKU to find the same product at its new location.
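As a rough illustration, a SKU-keyed index can be updated after each crawl and report which products moved. The sketch assumes each crawled page has a `url` and an `extracted_data` dictionary with a `sku` field; the function name is hypothetical.

```python
def update_product_index(index, pages):
    """Update a {sku: url} index in place and return (sku, old_url, new_url) for moved products."""
    moved = []
    for page in pages:
        sku = (page.get("extracted_data") or {}).get("sku")
        if not sku:
            continue  # Pages without a SKU can't be tracked reliably
        old_url = index.get(sku)
        if old_url is not None and old_url != page["url"]:
            moved.append((sku, old_url, page["url"]))
        index[sku] = page["url"]
    return moved

index = {"LAP-1": "https://competitor.com/products/laptops/ultrabook-14"}
pages = [
    {"url": "https://competitor.com/products/laptops/ultrabook-14-2024",
     "extracted_data": {"sku": "LAP-1"}},
    {"url": "https://competitor.com/products/monitors/wide-34",
     "extracted_data": {"sku": "MON-7"}},
]

moved = update_product_index(index, pages)
print(moved)
```

Price history stays attached to the SKU, so a URL change shows up as a moved product instead of a new one with no history.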

Summary

Competitor price monitoring used to require complex infrastructure, proxy management, and custom scrapers for every site. The Crawler API changes that equation completely.

By handling the hard parts (discovering product URLs, bypassing anti-bot protection, rendering JavaScript, managing proxies), it lets you focus on the business logic: which products to monitor, how to analyze price changes, and what repricing strategies to use.

The pattern is straightforward:

- Configure crawlers for each competitor's product catalog

- Extract prices using prompt-based extraction rules

- Normalize data across different formats and currencies

- Detect changes by comparing against historical prices

- Alert and reprice based on your business rules

Start with a small set of high-value products and one competitor. Prove the value. Then scale to hundreds or thousands of products across multiple competitors. The same code that monitors 10 products works for 10,000 by adjusting the page limits and budget controls.

The differentiator that matters: this actually works on protected e-commerce sites. Most price monitoring tutorials break on Amazon, Walmart, or any major retailer. This approach handles them out of the box.

For complete code examples and production-ready implementations, check the Scrapfly examples repository.