Web crawling has evolved dramatically in 2026. What used to require complex infrastructure and constant maintenance can now be accomplished with a few clicks or lines of code. But with so many options available, choosing the right tool can make or break your data collection project.

In this guide, you'll learn:

- The fundamental differences between web scrapers and crawlers

- Six leading web crawler tools for different skill levels and use cases

- Real-world code examples showing how each tool works

- A side-by-side comparison to help you choose the right solution

- Integration strategies for scaling your data collection efforts

Whether you're a beginner looking to automate your first data collection task or an enterprise team building a production crawling system, this guide will help you make an informed decision.

Manually collecting data from websites is manageable for a handful of pages, but quickly becomes overwhelming when you need to gather information from thousands or even millions of URLs. To scale data collection efficiently, whether for price monitoring, market research, or AI training, using the right web crawler tool is essential.

In this guide, we will explore the leading web crawler tools, from all-in-one platforms and no-code options for beginners to open-source frameworks and API-based services for developers and enterprises.

Key Takeaways

Master web crawler tools in 2026 with advanced automation platforms, no-code solutions, and enterprise frameworks for comprehensive data collection workflows.

- Use Octoparse for visual, no-code crawling and Scrapy for a full Python crawling framework

- Configure no-code solutions for beginners and non-technical users with drag-and-drop crawling interfaces

- Implement enterprise-grade crawling with distributed systems and advanced data processing pipelines

- Choose appropriate tools based on technical expertise, project complexity, and scalability requirements

- Configure proper rate limiting and ethical crawling practices to respect website terms of service

- Use specialized tools like Scrapfly for automated web crawling with comprehensive anti-blocking features

Before we explore the tools, remember that web crawling should always respect website terms of service and robots.txt files. Many websites have specific policies about automated access.
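As a rough illustration of checking robots.txt before crawling, the sketch below uses Python's standard `urllib.robotparser`. The robots.txt content, the `my-crawler` user agent, and the `build_robots_parser` helper are made up for the example; a real crawler would download the file from the target site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from
# https://<host>/robots.txt before requesting any other page.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 2
"""

USER_AGENT = "my-crawler"  # hypothetical user agent name


def build_robots_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a reusable rule checker."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


robots = build_robots_parser(ROBOTS_TXT)

# Check individual URLs against the rules before fetching them
print(robots.can_fetch(USER_AGENT, "https://example.com/products"))
print(robots.can_fetch(USER_AGENT, "https://example.com/admin/users"))

# Seconds the site asks crawlers to wait between requests, if declared
print(robots.crawl_delay(USER_AGENT))
```

Checking `can_fetch` before every request and sleeping for the declared crawl delay covers the basics; terms of service still need to be read separately.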

What is Web Crawling?

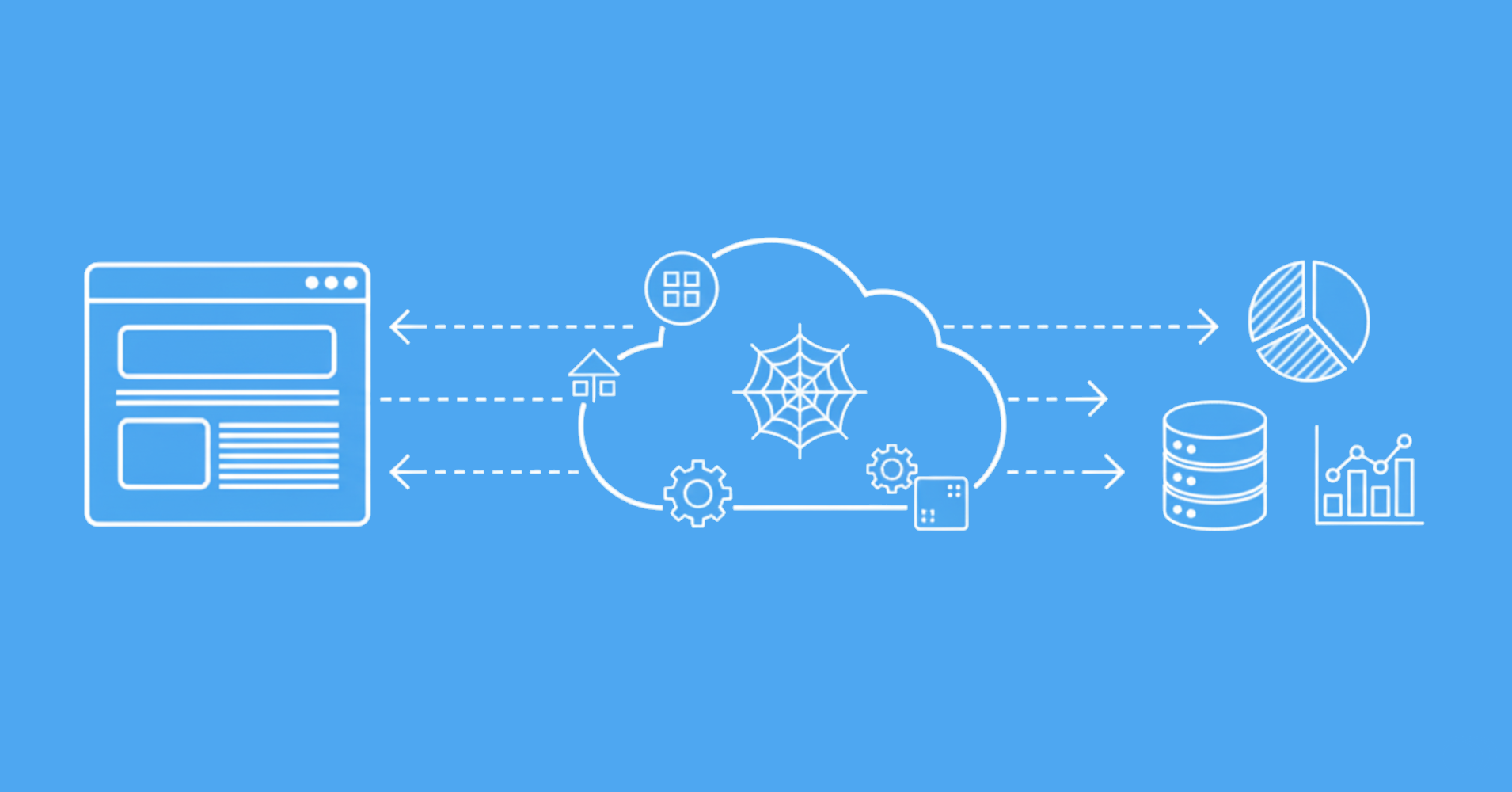

Web crawling is the automated process of systematically browsing and collecting data from websites. Unlike manual browsing, web crawlers can visit thousands of pages automatically, extract specific information, and organize it into structured datasets.

Key Features of Web Crawling:

Web crawling enables automated data collection at scale, allowing you to gather information from multiple sources efficiently.

- Automated Navigation: Systematically visit web pages following links or sitemaps

- Data Extraction: Parse HTML content and extract specific information

- Scale Management: Handle thousands or millions of pages efficiently

- Rate Limiting: Control request frequency to avoid overwhelming servers

- Data Processing: Clean, structure, and export collected data in various formats
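To make the sitemap-based navigation mentioned in the list above concrete, here is a minimal sketch that pulls page URLs out of a sitemap using only the standard library. The sample XML and the `urls_from_sitemap` name are assumptions for illustration; real sitemaps can also be index files that point to further sitemaps, which this sketch does not handle.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content used only for this example
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/products</loc></url>
</urlset>"""


def urls_from_sitemap(xml_text: str) -> list[str]:
    """Return every <loc> URL listed in a urlset sitemap."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


seed_urls = urls_from_sitemap(SAMPLE_SITEMAP)
print(seed_urls)
```

The resulting list can serve as the seed queue for a crawler instead of discovering pages by following links.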

Web crawling has become essential for businesses needing large-scale data collection, from e-commerce price monitoring to content aggregation and market research.

How Web Crawling Works

Before exploring specific tools, let's understand how web crawling typically works. This will help illustrate the core concepts and set the stage for comparing different crawler solutions.

Here's a basic example showing the fundamental workflow of web crawling:

from bs4 import BeautifulSoup

import requests

response = requests.get("https://web-scraping.dev/product/1")

html = response.text

# Create the soup object

soup = BeautifulSoup(html, "lxml")

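This example covers only the first step of a crawl: fetching a single page and parsing its HTML. A full crawler builds on it by starting from seed URLs, following the links it finds, extracting data from each page, and tracking which pages it has already visited.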
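The fetch-and-parse step can be extended into a crawl loop. The following is a minimal sketch, not a production crawler: it does a breadth-first crawl restricted to the seed's domain, and it takes the download step as a `fetch` callable so the loop can be tried without network access. The `crawl` and `LinkExtractor` names and the in-memory `FAKE_SITE` are invented for the example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(seed_url, fetch, max_pages=50):
    """Breadth-first crawl limited to the seed's domain.

    `fetch` is any callable mapping a URL to HTML text (or None on failure).
    """
    domain = urlparse(seed_url).netloc
    queue = deque([seed_url])
    seen = {seed_url}  # URLs already queued, to avoid visiting twice
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        pages[url] = html
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments
            link, _fragment = urldefrag(urljoin(url, href))
            if urlparse(link).netloc == domain and link not in seen:
                seen.add(link)
                queue.append(link)
    return pages


# Hypothetical in-memory site standing in for real HTTP responses
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>',
    "https://example.com/a": '<a href="/">home</a> <a href="https://other.example/x">ext</a>',
    "https://example.com/b": "<p>leaf</p>",
}

pages = crawl("https://example.com/", FAKE_SITE.get)
print(sorted(pages))
```

To crawl a live site, `fetch` could wrap `requests.get(url, timeout=10).text`, ideally combined with a delay between requests.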

Why Choose the Right Web Crawler Tool?

While basic web crawling might seem straightforward, real-world projects often face significant challenges that require specialized tools. As the web becomes more complex and anti-bot measures more sophisticated, developers and businesses are increasingly turning to purpose-built crawler solutions. Here are the most common reasons why choosing the right web crawler tool is crucial for successful data collection projects:

Scale and Performance Requirements

Simple scripts work fine for crawling a few hundred pages, but when you need to process millions of URLs daily, performance becomes critical. Professional crawler tools are optimized for high-throughput scenarios with features like parallel processing, distributed crawling, and intelligent queue management.

Most enterprise projects require crawling thousands of pages per hour while maintaining data quality and avoiding server overload. The right tool can mean the difference between completing a large-scale crawling project in hours versus weeks.
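As a small-scale illustration of parallel processing, the sketch below fetches a batch of URLs with a thread pool from the standard library. The `fetch_all` helper and the `fake_fetch` stand-in are assumptions for the example; dedicated crawler frameworks add queueing, retries, and per-domain limits on top of this idea.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_all(urls, fetch, max_workers=8):
    """Fetch many URLs concurrently.

    Returns two dicts: successful results and raised exceptions, keyed by URL.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        future_to_url = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(future_to_url):
            url = future_to_url[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # one failure should not stop the batch
                errors[url] = exc
    return results, errors


def fake_fetch(url):
    """Stand-in for a real HTTP request (hypothetical)."""
    if url.endswith("/broken"):
        raise ValueError("simulated failure")
    return f"<html>{url}</html>"


batch = [
    "https://example.com/1",
    "https://example.com/2",
    "https://example.com/broken",
]
results, errors = fetch_all(batch, fake_fetch)
print(f"{len(results)} fetched, {len(errors)} failed")
```

Keeping `max_workers` modest matters as much as raising it: more threads speed up the crawl only until the target server starts throttling or blocking.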

Anti-Bot Detection and Bypass

Modern websites employ sophisticated anti-bot measures including CAPTCHAs, rate limiting, IP blocking, and JavaScript challenges. Simple crawlers often get blocked within minutes, while advanced tools come with built-in bypass capabilities.

Professional crawler tools include features like rotating proxies, browser fingerprint rotation, and human-like behavior simulation to maintain access to protected content.
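Alongside those features, a crawler can reduce blocks simply by slowing down when a server signals rate limiting. Here is a minimal sketch of retrying with exponential backoff and jitter. The `fetch_with_backoff` name, the `fetch` callable returning a `(status, body)` pair, and the choice of retryable status codes are assumptions for illustration.

```python
import random
import time

# Status codes treated as "slow down and try again" (an assumption)
RETRYABLE_STATUSES = {429, 503}


def fetch_with_backoff(url, fetch, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) -> (status, body), retrying rate-limited responses.

    Waits base_delay * 2**attempt seconds, plus up to 10% random jitter,
    between attempts. Returns the last response if every attempt is throttled.
    """
    for attempt in range(max_attempts):
        status, body = fetch(url)
        if status not in RETRYABLE_STATUSES:
            return status, body
        if attempt < max_attempts - 1:
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
    return status, body


# Simulated server: throttles twice, then succeeds
responses = iter([(429, ""), (429, ""), (200, "ok")])
waits = []  # record the delays instead of actually sleeping
status, body = fetch_with_backoff(
    "https://example.com", lambda url: next(responses), sleep=waits.append
)
print(status, body, len(waits))
```

Passing `sleep` as a parameter keeps the example fast to run; in real use the default `time.sleep` applies the delays.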

Data Quality and Consistency

Raw web data is often messy and inconsistent. Professional crawler tools include data cleaning capabilities, duplicate detection, and validation features to ensure high-quality output datasets.
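To show what cleaning, validation, and duplicate detection can look like in practice, here is a minimal sketch over scraped product records. The record fields (`title`, `price`), the helper names, and the sample data are invented for the example.

```python
import re
from typing import Optional


def clean_record(raw: dict) -> Optional[dict]:
    """Normalize one scraped record; return None when it fails validation."""
    # Collapse runs of whitespace in the title
    title = " ".join((raw.get("title") or "").split())
    # Pull the first number out of the price text, ignoring thousands separators
    match = re.search(r"\d+(?:\.\d+)?", (raw.get("price") or "").replace(",", ""))
    if not title or match is None:
        return None
    return {"title": title, "price": float(match.group())}


def clean_dataset(records):
    """Clean every record and drop invalid rows and duplicates."""
    seen = set()
    cleaned = []
    for raw in records:
        record = clean_record(raw)
        if record is None:
            continue
        key = (record["title"].lower(), record["price"])
        if key not in seen:
            seen.add(key)
            cleaned.append(record)
    return cleaned


raw_records = [
    {"title": "  Box of   Chocolate ", "price": "$24.99"},
    {"title": "box of chocolate", "price": "24.99 USD"},  # duplicate
    {"title": "", "price": "5"},                           # missing title
    {"title": "Energy Drink", "price": "N/A"},             # unparseable price
    {"title": "Energy Drink", "price": "1,299.00"},
]
cleaned = clean_dataset(raw_records)
print(cleaned)
```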

Scraper vs Crawler: Understanding the Difference

Before diving into specific tools, it's important to clarify the distinction between web scrapers and web crawlers, as these terms are often used interchangeably but refer to different approaches in automated data collection.

Web Scrapers extract specific data from known web pages, focusing on precision and data quality. They work best when you know exactly what information you need and where to find it.

Web Crawlers are designed to automatically discover and visit web pages by following links from seed URLs. Their main goal is broad discovery, making them ideal for indexing, search engines, and collecting large datasets.

Modern tools often blur the line between these two roles, combining crawling and scraping capabilities into a single workflow. This allows users to both discover new content and extract structured data efficiently, which is especially useful for large-scale or ongoing data collection projects.

Understanding this distinction will help you choose the right tool and approach for your needs. Now, let's explore the top tools that address these challenges and see how they compare in real-world scenarios.

Top Web Crawler Tools in 2026

Choosing the best web crawler tool depends on your project goals, technical skills, and the complexity of the websites you want to crawl. Below, we highlight the leading solutions for a variety of use cases, from API-first platforms to visual no-code tools and open-source frameworks.

Scrapfly

Scrapfly is a modern API-first web crawling service that handles JavaScript-heavy websites and provides clean, structured data output. It's designed for developers who need reliable crawling without managing infrastructure.

Scrapfly stands out for its API-first design that makes integration seamless, advanced JavaScript support for modern websites, automatic data cleaning and structuring, and built-in anti-blocking protection. These strengths make it particularly well-suited for AI training data collection, content aggregation platforms, and market research automation.

Here's how to use Scrapfly's Python SDK to fetch a page with anti-blocking and JavaScript rendering enabled:

from scrapfly import ScrapflyClient, ScrapeConfig

def crawl_with_scrapfly(url):
    """Fetch a single page through Scrapfly with built-in anti-blocking."""
    client = ScrapflyClient(key="YOUR_API_KEY")
    result = client.scrape(ScrapeConfig(
        url=url,
        asp=True,  # Anti-scraping protection
        country="US",  # Route the request through a US proxy
        render_js=True,  # Handle JavaScript-heavy sites
    ))
    # The rendered page HTML
    return result.scrape_result['content']

# Usage example
url = "https://web-scraping.dev/product/1"
content = crawl_with_scrapfly(url)
print(f"Successfully crawled {len(content)} characters")

Scrapfly's API approach eliminates the need for infrastructure management while providing enterprise-grade crawling capabilities with advanced anti-blocking features like proxy rotation, browser fingerprint management, and automatic retry logic.

Octoparse

Octoparse is a visual, no-code web scraping tool that allows users to build crawlers through a point-and-click interface. It's perfect for non-technical users who need powerful crawling capabilities.

Octoparse's key strengths include its visual workflow builder requiring no coding knowledge, built-in cloud infrastructure for scalable crawling, pre-built templates for popular websites, and automatic data export to various formats. These features make it especially well-suited for business analysts and researchers, e-commerce price monitoring, and social media data collection.

Here's how Octoparse works conceptually (through its visual interface):

1. URL Input: Enter target website URL

2. Page Analysis: Octoparse automatically detects page elements

3. Data Selection: Click on elements you want to extract

4. Workflow Creation: Visual workflow is automatically generated

5. Schedule & Run: Set up automated crawling schedules

6. Data Export: Download results in CSV, Excel, or API format

Octoparse democratizes web crawling by making it accessible to users without programming experience.
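Once a no-code tool has exported its results, a few lines of Python are often enough to load them for analysis. The sketch below reads a CSV export with the standard `csv` module. The column names (`title`, `price`) and the sample rows are hypothetical; an actual export contains whatever fields were selected in the task.

```python
import csv
import io

# Hypothetical export; real column names depend on the fields chosen in the tool
EXPORTED_CSV = """title,price
Box of Chocolate,24.99
Energy Drink,4.99
"""


def load_export(csv_file):
    """Read exported rows into dictionaries and convert prices to floats."""
    rows = []
    for row in csv.DictReader(csv_file):
        row["price"] = float(row["price"])
        rows.append(row)
    return rows


# io.StringIO stands in for open("export.csv", newline="", encoding="utf-8")
rows = load_export(io.StringIO(EXPORTED_CSV))
print(rows)
```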

Scrapy

Scrapy is the most popular open-source Python framework for web crawling and scraping. It provides a complete toolkit for building custom, production-ready crawlers.

Scrapy's strengths include its highly customizable architecture for complex crawling logic, excellent performance with built-in parallelization, comprehensive middleware system for handling various scenarios, and strong community support with extensive documentation. These capabilities make it ideal for custom crawler development, large-scale data mining projects, and complex crawling workflows requiring specialized logic.

You can learn more about Scrapy and its advanced features in our dedicated guide.

Here's a simple Scrapy spider that demonstrates how to build a custom crawler.

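import scrapy

class ReviewsSpider(scrapy.Spider):
    name = "reviews"
    allowed_domains = ["web-scraping.dev"]

    def start_requests(self):
        url = "https://web-scraping.dev/testimonials"
        yield scrapy.Request(
            url=url,
            # Render the page in a browser; this requires the scrapy-playwright
            # plugin to be installed and enabled in the project settings
            meta={"playwright": True},
        )

    def parse(self, response):
        # Each testimonial card holds a star rating and a text body
        reviews = response.css("div.testimonial")
        for review in reviews:
            yield {
                # Count the star icons to get the numeric rating
                "rate": len(review.css("span.rating > svg").getall()),
                "text": review.css("p.text::text").get(),
            }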

Scrapy's framework approach provides maximum flexibility for developers building complex crawling solutions.
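Rate limiting in Scrapy is mostly a matter of configuration. The dictionary below is a sketch of settings that could be assigned to a spider's `custom_settings` attribute or placed in a project's `settings.py`. The setting names are standard Scrapy settings, but the values and the `POLITE_SETTINGS` name are illustrative assumptions, not recommendations for any particular site.

```python
# Illustrative values; tune them to the target site's capacity and policies
POLITE_SETTINGS = {
    # Download and respect robots.txt before crawling
    "ROBOTSTXT_OBEY": True,
    # Base delay in seconds between requests to the same site
    "DOWNLOAD_DELAY": 1.0,
    # Cap on simultaneous requests per domain
    "CONCURRENT_REQUESTS_PER_DOMAIN": 4,
    # Let Scrapy adapt the delay to observed server latency
    "AUTOTHROTTLE_ENABLED": True,
    "AUTOTHROTTLE_START_DELAY": 1.0,
    "AUTOTHROTTLE_MAX_DELAY": 30.0,
    # Retry failed requests a limited number of times
    "RETRY_TIMES": 2,
}
```

Inside a spider class this would be applied with `custom_settings = POLITE_SETTINGS`.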

Scrapfly Alternative for Scrapy Users

If you prefer Scrapy's flexibility but want managed infrastructure and anti-blocking, you can integrate Scrapfly with Scrapy:

import scrapy
from scrapfly import ScrapflyClient, ScrapeConfig

class ScrapflySpider(scrapy.Spider):
    name = "scrapfly_integration"

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.scrapfly_client = ScrapflyClient(key="YOUR_API_KEY")

    def start_requests(self):
        urls = ["https://web-scraping.dev/products"]
        for url in urls:
            yield scrapy.Request(url=url, callback=self.parse_with_scrapfly)

    def parse_with_scrapfly(self, response):
        """Use Scrapfly for individual requests while keeping Scrapy's workflow."""
        # Extract product URLs from listing page
        product_urls = response.css("a.product-link::attr(href)").getall()
        for product_url in product_urls[:5]:  # Limit to first 5 for demo
            # Resolve relative links against the listing page URL
            absolute_url = response.urljoin(product_url)
            yield scrapy.Request(
                url=absolute_url,
                callback=self.parse_product_with_scrapfly,
                meta={"product_url": absolute_url},
            )

    def parse_product_with_scrapfly(self, response):
        """Parse product details using Scrapfly for anti-blocking."""
        product_url = response.meta["product_url"]
        # Fetch the product page again through Scrapfly. This is a blocking
        # call inside a Scrapy callback, so it suits small demos only.
        scrapfly_result = self.scrapfly_client.scrape(ScrapeConfig(
            url=product_url,
            asp=True,
            render_js=True,
        ))
        # Parse the content
        yield {
            "url": product_url,
            "content": scrapfly_result.scrape_result["content"],
        }

This approach gives you the best of both worlds: Scrapy's powerful crawling framework with Scrapfly's anti-blocking capabilities.


Playwright

Playwright is a browser automation framework that excels at crawling JavaScript-heavy websites by controlling real browsers. It's perfect for sites that require user interaction or have complex dynamic content.

Playwright's key strengths include full browser automation across Chromium, Firefox, and WebKit (the engine behind Safari), excellent JavaScript execution for dynamic content, built-in waiting strategies for async content loading, and screenshot and PDF generation capabilities. These features make it especially well-suited for single-page applications (SPAs), sites requiring user interaction simulation, and dynamic content that loads after page initialization.

Here's how to use Playwright to crawl a JavaScript-heavy website. We'll break this down into focused functions for better maintainability:

from playwright.sync_api import sync_playwright

def setup_browser():
    """Initialize Playwright browser with proper configuration."""
    playwright = sync_playwright().start()
    browser = playwright.chromium.launch(headless=False)
    context = browser.new_context()
    return playwright, browser, context

def scroll_to_load_content(page, max_scrolls=100):
    """Scroll down to load all dynamic content on the page."""
    prev_height = -1
    scroll_count = 0
    while scroll_count < max_scrolls:
        # Execute JavaScript to scroll to the bottom of the page
        page.evaluate("window.scrollTo(0, document.body.scrollHeight)")
        # Wait for new content to load
        page.wait_for_timeout(1000)
        # Check whether the scroll height changed - means more content loaded
        new_height = page.evaluate("document.body.scrollHeight")
        if new_height == prev_height:
            break
        prev_height = new_height
        scroll_count += 1
    return scroll_count

def extract_testimonials(page):
    """Extract testimonial data from the loaded page."""
    results = []
    for element in page.locator(".testimonial").element_handles():
        text = element.query_selector(".text").inner_html()
        results.append(text)
    return results

# Main crawling function
def crawl_testimonials(url):
    """Main function to crawl testimonials from a JavaScript-heavy page."""
    playwright, browser, context = setup_browser()
    page = context.new_page()
    try:
        # Navigate to the website
        page.goto(url)
        # Scroll to load all content
        scroll_count = scroll_to_load_content(page)
        print(f"Scrolled {scroll_count} times to load content")
        # Extract testimonials
        results = extract_testimonials(page)
        print(f"Scraped: {len(results)} testimonials!")
        return results
    finally:
        browser.close()
        playwright.stop()

# Usage
url = "https://web-scraping.dev/testimonials/"
testimonials = crawl_testimonials(url)

This approach breaks down the crawling process into focused functions that are easier to maintain and debug. Each function has a single responsibility, making the code more readable and testable.

Scrapfly Alternative for JavaScript-Heavy Sites

For JavaScript-heavy websites, Scrapfly provides a simpler alternative with built-in browser automation:

from scrapfly import ScrapflyClient, ScrapeConfig

def crawl_js_site_with_scrapfly(url):
    """Use Scrapfly to handle JavaScript-heavy sites without browser setup."""
    client = ScrapflyClient(key="YOUR_API_KEY")
    # Parameter names follow the Scrapfly Python SDK's ScrapeConfig;
    # check the SDK reference for the version you have installed
    result = client.scrape(ScrapeConfig(
        url=url,
        render_js=True,  # Execute JavaScript like a browser
        asp=True,  # Anti-scraping protection
        wait_for_selector=".testimonial",  # Wait for specific element
        auto_scroll=True,  # Auto-scroll to load dynamic content
        rendering_wait=1000,  # Extra wait in milliseconds after the page loads
    ))
    return result.scrape_result['content']

# Usage
url = "https://web-scraping.dev/testimonials/"
content = crawl_js_site_with_scrapfly(url)
print(f"Successfully crawled JavaScript-heavy page: {len(content)} characters")

This approach eliminates the need to manage browser instances while providing the same JavaScript execution capabilities.

Playwright provides the most comprehensive solution for crawling modern, JavaScript-dependent websites.

Beautiful Soup

The combination of Beautiful Soup and Requests remains one of the most popular approaches for building custom Python crawlers. It offers simplicity and flexibility for straightforward crawling tasks.

This combination's strengths include a simple, intuitive API that's perfect for beginners, a lightweight footprint with minimal dependencies, excellent documentation and community support, and easy integration with other Python libraries. These features make it ideal for educational projects and learning web scraping, simple crawling tasks with static content, and rapid prototyping of scraping ideas.

Here's a simple crawler built with Beautiful Soup and Requests.

import requests
from bs4 import BeautifulSoup

def crawl_static_list(url):
    # Send HTTP request to the target URL
    response = requests.get(url, headers={"User-Agent": "Mozilla/5.0"})
    # Parse the HTML content
    soup = BeautifulSoup(response.text, "html.parser")
    # Find all product items
    items = soup.select("div.row.product")
    # Extract data from each item
    results = []
    for item in items:
        title = item.select_one("h3.mb-0 a").text.strip()
        price = item.select_one("div.price").text.strip()
        results.append({"title": title, "price": price})
    return results

url = "https://web-scraping.dev/products"
data = crawl_static_list(url)
print(f"Found {len(data)} items")
for item in data[:3]:  # Print first 3 items as example
    print(f"Title: {item['title']}, Price: {item['price']}")

Beautiful Soup provides an excellent starting point for developers learning web crawling concepts.

Scrapfly Alternative for Simple Crawling

For simple crawling tasks, Scrapfly offers a straightforward API that handles the HTTP requests for you:

from scrapfly import ScrapflyClient, ScrapeConfig
from bs4 import BeautifulSoup

def crawl_with_scrapfly_and_bs4(url):
    """Use Scrapfly for requests and Beautiful Soup for parsing."""
    client = ScrapflyClient(key="YOUR_API_KEY")
    result = client.scrape(ScrapeConfig(
        url=url,
        asp=True,  # Anti-scraping protection
        country="US",
    ))
    # Use Beautiful Soup for parsing
    soup = BeautifulSoup(result.scrape_result['content'], 'html.parser')
    # Extract data using the same Beautiful Soup logic
    items = soup.select("div.row.product")
    results = []
    for item in items:
        title = item.select_one("h3.mb-0 a")
        title_text = title.text.strip() if title else "No title found"
        price_element = item.select_one("div.price")
        price = price_element.text.strip() if price_element else None
        results.append({"title": title_text, "price": price})
    return results

# Usage
url = "https://web-scraping.dev/products"
data = crawl_with_scrapfly_and_bs4(url)
print(f"Found {len(data)} items")

This approach keeps your familiar Beautiful Soup parsing logic while adding Scrapfly's anti-blocking capabilities.

Selenium

Selenium is a veteran browser automation tool that remains popular for crawling dynamic websites. While originally designed for testing, it's widely used for web crawling tasks requiring full browser simulation.

Selenium's key strengths include a mature, battle-tested framework with extensive documentation, support for multiple browsers and programming languages, strong handling of sites requiring complex user interactions, and a large community with abundant tutorials and resources. These features make it especially well-suited for legacy systems and established workflows, complex form interactions and multi-step processes, and teams already familiar with Selenium for testing.

Here's how to use Selenium for crawling dynamic content.

import json

import time

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

driver = webdriver.Chrome()

driver.get("https://web-scraping.dev/product/1")

add_to_cart_button = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CSS_SELECTOR, ".add-to-cart")))

add_to_cart_button.click()

time.sleep(1) # wait for the Local Storage to get updated

local_storage_data_str = driver.execute_script("return JSON.stringify(localStorage);")

local_storage_data = json.loads(local_storage_data_str)

print(local_storage_data)

# {'cart': '{"1_orange-small":1}'}

cart = json.loads(local_storage_data["cart"])

cart['1_cherry-small'] = 1

driver.execute_script(f"localStorage.setItem('cart', JSON.stringify({json.dumps(cart)}));")

driver.get("https://web-scraping.dev/cart")

cart_items = WebDriverWait(driver, 10).until(EC.presence_of_all_elements_located((By.CSS_SELECTOR, ".cart-item .cart-title")))

print(f'items in cart: {len(cart_items)}')

# items in cart: 2

driver.quit()

Selenium provides reliable browser automation for crawling complex, interactive websites.

Scrapfly Alternative for Complex Interactions

For sites requiring complex interactions, Scrapfly offers JavaScript execution capabilities:

from scrapfly import ScrapflyClient, ScrapeConfig

def interact_with_scrapfly(url):
    """Use Scrapfly to execute JavaScript and interact with pages."""
    client = ScrapflyClient(key="YOUR_API_KEY")

    # First, get the initial page
    result = client.scrape(ScrapeConfig(
        url=url,
        render_js=True,
        asp=True,
    ))

    # Execute custom JavaScript for interactions
    js_code = """
    // Simulate clicking add to cart button
    const addButton = document.querySelector('.add-to-cart');
    if (addButton) {
        addButton.click();
        return 'Button clicked successfully';
    }
    return 'Button not found';
    """

    # Execute the JavaScript in a second request. The SDK's ScrapeConfig
    # takes the script through its `js` parameter; check the SDK reference
    # for the version you have installed.
    js_result = client.scrape(ScrapeConfig(
        url=url,
        render_js=True,
        asp=True,
        js=js_code,
    ))

    return {
        "initial_content": result.scrape_result['content'],
        # Page HTML captured after the script ran
        "js_result": js_result.scrape_result['content'],
    }

# Usage
url = "https://web-scraping.dev/product/1"
result = interact_with_scrapfly(url)
print("Interaction completed")

This approach provides JavaScript execution capabilities without the complexity of managing browser instances.

Web Crawler Tools Comparison

To help you quickly compare the main crawler tools, here's a table summarizing their key strengths and ideal use cases:

| Tool | Key Strengths | Best Use Cases |
|---|---|---|
| Scrapfly | API-first design, JavaScript support, automatic data cleaning, anti-blocking | AI training data collection, content aggregation, market research automation |
| Octoparse | No-code visual interface, cloud infrastructure, pre-built templates | Business analysts, e-commerce monitoring, social media data collection |
| Scrapy | Highly customizable, excellent performance, comprehensive middleware system | Custom crawler development, large-scale data mining, complex crawling workflows |
| Playwright | Full browser automation, JavaScript execution, modern browser support | Single-page applications (SPAs), user interaction simulation, dynamic content crawling |
| Beautiful Soup | Simple API, lightweight, excellent documentation, easy learning curve | Educational projects, simple static content, rapid prototyping |
| Selenium | Mature framework, multi-browser support, complex interaction handling | Legacy system integration, complex form interactions, established testing workflows |

This comparison highlights what each tool does best and the scenarios where it excels, making it easier to choose the right crawler for your data collection project.

Fully Managed Crawling with Crawler API

If you need to crawl entire domains without building crawler logic at all, Scrapfly's Crawler API handles the complete process: automatic URL discovery, link following, deduplication, and anti-bot bypass. It's ideal for projects where you need comprehensive domain data without managing crawling infrastructure or writing spider code.
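To illustrate what deduplication involves in any crawler, here is a minimal sketch of URL canonicalization using the standard library, so that trivially different URLs for the same page are visited once. It shows the general idea only and says nothing about how Scrapfly's Crawler API implements it; the `canonicalize` name and the normalization rules are assumptions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonicalize(url: str) -> str:
    """Normalize a URL so equivalent variants compare equal.

    Lowercases the scheme and host, sorts query parameters,
    drops the #fragment, and treats an empty path as "/".
    """
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    path = parts.path or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, query, ""))


variants = [
    "HTTPS://Example.com/products?b=2&a=1#reviews",
    "https://example.com/products?a=1&b=2",
]
unique = {canonicalize(u) for u in variants}
print(unique)
```

A crawler would store the canonical form in its "seen" set and check new links against it before queueing them.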

Choosing the Right Approach

Choosing the right approach for building a web scraper depends on several factors, including your technical experience, team resources, project scale, and the complexity of the target website.

Beginners will find Beautiful Soup and Requests easy to learn and ideal for scraping static web pages, while those with more experience may prefer Scrapy for its advanced features and production-ready capabilities.

If your target sites are JavaScript-heavy, tools like Playwright or Selenium are better suited, as they can automate browsers and interact with dynamic content.
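The guidance above can be condensed into a small decision helper. This is only a sketch of this article's rules of thumb, with hypothetical parameter names and an ordering of checks chosen for the example; real projects usually weigh more factors, such as budget and team size.

```python
def recommend_tool(
    can_code: bool,
    needs_js: bool,
    wants_managed: bool = False,
    large_scale: bool = False,
) -> str:
    """Map a few project traits to the tool family suggested in this guide."""
    if not can_code:
        return "Octoparse"  # visual, no-code workflow
    if wants_managed:
        return "Scrapfly"  # managed API with anti-blocking
    if needs_js:
        return "Playwright or Selenium"  # real browser automation
    if large_scale:
        return "Scrapy"  # production-ready framework
    return "Beautiful Soup + Requests"  # simple static pages


print(recommend_tool(can_code=True, needs_js=False))
print(recommend_tool(can_code=True, needs_js=True))
print(recommend_tool(can_code=False, needs_js=True))
```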

Web Crawlers for AI & LLM Applications

Large Language Models (LLMs) are becoming a big part of how businesses work these days. That means there's a growing need for good, current training data and context. Web crawlers help with this by giving RAG systems fresh information from different sources to make LLM responses more useful and real-world focused.

Why Web Crawlers Help with RAG and LLM Work

Web crawlers help AI applications in several key ways:

- Current Information: LLMs trained on old data miss out on what's happening now. Crawlers bring in the latest web content, news updates, and recent changes.

- Building Training Data: When you want to fine-tune LLMs, you need to gather lots of different high-quality web content in an organized way.

- Creating Knowledge Bases: RAG systems work better with large, well-organized collections of information that crawlers can keep updated automatically.

- Combining Multiple Sources: Pulling data from many different websites gives richer context than just one source.

Today's AI-focused crawlers do more than just grab data. They prepare content in formats that work well with LLMs, handle the anti-bot protections that block automated collection, and create outputs that fit easily into vector databases and embedding systems.
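One common preparation step before loading crawled text into a vector database is splitting it into overlapping chunks. The sketch below does this by character count. The `chunk_text` name and the default sizes are assumptions; production pipelines often split on headings or token counts instead.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of up to chunk_size characters.

    Consecutive chunks share `overlap` characters so that sentences cut at
    a boundary still appear whole in at least one chunk.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


chunks = chunk_text("abcdefghij", chunk_size=4, overlap=2)
print(chunks)
```

Each chunk would then be embedded and stored along with its source URL so that answers can cite where the text came from.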

Tools Made for AI and LLM Work

| Tool | What It Does | When to Use It |
|---|---|---|
| Scrapfly Crawler API | Bypasses anti-bot measures, creates markdown ready for LLMs, finds links automatically, removes duplicates | Large business RAG setups, full website crawling with AI-formatted results |
| Scrapfly AI Extraction | Smart extraction that detects patterns automatically, creates structured JSON for LLM training | Collecting training data, organizing data for AI models automatically |
| Crawl4AI | Free open-source tool, works with local LLMs, lets you customize how it extracts data | Building AI locally, research work, projects where privacy matters |
| Jina Reader | Easy URL to markdown conversion, works quickly, gives clean text | Testing ideas fast, simple RAG apps, summarizing content |

Here's one way to fetch LLM-ready markdown with Scrapfly:

from scrapfly import ScrapflyClient, ScrapeConfig

def extract_ai_ready_content(url):
    """Grab web content that's ready to use with LLMs."""
    client = ScrapflyClient(key="YOUR_API_KEY")
    result = client.scrape(ScrapeConfig(
        url=url,
        # Ask for the page converted to markdown. The `format` parameter
        # follows the Scrapfly Python SDK's ScrapeConfig; check the SDK
        # reference for the version you have installed.
        format="markdown",
        asp=True,  # Gets around blocking
        render_js=True,  # Handles interactive websites
    ))
    # With a format set, the converted text is returned as the content
    return result.scrape_result['content']

# Try it out
content = extract_ai_ready_content("https://example.com/article")
print(f"Got LLM-ready content: {len(content)} characters")

If you need to crawl a whole website and get AI-ready results, Scrapfly's Crawler API finds and grabs everything automatically:

import scrapfly

def crawl_domain_for_rag(domain_url):
    """Crawl a full website to build a RAG knowledge base."""
    client = scrapfly.ScrapflyClient(key="YOUR_API_KEY")
    # Grab the whole site with results optimized for AI
    crawl_result = client.crawl(
        url=domain_url,
        max_pages=1000,
        extraction_model="markdown",
        format="llm"  # Made for LLM use
    )
    return crawl_result['data']

# Build your RAG knowledge base
knowledge_base = crawl_domain_for_rag("https://docs.example.com")

Check out our docs for more on AI-powered data extraction and Crawler API for full website coverage.

Power Up with Scrapfly

While these crawler tools offer great capabilities, modern websites often employ sophisticated anti-bot measures that can block even the most advanced crawlers. Traditional solutions require constant maintenance of proxy pools, browser fingerprints, and bypass techniques.

Scrapfly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

FAQ

Here are answers to some frequently asked questions about web crawler tools:

What's the difference between a scraper and a crawler?

A web scraper focuses on extracting specific data from known web pages with precision and data quality as priorities. A web crawler systematically discovers and visits web pages, often following links to map entire websites or domains, with breadth and discovery as main goals. Many modern tools combine both capabilities.

Can Scrapfly handle JavaScript-heavy websites better than traditional tools?

Yes, Scrapfly is specifically designed to handle modern JavaScript websites through its API service. It executes JavaScript automatically and provides clean, structured data output, making it superior to simple HTTP-based crawlers for dynamic content.

How do I choose between open-source tools and managed services?

Open-source tools like Scrapy give you full control and no ongoing costs but require more maintenance and infrastructure management. Managed services like Scrapfly handle anti-blocking and scaling automatically but have usage-based pricing. Consider your team's technical expertise and long-term maintenance capabilities.

Summary

Choosing the right web crawler tool depends on your technical expertise, project requirements, and long-term maintenance capabilities. Beginners will find Beautiful Soup and Requests approachable, while developers building production systems should consider Scrapy's performance or API-based solutions for reliability.

The key is matching the tool to your specific use case: use Octoparse for no-code solutions, Playwright for JavaScript-heavy sites, Scrapy for custom development, and API services like Scrapfly for managed infrastructure. Remember that successful web crawling isn't just about choosing the right tool; it's about understanding website structures, respecting rate limits, and handling the inevitable challenges that come with large-scale data collection.

Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect. Here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose entire public datasets, which can be illegal in some countries.