Documentation is scattered across hundreds of pages. Traditional search doesn't understand what you're really asking. Let's fix that.

Finding answers in documentation is frustrating. You search through multiple pages, switch between versions, and still can't find what you need. Ctrl+F doesn't understand context. Regular search engines don't know your specific use case.

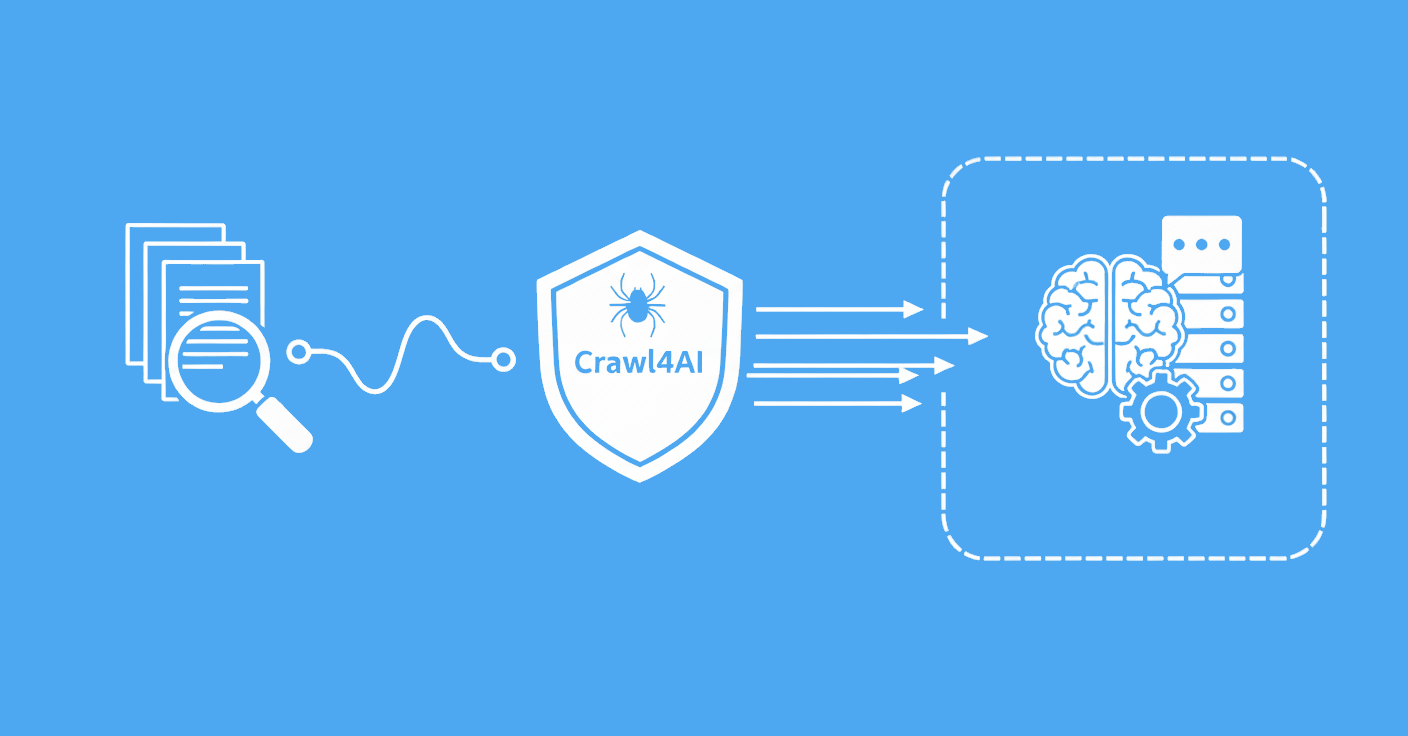

We're going to build a documentation chatbot that Python developers can use to query any docs site, even ones protected by Cloudflare. This chatbot understands natural language, remembers conversation context, and cites its sources.

The secret? We'll use Scrapfly's Crawler API to scrape documentation (bypassing bot protection), create a RAG pipeline with LangChain and HuggingFace embeddings, and build an interface with Streamlit.

Key Takeaways

In this guide, you'll learn how to build a documentation chatbot from website content that works on any site.

- Build a documentation chatbot using Scrapfly Crawler API for reliable scraping from any site

- Use LangChain for RAG pipeline with chunking, embeddings, and vector storage

- Implement conversational memory with chat history for context-aware responses

- Create an interactive Streamlit web interface with chat functionality

- Deploy to Streamlit Cloud for free hosting

- Scrape protected documentation sites like Cloudflare Docs that block standard scrapers

Why Build a Documentation Chatbot?

Documentation has several problems. Pages are long and technical. Searching with Ctrl+F only finds exact matches, not concepts. You often need to read multiple pages to understand one feature.

Documentation chatbots solve these issues. Users ask questions in plain English. The chatbot finds relevant sections across all pages, understands context, and provides focused answers with code examples.

Real use cases include internal company docs (developers find answers faster), open source projects (contributors get onboarded quickly), and customer support (reduce ticket volume).

The approach we'll use is called RAG (Retrieval-Augmented Generation). Instead of training a model on your docs, we store them in a vector database. When users ask questions, we find relevant chunks and feed them to a language model. This grounds answers in your actual docs, and keeping them current only means re-indexing changed pages, not retraining a model.

Project Setup

You'll need Python 3.9 or later. You'll also need API keys from Scrapfly (for scraping), HuggingFace (for embeddings), and OpenAI (for the language model).

Install the required packages:

```bash
$ pip install requests langchain langchain-community langchain-huggingface langchain-openai faiss-cpu streamlit python-dotenv
```

Create a .env file in your project directory with your API keys:

```text
SCRAPFLY_API_KEY=your_scrapfly_key_here
HUGGINGFACE_API_KEY=your_huggingface_token_here
OPENAI_API_KEY=your_openai_key_here
```

Get your Scrapfly API key from the Scrapfly dashboard. For HuggingFace, create a token in your account settings. OpenAI keys come from the OpenAI platform.
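It can save time to fail fast when a key is missing, rather than discovering it halfway through a crawl. A minimal sketch, assuming the three variable names from the .env file above; require_keys is a hypothetical helper, not part of python-dotenv or any other library:

```python
import os

# Variable names taken from the .env file above
REQUIRED_KEYS = ("SCRAPFLY_API_KEY", "HUGGINGFACE_API_KEY", "OPENAI_API_KEY")


def require_keys(names=REQUIRED_KEYS, environ=None):
    """Return {name: value} for every key, or raise listing all missing ones.

    Hypothetical helper: call it after load_dotenv() has populated os.environ.
    """
    env = os.environ if environ is None else environ
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError("Missing API keys: " + ", ".join(missing))
    return {name: env[name] for name in names}
```

Calling require_keys() right after load_dotenv() at the top of each script reports every missing key at once instead of failing on the first API call.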

Scrape Documentation with Crawler API

Most documentation scraping tutorials fail when they hit protected sites. Cloudflare Workers docs, for example, block plain requests from Python's requests library, so BeautifulSoup never gets any HTML to parse. This is where Scrapfly's Crawler API shines.

The Crawler API automatically discovers all pages from a starting URL, handles JavaScript rendering, and bypasses bot protection. You get clean markdown output perfect for feeding to language models.

We're using Crawler API instead of Scraper API because documentation sites have many interconnected pages. Crawler API finds all pages automatically, while Scraper API works best for known URLs. For a detailed comparison of when to use each, see our guide on Scraper API vs Crawler API.

Here's a comparison of what happens when you try different approaches:

| Feature | DIY (requests + BeautifulSoup) | Scrapfly Crawler API |
|---|---|---|
| Cloudflare bypass | ❌ Blocked | ✅ Works perfectly |
| Auto page discovery | ❌ Manual | ✅ Automatic |
| Clean markdown output | ❌ HTML parsing needed | ✅ Built-in |
| Time to implement | Hours of debugging | Minutes |

Let's scrape Cloudflare Workers documentation to prove this works on protected sites:

```python
import json
import os
import time

import requests
from dotenv import load_dotenv

load_dotenv()

SCRAPFLY_KEY = os.getenv('SCRAPFLY_API_KEY')

# Start a crawl of Cloudflare Workers docs
response = requests.post(
    'https://api.scrapfly.io/crawl',
    params={'key': SCRAPFLY_KEY},
    headers={'Content-Type': 'application/json'},
    json={
        'url': 'https://developers.cloudflare.com/workers/',
        'page_limit': 50,  # Limit to 50 pages for this example
        'content_formats': ['markdown'],  # Get clean markdown output
        'rendering_delay': 2000,  # Wait 2s for JavaScript
        'asp': True,  # Enable anti-scraping protection
    },
)
response.raise_for_status()  # Fail early on auth or quota errors
crawl_data = response.json()
crawler_uuid = crawl_data['data']['uuid']
print(f"Crawler started: {crawler_uuid}")

# Poll for completion (in production, use webhooks)
while True:
    status_response = requests.get(
        f'https://api.scrapfly.io/crawl/{crawler_uuid}/status',
        params={'key': SCRAPFLY_KEY},
    )
    status_response.raise_for_status()
    status = status_response.json()['data']
    if status['is_finished']:
        print(f"Crawl finished. Reason: {status['stop_reason']}")
        break
    print(f"Progress: {status['state']['urls_visited']} pages visited")
    time.sleep(10)

# Retrieve the markdown content
contents_response = requests.get(
    f'https://api.scrapfly.io/crawl/{crawler_uuid}/contents',
    params={
        'key': SCRAPFLY_KEY,
        'format': 'markdown',
    },
)
contents_response.raise_for_status()
contents = contents_response.json()['data']

# Save to JSON for later use
docs_data = []
for item in contents:
    docs_data.append({
        'url': item['url'],
        'content': item['content']['markdown'],
        'title': item.get('title', ''),
    })

with open('cloudflare_docs.json', 'w', encoding='utf-8') as f:
    json.dump(docs_data, f, indent=2)

print(f"Saved {len(docs_data)} pages")
```

Output example

```text
Crawler started: 550e8400-e29b-41d4-a716-446655440000
Progress: 15 pages visited
Progress: 32 pages visited
Progress: 50 pages visited
Crawl finished. Reason: page_limit
Saved 50 pages
```

The key here is content_formats=['markdown']. This gives you clean, LLM-ready text instead of raw HTML. The asp=True parameter enables Scrapfly's anti-scraping protection, which handles Cloudflare and other bot detection systems.
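The polling loop above has no upper bound: if a crawl stalls, the script waits forever. Below is a minimal sketch of a bounded version. It assumes the status dictionary keeps the is_finished key used above. wait_until_finished is a hypothetical helper, and webhooks remain the better option in production.

```python
import time


def wait_until_finished(get_status, poll_seconds=10.0, timeout_seconds=1800.0,
                        sleep=time.sleep, clock=time.monotonic):
    """Poll get_status() until status['is_finished'] is truthy or time runs out.

    get_status is any zero-argument callable returning the crawl status dict
    (for example, a wrapper around the /status request above). sleep and clock
    are injectable so the loop can be tested without real waiting.
    """
    deadline = clock() + timeout_seconds
    while True:
        status = get_status()
        if status["is_finished"]:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"Crawl not finished after {timeout_seconds} s")
        sleep(poll_seconds)
```

With the code above, get_status could be a small function that performs the same requests.get(...) call to the /status endpoint and returns .json()['data'].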

If you want to learn more about making HTTP requests in Python, check out our guide on web scraping with HTTPX. For understanding how to parse HTML when you need more control, see BeautifulSoup parsing.

Build the RAG Pipeline

RAG (Retrieval-Augmented Generation) combines search with language models. When a user asks a question, we find relevant documentation chunks (retrieval) and feed them to a language model (generation). This approach is often preferred over fine-tuning because it's cheaper, easier to update, and keeps answers grounded in the retrieved text.

For a deeper dive into RAG applications with web scraping, check out our comprehensive guide on powering up LLMs with web scraping and RAG.

Embeddings are the foundation of RAG. They convert text into vectors (lists of numbers) that represent meaning. Similar concepts have similar vectors. This lets us find relevant documentation even when the exact words don't match.
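To make "similar vectors" concrete, here is a toy sketch that ranks chunks by cosine similarity. The 3-dimensional vectors are made up for illustration; all-MiniLM-L6-v2 actually produces 384-dimensional vectors. LangChain's FAISS wrapper ranks by Euclidean (L2) distance by default. For vectors normalized to unit length, that gives the same ordering as cosine similarity.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, chunk_vecs, k=2):
    """Return the indices of the k chunks most similar to the query."""
    scores = [(cosine_similarity(query_vec, vec), i) for i, vec in enumerate(chunk_vecs)]
    scores.sort(reverse=True)
    return [i for _, i in scores[:k]]


# Made-up toy "embeddings": dimension 0 roughly means "deploying", dimension 2 "storage"
chunks = ["Deploy a Worker with wrangler", "Configure KV namespaces", "Publish your Worker"]
chunk_vecs = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.95], [0.8, 0.3, 0.1]]
query_vec = [0.85, 0.2, 0.05]  # imagine: "How do I ship my Worker?"

print([chunks[i] for i in top_k(query_vec, chunk_vecs)])
```

The two "deploy/publish" chunks win even though the query shares no words with "Publish your Worker". Exact-match search can't do that.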

We'll use HuggingFace's Inference API for embeddings. It offers $0.10/month in free credits (free tier) or $2/month credits with PRO ($9/month), which is sufficient for development and testing. The API integrates smoothly with LangChain:

```python
import json
import os

from dotenv import load_dotenv
from langchain_community.vectorstores import FAISS
from langchain_core.documents import Document
from langchain_huggingface import HuggingFaceEndpointEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

load_dotenv()  # Read HUGGINGFACE_API_KEY from .env

# Load the scraped documentation
with open('cloudflare_docs.json', 'r', encoding='utf-8') as f:
    docs_data = json.load(f)

# Convert to LangChain documents
documents = [
    Document(
        page_content=doc['content'],
        metadata={'url': doc['url'], 'title': doc['title']},
    )
    for doc in docs_data
]

# Split documents into chunks
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,  # 1000 characters per chunk
    chunk_overlap=200,  # 200 characters overlap between chunks
    separators=["\n\n", "\n", ". ", " ", ""],
)
chunks = text_splitter.split_documents(documents)
print(f"Split {len(documents)} documents into {len(chunks)} chunks")

# Initialize HuggingFace embeddings (computed via the Inference API)
embeddings = HuggingFaceEndpointEmbeddings(
    model="sentence-transformers/all-MiniLM-L6-v2",
    huggingfacehub_api_token=os.getenv('HUGGINGFACE_API_KEY'),
)

# Create vector store
vectorstore = FAISS.from_documents(chunks, embeddings)

# Save for later use
vectorstore.save_local("cloudflare_docs_vectorstore")
print("Vector store saved")
```

Output example

```text
Split 50 documents into 287 chunks
Vector store saved
```

Chunk size matters. Too small (100-200 chars) and you lose context. Too large (2000+ chars) and you get irrelevant information mixed with relevant content. We use 1000 characters with 200 character overlap. This keeps related information together while preventing important context from being split across chunks.
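To see what the overlap does, here is a simplified fixed-window splitter. It is not how RecursiveCharacterTextSplitter works internally, which also respects separators. Each new chunk starts chunk_size - chunk_overlap = 800 characters after the previous one. So the last 200 characters of one chunk reappear at the start of the next.

```python
def sliding_window_chunks(text, chunk_size=1000, chunk_overlap=200):
    """Split text into fixed-size windows; consecutive windows share chunk_overlap chars.

    Simplified illustration only: real splitters also look for natural boundaries.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # 800 new characters per chunk with the defaults
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


text = "".join(chr(ord("a") + i % 26) for i in range(2600))
windows = sliding_window_chunks(text)
print(len(windows), [len(w) for w in windows])
```

A 2,600-character page becomes three windows starting at offsets 0, 800, and 1600. A sentence that straddles a boundary therefore appears whole in at least one chunk, as long as it is shorter than 200 characters.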

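The RecursiveCharacterTextSplitter tries the separators in order (paragraph breaks, then line breaks, then sentences, then words). It only falls back to a finer separator when a piece is still longer than chunk_size. This helps keep code examples and explanations intact instead of cutting them at arbitrary character positions.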
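The separator-fallback idea can be sketched in plain Python. This is a much-simplified illustration, not LangChain's implementation: it ignores chunk_overlap, and it drops separators at chunk boundaries, so it won't reproduce the library's exact output. recursive_split is a hypothetical name.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", ". ", " ", "")):
    """Split text into pieces of at most chunk_size characters.

    Tries the coarsest separator first and recurses with finer separators only
    for pieces that are still too long. Simplified: no overlap between chunks.
    """
    if len(text) <= chunk_size:
        return [text]
    if not separators or separators[0] == "":
        # Last resort: hard cut every chunk_size characters
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces into one chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) > chunk_size:
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
        else:
            current = piece
    if current:
        chunks.append(current)
    return chunks


doc = "Intro paragraph.\n\nSecond paragraph is here.\n\nThird."
print(recursive_split(doc, 30))
```

With a 30-character limit, each paragraph becomes its own chunk because no two fit together. A single long word with no separators falls all the way through to the hard cut.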

Implement Chatbot Logic with Memory

LangChain recently deprecated ConversationalRetrievalChain in favor of a more modular approach. The new API uses three components: create_history_aware_retriever() for reformulating questions with chat history, create_stuff_documents_chain() for formatting documents, and create_retrieval_chain() for combining everything.
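The "stuff" step is simple. The retrieved chunks are concatenated and substituted into the {context} placeholder of the system prompt. Below is a plain-Python sketch of that idea; stuff_context and build_system_prompt are hypothetical helpers. LangChain's default separator between documents is a blank line, which the sketch mirrors.

```python
def stuff_context(chunks, separator="\n\n"):
    """Join retrieved chunk texts into one context string, in retrieval order."""
    return separator.join(chunks)


def build_system_prompt(template, chunks):
    """Fill the {context} placeholder of a system prompt template with the chunks."""
    return template.format(context=stuff_context(chunks))


template = "Use the following pieces of retrieved context to answer the question.\n\n{context}"
retrieved = ["Workers run on Cloudflare's edge network.", "Use wrangler deploy to publish."]
print(build_system_prompt(template, retrieved))
```

Because every retrieved chunk lands in a single prompt, k times chunk_size has to fit comfortably in the model's context window. With k=4 and 1000-character chunks, that is only about 4,000 characters.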

Here's the complete chatbot implementation:

```python
import os

from dotenv import load_dotenv
from langchain.chains import create_history_aware_retriever, create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain_community.vectorstores import FAISS
from langchain_core.messages import AIMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_huggingface import HuggingFaceEndpointEmbeddings
from langchain_openai import ChatOpenAI

load_dotenv()  # Read HUGGINGFACE_API_KEY and OPENAI_API_KEY from .env

# Load vector store (must use the same embedding model that built it)
embeddings = HuggingFaceEndpointEmbeddings(
    model="sentence-transformers/all-MiniLM-L6-v2",
    huggingfacehub_api_token=os.getenv('HUGGINGFACE_API_KEY'),
)
vectorstore = FAISS.load_local(
    "cloudflare_docs_vectorstore",
    embeddings,
    allow_dangerous_deserialization=True,  # Loads a pickle: only use files you created
)
retriever = vectorstore.as_retriever(search_kwargs={"k": 4})

# Initialize LLM
llm = ChatOpenAI(
    model="gpt-4o-mini",
    temperature=0,
    openai_api_key=os.getenv('OPENAI_API_KEY'),
)

# Create history-aware retriever
contextualize_q_system_prompt = """Given a chat history and the latest user question \
which might reference context in the chat history, formulate a standalone question \
which can be understood without the chat history. Do NOT answer the question, \
just reformulate it if needed and otherwise return it as is."""

contextualize_q_prompt = ChatPromptTemplate.from_messages([
    ("system", contextualize_q_system_prompt),
    MessagesPlaceholder("chat_history"),
    ("human", "{input}"),
])
history_aware_retriever = create_history_aware_retriever(
    llm, retriever, contextualize_q_prompt
)

# Create question-answer chain
qa_system_prompt = """You are a helpful assistant for Cloudflare Workers documentation. \
Use the following pieces of retrieved context to answer the question. \
If you don't know the answer, say that you don't know. \
Keep the answer concise and include code examples when relevant.

{context}"""

qa_prompt = ChatPromptTemplate.from_messages([
    ("system", qa_system_prompt),
    MessagesPlaceholder("chat_history"),
    ("human", "{input}"),
])
question_answer_chain = create_stuff_documents_chain(llm, qa_prompt)

# Combine into retrieval chain
rag_chain = create_retrieval_chain(history_aware_retriever, question_answer_chain)

# Chat loop with memory
chat_history = []


def ask_question(question):
    """Answer a question and append the exchange to chat_history."""
    response = rag_chain.invoke({
        "input": question,
        "chat_history": chat_history,
    })
    # Update history
    chat_history.append(HumanMessage(content=question))
    chat_history.append(AIMessage(content=response["answer"]))
    return response["answer"], response.get("context", [])


# Test the chatbot
answer, sources = ask_question("How do I create a Worker?")
print(f"Answer: {answer}\n")
print(f"Sources: {[doc.metadata['url'] for doc in sources]}")

# Follow-up question (uses memory)
answer, sources = ask_question("What languages can I use?")
print(f"\nFollow-up Answer: {answer}")
```

Example output

```text
Answer: To create a Cloudflare Worker, you can use the Wrangler CLI or the Cloudflare dashboard.
With Wrangler, run `wrangler init my-worker` to create a new project, then `wrangler dev` to test
locally, and `wrangler deploy` to publish. Workers are JavaScript/TypeScript functions that run on
Cloudflare's edge network and can intercept HTTP requests.

Sources: ['https://developers.cloudflare.com/workers/get-started/',
'https://developers.cloudflare.com/workers/wrangler/commands/']

Follow-up Answer: Cloudflare Workers primarily support JavaScript and TypeScript. You can also use
any language that compiles to WebAssembly (Wasm), including Rust, C, and C++. The runtime is based
on V8, the same engine that powers Chrome and Node.js.
```

The history_aware_retriever is what makes follow-ups work. If you ask "What about TypeScript?" after asking about Workers, it uses the LLM to rewrite the question into something like "What about TypeScript for Cloudflare Workers?" before searching. The retriever then sees a standalone question instead of a fragment that only makes sense in context.
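The branching behind this is small. With an empty history, create_history_aware_retriever() passes the question straight to the retriever. Otherwise it runs the contextualize prompt through the LLM first. The sketch below mirrors that logic in plain Python. Messages are (role, text) tuples, and fake_rewrite is a hypothetical stand-in for the LLM call.

```python
def build_search_query(question, chat_history, rewrite):
    """Choose the text to send to the retriever.

    No history: use the question as-is. Otherwise delegate to `rewrite`
    (in LangChain, an LLM call with the contextualize prompt).
    """
    if not chat_history:
        return question
    return rewrite(chat_history, question)


def fake_rewrite(history, question):
    """Hypothetical stand-in for the LLM: naively appends the first user question."""
    first_user_turn = history[0][1]
    return f"{question} (context: {first_user_turn})"


history = [("human", "How do I create a Worker?"), ("ai", "Use Wrangler...")]
print(build_search_query("What languages can I use?", [], fake_rewrite))
print(build_search_query("What languages can I use?", history, fake_rewrite))
```

One practical consequence: every follow-up question costs an extra LLM call for the rewrite. The first question in a conversation does not.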

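gpt-4o-mini costs $0.15 per 1M input tokens and $0.60 per 1M output tokens. A typical question with 4 retrieved chunks uses roughly 2000 tokens (input + output), costing about $0.0005 per query. For 1000 queries per month, expect around $0.50 in OpenAI costs. Follow-up questions cost somewhat more, because the history-aware retriever makes an extra LLM call to rewrite the question and the growing chat history is included in each prompt.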
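To check the arithmetic, or redo it with your own token counts, here is a small calculator using the per-token prices quoted above. The 1800 input / 200 output split of the ~2000 tokens is an assumption; answers with long code examples shift more tokens to the pricier output side.

```python
# gpt-4o-mini prices quoted above, in USD per 1M tokens (check current pricing)
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = 0.60


def query_cost(input_tokens, output_tokens):
    """USD cost of one LLM call given its token counts."""
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


# Assumed split of the ~2000-token example: 1800 prompt tokens, 200 answer tokens
per_query = query_cost(1800, 200)
print(f"per query: ${per_query:.5f}, per 1000 queries: ${per_query * 1000:.2f}")
```

Under this split a query costs $0.00039, so the ~$0.0005 figure above is a slightly conservative round-up.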

For more on integrating AI with web scraping workflows, check out using ChatGPT with web scraping.

Build a Streamlit Interface

Streamlit makes it easy to build web interfaces for Python applications. Its built-in chat elements (st.chat_message and st.chat_input) map directly onto a chatbot UI.

Here's the complete Streamlit application:

Complete Streamlit App Code:

```python
import os

import streamlit as st
from dotenv import load_dotenv
from langchain.chains import create_history_aware_retriever, create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain_community.vectorstores import FAISS
from langchain_core.messages import AIMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_huggingface import HuggingFaceEndpointEmbeddings
from langchain_openai import ChatOpenAI

load_dotenv()  # Local development: read API keys from .env

# Page config
st.set_page_config(
    page_title="Documentation Chatbot",
    page_icon="📚",
    layout="wide",
)
st.title("📚 Documentation Chatbot")
st.caption("Ask questions about Cloudflare Workers documentation")

# Initialize session state
if "messages" not in st.session_state:
    st.session_state.messages = []
if "chat_history" not in st.session_state:
    st.session_state.chat_history = []

# Sidebar
with st.sidebar:
    st.header("Settings")
    show_sources = st.checkbox("Show sources", value=True)
    if st.button("Clear Chat"):
        st.session_state.messages = []
        st.session_state.chat_history = []
        st.rerun()
    st.divider()
    st.caption("Powered by Scrapfly + LangChain + Streamlit")


# Load the RAG chain (cached across reruns)
@st.cache_resource
def load_rag_chain():
    # Load vector store
    embeddings = HuggingFaceEndpointEmbeddings(
        model="sentence-transformers/all-MiniLM-L6-v2",
        huggingfacehub_api_token=os.getenv('HUGGINGFACE_API_KEY'),
    )
    vectorstore = FAISS.load_local(
        "cloudflare_docs_vectorstore",
        embeddings,
        allow_dangerous_deserialization=True,
    )
    retriever = vectorstore.as_retriever(search_kwargs={"k": 4})

    # Initialize LLM
    llm = ChatOpenAI(
        model="gpt-4o-mini",
        temperature=0,
        openai_api_key=os.getenv('OPENAI_API_KEY'),
    )

    # Create history-aware retriever
    contextualize_q_prompt = ChatPromptTemplate.from_messages([
        ("system", "Given a chat history and the latest user question, formulate a standalone question."),
        MessagesPlaceholder("chat_history"),
        ("human", "{input}"),
    ])
    history_aware_retriever = create_history_aware_retriever(
        llm, retriever, contextualize_q_prompt
    )

    # Create QA chain
    qa_prompt = ChatPromptTemplate.from_messages([
        ("system", "You are a helpful assistant for Cloudflare Workers documentation. Use the context to answer questions concisely.\n\n{context}"),
        MessagesPlaceholder("chat_history"),
        ("human", "{input}"),
    ])
    question_answer_chain = create_stuff_documents_chain(llm, qa_prompt)
    return create_retrieval_chain(history_aware_retriever, question_answer_chain)


rag_chain = load_rag_chain()

# Display chat messages
for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])
        if show_sources and message.get("sources"):
            with st.expander("Sources"):
                for source in message["sources"]:
                    st.caption(f"- {source}")

# Chat input
if prompt := st.chat_input("Ask a question about the documentation..."):
    # Add user message
    st.session_state.messages.append({"role": "user", "content": prompt})
    with st.chat_message("user"):
        st.markdown(prompt)

    # Get response
    with st.chat_message("assistant"):
        with st.spinner("Thinking..."):
            response = rag_chain.invoke({
                "input": prompt,
                "chat_history": st.session_state.chat_history,
            })
        answer = response["answer"]
        sources = [doc.metadata.get('url', 'Unknown') for doc in response.get("context", [])]
        st.markdown(answer)
        if show_sources and sources:
            with st.expander("Sources"):
                for source in sources:
                    st.caption(f"- {source}")

    # Update history
    st.session_state.chat_history.append(HumanMessage(content=prompt))
    st.session_state.chat_history.append(AIMessage(content=answer))

    # Save to messages
    st.session_state.messages.append({
        "role": "assistant",
        "content": answer,
        "sources": sources,
    })
```

Run the app with:

```bash
$ streamlit run app.py
```

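The interface includes a chat input at the bottom, message history in the main area, and a sidebar with settings. The @st.cache_resource decorator builds the RAG chain once and reuses it across reruns, so the vector store isn't reloaded from disk on every interaction. Each query still calls the HuggingFace and OpenAI APIs, and those calls dominate response time.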

Session state (st.session_state) persists data across reruns. Streamlit reruns the whole script on every interaction, so without session state the chat history would be wiped whenever a user clicks a button or sends a message.

Deploy to Streamlit Cloud

Streamlit Cloud offers free hosting for public apps. It connects directly to your GitHub repository and automatically redeploys when you push changes.

Create a requirements.txt file:

```text
requests
langchain
langchain-community
langchain-huggingface
langchain-openai
faiss-cpu
streamlit
python-dotenv
```

Create a .streamlit/secrets.toml file locally (don't commit this):

```toml
SCRAPFLY_API_KEY = "your_key_here"
HUGGINGFACE_API_KEY = "your_key_here"
OPENAI_API_KEY = "your_key_here"
```

Update your app to read from Streamlit secrets in production:

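```python
import os

import streamlit as st

# Try Streamlit secrets first, fall back to environment variables / .env
try:
    api_key = st.secrets.get("OPENAI_API_KEY", os.getenv("OPENAI_API_KEY"))
except Exception:  # No secrets.toml available (e.g., a local run without one)
    api_key = os.getenv("OPENAI_API_KEY")
```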

Push your code to GitHub, then:

- Go to share.streamlit.io

- Connect your GitHub account

- Select your repository and branch

- Set the main file path (e.g., app.py)

- Add your secrets in the Advanced settings

- Click Deploy

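Free tier limits: the free tier is aimed at public apps and has modest memory limits, so keep the vector store small. Check Streamlit's Community Cloud documentation for the current limits and for options if you need private apps or more resources.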

Advanced Features

Want to improve your chatbot? Here are some ideas:

Incremental updates: Re-scrape documentation periodically and update the vector store without rebuilding from scratch. FAISS supports adding new vectors with vectorstore.add_documents().
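Deciding what to re-embed is mostly bookkeeping. One approach is to diff the old and new crawls, sketched below. It assumes you save a content hash per URL after each crawl. plan_update and that stored mapping are hypothetical, not part of Scrapfly or LangChain.

```python
import hashlib


def content_hash(text):
    """Stable fingerprint of a page's markdown content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_update(old_hashes, new_pages):
    """Compare a previous crawl to a new one.

    old_hashes: {url: hash} saved from the previous run (hypothetical bookkeeping).
    new_pages:  {url: markdown} from the new crawl.
    Returns (urls_to_add, urls_to_replace, urls_to_delete), each sorted.
    """
    new_hashes = {url: content_hash(text) for url, text in new_pages.items()}
    to_add = sorted(new_hashes.keys() - old_hashes.keys())
    to_delete = sorted(old_hashes.keys() - new_hashes.keys())
    to_replace = sorted(
        url for url in new_hashes.keys() & old_hashes.keys()
        if new_hashes[url] != old_hashes[url]
    )
    return to_add, to_replace, to_delete
```

Only added and replaced pages need to be chunked and embedded again before vectorstore.add_documents(). Chunks for replaced and deleted pages must first be removed from the store, for example by passing your own ids to add_documents() and later calling vectorstore.delete() with them.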

Feedback collection: Add thumbs up/down buttons in Streamlit. Store user feedback with questions and answers to identify areas for improvement.

```python
import streamlit as st

col1, col2 = st.columns(2)
with col1:
    if st.button("👍"):
        # Log positive feedback
        pass
with col2:
    if st.button("👎"):
        # Log negative feedback
        pass
```
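To make the buttons do something, the pass statements could call a small logger. A minimal sketch that appends one JSON record per line to a local file; log_feedback, load_feedback, and the JSONL format are assumptions. On a hosted app the local filesystem may not persist across restarts, so a database is a safer home in production.

```python
import json
import time
from pathlib import Path


def log_feedback(path, question, answer, rating):
    """Append one feedback record as a JSON line. rating is 1 (👍) or -1 (👎)."""
    if rating not in (1, -1):
        raise ValueError("rating must be 1 or -1")
    record = {"ts": time.time(), "question": question, "answer": answer, "rating": rating}
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_feedback(path):
    """Read all feedback records back, e.g. to find questions that get many 👎."""
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```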

Multi-source docs: Combine documentation from multiple sites. Tag each chunk with its source during ingestion, then filter results or merge vector stores.
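The tagging and filtering steps can be sketched with plain dictionaries. In this sketch the dicts stand in for chunk metadata and retrieved results, and the hostname serves as the source tag. tag_source, filter_by_source, and docs.example.com are hypothetical.

```python
from urllib.parse import urlparse


def tag_source(chunk_metadata):
    """Return a copy of the metadata with a 'source' tag (the site's hostname)."""
    return {**chunk_metadata, "source": urlparse(chunk_metadata["url"]).netloc}


def filter_by_source(results, allowed_sources):
    """Keep only retrieved chunks whose 'source' tag is in allowed_sources."""
    return [r for r in results if r["source"] in allowed_sources]


meta = tag_source({"url": "https://developers.cloudflare.com/workers/", "title": "Workers"})
print(meta["source"])
```

Tagging at ingestion time (before splitting) means every chunk inherits the tag, so filtering later needs no extra lookups.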

Source attribution: Show which specific page answered each question. This is already in the code above with response.get("context", []).

Cost optimization: Cache responses for common questions. Use gpt-4o-mini for cost-effective queries, or upgrade to gpt-4o for complex questions requiring deeper reasoning. Consider using cheaper embedding models for larger documentation sets.
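For the caching idea, here is a minimal in-memory sketch. AnswerCache and its normalization rules are assumptions. The cache only makes sense for first-turn questions, because follow-up answers depend on the chat history.

```python
import re


class AnswerCache:
    """Tiny in-memory cache for answers to repeated first-turn questions (hypothetical)."""

    def __init__(self):
        self._answers = {}

    @staticmethod
    def normalize(question):
        """Lowercase, strip punctuation, and collapse whitespace."""
        cleaned = re.sub(r"[^\w\s]", "", question.lower())
        return " ".join(cleaned.split())

    def get_or_compute(self, question, compute):
        """Return a cached answer, calling compute(question) only on a miss."""
        key = self.normalize(question)
        if key not in self._answers:
            self._answers[key] = compute(question)
        return self._answers[key]
```

In the Streamlit app, creating the AnswerCache inside an @st.cache_resource function would let it survive reruns.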

FAQ

What makes Scrapfly better for documentation scraping?

Scrapfly's Crawler API handles bot protection (Cloudflare, Akamai), automatically discovers all pages from a starting URL, and outputs clean markdown perfect for LLMs. DIY scrapers fail on protected sites and require manual URL collection.

Can I use this for private documentation?

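Yes. The Crawler API supports authentication via custom headers. Pass your auth token in the headers parameter. The vector store itself lives locally, but the content does leave your infrastructure. Pages pass through Scrapfly during crawling, chunk text is sent to HuggingFace's Inference API for embedding, and retrieved chunks are sent to OpenAI with each question. Factor that into any data-handling requirements.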

How much does it cost to run?

Initial scraping costs:

- Scrapfly Crawler API: Each page = 1 API credit (datacenter proxy baseline)

- With ASP enabled (for Cloudflare-protected sites): Cost varies based on anti-bot measures needed

- Example: 50 pages = 50+ credits depending on site protection level

Ongoing query costs:

- HuggingFace: $0.10/month in free credits (free tier) or $2/month credits with PRO ($9/month)

- OpenAI gpt-4o-mini: ~$0.0005 per query

- For 1000 queries/month: ~$0.50 in LLM costs

Total startup cost for a 50-page documentation site is minimal (under $1), with ongoing costs around $0.50-1/month for typical usage.

How do I handle documentation updates?

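Re-run the Crawler API on a schedule (daily or weekly). Load your existing vector store and add new chunks with vectorstore.add_documents(). LangChain's FAISS wrapper deletes by document ID rather than by metadata filter. To remove outdated chunks, keep track of the IDs you assigned to each page's chunks and pass them to vectorstore.delete().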

Can I add authentication-protected docs?

Yes. Pass authentication headers to the Crawler API. For session-based auth, use Scrapfly's session parameter to maintain cookies across pages.

How accurate are the answers?

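RAG accuracy depends on retrieval quality and LLM capabilities. With good chunking (1000 chars) and 4 retrieved chunks, accuracy is often in the 85-90% range for factual questions, though it varies with the documentation and the kinds of questions asked. The system prompt tells the chatbot to say "I don't know" when the docs don't contain the answer, but models don't always comply, so spot-check answers against the cited sources.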
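You can measure retrieval on your own docs instead of relying on a general figure. A simple metric is hit rate: for a handful of hand-written questions, check whether the page that should answer each one shows up among the top-k results. This measures retrieval only, not whether the final answer is correct. hit_rate and the test-case format are hypothetical.

```python
def hit_rate(test_cases, retrieve, k=4):
    """Fraction of questions whose expected page URL appears in the top-k results.

    test_cases: list of (question, expected_url) pairs you write by hand.
    retrieve:   callable(question, k) -> list of retrieved URLs.
    """
    if not test_cases:
        raise ValueError("need at least one test case")
    hits = sum(1 for question, expected in test_cases if expected in retrieve(question, k))
    return hits / len(test_cases)


# Possible wrapper around the retriever from the chatbot code (assumed to be in scope):
# def retrieve_urls(question, k):
#     return [doc.metadata["url"] for doc in retriever.invoke(question)[:k]]
```

A low hit rate points to chunking or embedding problems. A high hit rate combined with wrong answers points to the prompt or the model.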

Summary

You now have a fully functional documentation chatbot that works on any website, including Cloudflare-protected sites. The Scrapfly Crawler API handles scraping, LangChain manages the RAG pipeline with HuggingFace embeddings, and Streamlit provides the interface.

This approach works for any documentation site. Try it on your company's internal docs, your favorite open source project, or any technical documentation that needs better search.

The key advantage: this solution works on protected sites where traditional scrapers fail. No more blocked requests or manual URL collection.