Scrape API for pages, Crawler API for domains. Here's how to choose.

Building AI applications or collecting training data? You'll eventually face this question: should I scrape individual pages or crawl entire domains? Your use case determines the answer, and picking the wrong tool wastes both time and money.

This guide compares Scrapfly's Scrape API and Crawler API so you can make the right choice for your AI and data collection projects.

Key Takeaways

Master the differences between Scrape API and Crawler API for AI data collection, RAG applications, and large-scale web scraping projects.

- Use Scrape API for targeted single-page data extraction with full control over each request

- Use Crawler API for domain-wide discovery and automated multi-page data collection

- Configure anti-bot protection differently for each: per-request ASP settings for Scrape API, crawl-wide ASP plus polite crawling for Crawler API

- Optimize RAG data collection with markdown and clean HTML formats from both APIs

- Combine both APIs for hybrid workflows: discovery with Crawler, detail extraction with Scrape API

- Integrate Extraction API with either for LLM-powered structured data extraction

Quick Decision Guide

Before diving into the details, here's a quick guide to help you decide which API to use:

Use Scrape API when:

- Extracting data from specific, known URLs

- You need full control over each HTTP request

- Scraping product pages, articles, or search results

- Building real-time data APIs or monitoring systems

- Working with complex JavaScript-heavy single-page applications

- You need screenshots or custom JavaScript execution

Use Crawler API when:

- Crawling entire websites or documentation sites

- You need automatic URL discovery and link following

- Building comprehensive datasets from unknown site structures

- Collecting data for AI training or RAG applications at scale

- You want industry-standard outputs (WARC/HAR files)

- You want real-time webhook notifications as pages are scraped
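The checklist above can be condensed into a small helper. This is only an illustrative sketch: `Job` and `choose_api` are hypothetical names, not part of the Scrapfly SDK, and the rules simply mirror the bullets.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical description of a data collection task."""
    urls_known: bool                          # you already have the exact URLs
    needs_discovery: bool                     # links must be followed to find pages
    needs_per_request_control: bool = False   # screenshots, custom JS, sessions


def choose_api(job: Job) -> str:
    """Return 'scrape', 'crawler', or 'both' following the checklist above."""
    if job.needs_discovery and job.needs_per_request_control:
        # Discover with the crawler, then re-fetch selected pages individually.
        return "both"
    if job.needs_discovery or not job.urls_known:
        return "crawler"
    return "scrape"


print(choose_api(Job(urls_known=True, needs_discovery=False)))
print(choose_api(Job(urls_known=False, needs_discovery=True)))
```

Real projects rarely reduce to three booleans, but writing the rule down makes the trade-off explicit when a team has to pick a default.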

What is Scrape API?

Scrapfly's Scrape API focuses on single-page scraping with precise data extraction from individual URLs. Use it when you know exactly which pages to scrape and want full control over each request.

Core Features:

This API handles modern web scraping challenges on autopilot. Anti Scraping Protection (ASP) manages anti-bot systems, JavaScript rendering works with dynamic content, and you get responses in multiple formats: JSON, markdown, text, or raw HTML.

Key Capabilities:

- Smart defaults that prevent basic blocking with pre-configured headers and user-agents

- JavaScript rendering for single-page applications and dynamic content

- Screenshot capture for full pages or specific elements

- Session persistence to maintain cookies and IP consistency across requests

- Custom JavaScript execution for complex interactions

- Geolocation spoofing to access region-specific content

- Request caching with configurable time-to-live (TTL)

Ideal Use Cases:

Price monitoring, competitive intelligence, e-commerce product scraping, real-time data extraction, and AI training data from specific sources.

Want to scrape a single blog post for RAG? Here's how:

from scrapfly import ScrapflyClient, ScrapeConfig

# Initialize the client

scrapfly = ScrapflyClient(key="YOUR_SCRAPFLY_KEY")

# Scrape a single article

result = scrapfly.scrape(ScrapeConfig(

url="https://example.com/blog/article",

# Return markdown format - ideal for RAG

format="markdown",

# Enable anti-scraping protection

asp=True,

# Use US proxies

country="US"

))

# Get the markdown content

markdown_content = result.scrape_result['content']

print(f"Scraped {len(markdown_content)} characters")

See how easy it is to extract clean, formatted content ready for LLMs or RAG systems.

What is Crawler API?

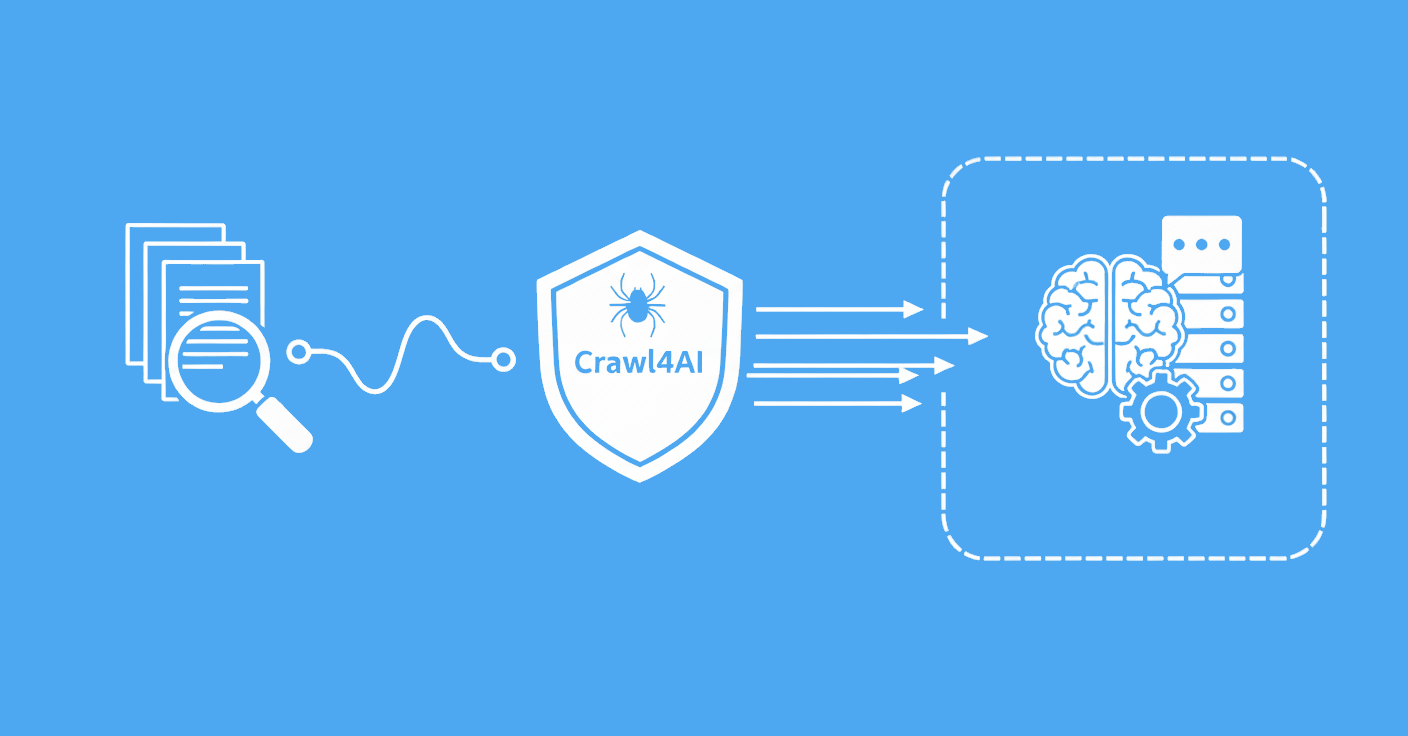

Scrapfly's Crawler API tackles domain-wide crawling by discovering and visiting multiple pages across websites. You give it a starting point, and it finds and extracts data from the entire site without you listing individual URLs.

Core Features:

Automatic URL discovery works through link following and sitemap integration. Content gets extracted in multiple formats at once, and you receive industry-standard artifacts like WARC and HAR files.

Key Capabilities:

- Automatic URL discovery by following links from seed URLs

- Configurable depth limits to control crawl scope

- Multiple content formats extracted simultaneously: HTML, clean HTML, markdown, text, JSON

- Extraction rules using prompts, models, or custom templates

- Webhooks for real-time event notifications as pages are crawled

- Polite crawling with configurable delays and concurrency

- Sitemap.xml integration for efficient site mapping

- Deduplication to avoid processing the same URL twice

Ideal Use Cases:

Batch website archiving, competitive intelligence across entire sites, SEO analysis, data migration from old systems, and building AI training datasets from complete sources.

Let's crawl an entire documentation site:

from scrapfly import ScrapflyClient, CrawlConfig

# Initialize the client

scrapfly = ScrapflyClient(key="YOUR_SCRAPFLY_KEY")

# Start a crawl job

crawl_result = scrapfly.crawl(CrawlConfig(

url="https://docs.example.com",

# Limit to 100 pages

page_limit=100,

# Crawl up to 3 levels deep

max_depth=3,

# Get both markdown and clean HTML

content_formats=["markdown", "clean_html"],

# Enable anti-scraping protection

asp=True

))

# Get the crawl job UUID

crawl_uuid = crawl_result.crawl_uuid

print(f"Crawl started: {crawl_uuid}")

# Poll for status and retrieve results

status = scrapfly.get_crawl_status(crawl_uuid)

print(f"Status: {status.status}, Pages: {status.pages_scraped}")

With just a few lines of code, you can start a crawl of an entire documentation site and check on its progress.

Feature Comparison Table

Below is a detailed comparison showing the key differences:

| Feature | Scrape API | Crawler API |

|---|---|---|

| Use Case | Single-page data extraction | Domain-wide data collection |

| URL Specification | Explicit URLs required | Automatic discovery from seed URL |

| Scale | One page per request | Hundreds to thousands of pages per job |

| Output Formats | JSON, markdown, text, raw HTML | HTML, clean_html, markdown, text, JSON, extracted_data |

| Artifacts | Individual responses | WARC, HAR files |

| JavaScript Rendering | Yes, per request | Yes, across all pages |

| Anti-Bot (ASP) | Per-request configuration | Applied to all crawled pages |

| Extraction Rules | Use Extraction API separately | Built-in with prompt/model/template support |

| Webhooks | Not applicable | Real-time notifications per page |

| Session Management | Full control with session parameter | Automatic across crawl |

| Cost Model | Per request (varies by features) | Sum of all page requests in crawl |

| Best for AI/RAG | Targeted content extraction | Comprehensive knowledge base building |

| Rate Limiting | You control request timing | Automatic with configurable delays |

| Deduplication | Manual | Automatic |

Real-World Example: Single Page Scraping for RAG

Let's collect training data for a RAG (Retrieval-Augmented Generation) application using the Scrape API. We'll scrape blog posts and convert them to a clean format for LLM use.

from scrapfly import ScrapflyClient, ScrapeConfig

def scrape_blog_post_for_rag(url: str) -> dict:
    """
    Scrape a blog post and prepare it for RAG ingestion.
    Returns clean markdown with metadata.
    """
    scrapfly = ScrapflyClient(key="YOUR_SCRAPFLY_KEY")

    # Scrape with optimal settings for RAG
    result = scrapfly.scrape(ScrapeConfig(
        url=url,
        # Markdown is ideal for LLM consumption
        format="markdown",
        # Enable anti-scraping protection
        asp=True,
        # Use residential proxies for better success rate
        proxy_pool="public_residential_pool",
        # Select proxy country
        country="US",
        # Enable JavaScript rendering if needed
        render_js=True,
        # Cache results to reduce costs
        cache=True,
        cache_ttl=3600  # 1 hour cache
    ))

    # Extract content and metadata
    content = result.scrape_result['content']

    # The content is markdown here, so an HTML <title> lookup would find
    # nothing. Use the first level-1 heading as the title instead and fall
    # back to the URL when the page has none.
    title = next(
        (line[2:].strip() for line in content.splitlines() if line.startswith("# ")),
        url,
    )

    return {
        'url': url,
        'title': title,
        'content': content,
        'format': 'markdown',
        'scraped_at': result.scrape_result.get('scrape_timestamp')
    }

# Example usage
blog_urls = [
    "https://example.com/blog/ai-trends-2025",
    "https://example.com/blog/machine-learning-basics",
    "https://example.com/blog/rag-applications"
]

# Scrape all blog posts
training_data = []
for url in blog_urls:
    try:
        data = scrape_blog_post_for_rag(url)
        training_data.append(data)
        print(f"Scraped: {data['title']}")
    except Exception as e:
        print(f"Error scraping {url}: {e}")

print(f"Collected {len(training_data)} documents for RAG")

Anti-Bot Configuration for Single Pages:

For the Scrape API, anti-bot configuration is applied per request:

# Maximum protection for difficult sites

result = scrapfly.scrape(ScrapeConfig(

url=url,

# Enable Anti Scraping Protection

asp=True,

# Use residential proxies (more expensive but better)

proxy_pool="public_residential_pool",

# Render JavaScript

render_js=True,

# Wait for specific element to load

wait_for_selector=".article-content",

# Add custom headers if needed

headers={

"Accept-Language": "en-US,en;q=0.9"

}

))

Real-World Example: Documentation Crawling for RAG

Building a complete knowledge base? The Crawler API can crawl an entire documentation site, which works great for RAG systems that answer questions about products or services.

from scrapfly import ScrapflyClient, CrawlConfig

import time

def crawl_documentation_for_rag(start_url: str) -> dict:
    """
    Crawl an entire documentation site for RAG.
    Returns WARC file path and crawl statistics.
    """
    scrapfly = ScrapflyClient(key="YOUR_SCRAPFLY_KEY")

    # Start the crawl
    crawl_result = scrapfly.crawl(CrawlConfig(
        url=start_url,
        # Limit pages to control costs
        page_limit=500,
        # Crawl 5 levels deep
        max_depth=5,
        # Get multiple formats for flexibility
        content_formats=["markdown", "clean_html", "text"],
        # Enable anti-scraping protection
        asp=True,
        # Use datacenter proxies (cheaper for documentation)
        proxy_pool="public_datacenter_pool",
        # Set concurrency
        concurrent_requests=3,
        # Enable polite crawling
        delay_between_requests=1000,  # 1 second delay
        # Add extraction rules for structured data
        extraction_rules=[{
            "type": "prompt",
            "selector": "main",  # Target main content
            "prompt": "Extract the title, description, and main content of this documentation page",
            "url_pattern": "/docs/*"  # Apply to all docs pages
        }]
    ))

    crawl_uuid = crawl_result.crawl_uuid
    print(f"Crawl started: {crawl_uuid}")

    # Poll for completion
    while True:
        status = scrapfly.get_crawl_status(crawl_uuid)
        print(f"Status: {status.status}, Pages: {status.pages_scraped}/{status.page_limit}")
        if status.status in ['completed', 'failed']:
            break
        time.sleep(10)  # Check every 10 seconds

    # Download the WARC artifact (contains all pages)
    if status.status == 'completed':
        warc_path = f"crawl_{crawl_uuid}.warc.gz"
        scrapfly.download_artifact(crawl_uuid, "warc", warc_path)
        return {
            'crawl_uuid': crawl_uuid,
            'pages_scraped': status.pages_scraped,
            'warc_path': warc_path,
            'status': status.status
        }

    return {'status': 'failed', 'crawl_uuid': crawl_uuid}

# Example usage
result = crawl_documentation_for_rag("https://docs.scrapfly.io")
if result['status'] == 'completed':
    print(f"Crawl completed: {result['pages_scraped']} pages")
    print(f"WARC file saved to: {result['warc_path']}")
else:
    # A failed crawl returns no 'pages_scraped' or 'warc_path' keys
    print(f"Crawl {result['crawl_uuid']} failed")
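The `while True` loop above never gives up if a crawl stalls. A minimal sketch of a polling helper with a deadline follows. `wait_for_crawl` and its parameters are hypothetical; it accepts any zero-argument callable that returns a status string, so in practice you might pass something like `lambda: scrapfly.get_crawl_status(crawl_uuid).status`, built from the calls shown above.

```python
import time
from typing import Callable


def wait_for_crawl(
    get_status: Callable[[], str],
    timeout_s: float = 3600.0,
    interval_s: float = 10.0,
    terminal: tuple = ("completed", "failed"),
) -> str:
    """Poll get_status() until it returns a terminal state or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        status = get_status()
        if status in terminal:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"crawl still '{status}' after {timeout_s} seconds")
        time.sleep(interval_s)


# Demo with a fake status source instead of a real crawl.
fake_states = iter(["running", "running", "completed"])
print(wait_for_crawl(lambda: next(fake_states), timeout_s=5, interval_s=0.01))
```

Using `time.monotonic()` rather than wall-clock time keeps the deadline stable if the system clock is adjusted mid-crawl.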

Retrieving Individual Pages:

You can also retrieve individual crawled pages in batch:

# Get specific pages from the crawl

urls_to_retrieve = [

"https://docs.scrapfly.io/scrape-api/getting-started",

"https://docs.scrapfly.io/crawler-api/getting-started",

"https://docs.scrapfly.io/extraction-api/getting-started"

]

# Batch retrieve content

batch_result = scrapfly.get_crawl_contents_batch(

crawl_uuid=crawl_uuid,

urls=urls_to_retrieve,

format="markdown"

)

for url, content in batch_result.items():
    print(f"Retrieved {len(content)} chars from {url}")

Anti-Bot Configuration for Crawling:

For the Crawler API, anti-bot settings apply to all pages in the crawl:

# Optimal configuration for large documentation crawls

crawl_result = scrapfly.crawl(CrawlConfig(

url=start_url,

# Enable ASP for all pages

asp=True,

# Datacenter proxies are usually sufficient for docs

proxy_pool="public_datacenter_pool",

# Respect robots.txt

respect_robots=True,

# Polite crawling settings

concurrent_requests=2,

delay_between_requests=2000, # 2 seconds

# Timeout per page

page_timeout=30000, # 30 seconds

# Maximum crawl duration

max_duration=10800 # 3 hours max

))
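Concurrency, delay, and `max_duration` interact: a polite configuration may not finish a large crawl within the time cap. The back-of-the-envelope estimate below assumes each concurrent worker fetches one page and then waits `delay_between_requests` before the next. That model and the 2-second average fetch time are assumptions, not documented Crawler API behavior.

```python
import math


def estimate_crawl_seconds(pages, concurrent_requests, delay_ms, avg_fetch_s=2.0):
    """Rough crawl duration: pages are split across workers, each paying fetch + delay."""
    rounds = math.ceil(pages / concurrent_requests)
    return rounds * (avg_fetch_s + delay_ms / 1000)


# Settings from the configuration above: 2 workers, 2-second delay, 3-hour cap.
max_duration = 10800
for pages in (500, 5000, 20000):
    seconds = estimate_crawl_seconds(pages, concurrent_requests=2, delay_ms=2000)
    verdict = "fits" if seconds <= max_duration else "exceeds cap"
    print(f"{pages} pages: ~{seconds / 60:.0f} min ({verdict})")
```

If the estimate exceeds the cap, raise concurrency, shorten the delay, or lower `page_limit` before starting the job.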

RAG-Specific Recommendations

Collecting data for RAG applications? Format selection and data quality matter. Here are best practices for both APIs:

Content Format Selection

For Scrape API:

- Markdown: Best for blog posts, articles, and documentation. Clean, structured, and LLM-friendly.

- Clean HTML: When you need to preserve some HTML structure while removing scripts and styles.

- Text: For simple text extraction without any formatting.

For Crawler API:

- Get both formats with `["markdown", "clean_html"]` and pick later.

- Markdown works best for most RAG use cases since it keeps structure without HTML bloat.

- Use clean HTML when you need custom parsing logic.
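Markdown's heading structure also makes chunking for a vector store straightforward. As a minimal sketch (the `chunk_markdown` helper and the 1,000-character limit are illustrative choices, not part of either API), the function below splits scraped markdown at headings and further splits oversized sections on paragraph boundaries.

```python
import re


def chunk_markdown(markdown: str, max_chars: int = 1000) -> list[str]:
    """Split markdown into chunks at headings, then by paragraphs if too long."""
    # Split before every line that starts with 1-6 '#' characters and a space.
    sections = re.split(r"(?m)^(?=#{1,6} )", markdown)
    chunks = []
    for section in sections:
        section = section.strip()
        if not section:
            continue
        if len(section) <= max_chars:
            chunks.append(section)
            continue
        # Oversized section: pack paragraphs greedily up to max_chars.
        current = ""
        for paragraph in section.split("\n\n"):
            candidate = f"{current}\n\n{paragraph}" if current else paragraph
            if len(candidate) > max_chars and current:
                chunks.append(current)
                current = paragraph
            else:
                current = candidate
        if current:
            chunks.append(current)
    return chunks


doc = "# Title\n\nIntro text.\n\n## Setup\n\nInstall it.\n\n## Usage\n\nRun it."
for chunk in chunk_markdown(doc):
    print(repr(chunk))
```

Two caveats: a single paragraph longer than `max_chars` is kept whole, and `#` comment lines inside code samples are treated as headings, so documentation with many code blocks may need a fence-aware splitter.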

Handling Large Documentation Sites

# Strategy 1: Crawl in chunks using depth limits
for depth in range(1, 6):
    crawl_result = scrapfly.crawl(CrawlConfig(
        url="https://docs.example.com",
        max_depth=depth,
        page_limit=100
    ))
    # Process each depth level separately

# Strategy 2: Use URL patterns to focus on specific sections

crawl_result = scrapfly.crawl(CrawlConfig(

url="https://docs.example.com",

# Only crawl documentation pages

url_pattern="/docs/*",

# Exclude API reference

exclude_url_pattern="/api-reference/*"

))
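Before spending credits, it can help to preview which URLs an include/exclude pair would keep. The sketch below approximates the patterns with Python's `fnmatchcase` globbing on the URL path. How the Crawler API actually evaluates `url_pattern` is not specified in this article, so treat the matching rules, and the hypothetical `url_allowed` helper, as assumptions.

```python
from fnmatch import fnmatchcase
from urllib.parse import urlparse


def url_allowed(url, include="/docs/*", exclude="/api-reference/*"):
    """Return True if the URL path matches include and does not match exclude."""
    path = urlparse(url).path
    return fnmatchcase(path, include) and not fnmatchcase(path, exclude)


sample_urls = [
    "https://docs.example.com/docs/getting-started",
    "https://docs.example.com/api-reference/scrape",
    "https://docs.example.com/blog/release-notes",
]
for url in sample_urls:
    print(url, "->", "crawl" if url_allowed(url) else "skip")
```

Running a list of known URLs through a check like this also reveals redundant rules: under this glob model, a path that matches `/docs/*` can never match `/api-reference/*`, so the exclude only matters when the two patterns overlap.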

Deduplication and Quality Control

While the Crawler API handles deduplication on its own, you should add extra filtering:

def filter_quality_content(content: str) -> bool:
    """Filter out low-quality pages."""
    # Minimum content length
    if len(content) < 500:
        return False

    # Check for error pages
    error_indicators = ['404', 'page not found', 'access denied']
    if any(indicator in content.lower() for indicator in error_indicators):
        return False

    return True

# Apply filtering to crawled content
quality_documents = [
    doc for doc in all_documents
    if filter_quality_content(doc['content'])
]
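The Crawler API's built-in deduplication works on URLs, so the same text served under two different URLs (print views, tracking parameters) can still land in your dataset twice. One possible refinement is to deduplicate on a content fingerprint. The helpers `content_fingerprint` and `dedupe_documents` below are hypothetical and assume each document is a dict with a 'content' key, as in the filter above.

```python
import hashlib
import re


def content_fingerprint(content: str) -> str:
    """Hash of the text with case and whitespace differences removed."""
    normalized = re.sub(r"\s+", " ", content).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def dedupe_documents(documents: list[dict]) -> list[dict]:
    """Keep the first document for each distinct content fingerprint."""
    seen = set()
    unique = []
    for doc in documents:
        fingerprint = content_fingerprint(doc["content"])
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(doc)
    return unique


docs = [
    {"url": "https://example.com/a", "content": "Hello   World"},
    {"url": "https://example.com/a?ref=nav", "content": "hello world\n"},
    {"url": "https://example.com/b", "content": "Something else"},
]
print([d["url"] for d in dedupe_documents(docs)])
```

Exact hashing only catches copies that are identical after normalization; near-duplicates with small template differences need a similarity measure instead.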

Cost Optimization Strategies

For Scrape API:

- Turn on caching for pages you access often: `cache=True, cache_ttl=3600`

- Stick with datacenter proxies when possible (cheaper than residential)

- Only use JavaScript rendering when you really need it

For Crawler API:

- Set smart `page_limit` values to prevent costs from spiraling

- Cap crawl time with `max_duration`

- Begin with datacenter proxies, switch to residential only when blocked

- Use extraction rules to skip expensive post-processing

# Cost-optimized crawl configuration

crawl_result = scrapfly.crawl(CrawlConfig(

url=start_url,

page_limit=200, # Cap at 200 pages

max_duration=3600, # 1 hour max

proxy_pool="public_datacenter_pool", # Cheaper

concurrent_requests=5, # Faster completion

content_formats=["markdown"], # Only what you need

# Use caching at API level

cache=True

))

Anti-Bot Configuration Comparison

Both APIs provide strong anti-bot protection, but you configure them differently depending on what you need.

Scrape API Anti-Bot Features

With the Scrape API, you control anti-bot settings for every single request:

ASP (Anti Scraping Protection) Levels:

# Basic protection (included in base price)

result = scrapfly.scrape(ScrapeConfig(

url=url,

asp=False # Just smart defaults

))

# Advanced protection (bypasses most anti-bot systems)

result = scrapfly.scrape(ScrapeConfig(

url=url,

asp=True, # Enables full ASP

render_js=True # Real browser rendering

))

Proxy Pool Selection:

# Datacenter proxies (faster, cheaper)

result = scrapfly.scrape(ScrapeConfig(

url=url,

proxy_pool="public_datacenter_pool",

country="US"

))

# Residential proxies (better success rate)

result = scrapfly.scrape(ScrapeConfig(

url=url,

proxy_pool="public_residential_pool",

country="US"

))

Session Management:

# Maintain session across multiple requests

session_id = "my_session_123"

# First request creates session

result1 = scrapfly.scrape(ScrapeConfig(

url="https://example.com/login",

session=session_id

))

# Subsequent requests reuse cookies and IP

result2 = scrapfly.scrape(ScrapeConfig(

url="https://example.com/dashboard",

session=session_id

))

Crawler API Anti-Bot Features

When you're crawling at scale, the Crawler API applies anti-bot protection across all pages:

Polite Crawling:

crawl_result = scrapfly.crawl(CrawlConfig(

url=start_url,

# Control request rate

concurrent_requests=3,

delay_between_requests=1000, # 1 second

# Respect site policies

respect_robots=True

))

Proxy Management:

# Apply proxy settings to entire crawl

crawl_result = scrapfly.crawl(CrawlConfig(

url=start_url,

asp=True,

proxy_pool="public_residential_pool",

country="US",

# Rotate proxies automatically

auto_rotate_proxies=True

))

When to Use Residential vs Datacenter Proxies:

| Proxy Type | Use With | Cost | Success Rate | Speed |

|---|---|---|---|---|

| Datacenter | Documentation sites, public APIs, low-protection sites | Lower | Good (85-90%) | Faster |

| Residential | E-commerce, social media, high-protection sites | Higher | Excellent (95-99%) | Slower |

Best Practices:

# Start with datacenter, fall back to residential
try:
    result = scrapfly.scrape(ScrapeConfig(
        url=url,
        proxy_pool="public_datacenter_pool",
        asp=True
    ))
except Exception:
    # If blocked, retry with residential
    result = scrapfly.scrape(ScrapeConfig(
        url=url,
        proxy_pool="public_residential_pool",
        asp=True,
        render_js=True  # Add browser rendering
    ))
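The same fallback idea generalizes to an ordered list of increasingly expensive configurations. The sketch below is deliberately SDK-agnostic: `scrape_with_escalation` is a hypothetical helper that takes any `fetch(url, **options)` callable, so the demo uses a fake fetcher rather than a real Scrapfly call.

```python
from typing import Any, Callable

# Ordered from cheapest to most expensive; option names mirror the examples above.
ESCALATION_STEPS = [
    {"proxy_pool": "public_datacenter_pool", "asp": True},
    {"proxy_pool": "public_residential_pool", "asp": True},
    {"proxy_pool": "public_residential_pool", "asp": True, "render_js": True},
]


def scrape_with_escalation(url: str, fetch: Callable[..., Any], steps=ESCALATION_STEPS):
    """Try each option set in order; return (result, options) for the first success."""
    last_error = None
    for options in steps:
        try:
            return fetch(url, **options), options
        except Exception as error:  # narrow this to the SDK's error types in real code
            last_error = error
    raise RuntimeError(f"all {len(steps)} attempts failed for {url}") from last_error


def fake_fetch(url, **options):
    """Stand-in fetcher that only succeeds through the residential pool."""
    if options["proxy_pool"] != "public_residential_pool":
        raise ConnectionError("blocked")
    return f"<html>{url}</html>"


result, used = scrape_with_escalation("https://example.com", fake_fetch)
print(used["proxy_pool"])
```

Returning the options that worked lets you record which tier each site needs and start there next time instead of paying for failed cheaper attempts.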

Combining Both APIs

Some workflows work better when you use both APIs together. Here are popular patterns:

Pattern 1: Crawler for Discovery + Scrape API for Detail

Let the Crawler API find all product URLs, then extract detailed data with the Scrape API:

from scrapfly import ScrapflyClient, CrawlConfig, ScrapeConfig

scrapfly = ScrapflyClient(key="YOUR_SCRAPFLY_KEY")

# Step 1: Crawl to discover all product URLs

crawl_result = scrapfly.crawl(CrawlConfig(

url="https://example.com/products",

# Only get URLs, not full content

content_formats=[],

url_pattern="/product/*",

page_limit=1000

))

# Step 2: Get all discovered URLs

product_urls = scrapfly.get_crawl_urls(crawl_result.crawl_uuid)

# Step 3: Scrape each product with full control

products = []

for url in product_urls[:100]:  # Process first 100
    result = scrapfly.scrape(ScrapeConfig(
        url=url,
        format="markdown",
        asp=True,
        render_js=True,
        # Add extraction for structured data
        extraction_config={
            "template": "product"
        }
    ))
    products.append(result.scrape_result)

print(f"Scraped {len(products)} products")

Pattern 2: Scrape API for Monitoring + Crawler for Baselines

Monitor specific pages in real-time with the Scrape API, and grab periodic full-site snapshots with the Crawler API:

# Daily monitoring of specific pages (Scrape API)
def monitor_key_pages():
    key_urls = [
        "https://competitor.com/pricing",
        "https://competitor.com/features",
        "https://competitor.com/about"
    ]

    for url in key_urls:
        result = scrapfly.scrape(ScrapeConfig(
            url=url,
            asp=True,
            cache=False  # Always fresh data
        ))
        # Store and compare with previous version
        check_for_changes(url, result.scrape_result['content'])

# Weekly full-site crawl (Crawler API)
def weekly_site_snapshot():
    crawl_result = scrapfly.crawl(CrawlConfig(
        url="https://competitor.com",
        page_limit=500,
        content_formats=["markdown"],
        asp=True
    ))
    # Archive the full WARC file once the crawl has completed
    warc_path = f"snapshot_{crawl_result.crawl_uuid}.warc.gz"
    scrapfly.download_artifact(crawl_result.crawl_uuid, "warc", warc_path)
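The monitoring function above calls `check_for_changes`, which is left undefined. One minimal way to implement it, assuming an in-memory dict as the store (a real deployment would persist hashes to a file or database), is to compare content hashes between runs:

```python
import hashlib

# Maps URL -> SHA-256 hex digest of the last content seen (in-memory for the sketch).
_last_seen: dict[str, str] = {}


def check_for_changes(url: str, content: str) -> bool:
    """Return True if content differs from the previous call for this URL."""
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    previous = _last_seen.get(url)
    _last_seen[url] = digest
    if previous is None:
        return False  # first observation: nothing to compare against
    return previous != digest


print(check_for_changes("https://competitor.com/pricing", "Pro plan: $49"))  # False
print(check_for_changes("https://competitor.com/pricing", "Pro plan: $49"))  # False
print(check_for_changes("https://competitor.com/pricing", "Pro plan: $59"))  # True
```

Pages that embed timestamps or per-visit tokens will look changed on every run, so hashing a cleaned format such as markdown tends to produce fewer false alarms than hashing raw HTML.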

Pattern 3: Hybrid RAG Data Collection

Build a complete knowledge base by mixing both APIs:

def build_comprehensive_rag_dataset(domains: list):
    """
    Build RAG dataset using both APIs optimally.
    """
    all_documents = []

    for domain in domains:
        # Use Crawler for bulk collection
        crawl_result = scrapfly.crawl(CrawlConfig(
            url=domain,
            page_limit=200,
            content_formats=["markdown"],
            asp=True
        ))

        # Get all URLs from crawl
        urls = scrapfly.get_crawl_urls(crawl_result.crawl_uuid)

        # Use Scrape API for high-value pages needing special handling
        priority_urls = [url for url in urls if '/blog/' in url or '/guide/' in url]

        for url in priority_urls:
            result = scrapfly.scrape(ScrapeConfig(
                url=url,
                format="markdown",
                asp=True,
                render_js=True,  # Extra care for important content
                # Extract metadata
                extraction_config={
                    "type": "prompt",
                    "prompt": "Extract title, author, publish date, and main content"
                }
            ))
            all_documents.append(result)

    return all_documents

Extraction API Integration

You can boost both Scrape API and Crawler API with the Extraction API for structured data extraction using LLMs.

With Scrape API

Extract structured data from individual pages:

result = scrapfly.scrape(ScrapeConfig(

url="https://example.com/product/123",

asp=True,

# Add LLM-based extraction

extraction_config={

"type": "prompt",

"prompt": """Extract the following from this product page:

- Product name

- Price

- Description

- Specifications

- Customer reviews summary

Return as JSON."""

}

))

# Get both raw content and extracted data

raw_content = result.scrape_result['content']

extracted_data = result.scrape_result['extracted_data']

With Crawler API

Apply extraction rules across all crawled pages:

crawl_result = scrapfly.crawl(CrawlConfig(

url="https://docs.example.com",

page_limit=100,

content_formats=["markdown"],

# Define extraction rules for different page types

extraction_rules=[

{

"type": "prompt",

"url_pattern": "/docs/*",

"prompt": "Extract the documentation title, section, and code examples"

},

{

"type": "model",

"url_pattern": "/api/*",

"model": "article" # Use pre-trained article model

}

]

))

Use Cases for Extraction API

RAG Knowledge Graph Building:

# Extract structured data for knowledge graph

result = scrapfly.scrape(ScrapeConfig(

url=article_url,

extraction_config={

"type": "prompt",

"prompt": """Extract:

- Main entities mentioned (people, companies, products)

- Relationships between entities

- Key facts and dates

Return as structured JSON for knowledge graph ingestion."""

}

))

# Build knowledge graph from extracted data

add_to_knowledge_graph(result.scrape_result['extracted_data'])

Cost Considerations:

All extraction methods cost 5 API credits per request, regardless of whether you use:

- LLM prompts (most flexible)

- Pre-trained models (fastest)

- Custom templates (most precise)

For large crawls, consider extracting only from high-value pages to control costs.
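To see what selective extraction saves, multiply the pages you extract from by the 5-credit extraction cost stated above. The sketch below does that arithmetic. It assumes extraction is billed on top of the page request, and `base_credits_per_page` is a placeholder (the real per-page cost depends on ASP, rendering, and proxy choices), so substitute your own figures.

```python
EXTRACTION_CREDITS = 5  # per extraction request, as stated above


def estimate_crawl_credits(pages, extracted_pages, base_credits_per_page=1):
    """Total credits: every page pays the base cost, extracted pages pay 5 more."""
    if extracted_pages > pages:
        raise ValueError("extracted_pages cannot exceed pages")
    return pages * base_credits_per_page + extracted_pages * EXTRACTION_CREDITS


everything = estimate_crawl_credits(pages=1000, extracted_pages=1000)
selective = estimate_crawl_credits(pages=1000, extracted_pages=150)
print(f"extract all: {everything} credits, extract 150 pages: {selective} credits")
```

With these placeholder numbers, limiting extraction to 150 high-value pages cuts the total from 6,000 to 1,750 credits.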

Power Up with Scrapfly

Scrape API and Crawler API solve different web scraping problems. Need targeted data extraction? Want to crawl entire domains? Either way, Scrapfly handles the messy parts of modern web scraping.

Scrapfly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

FAQs

Here are answers to frequently asked questions about Scrape API vs Crawler API:

Can I use both Scrape API and Crawler API together?

Absolutely, and we recommend it for many workflows. Let Crawler API handle discovery and bulk collection, then switch to Scrape API for detailed extraction from high-value pages that need custom JavaScript execution or special formatting.

Which is better for AI training data collection?

Depends on your source. Got specific URLs for blog posts or articles? Scrape API with markdown format works great. Building datasets from entire domains like documentation sites? Crawler API with built-in extraction rules is your friend.

How does pricing differ between the two APIs?

Scrape API charges per request based on your enabled features (ASP, rendering, proxy type). Crawler API adds up all the Scrape API calls during your crawl. Crawling 100 pages with ASP on? That's the same cost as 100 individual Scrape API requests with ASP.

What's the difference in anti-bot capabilities?

Same core anti-bot technology (ASP) powers both. The difference? Configuration. Scrape API lets you tweak anti-bot settings for each request, while Crawler API applies one setting across all pages. Need max control for highly protected sites? Scrape API wins.

Can I get real-time data with the Crawler API?

Yes! Crawler API supports webhooks that fire HTTP callbacks as each page gets scraped. Process data instantly instead of waiting for the entire crawl. Just configure a webhook URL when you start, and you'll get immediate notifications.
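A webhook consumer can be as small as an HTTP endpoint that accepts POSTed JSON. The sketch below uses only Python's standard library. The payload fields (`event`, `url`) are placeholders, since the actual webhook schema is not described in this article, so check the Crawler API documentation before relying on them.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

received_pages = []  # in-memory sink for the sketch


def handle_event(body: bytes) -> bool:
    """Parse a webhook body; store it and return True if it is a JSON object."""
    try:
        event = json.loads(body)
    except (json.JSONDecodeError, UnicodeDecodeError):
        return False
    if not isinstance(event, dict):
        return False
    received_pages.append(event)
    return True


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        ok = handle_event(self.rfile.read(length))
        # Reply quickly; heavy processing belongs in a background worker.
        self.send_response(204 if ok else 400)
        self.end_headers()


if __name__ == "__main__":
    # Hypothetical payload, used only to exercise the parser locally.
    print(handle_event(b'{"event": "page_scraped", "url": "https://docs.example.com/a"}'))
    # To listen for real callbacks, uncomment:
    # HTTPServer(("0.0.0.0", 8000), WebhookHandler).serve_forever()
```

Keeping the parsing in `handle_event` separate from the HTTP handler makes it easy to test without opening a socket.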

Summary

Your use case determines whether you need Scrape API or Crawler API. Pick Scrape API for precise control over individual pages - perfect for real-time monitoring, targeted extraction, or building data APIs. Go with Crawler API when you need to discover and collect data across entire domains, like building AI training sets from documentation or archiving competitor sites.

For RAG and AI data collection, Crawler API builds complete knowledge bases through automatic discovery and multi-format extraction. Scrape API works better for targeted, high-quality content where you want markdown formatting and custom processing.

Both APIs pack strong anti-bot protection through ASP and work with multiple proxy pools. Match your tool to your data needs: Scrape API gives you depth and control, Crawler API delivers breadth and automation, or mix both for workflows that tap into each one's strengths.

Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect. Here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose entire public datasets, which can be illegal in some countries.