Crawler API

Crawl Entire Websites Automatically with One API Call

Recursively crawl entire domains, discover links automatically, and collect structured data from thousands of pages without writing complex spider logic. Built to bypass the toughest anti-bot protections at enterprise scale.

- Recursive Domain Crawling - Automatically discover and crawl entire websites. Configure depth limits, URL patterns, and content filters to collect exactly what you need.

- Bypass Anti-Bot Protection Automatically - Defeat fingerprinting, CAPTCHAs, and behavior-based detection with advanced browser stealth technology. No manual intervention required.

- Real Browser Rendering - Crawl React, Vue, and Angular SPAs with full JavaScript execution. Automatically waits for dynamic content, handles AJAX requests, and captures lazy-loaded data.

- 120+ Country Proxy Pool - Automatic IP rotation across residential and datacenter proxies. Smart session management prevents bans and bypasses geo-restrictions.

- LLM-Powered Data Extraction - Transform raw HTML into clean JSON, markdown, or structured data. No XPath or CSS selectors needed.

Start Crawling in 5 Minutes

- Define Your Own Crawl Limits - Select what to crawl, what to ignore, and how many pages to collect.

- Select Output Formats That Fit You - Get multiple formats, from raw HTML and cleaned HTML to text, markdown, or the extracted data directly. You can combine multiple formats.

- Use Real Web Browsers - Enable crawling with real web browsers and wait for dynamic content to load, so you don't miss any data.

- Use Scrapfly Cache for Repeat Actions - Let Scrapfly store your crawl results for faster re-crawls served directly from the Scrapfly cache.

-

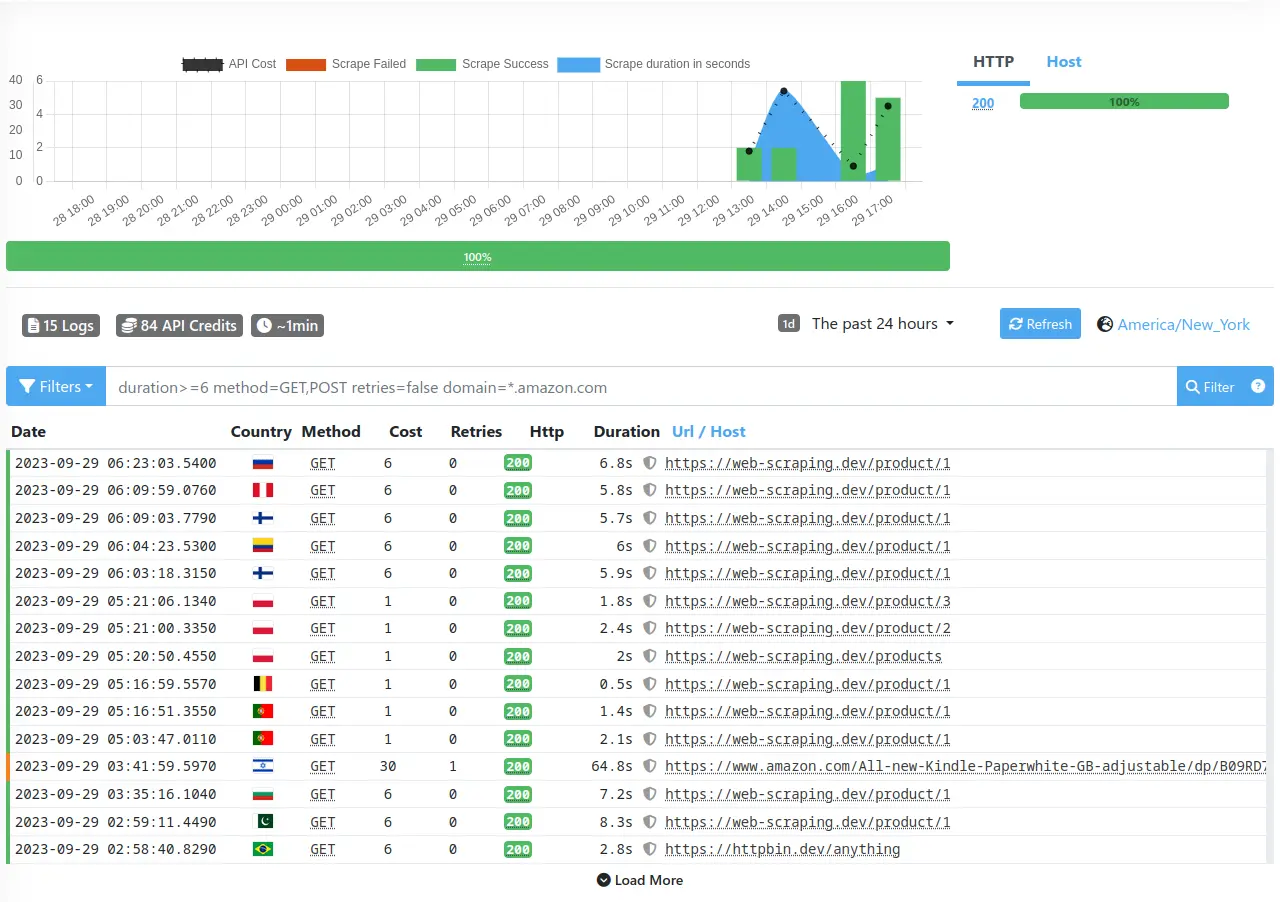

Inspect Live Results in DashboardUse Scrapfly's monitoring dashboard to see every detail of your crawl scrapes and have the ability to modify and replay each scrape.

- Collect Results in Industry Standard Formats - Collect all results in industry standard WARC and HAR formats, complete with all the metadata and extra page information. See all the metadata and rerun on demand!

- Attach Webhooks to Every Step of the Process - Use webhooks to stay informed of every step of your crawling process: collect real-time results, track failures, and receive the final data.

We've Got Your Industry Covered!

AI Training

Crawl the latest images, videos and user generated content for AI training.

Compliance

Crawl online presence to validate compliance and security.

eCommerce

Crawl products, reviews and more to enhance your eCommerce and brand awareness.

Financial Services

Crawl the latest stock, shipping and financial data to enhance your finance datasets.

Fraud Detection

Crawl products and listings to detect fraud and counterfeit activity.

Jobs Data

Crawl the latest job listings, salaries and more to enhance your job search.

Lead Generation

Crawl online profiles and contact details to enhance your lead generation.

Logistics

Crawl logistics data such as shipping, tracking, and container prices to enhance your deliveries.

Explore More Use Cases

Developer-First Experience

We made Scrapfly for ourselves in 2017 and opened it to the public in 2020. In that time, we have focused on building the best developer experience possible.

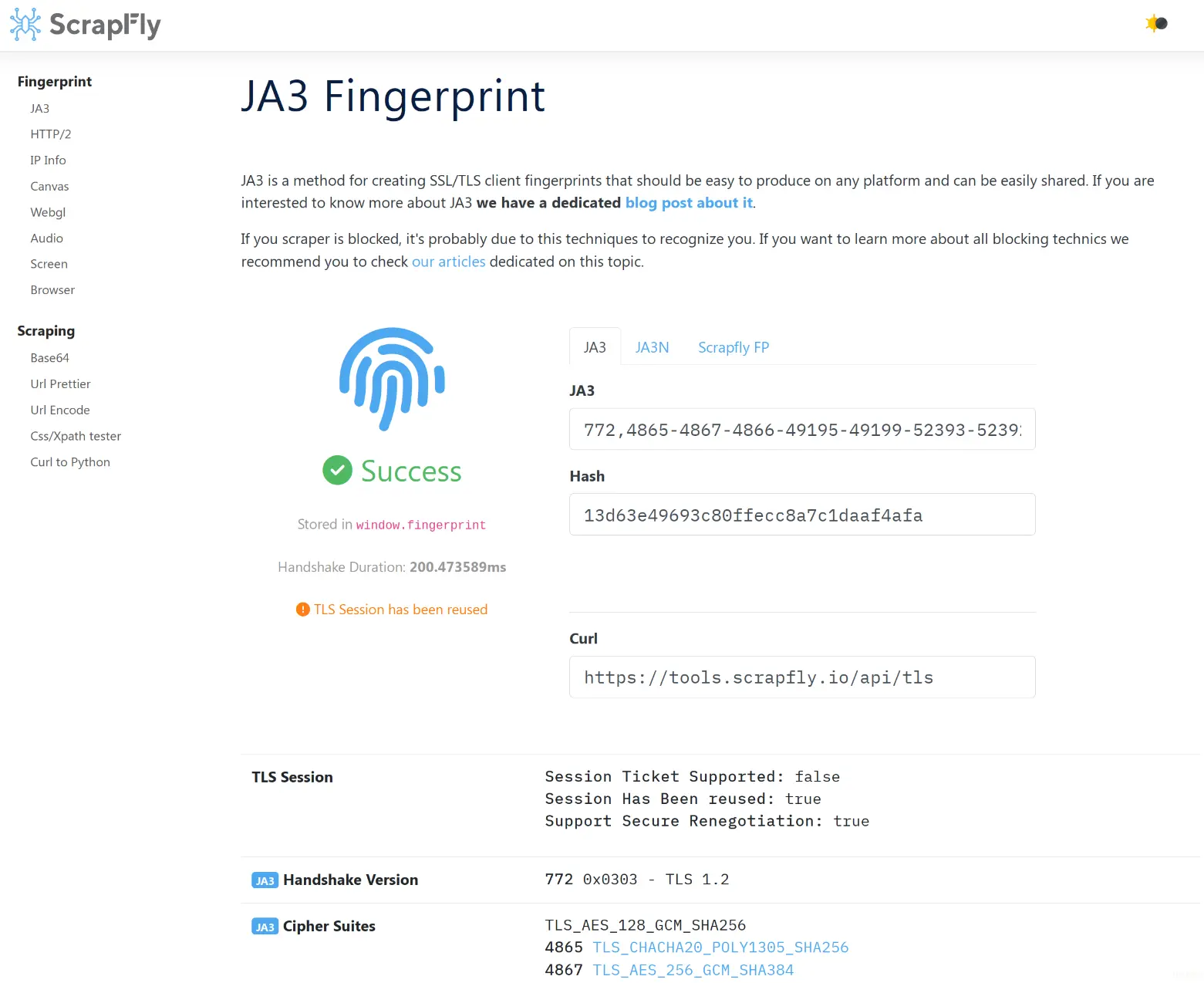

Master Web Data with our Docs and Tools

Access a complete ecosystem of documentation, tools, and resources designed to accelerate your data journey and help you get the most out of Scrapfly.

- Learn with Scrapfly Academy - Learn everything about data retrieval and web scraping with our interactive courses.

- Develop with Scrapfly Tools - Streamline your web data development with our web tools designed to enhance every step of the process.

- Stay Up-To-Date with our Newsletter and Blog - Stay updated with the latest trends and insights in web data with our monthly newsletter and weekly blog posts.

Seamlessly Integrate with Frameworks & Platforms

Easily integrate Scrapfly with your favorite tools and platforms, or customize workflows with our Python and TypeScript SDKs.

Powerful Web UI

One-stop shop to configure, control and observe all of your Scrapfly activity.

-

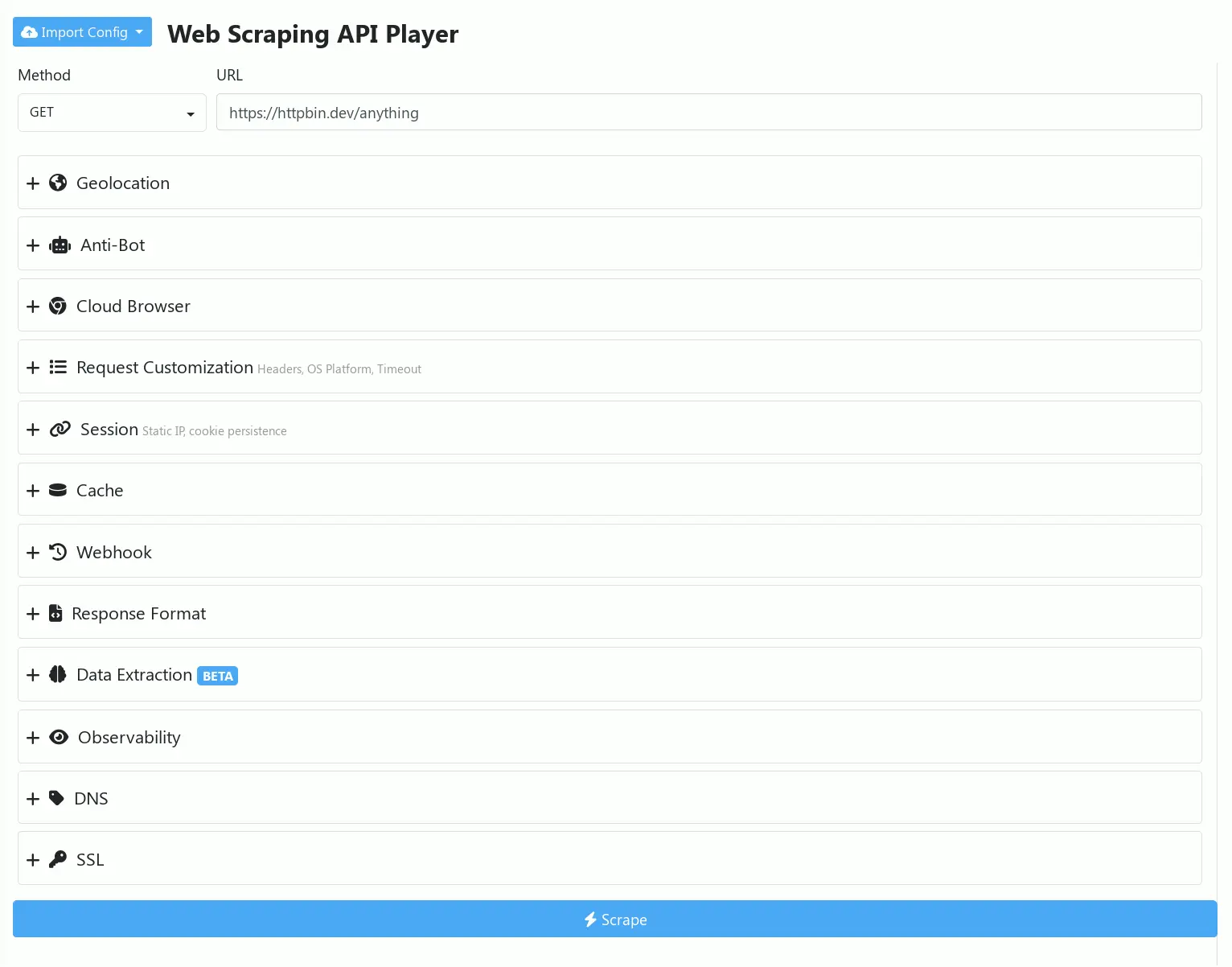

Experiment with Web API Player

Use our Web API player for easy testing, experimenting and sharing for collaboration and seamless integration.

-

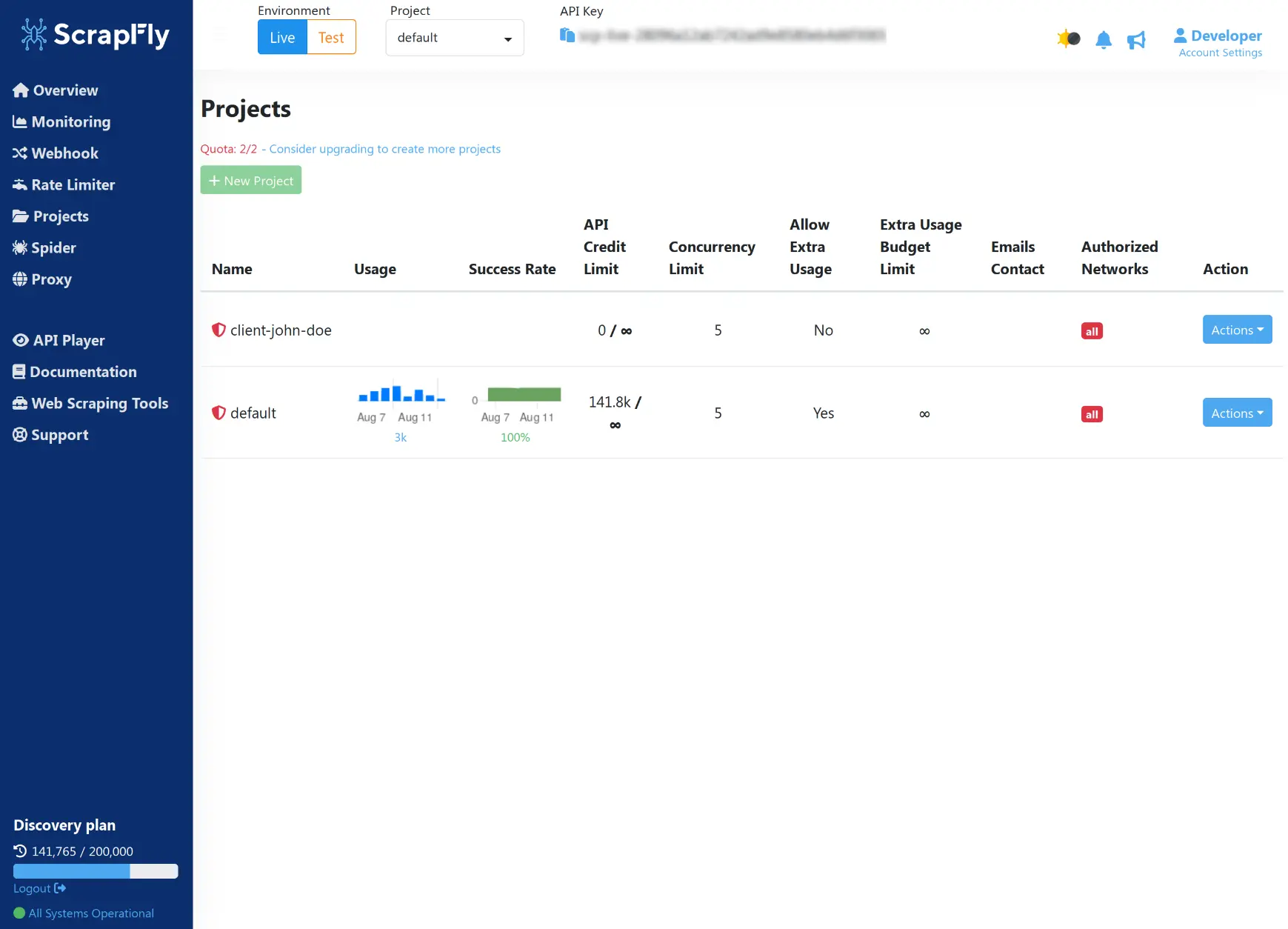

Manage Multiple Projects

Manage multiple projects with ease - complete with built-in testing environements for full control and flexibility.

-

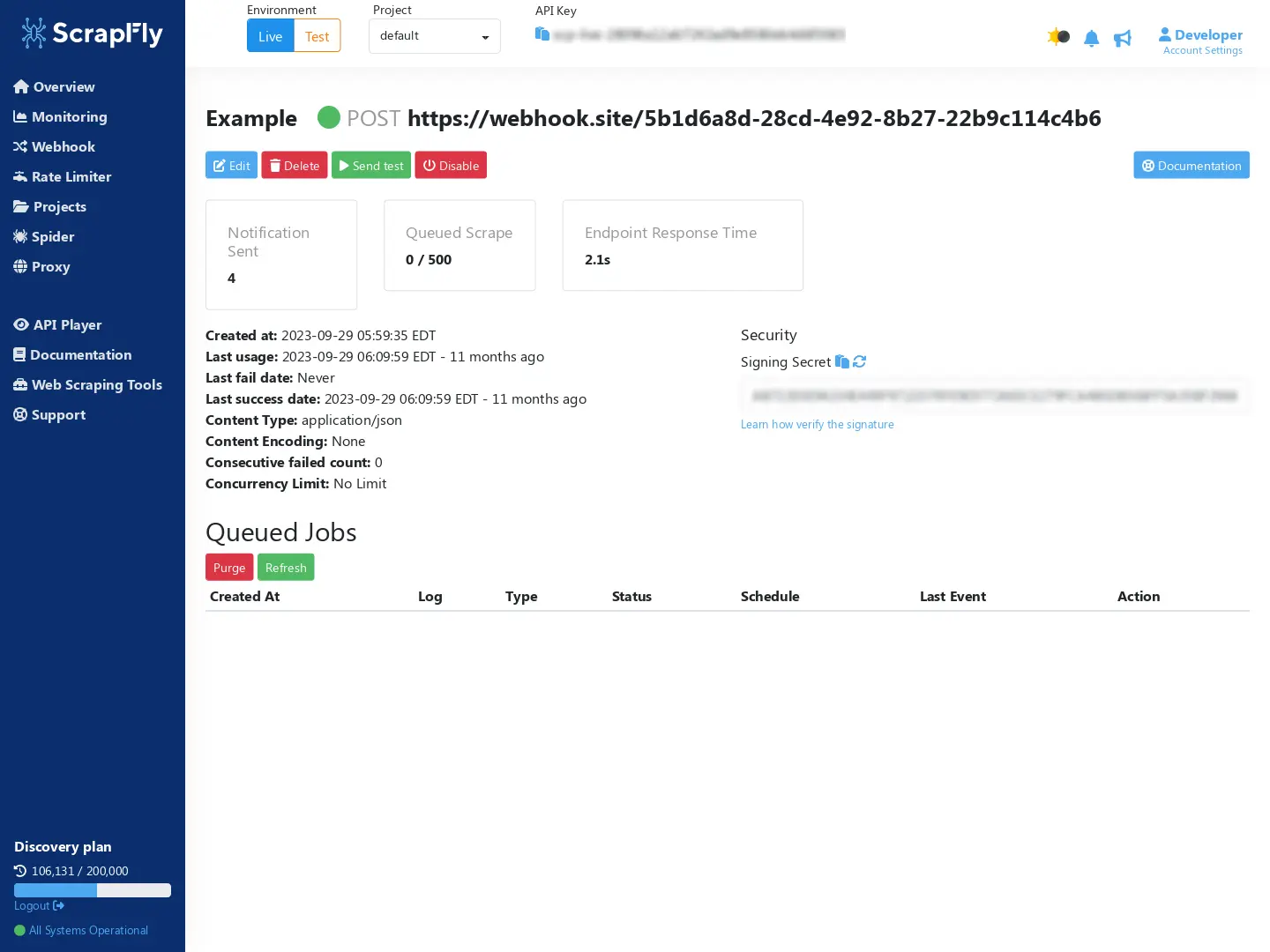

Attach Webhooks & Throttlers

Upgrade your API calls with webhooks for true asynchronous architecture and throttlers to control your usage.

What Do Our Users Say?

"Scrapfly’s Web Scraping API has completely transformed our data collection process. The automatic proxy rotation and anti-bot bypass are game-changers. We no longer have to worry about scraping blocks, and the setup was incredibly easy. Within minutes, we had a reliable scraping system pulling the data we needed. I highly recommend this API for any serious developer!"

John M. - Senior Data Engineer

"We’ve tried multiple scraping tools, but Scrapfly’s Web Scraping API stands out for its reliability and speed. With the cloud browser functionality, we were able to scrape dynamic content from JavaScript-heavy websites seamlessly. The real-time data collection helped us make faster, more informed decisions, and the 99.99% uptime is just unmatched."

Samantha C. - CTO

"Scalability was a major concern for us as our data scraping needs grew. Scrapfly’s Web Scraping API not only handled our increased requests but did so without a hitch. The proxy rotation across 120+ countries ensured we could access data from any region, and their comprehensive documentation made implementation a breeze. It's the most robust API we’ve used."

Alex T. - Founder

Frequently Asked Questions

What is a Crawler API?

Crawler API is a service for recursively crawling all desired pages of a given domain. Crawl jobs can be configured with various crawling rules like what pages to crawl and what to ignore, and when completed the results are returned in a structured format. Crawler API also supports automatic scraper blocking bypass, headless browsers, AI-driven data extraction and many other features.

What's the difference between Crawler API and Scraping API?

Scraping API retrieves single pages you specify. Crawler API automatically discovers and crawls entire websites by following links recursively. Use Scraping API for targeted page extraction. Use Crawler API for domain-wide data collection, website indexing, or when you don't know all the URLs upfront.
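To make "following links recursively" concrete, here is a minimal sketch of breadth-first link discovery with a depth limit and a page cap. It runs against a toy in-memory site instead of the network, and it is only an illustration of the idea, not Scrapfly's implementation. The `crawl` function, the `SITE` graph, and the `max_depth` argument are hypothetical names chosen for this example.

```python
from collections import deque


def crawl(link_graph, start, max_depth=2, page_limit=100):
    """Breadth-first link discovery over an in-memory link graph.

    link_graph maps each page to the list of links found on it.
    Returns pages in the order they were visited.
    """
    seen = {start}
    visited = []
    queue = deque([(start, 0)])
    while queue and len(visited) < page_limit:
        url, depth = queue.popleft()
        visited.append(url)  # "scrape" the page
        if depth == max_depth:
            continue  # do not follow links beyond the depth limit
        for link in link_graph.get(url, []):
            if link not in seen:  # skip pages that were already discovered
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


# Toy site used only for this illustration.
SITE = {
    "/": ["/docs", "/blog"],
    "/docs": ["/docs/api", "/"],
    "/blog": ["/blog/post-1"],
    "/docs/api": [],
    "/blog/post-1": ["/docs"],
}

pages = crawl(SITE, "/", max_depth=1)
print(pages)
```

A single-page scraper corresponds to visiting only the start page. The crawler's extra work is the queue, the deduplication set, and the depth and page limits.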

How can I access Crawler API?

Crawler API is accessed through the Scrapfly Dashboard or directly through the API. For first-class support, we offer Python, TypeScript, and Go SDKs.

Is web crawling legal?

Yes, web crawling is generally legal in most places around the world. For more, see our in-depth web scraping laws article.

Does Crawler API use AI for web scraping?

Yes, Scrapfly incorporates some AI and machine learning algorithms to successfully retrieve web pages. Scrapfly cloud browsers are configured with real browser fingerprints that ensure all collected data is retrieved as it appears on the web.

How long does it take to get results from the Crawler API?

Crawl duration varies with the crawled domain. A full crawl can take anywhere from a few seconds to several minutes, depending on your crawl configuration and throttling limits.

What data formats does Crawler API support?

Crawler API returns results in industry standard WARC and HAR formats. The data formats themselves are configurable: raw HTML, clean markdown, JSON, text, and PDF. This makes it perfect for AI training datasets, website indexing, and content analysis.

Can I convert entire websites to markdown for AI/LLM training?

Yes! Crawler API can automatically convert entire websites to clean markdown format, perfect for LLM training datasets and RAG (Retrieval-Augmented Generation) systems.

Simply set content_formats=['markdown'] in your crawl configuration, and the API will recursively crawl all pages and return them in AI-ready markdown format.

This is ideal for building documentation datasets, knowledge bases, or training data from websites.
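As a rough sketch, a markdown crawl configuration could be assembled like this before it is handed to an SDK or sent to the API. Only the `content_formats` parameter and the `'markdown'` value come from this page. The `build_crawl_config` helper and the `url` field name are assumptions made for illustration, so check the Crawler API docs for the exact request shape.

```python
import json


def build_crawl_config(url, content_formats):
    """Assemble a crawl configuration as a plain dictionary.

    NOTE: the "url" key is a hypothetical field name for this sketch;
    "content_formats" is the parameter named in the answer above.
    """
    if not content_formats:
        raise ValueError("at least one content format is required")
    return {"url": url, "content_formats": list(content_formats)}


config = build_crawl_config("https://example.com/docs", ["markdown"])

# Serialize the configuration, e.g. to log it or send it as a request body.
payload = json.dumps(config, sort_keys=True)
print(payload)
```

Keeping the configuration as plain data makes it easy to review, version, and reuse across crawl jobs.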

Can I use Crawler API to index entire websites for search?

Absolutely! Crawler API is perfect for website indexing. It automatically discovers all pages on a domain, extracts content in structured formats, and can be configured to respect crawl rules and path restrictions. Use it to build search indexes, create sitemaps, monitor content changes, or archive entire websites. The webhook integration allows you to process pages in real-time as they're crawled.

How many pages can I crawl in one job?

There's no hard limit on pages per crawl job. You control the scope with max depth, page count limits, and URL patterns.

Our customers regularly crawl 10,000+ pages in single jobs. Larger crawls can be split across multiple jobs or run with higher concurrency on Enterprise plans.

Use the page_limit parameter to cap the maximum number of pages crawled.
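One way to picture splitting a large crawl across several capped jobs is the sketch below. It only plans the job configurations and does not call the API. The `page_limit` name comes from this page, while the `plan_jobs` helper and the `url` field name are hypothetical and used here purely for illustration.

```python
def plan_jobs(start_urls, page_limit):
    """Return one job configuration per start URL, each capped at page_limit pages.

    NOTE: "url" is a hypothetical field name; "page_limit" is the
    parameter named in the answer above.
    """
    if page_limit < 1:
        raise ValueError("page_limit must be at least 1")
    return [{"url": url, "page_limit": page_limit} for url in start_urls]


jobs = plan_jobs(
    ["https://example.com/docs", "https://example.com/blog"],
    page_limit=5000,
)

# Upper bound on the number of pages collected across all planned jobs.
max_total_pages = sum(job["page_limit"] for job in jobs)
print(len(jobs), max_total_pages)
```

Because every job carries its own cap, the worst-case size of the whole crawl is known before anything runs.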

Can I crawl JavaScript-heavy single-page applications?

Yes! Enable render_js=True to crawl React, Vue, Angular, and other JavaScript frameworks.

Our headless Chrome browsers execute JavaScript, wait for dynamic content, and extract the fully rendered page.

You can configure custom wait times with rendering_delay and run custom JavaScript for complex SPAs.
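A small sketch of how these rendering options might be combined into a crawl configuration follows. The `render_js` and `rendering_delay` names come from this page. The `rendering_options` helper, the `url` field name, and the assumption that the delay is expressed in milliseconds are ours, so confirm the unit and accepted range in the Crawler API docs.

```python
def rendering_options(needs_js, rendering_delay=0):
    """Build the browser-rendering part of a crawl configuration.

    ASSUMPTION: rendering_delay is treated as milliseconds in this sketch.
    """
    if rendering_delay < 0:
        raise ValueError("rendering_delay cannot be negative")
    if not needs_js:
        # Static sites do not need a browser, so no delay is set.
        return {"render_js": False}
    return {"render_js": True, "rendering_delay": rendering_delay}


# "url" is a hypothetical field name used only for this illustration.
spa_config = {"url": "https://example.com/app", **rendering_options(True, 2000)}
static_config = {"url": "https://example.com/docs", **rendering_options(False)}
print(spa_config)
print(static_config)
```

Enabling rendering only where it is needed keeps crawls of static pages fast while still capturing dynamic content on SPAs.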