Web crawling has evolved significantly with the rise of AI applications and large language models. Traditional scraping tools often fall short when it comes to preparing data for AI consumption, requiring extensive preprocessing and manual optimization. Crawl4AI bridges this gap by providing an intelligent, AI-friendly web crawling framework designed specifically for modern data extraction needs.

In this detailed tutorial, we'll explore Crawl4AI's unique features, from adaptive crawling algorithms to LLM-friendly output formats. We'll walk through installation, practical examples, and advanced use cases that demonstrate how this framework improves web data collection for AI applications.

Key Takeaways

Master the Crawl4AI framework for AI-friendly web crawling, with adaptive algorithms, LLM-optimized output formats, and intelligent data extraction for modern AI applications.

- Use Crawl4AI for intelligent web crawling with adaptive algorithms that determine when sufficient information has been gathered

- Configure LLM-friendly output formats including JSON, Markdown, and cleaned HTML for optimal AI consumption

- Implement adaptive crawling strategies that automatically adjust behavior based on content analysis and structure

- Apply high-performance asynchronous architecture for concurrent crawling of multiple URLs and domains

- Extract comprehensive media content including images, audio, and video for complete data collection

- Use specialized services like ScrapFly to add anti-blocking features such as proxy rotation and anti-bot bypass to your crawling setup

What is Crawl4AI?

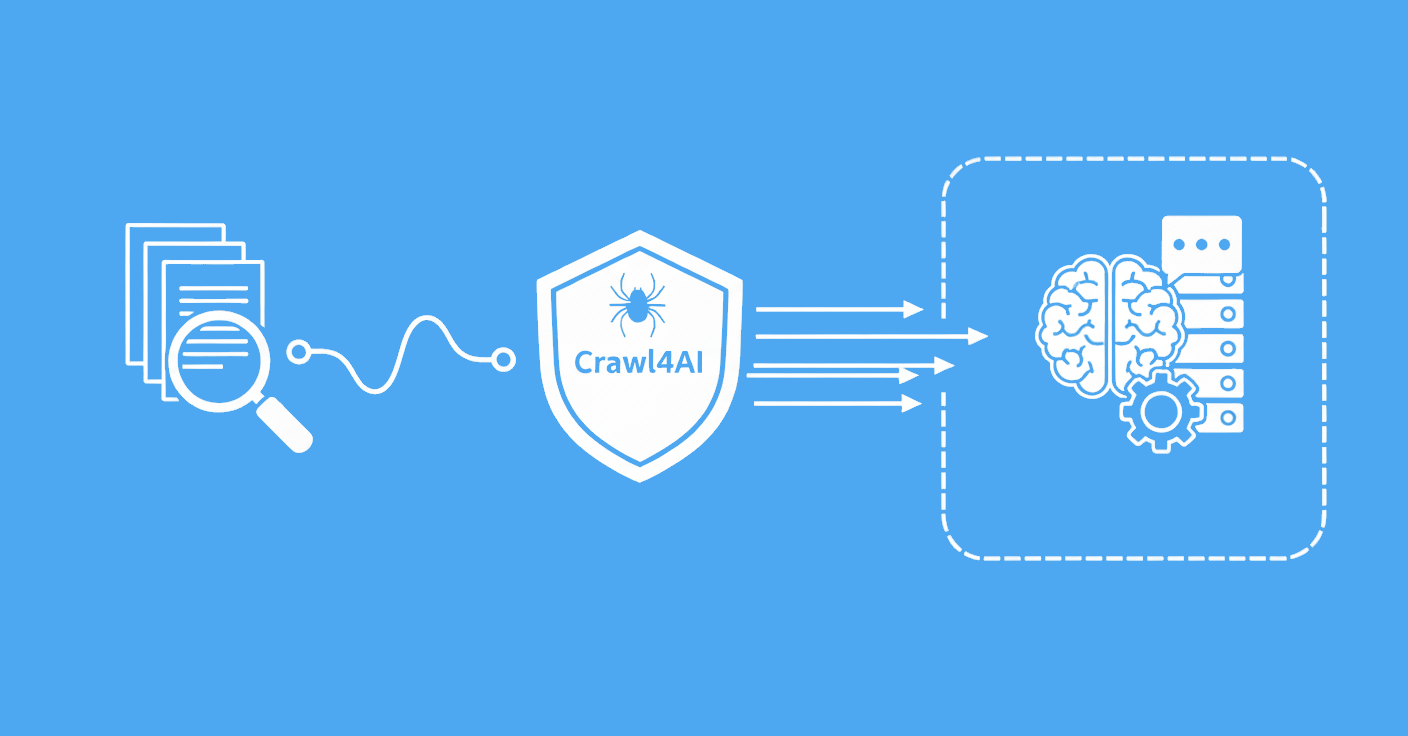

Crawl4AI is an open-source, AI-friendly web crawling and scraping framework designed to help with efficient data extraction for large language models (LLMs) and AI applications. Unlike traditional web scrapers that focus solely on data extraction, Crawl4AI is built with AI workflows in mind, offering intelligent crawling strategies and improved output formats. For more on traditional scraping approaches, see Web Scraping with Python.

The framework addresses common challenges in AI data collection:

- Intelligent Information Foraging - Uses advanced algorithms to determine when sufficient information has been gathered

- LLM-Friendly Output - Provides multiple output formats (JSON, Markdown, cleaned HTML) perfect for AI consumption

- Adaptive Crawling - Automatically adjusts crawling behavior based on content analysis

- High-Performance Architecture - Asynchronous design enables concurrent crawling of multiple URLs

- Comprehensive Media Extraction - Captures media such as images, audio, and video

Crawl4AI represents a new approach to web scraping, moving from simple data extraction to intelligent information gathering designed for AI applications.

Key Features and Capabilities

Crawl4AI offers a comprehensive set of features that make it particularly well-suited for AI and machine learning workflows. Let's explore the core capabilities that set it apart from traditional web scraping tools.

Adaptive Web Crawling

Traditional web crawlers follow predetermined patterns, crawling pages blindly without knowing when they've gathered enough information. Crawl4AI's adaptive crawling changes this paradigm by introducing intelligence into the crawling process.

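```python
import asyncio
import os

from crawl4ai import AsyncWebCrawler, CrawlerRunConfig, LLMConfig
from crawl4ai.extraction_strategy import LLMExtractionStrategy

async def adaptive_crawling_example():
    # LLM-guided extraction: the instruction tells the model what to pull from the page.
    # The provider/model below is an example choice; adjust it to your own setup.
    strategy = LLMExtractionStrategy(
        llm_config=LLMConfig(provider="openai/gpt-4o-mini", api_token=os.getenv("OPENAI_API_KEY")),
        instruction="Extract all product information including name, price, and description",
    )
    async with AsyncWebCrawler() as crawler:
        result = await crawler.arun(
            url="https://web-scraping.dev/products",
            config=CrawlerRunConfig(extraction_strategy=strategy),
        )
        print(f"Extracted content: {result.extracted_content}")
        print(f"Success: {result.success}")

asyncio.run(adaptive_crawling_example())
```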

The example above drives extraction with an LLM instruction. Crawl4AI's adaptive crawling builds on this idea: it uses information foraging algorithms to determine when sufficient information has been gathered, which shortens the crawl and reduces unnecessary requests.
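To make the idea of "knowing when to stop" concrete, here is a toy stopping rule in plain Python. It is not Crawl4AI's algorithm: the function `crawl_until_sufficient`, its crude word-set tokenizer, and the `coverage_target`/`min_gain` thresholds are illustrative assumptions. It reads pages in order and stops once every query term has appeared, or once a page contributes too few new terms (diminishing returns).

```python
import re

def tokenize(text):
    """Lowercase alphanumeric word tokens (a deliberately crude tokenizer)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def crawl_until_sufficient(pages, query, coverage_target=1.0, min_gain=2):
    """Read pages in order; stop when the query is covered or gains dry up.

    Returns (pages_consumed, query_coverage).
    """
    query_terms = tokenize(query)
    seen = set()
    consumed = 0
    coverage = 0.0
    for text in pages:
        terms = tokenize(text)
        gain = len(terms - seen)          # new vocabulary this page contributes
        seen |= terms
        consumed += 1
        coverage = len(query_terms & seen) / max(len(query_terms), 1)
        if coverage >= coverage_target:
            break                         # every query term has been seen
        if gain < min_gain:
            break                         # diminishing returns: page added little
    return consumed, coverage

pages = [
    "Product list with prices",
    "Each product has a description and price",
    "Reviews mention shipping times",
]
print(crawl_until_sufficient(pages, "product price description"))  # (2, 1.0)
```

The third page is never read, which is the whole point: a real adaptive crawler would also rank which links to follow next, while this sketch covers only the stopping decision.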

LLM-Friendly Output Formats

Crawl4AI supports multiple output formats specifically designed for AI consumption:

```python
import asyncio

from crawl4ai import AsyncWebCrawler

async def multiple_output_formats():
    async with AsyncWebCrawler() as crawler:
        result = await crawler.arun(url="https://example.com")

        # Access different output formats
        markdown_content = str(result.markdown)  # Clean markdown for LLMs
        html_content = result.cleaned_html       # Cleaned HTML
        raw_html = result.html                   # Original HTML
        structured = result.extracted_content    # JSON string; set only when an extraction strategy is configured

        # Metadata for context
        metadata = result.metadata or {}
        print(f"Page title: {metadata.get('title')}")
        print(f"Word count: {len(markdown_content.split())}")

asyncio.run(multiple_output_formats())
```

This multi-format approach ensures compatibility with various AI models and processing pipelines, making data integration seamless.
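The difference between raw and cleaned HTML is mostly about dropping markup that carries no content. As a rough illustration (not how Crawl4AI works internally), the standard-library `html.parser` can skip scripts, styles, and navigation and keep only the visible text. The `TextExtractor` class, `html_to_text` helper, and the `SKIP` tag list are assumptions you would tune per site.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects visible text, skipping elements that rarely carry content."""
    SKIP = {"script", "style", "nav", "footer", "noscript"}

    def __init__(self):
        super().__init__()
        self.depth = 0      # > 0 while inside a skipped element
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped element
        if not self.depth and data.strip():
            self.parts.append(data.strip())

def html_to_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)

print(html_to_text("<p>Hi</p><script>track()</script>"))  # Hi
```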

High-Performance Asynchronous Architecture

Crawl4AI's asynchronous design enables concurrent crawling of multiple URLs, significantly reducing data collection time. Learn more about concurrency and parallelism in web scraping for better performance.

```python
import asyncio

from crawl4ai import AsyncWebCrawler

async def concurrent_crawling():
    urls = [
        "https://web-scraping.dev/product/1",
        "https://web-scraping.dev/product/2",
        "https://web-scraping.dev/product/3",
    ]
    async with AsyncWebCrawler() as crawler:
        # Crawl multiple URLs concurrently and wait for all of them
        tasks = [crawler.arun(url=url) for url in urls]
        results = await asyncio.gather(*tasks)

        for url, result in zip(urls, results):
            print(f"{url}: success={result.success}")
            if result.success:
                print(f"Content length: {len(str(result.markdown))}")

asyncio.run(concurrent_crawling())
```

The asynchronous architecture makes Crawl4AI particularly effective for large-scale data collection projects where speed and efficiency are crucial.
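`asyncio.gather` launches every crawl at once, which is fine for three URLs but can overwhelm a target site (or your own machine) with hundreds. A common pattern is to cap the number of in-flight requests with `asyncio.Semaphore`. In the sketch below, `fake_fetch` is a hypothetical stand-in for `crawler.arun()` so the code runs offline; it also records peak concurrency so you can see the cap working. Crawl4AI also provides `arun_many()` for crawling a list of URLs, which may be the better choice in practice.

```python
import asyncio

async def fake_fetch(url, state):
    """Hypothetical stand-in for crawler.arun(); tracks overlapping calls."""
    state["active"] += 1
    state["peak"] = max(state["peak"], state["active"])
    await asyncio.sleep(0.01)          # simulate network latency
    state["active"] -= 1
    return f"content of {url}"

async def crawl_bounded(urls, limit=2):
    sem = asyncio.Semaphore(limit)     # at most `limit` fetches in flight
    state = {"active": 0, "peak": 0}

    async def worker(url):
        async with sem:
            return await fake_fetch(url, state)

    # gather preserves input order in its results
    results = await asyncio.gather(*(worker(u) for u in urls))
    return results, state["peak"]

results, peak = asyncio.run(crawl_bounded([f"https://example.com/{i}" for i in range(6)], limit=2))
print(peak)  # never exceeds 2
```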

Advanced Chunking Strategies

Crawl4AI offers multiple chunking strategies for splitting content into pieces suited to different AI use cases:

```python
import asyncio

from crawl4ai import AsyncWebCrawler
from crawl4ai.chunking_strategy import RegexChunking, NlpSentenceChunking

async def chunking_examples():
    async with AsyncWebCrawler() as crawler:
        result = await crawler.arun(url="https://web-scraping.dev/products")
        text = str(result.markdown)  # chunk the page's markdown output

        # Regex-based chunking: split on blank lines, then on sentence ends
        regex_chunks = RegexChunking(patterns=[r"\n\n", r"\. "]).chunk(text)

        # NLP-based sentence chunking (relies on NLTK's sentence tokenizer)
        nlp_chunks = NlpSentenceChunking().chunk(text)

        print(f"Regex chunks: {len(regex_chunks)}")
        print(f"NLP chunks: {len(nlp_chunks)}")

asyncio.run(chunking_examples())
```

These chunking strategies help fit content to the input limits and retrieval granularity of specific AI models, which improves downstream processing and analysis.
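Overlap is the key idea behind sliding-window chunking: consecutive chunks share a few words, so a sentence cut at a boundary still appears whole in at least one chunk. Here is a minimal word-based sketch; the `sliding_window_chunks` helper is hypothetical, and counting `window_size` and `overlap` in words is an assumption (Crawl4AI's own strategies may count differently).

```python
def sliding_window_chunks(text, window_size=5, overlap=2):
    """Split text into windows of `window_size` words sharing `overlap` words."""
    if not 0 <= overlap < window_size:
        raise ValueError("overlap must be in [0, window_size)")
    words = text.split()
    step = window_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + window_size]))
        if start + window_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks

print(sliding_window_chunks("one two three four five six seven eight", window_size=5, overlap=2))
# ['one two three four five', 'four five six seven eight']
```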

Installation and Setup

Getting started with Crawl4AI is straightforward, with comprehensive setup instructions and verification tools.

Basic Installation

Install Crawl4AI with pip and run the setup utility to get started quickly. The following commands will prepare your environment for use.

```bash
# Install Crawl4AI
pip install crawl4ai

# Run post-installation setup
crawl4ai-setup

# Verify installation
crawl4ai-doctor
```

After installing, run crawl4ai-setup to configure your environment. Use crawl4ai-doctor to verify everything is ready for crawling.

Browser Setup

Crawl4AI uses Playwright for browser automation. The setup tool usually installs the required browsers automatically; if you run into browser-related issues, install them manually:

```bash
# Install Playwright browsers
python -m playwright install --with-deps chromium
```

Quick Start Example

Here's a simple example to get you started:

```python
import asyncio

from crawl4ai import AsyncWebCrawler

async def main():
    async with AsyncWebCrawler() as crawler:
        result = await crawler.arun(url="https://crawl4ai.com")
        print(result.markdown)

# Run the async main function
asyncio.run(main())
```

The setup process includes automatic dependency management and browser installation, making it easy to get started quickly.

Advanced Features and Use Cases

Crawl4AI's advanced features make it suitable for a wide range of AI and data science applications. Let's explore some practical use cases and implementation examples.

Content Aggregation for LLMs

One of the most effective use cases is aggregating content from multiple sources for LLM training or context enhancement:

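```python
import asyncio
import os

from crawl4ai import AsyncWebCrawler, CrawlerRunConfig, LLMConfig
from crawl4ai.extraction_strategy import LLMExtractionStrategy

async def content_aggregation():
    sources = [
        "https://news-site.com/tech",
        "https://blog.example.com/ai",
        "https://research.org/papers",
    ]
    # One config reused for every source; the provider/model is an example choice
    config = CrawlerRunConfig(
        extraction_strategy=LLMExtractionStrategy(
            llm_config=LLMConfig(provider="openai/gpt-4o-mini", api_token=os.getenv("OPENAI_API_KEY")),
            instruction="Extract main article content, removing ads and navigation",
        )
    )
    aggregated_content = []
    async with AsyncWebCrawler() as crawler:
        for url in sources:
            result = await crawler.arun(url=url, config=config)
            if result.success:
                aggregated_content.append({
                    "url": url,
                    "content": result.extracted_content,  # LLM-cleaned article (JSON string)
                    "markdown": str(result.markdown),     # full-page markdown as a fallback
                    "metadata": result.metadata,
                })
    return aggregated_content

data = asyncio.run(content_aggregation())
```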

This approach enables you to build comprehensive datasets from multiple web sources, perfect for training or fine-tuning language models.
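When aggregating from many sources, the same article often shows up more than once (syndication, mirrors, URLs that differ only in tracking parameters). Before using the records for training, a simple safeguard is exact-duplicate removal on normalized text. The hypothetical `dedupe_records` helper below assumes records shaped like the dictionaries built above, with a `content` key; near-duplicate detection (for example MinHash) would need more machinery.

```python
import hashlib

def dedupe_records(records):
    """Keep the first record for each distinct normalized `content` value."""
    seen = set()
    unique = []
    for rec in records:
        # Collapse whitespace and case so trivial variants hash identically
        normalized = " ".join(str(rec.get("content") or "").split()).lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(rec)
    return unique

recs = [
    {"url": "a", "content": "Hello   World"},
    {"url": "b", "content": "hello world"},
    {"url": "c", "content": "Other"},
]
print([r["url"] for r in dedupe_records(recs)])  # ['a', 'c']
```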

Real-time Information Retrieval

Crawl4AI excels at providing AI assistants with current information from the web:

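```python
import asyncio
import json
import os
from urllib.parse import quote_plus

from crawl4ai import AsyncWebCrawler, CrawlerRunConfig, LLMConfig
from crawl4ai.extraction_strategy import LLMExtractionStrategy

async def real_time_search(query, max_results=5):
    q = quote_plus(query)  # URL-encode the query string
    search_urls = [
        f"https://search.example.com?q={q}",
        f"https://news.example.com/search?q={q}",
    ]
    config = CrawlerRunConfig(
        extraction_strategy=LLMExtractionStrategy(
            llm_config=LLMConfig(provider="openai/gpt-4o-mini", api_token=os.getenv("OPENAI_API_KEY")),
            instruction=f"Extract search results related to: {query}",
        )
    )
    results = []
    async with AsyncWebCrawler() as crawler:
        for url in search_urls:
            result = await crawler.arun(url=url, config=config)
            if result.success and result.extracted_content:
                # extracted_content is a JSON string; parse it before merging
                parsed = json.loads(result.extracted_content)
                results.extend(parsed if isinstance(parsed, list) else [parsed])
    return results[:max_results]
```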

This capability is particularly valuable for AI assistants that need to provide up-to-date information beyond their training data.
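Retrieved results only help an assistant once they are packed into its prompt. One hedged way to do that is to number each source so the model can cite it, and to stop adding sources once a character budget is reached. The `build_context` helper is hypothetical, the `title`/`url`/`content` keys are assumptions about the shape of your parsed results, and characters are only a crude proxy for tokens.

```python
def build_context(results, max_chars=2000):
    """Format results as numbered sources; output never exceeds max_chars."""
    sep = "\n\n"
    entries = []
    used = 0
    for i, item in enumerate(results, start=1):
        entry = f"[{i}] {item.get('title', '')} ({item.get('url', '')})\n{item.get('content', '')}"
        cost = len(entry) + (len(sep) if entries else 0)  # count the separator too
        if used + cost > max_chars:
            break  # budget exhausted: drop this and all later results
        entries.append(entry)
        used += cost
    return sep.join(entries)

items = [
    {"title": "A", "url": "u1", "content": "x"},
    {"title": "B", "url": "u2", "content": "y"},
]
print(build_context(items, max_chars=20))  # only the first source fits
```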

Dynamic Content Handling

Crawl4AI's JavaScript execution capabilities make it ideal for modern web applications. For more on handling dynamic content, see Web Scraping with Playwright and Python.

```python
import asyncio

from crawl4ai import AsyncWebCrawler, CrawlerRunConfig

async def dynamic_content_extraction():
    config = CrawlerRunConfig(
        js_code="""
            // Wait for dynamic content to load
            await new Promise(resolve => setTimeout(resolve, 2000));
            // Click load more button if present
            const loadMoreBtn = document.querySelector('.load-more');
            if (loadMoreBtn) {
                loadMoreBtn.click();
                await new Promise(resolve => setTimeout(resolve, 1000));
            }
        """,
        wait_for="css:.content-loaded",  # wait until this selector appears
    )
    async with AsyncWebCrawler() as crawler:
        result = await crawler.arun(url="https://spa-example.com", config=config)
        return str(result.markdown)

markdown = asyncio.run(dynamic_content_extraction())
```

This JavaScript execution capability ensures that Crawl4AI can handle modern single-page applications and dynamic content that traditional scrapers might miss.
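Conceptually, a wait condition like `wait_for` is a poll-with-timeout loop: check, sleep briefly, check again, and give up after a deadline. The sketch below shows that pattern in plain asyncio. `wait_until` is a hypothetical helper, not Crawl4AI's implementation; in the crawler the check runs against the page's DOM rather than a Python callable.

```python
import asyncio

async def wait_until(predicate, timeout=5.0, interval=0.05):
    """Poll `predicate` until it returns True or `timeout` seconds elapse.

    Returns True if the condition was met, False on timeout.
    """
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout
    while True:
        if predicate():
            return True
        if loop.time() >= deadline:
            return False
        await asyncio.sleep(interval)  # yield so other work can make progress

# Usage: a condition that becomes true on the third check
state = {"checks": 0}

def content_loaded():
    state["checks"] += 1
    return state["checks"] >= 3

print(asyncio.run(wait_until(content_loaded, timeout=1.0, interval=0.01)))  # True
```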

Integration with AI Workflows

Crawl4AI's design makes it particularly well-suited for integration with various AI workflows and frameworks.

Integration with LangChain

Crawl4AI integrates easily with frameworks like LangChain, making it simple to build AI pipelines that use fresh, high-quality web data. Explore LangChain alternatives for more AI framework options.

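```python
import asyncio

from crawl4ai import AsyncWebCrawler
from langchain_core.documents import Document
from langchain_text_splitters import RecursiveCharacterTextSplitter

async def langchain_integration(urls):
    # Crawl each URL and wrap its markdown in a LangChain Document
    documents = []
    async with AsyncWebCrawler() as crawler:
        for url in urls:
            result = await crawler.arun(url=url)
            if result.success:
                documents.append(
                    Document(page_content=str(result.markdown), metadata={"source": url})
                )

    # Split documents for processing
    text_splitter = RecursiveCharacterTextSplitter(
        chunk_size=1000,
        chunk_overlap=200,
    )
    return text_splitter.split_documents(documents)

splits = asyncio.run(langchain_integration(["https://example.com"]))
```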

Crawl4AI works smoothly with LangChain, making it easy to build AI apps that use fresh, structured web data in just a few lines of code.
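`RecursiveCharacterTextSplitter` tries coarse separators first (paragraphs), then falls back to finer ones (lines, spaces, single characters) only for pieces that are still too long. The simplified `recursive_split` function below illustrates that idea; it is a hypothetical sketch, not LangChain's code, and it omits `chunk_overlap`.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Split text into chunks of at most chunk_size characters,
    preferring the coarsest separator that works."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: hard cut into fixed-size character slices
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep growing the current chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) > chunk_size:
            # The piece alone is too big: recurse with finer separators
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
        else:
            current = piece
    if current:
        chunks.append(current)
    return chunks

print(recursive_split("aaaa bbbb\n\ncccc dddd eeee", 10))
# ['aaaa bbbb', 'cccc dddd', 'eeee']
```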

Custom AI Pipeline

Crawl4AI fits easily into custom AI pipelines, letting you automate web data collection and processing for your models.

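```python
import asyncio
import os

from crawl4ai import AsyncWebCrawler, CrawlerRunConfig, LLMConfig
from crawl4ai.extraction_strategy import LLMExtractionStrategy

def process_with_ai(text):
    # Hypothetical placeholder: replace with your own model call
    return {"length": len(text)}

async def ai_pipeline(urls):
    # Step 1: Crawl and extract content
    config = CrawlerRunConfig(
        extraction_strategy=LLMExtractionStrategy(
            llm_config=LLMConfig(provider="openai/gpt-4o-mini", api_token=os.getenv("OPENAI_API_KEY")),
            instruction="Extract main content and key insights",
        )
    )
    crawled_data = []
    async with AsyncWebCrawler() as crawler:
        for url in urls:
            crawled_data.append(await crawler.arun(url=url, config=config))

    # Step 2: Process with AI model
    processed_data = []
    for data in crawled_data:
        if data.success:
            processed_data.append(process_with_ai(str(data.markdown)))
    return processed_data
```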
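The pipeline above finishes all crawling before any processing starts. If processing is slow (for example, calls to a hosted model), an `asyncio.Queue` lets the two stages overlap: each crawled page is handed to worker tasks as soon as it arrives. In this sketch, `fake_crawl` and `process` are hypothetical stand-ins for `crawler.arun()` and your model call, and `queued_pipeline` is an illustrative name.

```python
import asyncio

async def fake_crawl(url):
    """Hypothetical stand-in for crawler.arun(); returns page text."""
    await asyncio.sleep(0.01)
    return f"markdown for {url}"

async def process(text):
    """Hypothetical stand-in for an AI model call."""
    await asyncio.sleep(0.01)
    return text.upper()

async def queued_pipeline(urls, workers=2):
    queue = asyncio.Queue()
    processed = []

    async def worker():
        while True:
            text = await queue.get()
            try:
                processed.append(await process(text))
            finally:
                queue.task_done()

    tasks = [asyncio.create_task(worker()) for _ in range(workers)]
    for url in urls:
        await queue.put(await fake_crawl(url))  # stage 1 feeds stage 2
    await queue.join()                          # wait until every item is processed
    for t in tasks:
        t.cancel()                              # workers loop forever; stop them
    await asyncio.gather(*tasks, return_exceptions=True)
    return processed

print(sorted(asyncio.run(queued_pipeline(["a", "b", "c"]))))
# ['MARKDOWN FOR A', 'MARKDOWN FOR B', 'MARKDOWN FOR C']
```

Results can complete out of order, which is why the usage line sorts them; attach the URL to each result if you need to match them back up.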

Power Up with Scrapfly

While Crawl4AI provides excellent capabilities for AI-friendly web crawling, you might need additional features like advanced proxy management, anti-detection measures, or enterprise-grade reliability for production applications.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

For projects requiring domain-wide crawling without building custom spider logic, Scrapfly's Crawler API provides fully managed crawling with automatic URL discovery, anti-bot bypass, and AI-ready output formats like markdown and cleaned HTML.

FAQ

Here are answers to common questions about Crawl4AI and its capabilities.

Is Crawl4AI suitable for non-technical users?

Crawl4AI aims to be user-friendly, but it is primarily a Python library, so basic programming knowledge helps. As the quick start example shows, a simple crawl takes only a few lines of code, and the setup tools handle browser installation for you.

What makes Crawl4AI different from traditional web scrapers?

Crawl4AI is specifically designed for AI applications, offering adaptive crawling algorithms, LLM-friendly output formats, and intelligent content extraction. Unlike traditional scrapers that focus solely on data extraction, Crawl4AI improves content for AI consumption and provides built-in strategies for information foraging.

Is Crawl4AI free and open-source?

Yes, Crawl4AI is completely free and open-source, making it accessible for everyone. You can use it for personal projects, commercial applications, or contribute to its development on GitHub.

Conclusion

Crawl4AI represents a significant advancement in web crawling technology, specifically designed to meet the unique needs of AI applications and large language models. Its adaptive crawling algorithms, LLM-friendly output formats, and intelligent content extraction capabilities make it an invaluable tool for anyone working with AI-driven data collection.

As AI applications continue to evolve and require more sophisticated data collection methods, frameworks like Crawl4AI will play an increasingly important role in bridging the gap between web content and AI systems. By understanding and implementing Crawl4AI's capabilities, you can build more effective, data-driven AI applications that use the full potential of web-based information.

Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect, and here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose entire public datasets, which can be illegal in some countries.