Screenshot API

Effortless Web Screenshots with Real Browsers for Developers

Capture high-quality web page screenshots without interruptions. Our engineered solution adapts to your needs and scales.

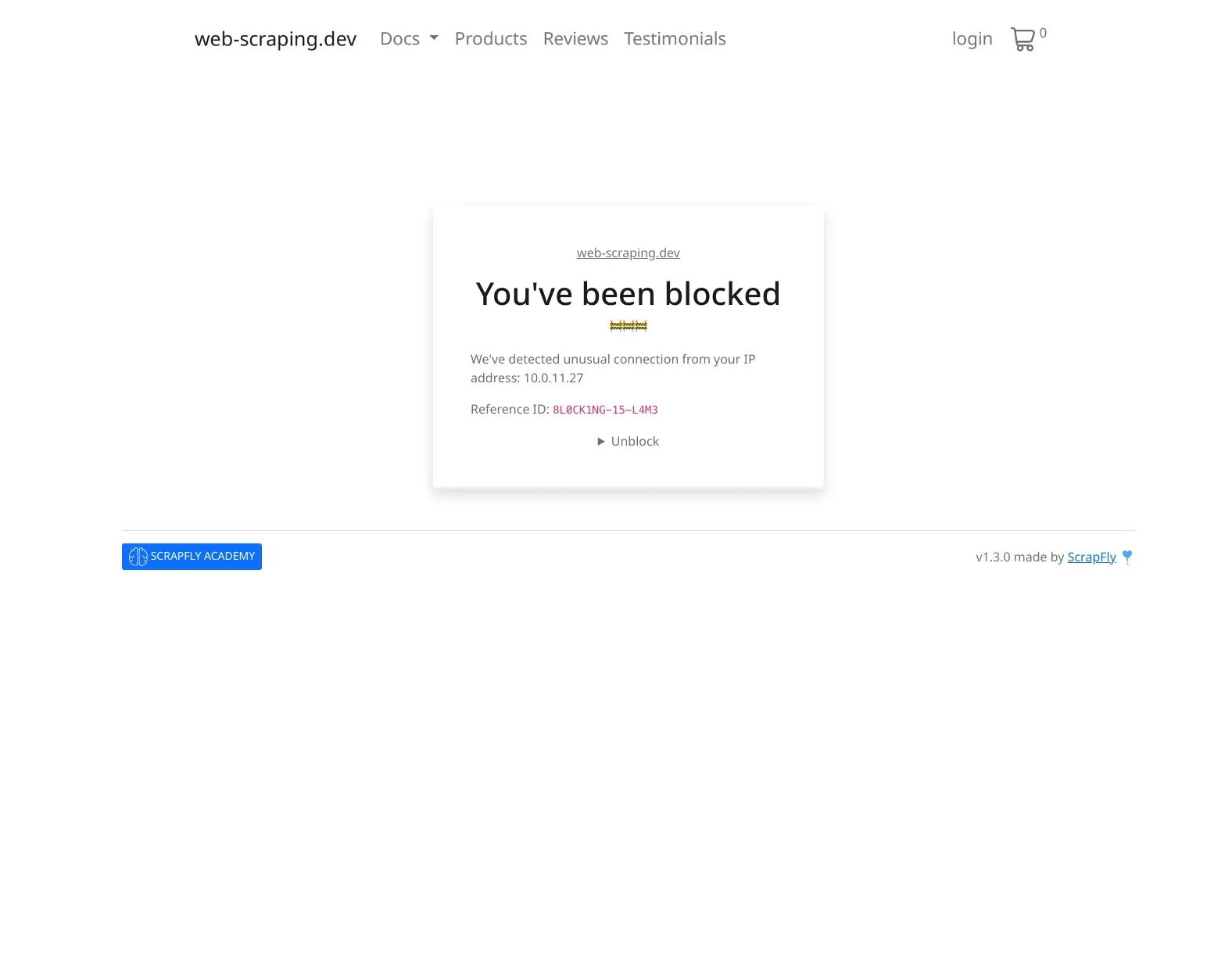

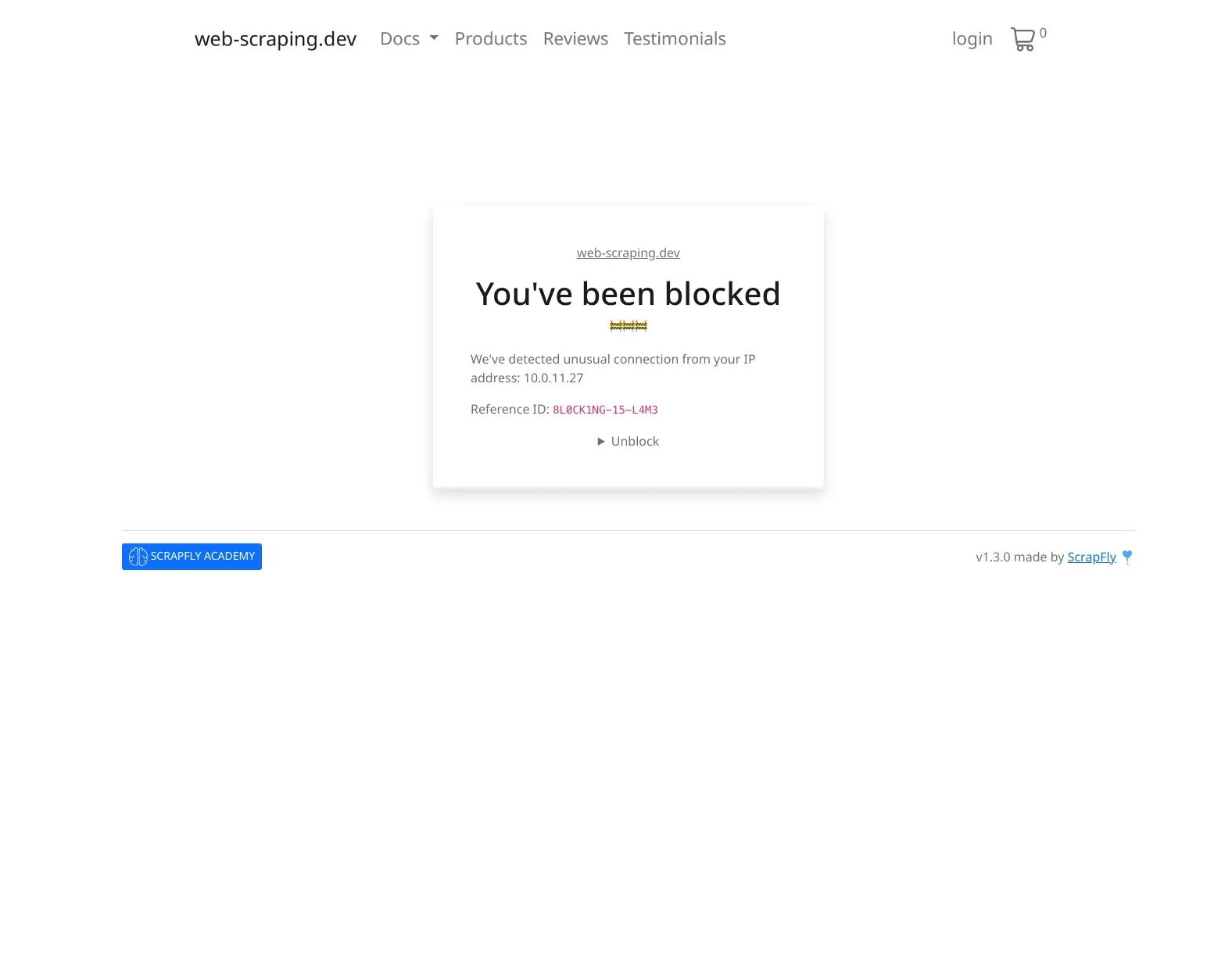

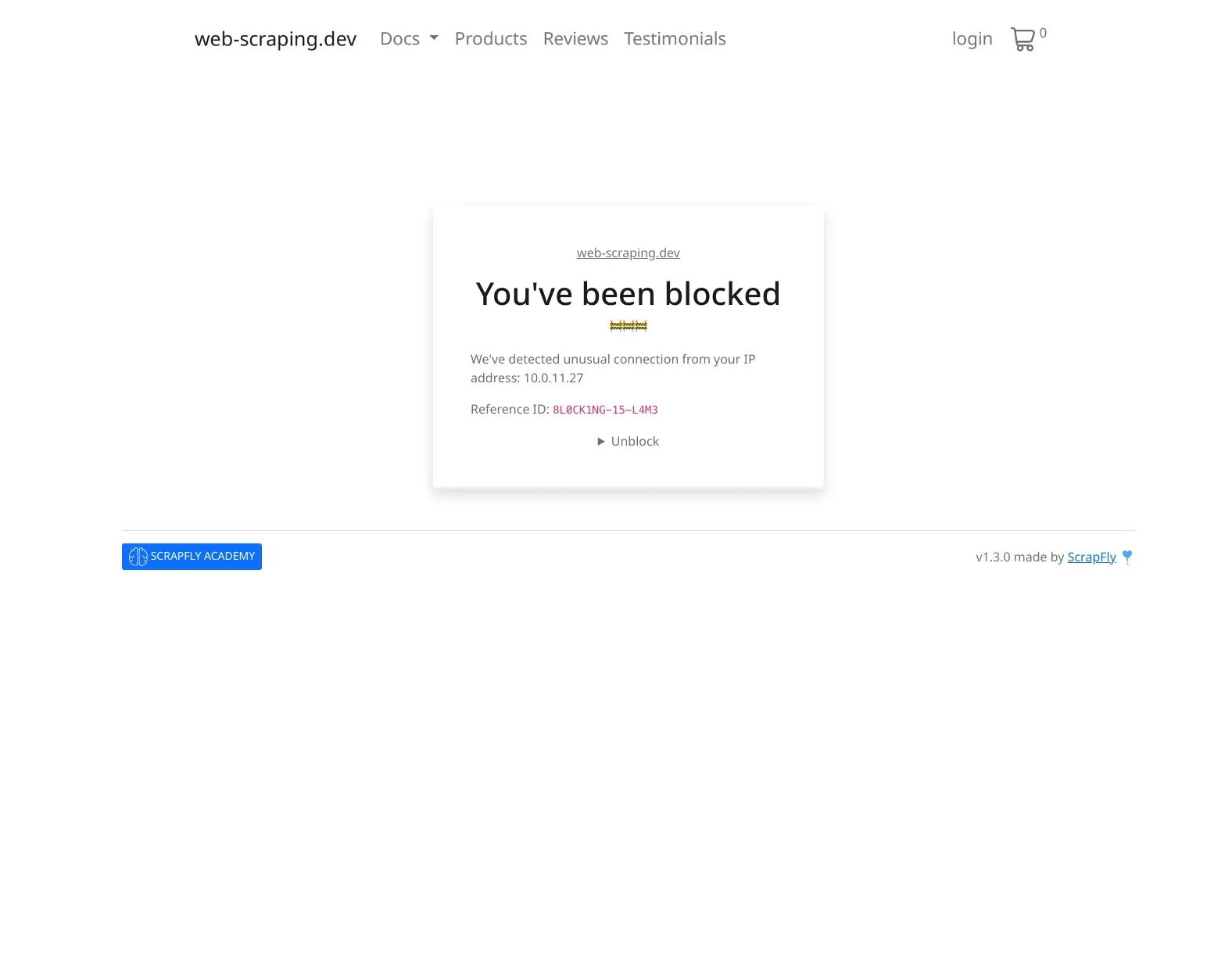

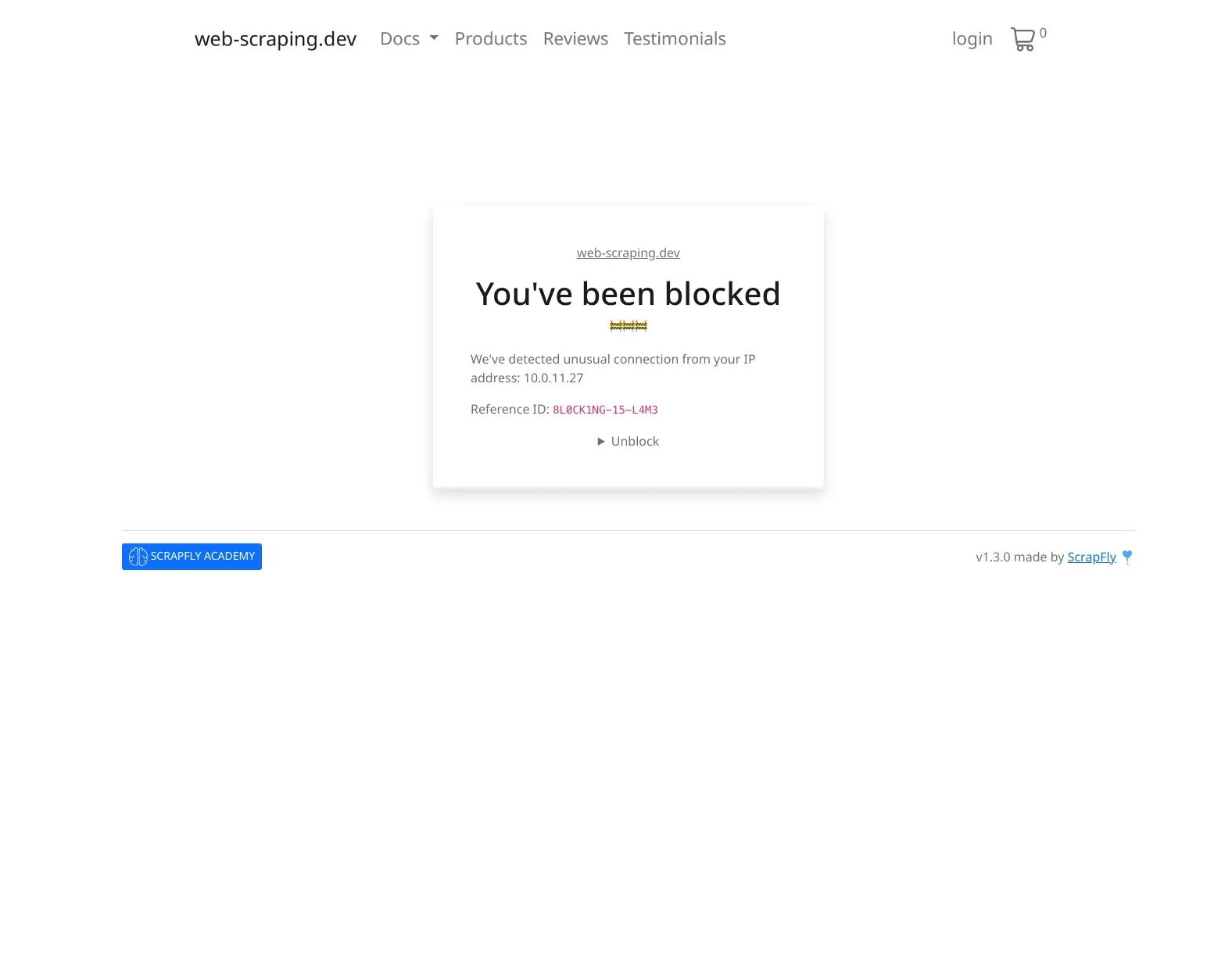

- Bypass bot detection effortlessly and avoid anti-bot measures.

- Use real web browsers to capture complex, dynamic pages.

- Get clean, clutter-free screenshots by blocking ads and cookie pop-ups.

- Integrate seamlessly with Python, TypeScript, and popular platforms.

Simple to Use, Powerful Features

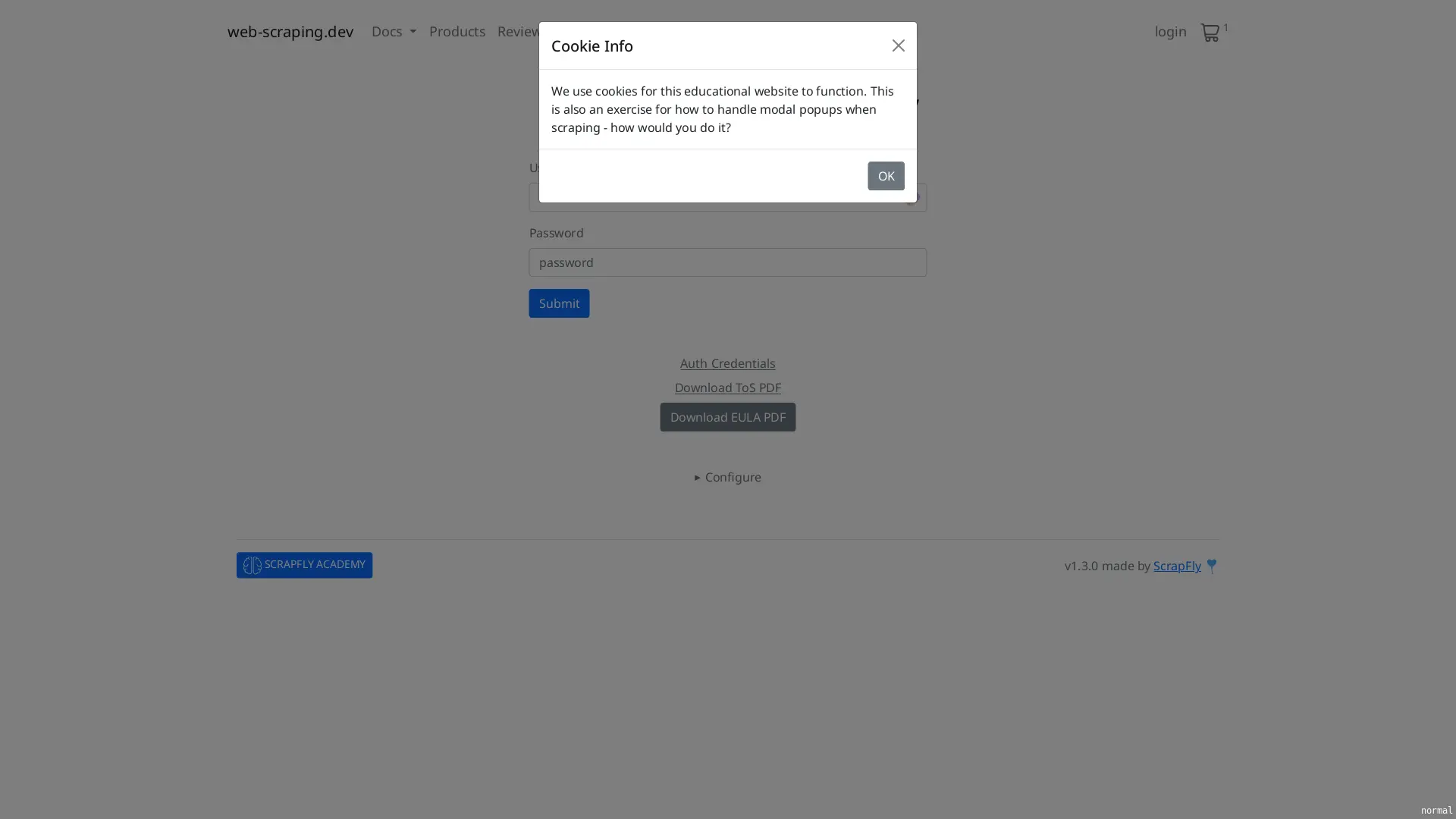

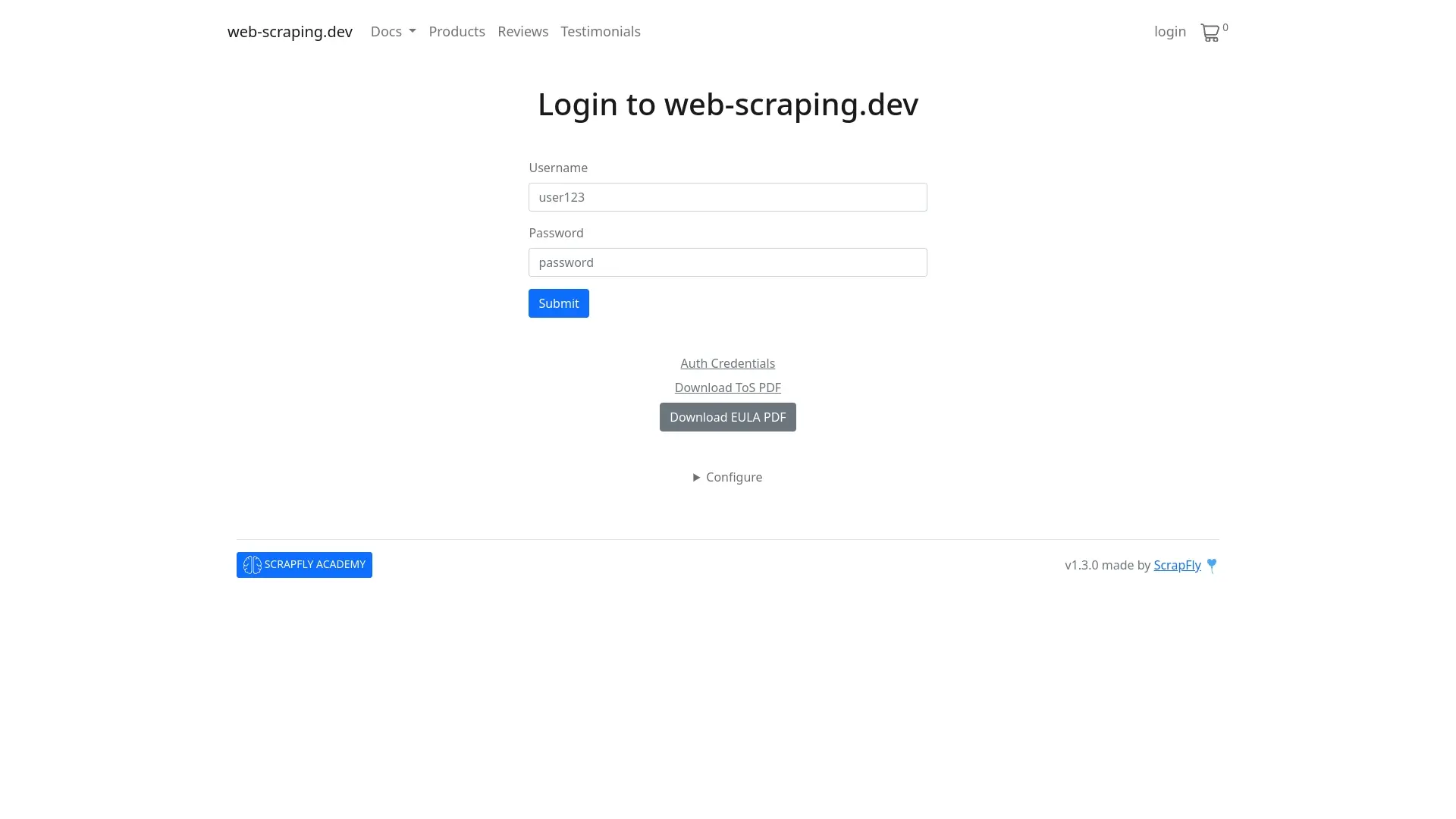

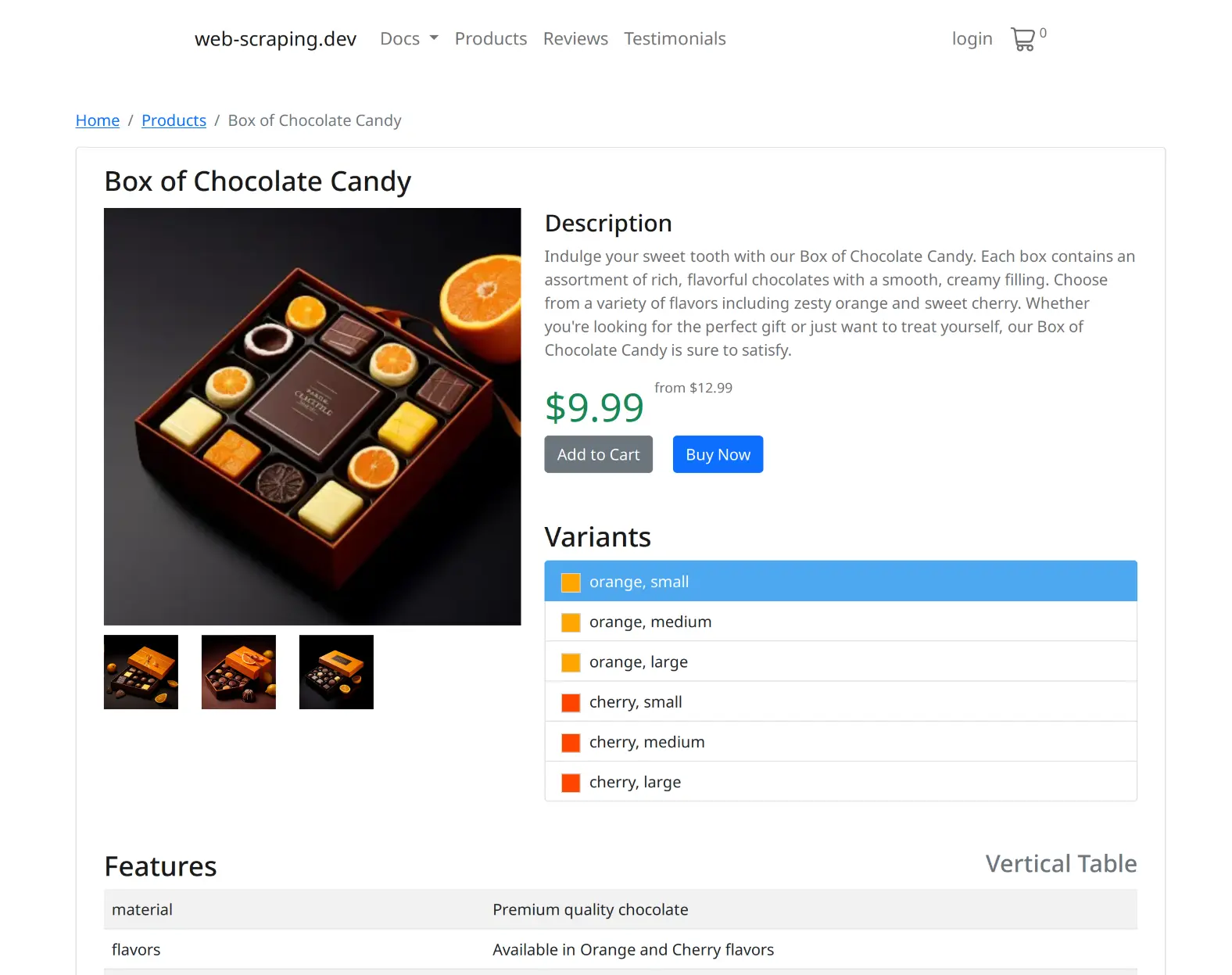

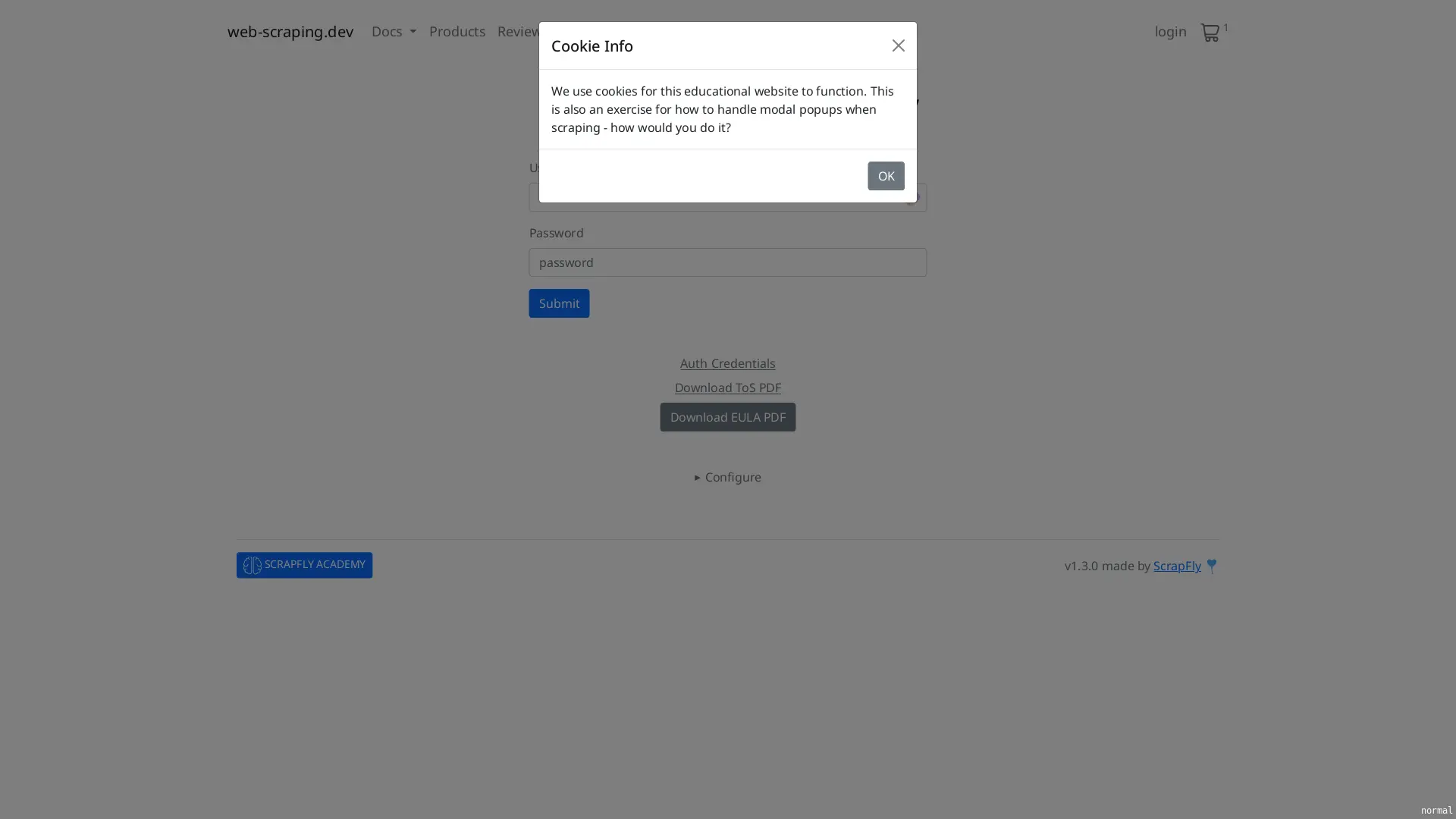

Capture Clean Screenshots

Automatically block ads, cookie pop-ups, and other on-page distractions to get clean, accurate screenshots.

```python
from pathlib import Path
from scrapfly import ScreenshotConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")
api_response = client.screenshot(
    ScreenshotConfig(
        url='https://web-scraping.dev/login?cookies',
        # use one of many modifiers to modify the page
        options=["block_banners"],
    )
)
# save the returned image bytes to disk
Path('sa_options.jpg').write_bytes(api_response.result['result'])
```

```typescript
import {
    ScrapflyClient, ScreenshotConfig,
} from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });
let api_result = await client.screenshot(
    new ScreenshotConfig({
        url: 'https://web-scraping.dev/reviews',
        // use one of many modifiers to modify the page
        options: ['block_banners']
    })
);
console.log(api_result.image);
```

```shell
# the URL is quoted so the shell does not treat "?" as a glob character
http https://api.scrapfly.io/screenshot \
    key==$SCRAPFLY_KEY \
    url=='https://web-scraping.dev/login?cookies' \
    options==block_banners
```

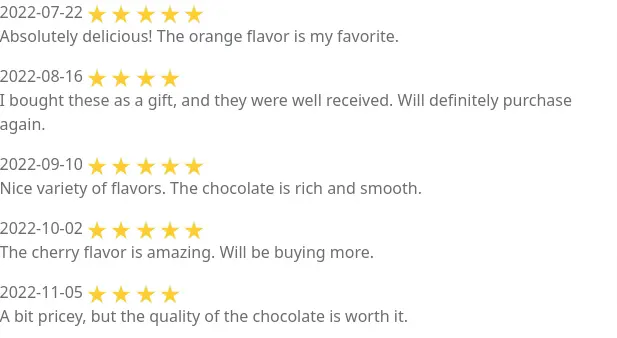

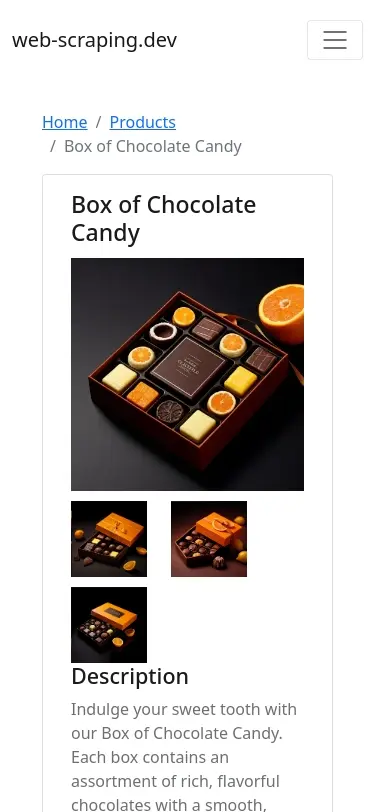

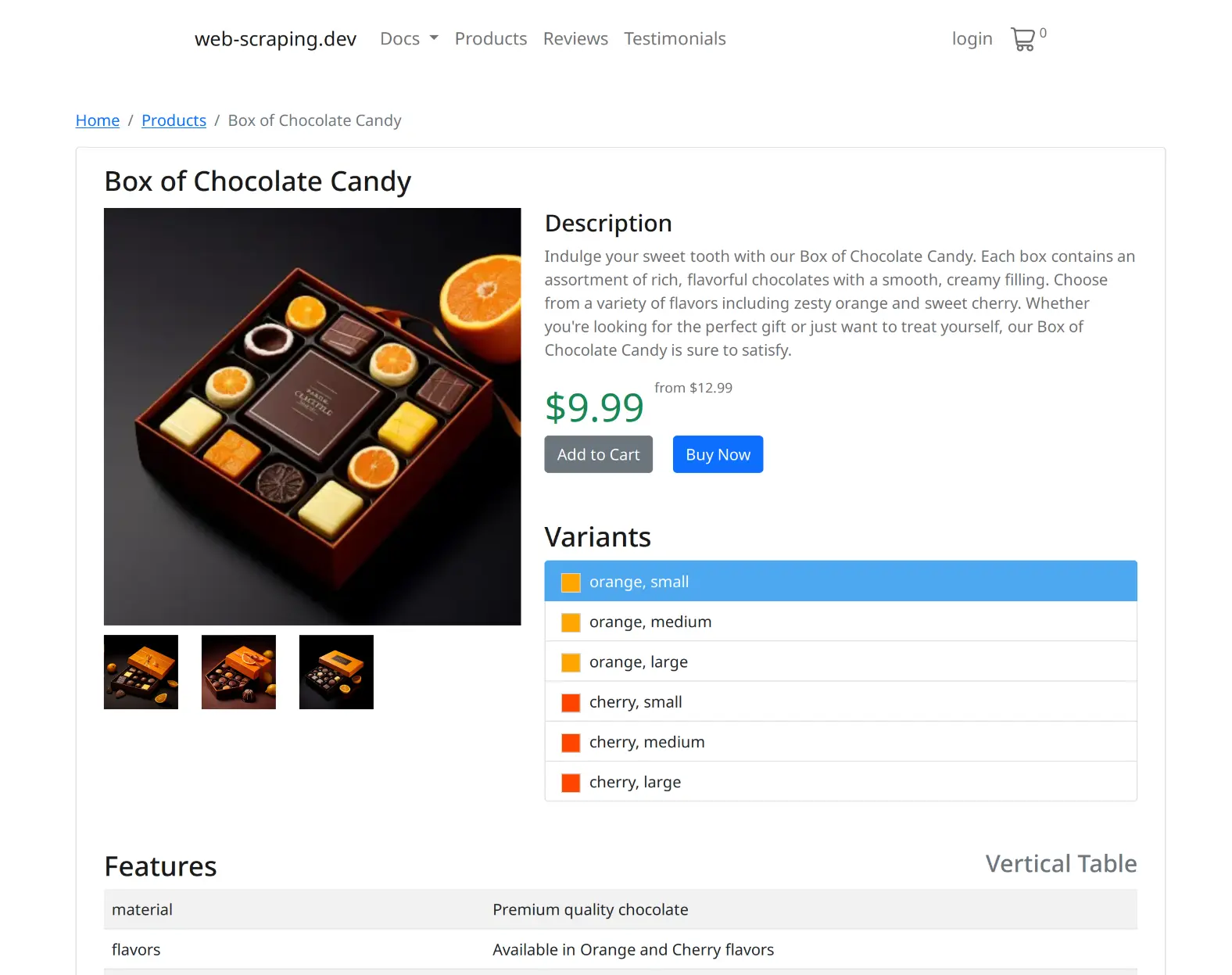

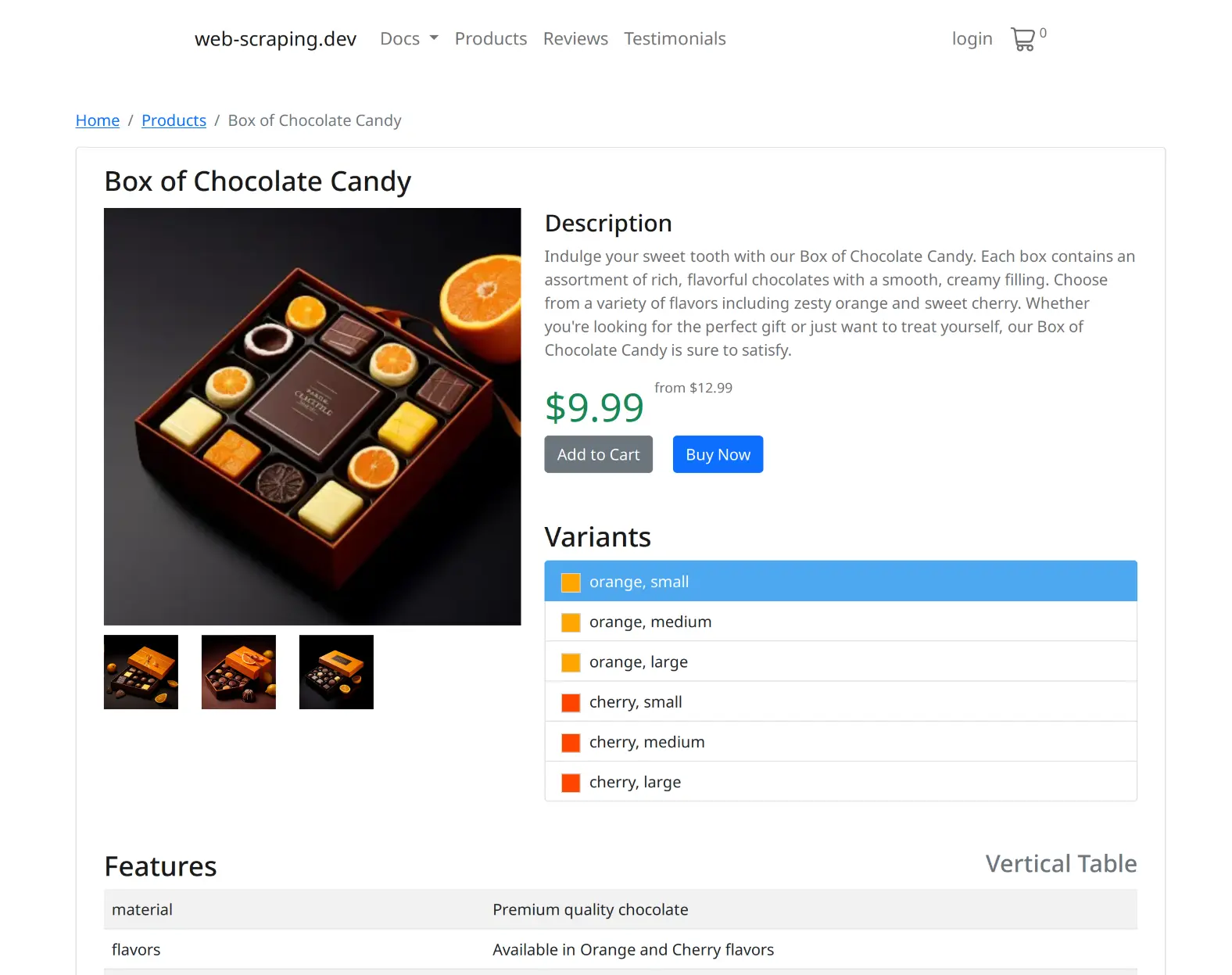

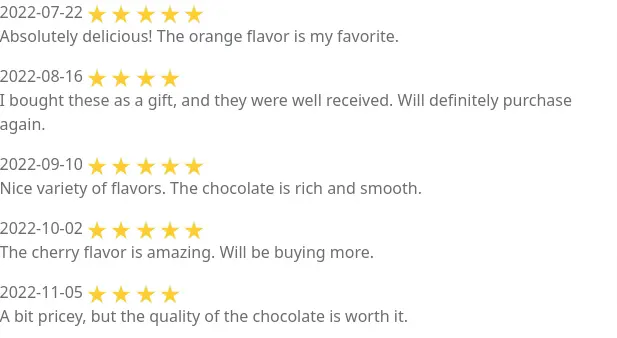

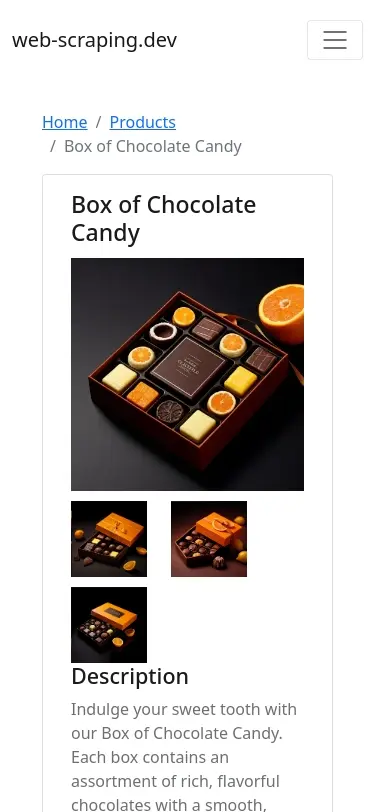

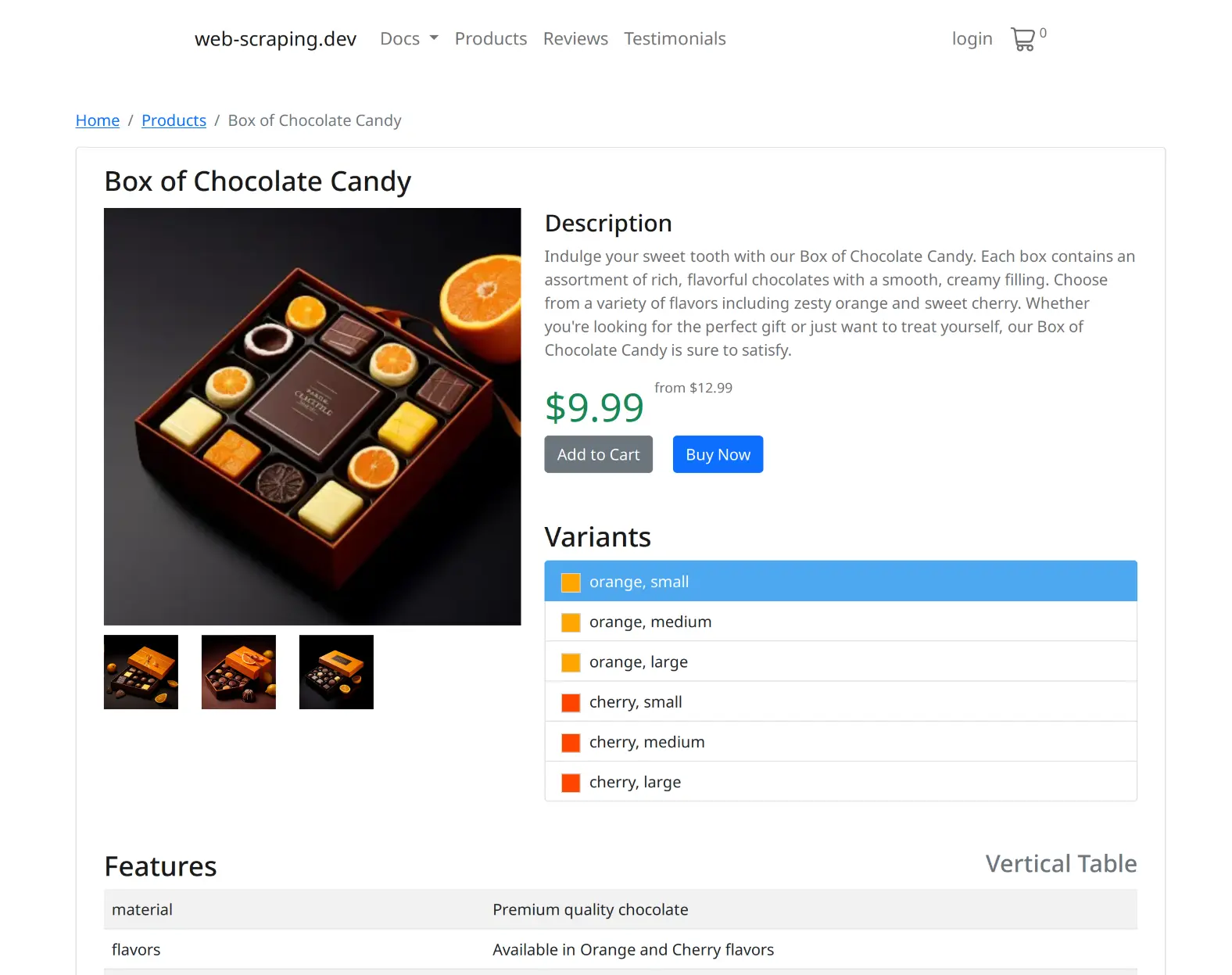

Target Full Page or Specific Areas

Choose between full-page captures or select specific areas of the webpage for precise control.

```python
from pathlib import Path
from scrapfly import ScreenshotConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")
api_response = client.screenshot(
    ScreenshotConfig(
        url='https://web-scraping.dev/product/1',
        # use XPath or CSS selectors to choose what to screenshot
        capture='#reviews',
        # force scrolling to the bottom of the page to load all areas
        auto_scroll=True,
    )
)
Path('sa_areas.jpg').write_bytes(api_response.result['result'])
```

```typescript
import {
    ScrapflyClient, ScreenshotConfig,
} from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });
let api_result = await client.screenshot(
    new ScreenshotConfig({
        url: 'https://web-scraping.dev/product/1',
        // use XPath or CSS selectors to choose what to screenshot
        capture: '#reviews',
        // force scrolling to the bottom of the page to load all areas
        auto_scroll: true,
    })
);
console.log(api_result.image);
```

```shell
# the selector is quoted so the shell passes "#" through literally
http https://api.scrapfly.io/screenshot \
    key==$SCRAPFLY_KEY \
    url==https://web-scraping.dev/product/1 \
    capture=='#reviews' \
    auto_scroll==true
```

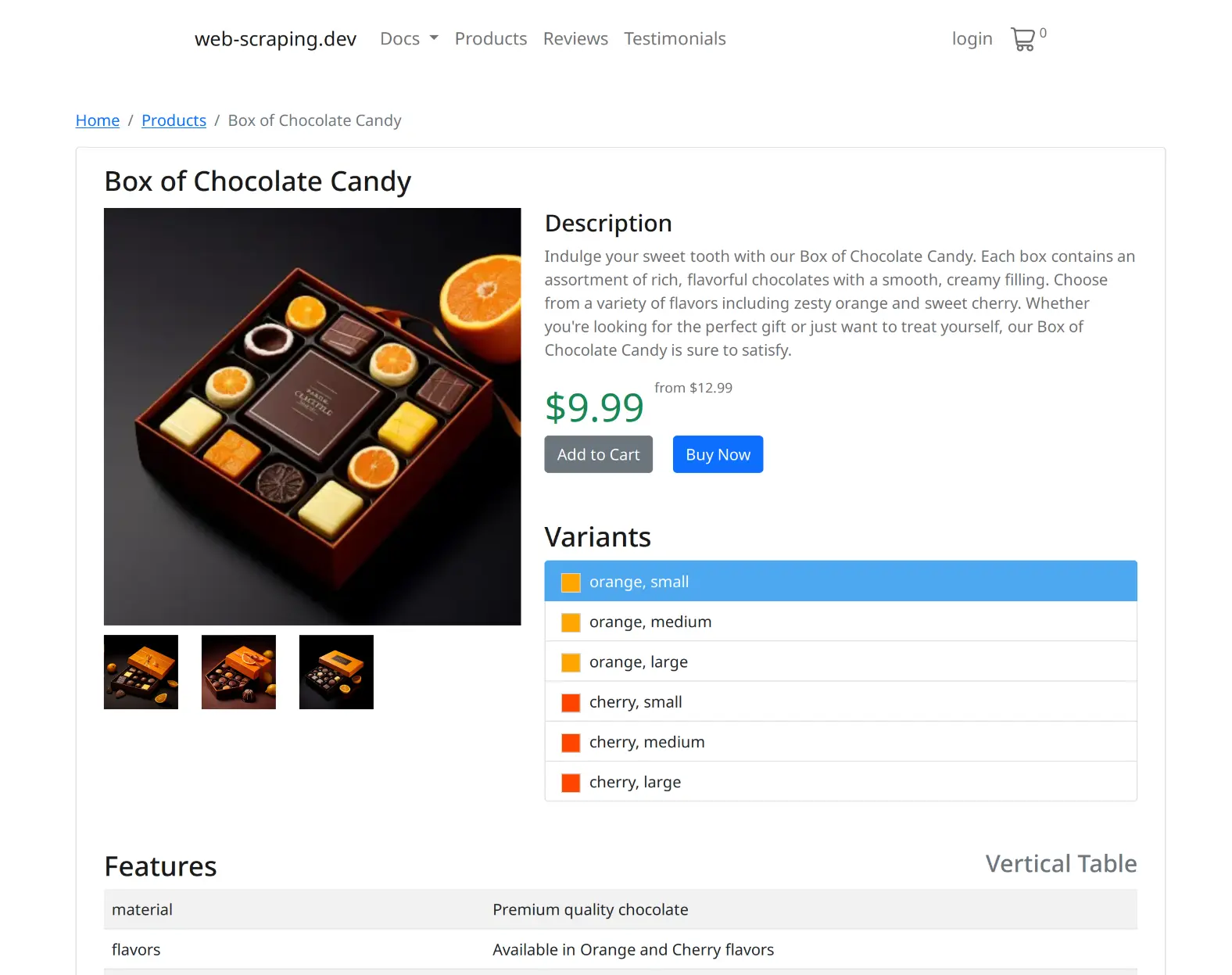

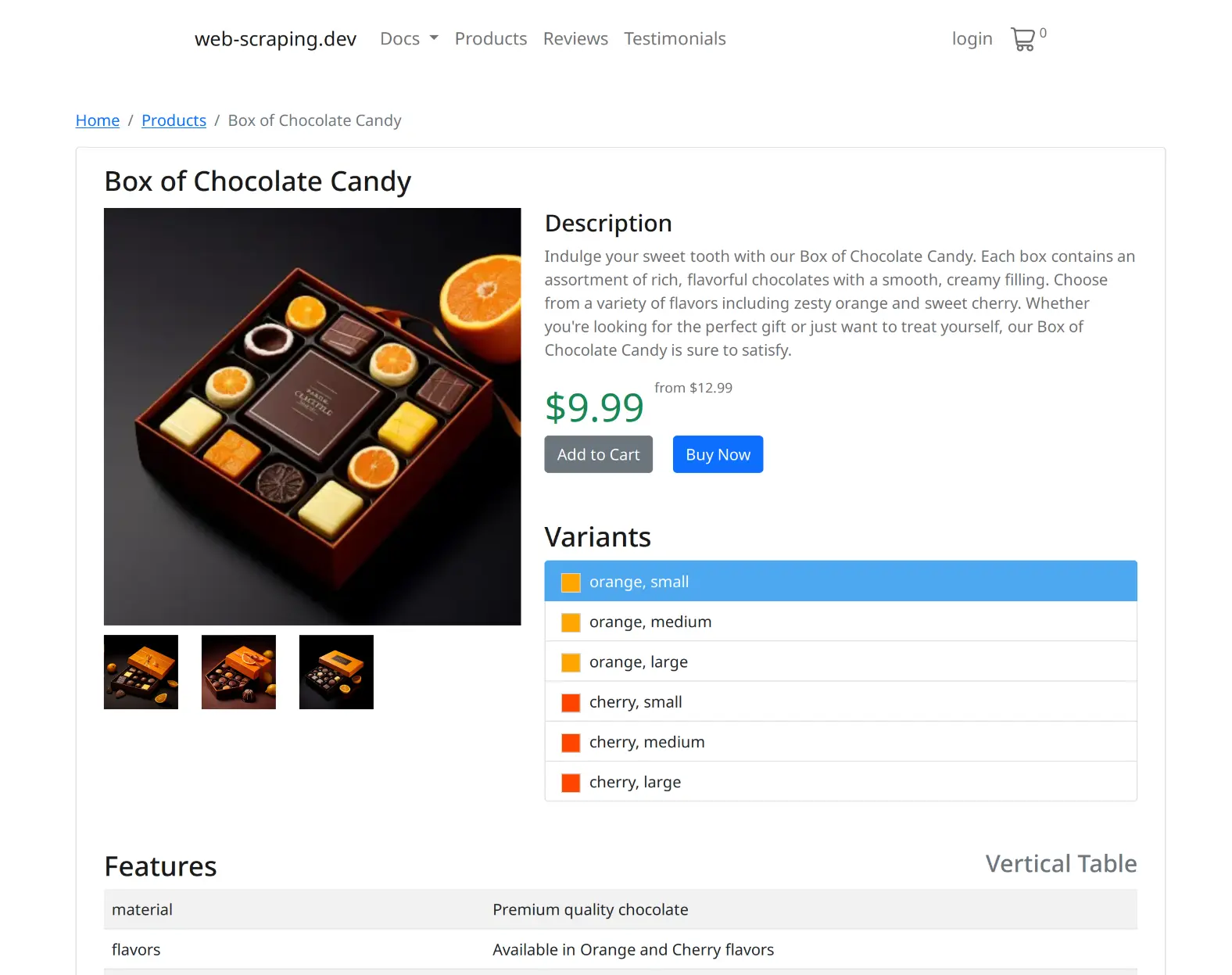

Change Viewport Resolution

Adjust the viewport resolution to match your device requirements or target display settings.

```python
from pathlib import Path
from scrapfly import ScreenshotConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")
api_response = client.screenshot(
    ScreenshotConfig(
        url='https://web-scraping.dev/product/1',
        # set one resolution per request; uncomment the one you need
        # for desktop
        resolution="1920x1080",  # default
        # for mobile
        # resolution="375x812",
        # for tablets
        # resolution="1024x768",
    )
)
Path('sa_resolution.jpg').write_bytes(api_response.result['result'])
```

```typescript
import {
    ScrapflyClient, ScreenshotConfig,
} from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });
let api_result = await client.screenshot(
    new ScreenshotConfig({
        url: 'https://web-scraping.dev/product/1',
        // set one resolution per request; uncomment the one you need
        // for desktop
        resolution: "1920x1080", // default
        // for mobile
        // resolution: "375x812",
        // for tablets
        // resolution: "1024x768",
    })
);
console.log(api_result.image);
```

```shell
http https://api.scrapfly.io/screenshot \
    key==$SCRAPFLY_KEY \
    url==https://web-scraping.dev/product/1 \
    resolution==1920x1080
```
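
Each sample above passes one `resolution` value per request, so covering several device sizes means issuing one request per size. The following is a minimal sketch that assumes the `WIDTHxHEIGHT` string format shown in the samples; the `DEVICE_RESOLUTIONS` mapping and the `parse_resolution` helper are hypothetical names for illustration and are not part of the Scrapfly SDK.

```python
# Hypothetical helper for illustration; not part of the Scrapfly SDK.
DEVICE_RESOLUTIONS = {
    "desktop": "1920x1080",
    "mobile": "375x812",
    "tablet": "1024x768",
}


def parse_resolution(value: str) -> tuple[int, int]:
    """Split a 'WIDTHxHEIGHT' string into integer width and height."""
    width, sep, height = value.lower().partition("x")
    if not sep or not width.isdigit() or not height.isdigit():
        raise ValueError(f"expected WIDTHxHEIGHT, got {value!r}")
    return int(width), int(height)


# Validate every preset before using it as a `resolution` value.
for device, resolution in DEVICE_RESOLUTIONS.items():
    width, height = parse_resolution(resolution)
    print(f"{device}: {width} px wide, {height} px tall")
```

Validating the string locally catches a malformed value before a request is spent on it.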

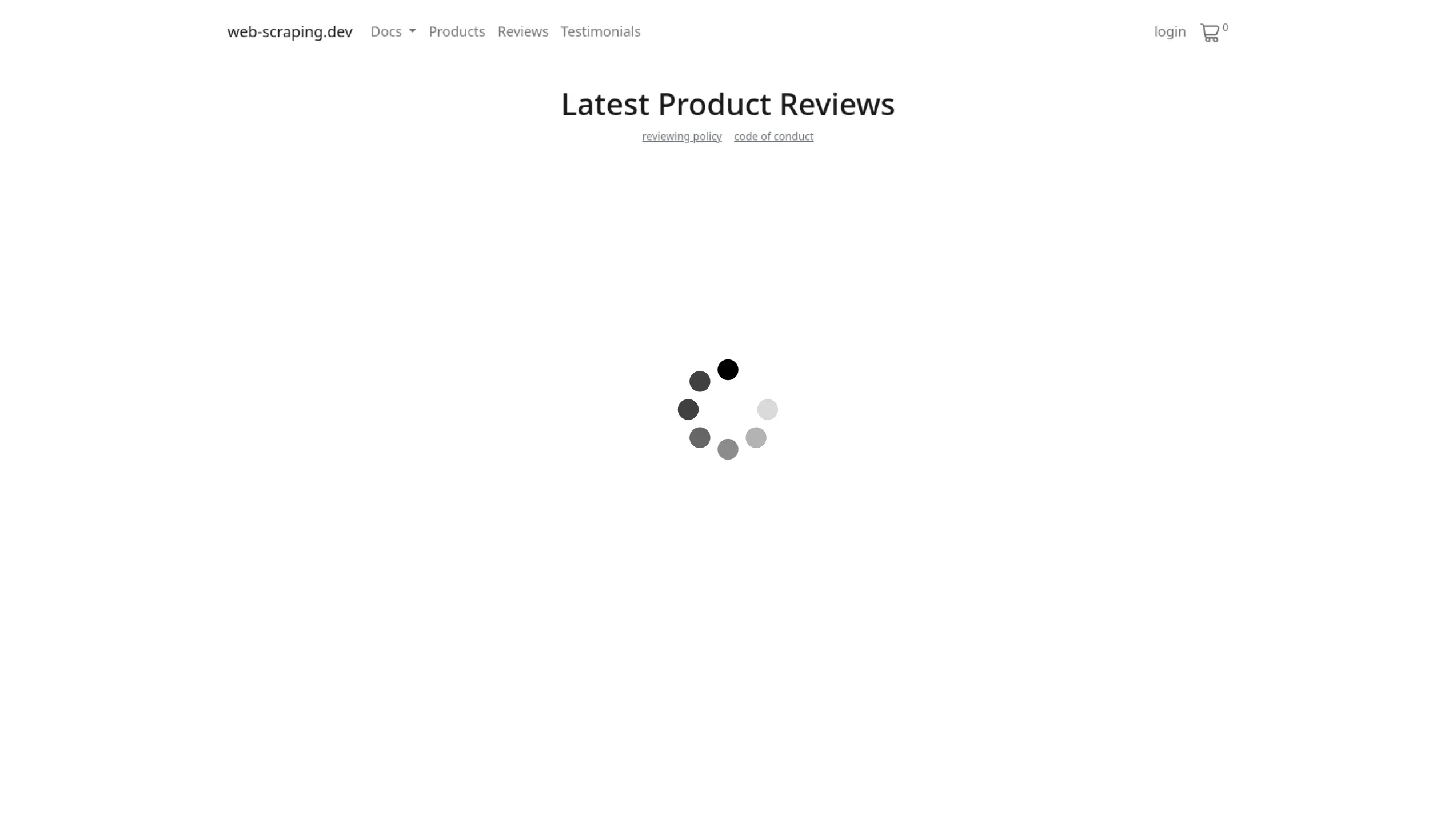

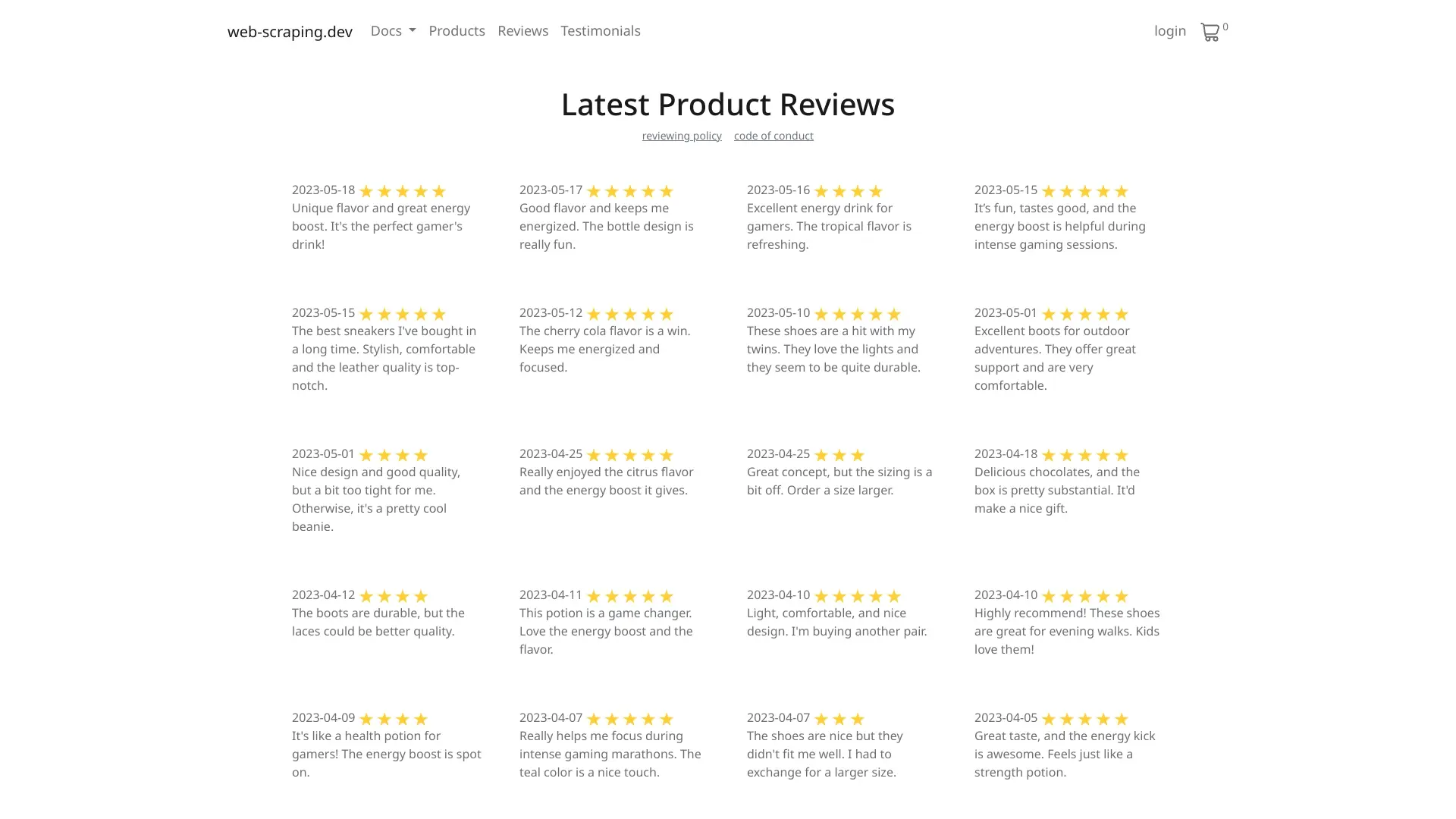

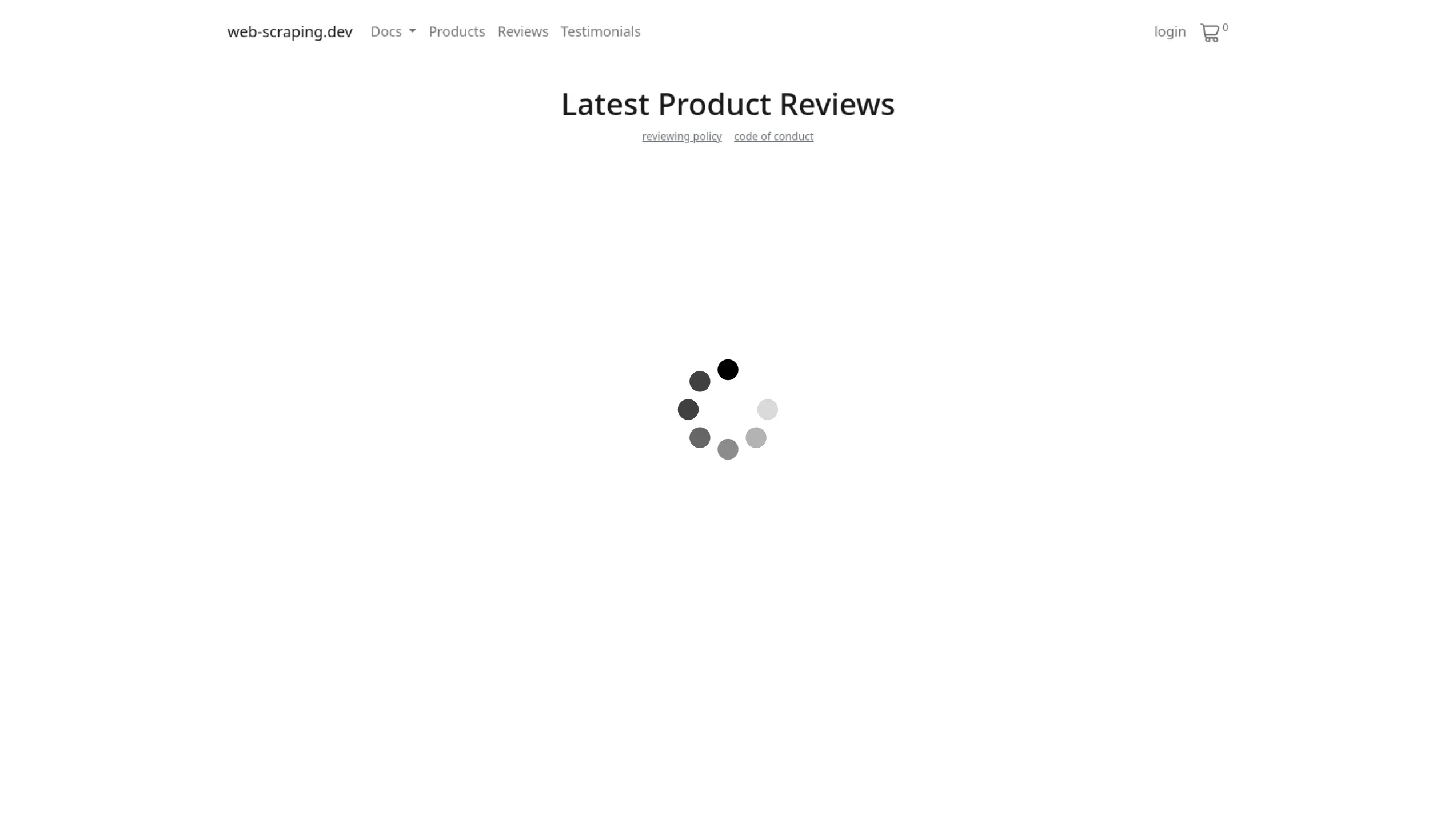

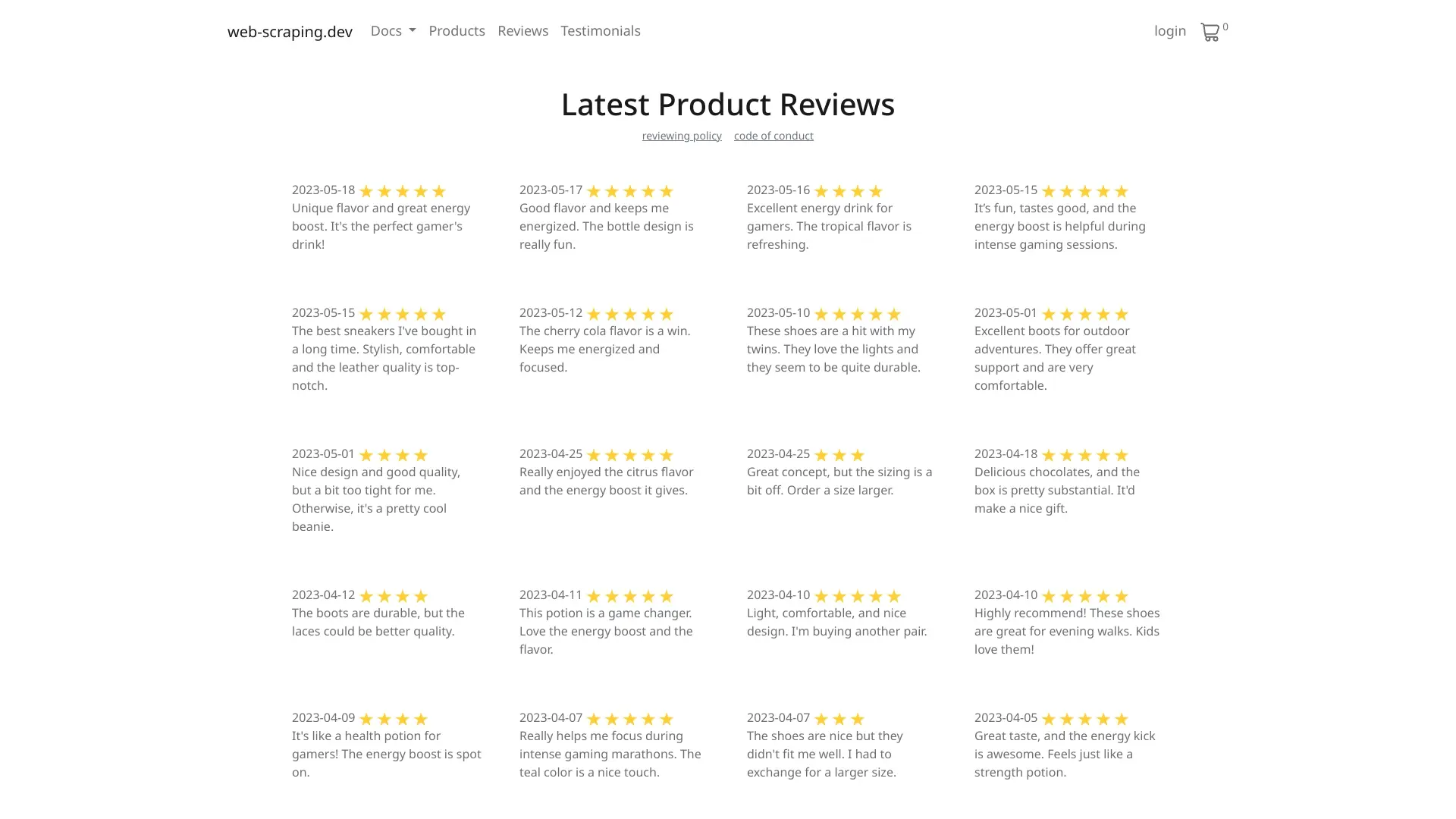

Control the Screenshot Flow

Manage the entire screenshot flow, from start to finish. Wait for elements to load, scroll, and execute JavaScript.

```python
from pathlib import Path
from scrapfly import ScreenshotConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")
api_response = client.screenshot(
    ScreenshotConfig(
        url='https://web-scraping.dev/reviews',
        # wait for a specific element to appear
        wait_for_selector=".review",
        # or wait for a specific time
        rendering_wait=3000,  # 3 seconds
    )
)
Path('sa_flow.jpg').write_bytes(api_response.result['result'])
```

```typescript
import {
    ScrapflyClient, ScreenshotConfig,
} from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });
let api_result = await client.screenshot(
    new ScreenshotConfig({
        url: 'https://web-scraping.dev/reviews',
        // wait for a specific element to appear
        wait_for_selector: ".review",
        // or wait for a specific time
        rendering_wait: 3000, // 3 seconds
    })
);
console.log(api_result.image);
```

```shell
http https://api.scrapfly.io/screenshot \
    key==$SCRAPFLY_KEY \
    url==https://web-scraping.dev/reviews \
    wait_for_selector==.review \
    rendering_wait==3000
```

Bypass Anti-Bot Measures Automatically

Automatically solve JS challenges and optimize requests for seamless anti-bot bypass.

```python
from pathlib import Path
from scrapfly import ScreenshotConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")
api_response = client.screenshot(
    ScreenshotConfig(
        url='https://web-scraping.dev/product/1',
        # ^ auto anti-bot bypass, no extra configuration needed
    )
)
Path('sa_flow.jpg').write_bytes(api_response.result['result'])
```

```typescript
import {
    ScrapflyClient, ScreenshotConfig,
} from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });
let api_result = await client.screenshot(
    new ScreenshotConfig({
        url: 'https://web-scraping.dev/product/1',
        // ^ auto anti-bot bypass, no extra configuration needed
    })
);
console.log(api_result.image);
```

```shell
http https://api.scrapfly.io/screenshot \
    key==$SCRAPFLY_KEY \
    url==https://web-scraping.dev/product/1
```

Automatic Proxy Rotation from 130M+ Proxies in 120+ Countries

Access any page at scale with automatic proxy rotation that assigns a new IP address for each request.

```python
from pathlib import Path
from scrapfly import ScreenshotConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")
api_response = client.screenshot(
    ScreenshotConfig(
        url='https://web-scraping.dev/product/1',
        # ^ a new IP address is assigned automatically for each request
    )
)
Path('sa_flow.jpg').write_bytes(api_response.result['result'])
```

```typescript
import {
    ScrapflyClient, ScreenshotConfig,
} from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });
let api_result = await client.screenshot(
    new ScreenshotConfig({
        url: 'https://web-scraping.dev/product/1',
        // ^ a new IP address is assigned automatically for each request
    })
);
console.log(api_result.image);
```

```shell
http https://api.scrapfly.io/screenshot \
    key==$SCRAPFLY_KEY \
    url==https://web-scraping.dev/product/1
```

Use Server-Side Caching to Speed Up

Cache captured screenshots on Scrapfly servers for easy integration with real-time tools.

```python
from pathlib import Path
from scrapfly import ScreenshotConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")
api_response = client.screenshot(
    ScreenshotConfig(
        url='https://web-scraping.dev/product/1',
        # enable cache
        cache=True,
        # optionally set expiration
        cache_ttl=3600,  # 1 hour
        # or clear cache any time
        # cache_clear=True,
    )
)
Path('sa_flow.jpg').write_bytes(api_response.result['result'])
```

```typescript
import {
    ScrapflyClient, ScreenshotConfig,
} from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });
let api_result = await client.screenshot(
    new ScreenshotConfig({
        url: 'https://web-scraping.dev/product/1',
        // enable cache
        cache: true,
        // optionally set expiration
        cache_ttl: 3600, // 1 hour
        // or clear cache any time
        // cache_clear: true,
    })
);
console.log(api_result.image);
```

```shell
http https://api.scrapfly.io/screenshot \
    key==$SCRAPFLY_KEY \
    url==https://web-scraping.dev/product/1 \
    cache==true \
    cache_ttl==3600 \
    cache_clear==true
```

Export to Different Screenshot Formats

Capture screenshots directly in jpg, png, webp, or gif format.

```python
from pathlib import Path
from scrapfly import ScreenshotConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")
api_response = client.screenshot(
    ScreenshotConfig(
        url='https://web-scraping.dev/product/1',
        # directly capture in your file type
        format="jpg",  # jpg, png, webp, gif etc.
    )
)
Path('sa_flow.jpg').write_bytes(api_response.result['result'])
```

```typescript
import {
    ScrapflyClient, ScreenshotConfig,
} from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });
let api_result = await client.screenshot(
    new ScreenshotConfig({
        url: 'https://web-scraping.dev/product/1',
        // directly capture in your file type
        format: "jpg", // jpg, png, webp, gif etc.
    })
);
console.log(api_result.image);
```

```shell
http https://api.scrapfly.io/screenshot \
    key==$SCRAPFLY_KEY \
    url==https://web-scraping.dev/product/1 \
    format==jpg
```

Capture Data for Your Industry!

AI Training

Capture the latest images, videos, and user-generated content for AI training.

Compliance

Capture online presence to validate compliance and security.

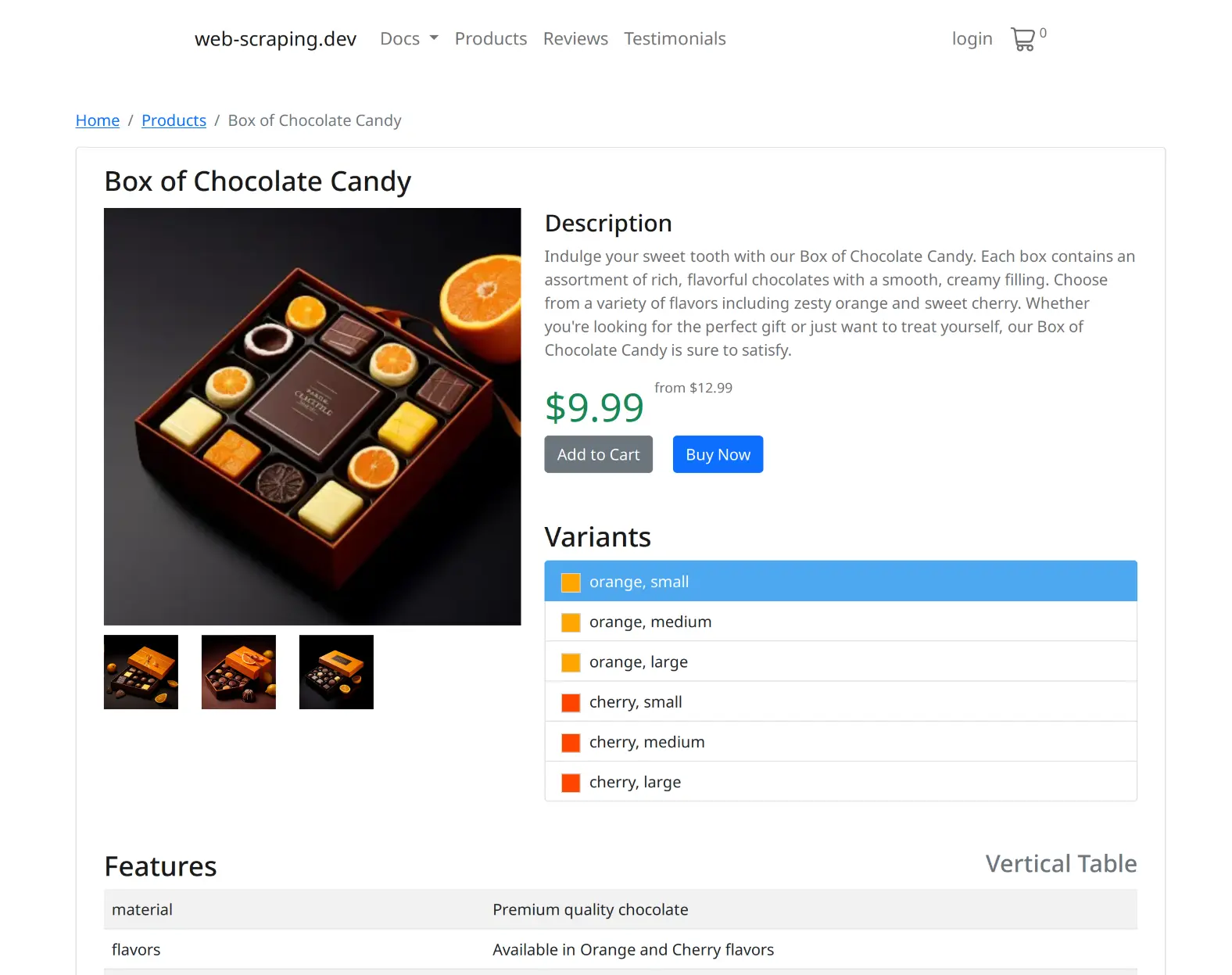

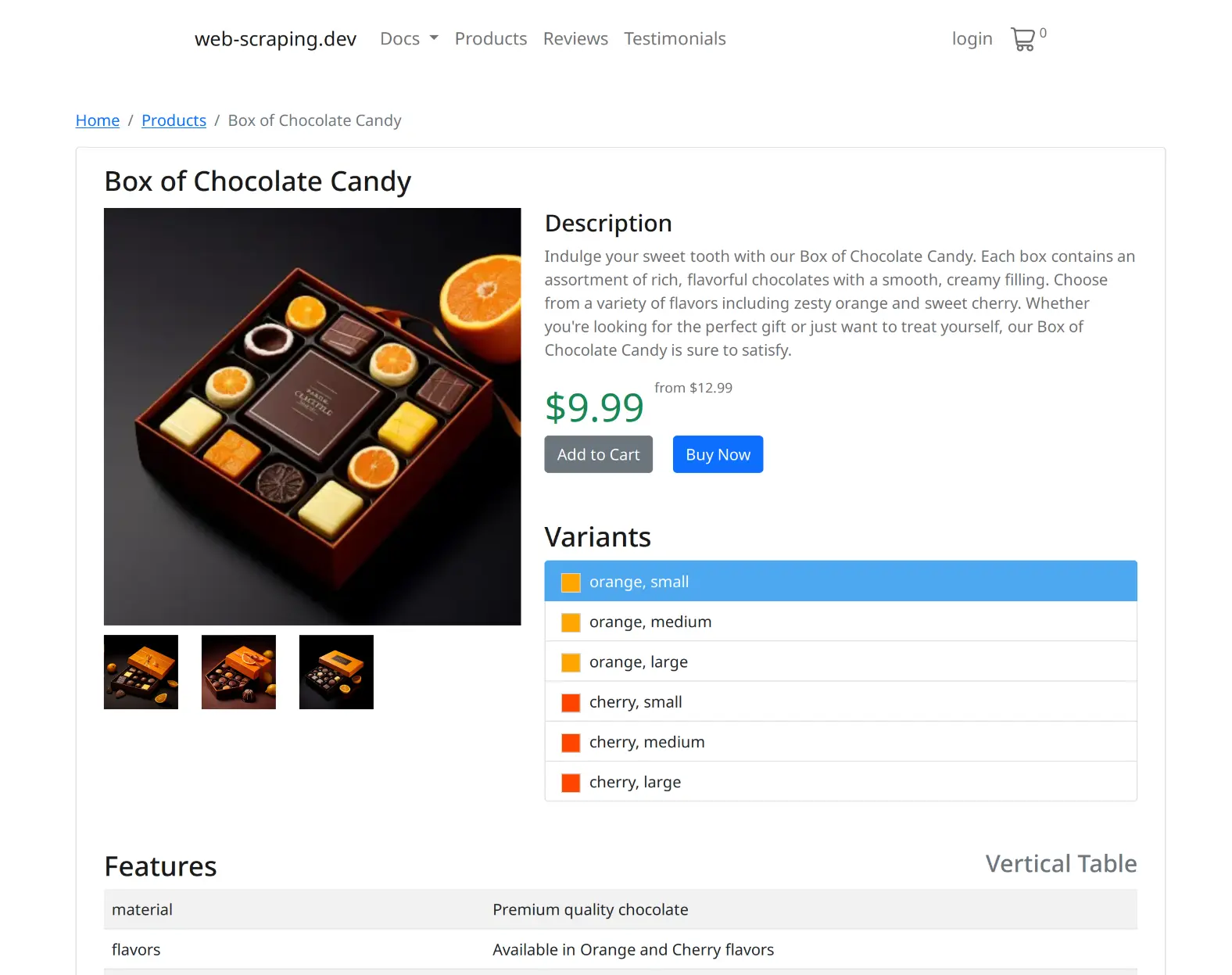

eCommerce

Capture product listings, images, reviews, and prices.

Financial Service

Capture the latest stock, shipping and financial data to enhance your finance datasets.

Fraud Detection

Capture products and listings to detect fraud and counterfeit activity.

Jobs Data

Capture the latest job listings, salaries and more to enhance your job search.

Lead Generation

Capture online profiles and contact details to enhance your lead generation.

Logistics

Capture logistics data such as shipping, tracking, and container prices to enhance your deliveries.

Explore More Use Cases

Developer-First Experience

We made Scrapfly for ourselves in 2017 and opened it to the public in 2020. Throughout that time, we have focused on building the best developer experience possible.

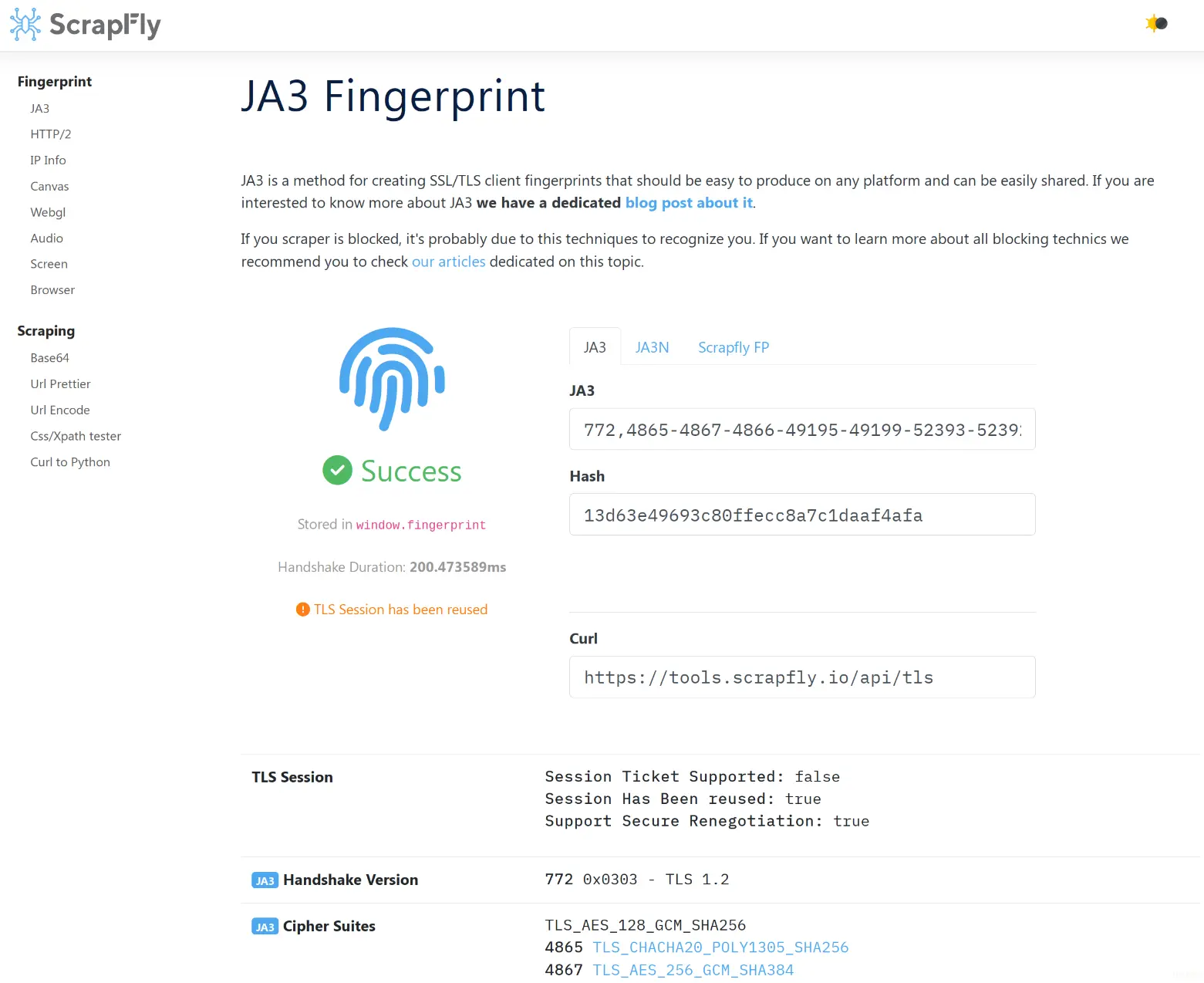

Master Web Data with our Docs and Tools

Access a complete ecosystem of documentation, tools, and resources designed to accelerate your data journey and help you get the most out of Scrapfly.

- Learn with Scrapfly Academy

  Learn everything about data retrieval and web scraping with our interactive courses.

- Develop with Scrapfly Tools

  Streamline your web data development with our web tools designed to enhance every step of the process.

- Stay Up-To-Date with our Newsletter and Blog

  Stay updated with the latest trends and insights in web data with our monthly newsletter and weekly blog posts.

Seamlessly Integrate with Frameworks & Platforms

Easily integrate Scrapfly with your favorite tools and platforms, or customize workflows with our Python and TypeScript SDKs.

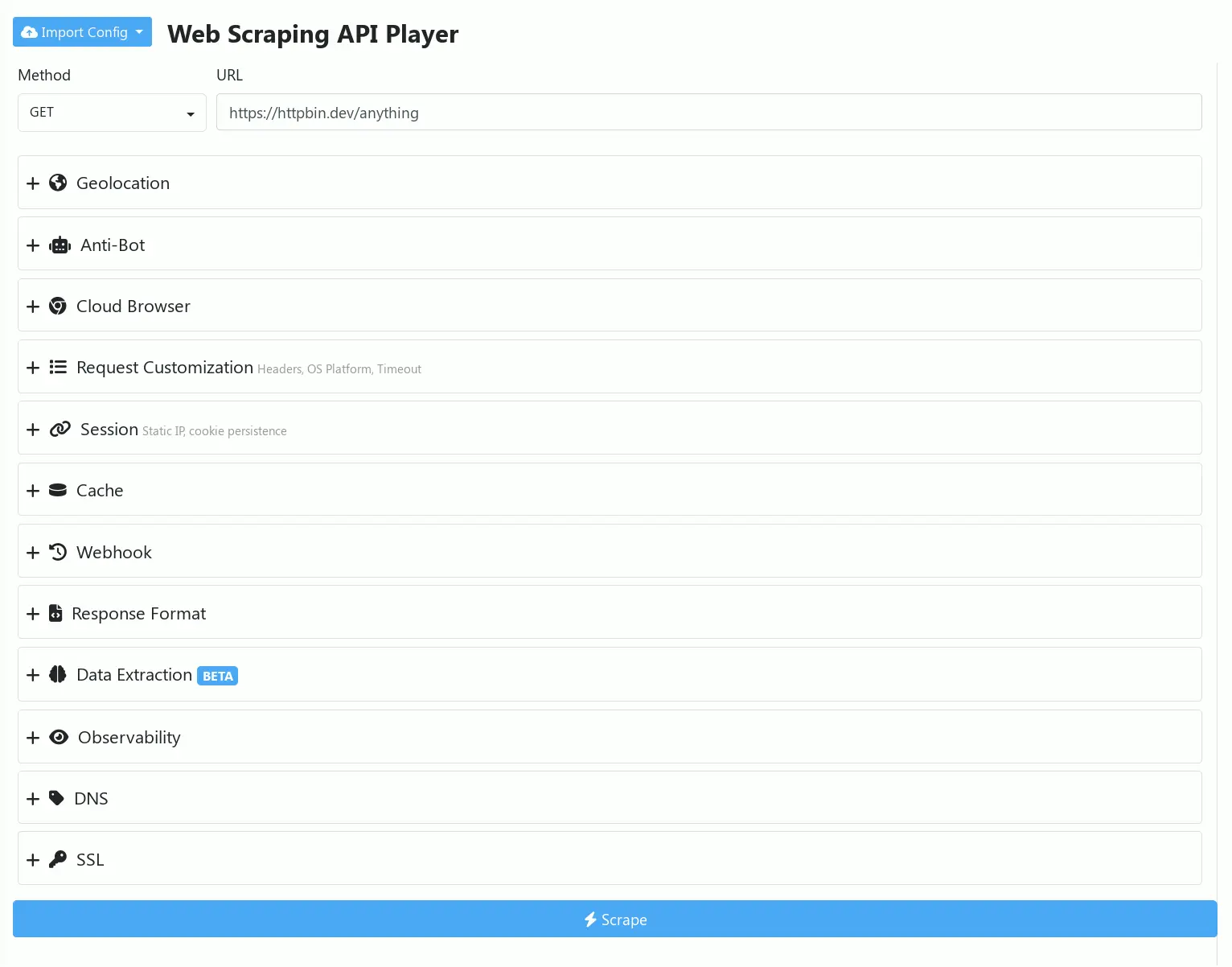

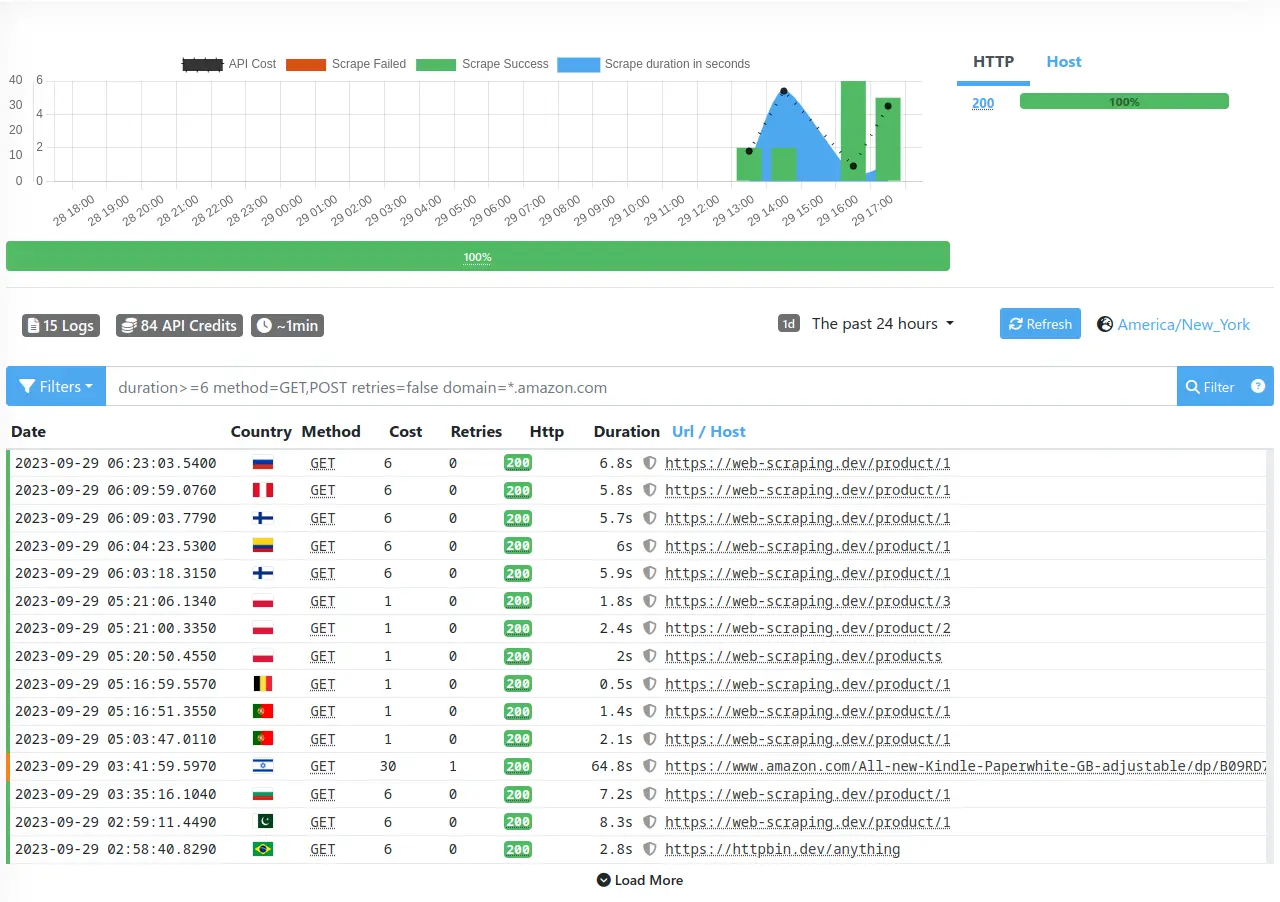

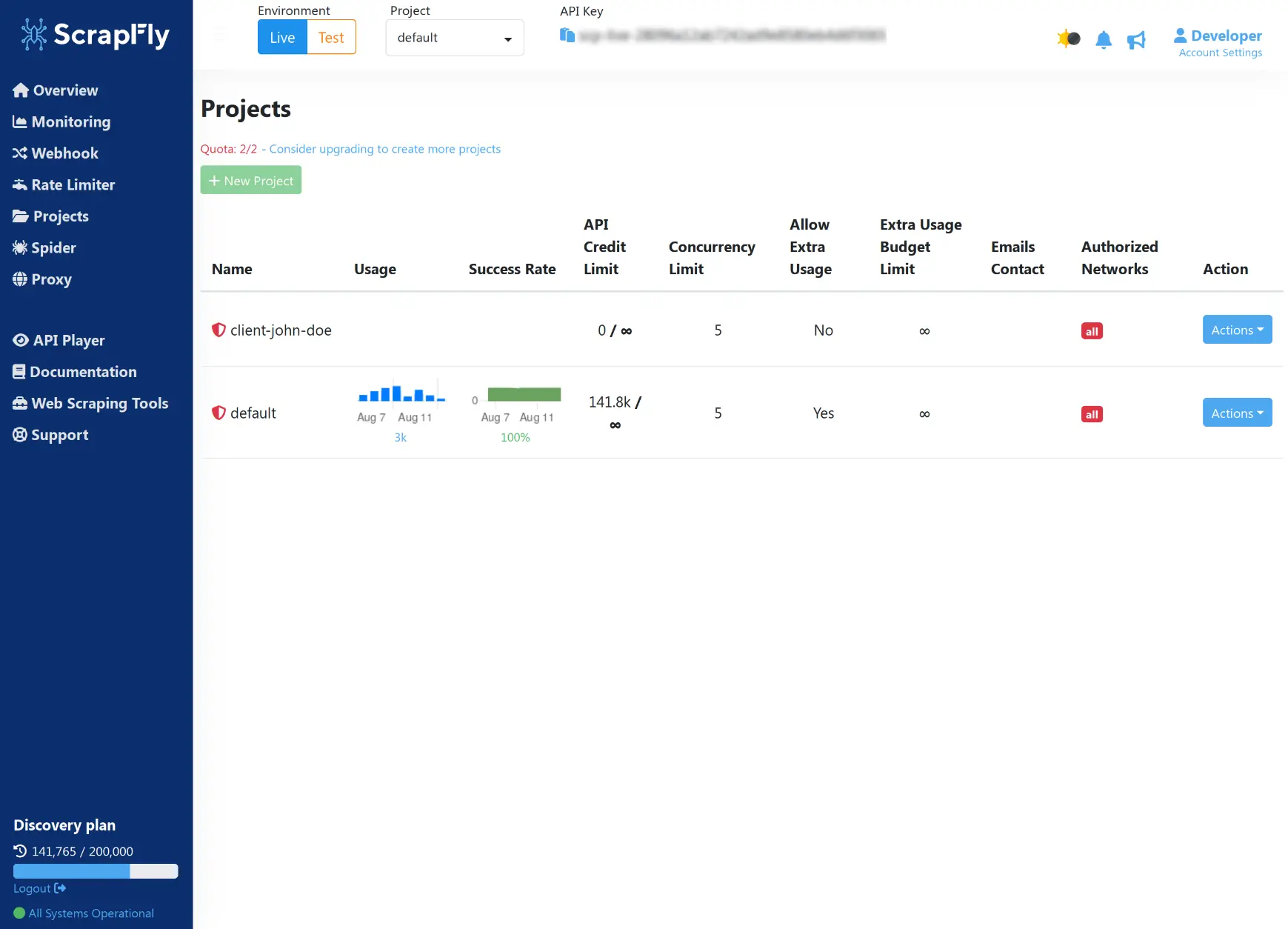

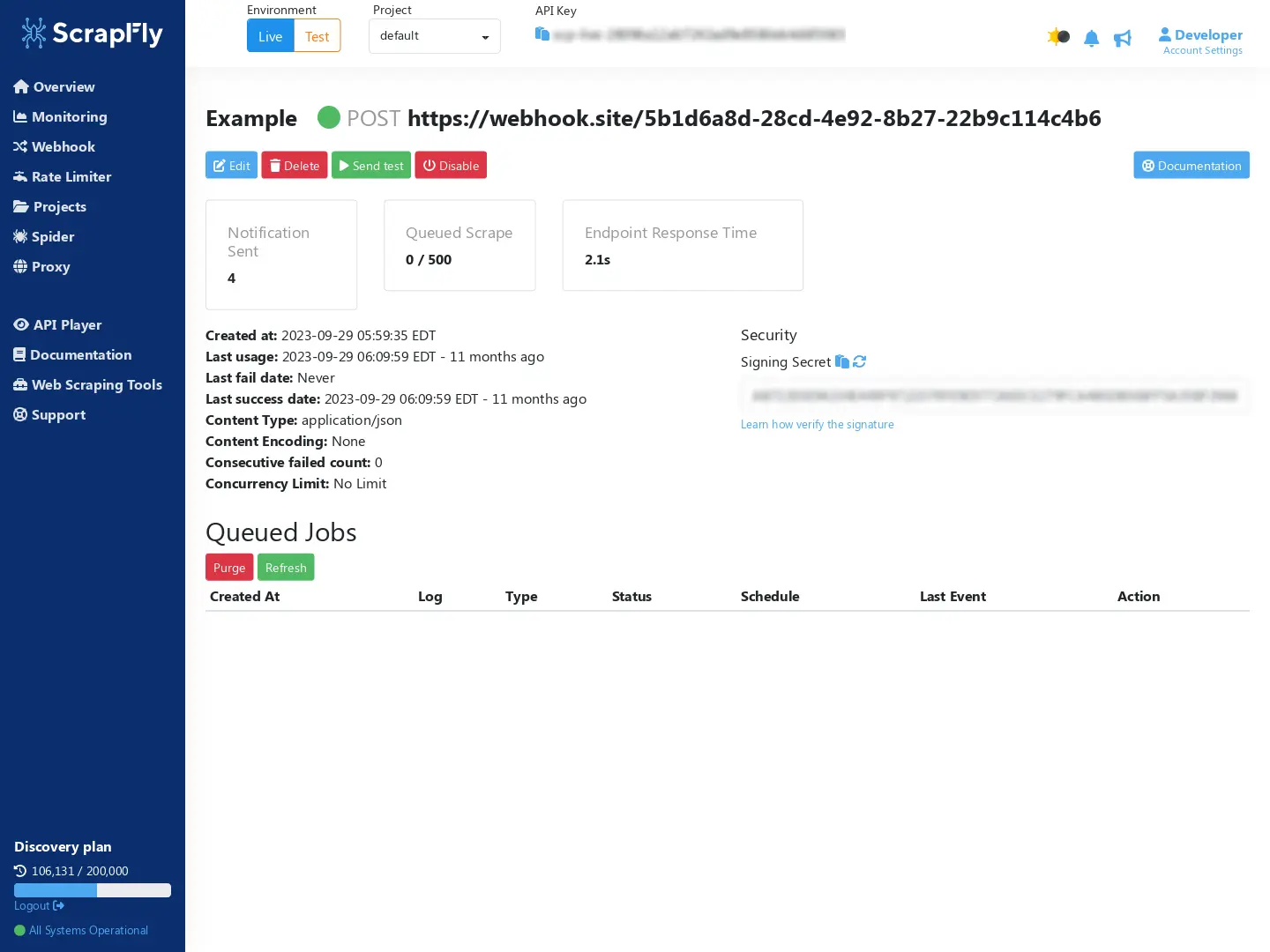

Powerful Web UI

One-stop shop to configure, control and observe all of your Scrapfly activity.

- Experiment with Web API Player

  Use our Web API player for easy testing, experimenting, and sharing for collaboration and seamless integration.

- Manage Multiple Projects

  Manage multiple projects with ease, complete with built-in testing environments for full control and flexibility.

- Attach Webhooks & Throttlers

  Upgrade your API calls with webhooks for true asynchronous architecture and throttlers to control your usage.

Simple Pricing

How Many Screenshots per Month?

| Plan | Custom | Enterprise | Startup | Pro | Discovery |
|---|---|---|---|---|---|
| Price | ∞ /mo | $500/mo | $250/mo | $100/mo | $30/mo |
| Included API Credits | ∞ | 5,500,000 | 2,500,000 | 1,000,000 | 200,000 |
| Screenshots | ∞ | 91,667 | 41,667 | 16,667 | 3,333 |
| Extra API Credits | ∞ per 10k | $1.20 per 10k | $2.00 per 10k | $3.50 per 10k | ✖ |
| Concurrent Requests | ∞ | 100 | 50 | 20 | 5 |
| Log Retention | ∞ weeks | 4 weeks | 3 weeks | 2 weeks | 1 week |
| Residential Proxy | ✓ | ✓ | ✓ | ✓ | ✓ |
| Geo targeting | ✓ | ✓ | ✓ | ✓ | ✓ |
| Team Management | ✓ | ✓ | ✓ | ✖ | ✖ |
| Support | Premium Support | Premium Support | Standard Support | Standard Support | Basic Support |
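
The Screenshots row appears to follow from the credit allowance: dividing each plan's included credits by its screenshot count gives roughly 60 credits per screenshot. That rate is inferred from the figures above rather than stated on this page, so treat it as an assumption. A short sketch of the arithmetic:

```python
# Plan figures copied from the pricing table: (included credits, listed screenshots).
PLANS = {
    "Enterprise": (5_500_000, 91_667),
    "Startup": (2_500_000, 41_667),
    "Pro": (1_000_000, 16_667),
    "Discovery": (200_000, 3_333),
}

# Assumption: inferred from the table, not an officially stated rate.
CREDITS_PER_SCREENSHOT = 60


def screenshots_for(credits: int) -> int:
    """Estimate how many screenshots a credit allowance covers."""
    return round(credits / CREDITS_PER_SCREENSHOT)


for plan, (credits, listed) in PLANS.items():
    print(f"{plan}: {screenshots_for(credits):,} estimated, {listed:,} listed")
```

Requests that use additional features may be billed differently, so the estimate is best read as a baseline.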

What Do Our Users Say?

"Scrapfly’s Screenshot API has revolutionized the way we capture web pages. It’s incredibly simple to integrate, and we now generate thousands of clean, ad-free screenshots without worrying about pop-ups or cookie banners. The real browser support ensures we get pixel-perfect screenshots, every time!"

David R. - Lead Developer

"We needed a reliable solution to capture full-page screenshots for our reports, and Scrapfly’s Screenshot API delivered beyond our expectations. The API handles complex pages, even those that rely on JavaScript, with no issues. It’s fast, reliable, and the clean capture feature saves us a ton of time by automatically blocking ads and pop-ups."

Melissa G. - CTO

"The Screenshot API from Scrapfly allowed us to streamline our entire screenshot process. The customization options, like controlling viewport resolution and blocking unwanted elements, gave us full control over our captures. It’s fast, scalable, and the support has been fantastic. We wouldn’t trust any other solution for our screenshot needs!"

Jason M. - Data Engineer

Frequently Asked Questions

What is a Screenshot API?

A Screenshot API is a service that captures a screenshot of any web page or part of a page. It is designed to abstract away major screenshot-capturing challenges such as anti-bot protection and scaling.

How can I access the Screenshot API?

The Screenshot HTTP API can be accessed from any HTTP client, such as curl or HTTPie, or from an HTTP client library in any programming language. For first-class support, we offer Python and TypeScript SDKs.
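
As an illustration of calling the API without an SDK, the sketch below builds the same kind of request URL as the HTTPie samples on this page, using only the Python standard library. The endpoint and the `key`, `url`, and `options` parameters come from those samples; the `build_screenshot_url` helper is a hypothetical name for this example.

```python
from urllib.parse import urlencode

# Endpoint taken from the HTTPie samples on this page.
API_ENDPOINT = "https://api.scrapfly.io/screenshot"


def build_screenshot_url(api_key: str, target_url: str, **params: str) -> str:
    """Build a GET URL for the screenshot endpoint (hypothetical helper)."""
    query = {"key": api_key, "url": target_url, **params}
    # urlencode percent-encodes the target URL so its own "?" and "&" survive.
    return f"{API_ENDPOINT}?{urlencode(query)}"


request_url = build_screenshot_url(
    "API KEY",
    "https://web-scraping.dev/login?cookies",
    options="block_banners",
)
print(request_url)
```

The resulting URL can then be fetched with any HTTP client, for example `urllib.request.urlopen(request_url)`.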

Is screenshot capture legal?

Yes, capturing screenshots of web pages is generally legal in most places around the world. For more, see our in-depth article on web scraping laws.

Does Screenshot API use AI for capturing screenshots?

No, screenshots are captured using real web browsers as directed by the API user. However, AI is integrated with Scrapfly browsers to bypass any potential blocks.

How long does it take to get results from the Screenshot API?

Capture duration ranges from 1 second to 160 seconds, because Scrapfly provides an execution budget for running your own browser actions with each request to modify the page before capture. The exact duration depends on the features used, though Scrapfly always keeps a browser pool ready to handle requests without any warmup.
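
Because a capture can take up to 160 seconds, a shorter client-side timeout may abort a request that would otherwise have succeeded. The sketch below shows one way to size the timeout with the standard library; the 10-second margin and the `fetch_screenshot` name are arbitrary choices for illustration.

```python
import urllib.request

MAX_CAPTURE_SECONDS = 160  # upper bound quoted in the answer above
NETWORK_MARGIN_SECONDS = 10  # assumption: arbitrary allowance for transfer time
CLIENT_TIMEOUT = MAX_CAPTURE_SECONDS + NETWORK_MARGIN_SECONDS


def fetch_screenshot(request_url: str) -> bytes:
    """Download a screenshot without giving up before the capture budget ends."""
    # The timeout applies to each blocking socket operation, not the whole call.
    with urllib.request.urlopen(request_url, timeout=CLIENT_TIMEOUT) as response:
        return response.read()
```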

Can I capture only specific areas using the Screenshot API?

Yes, you can identify capture areas using the capture parameter with XPath or CSS selectors.

What capture file formats does the Screenshot API support?

Screenshots can be captured in jpg (default), png, webp or gif formats with more formats coming soon.
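
When saving the result, it helps to keep the file extension in step with the requested format. A minimal sketch, assuming only the four formats listed above; the `output_path` helper is a hypothetical name and not part of the Scrapfly SDK.

```python
from pathlib import Path

# Formats listed in the answer above; jpg is the stated default.
SUPPORTED_FORMATS = ("jpg", "png", "webp", "gif")


def output_path(stem: str, image_format: str = "jpg") -> Path:
    """Return a file name whose extension matches the requested format."""
    if image_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported format: {image_format!r}")
    return Path(f"{stem}.{image_format}")


print(output_path("product", "png"))
```

The same `image_format` value can be passed as the `format` parameter of the request, so the saved file always matches the bytes returned.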