Your Puppeteer script works perfectly in development. Then you deploy to production and get blocked on the first request. Raw headless browsers are instantly detectable, and patching fingerprints manually is a losing battle against constantly evolving bot detection.

Browser as a Service solves this by providing cloud-hosted browser infrastructure with managed stealth. Instead of running puppeteer.launch() on your own server, you connect to browsers in the cloud that handle fingerprint patching, proxy rotation, and detection bypass automatically. Your code stays the same. The infrastructure changes.

This guide covers how Browser as a Service works, why managed solutions beat self-hosted approaches, and how to choose the right provider for web scraping, testing, and AI agent workflows.

Key Takeaways

Master Browser as a Service fundamentals for production-ready web automation with cloud infrastructure, managed stealth features, and AI agent integration that scales beyond local browser limitations.

- Connect to cloud browsers via WebSocket using standard Playwright, Puppeteer, or Selenium code

- Managed fingerprint patching handles canvas, WebGL, fonts, and detection countermeasures automatically

- Bypass Cloudflare and anti-bot systems through residential proxy rotation and browser updates

- Scale to thousands of parallel sessions without local resource constraints or infrastructure management

- Integrate AI agents with Human-in-the-Loop fallbacks for handling CAPTCHAs and edge cases

- Avoid raw Puppeteer detection through managed stealth that updates with new protection methods

How Browser as a Service Works

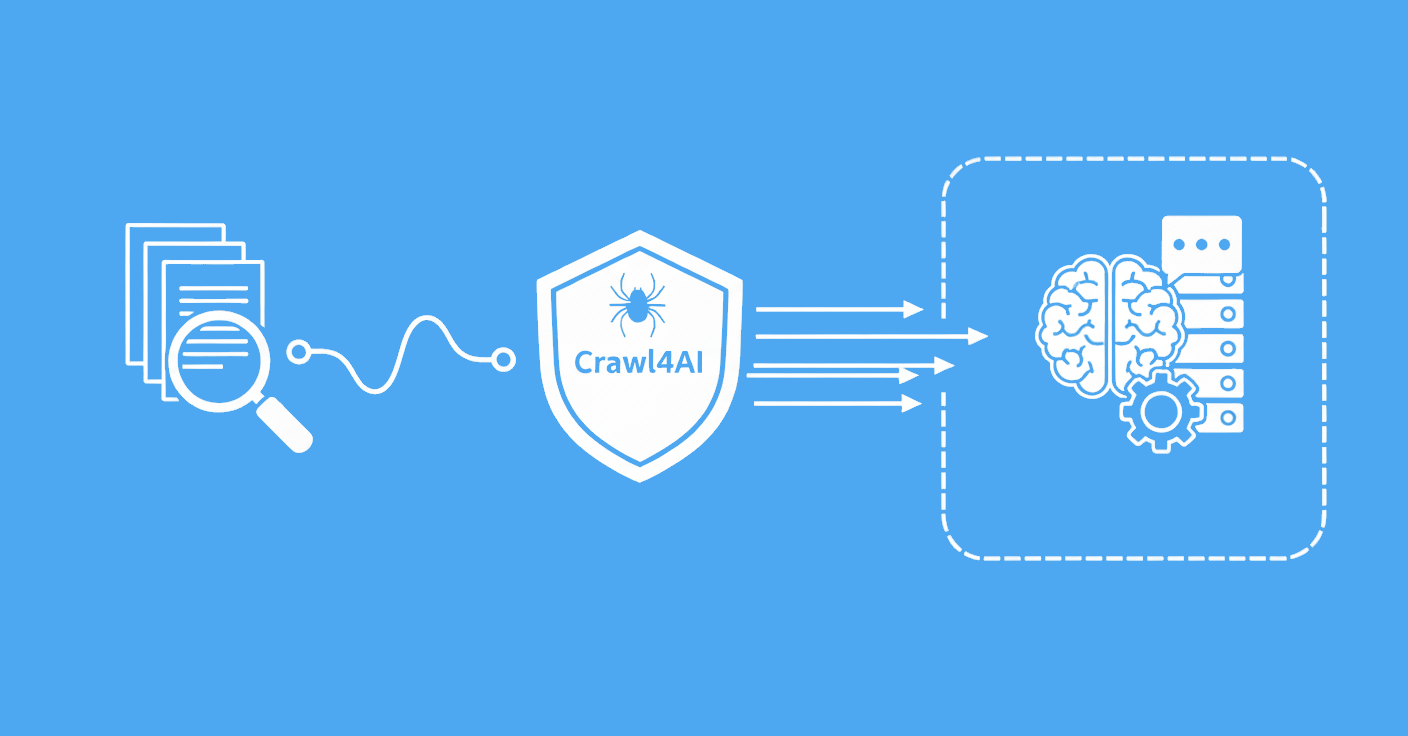

Browser as a Service follows a split-horizon architecture where your code runs locally while browser execution happens in the cloud. This separation exists because browser operations are resource-heavy and require complex fingerprint management that's impractical to handle locally at scale.

Split-Horizon Architecture

The connection flow works like this:

- Your Code (Client-Side): Playwright, Puppeteer, or Selenium scripts running on your machine or server

- WebSocket Connection: A Chrome DevTools Protocol (CDP) connection that carries commands to the cloud browser and returns its responses

- Cloud Browser Infrastructure: Browser instances, proxy rotation, fingerprint management, and session persistence

When you connect to a cloud browser, you're accessing an entire infrastructure stack including browser pools for parallel execution, proxy rotation for IP reputation management, fingerprint patching to avoid detection, and session persistence for multi-step workflows.

The Chrome DevTools Protocol serves as the communication layer. Your local code sends commands through a WebSocket URL pointing to the cloud browser instance and receives responses as if the browser were running locally.
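Under the hood, each Playwright or Puppeteer call becomes one or more JSON messages sent over that WebSocket. The sketch below shows that framing, assuming the standard CDP message shape: an incrementing `id`, a `method` such as `Page.navigate`, and `params`. Replies carry the same `id`, and events carry no `id`. The helper names `CDPMessageBuilder` and `match_response` are hypothetical. Real clients like Playwright handle all of this for you.

```python
import itertools
import json
from typing import Any, Optional


class CDPMessageBuilder:
    """Hypothetical helper that serializes CDP commands into WebSocket text frames."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)  # every command needs a unique id

    def command(self, method: str, params: Optional[dict] = None,
                session_id: Optional[str] = None) -> tuple[int, str]:
        msg: dict[str, Any] = {"id": next(self._ids), "method": method,
                               "params": params or {}}
        if session_id is not None:
            # Routes the command to one page/target when using flattened CDP sessions
            msg["sessionId"] = session_id
        return msg["id"], json.dumps(msg)


def match_response(raw: str, expected_id: int) -> Optional[dict]:
    """Return the result for expected_id, or None if the frame belongs to something else."""
    reply = json.loads(raw)
    if reply.get("id") != expected_id:
        return None  # an event (no id) or a reply to a different command
    if "error" in reply:
        raise RuntimeError(reply["error"].get("message", "CDP error"))
    return reply.get("result", {})


builder = CDPMessageBuilder()
cmd_id, frame = builder.command("Page.navigate", {"url": "https://example.com"})
print(frame)  # {"id": 1, "method": "Page.navigate", "params": {"url": "https://example.com"}}
```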

Here's what a basic CDP connection looks like:

```python
from playwright.async_api import async_playwright

async def connect_to_cloud_browser():
    async with async_playwright() as pw:
        # WebSocket URL points to cloud browser infrastructure
        browser = await pw.chromium.connect_over_cdp(
            "wss://cloud-browser.example.com/session?token=YOUR_TOKEN"
        )
        page = await browser.new_page()
        await page.goto("https://example.com")
        content = await page.content()
        await browser.close()
        return content
```

The key insight: your code doesn't change much. You're still writing Playwright or Puppeteer scripts. The difference is where those scripts execute and what infrastructure supports them.
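Because only the endpoint differs, you can pick it from configuration. The same script then runs locally in development and against a cloud browser in production. This sketch makes three assumptions: the environment variable names `CLOUD_BROWSER_WS` and `CLOUD_BROWSER_TOKEN` are hypothetical, the helper `cloud_browser_endpoint` is illustrative, and the provider is assumed to accept the token as a `token` query parameter, as in the placeholder URL above.

```python
import os
from typing import Mapping, Optional
from urllib.parse import urlencode


def cloud_browser_endpoint(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return a CDP WebSocket URL, or None to fall back to a local browser.

    CLOUD_BROWSER_WS and CLOUD_BROWSER_TOKEN are hypothetical variable names.
    """
    base = env.get("CLOUD_BROWSER_WS")
    if not base:
        return None  # no cloud endpoint configured: launch locally
    token = env.get("CLOUD_BROWSER_TOKEN")
    if not token:
        raise ValueError("CLOUD_BROWSER_TOKEN must be set when CLOUD_BROWSER_WS is")
    # urlencode escapes spaces and special characters in the token
    return f"{base}?{urlencode({'token': token})}"
```

In the Playwright script, you would then write `url = cloud_browser_endpoint()` followed by `browser = await (pw.chromium.connect_over_cdp(url) if url else pw.chromium.launch())`. Everything after that line is identical in both modes.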

Managed Stealth vs Raw Puppeteer

Out of the box, Puppeteer and Playwright are instantly detectable when launched on your own server. That is fine for basic testing, but production scraping and automation fail against any site with bot detection.

The Self-Hosted Problem

When you launch a standard headless browser, you're broadcasting automation signals:

- `navigator.webdriver` flag: Set to `true` by default, immediately marking the browser as automated

- Chrome headless signatures: Missing plugins, predictable screen dimensions, and telltale rendering differences

- Fingerprint consistency: Every request from your server shares identical fingerprints, making patterns easy to spot

- IP reputation: Datacenter IPs are flagged by default, regardless of browser setup
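You can check the first three signals yourself by reading the relevant properties from inside the page. The sketch below is a simplified heuristic, not a description of how commercial detectors work. The JavaScript snippet is meant to be passed to Playwright's `page.evaluate()`. The checks are illustrative assumptions, for example treating 800x600 as a default headless window size. `automation_signals` and `fingerprint_id` are hypothetical helper names.

```python
import hashlib
import json

# JavaScript to run in the page, e.g. fp = await page.evaluate(FINGERPRINT_JS)
FINGERPRINT_JS = """() => ({
  webdriver: navigator.webdriver,
  userAgent: navigator.userAgent,
  pluginCount: navigator.plugins.length,
  screen: [screen.width, screen.height],
})"""


def automation_signals(fp: dict) -> list[str]:
    """Flag obvious automation tells in a collected fingerprint (illustrative heuristics)."""
    signals = []
    if fp.get("webdriver") is True:
        signals.append("navigator.webdriver is true")
    if "HeadlessChrome" in fp.get("userAgent", ""):
        signals.append("headless user agent")
    if fp.get("pluginCount", 0) == 0:
        signals.append("no plugins")
    if list(fp.get("screen", [])) == [800, 600]:
        signals.append("default headless screen size")
    return signals


def fingerprint_id(fp: dict) -> str:
    """Stable hash of a fingerprint; the same id on every request is an easy pattern to spot."""
    return hashlib.sha256(json.dumps(fp, sort_keys=True).encode()).hexdigest()[:16]
```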

You can try patching these issues manually with libraries like puppeteer-extra-plugin-stealth, but it's a losing battle. Detection systems update constantly, and maintaining stealth patches becomes a full-time job.

Managed Fingerprint Patching

The core value of Browser as a Service isn't just hosting the browser. It's patching fingerprints and keeping those patches current.

A proper cloud browser infrastructure provides:

- Fingerprint patching: Canvas, WebGL, fonts, plugins, and dozens of other browser properties are randomized or spoofed to match real user patterns

- Residential proxy rotation: Requests come from residential IPs with good reputation, not flagged datacenter ranges

- Browser version management: Automatic updates ensure you're running current browser versions with matching fingerprints

- Detection monitoring: When new detection methods emerge, the service updates patches automatically
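Good patching differs from naive randomization in one important way: consistency. Each session should look like one plausible device, with user agent, platform, and screen agreeing with each other, while different sessions look different. The sketch below illustrates that idea using a small hypothetical profile pool. `DeviceProfile`, `PROFILES`, and `profile_for_session` are illustrative names. Managed services maintain far larger pools and patch many more properties.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceProfile:
    user_agent: str
    platform: str  # value a real device would expose as navigator.platform
    screen: tuple[int, int]
    timezone: str  # ideally matches the proxy's exit location


# Hypothetical pool; the fields inside each profile are chosen to agree with each other
PROFILES = [
    DeviceProfile(
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
        "Win32", (1920, 1080), "America/New_York"),
    DeviceProfile(
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
        "MacIntel", (1440, 900), "Europe/London"),
    DeviceProfile(
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
        "Linux x86_64", (1366, 768), "Europe/Berlin"),
]


def profile_for_session(session_id: str) -> DeviceProfile:
    """Pick a profile deterministically so a session never changes identity mid-flow."""
    digest = hashlib.sha256(session_id.encode()).digest()
    return PROFILES[int.from_bytes(digest[:8], "big") % len(PROFILES)]
```

In Playwright, several of these fields map onto `browser.new_context(user_agent=..., viewport=..., timezone_id=...)`.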

Compare the approaches:

```python
# ❌ Raw Playwright - Will be detected
import asyncio

from playwright.async_api import async_playwright

async def raw_approach():
    async with async_playwright() as pw:
        browser = await pw.chromium.launch(headless=True)
        page = await browser.new_page()
        # This will likely be blocked on protected sites
        await page.goto("https://protected-site.com")
        await browser.close()

asyncio.run(raw_approach())
```

```python
# ✅ Scrapfly API with cloud browser rendering - Managed stealth
from scrapfly import ScrapflyClient, ScrapeConfig

client = ScrapflyClient(key="YOUR_API_KEY")

result = client.scrape(ScrapeConfig(
    url="https://protected-site.com",
    render_js=True,
    # Fingerprint patching, proxy rotation handled automatically
    asp=True,  # Anti-scraping protection enabled
))
```

You can test your current browser's fingerprint exposure using Scrapfly's automation detector to see exactly what signals you're leaking.

For deeper technical detail on fingerprint management, refer to our guides on bypassing proxy detection and browser fingerprinting with CreepJS.

Use Cases for Browser as a Service

Browser as a Service infrastructure supports several distinct workflows that use managed browser execution in different ways.

Web Scraping at Scale

JavaScript-heavy websites require actual browser rendering. HTTP requests alone won't retrieve dynamically loaded content. Browser as a Service provides:

- JavaScript rendering for single-page applications and lazy-loaded content

- Anti-bot bypass through managed fingerprints and residential proxies

- Parallel session management for scraping thousands of pages at once

The alternative of running hundreds of local browser instances creates infrastructure headaches and detection issues that managed services remove. For implementation details, see web scraping with cloud browsers.
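In practice, parallel session management means capping how many browser sessions are open at once, because providers typically limit concurrency per plan. Here is a minimal asyncio sketch. `fetch` is a hypothetical coroutine that opens a cloud browser session, loads one URL, and returns the result. `scrape_all` and `max_sessions` are illustrative names.

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


async def scrape_all(urls: list[str],
                     fetch: Callable[[str], Awaitable[T]],
                     max_sessions: int = 10) -> list:
    """Run fetch(url) for every URL with at most max_sessions running concurrently."""
    limit = asyncio.Semaphore(max_sessions)

    async def worker(url: str):
        async with limit:  # waits here while max_sessions sessions are busy
            return await fetch(url)

    # return_exceptions=True keeps one blocked page from cancelling the whole batch;
    # results come back in the same order as urls
    return await asyncio.gather(*(worker(u) for u in urls), return_exceptions=True)
```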

Automated Testing in CI/CD

Testing pipelines use cloud browsers for:

- Consistent browser environments across all test runs, removing "works on my machine" issues

- No browser installation required in CI/CD containers

- Parallel test execution without local resource limits

AI Agent Browser Control

This use case is growing fast as AI agents need browser capabilities to navigate websites, fill forms, and extract information. LLMs can reason about web pages but cannot render JavaScript. They need browser infrastructure to act on their decisions.

Frameworks like Browser Use and Stagehand connect AI models to browser automation, but those frameworks need somewhere to run. Cloud browser infrastructure provides the execution environment for AI-powered web automation.

The challenge: what happens when an AI agent hits a CAPTCHA, an unexpected login wall, or a novel challenge it can't solve? This is where Human-in-the-Loop becomes critical.

Website Monitoring

Monitoring workflows use Browser as a Service for:

- Visual regression testing through automated screenshots

- Uptime monitoring with full JavaScript support (not just HTTP pings)

- Screenshot automation for compliance, archival, or reporting
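For visual regression testing, screenshots from a cloud browser are compared against a baseline. The sketch below shows the comparison step without any dependencies. It assumes both screenshots have already been decoded into equally sized lists of RGB tuples; in practice, an imaging library such as Pillow would handle the decoding. The per-channel tolerance and the 1% threshold are arbitrary example values. `changed_ratio` and `is_regression` are hypothetical helper names.

```python
Pixel = tuple[int, int, int]


def changed_ratio(baseline: list[Pixel], current: list[Pixel], tolerance: int = 8) -> float:
    """Fraction of pixels whose largest per-channel difference exceeds the tolerance."""
    if len(baseline) != len(current):
        raise ValueError("screenshots must have the same dimensions")
    if not baseline:
        return 0.0
    changed = sum(
        1 for a, b in zip(baseline, current)
        if max(abs(x - y) for x, y in zip(a, b)) > tolerance
    )
    return changed / len(baseline)


def is_regression(baseline: list[Pixel], current: list[Pixel], threshold: float = 0.01) -> bool:
    """Treat the page as visually changed if more than `threshold` of pixels differ."""
    return changed_ratio(baseline, current) > threshold
```

The tolerance absorbs anti-aliasing noise between renders. The ratio threshold decides how much real change counts as a regression.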

AI Agents and Human-in-the-Loop

AI agents represent the fastest-growing use case for Browser as a Service, but purely automated approaches hit fundamental limits. Understanding these limits separates production-ready systems from demo projects.

Why AI Agents Need Browser Infrastructure

Large language models can analyze web pages, plan navigation sequences, and decide what actions to take. What they cannot do is render JavaScript, click buttons, or fill forms. They need execution infrastructure.

When an AI agent decides "click the 'Add to Cart' button," something has to perform that click in an actual browser. Cloud browser infrastructure provides that execution layer, connecting agent frameworks to real browser instances.
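In code, that execution layer often comes down to translating the agent's structured decision into a browser call. The sketch below assumes a hypothetical action format produced by the LLM: a dict with `type`, `selector`, and related keys. `page` is meant to be a Playwright `Page`, whose `goto`, `click`, and `fill` methods are used here. Frameworks like Browser Use and Stagehand implement much richer versions of this step.

```python
from typing import Any


async def execute_action(page: Any, action: dict) -> None:
    """Perform one agent decision on a Playwright-style page object.

    The action dict format is a hypothetical example, not a framework API.
    """
    kind = action.get("type")
    if kind == "goto":
        await page.goto(action["url"])
    elif kind == "click":
        await page.click(action["selector"])
    elif kind == "fill":
        await page.fill(action["selector"], action["value"])
    else:
        # Unknown actions are surfaced to the caller instead of guessed at
        raise ValueError(f"unsupported action: {action!r}")
```

For the example above, the agent's decision might arrive as `{"type": "click", "selector": "text=Add to Cart"}`.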

The Automation Limit Problem

Pure automation works until it doesn't. Consider what happens when an agent encounters:

- A CAPTCHA requiring human visual recognition

- A two-factor authentication prompt

- An unusual site layout the agent hasn't seen before

- A popup or interstitial the agent can't parse

Some providers position their products as fully autonomous, with the agent handling everything without human help. This works for demos and simple workflows, but production systems need fallback mechanisms.

Human-in-the-Loop as Fallback

HITL provides the safety net that pure automation lacks. The pattern works like this:

- AI agent runs its workflow normally

- Agent hits an obstacle it cannot resolve

- System triggers HITL, pausing automation and alerting a human operator

- Human steps in (solves CAPTCHA, handles edge case, provides input)

- Automation resumes from the intervention point

This isn't admitting defeat. It's production engineering. Even the most advanced AI agents will encounter situations requiring human judgment, and systems designed with HITL support handle these without failing completely.
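The five-step HITL pattern described above maps onto a small control loop. This is a general sketch of the pattern, not Scrapfly's HITL API. `NeedsHuman` is a hypothetical exception that an agent step raises when it detects an obstacle. `request_human` is a hypothetical coroutine that alerts an operator and returns once they have resolved the problem, for example by solving the CAPTCHA in a live view of the same browser session.

```python
from typing import Awaitable, Callable, Sequence


class NeedsHuman(Exception):
    """Raised by an agent step when it hits an obstacle it cannot resolve."""


async def run_with_hitl(
    steps: Sequence[Callable[[], Awaitable[None]]],
    request_human: Callable[[str], Awaitable[None]],
    max_interventions: int = 3,
) -> int:
    """Run agent steps in order, pausing for a human whenever a step gives up.

    Returns the number of human interventions that were needed.
    """
    interventions = 0
    i = 0
    while i < len(steps):
        try:
            await steps[i]()  # the agent runs its workflow normally
            i += 1
        except NeedsHuman as obstacle:  # the agent hits something it cannot resolve
            interventions += 1
            if interventions > max_interventions:
                raise  # escalate instead of looping forever
            # Pause automation until the operator has stepped in
            await request_human(str(obstacle))
            # i is not advanced, so automation resumes at the step that failed
    return interventions
```

Because the session stays open while the human acts, the retried step sees the resolved state, such as a solved CAPTCHA or completed login, rather than starting over.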

Scrapfly's Cloud Browser supports HITL workflows natively, setting it apart from providers focused solely on autonomous execution. For detailed implementation patterns, see the Human-in-the-Loop documentation.

For AI agent integration specifically, Scrapfly provides direct support for Browser Use and Stagehand frameworks.

Browser as a Service vs Alternatives

Understanding what Browser as a Service is also requires understanding what it isn't. Several related tools serve different purposes and shouldn't be confused.

vs Anti-Detect Browsers

Anti-detect browsers like Multilogin and GoLogin are designed for manual multi-accounting. They run multiple browser profiles with distinct fingerprints for account management, social media marketing, or e-commerce operations.

The key difference is human vs programmatic operation. Anti-detect browsers expect a person clicking through interfaces. Browser as a Service expects API calls and automated scripts. A social media manager running ten Instagram accounts uses anti-detect browsers. A developer scraping product data uses Browser as a Service.

vs Proxies

Proxies route network traffic through different IP addresses but don't run JavaScript or render pages. When you use a proxy with a standard HTTP client, you're changing your apparent location, not running a browser.

Browser as a Service runs actual browsers with JavaScript execution, DOM manipulation, and full rendering. These services often include proxy rotation as part of their infrastructure, but the proxy is one component, not the entire solution.

For more on proxy fundamentals, see our guide on proxies in web scraping.

vs Self-Hosted Puppeteer/Playwright

Self-hosting gives you complete control but requires:

- Infrastructure management (servers, containers, scaling)

- Detection countermeasures (fingerprint patching, proxy integration)

- Maintenance burden (browser updates, stealth patch updates)

For development and testing, self-hosted browsers work fine. For production at scale against protected sites, the overhead typically doesn't justify the control.

For getting started with these tools, see our guides on Puppeteer and Selenium.

vs Local Headless Browsers

Running headless browsers locally suits development workflows and small-scale tasks. You maintain full debuggability and don't pay per-request costs.

The limits emerge at scale: local resources cap your parallelism, detection becomes problematic without stealth features, and operational overhead grows with volume.

Key Features to Look For

When evaluating Browser as a Service providers, look for these capabilities that separate mature platforms from basic offerings.

Framework Support: Compatibility with Playwright, Puppeteer, and Selenium means you're not locked into proprietary APIs. Standard CDP connections let you migrate code between providers if needed.

Anti-Detection: Fingerprint management, stealth mode configurations, and residential proxy integration determine whether you can access protected sites. Ask specifically about canvas fingerprinting, WebGL spoofing, and navigator property patching.

Proxy Integration: Built-in residential and datacenter proxy rotation with geographic targeting. A separate proxy subscription shouldn't be required.

Session Management: Persistence across page loads, reconnection after network interruptions, and parallel session limits affect workflow complexity.

AI Agent Support: Native integration with frameworks like Browser Use and Stagehand for AI-powered automation.

Human-in-the-Loop: Fallback mechanisms for when automation fails. This capability is rare but critical for production systems.

Pricing Model: Per-session, per-minute, or bundled with scraping API. Understand what you're paying for and how costs scale with usage.
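A quick way to compare pricing models is to price your own workload under each one. The sketch below uses made-up rates and an assumed one-minute billing minimum purely for illustration. Substitute the numbers from each provider's pricing page. `Workload`, `per_session_cost`, and `per_minute_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    sessions_per_month: int
    avg_minutes_per_session: float


def per_session_cost(w: Workload, price_per_session: float) -> float:
    return w.sessions_per_month * price_per_session


def per_minute_cost(w: Workload, price_per_minute: float,
                    min_billed_minutes: float = 1.0) -> float:
    # Assumption: short sessions are rounded up to a billing minimum
    billed = max(w.avg_minutes_per_session, min_billed_minutes)
    return w.sessions_per_month * billed * price_per_minute


# Example workloads and rates are illustrative, not real provider prices
short_jobs = Workload(sessions_per_month=50_000, avg_minutes_per_session=0.5)
long_jobs = Workload(sessions_per_month=2_000, avg_minutes_per_session=12)
for name, w in [("short scraping jobs", short_jobs), ("long agent sessions", long_jobs)]:
    print(f"{name}: per-session ${per_session_cost(w, 0.01):.2f}, "
          f"per-minute ${per_minute_cost(w, 0.005):.2f}")
```

With these example rates, the short jobs cost $500 per session versus $250 per minute, while the long agent sessions cost $20 versus $120. The shape of your workload matters more than the headline rate.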

Scrapfly Cloud Browser Infrastructure

Scrapfly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

Scrapfly's Cloud Browser provides managed browser infrastructure integrated with the rest of the Scrapfly scraping platform. Several features set it apart from standalone browser services.

Unified Platform: Cloud Browser integrates with Scrapfly's Scraping API, meaning you can combine browser rendering with HTTP scraping, use consistent authentication, and manage everything through one dashboard.

Human-in-the-Loop Support: Unlike providers focused solely on autonomous execution, Scrapfly supports HITL workflows for handling CAPTCHAs, authentication challenges, and edge cases that automation can't resolve.

Framework Compatibility: Full support for Playwright, Puppeteer, and Selenium through standard CDP connections. Your existing code works with minimal changes.

AI Agent Integration: Direct support for Browser Use and Stagehand frameworks, with documentation covering common agent patterns.

Managed Stealth: Fingerprint patching, residential proxy rotation, and browser version management handled automatically.

Here's a connection example using Playwright:

```python
import asyncio

from playwright.async_api import async_playwright
from scrapfly import ScrapflyClient

async def scrape_with_cloud_browser():
    client = ScrapflyClient(key="YOUR_API_KEY")
    # Get cloud browser session
    session = client.cloud_browser_session()

    async with async_playwright() as pw:
        # Attach Playwright to the remote browser over CDP
        browser = await pw.chromium.connect_over_cdp(session.cdp_url)
        page = await browser.new_page()
        await page.goto("https://web-scraping.dev/products")

        # Extract product data
        products = await page.query_selector_all(".product-card")
        for product in products:
            title = await product.query_selector(".title")
            if title:  # skip cards without a title element
                print(await title.inner_text())

        await browser.close()

asyncio.run(scrape_with_cloud_browser())
```

Additional capabilities include screenshot and PDF generation, markdown extraction for LLM consumption, and Model Context Protocol (MCP) support for AI integration.

For complete implementation details, see the Cloud Browser documentation.

For more, explore Scrapfly's Web Scraping API and its documentation.

FAQs

What is a cloud browser?

A browser running on remote servers that you control via API instead of running it on your own machine.

What is Browser Use cloud?

Browser Use is an AI agent framework. "Browser Use cloud" means running these AI agents on cloud browser infrastructure like Scrapfly instead of local browsers.

Is Browser as a Service the same as BaaS?

No. "BaaS" usually means Backend as a Service (like Firebase). Browser as a Service is cloud browser infrastructure for automation and scraping. Different things with similar abbreviations.

How is Browser as a Service different from anti-detect browsers?

Anti-detect browsers are manual tools for humans managing multiple accounts. Browser as a Service is API-driven infrastructure for automated scripts. Different users, different purposes.

Can I use my existing Puppeteer code with Browser as a Service?

Yes. Browser as a Service works with standard Playwright, Puppeteer, and Selenium code through CDP connections. You change the connection endpoint, not your automation logic.

How does Browser as a Service handle CAPTCHAs?

Most providers use managed fingerprints and proxies to avoid CAPTCHAs. Some (like Scrapfly) also support Human-in-the-Loop for manual CAPTCHA solving when automation can't proceed.

Summary

Browser as a Service moves you from managing local Selenium grids to using managed cloud browsers through simple API connections. The key benefits are managed stealth (fingerprint patching that keeps up with detection changes), scaling without infrastructure headaches, and AI agent support with Human-in-the-Loop fallbacks for production reliability.

For scraping, testing, AI agents, and monitoring workflows, cloud browser infrastructure removes the operational burden of browser management while providing capabilities that self-hosted solutions can't match.

Scrapfly's Cloud Browser offers a free tier to explore the platform. Start with the getting started documentation or review pricing options to understand how the service scales with your usage.