Effortlessly Extract Data with Our AI-Powered Extraction API

Unlock the power of our AI-Powered Extraction API to transform your data extraction process.

- Boost productivity with LLM prompts for faster, smarter extractions.

- Automate and adapt with AI-driven tools for automatic structured data extraction.

- Create and customize your own extraction rules to suit any document structure.

- Seamlessly integrate with popular platforms, or use our Python and TypeScript SDKs.

What Can It Do?


Understand Your Data with LLM Prompts

Ask freeform questions or give specific commands using LLM prompts for flexible data querying.

```python
from pathlib import Path

from scrapfly import ExtractionConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")

# product from https://web-scraping.dev/product/1
document_text = Path("product.html").read_text()

api_response = client.extract(
    ExtractionConfig(
        body=document_text,
        content_type="text/html",
        extraction_prompt="summarize the review sentiment",
    )
)
print(api_response.result)
```

```typescript
import { ScrapflyClient, ExtractionConfig } from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });

// product from https://web-scraping.dev/product/1
const document_text = Deno.readTextFileSync("./product.html").toString();

const api_result = await client.extract(
    new ExtractionConfig({
        body: document_text,
        url: "https://web-scraping.dev/product/1",
        content_type: "text/html",
        extraction_prompt: "summarize the review sentiment",
    })
);
console.log(JSON.stringify(api_result));
```


Use LLMs to Extract Structured Data

Extract clean, structured data like JSON or CSV from any document using the power of LLMs.

```python
from pathlib import Path

from scrapfly import ExtractionConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")

# product from https://web-scraping.dev/product/1
document_text = Path("product.html").read_text()

api_response = client.extract(
    ExtractionConfig(
        body=document_text,
        content_type="text/html",
        url="https://web-scraping.dev/product/1",
        extraction_prompt="extract price as JSON",
    )
)
print(api_response.result)
```

```typescript
import { ScrapflyClient, ExtractionConfig } from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });

// product from https://web-scraping.dev/product/1
const document_text = Deno.readTextFileSync("./product.html").toString();

const api_result = await client.extract(
    new ExtractionConfig({
        body: document_text,
        url: "https://web-scraping.dev/product/1",
        content_type: "text/html",
        extraction_prompt: "extract price as json",
    })
);
console.log(JSON.stringify(api_result));
```
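The prompt above asks the model for JSON, but an LLM reply is free text. Depending on how the result comes back, you may receive already-parsed data or a string, sometimes wrapped in a Markdown code fence. Below is a minimal sketch of a defensive parser under that assumption; `parse_llm_json` is a hypothetical helper, not part of the Scrapfly SDK, and the sample reply is made up.

```python
import json
import re
from typing import Any

# Matches a reply wrapped in a Markdown code fence (three backticks, optional "json" tag).
_FENCE = re.compile(r"^\s*`{3}(?:json)?\s*(.*?)\s*`{3}\s*$", re.DOTALL)


def parse_llm_json(result: Any) -> Any:
    """Return parsed JSON from an LLM prompt result (hypothetical helper).

    Assumes the result is either already-decoded data (dict/list) or a string
    containing JSON, optionally inside a Markdown code fence.
    """
    if not isinstance(result, str):
        return result  # already structured, nothing to do
    text = result.strip()
    match = _FENCE.match(text)
    if match:
        text = match.group(1)  # keep only the fenced body
    return json.loads(text)  # raises json.JSONDecodeError if the reply is not JSON


fence = "`" * 3  # avoids writing a literal fence inside this snippet
reply = fence + 'json\n{"price": 9.99, "currency": "USD"}\n' + fence
print(parse_llm_json(reply))  # {'price': 9.99, 'currency': 'USD'}
```

Raising on invalid JSON, rather than returning `None`, makes a malformed reply visible so the prompt can be tightened.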


Use Almost Any Data Format

Supports a wide range of text formats, including HTML, XML, JSON, CSV, Markdown, and plain text.

```python
from pathlib import Path

from scrapfly import ExtractionConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")

# product from https://web-scraping.dev/product/1
document_text = Path("product.html").read_text()

api_response = client.extract(
    ExtractionConfig(
        body=document_text,
        extraction_prompt="find the product price",
        # use almost any file type identified through content_type;
        # pass exactly one value that matches your document
        content_type="text/html",
        # content_type="text/xml",
        # content_type="text/plain",
        # content_type="text/markdown",
        # content_type="application/json",
        # content_type="application/csv",
    )
)
print(api_response.result)
```

```typescript
import { ScrapflyClient, ExtractionConfig } from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });

// product from https://web-scraping.dev/product/1
const document_text = Deno.readTextFileSync("./product.html").toString();

const api_result = await client.extract(
    new ExtractionConfig({
        body: document_text,
        url: "https://web-scraping.dev/product/1",
        extraction_prompt: "find the product price",
        // use almost any file type identified through content_type
        content_type: "text/html",
        // content_type: "text/xml",
        // content_type: "text/plain",
        // content_type: "text/markdown",
        // content_type: "application/json",
        // content_type: "application/csv",
    })
);
console.log(JSON.stringify(api_result));
```
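Because each request declares exactly one `content_type`, it can help to derive it from the file name. A minimal sketch, assuming files are identified by their extension; the mapping covers only the content types listed in the example above, and `content_type_for` is a hypothetical helper.

```python
from pathlib import Path

# Extension -> content_type, limited to the values shown in the examples above.
CONTENT_TYPES = {
    ".html": "text/html",
    ".htm": "text/html",
    ".xml": "text/xml",
    ".txt": "text/plain",
    ".md": "text/markdown",
    ".json": "application/json",
    ".csv": "application/csv",
}


def content_type_for(path: str) -> str:
    """Pick a content_type for a local file from its extension (hypothetical helper)."""
    suffix = Path(path).suffix.lower()  # case-insensitive: ".JSON" -> ".json"
    try:
        return CONTENT_TYPES[suffix]
    except KeyError:
        raise ValueError(f"unsupported file type: {path!r}") from None


print(content_type_for("product.html"))  # text/html
```

The returned value can then be passed as `content_type=` to `ExtractionConfig`; raising on unknown extensions avoids silently sending a document with the wrong type.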


Auto Extract Objects like Products, Reviews, etc.

Automatically extract structured data such as products and reviews with AI-powered precision, returned in a predictable schema.

```python
import json
from pathlib import Path

from scrapfly import ExtractionConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")

# product from https://web-scraping.dev/product/1
document_text = Path("product.html").read_text()

api_response = client.extract(
    ExtractionConfig(
        body=document_text,
        content_type="text/html",
        # use one of dozens of defined data models:
        extraction_model="product",
        # optional: provide file's url for converting relative links to absolute
        url="https://web-scraping.dev/product/1",
    )
)
print(json.dumps(api_response.extraction_result))
```

```typescript
import { ScrapflyClient, ExtractionConfig } from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });

// product from https://web-scraping.dev/product/1
const document_text = Deno.readTextFileSync("./product.html").toString();

const api_result = await client.extract(
    new ExtractionConfig({
        body: document_text,
        content_type: "text/html",
        url: "https://web-scraping.dev/product/1",
        extraction_model: "product",
    })
);
console.log(JSON.stringify(api_result));
```
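The `url` parameter in the examples above is described as optional context for converting relative links to absolute ones. The standard-library `urllib.parse.urljoin` shows the resolution rule this relies on. This is a local illustration of URL resolution, not Scrapfly's internal implementation, and the links are made-up examples.

```python
from urllib.parse import urljoin


def absolutize(base_url: str, links: list[str]) -> list[str]:
    """Resolve each link against the page URL; absolute links are returned unchanged."""
    return [urljoin(base_url, link) for link in links]


# The page the document came from (the same value passed as `url` above).
base = "https://web-scraping.dev/product/1"
# Hypothetical links as they might appear inside the HTML.
links = ["/assets/products/image-1.webp", "2", "?variant=red", "https://example.com/x"]

for absolute in absolutize(base, links):
    print(absolute)
```

Note that a bare relative path such as `2` resolves against the page's directory (`/product/`), which is why passing the exact source URL matters.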


Quality Self-Reports

Each extraction reports the coverage and quality of its results.

```python
import json
from pathlib import Path

from scrapfly import ExtractionConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")

# product from https://web-scraping.dev/product/1
document_text = Path("product.html").read_text()

api_response = client.extract(
    ExtractionConfig(
        body=document_text,
        content_type="text/html",
        # use one of dozens of defined data models:
        extraction_model="product",
        # optional: provide file's url for converting relative links to absolute
        url="https://web-scraping.dev/product/1",
    )
)
# the data_quality field describes how much was found
print(json.dumps(api_response.extraction_result["data_quality"]))
```

```typescript
import { ScrapflyClient, ExtractionConfig } from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });

// product from https://web-scraping.dev/product/1
const document_text = Deno.readTextFileSync("./product.html").toString();

const api_result = await client.extract(
    new ExtractionConfig({
        body: document_text,
        content_type: "text/html",
        url: "https://web-scraping.dev/product/1",
        extraction_model: "product",
    })
);
// the logged result includes the data_quality report describing how much was found
console.log(JSON.stringify(api_result));
```
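The examples above read the API's `data_quality` field; its exact structure is defined by the API and is not shown here. As a rough illustration of what "coverage" means, the sketch below computes the share of expected fields that came back non-empty. The field names, the sample record, and the scoring rule are our own assumptions, not how Scrapfly computes `data_quality`.

```python
from typing import Any, Iterable, Mapping


def is_filled(value: Any) -> bool:
    """Treat None, empty strings and empty containers as missing."""
    if value is None:
        return False
    if isinstance(value, (str, list, dict, tuple, set)):
        return len(value) > 0
    return True  # numbers (including 0) and booleans count as present


def coverage(record: Mapping[str, Any], expected_fields: Iterable[str]) -> float:
    """Fraction (0.0 to 1.0) of expected fields that are present and non-empty."""
    fields = list(expected_fields)
    if not fields:
        return 1.0
    found = sum(1 for name in fields if is_filled(record.get(name)))
    return found / len(fields)


# Hypothetical extracted product, for illustration only.
product = {"name": "Example product", "price": None, "description": "", "images": ["a.webp"]}
print(coverage(product, ["name", "price", "description", "images"]))  # 0.5
```

A score like this can serve as a simple gate, for example flagging records below a chosen threshold for review.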


Define Your Own Extraction Rules

Create and customize your own templates using XPath/CSS selectors and built-in processors to extract exactly the data you need.

Templates let you fine-tune your extraction process so the data arrives in exactly the format you want. Customize your rules with CSS or XPath selectors, formatters, and processors to fit any use case.

```python
import json
from pathlib import Path

from scrapfly import ExtractionConfig, ScrapflyClient

client = ScrapflyClient(key="API KEY")

# reviews from https://web-scraping.dev/reviews
document_text = Path("reviews.html").read_text()

# define your JSON template
template = {
    "source": "html",
    "selectors": [
        {
            "name": "date_posted",
            # use css selectors
            "type": "css",
            "query": "[data-testid='review-date']::text",
            "multiple": True,  # one or multiple?
            # post process results with formatters
            "formatters": [
                {
                    "name": "datetime",
                    "args": {"format": "%Y, %b %d — %A"},
                }
            ],
        }
    ],
}

api_response = client.extract(
    ExtractionConfig(
        body=document_text,
        content_type="text/html",
        # pass the template inline with the request
        ephemeral_template=template,
    )
)
print(json.dumps(api_response.extraction_result))
```

```typescript
import { ScrapflyClient, ExtractionConfig } from 'jsr:@scrapfly/scrapfly-sdk';

const client = new ScrapflyClient({ key: "API KEY" });

// reviews from https://web-scraping.dev/reviews
const document_text = Deno.readTextFileSync("./reviews.html").toString();

// define your template as JSON
const template = {
    "source": "html",
    "selectors": [
        {
            "name": "date_posted",
            // use css selectors
            "type": "css",
            "query": "[data-testid='review-date']::text",
            "multiple": true, // one or multiple?
            // post process results with formatters
            "formatters": [
                {
                    "name": "datetime",
                    "args": { "format": "%Y, %b %d — %A" },
                },
            ],
        },
    ],
};

const api_result = await client.extract(
    new ExtractionConfig({
        body: document_text,
        url: "https://web-scraping.dev/reviews",
        content_type: "text/html",
        ephemeral_template: template,
    })
);
console.log(JSON.stringify(api_result));
```
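To see what the template above asks for, here is a rough local equivalent using only the Python standard library. It collects the text of every element whose `data-testid` is `review-date` (the `multiple: True` case), then reformats each value the way a `datetime` formatter would. It assumes the page shows ISO dates (`YYYY-MM-DD`), uses a simplified output format, and runs on made-up HTML; it is an illustration, not Scrapfly's template engine.

```python
from datetime import datetime
from html.parser import HTMLParser


class ReviewDateParser(HTMLParser):
    """Collect text of elements with data-testid="review-date"
    (roughly the CSS query [data-testid='review-date']::text with multiple=True)."""

    def __init__(self) -> None:
        super().__init__()
        self._inside = False
        self.dates: list[str] = []

    def handle_starttag(self, tag, attrs):
        if dict(attrs).get("data-testid") == "review-date":
            self._inside = True
            self.dates.append("")  # start a new match

    def handle_data(self, data):
        if self._inside:
            self.dates[-1] += data

    def handle_endtag(self, tag):
        # Simplification: the first closing tag ends the match, so only leading text is kept.
        if self._inside:
            self._inside = False
            self.dates[-1] = self.dates[-1].strip()


def extract_review_dates(html: str, out_format: str = "%Y, %b %d (%A)") -> list[str]:
    parser = ReviewDateParser()
    parser.feed(html)
    parser.close()
    # Stand-in for the "datetime" formatter: parse ISO dates, re-render with out_format.
    return [datetime.strptime(d, "%Y-%m-%d").strftime(out_format) for d in parser.dates]


sample_html = """
<div class="review"><span data-testid="review-date">2022-07-22</span><p>Great!</p></div>
<div class="review"><span data-testid="review-date">2023-01-01</span></div>
"""
print(extract_review_dates(sample_html))  # ['2022, Jul 22 (Friday)', '2023, Jan 01 (Sunday)']
```

The hosted template does the same selection and formatting server-side, so you can change extraction rules without shipping new parsing code.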


We've Got Your Industry Covered!

AI Training

Extract the latest images, videos and user-generated content for AI training.

Compliance

Extract online presence data to validate compliance and security.

eCommerce

Extract products, reviews, and prices using LLMs or AI Auto Extract.

Financial Services

Extract the latest stock, shipping and financial data to enhance your finance datasets.

Fraud Detection

Extract products and listings to detect fraud and counterfeit activity.

Jobs Data

Extract the latest job listings, salaries and more to enhance your job search.

Lead Generation

Extract profile and contact details to enhance your lead datasets.

Logistics

Extract logistics data like shipping, tracking, container prices to enhance your deliveries.

Explore More Use Cases

Developer-First Experience

We built Scrapfly for ourselves in 2017 and opened it to the public in 2020. Since then, we have focused on delivering the best developer experience possible.

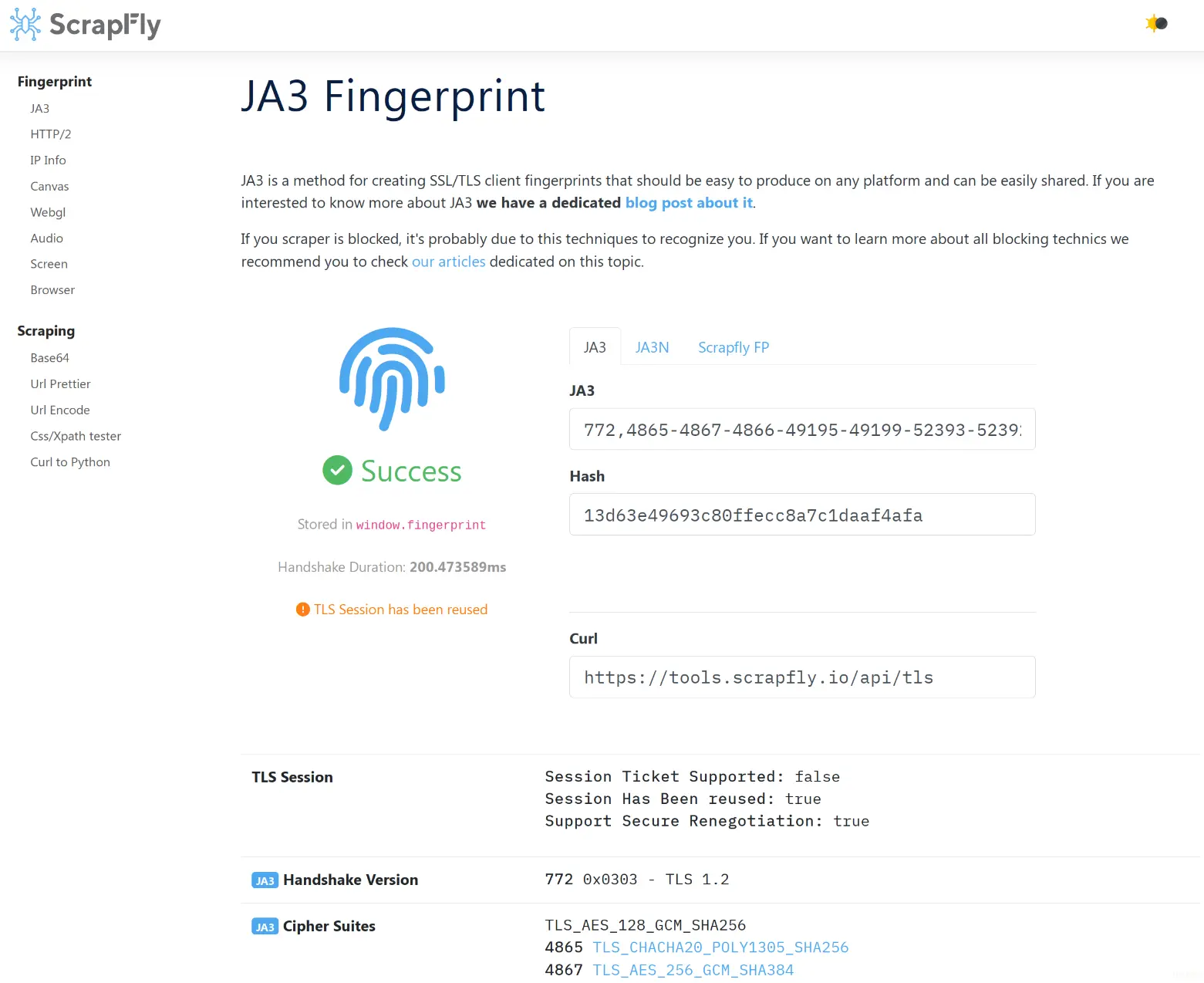

Master Web Data with our Docs and Tools

Access a complete ecosystem of documentation, tools, and resources designed to accelerate your data journey and help you get the most out of Scrapfly.


Learn with Scrapfly Academy

Learn everything about data retrieval and web scraping with our interactive courses.


Develop with Scrapfly Tools

Streamline your web data development with our web tools designed to enhance every step of the process.


Stay Up-To-Date with our Newsletter and Blog

Stay updated with the latest trends and insights in web data through our monthly newsletter and weekly blog posts.

Seamlessly Integrate with Frameworks & Platforms

Easily integrate Scrapfly with your favorite tools and platforms, or customize workflows with our Python and TypeScript SDKs.

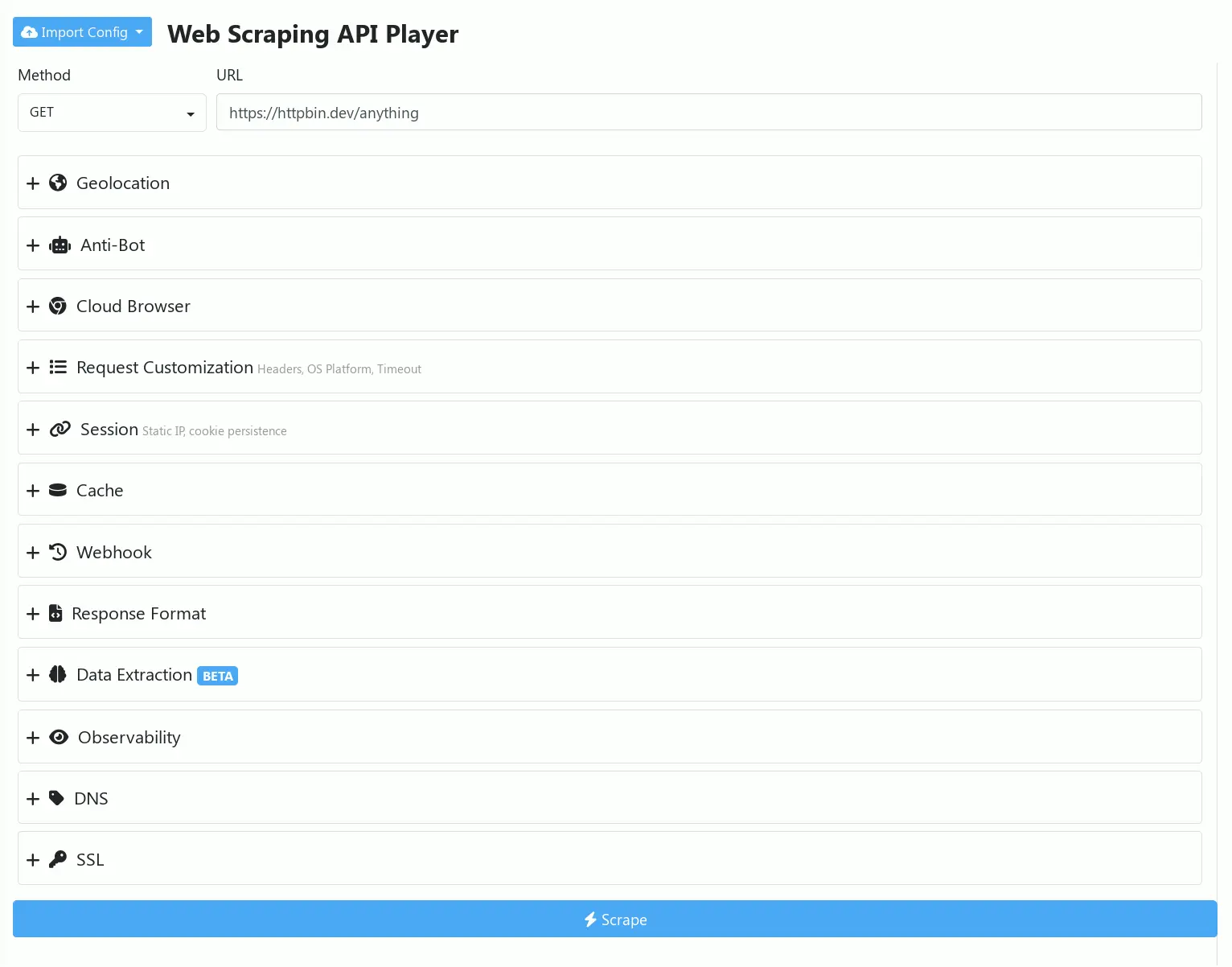

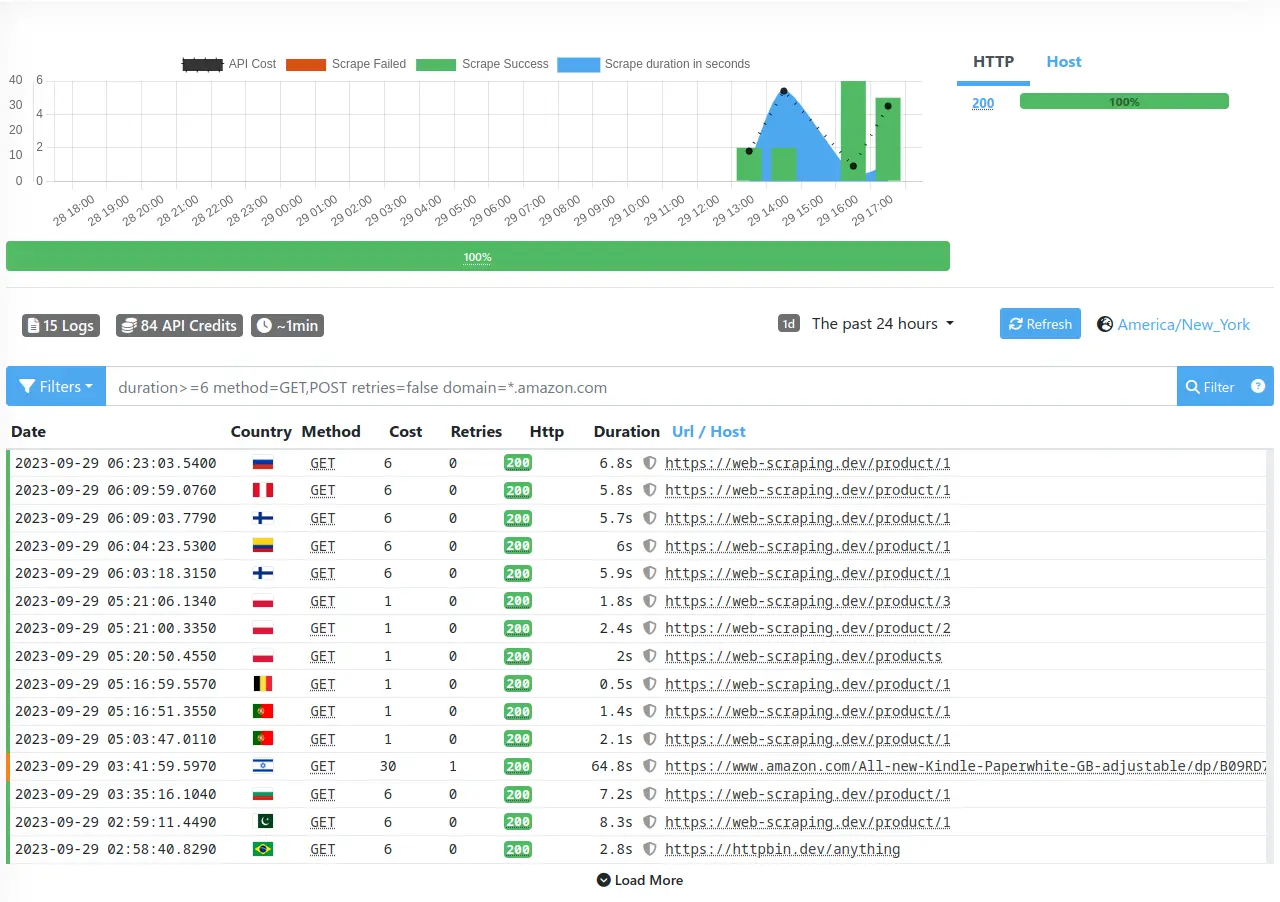

Powerful Web UI

One-stop shop to configure, control and observe all of your Scrapfly activity.


Experiment with Web API Player

Use our Web API Player to test and experiment with API calls, then share them with your team for collaboration and easier integration.


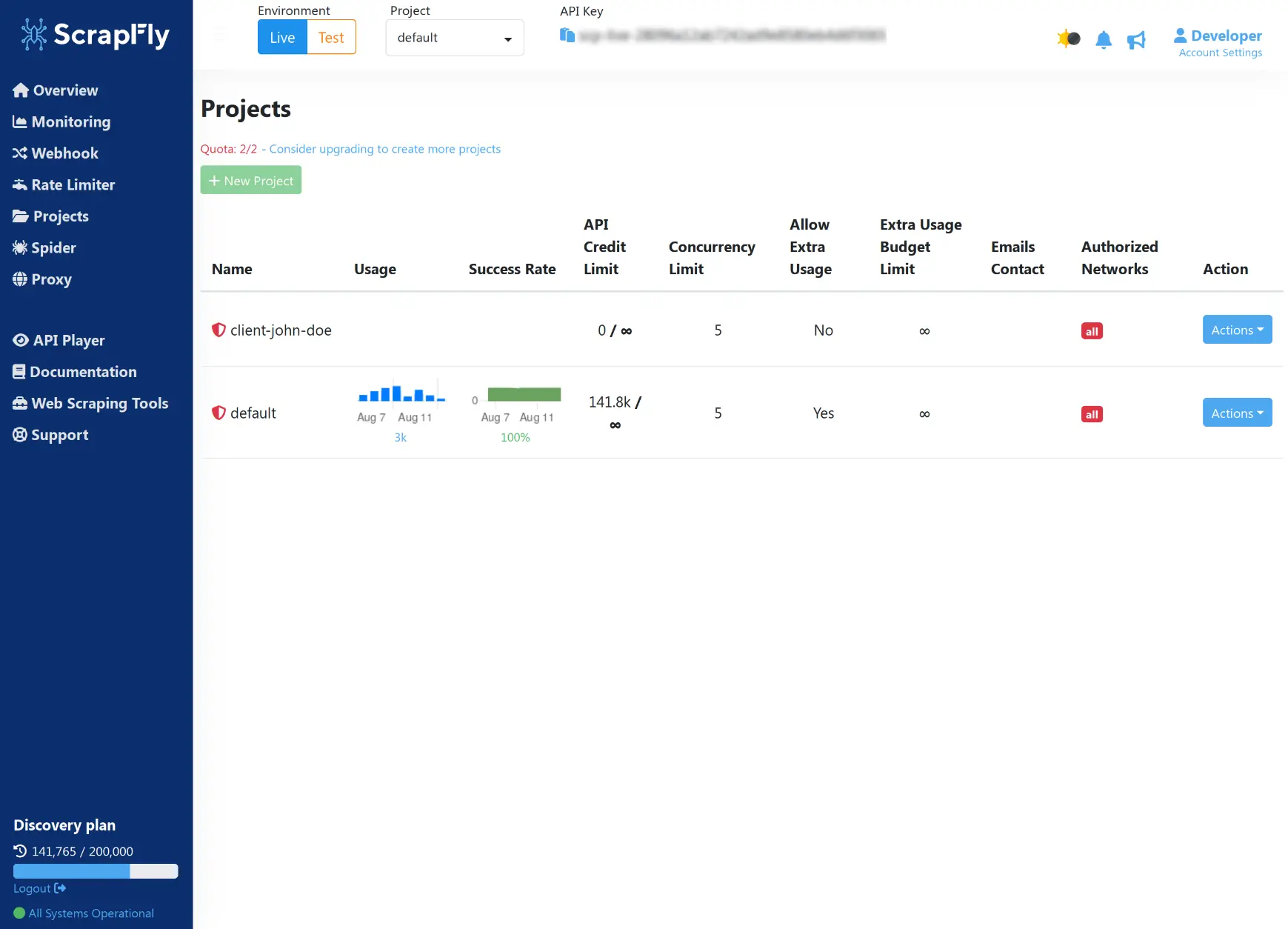

Manage Multiple Projects

Manage multiple projects with ease, complete with built-in testing environments for full control and flexibility.


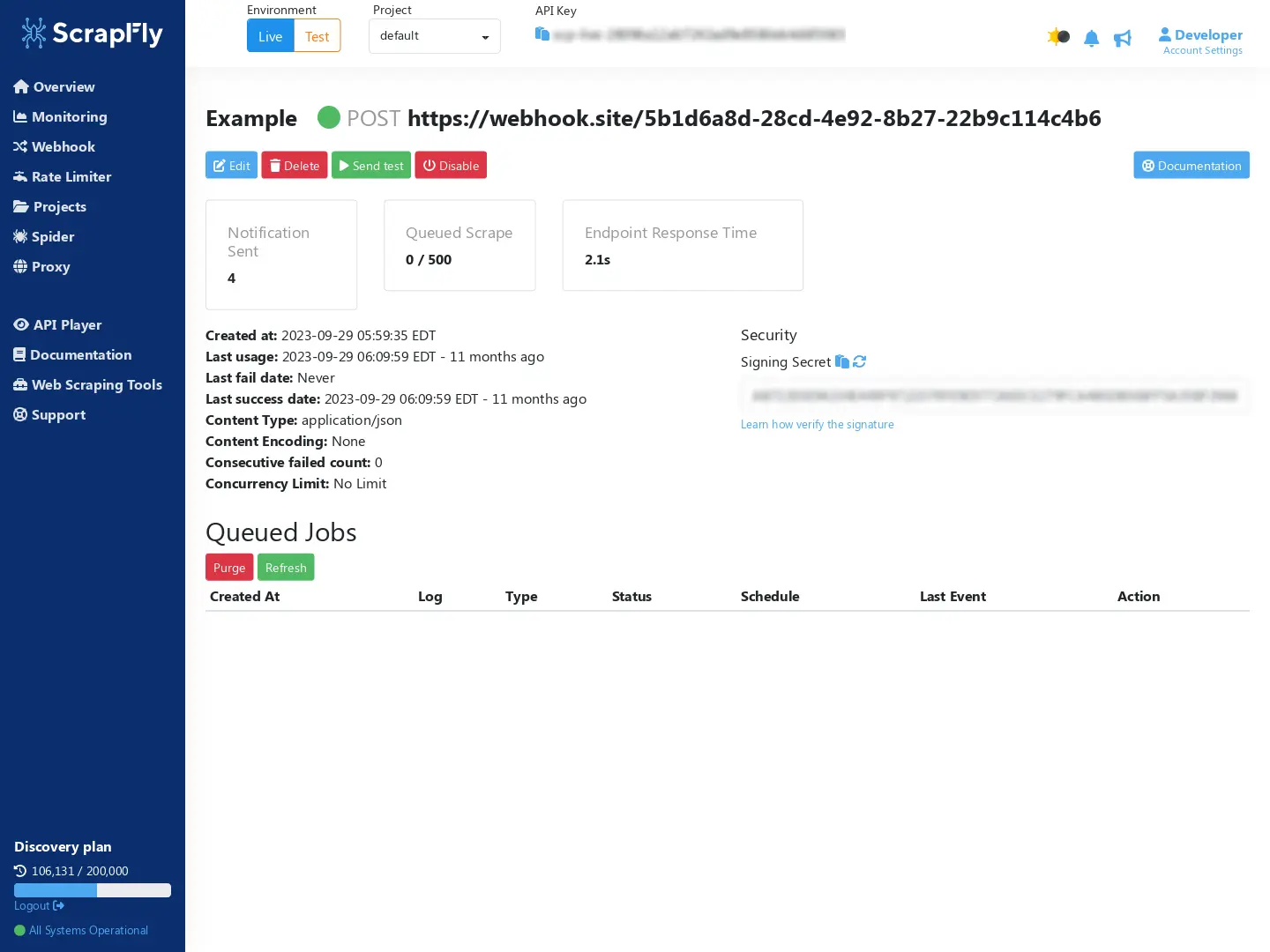

Attach Webhooks & Throttlers

Upgrade your API calls with webhooks for a truly asynchronous architecture, and with throttlers to control your usage.
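Each plan also caps concurrent requests (from 5 on Discovery up to 100 on Enterprise, per the pricing table). On the client side, a simple way to stay under that cap is an `asyncio.Semaphore`. A minimal sketch: `fake_extract` is a hypothetical stand-in for a real API call, and this client-side limit complements rather than replaces Scrapfly's server-side throttlers.

```python
import asyncio

MAX_CONCURRENCY = 5  # e.g. the Discovery plan's concurrent request limit


async def fake_extract(doc_id: int, tracker: dict) -> str:
    """Hypothetical stand-in for one Extraction API call; records peak concurrency."""
    tracker["active"] += 1
    tracker["peak"] = max(tracker["peak"], tracker["active"])
    await asyncio.sleep(0.01)  # simulate network latency
    tracker["active"] -= 1
    return f"result-{doc_id}"


async def extract_all(doc_ids, limit: int = MAX_CONCURRENCY):
    semaphore = asyncio.Semaphore(limit)
    tracker = {"active": 0, "peak": 0}

    async def bounded(doc_id):
        async with semaphore:  # at most `limit` calls run at once
            return await fake_extract(doc_id, tracker)

    # gather keeps results in input order even though calls overlap
    results = await asyncio.gather(*(bounded(i) for i in doc_ids))
    return results, tracker["peak"]


results, peak = asyncio.run(extract_all(range(20)))
print(len(results), peak)  # 20 5
```

Keeping the limit in one constant makes it easy to match whichever plan you are on.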

Simple & Fair Pricing

How Many Extractions per Month?

| | Custom | Enterprise | Startup | Pro | Discovery |
|---|---|---|---|---|---|
| Monthly Price | ∞/mo | $500/mo | $250/mo | $100/mo | $30/mo |
| Included API Credits | ∞ | 5,500,000 | 2,500,000 | 1,000,000 | 200,000 |
| Extra API Credits | ∞ per 10k | $1.20 per 10k | $2.00 per 10k | $3.50 per 10k | ✖ |
| Concurrent Requests | ∞ | 100 | 50 | 20 | 5 |
| Log Retention | ∞ weeks | 4 weeks | 3 weeks | 2 weeks | 1 week |
| AI Auto Extract | ✓ | ✓ | ✓ | ✓ | ✓ |
| LLM Prompting | ✓ | ✓ | ✓ | ✓ | ✓ |
| Template Extraction | ✓ | ✓ | ✓ | ✓ | ✓ |
| Team Management | ✓ | ✓ | ✓ | ✖ | ✖ |
| Support | Premium Support | Premium Support | Standard Support | Standard Support | Basic Support |
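The table implies a simple cost model: the plan price covers the included credits, and usage beyond that is billed at the plan's per-10k rate. A minimal sketch of that arithmetic, assuming overage is charged per started block of 10,000 credits (the exact billing granularity is not stated here) and using integer cents to avoid floating-point rounding. The Custom plan is omitted because its pricing is not listed, and `monthly_cost_cents` is a hypothetical helper.

```python
# (monthly price, included credits, extra cost per 10k credits), prices in US cents,
# taken from the table above. Discovery allows no extra credits (✖), modelled as None.
PLANS = {
    "Discovery": (3_000, 200_000, None),
    "Pro": (10_000, 1_000_000, 350),
    "Startup": (25_000, 2_500_000, 200),
    "Enterprise": (50_000, 5_500_000, 120),
}


def monthly_cost_cents(plan: str, credits_used: int) -> int:
    """Estimated monthly bill in cents (assumes overage is billed per started 10k block)."""
    price, included, per_10k = PLANS[plan]
    extra = max(0, credits_used - included)
    if extra and per_10k is None:
        raise ValueError(f"{plan} does not allow extra credits")
    blocks = (extra + 9_999) // 10_000  # integer ceiling division
    return price + blocks * (per_10k or 0)


cost = monthly_cost_cents("Pro", 1_250_000)
print(f"${cost / 100:.2f}")  # $187.50
```

In this example, 250,000 credits over the Pro allowance are 25 blocks at $3.50, added to the $100 base price.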

What Do Our Users Say?

"The Extraction API has transformed how we handle product data. We can now automatically extract detailed product information from hundreds of sites using just a few lines of code. The AI-powered auto extraction feature has saved us countless hours, and the schema-based outputs mean no more messy data. It’s incredibly reliable and easy to integrate!"

Jessica T. - Senior Data Engineer

"Scrapfly’s Extraction API is a game-changer for data parsing at scale. The ability to customize our extraction rules with templates or let the AI handle it for us makes it versatile for all our projects. We’ve been able to extract thousands of reviews and product details effortlessly, and the accuracy is simply outstanding. Highly recommended!"

Michael Bennett - CTO

"We’ve tried several data extraction tools, but Scrapfly’s Extraction API is by far the best. The LLM prompts and AI auto extraction made it simple to pull structured data from documents and webpages. Our team uses it daily to extract everything from articles to product details, and the accuracy and speed have exceeded our expectations!"

Olivia Martinez - Lead Developer

Frequently Asked Questions

What is an Extraction API?

An Extraction API is a service for automating data parsing tasks, such as parsing HTML into structured data, extracting details from PDFs, or generally making sense of text data. Extraction APIs pair well with web scraping and data harvesting; for that, see the Scrapfly Web Scraping API.

How can I access the Extraction API?

The Extraction HTTP API can be accessed with any HTTP client, such as curl or httpie, or with an HTTP client library in any programming language. For first-class support, we offer Python and TypeScript SDKs.
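For clients without an SDK, the request can be assembled by hand. The sketch below only builds the request with Python's standard library and does not send it. The endpoint `https://api.scrapfly.io/extraction`, passing options such as `key`, `url` and `extraction_prompt` as query parameters, and sending the document as the POST body with its `Content-Type` header are assumptions based on the SDK parameters shown above; check the API reference before relying on them. `build_extraction_request` is a hypothetical helper.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed endpoint; verify against the Extraction API reference.
EXTRACTION_ENDPOINT = "https://api.scrapfly.io/extraction"


def build_extraction_request(api_key: str, document: str, content_type: str, **options: str) -> Request:
    """Build (but do not send) a POST request for the Extraction API.

    `options` holds extra query parameters such as url= or extraction_prompt=
    (names mirror the SDK's ExtractionConfig fields; an assumption).
    """
    query = urlencode({"key": api_key, **options})
    return Request(
        f"{EXTRACTION_ENDPOINT}?{query}",
        data=document.encode("utf-8"),  # the document itself is the request body
        headers={"Content-Type": content_type},
        method="POST",
    )


req = build_extraction_request(
    "API KEY",
    "<html>...</html>",
    "text/html",
    url="https://web-scraping.dev/product/1",
    extraction_prompt="find the product price",
)
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req)
```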

What types of documents does the Extraction API support?

The Extraction API currently supports most text-based documents, including HTML, XML, JSON, CSV, RSS, Markdown, and plain text. PDF support is coming soon.

Does the Extraction API use AI to extract data from my documents?

Yes, AI powers both the LLM Prompting and AI Auto Extract features. Both use proprietary AI implementations, either to answer prompts about a document or to extract data objects from it.

Is my data safe when used with the Extraction API?

Yes, we follow strict data privacy and security guidelines. We do not store your data, share it with third parties, or use it for AI training. For more, see our privacy policy and terms of service.

Can I define my own extraction rules?

Yes, the extraction templates feature lets you define your own parsing instructions as a JSON template. Templates support CSS and XPath selectors for selecting data, a range of formatters for formatting and cleaning it up, and much more!