Ever wondered why your LinkedIn scraper gets stopped dead in its tracks, even when you’re only accessing public data? You get away with viewing a few profiles, then suddenly you're hit by a login wall, while your regular browser can still navigate LinkedIn just fine.

That’s not just bad luck. LinkedIn’s defenses go far beyond simple IP blocks; they combine advanced fingerprinting, behavioral detection, and real-time fraud scoring to identify and block bots and automated scripts.

In this guide, you’ll learn how LinkedIn blocks scrapers and how ScrapFly’s tools bypass those blocks, with step-by-step Python code for scraping profiles, companies, jobs, and searches.

Let’s break through LinkedIn’s walls safely, efficiently, and at scale.

Want to skip the technical details?

Clone ScrapFly's production-ready LinkedIn scraper:

git clone https://github.com/scrapfly/scrapfly-scrapers.git

cd scrapfly-scrapers/linkedin-scraper


Why Scrape LinkedIn?

Consider what makes LinkedIn data uniquely valuable. Public LinkedIn profiles and company data fuel smarter business strategies and give organizations a crucial edge.

Here’s what you can achieve by accessing professional data effectively:

- Recruitment Intelligence: Gain insights into job market trends, salary benchmarks, and skill demand.

- Sales Prospecting: Identify decision-makers, understand company org charts, and enrich contact details.

- Market Research: Discover industry growth patterns, track competitor hiring, and study talent migration.

- Competitive Analysis: Spot company expansion signals, examine team structures, and monitor strategic moves.

LinkedIn holds professional data that's publicly visible but heavily protected. The challenge isn't accessing the data; it's accessing it at scale without detection. Below we break down the specific defenses you’ll hit when you try to scale a scraper.

Why LinkedIn Scraping Fails Without Proper Tools

LinkedIn has an advanced security system in place to stop web scrapers from accessing its public data. It quickly puts up an authentication wall, requiring visitors to log in after only a few profile views.

In addition to behavioral analysis, LinkedIn uses advanced request fingerprinting techniques. This involves evaluating factors like the quality and origin of IP addresses, browser-specific headers and cookies, and device attributes. LinkedIn synthesizes all of these signals to generate a fraud score for each visitor.

Understanding the nature of these constraints will give context for the hands-on strategies and technical workarounds covered in the following sections.

1. Authentication Wall

LinkedIn aggressively limits access to its vast trove of data unless users are authenticated. Most profile, company, and job information is locked behind a login barrier after just a few page views.

Anonymous visitors are hit with sign-in prompts and blocked from seeing further details. This strict authentication wall is LinkedIn’s primary line of defense against unauthorized scraping. Here are some common examples:

- Profile views: After viewing just 3–5 profiles, LinkedIn requires you to log in.

- Company data: Much of it is hidden unless you’re logged in.

- Search: Logged-out visitors are completely blocked from using people search.

About Logging In: Scraping while logged into an account might seem like an easy workaround. However, this goes against LinkedIn’s Terms of Service and can quickly lead to your accounts being permanently banned.

2. Behavioral Tracking

LinkedIn watches how people behave on the site and compares that to each incoming request. Scrapers usually stand out because their behavior looks different from a real user. Common differences include:

- Request timing: Human users don't view 100 profiles per minute, while bots do.

- Navigation patterns: Real users click buttons, scroll down pages, and pause at irregular intervals; bots don't.

- Mouse movements: Real users show natural mouse activity, such as highlighting text and hovering over elements. Bots show none.

- Referrer and navigation flow: Real users arrive through internal links, such as search pages. Bots, on the other hand, request URLs directly without a plausible navigation path.

If your scraper does not mimic these behaviors, LinkedIn will notice. To avoid detection you need realistic timing, navigation, and interaction patterns. See our headless browser guide, which shows how to add natural clicks, scrolls, and pauses.
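To make "realistic timing" concrete, here is a minimal sketch of randomized pacing between requests. The function name `human_like_delays` and every number in it (base delay, jitter, pause frequency) are illustrative assumptions, not thresholds published by LinkedIn:

```python
import random
from typing import Iterator, Optional


def human_like_delays(
    count: int,
    base: float = 4.0,
    jitter: float = 2.5,
    long_pause_every: int = 10,
    rng: Optional[random.Random] = None,
) -> Iterator[float]:
    """Yield `count` randomized delays in seconds.

    All default values are assumptions chosen for illustration only.
    """
    rng = rng or random.Random()
    for i in range(1, count + 1):
        # a base delay with symmetric jitter, so requests are never evenly spaced
        delay = base + rng.uniform(-jitter, jitter)
        # every `long_pause_every` requests, add a longer "reading" pause
        if i % long_pause_every == 0:
            delay += rng.uniform(15.0, 45.0)
        # never drop below half a second
        yield max(delay, 0.5)
```

Each yielded value can be passed to `time.sleep()` (or `asyncio.sleep()`) before the next request. Pacing alone only addresses the timing signal; the navigation and mouse signals listed above still need a real browser.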

3. Request Fingerprinting

Beyond behavior, LinkedIn checks the technical fingerprint of each request. This includes several signals that are hard for simple scripts to fake:

- IP address quality: Whether the requesting IP address is residential, coming from a real home internet provider, or belongs to a datacenter associated with a hosting network.

- TLS analysis: Every HTTPS connection to LinkedIn's web server starts with a TLS handshake. The parameters the client offers during that handshake can be hashed into a fingerprint, such as JA3, which LinkedIn compares with the fingerprints of normal browsers.

- Headers and cookies: Whether the request carries standard browser headers and the cookies a normal user would have. Such cookie chains are usually built up through natural navigation of the web app.

- Device fingerprints: Whether the browser's reported attributes match those of a real browser. These include browser metadata, device hardware capabilities, operating system and version, and related specifications that automated clients often report inconsistently.
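To show why a TLS fingerprint is hard to fake from a plain HTTP library, here is a small sketch of how a JA3 fingerprint is derived: five fields from the ClientHello are joined into a string and hashed with MD5. The helper names and the sample values are our own assumptions for illustration; they are not captured from a real browser or from LinkedIn:

```python
import hashlib
from typing import Sequence


def ja3_string(
    version: int,
    ciphers: Sequence[int],
    extensions: Sequence[int],
    curves: Sequence[int],
    point_formats: Sequence[int],
) -> str:
    """Build the JA3 input: fields joined by ',' and values within a field by '-'."""
    fields = [
        str(version),
        "-".join(map(str, ciphers)),
        "-".join(map(str, extensions)),
        "-".join(map(str, curves)),
        "-".join(map(str, point_formats)),
    ]
    return ",".join(fields)


def ja3_hash(ja3: str) -> str:
    """JA3 fingerprints are the MD5 hex digest of the JA3 string."""
    return hashlib.md5(ja3.encode("ascii")).hexdigest()


# made-up sample ClientHello values, for illustration only
sample = ja3_string(771, [4865, 4866, 4867], [0, 23, 65281], [29, 23, 24], [0])
fingerprint = ja3_hash(sample)
```

Because the order of ciphers and extensions is part of the string, two clients that support the same features but list them differently produce different hashes. That is why an HTTP library's handshake stands out next to a browser's even when the headers are identical.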

Fraud Scoring Logic

LinkedIn combines all the signals above to calculate a fraud score for each incoming client. This score is then compared against the average patterns of real users. Based on that comparison, LinkedIn decides whether to:

- Approve the request as legitimate and allow it through.

- Flag or block the request if it appears automated or suspicious.

In short, every request to LinkedIn is evaluated for authenticity before any data is served.

If your score deviates from that of typical users, you will be forced to log in or blocked outright. For technical deep dives and defensive techniques, check our guide on browser fingerprint impersonation.
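LinkedIn does not publish how its scoring works, so the following is only a toy model of the idea: several independent signals are weighted, summed, and compared against thresholds. Every name, weight, and threshold here is a hypothetical value we picked for illustration:

```python
from dataclasses import dataclass


@dataclass
class RequestSignals:
    """Hypothetical signals, loosely based on the categories described above."""
    datacenter_ip: bool
    tls_matches_browser: bool
    has_browser_headers: bool
    requests_per_minute: float


def fraud_score(signals: RequestSignals) -> float:
    """Return a score between 0 (looks human) and 1 (looks automated)."""
    score = 0.0
    if signals.datacenter_ip:
        score += 0.35
    if not signals.tls_matches_browser:
        score += 0.30
    if not signals.has_browser_headers:
        score += 0.15
    # request rate contributes linearly between 10 and 60 requests per minute
    rate = min(max((signals.requests_per_minute - 10) / 50, 0.0), 1.0)
    score += rate * 0.20
    return round(score, 2)


def decide(score: float, login_wall_at: float = 0.4, block_at: float = 0.7) -> str:
    """Map a score to one of three outcomes using made-up thresholds."""
    if score >= block_at:
        return "block"
    if score >= login_wall_at:
        return "login_wall"
    return "allow"
```

The point of the sketch is that no single fix is enough: a residential IP with a non-browser TLS fingerprint and a high request rate can still cross a threshold, which matches the "few profiles, then a login wall" experience described earlier.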

How LinkedIn Data is Loaded and How to Scrape it?

LinkedIn is a single-page application that loads its data in a few different ways, and our LinkedIn scraper uses a matching extraction approach for each. Let's briefly explain each one: how it works under the hood, how to inspect it in the browser, and how to extract it in code!

Rendering an HTML Template

This is the oldest and simplest way websites load data. In this method, the server sends a full HTML page that already includes all the data you need. When your browser opens a page, it just receives and displays that pre-built HTML template.

To scrape data from a page like this on LinkedIn, you only need to mimic what a browser does, then extract the information you care about. Here’s the general flow:

- Send a request to the page URL and wait for a successful response.

- Read the response body, which contains the full HTML of the page.

- Parse the HTML and extract the fields you want using CSS selectors or XPath.

This approach is straightforward because the data is already in the HTML, so there is no need to load extra scripts or wait for background requests.
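The parsing step of this flow can be sketched with the standard library alone. The markup and class names below are invented for the example and are not LinkedIn's real page structure. We also use `xml.etree.ElementTree`, which only works here because the sample is well-formed; real pages need an HTML-tolerant parser such as parsel, which the scrapers later in this guide use:

```python
import xml.etree.ElementTree as ET
from typing import Dict

# hypothetical, well-formed sample markup (not LinkedIn's real structure)
SAMPLE_HTML = """
<html>
  <body>
    <h1 class="top-card__title">Example Corp</h1>
    <dl>
      <div class="about-item"><dt>Industry</dt><dd>Software</dd></div>
      <div class="about-item"><dt>Company size</dt><dd>51-200 employees</dd></div>
    </dl>
  </body>
</html>
"""


def parse_company_card(html: str) -> Dict:
    """Extract a title and label/value pairs using XPath-style queries."""
    root = ET.fromstring(html.strip())
    title = root.find(".//h1[@class='top-card__title']")
    facts = {}
    for item in root.findall(".//div[@class='about-item']"):
        # each item holds a <dt> label followed by a <dd> value
        facts[item.findtext("dt").strip()] = item.findtext("dd").strip()
    return {
        "name": title.text.strip() if title is not None else None,
        "facts": facts,
    }


company = parse_company_card(SAMPLE_HTML)
```

In a real scraper, `SAMPLE_HTML` would be replaced by the response body returned for the page URL.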

Hydrating the Page Using Script Tags

Many modern websites load data inside <script> tags instead of directly in the HTML. This is common in web apps that use server-side or client-side rendering. In these cases, the page may include a script tag that holds all the data in JSON format, which the browser later uses to build the visible content.

To scrape LinkedIn data from pages like this, you can:

- Request the page URL and get the full HTML response.

- Parse the HTML and find the <script> tag that contains the JSON data.

- Extract and load that JSON into an object for further processing.

This technique is often called hidden data scraping because the useful information is buried inside script tags that most users and basic scrapers ignore.
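As a minimal sketch of hidden data scraping, the snippet below pulls every `application/ld+json` script out of an HTML string and loads it as JSON. The sample page is made up for the example, and the regular expression is a simplification we chose to keep the sketch dependency-free; the scrapers later in this guide use XPath selectors for the same job:

```python
import json
import re
from typing import Any, List

# matches <script type="application/ld+json"> ... </script> blocks
LD_JSON_RE = re.compile(
    r'<script[^>]*type=["\']application/ld\+json["\'][^>]*>(.*?)</script>',
    re.DOTALL | re.IGNORECASE,
)


def extract_ld_json(html: str) -> List[Any]:
    """Return every JSON-LD block found in the page, skipping invalid ones."""
    blocks = []
    for raw in LD_JSON_RE.findall(html):
        try:
            blocks.append(json.loads(raw))
        except json.JSONDecodeError:
            continue  # ignore script tags whose body is not valid JSON
    return blocks


# made-up sample page, for illustration only
SAMPLE_HTML = """
<html><head>
<script type="application/ld+json">
{"@context": "http://schema.org", "@type": "Organization", "name": "Example Corp"}
</script>
</head><body><h1>Example Corp</h1></body></html>
"""

hidden_data = extract_ld_json(SAMPLE_HTML)
```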

Loading The Data from XHR Calls

Modern websites don’t always load everything in one go. Instead, they often use small background requests called XHR calls or fetch requests to get extra data as you browse.

For example, when you open a LinkedIn search page, the site first loads the layout, then sends hidden requests to get the actual profile or job results in JSON format. Once the data arrives, the page updates automatically.

To collect this kind of data, you can do the following:

- Start a headless browser

- Open the target page and watch its network activity

- Wait for or trigger the XHR

- Capture and read the response from the browser’s network logs

These XHR calls are often called hidden APIs because they work behind the scenes. In web scraping, getting data from them is known as hidden API scraping, since you’re using the same background requests that a browser already makes.
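The four steps above can be sketched with Playwright's synchronous Python API. Playwright is our choice for the example rather than something this guide's scrapers depend on, and the scroll distance and wait time are arbitrary assumptions. The browser import is deferred so the small filtering helper can be used on its own:

```python
from typing import Any, Dict, List


def is_data_call(resource_type: str, content_type: str) -> bool:
    """True for background XHR/fetch responses that carry JSON."""
    return resource_type in ("xhr", "fetch") and "json" in content_type.lower()


def capture_xhr_json(url: str) -> List[Dict[str, Any]]:
    """Open `url` in headless Chromium and collect JSON bodies of XHR/fetch calls."""
    # imported lazily so is_data_call() works without Playwright installed
    from playwright.sync_api import sync_playwright

    captured: List[Dict[str, Any]] = []

    def on_response(response) -> None:
        content_type = response.headers.get("content-type", "")
        if is_data_call(response.request.resource_type, content_type):
            try:
                captured.append({"url": response.url, "data": response.json()})
            except Exception:
                pass  # body was not valid JSON or is no longer available

    with sync_playwright() as pw:
        browser = pw.chromium.launch(headless=True)
        page = browser.new_page()
        # watch network activity before navigating so no response is missed
        page.on("response", on_response)
        page.goto(url, wait_until="networkidle")
        # scroll to trigger lazily loaded requests (distance is arbitrary)
        page.mouse.wheel(0, 5000)
        page.wait_for_timeout(2000)
        browser.close()
    return captured
```

Some background endpoints return HTML fragments instead of JSON; in that case the `"json"` check in `is_data_call()` would need to be relaxed and the body read with `response.text()`.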

ScrapFly's Solution

We know that managing LinkedIn scraping can be a real overhead. That's why we've built ScrapFly's LinkedIn scraper, an open-source tool that is ready to use! ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale, and the scraper is configured to leverage ScrapFly's web scraping API, which manages:

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input, and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

Learn more about ScrapFly's anti-bot capabilities on our Web Scraping API page.

For pricing details, visit our pricing page.

What LinkedIn Data Can You Scrape?

Web scraping is about collecting raw web data, avoiding detection, and turning it into clean and structured datasets. Our LinkedIn scraper handles this entire process automatically, from fetching the data to parsing it, and delivers the output directly in JSON format that you can use right away.

With ScrapFly, you can collect different types of LinkedIn data such as:

- Profiles: Name, headline, current position, work history, education, skills, number of connections (if visible), and profile picture URL

- Companies: Name, industry, size, headquarters, specialties, follower count, recent posts, and related pages

- Jobs: Title, location, description, number of applicants, posted date, seniority level, employment type, and required skills

- Search results: People search (name, headline, location) and job search (title, company, location, posted date)

Scraping LinkedIn data with ScrapFly is simple and efficient. Here is an example:

```python
from scrapfly import ScrapflyClient, ScrapeConfig

client = ScrapflyClient(key="YOUR_API_KEY")

result = client.scrape(ScrapeConfig(
    "https://linkedin.com/in/username",
    asp=True,        # Enable anti-scraping protection
    render_js=True,  # Handle dynamic content
    country="US"
))
```

This setup takes care of the complex parts such as JavaScript rendering, location targeting, and anti-bot protection, so you can focus on analyzing the data instead of fighting detection systems.

Scraping LinkedIn Code Examples

So far, we have covered all the essential details needed to scrape LinkedIn data. Now it is time to move on to some practical examples. In the following sections, we will show ready-to-use Python code snippets for extracting data from different parts of LinkedIn.

These examples use ScrapFly's Python SDK, which takes care of all the complex parts for you. If you prefer using libraries like HTTPX or requests directly, you will need to manage proxy rotation, fingerprint randomization, and session handling on your own. Without these measures, your scraper will likely get detected after just a few requests.

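To follow along with the examples, install the ScrapFly SDK with this command:

$ pip install scrapfly-sdk

The snippets below also import loguru, jmespath, and parsel; install them with pip as well if they are not already present in your environment.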

Scraping LinkedIn Profile Data

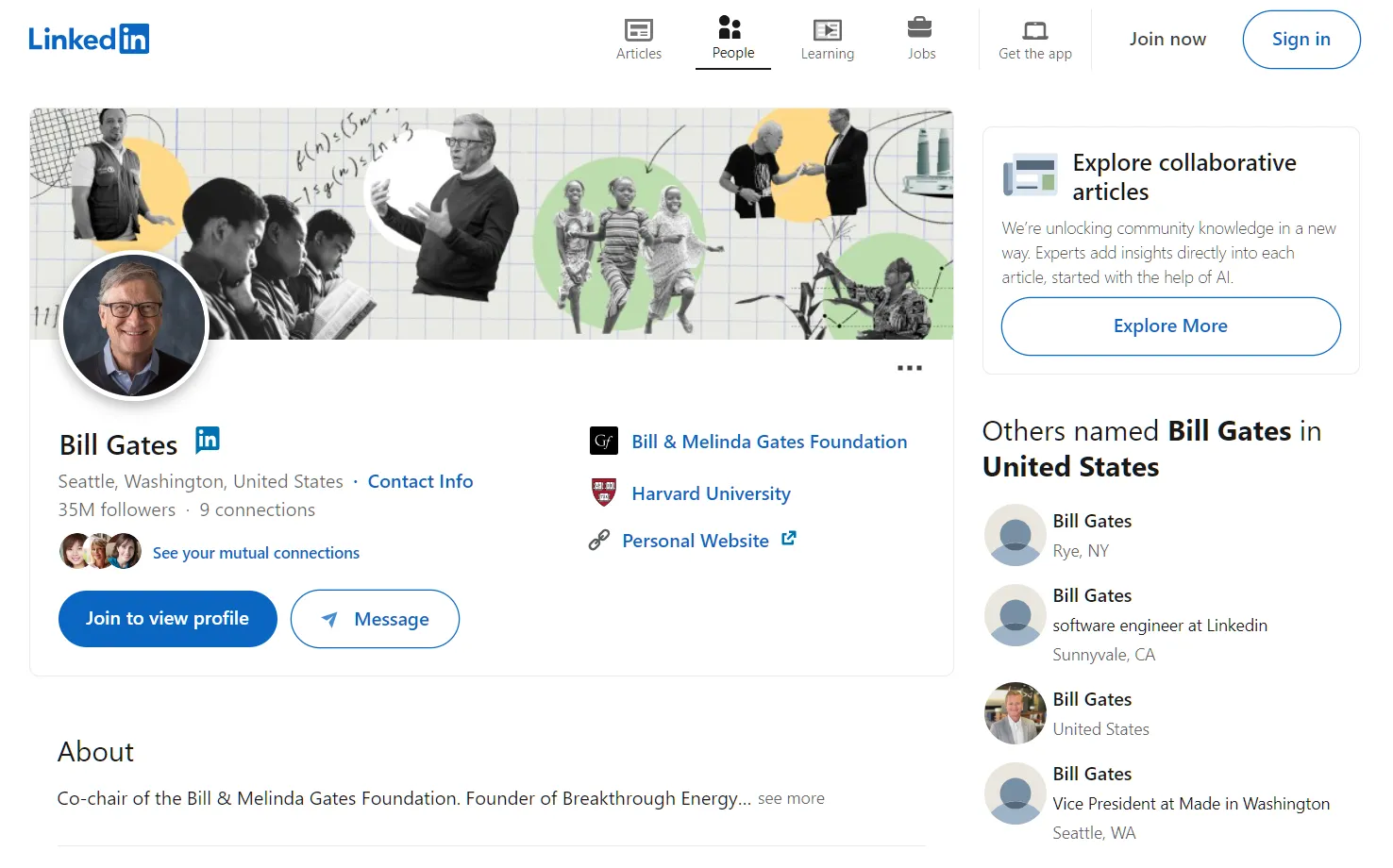

In this section, we'll extract data from publicly available data on LinkedIn user profiles. If we take a look at one of the public LinkedIn profiles (like the one for Bill Gates) we can see loads of valuable public data:

Before we start scraping LinkedIn profiles, let's identify the HTML parsing approach. We can manually parse each data point from the HTML or extract data from hidden script tags.

To locate this hidden data, we can follow these steps:

- Open the browser developer tools by pressing the F12 key.

- Search for the selector: //script[@type='application/ld+json'].

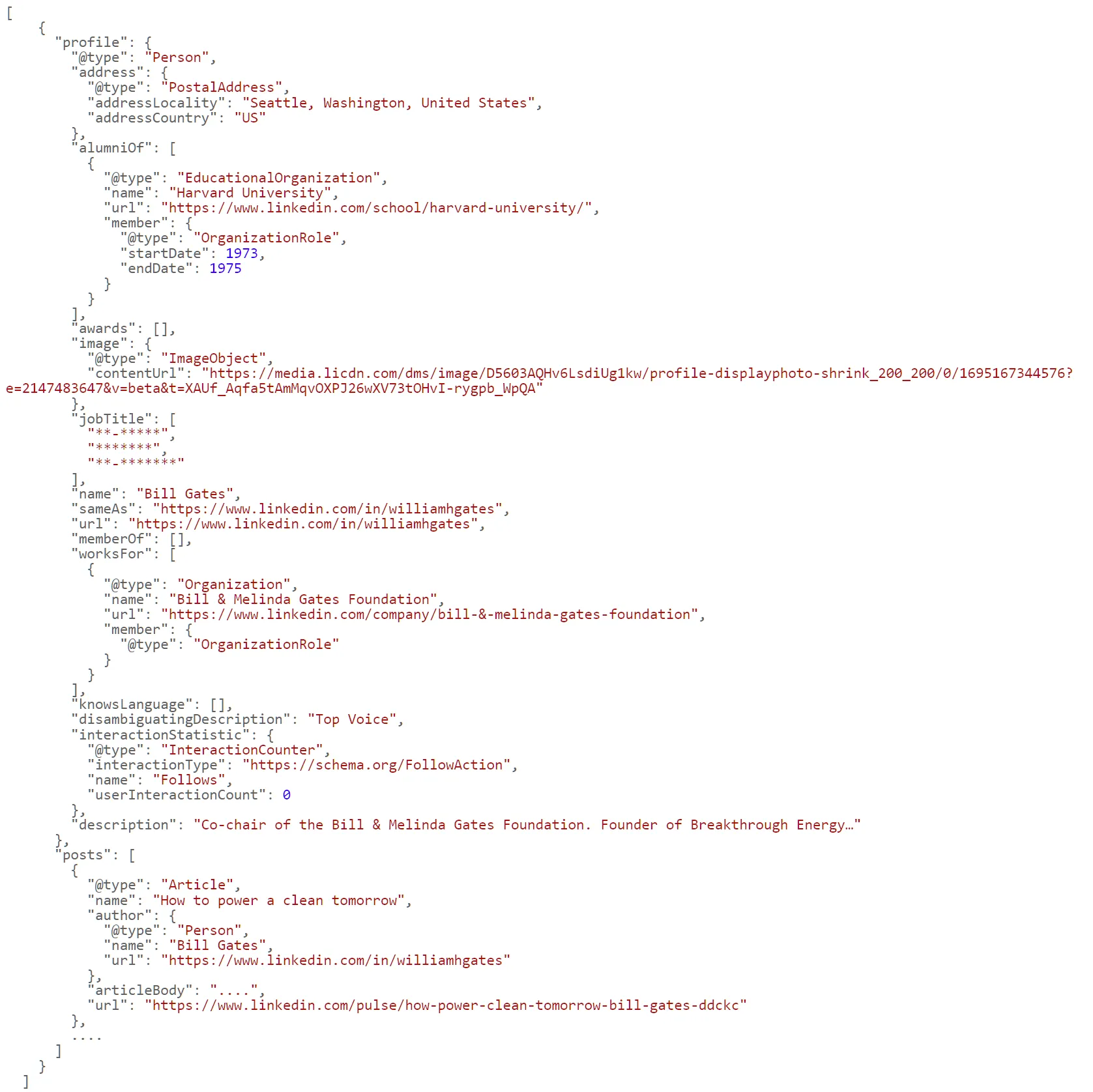

This will lead to a script tag with the following details:

This gets us the core details available on the page, though a few fields like the job title are missing, as the page is viewed publicly. To scrape it, we'll extract the script and parse it:

```python
import asyncio
import json
from typing import Dict, List

from loguru import logger as log
from parsel import Selector
from scrapfly import ScrapeApiResponse, ScrapeConfig, ScrapflyClient

SCRAPFLY = ScrapflyClient(key="Your ScrapFly API key")

BASE_CONFIG = {
    # bypass linkedin.com web scraping blocking
    "asp": True,
    # set the proxy country to US
    "country": "US",
    "headers": {
        "Accept-Language": "en-US,en;q=0.5"
    }
}


def refine_profile(data: Dict) -> Dict:
    """refine and clean the parsed profile data"""
    parsed_data = {}
    # the JSON-LD graph mixes node types; take the Person node
    profile_data = [key for key in data["@graph"] if key["@type"] == "Person"][0]
    # keep only the first listed employer
    profile_data["worksFor"] = [profile_data["worksFor"][0]]
    articles = [key for key in data["@graph"] if key["@type"] == "Article"]
    for article in articles:
        # article bodies are HTML; keep only the paragraph text
        selector = Selector(article["articleBody"])
        article["articleBody"] = "".join(selector.xpath("//p/text()").getall())
    parsed_data["profile"] = profile_data
    parsed_data["posts"] = articles
    return parsed_data


def parse_profile(response: ScrapeApiResponse) -> Dict:
    """parse profile data from hidden script tags"""
    selector = response.selector
    data = json.loads(selector.xpath("//script[@type='application/ld+json']/text()").get())
    refined_data = refine_profile(data)
    return refined_data


async def scrape_profile(urls: List[str]) -> List[Dict]:
    """scrape public linkedin profile pages"""
    to_scrape = [ScrapeConfig(url, **BASE_CONFIG) for url in urls]
    data = []
    # scrape the URLs concurrently
    async for response in SCRAPFLY.concurrent_scrape(to_scrape):
        profile_data = parse_profile(response)
        data.append(profile_data)
    log.success(f"scraped {len(data)} profiles from Linkedin")
    return data
```

Run the code

```python
async def run():
    profile_data = await scrape_profile(
        urls=[
            "https://www.linkedin.com/in/williamhgates"
        ]
    )
    # save the data to a JSON file
    with open("profile.json", "w", encoding="utf-8") as file:
        json.dump(profile_data, file, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    asyncio.run(run())
```

In the above LinkedIn profile scraper, we define three functions. Let's break them down:

- scrape_profile(): Requests the LinkedIn profile URLs concurrently and applies the parsing logic to each response.

- parse_profile(): Parses the script tag containing the profile data.

- refine_profile(): Refines and organizes the extracted data.
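The refining step assumes the page always contains a `Person` node inside the `@graph` list. When a page comes back without one (for example, a login wall instead of a profile), indexing the first match fails. Here is a hedged sketch of a more defensive lookup; the helper names and the sample document are hypothetical and only mirror the shape the scraper expects:

```python
from typing import Any, Dict, List, Optional


def pick_by_type(graph: List[Dict[str, Any]], type_name: str) -> List[Dict[str, Any]]:
    """Return all JSON-LD nodes whose @type equals `type_name`."""
    return [node for node in graph if node.get("@type") == type_name]


def first_person(data: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Return the first Person node, or None when the page has none."""
    people = pick_by_type(data.get("@graph", []), "Person")
    return people[0] if people else None


# hypothetical sample with the same shape as the JSON-LD the scraper expects
SAMPLE = {
    "@context": "http://schema.org",
    "@graph": [
        {"@type": "Person", "name": "Jane Doe",
         "worksFor": [{"@type": "Organization", "name": "Example Corp"}]},
        {"@type": "Article", "headline": "Hello", "articleBody": "<p>First post</p>"},
        {"@type": "WebPage", "url": "https://example.com"},
    ],
}

person = first_person(SAMPLE)
posts = pick_by_type(SAMPLE["@graph"], "Article")
```

Returning `None` lets the caller log and skip a blocked page instead of crashing the whole concurrent batch.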

Here's a sample output of the LinkedIn profile data retrieved

With this LinkedIn lead scraper, we can gather detailed information on potential leads, including their job titles, companies, industries, and contact information from LinkedIn profiles. This data allows for more personalized and strategic outreach efforts.

For the full retrieved JSON output, you can view the example dataset on ScrapFly's LinkedIn scraper.

Next, let's explore how to scrape company data!

Scraping LinkedIn Company Data

LinkedIn company profiles include various valuable data points like the company's industry, addresses, number of employees, jobs, and related company businesses. Moreover, the company profiles are public, meaning that we can scrape their full details!

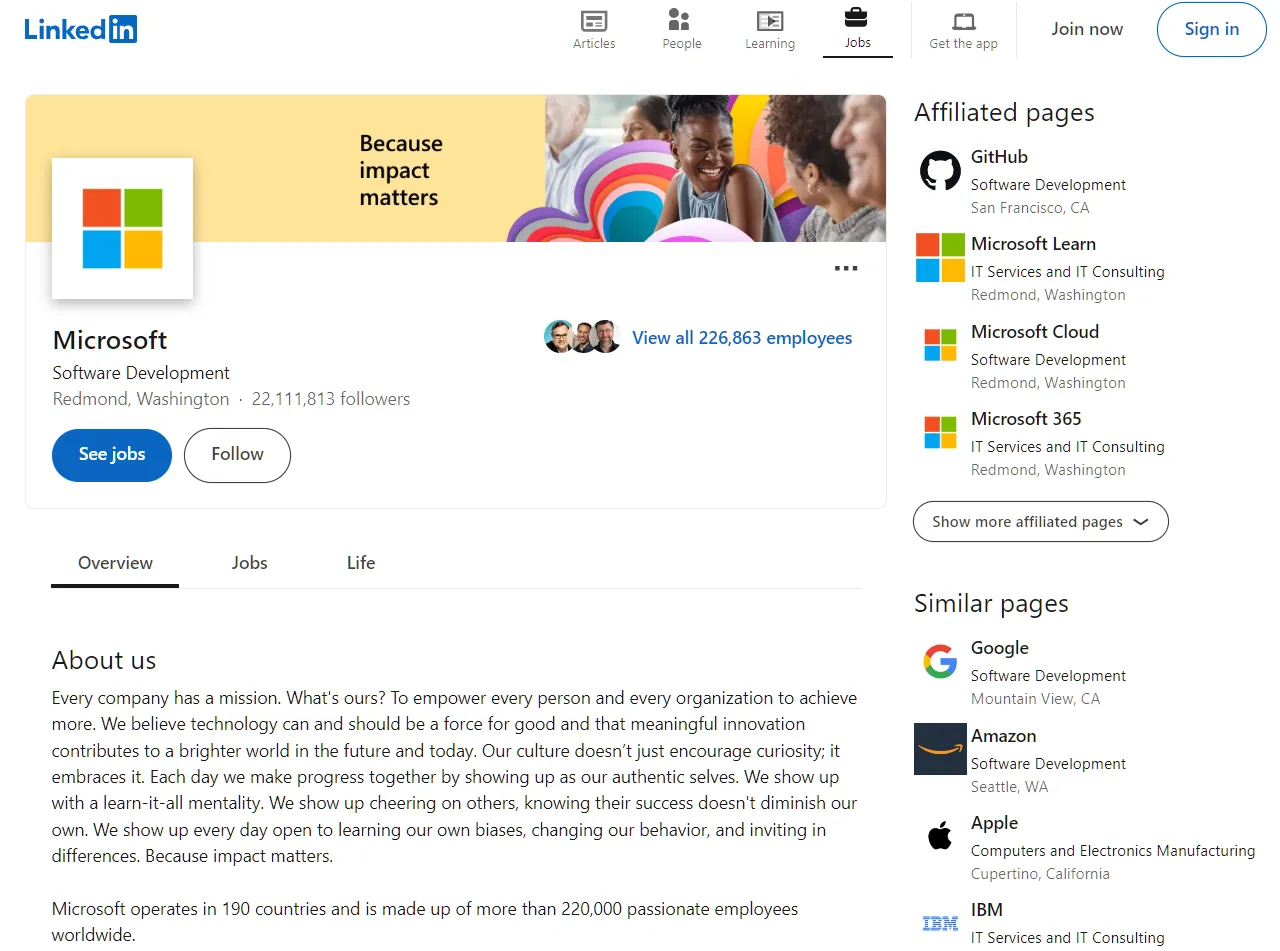

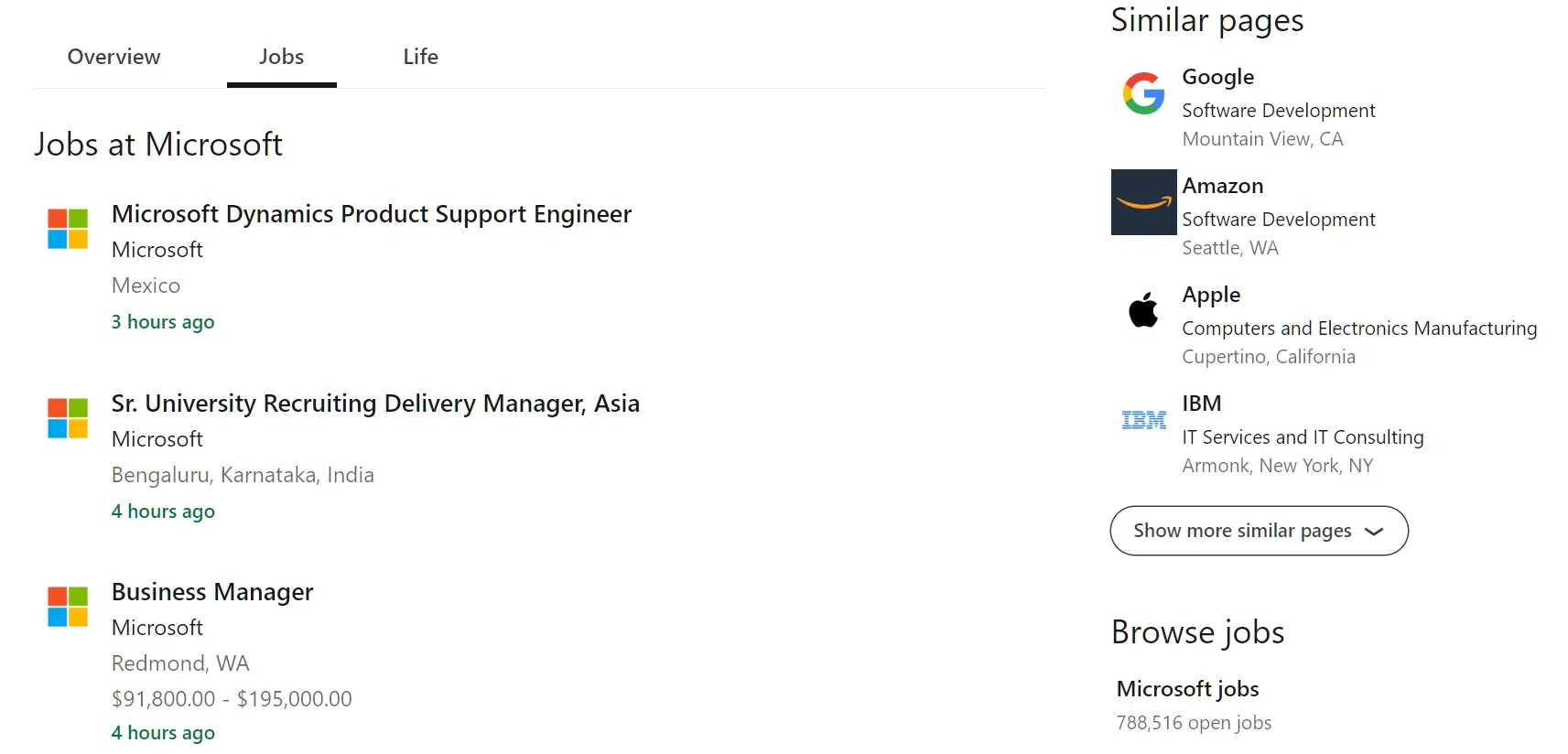

Let's start by taking a look at a company profile page on LinkedIn such as Microsoft:

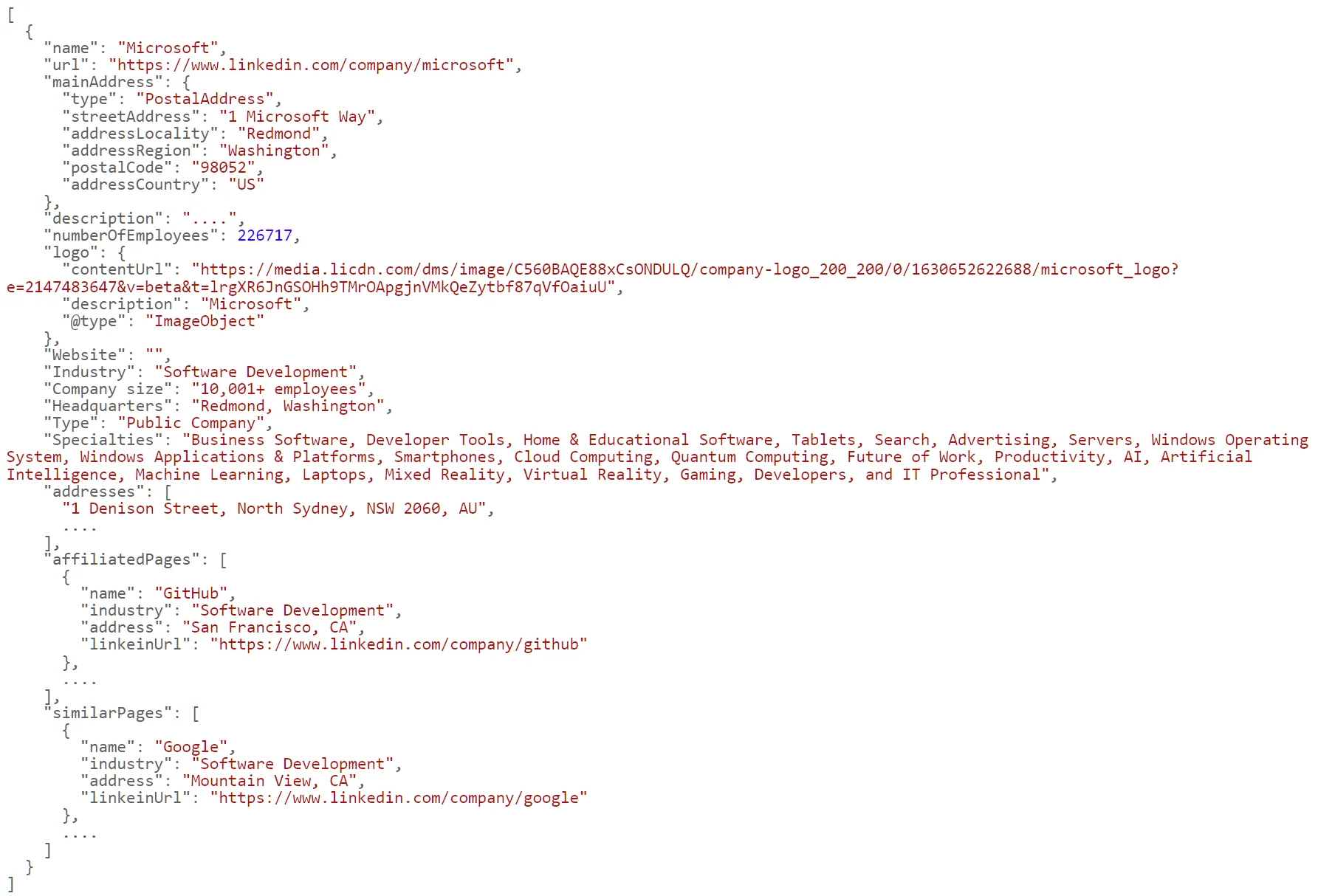

Just like with people pages, the LinkedIn company page data can also be found in hidden script tags:

From the above image, we can see that the script tag doesn't contain the full company details. Therefore, to extract the entire company dataset, we'll use a bit of HTML parsing as well:

```python
import asyncio
import json
from typing import Dict, List

import jmespath
from loguru import logger as log
from scrapfly import ScrapeApiResponse, ScrapeConfig, ScrapflyClient

SCRAPFLY = ScrapflyClient(key="Your ScrapFly API key")

BASE_CONFIG = {
    "asp": True,
    "country": "US",
    "headers": {
        "Accept-Language": "en-US,en;q=0.5"
    }
}


def strip_text(text):
    """remove extra spaces while handling None values"""
    return text.strip() if text is not None else text


def parse_company(response: ScrapeApiResponse) -> Dict:
    """parse company main overview page"""
    selector = response.selector
    script_data = json.loads(selector.xpath("//script[@type='application/ld+json']/text()").get())
    # keep only the fields of interest from the JSON-LD data
    script_data = jmespath.search(
        """{
        name: name,
        url: url,
        mainAddress: address,
        description: description,
        numberOfEmployees: numberOfEmployees.value,
        logo: logo
        }""",
        script_data
    )
    # the "about us" facts are rendered as <dt>/<dd> pairs
    data = {}
    for element in selector.xpath("//div[contains(@data-test-id, 'about-us')]"):
        name = element.xpath(".//dt/text()").get().strip()
        value = element.xpath(".//dd/text()").get().strip()
        data[name] = value
    # every address block except the one with the id "address-0"
    addresses = []
    for element in selector.xpath("//div[contains(@id, 'address') and @id != 'address-0']"):
        address_lines = element.xpath(".//p/text()").getall()
        address = ", ".join(line.replace("\n", "").strip() for line in address_lines)
        addresses.append(address)
    affiliated_pages = []
    for element in selector.xpath("//section[@data-test-id='affiliated-pages']/div/div/ul/li"):
        affiliated_pages.append({
            "name": element.xpath(".//a/div/h3/text()").get().strip(),
            "industry": strip_text(element.xpath(".//a/div/p[1]/text()").get()),
            "address": strip_text(element.xpath(".//a/div/p[2]/text()").get()),
            "linkedinUrl": element.xpath(".//a/@href").get().split("?")[0]
        })
    similar_pages = []
    for element in selector.xpath("//section[@data-test-id='similar-pages']/div/div/ul/li"):
        similar_pages.append({
            "name": element.xpath(".//a/div/h3/text()").get().strip(),
            "industry": strip_text(element.xpath(".//a/div/p[1]/text()").get()),
            "address": strip_text(element.xpath(".//a/div/p[2]/text()").get()),
            "linkedinUrl": element.xpath(".//a/@href").get().split("?")[0]
        })
    # merge the script data with the HTML-parsed fields
    data = {**script_data, **data}
    data["addresses"] = addresses
    data["affiliatedPages"] = affiliated_pages
    data["similarPages"] = similar_pages
    return data


async def scrape_company(urls: List[str]) -> List[Dict]:
    """scrape public linkedin company pages"""
    to_scrape = [ScrapeConfig(url, **BASE_CONFIG) for url in urls]
    data = []
    async for response in SCRAPFLY.concurrent_scrape(to_scrape):
        data.append(parse_company(response))
    log.success(f"scraped {len(data)} companies from Linkedin")
    return data
```

Run the code

```python
async def run():
    company_data = await scrape_company(
        urls=[
            "https://linkedin.com/company/microsoft"
        ]
    )
    # save the data to a JSON file
    with open("company.json", "w", encoding="utf-8") as file:
        json.dump(company_data, file, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    asyncio.run(run())
```

In the above LinkedIn scraping code, we define two functions. Let's break them down:

- parse_company(): Parses the company data from script tags, using JMESPath to refine it, and parses the other HTML elements using XPath selectors.

- scrape_company(): Requests the company page URLs and applies the parsing logic.

Here's a sample output of the extracted company information:

For the full retrieved JSON output, you can view the example dataset on ScrapFly's LinkedIn scraper.

The above data represents the "about" section of the company pages. Next, we'll scrape data from the dedicated section for company jobs.

Scraping Company Jobs

The company jobs are found in a dedicated section of the main page, under the /jobs path of the primary LinkedIn URL for a company:

The page data here is loaded dynamically on mouse scroll. We could use a real headless browser to emulate the scroll action, but this approach isn't practical, as the job pages can include thousands of results!

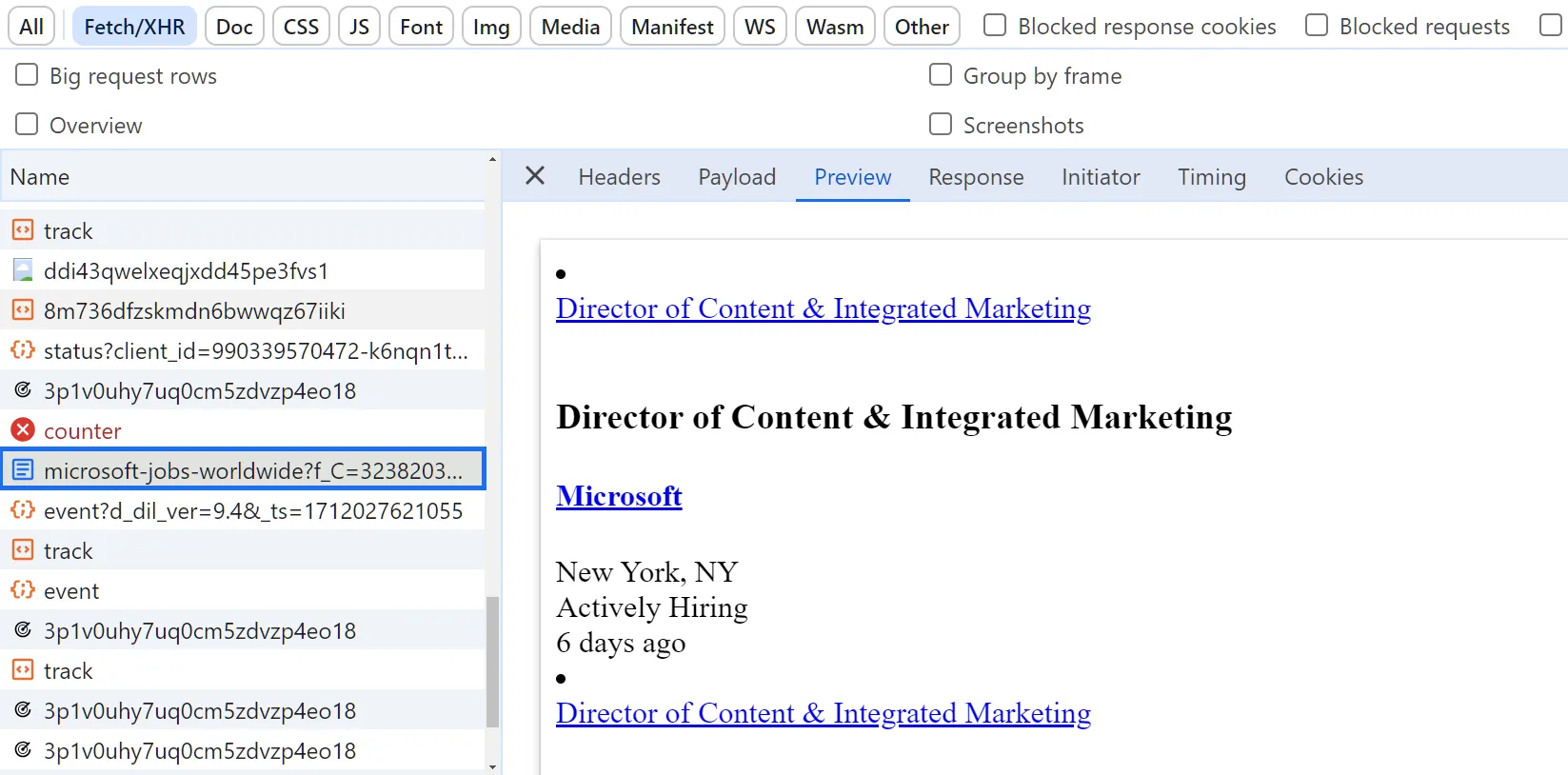

Instead, we'll utilize a more efficient data extraction approach: scraping hidden APIs!

When the user scrolls toward the end of the list, the website sends an API request to retrieve the next page of data as HTML. We'll replicate this mechanism in our scraper.

First, to find this hidden API, we can use our web browser:

- Open the browser developer tools.

- Select the network tab and filter by Fetch/XHR requests.

- Scroll down the page to activate the API.

The API requests should be captured as the page is being scrolled:

We can see that the results are paginated using the start URL query parameter:

https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/microsoft-jobs-worldwide?start=75
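The pagination arithmetic behind the `start` parameter can be sketched on its own. We assume 25 results per page, which is the page size used by the scraper code in this section; the helper names are ours:

```python
from typing import List, Optional

PAGE_SIZE = 25  # assumed results per page


def page_offsets(
    total_results: int,
    max_pages: Optional[int] = None,
    page_size: int = PAGE_SIZE,
) -> List[int]:
    """Offsets for the pages after the first one (the first page is offset 0)."""
    if max_pages is not None:
        # cap the result count so at most `max_pages` pages are requested in total
        total_results = min(total_results, max_pages * page_size)
    return list(range(page_size, total_results, page_size))


def page_urls(api_url: str, offsets: List[int]) -> List[str]:
    """Attach each offset to the API URL as the `start` query parameter."""
    return [f"{api_url}?start={offset}" for offset in offsets]


api = "https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/microsoft-jobs-worldwide"
urls = page_urls(api, page_offsets(total_results=80))
```

With 80 results, the first page covers results 0 to 24 and the remaining offsets are 25, 50, and 75. Note that the offset is attached with `?` because this URL has no other query parameters; a URL that already has some would need `&` instead.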

To scrape LinkedIn company jobs, we'll request the first job page to get the maximum results available and then use the above API endpoint for pagination:

```python
import asyncio
import json
from typing import Dict, List, Optional

from loguru import logger as log
from scrapfly import ScrapeApiResponse, ScrapeConfig, ScrapflyClient

SCRAPFLY = ScrapflyClient(key="Your ScrapFly API key")

BASE_CONFIG = {
    "asp": True,
    "country": "US",
    "headers": {
        "Accept-Language": "en-US,en;q=0.5"
    }
}


def strip_text(text):
    """remove extra spaces while handling None values"""
    return text.strip() if text is not None else text


def parse_jobs(response: ScrapeApiResponse) -> Dict:
    """parse job data from Linkedin company pages"""
    selector = response.selector
    # the counter is rendered as text such as "1,000+"
    total_results = selector.xpath("//span[contains(@class, 'job-count')]/text()").get()
    total_results = int(total_results.replace(",", "").replace("+", "")) if total_results else None
    data = []
    for element in selector.xpath("//section[contains(@class, 'results-list')]/ul/li"):
        data.append({
            "title": element.xpath(".//div/a/span/text()").get().strip(),
            "company": element.xpath(".//div/div[contains(@class, 'info')]/h4/a/text()").get().strip(),
            "address": element.xpath(".//div/div[contains(@class, 'info')]/div/span/text()").get().strip(),
            "timeAdded": element.xpath(".//div/div[contains(@class, 'info')]/div/time/@datetime").get(),
            "jobUrl": element.xpath(".//div/a/@href").get().split("?")[0],
            "companyUrl": element.xpath(".//div/div[contains(@class, 'info')]/h4/a/@href").get().split("?")[0],
            "salary": strip_text(element.xpath(".//span[contains(@class, 'salary')]/text()").get())
        })
    return {"data": data, "total_results": total_results}


async def scrape_jobs(url: str, max_pages: Optional[int] = None) -> List[Dict]:
    """scrape Linkedin company job pages"""
    first_page = await SCRAPFLY.async_scrape(ScrapeConfig(url, **BASE_CONFIG))
    first_page_data = parse_jobs(first_page)  # parse once and reuse both fields
    data = first_page_data["data"]
    # fall back to the first page only when the job counter is missing
    total_results = first_page_data["total_results"] or len(data)

    # cap the number of results to scrape, each page contains 25 results
    if max_pages and max_pages * 25 < total_results:
        total_results = max_pages * 25

    # offsets of the remaining pages; the first page already covers results 0-24
    offsets = range(25, total_results, 25)
    log.info(f"scraped the first job page, {len(offsets)} more pages")

    # scrape the remaining pages using the API
    search_keyword = url.split("jobs/")[-1]
    jobs_api_url = "https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/" + search_keyword
    to_scrape = [
        # the API URL has no other query parameters, so `start` is attached with "?"
        ScrapeConfig(jobs_api_url + f"?start={index}", **BASE_CONFIG)
        for index in offsets
    ]
    async for response in SCRAPFLY.concurrent_scrape(to_scrape):
        page_data = parse_jobs(response)["data"]
        data.extend(page_data)

    log.success(f"scraped {len(data)} jobs from Linkedin company job pages")
    return data
```

Run the code

```python
async def run():
    job_search_data = await scrape_jobs(
        url="https://www.linkedin.com/jobs/microsoft-jobs-worldwide",
        max_pages=3
    )
    # save the data to a JSON file
    with open("company_jobs.json", "w", encoding="utf-8") as file:
        json.dump(job_search_data, file, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    asyncio.run(run())
```

Let's break down the above LinkedIn scraper code:

- parse_jobs(): Parses the jobs data from the HTML using XPath selectors.

- scrape_jobs(): Handles the main scraping tasks. It requests the company jobs page URL and the hidden jobs API for pagination.

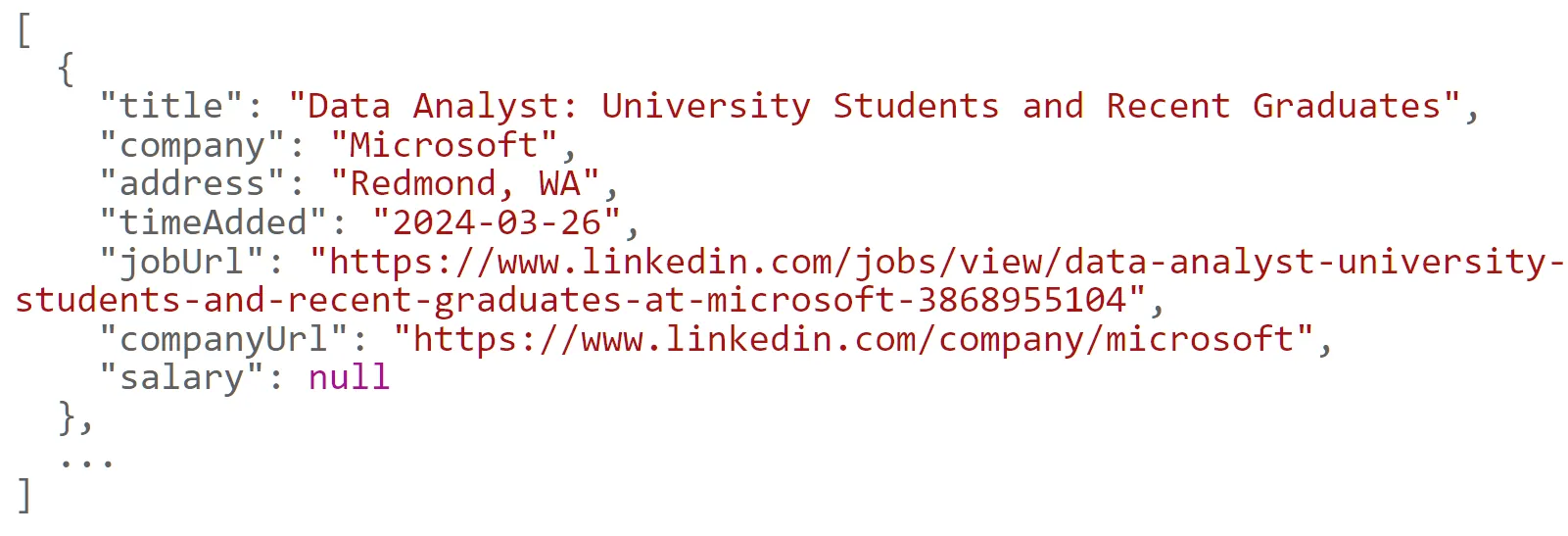

Here's an example output of the data extracted by the above LinkedIn scraper:

Next, as we have covered the parsing logic for job listing pages, let's apply it to another section of LinkedIn - job search pages.

How to Scrape LinkedIn Job Search Pages?

LinkedIn has a robust job search system that includes millions of job listings across industries around the globe. The job listings on these search pages have the same HTML structure as the ones listed on the company profile page. Hence, we'll utilize almost the same scraping logic as in the previous section.

To define the URL for job search pages on LinkedIn, we have to add search keywords and location parameters, like the following:

https://www.linkedin.com/jobs/search?keywords=python+developer&location=United+States

The above URL uses basic search filters. However, it accepts further parameters to narrow down the search, such as date, experience level, or city.
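Building these URLs by hand is error-prone, so here is a short sketch that uses the standard library's `urlencode()`. The function name `job_search_url` is ours. The second half shows a common pitfall: encoding a value before passing it to `urlencode()` escapes it twice, turning the `+` that stands for a space into a literal `%2B`:

```python
from urllib.parse import quote_plus, urlencode


def job_search_url(keyword: str, location: str) -> str:
    """Build a job search URL; urlencode() escapes each value exactly once."""
    params = {"keywords": keyword, "location": location}
    return "https://www.linkedin.com/jobs/search?" + urlencode(params)


url = job_search_url("python developer", "United States")

# pitfall: pre-encoding the value and then calling urlencode() encodes it twice
double_encoded = urlencode({"keywords": quote_plus("python developer")})
```

Here `url` ends with `keywords=python+developer&location=United+States`, while `double_encoded` is `keywords=python%2Bdeveloper`, which a server decodes as the literal text `python+developer` rather than two words.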

We'll request the first page URL to retrieve the total number of results and paginate the remaining pages using the jobs hidden API:

```python
import asyncio
import json
from typing import Dict, List, Optional
from urllib.parse import urlencode

from loguru import logger as log
from scrapfly import ScrapeApiResponse, ScrapeConfig, ScrapflyClient

SCRAPFLY = ScrapflyClient(key="Your ScrapFly API key")

BASE_CONFIG = {
    "asp": True,
    "country": "US",
    "headers": {
        "Accept-Language": "en-US,en;q=0.5"
    }
}


def strip_text(text):
    """remove extra spaces while handling None values"""
    return text.strip() if text is not None else text


def parse_job_search(response: ScrapeApiResponse) -> Dict:
    """parse job data from job search pages"""
    selector = response.selector
    # the counter is rendered as text such as "1,000+"
    total_results = selector.xpath("//span[contains(@class, 'job-count')]/text()").get()
    total_results = int(total_results.replace(",", "").replace("+", "")) if total_results else None
    data = []
    for element in selector.xpath("//section[contains(@class, 'results-list')]/ul/li"):
        data.append({
            "title": element.xpath(".//div/a/span/text()").get().strip(),
            "company": element.xpath(".//div/div[contains(@class, 'info')]/h4/a/text()").get().strip(),
            "address": element.xpath(".//div/div[contains(@class, 'info')]/div/span/text()").get().strip(),
            "timeAdded": element.xpath(".//div/div[contains(@class, 'info')]/div/time/@datetime").get(),
            "jobUrl": element.xpath(".//div/a/@href").get().split("?")[0],
            "companyUrl": element.xpath(".//div/div[contains(@class, 'info')]/h4/a/@href").get().split("?")[0],
            "salary": strip_text(element.xpath(".//span[contains(@class, 'salary')]/text()").get())
        })
    return {"data": data, "total_results": total_results}


async def scrape_job_search(keyword: str, location: str, max_pages: Optional[int] = None) -> List[Dict]:
    """scrape Linkedin job search"""

    def form_urls_params(keyword, location):
        """form the job search URL params"""
        params = {
            # urlencode() escapes the values, so they are passed in raw
            "keywords": keyword,
            "location": location,
        }
        return urlencode(params)

    first_page_url = "https://www.linkedin.com/jobs/search?" + form_urls_params(keyword, location)
    first_page = await SCRAPFLY.async_scrape(ScrapeConfig(first_page_url, **BASE_CONFIG))
    first_page_data = parse_job_search(first_page)  # parse once and reuse both fields
    data = first_page_data["data"]
    # fall back to the first page only when the job counter is missing
    total_results = first_page_data["total_results"] or len(data)

    # cap the number of results to scrape, each page contains 25 results
    if max_pages and max_pages * 25 < total_results:
        total_results = max_pages * 25

    # offsets of the remaining pages; the first page already covers results 0-24
    offsets = range(25, total_results, 25)
    log.info(f"scraped the first job page, {len(offsets)} more pages")

    # scrape the remaining pages concurrently
    other_pages_url = "https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/search?"
    to_scrape = [
        ScrapeConfig(other_pages_url + form_urls_params(keyword, location) + f"&start={index}", **BASE_CONFIG)
        for index in offsets
    ]
    async for response in SCRAPFLY.concurrent_scrape(to_scrape):
        page_data = parse_job_search(response)["data"]
        data.extend(page_data)

    log.success(f"scraped {len(data)} jobs from Linkedin job search")
    return data
```

Run the code

```python
async def run():
    job_search_data = await scrape_job_search(
        keyword="Python Developer",
        location="United States",
        max_pages=3
    )
    # save the data to a JSON file
    with open("job_search.json", "w", encoding="utf-8") as file:
        json.dump(job_search_data, file, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    asyncio.run(run())
```

Here, we start the scraping process by building the job search URL from the search query and location. Then, we request and parse the pages the same way as in the previous section.

Here's an example output of the above code for scraping LinkedIn job search:

We can successfully scrape the job listings. However, the returned data doesn't contain the full job details. Let's scrape them from their dedicated pages!

How to Scrape LinkedIn Job Pages?

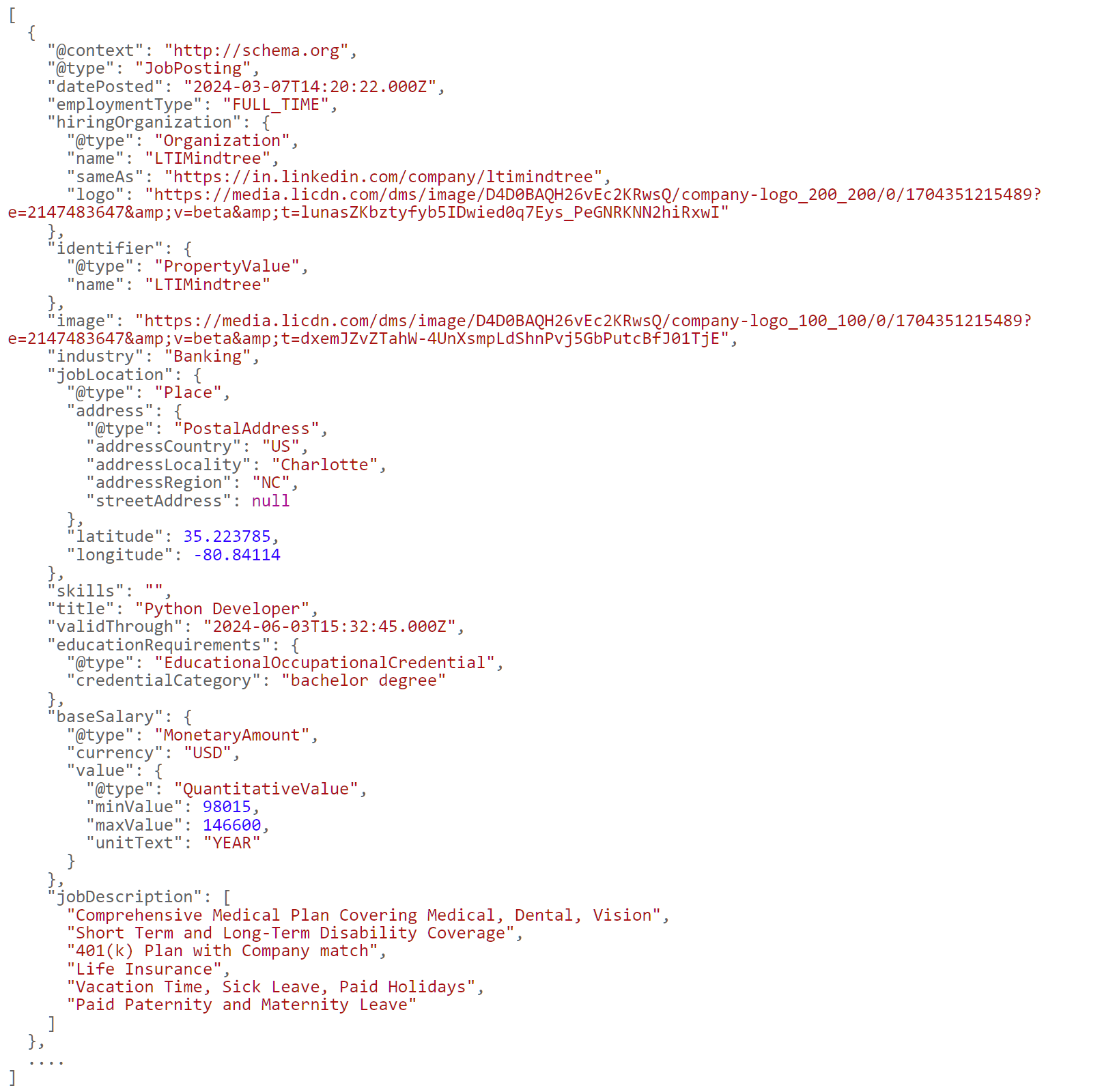

To scrape LinkedIn job pages, we'll utilize the hidden web data approach once again.

To start, search for the selector //script[@type='application/ld+json'], and you will find results similar to the below:

If we take a closer look at the description field, we'll find the job description encoded in HTML. Therefore, we'll extract the script tag hidden data and parse the description field to get the full job details:

```python
import asyncio
import json
from typing import Dict, List

from loguru import logger as log
from scrapfly import ScrapeApiResponse, ScrapeConfig, ScrapflyClient

SCRAPFLY = ScrapflyClient(key="Your ScrapFly API key")

BASE_CONFIG = {
    "asp": True,
    "country": "US",
    "headers": {
        "Accept-Language": "en-US,en;q=0.5"
    }
}


def parse_job_page(response: ScrapeApiResponse) -> Dict:
    """parse individual job data from Linkedin job pages"""
    selector = response.selector
    script_data = json.loads(selector.xpath("//script[@type='application/ld+json']/text()").get())
    # collect the description bullet points from the rendered HTML
    description = []
    for element in selector.xpath("//div[contains(@class, 'show-more')]/ul/li/text()").getall():
        text = element.replace("\n", "").strip()
        if len(text) != 0:
            description.append(text)
    script_data["jobDescription"] = description
    # remove the key with the encoded HTML, if present
    script_data.pop("description", None)
    return script_data


async def scrape_jobs(urls: List[str]) -> List[Dict]:
    """scrape Linkedin job pages"""
    to_scrape = [ScrapeConfig(url, **BASE_CONFIG) for url in urls]
    data = []
    # scrape the URLs concurrently
    async for response in SCRAPFLY.concurrent_scrape(to_scrape):
        data.append(parse_job_page(response))
    log.success(f"scraped {len(data)} jobs from Linkedin")
    return data
```

Run the code

```python
async def run():
    job_data = await scrape_jobs(
        urls=[
            "https://in.linkedin.com/jobs/view/data-center-engineering-operations-engineer-hyd-infinity-dceo-at-amazon-web-services-aws-4017265505",
            "https://www.linkedin.com/jobs/view/content-strategist-google-cloud-content-strategy-and-experience-at-google-4015776107",
            "https://www.linkedin.com/jobs/view/sr-content-marketing-manager-brand-protection-brand-protection-at-amazon-4007942181"
        ]
    )
    # save the data to a JSON file
    with open("jobs.json", "w", encoding="utf-8") as file:
        json.dump(job_data, file, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    asyncio.run(run())
```

Similar to our previous LinkedIn scraping logic, we add the job page URLs to a scraping list and request them concurrently. Then, we use the parse_job_page() function to parse the job data from the hidden script tag, including the HTML inside the description field.
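As an alternative to reading the description from the rendered page, the encoded description field itself can be decoded. The sketch below assumes the field holds entity-escaped HTML (for example `&lt;p&gt;`); the sample string and helper names are made up for illustration:

```python
import html
from html.parser import HTMLParser
from typing import List


class _TextCollector(HTMLParser):
    """Collect the non-empty text chunks found between HTML tags."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: List[str] = []

    def handle_data(self, data: str) -> None:
        text = data.strip()
        if text:
            self.chunks.append(text)


def description_to_lines(encoded: str) -> List[str]:
    """Unescape an entity-encoded HTML description and return its text lines."""
    parser = _TextCollector()
    parser.feed(html.unescape(encoded))
    parser.close()
    return parser.chunks


# made-up sample value of the description field
sample = (
    "&lt;p&gt;About the role&lt;/p&gt;"
    "&lt;ul&gt;&lt;li&gt;Build scrapers&lt;/li&gt;&lt;li&gt;Write tests&lt;/li&gt;&lt;/ul&gt;"
)
lines = description_to_lines(sample)
```

This variant keeps paragraph text as well as list items, whereas the XPath in `parse_job_page()` only collects `<li>` entries.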

The script saves the results to jobs.json as a list of objects, one per job page, each containing the JSON-LD fields plus the cleaned jobDescription list.

The job page scraping code can be extended with further LinkedIn crawling logic, so that job pages are scraped as soon as their URLs are collected from the job search pages.

For more details, take a look at our dedicated tutorial on web crawling with Python.
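The hand-off from job search results to the job page scraper can be sketched as follows. This is a minimal illustration, not part of the original scraper: normalize_job_urls() is a hypothetical helper, and it assumes that job links collected from search pages may carry tracking query parameters and duplicates that are worth removing before scraping.

```python
from typing import Iterable, List
from urllib.parse import urlsplit, urlunsplit


def normalize_job_urls(urls: Iterable[str]) -> List[str]:
    """Strip query strings and fragments, drop duplicates, keep the original order."""
    seen = set()
    unique = []
    for url in urls:
        parts = urlsplit(url.strip())
        host = parts.netloc.lower()
        # keep only LinkedIn job pages of the form /jobs/view/<slug>
        is_linkedin = host == "linkedin.com" or host.endswith(".linkedin.com")
        if not is_linkedin or not parts.path.startswith("/jobs/view/"):
            continue
        clean = urlunsplit((parts.scheme, parts.netloc, parts.path.rstrip("/"), "", ""))
        if clean not in seen:
            seen.add(clean)
            unique.append(clean)
    return unique
```

The cleaned list can then be passed to scrape_jobs(urls=...) from the code above, so each job page is requested only once.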

With this last feature, our LinkedIn scrapers are complete. They can successfully scrape LinkedIn profile, company, and job data. However, attempts to increase the scraping rate will lead the website to detect and block the scraper. Hence, make sure to rotate high-quality residential proxies.
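With the ScrapFly SDK used in this guide, the proxy pool is selected per request, so switching to residential proxies can be a one-line change to the shared configuration. The sketch below assumes the "proxy_pool" option and the "public_residential_pool" value as described in ScrapFly's documentation; confirm both against the current docs and your plan before relying on them. RESIDENTIAL_CONFIG is a name introduced here for illustration.

```python
BASE_CONFIG = {
    "asp": True,
    "country": "US",
    "headers": {"Accept-Language": "en-US,en;q=0.5"},
}

# Assumption: "proxy_pool" and "public_residential_pool" follow ScrapFly's
# documented request options; verify them before using this in production.
RESIDENTIAL_CONFIG = {**BASE_CONFIG, "proxy_pool": "public_residential_pool"}

# Usage with the scraper above (not executed here):
# to_scrape = [ScrapeConfig(url, **RESIDENTIAL_CONFIG) for url in urls]
```

Building a new dictionary instead of mutating BASE_CONFIG keeps the default settings available for requests that don't need residential IPs.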

FAQ

Below are quick answers to common questions about how to scrape LinkedIn.

How long before LinkedIn detects and bans scraping attempts?

LinkedIn continuously monitors user behavior, including request timing, scrolling activity, TLS fingerprints, and IP reputation. Its detection models are trained on millions of real user sessions, so avoiding detection requires mimicking human-like interaction, not just rotating proxies.

Can I scrape LinkedIn without an account?

You can scrape limited public data such as basic profiles and company overviews, but access usually stops after a few page views. Job listings and search results require authentication, while most company data is only partially visible. In short, unauthenticated scraping is very limited.

Why do LinkedIn accounts get banned?

LinkedIn bans accounts that show automated or unnatural behavior, such as viewing dozens of profiles in a few minutes, sending uniform requests, using datacenter IPs, or sharing sessions across devices. Once flagged, bans are permanent and cannot be appealed.

Is scraping LinkedIn legal?

The hiQ Labs v. LinkedIn litigation (2017–2022) included a Ninth Circuit ruling that scraping publicly accessible data likely does not violate the U.S. Computer Fraud and Abuse Act. However, LinkedIn’s Terms of Service prohibit automated access. While scraping public data carries lower legal risk than scraping data behind a login, it can still lead to IP or account bans. Always seek legal advice for commercial projects.

How much does ScrapFly cost for LinkedIn scraping?

Pricing depends on your request volume. As a general estimate:

1,000 profiles usually cost between $15 and $30.

10,000 job listings cost around $80 to $150.

You can check ScrapFly’s pricing page for the most up-to-date rates. Most users find it far more cost-effective than maintaining their own proxy or account infrastructure.

How often does LinkedIn change its structure?

LinkedIn’s internal Voyager API and frontend code change frequently. Voyager endpoints update every four to eight weeks, while frontend elements change every two to four weeks. JSON-LD structures usually remain stable for several months. ScrapFly’s monitoring system detects these changes and updates its LinkedIn scrapers within 48 hours.
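If you maintain your own parser, one way to notice such changes early is to check every parsed record for the fields you depend on. The following is a minimal sketch: the field names in REQUIRED_FIELDS are assumptions for illustration rather than a documented LinkedIn schema, and both helper functions are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

# Assumed field names for illustration only; adjust to the keys your parser emits.
REQUIRED_FIELDS = ("title", "datePosted", "jobDescription")


def missing_fields(record: Dict, required: Iterable[str] = REQUIRED_FIELDS) -> List[str]:
    """Return the required keys that are absent or empty in a parsed record."""
    return [key for key in required if not record.get(key)]


def split_valid(records: List[Dict]) -> Tuple[List[Dict], List[Dict]]:
    """Separate records that pass the check from those that look broken."""
    valid, broken = [], []
    for record in records:
        (broken if missing_fields(record) else valid).append(record)
    return valid, broken
```

A sudden rise in broken records after a run is a hint that a selector or JSON-LD key has changed and the parser needs updating.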

Why can't I just use Puppeteer/Playwright for LinkedIn?

While Puppeteer and Playwright can render LinkedIn pages, they lack the full anti-detection setup required. You would still need to handle residential proxy rotation, account authentication, session persistence, human-like browsing patterns, and browser fingerprint rotation. ScrapFly manages all of this automatically, making scraping much more reliable.

Can I scrape LinkedIn Sales Navigator?

Sales Navigator is a paid product that requires a logged-in LinkedIn account. Scraping it violates LinkedIn’s Terms of Service, so it’s not recommended.

Can I scrape LinkedIn company employees?

Employee listings are only visible to logged-in users. Scraping this data would require authentication, which breaches LinkedIn’s Terms of Service. However, public company information can still be accessed safely using ScrapFly.

Summary

LinkedIn protects professional data through multi-layered blocking: authentication walls, behavioral tracking, and request fingerprinting. DIY scraping requires managing session persistence, residential proxy rotation, TLS fingerprint randomization, and behavior emulation, which adds up to a very time-consuming maintenance burden.

ScrapFly eliminates this complexity:

- Automated anti-bot bypass: Handles fingerprinting, behavioral tracking, and fraud score evasion

- Maintained scrapers: Updated within 48 hours when LinkedIn changes structure

- Production-ready code: Extract profiles, companies, jobs, and search results without managing infrastructure

- Residential proxy pools: Rotate IPs automatically to prevent detection

When LinkedIn updates its defenses, ScrapFly updates the scraper. You focus on data, not anti-bot engineering.

Ready to start?

git clone https://github.com/scrapfly/scrapfly-scrapers.git

cd scrapfly-scrapers/linkedin-scraper

Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect, and here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose entire public datasets, which can be illegal in some countries.