LangChain takes web scraping beyond just pulling data from websites. Instead of getting raw HTML, you get smart systems that understand context and can make decisions about what information matters. When you combine LangChain's agent framework with Scrapfly's reliable scraping tools, you can create AI systems that automatically find, extract, and analyze web content at any scale.

We'll explore how to integrate LangChain with web scraping to create smart agents, build retrieval-augmented generation (RAG) applications, and automate complex data workflows. For background, see our article on crawling with Python.

Key Takeaways

Learn how to combine LangChain with Scrapfly to create AI agents and RAG applications that can automatically gather and understand web data.

- Integrate ScrapflyLoader with LangChain to scrape web pages as markdown or text documents for LLM consumption

- Build LangChain agents with web scraping tools that can autonomously discover and extract data from websites

- Create RAG applications using ScrapflyLoader, vector stores, and retrieval chains for smart AI systems

- Handle anti-bot protection, JavaScript rendering, and proxy rotation automatically through Scrapfly's API

- Implement custom tools and chains for domain-specific web scraping workflows with error handling and retry logic

- Scale web scraping operations using Scrapfly's Crawler API for complete domain-wide data collection

What is LangChain?

LangChain is an open-source framework designed to simplify building applications powered by large language models (LLMs). Think of it as a toolkit that helps developers connect LLMs to external data sources, tools, and systems. LangChain provides helpful tools for common patterns like prompt management, memory handling, and tool integration, making it easier to build smart AI applications.

For web scraping, LangChain offers several key components. Document loaders parse web content. Agents can use scraping tools to autonomously gather information. Chains combine multiple operations into reusable workflows. Together, these components let you build AI systems that smartly extract and process web data.

LangChain supports dozens of LLM providers, from cloud services like OpenAI and Anthropic to local models via Ollama. This flexibility means you can build web scraping applications that work with any LLM, whether you need the power of GPT-4 or the privacy of a local model. See also our comparison of LangChain alternatives.

Why Use LangChain for Web Scraping?

Traditional web scraping usually means pulling raw HTML or data from sites. LangChain goes beyond that by adding smarts and context, so you can build systems that don't just grab data but actually understand and work with it meaningfully.

Benefits of using LangChain for web scraping include:

- Intelligent Data Extraction: Go beyond pulling HTML and build agents that understand the context of what they're scraping and can make dynamic decisions about what data to extract next.

- Autonomous Navigation: LangChain agents can navigate websites on their own, decide which pages to visit, and extract information based on natural language instructions. This is especially useful for complex, interactive, or ever-changing sites.

- Seamless RAG Integration: Easily turn scraped content (like documentation, product catalogs, or articles) into searchable knowledge bases for Retrieval Augmented Generation (RAG) applications, enabling conversational AI that answers questions using fresh web content.

- Automatic Chunking and Embedding: The framework provides text splitters, embedding integrations, and retrievers, so document chunking, vector embedding, and retrieval take only a few lines of code.

With LangChain, web scraping becomes the starting point for complete data workflows and smart automation, taking your projects way beyond basic data collection.

What Data Can You Scrape with LangChain?

When you pair LangChain with Scrapfly, you can pull almost any web content and format it for language models. It handles both structured data (like tables) and unstructured content (like articles), plus sites that rely heavily on JavaScript.

Some typical use cases for LangChain web scraping include:

- E-commerce analysis: Scraping product catalogs, prices, and reviews for tracking competitors or trends

- Building knowledge bases: Extracting technical documentation, help articles, or FAQs for enterprise search or RAG applications

- Content aggregation: Gathering news stories, blog posts, and editorial articles for downstream analysis or aggregation

- Social media or forum monitoring: Collecting posts, comments, and engagement data for sentiment analysis or trend detection

- Real estate and classifieds: Pulling listings, agent contact info, and location details for property research

Basically, LangChain lets you turn messy web data into organized, useful information, opening up a lot more possibilities for your scraping projects.

Project Setup

To start using LangChain for web scraping, you'll need a few Python packages. The main LangChain library gives you the core framework, and the other packages add specific features you need.

Install the required packages using pip:

pip install scrapfly-sdk langchain langchain-community

Here's what each package provides:

- langchain: The core framework for building LLM applications

- langchain-community: Community integrations including ScrapflyLoader

- scrapfly-sdk: Scrapfly Python SDK required by ScrapflyLoader

Depending on your use case, you'll also need additional packages, such as langchain-openai for OpenAI models and langchain-chroma for vector stores.

Finally, you'll need API keys. Get your Scrapfly API key from the Scrapfly dashboard and, if you use OpenAI, your OpenAI key from the OpenAI platform. For background, see our guide to Python web scraping fundamentals.

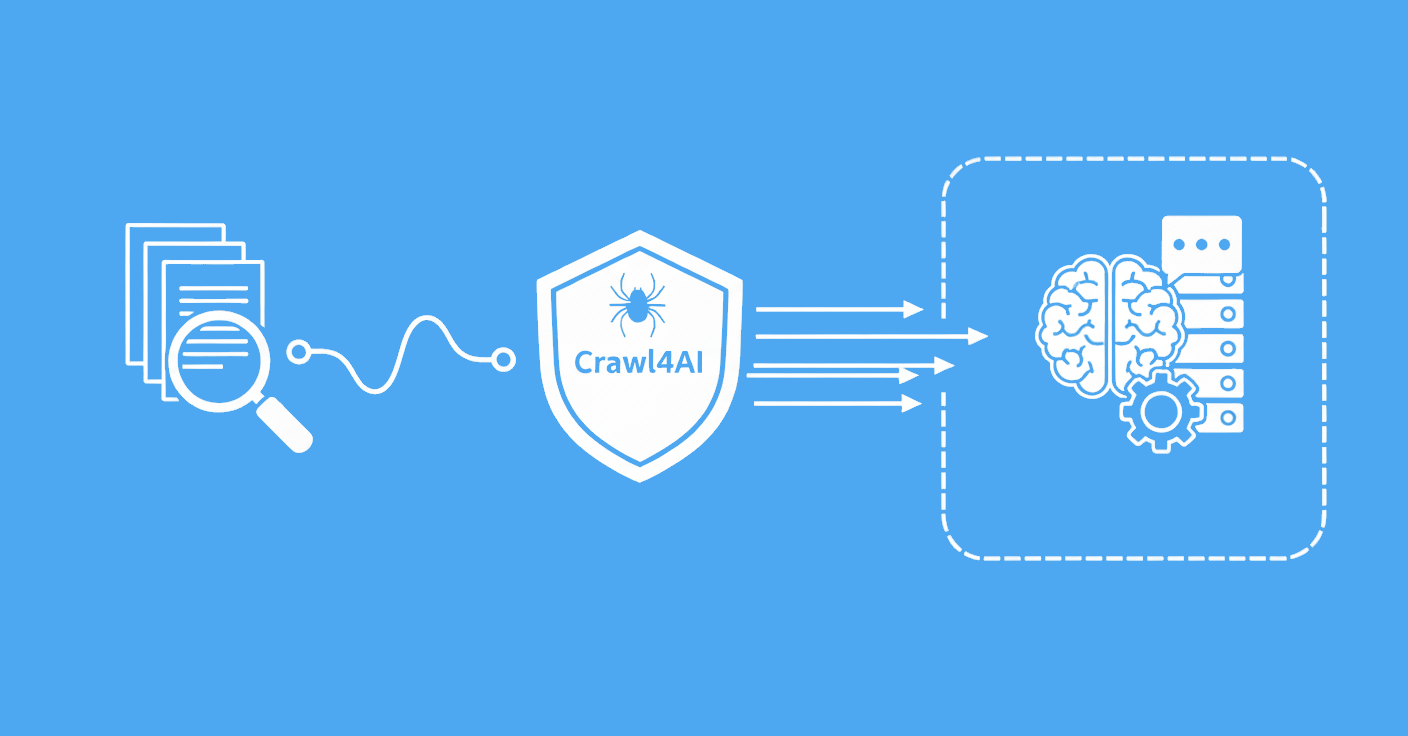

How LangChain Web Scraping Works

The process is pretty simple. ScrapflyLoader grabs web pages and turns them into documents that LangChain can work with. From there, you can use these documents in agents, chains, or RAG applications.

Document Loading with ScrapflyLoader

ScrapflyLoader is basically a bridge between LangChain and Scrapfly's API. It fetches web pages while automatically handling anti-bot measures, JavaScript rendering, and proxy rotation. What you get back are LangChain Document objects with the page content and metadata ready to use.

You configure the loader with a scrape_config dictionary that specifies how Scrapfly should fetch the page. Options include enabling JavaScript rendering, selecting proxy pools, setting geographic locations, and configuring anti-bot bypass settings.

Agent-Based Scraping

You can give LangChain agents web scraping as one of their tools. You create a scraping function that the agent can call whenever it needs web data. The agent figures out on its own when scraping makes sense for the task. This creates independent systems that can find and extract data without you having to spell out every single step.

RAG Pipeline Integration

For RAG applications, you load documents, break them into smaller chunks, create embeddings, and store everything in a vector database. LangChain gives you all the building blocks for this, so it's straightforward to create searchable knowledge bases from your scraped content. For more detail, see our guides on using web scraping for RAG applications and on LLM training and RAG.
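To make the chunking step concrete, here is a minimal pure-Python sketch of fixed-size chunking with overlap. It is a simplified stand-in for illustration, not how LangChain's text splitters are implemented (they also try to split on natural separators such as paragraphs). The function name `chunk_text` and the sample sizes are assumptions made for this example.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into fixed-size character windows that overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
chunks = chunk_text(sample, chunk_size=10, chunk_overlap=4)
for chunk in chunks:
    print(chunk)
```

Each chunk repeats the last four characters of the previous one. The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, which tends to help retrieval quality.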

Basic LangChain Web Scraping Example

Let's start with a simple example that scrapes a web page and loads it as a LangChain document:

from langchain_community.document_loaders import ScrapflyLoader

# Create the loader with basic configuration
loader = ScrapflyLoader(
    ["https://example.com/page"],
    api_key="YOUR_SCRAPFLY_API_KEY",
    continue_on_failure=True,  # Continue if a page fails
)

# Load the documents
documents = loader.load()

# Each document contains page_content and metadata
for doc in documents:
    print(f"Content length: {len(doc.page_content)}")
    # ScrapflyLoader records the page address under the "url" metadata key
    print(f"Source URL: {doc.metadata.get('url')}")

This basic setup loads pages as markdown by default. ScrapflyLoader handles the scraping automatically.

Advanced Configuration

For more control over how pages are scraped, you can pass a scrape_config dictionary:

from langchain_community.document_loaders import ScrapflyLoader

# Configure Scrapfly scraping options
scrapfly_config = {
    "asp": True,  # Enable anti-bot bypass
    "render_js": True,  # Render JavaScript
    "proxy_pool": "public_residential_pool",  # Use residential proxies
    "country": "us",  # Set proxy location
    "auto_scroll": True,  # Auto-scroll for lazy-loaded content
}

# Create the loader with advanced configuration
loader = ScrapflyLoader(
    ["https://example.com/page"],
    api_key="YOUR_SCRAPFLY_API_KEY",
    continue_on_failure=True,  # Continue if a page fails
    scrape_config=scrapfly_config,
    scrape_format="markdown",  # Return as markdown
)

# Load the documents
documents = loader.load()

With advanced configuration, ScrapflyLoader can bypass anti-bot measures, render JavaScript, and use residential proxies for more reliable scraping.
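Options such as JavaScript rendering and residential proxies usually make requests slower or more expensive, so it can be worth enabling them only for the sites that need them. The sketch below assembles a scrape_config from a few high-level choices. The helper name `build_scrape_config` and its `dynamic`/`protected` flags are assumptions for illustration; the dictionary keys are the ones used in the configuration above.

```python
from typing import Optional


def build_scrape_config(
    dynamic: bool = False,
    protected: bool = False,
    country: Optional[str] = None,
) -> dict:
    """Assemble a scrape_config dict from a few high-level choices."""
    config: dict = {}
    if dynamic:
        # JavaScript-heavy pages: render in a browser and scroll for lazy content
        config["render_js"] = True
        config["auto_scroll"] = True
    if protected:
        # Sites with anti-bot measures: enable bypass and residential proxies
        config["asp"] = True
        config["proxy_pool"] = "public_residential_pool"
    if country:
        config["country"] = country.lower()
    return config


static_config = build_scrape_config()
shop_config = build_scrape_config(dynamic=True, protected=True, country="US")
print(static_config)
print(shop_config)
```

The resulting dictionary can be passed to ScrapflyLoader through its scrape_config argument, exactly like the hand-written one.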

Building a LangChain Agent with Web Scraping

LangChain agents can use web scraping as a tool to gather information autonomously (see our practical guide to LLM agents for background). Here's how to create an agent that can scrape websites:

from langchain.agents import AgentExecutor, create_openai_tools_agent
from langchain_openai import ChatOpenAI
from langchain.tools import Tool
from langchain_community.document_loaders import ScrapflyLoader
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder


# Define a web scraping tool
def scrape_website(url: str) -> str:
    """Scrape a website and return its content as markdown."""
    loader = ScrapflyLoader(
        [url],
        api_key="YOUR_SCRAPFLY_API_KEY",
        continue_on_failure=True,
        scrape_config={"asp": True, "render_js": True},
        scrape_format="markdown",
    )
    documents = loader.load()
    if documents:
        return documents[0].page_content
    return "Failed to scrape the website."


# Create the tool
scraping_tool = Tool(
    name="scrape_website",
    description="Scrape a website URL and return its content as markdown. Use this to gather information from web pages.",
    func=scrape_website,
)

# Initialize the LLM
llm = ChatOpenAI(model="gpt-4", temperature=0)

# Create the agent prompt
prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a helpful assistant that can scrape websites to gather information."),
    ("human", "{input}"),
    MessagesPlaceholder(variable_name="agent_scratchpad"),
])

# Create the agent
tools = [scraping_tool]
agent = create_openai_tools_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

# Use the agent
result = agent_executor.invoke({
    "input": "Scrape https://example.com and tell me what the page is about."
})
print(result["output"])

The agent can figure out on its own when websites need scraping. It gets a task, realizes it needs web data, calls your scraping tool, and then works with the results.
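Scrapes can fail for transient reasons, so a tool function often benefits from a retry layer. The following is a minimal sketch of retrying with exponential backoff using only the standard library. The helper `scrape_with_retry`, its parameters, and the `flaky_scrape` stand-in are hypothetical names for this example; in practice the `scrape` callable would wrap your ScrapflyLoader call.

```python
import time
from typing import Callable


def scrape_with_retry(
    scrape: Callable[[str], str],
    url: str,
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call scrape(url), retrying failures with exponential backoff."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            content = scrape(url)
            if content:  # treat empty content as a failed attempt
                return content
            last_error = ValueError("empty response")
        except Exception as exc:  # broad on purpose: no SDK error types are assumed
            last_error = exc
        if attempt < max_attempts:
            sleep(base_delay * 2 ** (attempt - 1))  # waits 1s, 2s, 4s, ...
    # Return a message instead of raising so an agent can read the failure
    return f"Failed to scrape {url} after {max_attempts} attempts: {last_error}"


# Stand-in scraper that fails twice, then succeeds
calls = {"count": 0}


def flaky_scrape(url: str) -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network error")
    return f"# Content of {url}"


result = scrape_with_retry(flaky_scrape, "https://example.com", sleep=lambda s: None)
print(result)
```

To use this with the `scrape_website` function above, pass a variant that returns an empty string when no document is loaded, so that the wrapper can tell a failed attempt from a successful one.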

Building a RAG Application with LangChain and Scrapfly

RAG applications combine web scraping with vector databases to create searchable knowledge bases. Here's a complete example:

import os

from langchain_community.document_loaders import ScrapflyLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_chroma import Chroma
from langchain_openai import OpenAIEmbeddings, ChatOpenAI
from langchain import hub
from langchain_core.runnables import RunnablePassthrough
from langchain_core.output_parsers import StrOutputParser

# Set API keys
os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"
os.environ["SCRAPFLY_API_KEY"] = "YOUR_SCRAPFLY_API_KEY"

# Load documents from web pages
loader = ScrapflyLoader(
    [
        "https://example.com/page1",
        "https://example.com/page2",
        "https://example.com/page3",
    ],
    api_key=os.environ["SCRAPFLY_API_KEY"],
    continue_on_failure=True,
    scrape_config={
        "asp": True,
        "render_js": True,
        "proxy_pool": "public_residential_pool",
    },
    scrape_format="markdown",
)
documents = loader.load()

# Split documents into chunks
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=200,
)
splits = text_splitter.split_documents(documents)

# Create vector store
vectorstore = Chroma.from_documents(
    documents=splits,
    embedding=OpenAIEmbeddings(),
)

# Create retriever
retriever = vectorstore.as_retriever()


# Format documents for the prompt
def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)


# Create the RAG chain
llm = ChatOpenAI(model="gpt-4", temperature=0)
prompt = hub.pull("rlm/rag-prompt")

rag_chain = (
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)

# Query the RAG system
response = rag_chain.invoke("What information is available on these pages?")
print(response)

This shows a full RAG setup. You scrape web pages, break them into chunks, create embeddings, and store them in a vector database. When someone asks a question, the system finds relevant chunks and feeds them to the LLM for an answer.
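To illustrate what "finds relevant chunks" means, here is a toy retrieval sketch in plain Python. It replaces the neural embedding model with a bag-of-words count vector and the vector database with a sorted list, so it is only a conceptual stand-in for what the embeddings and Chroma do in the example above. All function names and the sample chunks are assumptions for this illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (real systems use a neural embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vec, embed(chunk)),
        reverse=True,
    )
    return ranked[:k]


chunks = [
    "Shipping takes three to five business days.",
    "Refunds are issued within 14 days of a return.",
    "Our support team answers email on weekdays.",
]
top = retrieve("How long does shipping take?", chunks, k=1)
print(top[0])
```

A real embedding model would also match "take" with "takes" and capture meaning beyond shared words, which is why production RAG systems rely on learned embeddings rather than word counts.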

Power Up with Scrapfly

Scrapfly takes care of all the infrastructure headaches, so you can focus on building your LangChain app instead of battling anti-bot systems.

Scrapfly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

FAQs

Here are some common questions about using LangChain for web scraping.

How does LangChain compare to other frameworks for web scraping?

LangChain is specifically designed for LLM applications, making it ideal when you need AI-powered data extraction. For simple scraping tasks, traditional libraries like Scrapy or BeautifulSoup might be more appropriate.

Can I use LangChain with local LLMs instead of cloud services?

Yes, LangChain supports local LLMs through frameworks like Ollama. Replace ChatOpenAI with ChatOllama and use local embedding models.
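As a rough sketch, the switch can be isolated in one factory function so the rest of the pipeline stays unchanged. This assumes the langchain-openai and langchain-ollama packages; the function name `build_models` and the Ollama model names are placeholders for whatever models you have pulled locally.

```python
def build_models(provider: str = "openai"):
    """Return a (chat_model, embeddings) pair for the chosen provider."""
    if provider == "openai":
        # Requires: pip install langchain-openai, plus OPENAI_API_KEY
        from langchain_openai import ChatOpenAI, OpenAIEmbeddings

        return ChatOpenAI(model="gpt-4", temperature=0), OpenAIEmbeddings()
    if provider == "ollama":
        # Requires: pip install langchain-ollama, plus a running Ollama server
        from langchain_ollama import ChatOllama, OllamaEmbeddings

        return (
            ChatOllama(model="llama3.1", temperature=0),  # assumed model name
            OllamaEmbeddings(model="nomic-embed-text"),  # assumed model name
        )
    raise ValueError(f"Unknown provider: {provider!r}")
```

Calling `llm, embeddings = build_models("ollama")` would then supply the objects used in place of ChatOpenAI and OpenAIEmbeddings. The imports are kept inside the function so that only the selected provider's package needs to be installed.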

How do I handle rate limiting when scraping many pages?

Scrapfly automatically handles rate limiting through its proxy infrastructure and request spacing. For additional control, implement delays between requests, use Scrapfly's caching feature to avoid re-scraping, and consider using the Crawler API for coordinated crawling.
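For the "delays between requests" part, a small generator is often enough. The sketch below spaces out URLs by a minimum interval using only the standard library. The name `throttled` and its parameters are assumptions for this example, and the interval is kept tiny so the demo runs quickly.

```python
import time
from typing import Callable, Iterable, Iterator


def throttled(
    urls: Iterable[str],
    min_interval: float,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[str]:
    """Yield URLs no faster than one per min_interval seconds."""
    last = None
    for url in urls:
        if last is not None:
            wait = min_interval - (clock() - last)
            if wait > 0:
                sleep(wait)  # pause until the interval has elapsed
        last = clock()
        yield url


urls = [
    "https://example.com/page1",
    "https://example.com/page2",
    "https://example.com/page3",
]
visited = []
for url in throttled(urls, min_interval=0.05):
    visited.append(url)  # a real loop would scrape the URL here
print(f"Visited {len(visited)} URLs")
```

Because the time spent scraping counts toward the interval, slow requests are not delayed any further; only fast ones are held back.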

What's the difference between ScrapflyLoader and other LangChain document loaders?

ScrapflyLoader is specifically designed for production web scraping with built-in anti-bot bypass, JavaScript rendering, and proxy management. Other loaders like WebBaseLoader are simpler but don't handle blocking or complex JavaScript sites as effectively.

Can I scrape authenticated or private pages with LangChain?

Yes, you can pass authentication cookies or headers through ScrapflyLoader's scrape_config. Use the headers parameter for authentication tokens or the cookies parameter for session cookies.
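A minimal sketch of such a configuration is shown below. The helper name `build_auth_config`, the environment variable names, and the `sessionid` cookie name are hypothetical; use whatever your target site expects, and check the Scrapfly documentation for how headers and cookies are applied.

```python
import os
from typing import Optional


def build_auth_config(
    token: Optional[str] = None,
    session_id: Optional[str] = None,
) -> dict:
    """Build the authentication part of a scrape_config dict."""
    config: dict = {}
    if token:
        # Token-based APIs usually expect an Authorization header
        config["headers"] = {"Authorization": f"Bearer {token}"}
    if session_id:
        # Browser-style logins usually rely on a session cookie
        config["cookies"] = {"sessionid": session_id}
    return config


# Read secrets from the environment rather than hard-coding them
auth_config = build_auth_config(
    token=os.environ.get("EXAMPLE_API_TOKEN"),
    session_id=os.environ.get("EXAMPLE_SESSION_ID"),
)
# Pass it to the loader as:
# ScrapflyLoader([...], api_key=..., scrape_config=auth_config)
```

Only scrape authenticated pages you are authorized to access, and keep tokens and cookies out of source control.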

How do I extract structured data from scraped pages?

ScrapflyLoader returns markdown or text. For structured extraction, use LangChain's output parsers with LLMs to convert the content into JSON or other formats. Alternatively, combine ScrapflyLoader with traditional parsing libraries for hybrid approaches.
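The parsing half of that approach can be sketched with the standard library alone. In a LangChain pipeline an output parser (for example JsonOutputParser or PydanticOutputParser from langchain_core.output_parsers) would usually fill this role; the `Product` fields, the `parse_product` helper, and the sample reply below are assumptions made for illustration.

```python
import json
import re
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    price: float
    in_stock: bool


def parse_product(llm_reply: str) -> Product:
    """Parse an LLM reply that should contain one JSON object describing a product."""
    # Models often add text around the JSON, so grab the outermost {...} span
    match = re.search(r"\{.*\}", llm_reply, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in reply")
    data = json.loads(match.group(0))
    # Coerce types explicitly so malformed replies fail loudly
    return Product(
        name=str(data["name"]),
        price=float(data["price"]),
        in_stock=bool(data["in_stock"]),
    )


# Example reply, as an LLM might return it when asked for JSON
reply = 'Here is the data: {"name": "Blue Mug", "price": "12.50", "in_stock": true}'
product = parse_product(reply)
print(product)
```

Validating the parsed fields before storing them guards against replies that are valid JSON but miss a key or use the wrong type.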

Summary

LangChain turns web scraping into smart data extraction by pairing LLM power with solid scraping tools. Here's what we've covered in this guide:

- How to use ScrapflyLoader to fetch web pages as LangChain documents

- Building independent agents that can scrape websites based on natural language instructions

- Creating RAG applications that turn scraped content into searchable knowledge bases

- Configuring Scrapfly for different scraping scenarios including JavaScript rendering and international sites

- Implementing error handling and retry logic for production applications

- Scaling scraping operations with Scrapfly's Crawler API

When you combine LangChain's adaptable approach with Scrapfly's reliable scraping, you can build smart AI systems that understand and process web data at any scale.

Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect. Here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose entire public datasets, which can be illegal in some countries.