When it comes to identifying web scrapers, javascript is by far the most powerful tool because it allows arbitrary code execution on the client machine. This code has access to an enormous number of unique data points that can be used to build a client fingerprint or even instantly identify browsers controlled by web scrapers.

In this article, we'll take a look at how javascript is used to identify web scrapers through fingerprinting. We'll cover common fingerprinting techniques and fingerprint leaks caused by headless browser usage. We'll also take a look at how to prevent and patch these leaks in web scrapers that use browser automation toolkits like Selenium, Playwright or Puppeteer.

Key Takeaways

Avoid JavaScript fingerprinting blocks by patching automation leaks, randomizing non-critical fingerprint elements, and using browser automation tools that provide genuine JavaScript environments.

- Patch automation indicators including navigator.webdriver, headless detection, and uncommon OS patterns

- Implement fingerprint randomization for screen resolutions, timezones, and other non-critical elements

- Use browser automation tools like Selenium, Playwright, and Puppeteer for authentic JavaScript environments

- Understand robot fingerprinting, which detects when a browser is controlled by a program rather than a human

- Understand identity fingerprinting, which creates unique identifiers to track clients and monitor their connection patterns

How Does Browser Fingerprinting Work?

Javascript in the browser can access thousands of different environment details, such as javascript runtime variables and display capabilities like screen resolution and color depth. All this information can be used to identify and block web scrapers, so let's take a look at how fingerprinting works and how we can avoid it as web scraper developers.

In this article, we'll cover two different concepts of javascript usage to identify web scrapers:

- Robot fingerprint leaking: when the javascript environment can be used to tell whether the client is a human or a robot.

- Identity fingerprinting: when the javascript environment is used to create a unique identity for tracking users. In web scraping, this mostly means that if our scraper is being tracked, it can be identified after making too many unnatural connections. In other words, if ID 1234 is browsing the web page at a non-human rate, the server can confidently deduce that the client is not a human.

These two concepts are closely related; however, hiding the robot identity is significantly more important, as robot detection is a really common way of blocking scrapers powered by Playwright/Puppeteer/Selenium.

Browser Automation Leaking

Fingerprinting tech can be powerful enough to instantly identify a web scraper. Unfortunately, many web-browser automation tools leak information about themselves to the javascript execution context, meaning javascript can easily tell that the browser is being controlled by a program rather than a human being. This should be our first step in fingerprint fortification: we need to cover the tracks left by our scraping environment.

Scraper-controlled browsers often expose extra javascript environment information indicating that the browser is running without GUI elements (aka headless) or on an uncommon operating system (e.g. Linux).

For example, the most commonly known leak when it comes to browser automation tools like Selenium, Playwright or Puppeteer is the navigator.webdriver leak, where an automated browser has navigator.webdriver set to the unusual value of true.

A controlled browser reports navigator.webdriver as true, whereas natural browsers have it set to false. This variable can be read by any website, making the identification of robots extremely easy!

We can easily explore these leaks by firing up a real and an automated browser side by side and exploring the javascript console (F12 in most browsers).

How to Patch Fingerprint Leaks

To prevent leaking data through javascript variables, we can patch our browser's javascript environment with fake values using javascript:

```javascript
// change the navigator.webdriver getter to always return `false`:
Object.defineProperty(navigator, 'webdriver', {get: () => false})
```

This short script redefines the navigator.webdriver getter to always return false, which fixes our leak!

To patch these leaks in our browser automation tools, we can take advantage of page initialization scripts, which run before any of the page's own scripts:

Playwright (Python)

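```python
from playwright.sync_api import Page

page: Page  # an existing Playwright page object
script = "Object.defineProperty(navigator, 'webdriver', {get: () => false})"
# runs in every new document before the page's own scripts
page.add_init_script(script)
```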

Selenium (Python)

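```python
from selenium.webdriver import Chrome

driver: Chrome  # an existing Chrome WebDriver instance
script = "Object.defineProperty(navigator, 'webdriver', {get: () => false})"
# CDP command that evaluates the script in every new document
driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {"source": script})
```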

Puppeteer (Javascript)

```javascript
// page: an existing Puppeteer page object (inside an async function)
const script = "Object.defineProperty(navigator, 'webdriver', {get: () => false})"
await page.evaluateOnNewDocument(script)
```

The above code attaches our navigator.webdriver fix script to the page initialization process, which plugs this leak for the entire scraping session!
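As more leaks need plugging, it can be convenient to keep each patch as a separate snippet and merge them into a single init script. Below is a minimal Python sketch of that idea; `build_init_script` and `PATCHES` are hypothetical names (not part of Playwright, Selenium or Puppeteer). Wrapping each patch in its own try/catch is a design choice so that one failing patch doesn't stop the others from being applied.

```python
import json


def build_init_script(patches: dict) -> str:
    """Combine several javascript patch snippets into one init script (hypothetical helper)."""
    parts = []
    for name, snippet in patches.items():
        # each patch gets its own try/catch so one failure doesn't abort the rest
        parts.append(
            "try {\n"
            f"  {snippet}\n"
            "} catch (e) {\n"
            f"  // patch {json.dumps(name)} failed, keep applying the others\n"
            "}"
        )
    # wrap everything in an IIFE so no helper variables leak into the page scope
    return "(() => {\n" + "\n".join(parts) + "\n})();"


PATCHES = {
    "webdriver": "Object.defineProperty(navigator, 'webdriver', {get: () => false})",
    # HTTPS-only Notification.permission patch
    "notification": (
        "if (document.location.protocol.startsWith('https')) "
        "{ Object.defineProperty(Notification, 'permission', {get: () => 'default'}) }"
    ),
}

script = build_init_script(PATCHES)
print(script)
```

The resulting string can be passed to any of the three init-script calls shown above.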

Now that we know how to plug these leaks, let's take a look at how we can find them.

Which Browsers Leak the Least?

Every browser type has different leak vectors, so unfortunately we can't apply the same fortification rules across the board; each browser type must be handled individually.

Generally speaking, Chrome tends to have more leaks than Firefox, so fortifying Firefox is easier. That being said, Chrome has been around in the web automation scene significantly longer, so it's easier to work with as there are more public resources. Also, is Chrome really worse, or do we just know it better?

Another thing to note is that Chrome has a significantly higher market share, so if our goal is to blend in at scale, we want to stick with it.

We'll explore both Chrome and Firefox browser javascript environments, but largely, once we learn how to correctly identify leaks and patch them, we can fortify any browser to be used for web scraping!

Fortifying Browsers

Javascript exposes a colossal amount of information about the client and taming all of it to perfection would take thousands of hours of work. That being said, not all information is equal, and we can plug the biggest holes quite confidently!

Not every leak is treated in a binary fashion (either you're a robot or not), but some definitely are. Anti-bot protection systems want to avoid false positives, and the web-space is diverse enough to provide some breathing room. That being said, plugging the biggest leaks is vital for any web scraper that tries to access protected websites.

Note: we're working with headless browsers here, so to follow along, make sure you're running the browser in headless mode; otherwise, the leaks we discuss here might appear to be already plugged.

Leak Detection Tools

There are many online tools that can analyze a web browser for the most common leaks and fingerprint values:

- https://bot.sannysoft.com/

- http://arh.antoinevastel.com/bots/areyouheadless

- https://antoinevastel.com/bots/

- https://github.com/paulirish/headless-cat-n-mouse

- https://abrahamjuliot.github.io/creepjs/

It's worth noting that none of these tools are perfect, and since the web browser environment is constantly evolving and changing with every new browser release, we should confirm all of this data ourselves.

For this, let's use a few small test scripts that let us compare our automated browsers against real ones:

checkplaywright.py - script to compare Playwright browsers

```python
#!/usr/bin/env python3
# checkplaywright.py
import sys

from playwright.sync_api import sync_playwright, Page, Browser, BrowserType


def run(browser: str, headless: str, script: str, url=None):
    # the mode is passed on the command line as "headless" or "headful"
    is_headless = 'headless' in headless.lower()
    with sync_playwright() as pw:
        # browser is "chromium", "firefox" or "webkit"
        browser_type: BrowserType = getattr(pw, browser)
        browser_obj: Browser = browser_type.launch(headless=is_headless)
        page: Page = browser_obj.new_page(viewport={"width": 1920, "height": 1080})
        if url:
            page.goto(url)
        # evaluate the javascript expression and return its value to Python
        result = page.evaluate(script)
        browser_obj.close()
        return result


if __name__ == "__main__":
    print(run(*sys.argv[1:]))
```

checkselenium.py - script to compare Selenium browsers

```python
#!/usr/bin/env python3
# checkselenium.py
import sys

from selenium import webdriver


def run(browser: str, headless: str, script: str, url=None):
    headless = headless.lower()
    if browser == "chromium":
        browser = "chrome"
    if browser == "chrome":
        from selenium.webdriver.chrome.options import Options
    elif browser == "firefox":
        from selenium.webdriver.firefox.options import Options
    else:
        raise ValueError(f"unsupported browser: {browser}")
    options = Options()
    if "headless" in headless:
        # `options.headless = True` is deprecated in Selenium 4, so pass the flag directly
        options.add_argument("--headless")
    # resolves to webdriver.Chrome or webdriver.Firefox
    driver = getattr(webdriver, browser.title())(options=options)
    if url:
        driver.get(url)
    # execute_script needs an explicit `return` to hand the value back to Python
    result = driver.execute_script(f"return {script}")
    driver.quit()
    return result


if __name__ == "__main__":
    print(run(*sys.argv[1:]))
```

checkall.py - script to compare both Selenium and Playwright browsers

```python
# checkall.py
import sys

from checkselenium import run as run_selenium
from checkplaywright import run as run_playwright


def run(script: str, url=None):
    data = {}
    for toolkit, toolkit_script in [('selenium', run_selenium), ('playwright', run_playwright)]:
        for browser in ['chromium', 'firefox']:
            for head in ['headless', 'headful']:
                # key format "toolkit:mode:browser[:url]", padded so the output lines up
                key = f'{toolkit:<10}:{head:<8}:{browser:<8}:{url or ""}'.strip(':')
                data[key] = toolkit_script(browser, head, script, url)
    return data


if __name__ == "__main__":
    for query, result in run(*sys.argv[1:]).items():
        print(f"{query}: {result}")
```

Here, we have two tiny Python scripts for evaluating javascript expressions with either Selenium or Playwright, plus checkall.py, which runs an expression through both toolkits in Chromium and Firefox, in both headless and headful modes. Using these scripts and the real browser's javascript console (F12 key in most web browsers), we can quickly compare different environment values:

```
$ python checkall.py "navigator.webdriver"
selenium :headless:chromium: True
selenium :headful :chromium: True
selenium :headless:firefox : True
selenium :headful :firefox : True
playwright:headless:chromium: True
playwright:headful :chromium: True
playwright:headless:firefox : False
playwright:headful :firefox : False
```

As you can see, both Selenium and Playwright have navigator.webdriver set to True in Chromium, while it's false in regular, non-automated browsers. For Firefox, Playwright reports the correct value while Selenium still fails.

Using this setup we can quickly compare and debug our web scraping environment for javascript environment differences, which helps us to identify common identity leaks. Now that we have the right tools, let's take a look at some of the most common leaks used in identifying web scrapers.
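Comparing these outputs by eye gets tedious as the list of checked values grows. Here's a small Python sketch that flags every checkall.py entry whose value differs from a baseline we record by hand in a regular browser's javascript console. `find_leaks` is a hypothetical helper, and the sample data is the navigator.webdriver output shown above.

```python
def find_leaks(results: dict, expected: dict) -> dict:
    """Return checkall.py entries whose value differs from the real-browser baseline."""
    leaks = {}
    for key, value in results.items():
        # keys look like "selenium  :headless:chromium[:url]"; strip the alignment padding
        parts = [part.strip() for part in key.split(":")]
        browser = parts[2]
        if browser in expected and value != expected[browser]:
            leaks[key] = value
    return leaks


# navigator.webdriver results copied from the checkall.py output above
webdriver_results = {
    "selenium  :headless:chromium": True,
    "selenium  :headful :chromium": True,
    "selenium  :headless:firefox ": True,
    "selenium  :headful :firefox ": True,
    "playwright:headless:chromium": True,
    "playwright:headful :chromium": True,
    "playwright:headless:firefox ": False,
    "playwright:headful :firefox ": False,
}
# baseline recorded manually in regular (non-automated) browsers
real_browser = {"chromium": False, "firefox": False}

for key, value in find_leaks(webdriver_results, real_browser).items():
    print(f"leak: {key} -> {value}")
```

In practice, `webdriver_results` would come straight from `run("navigator.webdriver")` in checkall.py.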

Common Leaks

Many of these leaks are publicly known, and many browser automation libraries have existing tools to deal with them:

- puppeteer-stealth - plugin for Puppeteer

- playwright-stealth - plugin for Playwright

- selenium-stealth - plugin for Selenium

- headless-cat-n-mouse - explores leaks from both the client and the server side

Unfortunately, since web browsers change rapidly, open source libraries often lag behind, as it takes a lot of volunteer effort to keep up. The knowledge of fingerprint leaks is a valuable trade secret for people working in the industry, so there's very little incentive to make the best solutions publicly available.

That being said, we have our tools, and we have to start somewhere. Let's take a look at some well-known leak types and how we can plug them.

For this, a good starting point is the puppeteer-stealth plugin which, while dated and inaccurate in places, still contains a lot of important techniques and strategies we can learn from.

Browser Capabilities

A common way to identify and fingerprint browsers is to test their capabilities.

The most widely known example of this is the navigator.plugins and navigator.mimeTypes variables in Chrome, which, while deprecated, still exist in all versions of the Chrome browser as hard-coded values.

These two variables list the plugins used by the browser and the document types it supports. Since headless browsers do not support visual details, both variables resolve to empty lists:

```
$ python checkall.py "[navigator.plugins.length, navigator.mimeTypes.length]"
selenium :headless:chromium: [0, 0]
selenium :headful :chromium: [5, 2]
selenium :headless:firefox : [0, 0]
selenium :headful :firefox : [0, 0]
playwright:headless:chromium: [0, 0]
playwright:headful :chromium: [5, 2]
playwright:headless:firefox : [0, 0]
playwright:headful :firefox : [0, 0]
```

So, when using a headless browser for web scraping we want to ensure that we mimic the headful browser for these values.

These objects are pretty big, so see the puppeteer-stealth GitHub repository for how to correctly mimic this browser feature.
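For a rough idea of what such a patch involves, here's a naive Python sketch that generates an init script giving navigator.plugins and navigator.mimeTypes the same lengths as headful Chrome (5 and 2, as in the output above). The plugin names and PDF mime types are our assumption of what current Chrome versions hard-code, so verify them in your own headful browser. Since the fakes are plain arrays rather than real PluginArray/MimeTypeArray objects, a thorough fingerprinting script can still tell them apart; `build_plugins_patch` is a hypothetical helper.

```python
import json

# Assumption: the hard-coded PDF plugins and mime types reported by recent Chrome versions
CHROME_PDF_PLUGINS = [
    "PDF Viewer",
    "Chrome PDF Viewer",
    "Chromium PDF Viewer",
    "Microsoft Edge PDF Viewer",
    "WebKit built-in PDF",
]
CHROME_PDF_MIME_TYPES = ["application/pdf", "text/pdf"]


def build_plugins_patch(plugins: list, mime_types: list) -> str:
    """Build a naive init script that makes navigator.plugins/mimeTypes non-empty.

    Only the array contents are faked; prototypes and methods like item()/namedItem()
    are not replicated.
    """
    return f"""(() => {{
  const plugins = {json.dumps(plugins)}.map(name => ({{
    name, filename: 'internal-pdf-viewer', description: 'Portable Document Format'
  }}));
  const mimeTypes = {json.dumps(mime_types)}.map(type => ({{
    type, suffixes: 'pdf', description: 'Portable Document Format'
  }}));
  Object.defineProperty(navigator, 'plugins', {{get: () => plugins}});
  Object.defineProperty(navigator, 'mimeTypes', {{get: () => mimeTypes}});
}})();"""


print(build_plugins_patch(CHROME_PDF_PLUGINS, CHROME_PDF_MIME_TYPES))
```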

Different Behavior in HTTP and HTTPS

Browser javascript environments can differ depending on whether the current connection is secured with SSL or not.

The most widely known example of this is the Notification.permission variable in Chrome browsers.

While Firefox always reports this value as "default", Chrome reports "denied" for insecure (plain HTTP) websites only. Unfortunately, headless Chrome driven by browser automation toolkits fails to match this behavior, making it dead easy to identify scrapers:

```
# check secure connections - result should always be "default"
$ python checkall.py "Notification.permission" https://httpbin.dev/headers
selenium :headless:chromium:https://httpbin.dev/headers: denied
# ^ ❌ should be "default"
selenium :headful :chromium:https://httpbin.dev/headers: default
selenium :headless:firefox :https://httpbin.dev/headers: default
selenium :headful :firefox :https://httpbin.dev/headers: default
playwright:headless:chromium:https://httpbin.dev/headers: denied
# ^ ❌ should be "default"
playwright:headful :chromium:https://httpbin.dev/headers: default
playwright:headless:firefox :https://httpbin.dev/headers: default
playwright:headful :firefox :https://httpbin.dev/headers: default

# check insecure connections - result should be "denied" for chromium and "default" for firefox
$ python checkall.py "Notification.permission" http://httpbin.dev/headers
selenium :headless:chromium:http://httpbin.dev/headers: denied
selenium :headful :chromium:http://httpbin.dev/headers: denied
selenium :headless:firefox :http://httpbin.dev/headers: default
selenium :headful :firefox :http://httpbin.dev/headers: default
playwright:headless:chromium:http://httpbin.dev/headers: denied
playwright:headful :chromium:http://httpbin.dev/headers: denied
playwright:headless:firefox :http://httpbin.dev/headers: default
playwright:headful :firefox :http://httpbin.dev/headers: default
# all are ✅ here!
```

We can see that both Selenium and Playwright fail when using headless Chrome. So, if we're running headless Chrome, we must patch Notification.permission to return "default" when scraping SSL-secured websites:

```javascript
// only patch HTTPS pages; on plain HTTP, real Chrome reports "denied" as well
const isSecure = document.location.protocol.startsWith('https')
if (isSecure) {
  Object.defineProperty(Notification, 'permission', {get: () => 'default'})
}
```

Tip: Sometimes it's worth setting this value to "granted" to improve our fingerprint rating on websites where the majority of users have enabled notifications.

Browser Specific JS Objects

Another common fingerprinting area is browser specific javascript objects.

The most widely known example of this is the window.chrome object in Chrome browsers. This object is used by Chrome extensions, and the headless version of the browser does not include it, meaning we need to recreate it manually.

This part of the fingerprint is pretty old and well understood, so we recommend referring to the puppeteer-stealth plugin's chrome.* evasions (starting with chrome.app). Essentially, we want to recreate the same chrome object we see in the headful version of the browser, and since it's a static object, we can just copy everything over.

Browser Process Flags

Browsers are very complex software suites, and to deal with this complexity every browser can be customized via launch flags. Unfortunately, browser automation tools often add extra launch flags that can lead to unusual browser behavior, which results in an identity leak. Let's take a look at some of them.

To find the default flags our browser is launched with, we need to enable debug logging, which prints the browser launch command:

Playwright - enable debug logs

Playwright's debug logs can be enabled through the DEBUG environment variable:

```shell
$ export DEBUG="pw*"
$ python checkplaywright.py chromium headless ""
<...>
pw:browser <launching> /home/dex/.cache/ms-playwright/chromium-939194/chrome-linux/chrome --disable-background-networking --enable-features=NetworkService,NetworkServiceInProcess --disable-background-timer-throttling --disable-backgrounding-occluded-windows --disable-breakpad --disable-client-side-phishing-detection --disable-component-extensions-with-background-pages --disable-default-apps --disable-dev-shm-usage --disable-extensions --disable-features=ImprovedCookieControls,LazyFrameLoading,GlobalMediaControls,DestroyProfileOnBrowserClose,MediaRouter,AcceptCHFrame --allow-pre-commit-input --disable-hang-monitor --disable-ipc-flooding-protection --disable-popup-blocking --disable-prompt-on-repost --disable-renderer-backgrounding --disable-sync --force-color-profile=srgb --metrics-recording-only --no-first-run --enable-automation --password-store=basic --use-mock-keychain --no-service-autorun --headless --hide-scrollbars --mute-audio --blink-settings=primaryHoverType=2,availableHoverTypes=2,primaryPointerType=4,availablePointerTypes=4 --no-sandbox --user-data-dir=/tmp/playwright_chromiumdev_profile-7lVx0j --remote-debugging-pipe --no-startup-window +0ms
<...>
```

Selenium - enable debug logs (Python)

Selenium's debug logs have to be enabled through the language's native logging manager. In the case of Python, it's the logging module:

```python
import logging

selenium_logger = logging.getLogger('selenium.webdriver.remote.remote_connection')
selenium_logger.setLevel(logging.DEBUG)
logging.basicConfig(level=logging.DEBUG)
# results in logs like:
# DEBUG:selenium.webdriver.remote.remote_connection:POST http://127.0.0.1:52799/session {"capabilities": {"firstMatch": [{}], "alwaysMatch": {"browserName": "chrome", "platformName": "any", "goog:chromeOptions": {"extensions": [], "args": ["--headless"]}}}, "desiredCapabilities": {"browserName": "chrome", "version": "", "platform": "ANY", "goog:chromeOptions": {"extensions": [], "args": ["--headless"]}}}
```

Puppeteer - show default arguments

Puppeteer allows us to access the default arguments directly:

```javascript
const puppeteer = require('puppeteer')
console.log(puppeteer.defaultArgs());
// will display something like:
// ['--disable-background-networking', '--enable-features=NetworkService,NetworkServiceInProcess', '--disable-background-timer-throttling', '--disable-backgrounding-occluded-windows', '--disable-breakpad', '--disable-client-side-phishing-detection', '--disable-component-extensions-with-background-pages', '--disable-default-apps', '--disable-dev-shm-usage', '--disable-extensions', '--disable-features=Translate', '--disable-hang-monitor', '--disable-ipc-flooding-protection', '--disable-popup-blocking', '--disable-prompt-on-repost', '--disable-renderer-backgrounding', '--disable-sync', '--force-color-profile=srgb', '--metrics-recording-only', '--no-first-run', '--enable-automation', '--password-store=basic', '--use-mock-keychain', '--enable-blink-features=IdleDetection', '--export-tagged-pdf', '--headless', '--hide-scrollbars', '--mute-audio', 'about:blank' ]
```

Most of these flags are harmless and actually make the browser more performant. However, some aren't:

- --disable-extensions - disables browser extensions, which makes the browser appear unnatural.

- --disable-default-apps - disables the installation of default browser apps on first run.

- --disable-component-extensions-with-background-pages - disables default browser extensions which use background pages.

So, we should start by at least getting rid of these:

Playwright - ignore default arguments using ignore_default_args option

```python
from playwright.sync_api import sync_playwright, Browser

with sync_playwright() as pw:
    # playwright has a handy `ignore_default_args` argument:
    browser: Browser = pw.chromium.launch(
        ignore_default_args=[
            '--disable-extensions',
            '--disable-default-apps',
            '--disable-component-extensions-with-background-pages',
        ]
    )
```

Selenium - disable flags using experimental excludeSwitches option

```python
from selenium import webdriver

chrome_options = webdriver.ChromeOptions()
# excludeSwitches takes switch names without the leading "--"
chrome_options.add_experimental_option(
    'excludeSwitches', [
        'disable-extensions',
        'disable-default-apps',
        'disable-component-extensions-with-background-pages',
    ])
# `options=` replaces the deprecated `chrome_options=` keyword
driver = webdriver.Chrome(options=chrome_options)
```

Puppeteer - ignore default arguments using ignoreDefaultArgs option

```javascript
const browser = await puppeteer.launch({
  ignoreDefaultArgs: [
    '--disable-extensions',
    '--disable-default-apps',
    '--disable-component-extensions-with-background-pages',
  ],
})
```
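After removing these flags, it's worth re-running the debug logs to confirm they are really gone. A minimal Python sketch of such a check, assuming we paste the launch command from the Playwright debug logs into a string; `find_risky_flags` and `RISKY_FLAGS` are hypothetical names, and the set only covers the three flags discussed above.

```python
# the three flags discussed above; extend this set as more leaks are found
RISKY_FLAGS = {
    "--disable-extensions",
    "--disable-default-apps",
    "--disable-component-extensions-with-background-pages",
}


def find_risky_flags(launch_command: str) -> list:
    """Return the risky flags present in a browser launch command, in order of appearance."""
    found = []
    for token in launch_command.split():
        # flags may carry values (--flag=value), so compare only the name part
        name = token.split("=", 1)[0]
        if name in RISKY_FLAGS and name not in found:
            found.append(name)
    return found


# excerpt of the Playwright launch command from the debug logs above
LAUNCH_COMMAND = (
    "chrome --disable-background-networking --disable-breakpad "
    "--disable-component-extensions-with-background-pages --disable-default-apps "
    "--disable-dev-shm-usage --disable-extensions --headless"
)
print(find_risky_flags(LAUNCH_COMMAND))
```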

User Agent Identity

We've covered headers in great detail in our related article, and most of it applies here as well.

When it comes to javascript we want to ensure that header values match the browser capabilities. So if we're using a Windows based User-Agent header on a Linux based web scraper, we need to modify the javascript namespaces (like navigator.platform etc) to reflect the correct operating system.

We also want to use user agent string with the same browser version we are using as every browser version has unique features which can be determined from the javascript environment. In other words, if our user agent is saying it's Chrome 94 while our browser is on Chrome 99, the javascript fingerprint can see that we have some features not available in Chrome 94, so it's likely we're lying about our user agent string - a huge red flag!

Most automation tools let you configure the user-agent string via some option; however, these options mostly change just the header and not the rest of the related javascript environment, so they should be avoided. Instead, one shortcut is the Network.setUserAgentOverride Chrome DevTools Protocol (CDP) command, which updates not only the User-Agent header but also many javascript details related to it.

```python
USER_AGENT = {
    # usual user agent string
    "userAgent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36",
    "platform": "Win32",
    "acceptLanguage": "en-US, en",
    "userAgentMetadata": {
        # ensure the order of this array matches real browser!
        "brands": [
            # at the time of writing this is always == 99
            {"brand": " Not A;Brand", "version": "99"},
            # ensure that the versions here match ones from User-Agent string
            {"brand": "Chromium", "version": "74"},
            {"brand": "Google Chrome", "version": "74"},
        ],
        "fullVersion": "74.0.3729.169",
        "platform": "Windows",
        "platformVersion": "10.0",
        "architecture": "x86",
        "model": "",
        "mobile": False,
    },
}
# cdp: a Chrome DevTools Protocol session; see the toolkit-specific snippets below
cdp.send('Network.setUserAgentOverride', USER_AGENT)
```

Here, we are setting the whole user agent profile of Windows 10 instead of just the user agent header.
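Since a mismatch between the User-Agent string and its metadata is exactly the kind of red flag described above, it can pay off to sanity-check the profile before sending it. Below is a minimal Python sketch; `check_user_agent_profile` is a hypothetical helper that assumes a Chrome-on-Windows profile shaped like the one above and only checks a few fields: the Chromium/Google Chrome brand versions against the User-Agent major version, fullVersion against the full version, and the Windows platform values.

```python
import re

# trimmed copy of the profile above, keeping only the fields this check looks at
PROFILE = {
    "userAgent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36",
    "platform": "Win32",
    "userAgentMetadata": {
        "brands": [
            {"brand": " Not A;Brand", "version": "99"},
            {"brand": "Chromium", "version": "74"},
            {"brand": "Google Chrome", "version": "74"},
        ],
        "fullVersion": "74.0.3729.169",
        "platform": "Windows",
    },
}


def check_user_agent_profile(profile: dict) -> list:
    """Return human-readable inconsistencies between the UA string and its metadata."""
    match = re.search(r"Chrome/([\d.]+)", profile["userAgent"])
    if match is None:
        return ["userAgent has no Chrome/<version> token"]
    full = match.group(1)
    major = full.split(".")[0]
    metadata = profile.get("userAgentMetadata", {})
    problems = []
    if metadata.get("fullVersion") not in (None, full):
        problems.append(f"fullVersion {metadata['fullVersion']} != {full}")
    for entry in metadata.get("brands", []):
        # the " Not A;Brand" placeholder has its own version, so only real brands are checked
        if entry["brand"] in ("Chromium", "Google Chrome") and entry["version"] != major:
            problems.append(f"{entry['brand']} brand version {entry['version']} != {major}")
    if "Windows" in profile["userAgent"]:
        if profile.get("platform") != "Win32":
            problems.append(f"platform {profile.get('platform')!r} != 'Win32'")
        if metadata.get("platform") not in (None, "Windows"):
            problems.append(f"metadata platform {metadata['platform']!r} != 'Windows'")
    return problems


print(check_user_agent_profile(PROFILE))
```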

Playwright - how to send a CDP signal?

```python
# we can access a CDP connection through the page context (Chromium only):
USER_AGENT = {}  # the user agent profile from above
cdp = page.context.new_cdp_session(page)
cdp_response = cdp.send('Network.setUserAgentOverride', USER_AGENT)
```

Selenium - how to send a CDP signal?

```python
# Selenium allows CDP communication directly through the driver object (Chromium-based drivers)
USER_AGENT = {}  # the user agent profile from above
browser.execute_cdp_cmd("Network.setUserAgentOverride", USER_AGENT)
```

Puppeteer - how to send a CDP signal?

```javascript
// we can access a CDP connection through the page target:
const USER_AGENT = {}  // the user agent profile from above
const client = await page.target().createCDPSession();
await client.send("Network.setUserAgentOverride", USER_AGENT);
```

For more on existing Chrome DevTools Protocol (CDP) commands, see the official CDP documentation command list.

Resisting Fingerprinting

With a fortified browser, we can avoid instant identification; however, our web scraper can still be blocked as anti-bot services gather data about our connection patterns and tie them to a unique fingerprint ID.

Javascript fingerprints can extract thousands of details about the connecting browser, such as rendering and audio capabilities, hardware details, available fonts, etc.

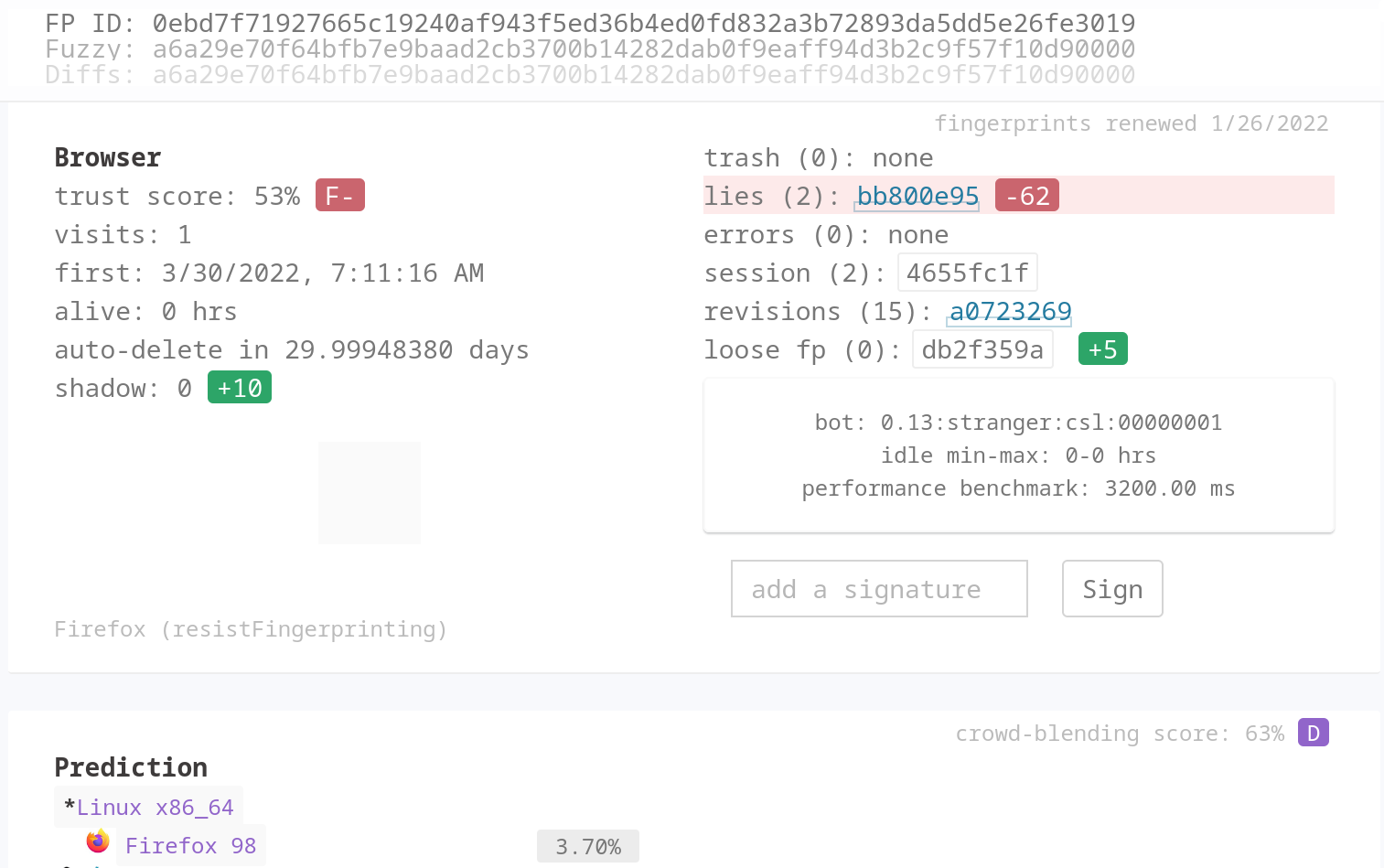

To further understand this, let's refer to a popular open source fingerprint analysis tool called creepjs:

Here, we can see thousands of identifying details exposed by javascript. Luckily, we don't need to randomize everything: just by changing a few details, we can make our fingerprint unique enough to avoid detection. Additionally, detailed fingerprinting is a resource-intensive operation, and most websites cannot afford to make their users wait an extra 2 seconds for the content to load.
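To illustrate why changing even a few details matters, here's a toy Python sketch of identity fingerprinting: hashing a handful of collected data points into an ID. The `fingerprint_id` helper and the sample values are made up for illustration; real anti-bot systems collect far more signals and use their own, often fuzzy, matching rather than an exact hash.

```python
import hashlib
import json


def fingerprint_id(datapoints: dict) -> str:
    """Hash a set of collected browser data points into a short identifier."""
    # sort keys so identical data always serializes, and therefore hashes, the same way
    serialized = json.dumps(datapoints, sort_keys=True)
    return hashlib.sha256(serialized.encode()).hexdigest()[:16]


profile = {
    "platform": "Win32",
    "screen": [1920, 1080],
    "timezone": "America/New_York",
    "language": "en-US",
}
# same browser, different viewport: a single changed value yields a different ID
changed = dict(profile, screen=[1536, 864])

print(fingerprint_id(profile))
print(fingerprint_id(changed))
```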

So, we should start by randomizing the basics:

- Headers - we already covered user-agent string which is the most important one but we can also change some details like language headers, encoding capabilities etc.

- Viewport - while 1920x1080 is the most popular resolution it's not the only one. We can easily randomize through the most popular resolutions and go further than that as many natural users don't use full screen browsers. See the creepjs' screen test for more.

- Locale, timezone, geolocation - another easy way to introduce randomness to the browser fingerprint. Note that if you're using a proxy, these values should match the proxy's geolocation.

The further we scale our web scraping environment, the more important profile randomization is. That being said, it's only worth delving into this territory once we have fully fortified our browser.
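A minimal Python sketch of randomizing these basics for Playwright, assuming the `viewport`, `locale` and `timezone_id` keyword arguments of `browser.new_context()`. The value pools are hypothetical examples rather than real market-share data; locale and timezone are picked together so they stay consistent with each other, and here they are all US based, as they would need to be with a US proxy.

```python
import random
from typing import Optional

# hypothetical example pools; adjust them to your target audience and proxy location
VIEWPORTS = [
    {"width": 1920, "height": 1080},
    {"width": 1536, "height": 864},
    {"width": 1440, "height": 900},
    {"width": 1366, "height": 768},
]
LOCALE_PROFILES = [
    {"locale": "en-US", "timezone_id": "America/New_York"},
    {"locale": "en-US", "timezone_id": "America/Chicago"},
    {"locale": "en-US", "timezone_id": "America/Los_Angeles"},
]


def random_context_options(rng: Optional[random.Random] = None) -> dict:
    """Pick a random viewport plus a matching locale/timezone pair."""
    rng = rng or random.Random()
    # copy the chosen viewport so the shared pool isn't modified
    viewport = dict(rng.choice(VIEWPORTS))
    # shave off a few pixels to imitate a browser window that isn't maximized
    viewport["width"] -= rng.randint(0, 100)
    viewport["height"] -= rng.randint(0, 100)
    options = {"viewport": viewport}
    options.update(rng.choice(LOCALE_PROFILES))
    return options


print(random_context_options())
```

With Playwright, the result would be unpacked into a new context, e.g. `context = browser.new_context(**random_context_options())`.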

ScrapFly

At ScrapFly we spend countless hours working on understanding javascript fingerprinting to provide javascript rendering and anti scraping protection bypass features.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale. Each product is equipped with an automatic bypass for any anti-bot system and we achieve this by:

- Maintaining a fleet of real, reinforced web browsers with real fingerprint profiles.

- Millions of self-healing proxies of the highest possible trust score.

- Constantly evolving and adapting to new anti-bot systems.

- We've been doing this publicly since 2020 with the best bypass on the market!

ScrapFly's API allows users to retrieve any URL through our fortified, fingerprint-resistant browser pool via the javascript rendering feature. This API can be used either directly or through our python-sdk:

```python
from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse

scrapfly = ScrapflyClient(key="YOUR_API_KEY")
result: ScrapeApiResponse = scrapfly.scrape(
    ScrapeConfig(
        url="https://website-to-scrape.com",
        render_js=True,
        # ^^^ enable browser based javascript rendering
    ),
)
html = result.scrape_result['content']
```

Further, for websites protected by anti web scraping services, we provide an anti scraping protection bypass feature which solves the javascript fingerprinting challenges presented before the web content:

```python
result: ScrapeApiResponse = scrapfly.scrape(
    ScrapeConfig(
        url="https://website-to-scrape.com",
        render_js=True,
        asp=True,
        # ^^^ enable anti scraping protection bypass
    ),
)
```

Finally, ScrapFly offers high quality proxies which can further improve scraper connection fingerprint:

```python
result: ScrapeApiResponse = scrapfly.scrape(
    ScrapeConfig(
        url="https://website-to-scrape.com",
        render_js=True,
        asp=True,
        country='US',
        # ^^^ select only US based proxies
        proxy_pool='public_residential_pool',
        # ^^^ use residential proxies, or mobile ones with 'public_mobile_pool'
    ),
)
```

ScrapFly's API is designed to offload web scraping complexity to a convenient, feature-rich abstraction layer, so developers can focus on developing rather than reverse engineering the complexities of content access!

Summary

In this article, we introduced ourselves to the complex world of javascript fingerprinting.

We started off by learning how to fortify our browser to avoid instant detection by plugging various javascript leaks left by headless browser or automation systems such as Selenium, Playwright or Puppeteer. However, just plugging holes is not enough to scrape well protected targets at scale, so we have also taken a look at how to inspect and randomize non-critical fingerprint elements which prevents web scraper identification in the long run.

All that being said, javascript fingerprinting is one of the biggest and most complex subjects in web scraping. It constantly evolves and changes with each web browser iteration, so it's a perfect place for an abstraction layer! ScrapFly's API provides javascript rendering and anti scraping protection bypass, which will make any target easy to reach, so check it out for free!