Many modern websites in 2023 rely heavily on JavaScript to render interactive data using frameworks such as React, Angular and Vue.js, which makes web scraping a challenge.

In this tutorial, we'll take a look at how we can use headless browsers to scrape data from dynamic web pages. What tools are available and how do we use them? And what are some common challenges, tips and shortcuts when it comes to scraping with web browsers?

What is a dynamic web page?

One of the most commonly encountered web scraping issues is:

why can't my scraper see the data I see in the web browser?

Dynamic pages use complex JavaScript-powered web technologies that offload processing to the client. In other words, the website gives users the data and the logic, but the user's browser has to put them together to produce the whole, rendered web page.

An example of such a page would be as simple as:

<html>
<head>
  <title>Example Dynamic Page</title>
</head>
<body>
  <h1>loading...</h1>
  <p>loading...</p>
  <script>
    var data = {
      title: "Awesome Product 3000",
      content: "Available 2024 on scrapfly.io, maybe."
    };
    document.addEventListener("DOMContentLoaded", function(event) {
      // when page loads take data from variable and put it on page:
      document.querySelector("h1").innerHTML = data["title"];
      document.querySelector("p").innerHTML = data["content"];
    });
  </script>
</body>
</html>

If we open this page with JavaScript disabled, we won't see any content, just loading indicators such as spinning circles or text asking us to enable JavaScript.

This is because the content is loaded from a variable using javascript after the page loads. So, the scraper doesn't see the fully rendered page as it's not a web browser capable of loading pages with javascript.
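To make this concrete, here is a minimal sketch that reads the example page the way a scraper without a JavaScript engine would. It uses only Python's standard `html.parser`; the `TextCollector` class and `static_view` function are hypothetical names introduced for illustration.

```python
from html.parser import HTMLParser

# The example page from above, as a scraper without JavaScript receives it.
PAGE = """
<html>
<head><title>Example Dynamic Page</title></head>
<body>
<h1>loading...</h1>
<p>loading...</p>
<script>
var data = {
  title: "Awesome Product 3000",
  content: "Available 2024 on scrapfly.io, maybe."
};
document.addEventListener("DOMContentLoaded", function(event) {
  document.querySelector("h1").innerHTML = data["title"];
  document.querySelector("p").innerHTML = data["content"];
});
</script>
</body>
</html>
"""


class TextCollector(HTMLParser):
    """Collects the text inside the wanted tags; it never runs scripts."""

    def __init__(self, wanted):
        super().__init__()
        self.wanted = set(wanted)
        self.current = None  # the wanted tag we are currently inside, if any
        self.found = {}

    def handle_starttag(self, tag, attrs):
        if tag in self.wanted:
            self.current = tag

    def handle_endtag(self, tag):
        if tag == self.current:
            self.current = None

    def handle_data(self, data):
        if self.current:
            self.found[self.current] = self.found.get(self.current, "") + data


def static_view(html):
    """Return the <h1> and <p> text exactly as it appears in the raw HTML."""
    parser = TextCollector(["h1", "p"])
    parser.feed(html)
    return parser.found


print(static_view(PAGE))
```

Both elements still hold the `loading...` placeholder, because nothing has executed the script that would replace them.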

How to scrape dynamic web pages?

There are a few ways to deal with dynamic javascript-generated content when scraping:

First, we could reverse engineer the website's behavior and replicate it in our scraper program.

Unfortunately, this approach is very time-consuming and challenging. It requires in-depth web development skills and specialty tools.
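For the tiny example page above, reverse engineering could look something like the sketch below: we skip the browser entirely and pull the values straight out of the `data` variable in the page's script. The regular expressions and the `extract_hidden_data` name are assumptions tailored to that one page, which is exactly why this approach tends to be brittle on real websites.

```python
import re

# The script portion of the example page shown earlier.
SCRIPT = """
var data = {
  title: "Awesome Product 3000",
  content: "Available 2024 on scrapfly.io, maybe."
};
"""


def extract_hidden_data(source):
    """Pull `key: "value"` pairs out of the `var data = {...};` object."""
    match = re.search(r"var data\s*=\s*\{(.*?)\};", source, re.DOTALL)
    if match is None:
        return {}
    # Each entry looks like `key: "value"`; the keys are unquoted, so this is
    # not valid JSON and we fall back to a simple pattern instead.
    pairs = re.findall(r'(\w+)\s*:\s*"([^"]*)"', match.group(1))
    return dict(pairs)


print(extract_hidden_data(SCRIPT))
```

Any change to how the website stores its data would break the pattern, so a scraper like this needs to be maintained alongside the website it targets.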

Alternatively, we can automate a real web browser to scrape dynamic web pages by integrating it into our web scraper program. For this, there are various browser automation libraries that we'll be taking a look at today: Selenium, Puppeteer and Playwright.

How Does Browser Automation Work?

Modern browsers such as Chrome and Firefox (and their derivatives) come with built-in automation protocols allowing other programs to control these web browsers.

Currently, there are two popular browser automation protocols:

- The older webdriver protocol which is implemented through an extra browser layer called webdriver. Webdriver intercepts action requests and issues browser control commands.

- The newer Chrome DevTools Protocol (CDP for short). Unlike webdriver, the CDP control layer is implicitly available in most modern browsers.

In this article, we will be mostly covering CDP, but the developer experience of these protocols is very similar, often even interchangeable. For more on these protocols, see official documentation pages Chrome DevTools Protocol and WebDriver MDN Documentation.
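To give a rough feel for how the two protocols differ on the wire, the sketch below builds the "navigate to a URL" instruction in both styles: CDP sends JSON messages over a WebSocket, while WebDriver exposes HTTP endpoints. The helper names and the session id `abc` are hypothetical; nothing is sent to a real browser here.

```python
import itertools
import json

# CDP requires every command to carry a unique id so replies can be matched.
_command_ids = itertools.count(1)


def cdp_command(method, **params):
    """Serialize one CDP command as the JSON text sent over the WebSocket."""
    return json.dumps({"id": next(_command_ids), "method": method, "params": params})


def webdriver_request(session_id, url):
    """Describe the WebDriver 'navigate to' call, which is a plain HTTP request."""
    return {
        "method": "POST",
        "path": f"/session/{session_id}/url",
        "body": json.dumps({"url": url}),
    }


print(cdp_command("Page.navigate", url="https://www.airbnb.com/experiences"))
print(webdriver_request("abc", "https://www.airbnb.com/experiences"))
```

Libraries like Selenium, Puppeteer and Playwright hide these details, which is why the developer experience ends up being so similar.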

Example Scrape Task

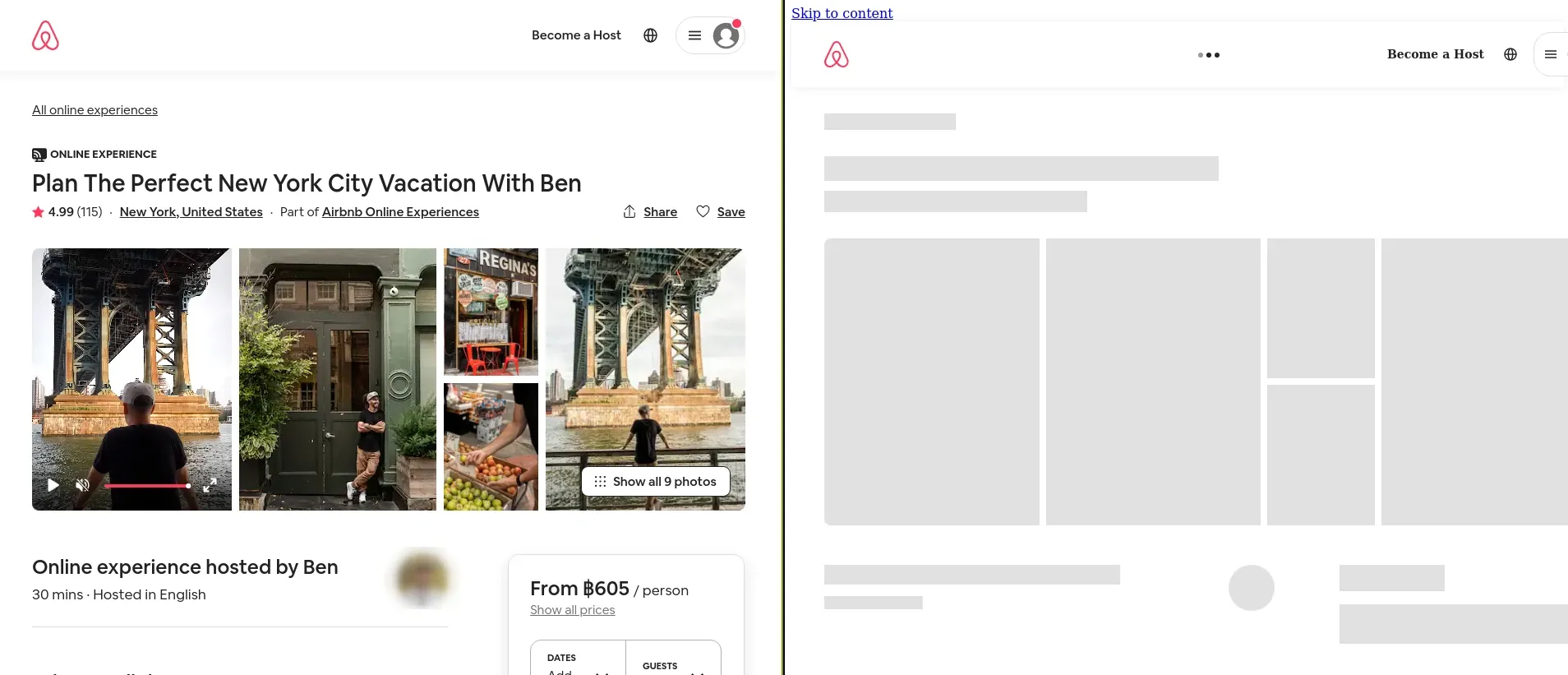

To better illustrate this challenge, we'll be using a real-world web-scraping example.

We'll be scraping online experience data from https://www.airbnb.com/experiences.

We'll keep our demo task short and see how to fully render a single experience page like: https://www.airbnb.com/experiences/2496585 and return the fully rendered contents for further processing.

Airbnb is one of the most popular websites that use the React JavaScript framework to generate dynamic pages. Without browser emulation, we'd have to reverse-engineer the website's JavaScript code before we could see and scrape its full HTML content.

However, with browser automation, our process is much more straightforward:

- Launch a web browser (like Chrome or Firefox).

- Go to the page https://www.airbnb.com/experiences/2496585.

- Wait for the page's dynamic contents to load and render.

- Scrape the full page source and parse it with the usual tools like BeautifulSoup.

Let's try implementing this flow using 4 popular browser automation tools: Selenium, Puppeteer, Playwright and ScrapFly's API and see how they match up!

Selenium

Selenium is one of the first big automation clients, created for automating website testing. It supports both browser control protocols: webdriver and CDP (the latter only since Selenium v4).

Selenium is the oldest tool on our list today which means it has a considerable community and loads of features as well as being supported in almost every programming language and running almost every web browser:

Languages: Java, Python, C#, Ruby, JavaScript, Perl, PHP, R, Objective-C and Haskell

Browsers: Chrome, Firefox, Safari, Edge, Internet Explorer (and their derivatives)

Pros: Big community that has been around for a while - meaning loads of free resources. Easy to understand synchronous API for common automation tasks.

On the downside, some of Selenium's functions are dated and not very intuitive. Selenium's client is also synchronous, so it cannot take advantage of newer language features like Python's asyncio. This makes it significantly slower at scraping many pages concurrently than tools like Playwright or Puppeteer, which both offer asynchronous client APIs.

Let's take a look at how we can use Selenium webdriver to solve our airbnb.com scraper problem:

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support.expected_conditions import visibility_of_element_located

browser = webdriver.Chrome()  # start a web browser
browser.get("https://www.airbnb.com/experiences/272085")  # navigate to URL
# wait for page to load
# by waiting for <h1> element to appear on the page
title = (
    WebDriverWait(driver=browser, timeout=10)
    .until(visibility_of_element_located((By.CSS_SELECTOR, "h1")))
    .text
)
# retrieve fully rendered HTML content
content = browser.page_source
browser.quit()  # close the browser and end the WebDriver session

# we then could parse it with beautifulsoup
from bs4 import BeautifulSoup

soup = BeautifulSoup(content, "html.parser")
print(soup.find("h1").text)

Above, we start by initiating a web browser window and navigate to a single Airbnb experience page. We then wait for the page to load by waiting for the first header element to appear on the page. Finally, we extract the fully loaded HTML content and parse it with BeautifulSoup.
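The waiting step is the part that tends to confuse newcomers, so here is a conceptual sketch of what an explicit wait does: it keeps polling a condition until the condition returns something truthy or the timeout runs out. This is not Selenium's actual implementation; `wait_until` and `WaitTimeout` are hypothetical names used only to show the idea.

```python
import time


class WaitTimeout(Exception):
    """Raised when the condition never became truthy in time."""


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call `condition()` repeatedly until it returns a truthy value.

    Returns that value, or raises WaitTimeout once `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise WaitTimeout(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```

With a real browser, the condition would be something like "the `h1` element is visible", which is what `visibility_of_element_located` checks in the Selenium example above.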

Next let's take a look at faster and more modern alternatives to Selenium: Puppeteer for Javascript and Playwright for Python.

Puppeteer

Puppeteer is an asynchronous web browser automation library for Javascript by Google (as well as Python through the unofficial Pyppeteer package).

Languages: Javascript, Python (unofficial)

Browsers: Chrome, Firefox (Experimental)

Pros: First strong implementation of CDP, maintained by Google, intended to be a general browser automation tool.

Compared to Selenium, Puppeteer supports fewer languages and browsers, but it fully implements CDP and has a strong team at Google behind it.

Puppeteer also describes itself as a general-purpose browser automation client rather than fitting itself into the web testing niche - which is good news as web-scraping issues receive official support.

Let's take a look at how our airbnb.com example would look in puppeteer and javascript:

const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();
  await page.goto('https://airbnb.com/experiences/272085');
  // wait for the <h1> element to appear on the page
  await page.waitForSelector("h1");
  // retrieve fully rendered HTML content
  const content = await page.content();
  await browser.close();
})();

As you can see, the Puppeteer example looks almost identical to our Selenium example, except for the await keyword that indicates the async nature of this program. Puppeteer also has a friendlier, more modern API for waiting for elements to appear on the page and other common functions.

Puppeteer is great, but Chrome browser + Javascript might not be the best option when it comes to maintaining complex web-scraping systems. For that, let's continue our browser automation journey and take a look at Playwright, which is implemented in many more languages and browsers, making it more accessible and easier to scale.

Playwright

Playwright is a synchronous and asynchronous web browser automation library available in multiple languages by Microsoft.

The main goal of Playwright is reliable end-to-end modern web app testing, though it still implements all of the general-purpose browser automation functions (like Puppeteer and Selenium) and has a growing web-scraping community.

Languages: Javascript, .Net, Java and Python

Browsers: Chrome, Firefox, Safari, Edge, Opera

Pros: Feature rich, cross-language, cross-browser and provides both asynchronous and synchronous client implementations. Maintained by Microsoft.

Let's continue with our airbnb.com example and see how it would look in Playwright and Python:

# asynchronous example:
import asyncio
from playwright.async_api import async_playwright

async def run():
    url = "https://airbnb.com/experiences/272085"
    async with async_playwright() as pw:
        browser = await pw.chromium.launch()
        page = await browser.new_page()
        await page.goto(url)
        await page.wait_for_selector("h1")  # wait for the page to render
        return url, await page.content()

asyncio.run(run())

# synchronous example:
from playwright.sync_api import sync_playwright

def run():
    url = "https://airbnb.com/experiences/272085"
    with sync_playwright() as pw:
        browser = pw.chromium.launch()
        page = browser.new_page()
        page.goto(url)
        page.wait_for_selector("h1")  # wait for the page to render
        return url, page.content()

run()

Playwright's API doesn't differ much from that of Selenium or Puppeteer, and it offers both a synchronous client for simple script convenience and an asynchronous client for additional performance scaling.

Playwright seems to tick all the boxes for browser automation: it's implemented in many languages, supports most web browsers and offers both async and sync clients.

ScrapFly API

Web browser automation can be a complex, time-consuming process with many moving parts and variables. For this reason, at ScrapFly we offer to do the heavy lifting for you through a cloud-based browser automation API.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

Let's take a quick look at how we can replicate our airbnb.com scraper in ScrapFly's Python SDK:

import asyncio
from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse

async def run():
    scrapfly = ScrapflyClient(key="YOURKEY", max_concurrency=2)
    to_scrape = [
        ScrapeConfig(
            url="https://www.airbnb.com/experiences/272085",
            render_js=True,
            wait_for_selector="h1",
        ),
    ]
    results = await scrapfly.concurrent_scrape(to_scrape)
    print(results[0]['content'])

asyncio.run(run())

ScrapFly's API reduces the whole process to a few configuration parameters. On top of that, it automatically selects the best browser configuration for the given scrape target and handles scraper blocking for us.

For more on ScrapFly's browser rendering and more, see our official documentation: https://scrapfly.io/docs/scrape-api/javascript-rendering

Which One To Choose?

We've covered three major browser automation clients: Selenium, Puppeteer and Playwright - so which one to choose?

Well, it entirely depends on the project you are working on. However, Playwright and Puppeteer have a big advantage over Selenium in that they provide asynchronous clients, which are much faster when many pages need to be scraped concurrently.

Generally, Selenium has the most educational resources and community support, but Playwright is quickly gaining popularity in the web scraping community.

Alternatively, ScrapFly offers a generic, scalable and easy-to-manage solution if you're not interested in maintaining your own browser automation infrastructure.

To wrap this introduction up let's take a quick look at common challenges browser-based web scrapers have to deal with, which can help you decide which one of these libraries to choose.

Challenges and Tips

While automating a single instance of a browser appears to be an easy task, when it comes to web-scraping there are a lot of extra challenges that need solving, such as:

- Avoiding being blocked.

- Session persistence.

- Proxy integration.

- Scaling and resource usage optimization.

Unfortunately, none of the browser automation clients are designed for web-scraping first, so solutions to these problems have to be implemented by each developer either through community extensions or custom code.
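As one small example of such custom code, here is a sketch for proxy integration with Playwright. Playwright's `launch()` accepts a `proxy` dictionary with `server`, `username` and `password` keys, while proxy providers usually hand out a single URL, so a small converter helps. The `playwright_proxy` helper and the proxy address are hypothetical.

```python
from urllib.parse import urlsplit


def playwright_proxy(proxy_url):
    """Convert 'http://user:pass@host:port' into Playwright's proxy dict."""
    parts = urlsplit(proxy_url)
    if not parts.hostname:
        raise ValueError(f"invalid proxy URL: {proxy_url!r}")
    server = f"{parts.scheme}://{parts.hostname}"
    if parts.port:
        server += f":{parts.port}"
    proxy = {"server": server}
    # Credentials are optional; only include them when the URL carries them.
    if parts.username:
        proxy["username"] = parts.username
    if parts.password:
        proxy["password"] = parts.password
    return proxy


print(playwright_proxy("http://user:secret@proxy.example.com:8080"))
```

The result can then be passed along when starting the browser, for example `await pw.chromium.launch(proxy=playwright_proxy(url))`.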

Fingerprinting and Blocking

Unfortunately, modern web browsers provide so much information about themselves that they can be easily identified and potentially blocked from accessing a website.

For web scraping, automated browsers need to be fortified against fingerprinting, which can be done by applying various patches that hide common leaks.

For example, the popular analysis tool creepjs successfully identifies a Playwright-powered headless browser.

Fortunately, the web scraping community has been working on this problem for years and there are existing tools that patch up major fingerprinting holes automatically:

Playwright:

- Playwright Extra for Javascript contains various scraping addons and browser stealth extensions

- Stealth for Python

Puppeteer:

Selenium:

However, these tools are not perfect and can be easily detected by more advanced fingerprinting tools.
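To give a sense of what such patches do under the hood, the sketch below hides one well-known leak: the `navigator.webdriver` flag, which browsers set to true when they are under automation. It relies on Playwright's `add_init_script()`, which runs a script before any page script. The `apply_basic_patches` name is hypothetical, and this single patch is an illustration only; on its own it is nowhere near enough to avoid detection.

```python
import asyncio

# JavaScript that runs before any of the page's own scripts and makes
# `navigator.webdriver` report undefined instead of true.
HIDE_WEBDRIVER_JS = """
Object.defineProperty(navigator, 'webdriver', {
  get: () => undefined,
});
"""


async def apply_basic_patches(context):
    """Register the patch on a Playwright BrowserContext (or a Page)."""
    await context.add_init_script(HIDE_WEBDRIVER_JS)
```

In a scraper this would be called right after creating the context, for example `context = await browser.new_context()` followed by `await apply_basic_patches(context)`. The stealth tools listed above bundle many patches of this kind.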

Scaling using Asynchronous Clients

Web browsers are extremely complex, so they can be really difficult to work with and scale up.

One of the easiest ways to scale up browser automation is to run multiple browser instances in parallel, and the best way to do that is to use an asynchronous client, which allows us to control multiple browser tabs concurrently. In other words, while one browser tab is loading, we can switch to another one.

A synchronous scraper has to wait for the browser to finish loading a page before it can continue. An asynchronous scraper, on the other hand, can use 4 different browser tabs concurrently and skip all of that waiting.

In this imaginary scenario, our async scraper can perform 4 requests while sync only manages one, however in real-life this number could be significantly higher!
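The effect is easy to reproduce without a browser. In the sketch below, `fake_page_load` is a hypothetical stand-in for a page load that simply sleeps for a fixed delay; we then load 4 made-up URLs one after another and all at once and compare the elapsed time.

```python
import asyncio
import time


async def fake_page_load(url, delay=0.1):
    """Stand-in for a page load: time spent waiting on the network, not the CPU."""
    await asyncio.sleep(delay)
    return url


async def load_sequentially(urls):
    # Each load must finish before the next one starts.
    return [await fake_page_load(url) for url in urls]


async def load_concurrently(urls):
    # All loads are started together and awaited as a group.
    return await asyncio.gather(*(fake_page_load(url) for url in urls))


def timed(coro):
    """Run a coroutine to completion and return (result, seconds elapsed)."""
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


urls = [f"https://example.com/page/{i}" for i in range(4)]
_, sequential = timed(load_sequentially(urls))
_, concurrent = timed(load_concurrently(urls))
print(f"sequential: {sequential:.2f}s, concurrent: {concurrent:.2f}s")
```

The sequential run takes roughly the sum of all delays, while the concurrent run takes roughly as long as the slowest single load.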

As an example, we'll use Python and Playwright and schedule 3 different URLs to be scraped asynchronously:

import asyncio
from asyncio import gather
from typing import Tuple

from playwright.async_api import Page, async_playwright

async def scrape_3_pages_concurrently():
    async with async_playwright() as pw:
        # launch 3 browsers
        browsers = await gather(*(pw.chromium.launch() for _ in range(3)))
        # start 1 tab each on every browser
        pages = await gather(*(browser.new_page() for browser in browsers))

        async def get_loaded_html(page: Page, url: str) -> Tuple[str, str]:
            """go to url, wait for DOM to load and return url and page content"""
            await page.goto(url)
            await page.wait_for_load_state("domcontentloaded")
            return url, await page.content()

        # scrape 3 pages asynchronously on 3 different pages
        urls = [
            "http://url1.com",
            "http://url2.com",
            "http://url3.com",
        ]
        htmls = await gather(*(
            get_loaded_html(page, url)
            for page, url in zip(pages, urls)
        ))
        return htmls

if __name__ == "__main__":
    asyncio.run(scrape_3_pages_concurrently())

In this short example, we start three web browser instances, then we can use them asynchronously to retrieve multiple pages.
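With more than a handful of URLs, we usually don't want one browser or tab per URL, since each one costs memory and CPU. One common way to cap this is an `asyncio.Semaphore`, sketched below. Here `scrape_one` is a hypothetical coroutine function (for instance, one that opens a page and returns its HTML) and `max_tabs` is an assumed limit to tune for your machine.

```python
import asyncio


async def scrape_all(urls, scrape_one, max_tabs=3):
    """Run `scrape_one(url)` for every URL, at most `max_tabs` at a time."""
    semaphore = asyncio.Semaphore(max_tabs)

    async def bounded(url):
        # Wait for a free slot before starting; release it when done.
        async with semaphore:
            return await scrape_one(url)

    # Results come back in the same order as `urls`.
    return await asyncio.gather(*(bounded(url) for url in urls))
```

All URLs are scheduled up front, but only `max_tabs` of them are actually being scraped at any moment.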

Disabling Unnecessary Load

A big chunk of a web browser's processing goes into rendering the page display, which is not necessary for web scraping as scrapers have no eyes.

We can get a significant performance and speed boost by disabling image and style loading and other unnecessary resources.

For example, in Playwright and Python we can implement these simple route options to block image rendering:

page = await browser.new_page()
# block requests to png, jpg and jpeg files
await page.route("**/*.{png,jpg,jpeg}", lambda route: route.abort())
await page.goto("https://example.com")
await browser.close()

Not only does this speed up loading, it also saves bandwidth, which can be critical when proxies are used for web scraping.
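File extensions don't catch everything, as many images and fonts are served from URLs without one. As an alternative sketch, a Playwright route handler can check the request's `resource_type` instead. The set of blocked types below is an assumption to adjust per website, and `should_block` and `block_unneeded` are hypothetical names.

```python
# Resource types a scraper rarely needs; adjust this set per target website.
BLOCKED_RESOURCE_TYPES = {"image", "media", "font", "stylesheet"}


def should_block(resource_type):
    """Decide whether a request of this type is worth skipping."""
    return resource_type in BLOCKED_RESOURCE_TYPES


async def block_unneeded(route):
    """Playwright route handler: abort blocked types, let the rest through."""
    if should_block(route.request.resource_type):
        await route.abort()
    else:
        await route.continue_()
```

The handler would be registered for every request with `await page.route("**/*", block_unneeded)`. Note that blocking stylesheets changes how the page is laid out, so waits that depend on element visibility may behave differently.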

FAQ

To wrap this article up let's take a look at some frequently asked questions about web scraping using headless browsers that we couldn't quite fit into this article:

How can I tell whether it's a dynamic website?

The easiest way to determine whether any of the dynamic content is present on the web page is to disable javascript in your browser and see if data is missing. Sometimes data might not be visible in the browser but is still present in the page source code - we can click "view page source" and look for data there. Often, dynamic data is located in javascript variables under <script> HTML tags. For more on that see How to Scrape Hidden Web Data

Should I parse HTML using browser or do it in my scraper code?

While the browser has a very capable JavaScript environment, using HTML parsing libraries (such as beautifulsoup in Python) will generally result in faster and easier-to-maintain scraper code.

A popular scraping idiom is to wait for the dynamic data to load and then pull the whole rendered page source (HTML code) into scraper code and parse the data there.

Can I scrape web applications or SPAs using browser automation?

Yes, web applications or Single Page Apps (SPA) function the same as any other dynamic website. Using browser automation toolkits we can click around, scroll and replicate all the user interactions a normal browser could do!

What are static page websites?

Static websites are essentially the opposite of dynamic websites - all the content is always present in the page source (HTML source code). However, static page websites can still use javascript to unpack or transform this data on page load, so browser automation can still be beneficial.

Can I scrape a javascript website with python without using browser automation?

When it comes to using python in web scraping dynamic content we have two solutions: reverse engineer the website's behavior or use browser automation.

That being said, there's a lot of space in the middle for niche, creative solutions. For example, a common tool used in web scraping is Js2Py which can be used to execute javascript in python. Using this tool we can quickly replicate some key javascript functionality without the need to recreate it in Python.

What is a headless browser?

A headless browser is a browser instance without visible GUI elements. This means headless browsers can run on servers that have no displays. Headless chrome and headless firefox also run much faster compared to their headful counterparts making them ideal for web scraping.

Headless Browser Scraping Summary

In this overview article, we've taken a look at the capabilities of the most popular browser automation libraries in the context of web scraping: the classic Selenium client, Google's newer approach, Puppeteer, and Microsoft's Playwright.

We wrote a simple scraper for airbnb experiences in each of these tools and compared their performance and usability. Finally, we covered common challenges like headless browser blocking, scraping speed and resource optimization.

Browser automation tools can be an easy solution for dynamic website scraping, though they can be difficult to scale. If you need a helping hand, check out ScrapFly's Javascript Rendering feature!