GraphQL is becoming an increasingly popular way to serve large datasets to dynamic websites. Data-heavy websites often use GraphQL as a backend for JavaScript-powered front ends, which load data dynamically while the user navigates the page. The major downside is that GraphQL websites are harder to scrape, as scraping them requires either browser emulation or reverse engineering the website's backend functionality.

In this article we'll take a look at what GraphQL is and how to deal with it in web scraping. We'll cover query building basics, how to inspect web targets and how to write GraphQL queries for web scraping.

What is GraphQL

GraphQL is a query language for graph-based datasets. Graphs are becoming increasingly popular in data-heavy websites because they are a more efficient and flexible way of transferring data. Compared to REST services, a GraphQL client can explicitly request specific parts of the dataset, which web developers value as it allows more efficient data transfer and faster, more flexible development.

Web scraping shares the same benefits: by trimming our queries we can scrape only the details we need, which saves scraping resources and bandwidth.

Some examples of GraphQL-powered websites are https://www.walmart.com/, https://www.airbnb.com/, https://www.coursera.org/ and https://opensea.io/. For more, see the official user list at https://graphql.org/users/.

Scraping GraphQL-powered websites is often a bit harder as the data protocol is more complex and can be difficult to understand and replicate. So let's take a look at what makes the GraphQL protocol so special and how we can take advantage of it in web scraping.

Understanding GraphQL

To successfully scrape GraphQL-powered websites we need to understand two things: a bit of the GraphQL query language and a bit of website reverse engineering. Let's start off with GraphQL basics and how we can apply them in Python.

GraphQL clients usually transmit queries as JSON documents which consist of up to three keys: the mandatory query and the optional operationName and variables:

    {
      "operationName": "QueryName",
      "query": "query QueryName($variable: VariableType) {
        field1
        field2
        fieldObject {
          field3
          field4
        }
      }",
      "variables": {
        "variable": "europe"
      }
    }

The query key must contain a valid GraphQL query, which uses a rather simple function-like syntax. The values in parentheses declare variables, which are optional (e.g. MyQuery($some_number: Float)), and the values in curly brackets indicate which fields we want returned.
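The JSON document above is spread over several lines for readability; on the wire the query travels as a single JSON string. Here is a minimal sketch of assembling such a payload in Python. `build_payload` is a hypothetical helper name, and the field names and the `String` variable type are placeholders rather than part of any real schema:

```python
import json


def build_payload(query, variables=None, operation_name=None):
    """Assemble a GraphQL request body; only "query" is mandatory."""
    payload = {"query": query}
    if variables is not None:
        payload["variables"] = variables
    if operation_name is not None:
        payload["operationName"] = operation_name
    return payload


# placeholder query: field1/field2 and the String type are illustrative only
query = """
query QueryName($variable: String) {
  field1
  field2
}
"""

payload = build_payload(query, {"variable": "europe"}, "QueryName")
# json.dumps escapes the newlines, so the whole query becomes one JSON string
body = json.dumps(payload)
print(body)
```

Leaving out `variables` and `operationName` produces the smallest valid payload, which contains only the `query` key.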

Let's give it a go with an example GraphQL demo server which contains some made-up products. For this, let's fire up Docker and start a simple demo server:

$ docker run -p 8000:8000 scrapecrow/graphql-products-example:latest

Running strawberry on `http://0.0.0.0:8000/graphql`

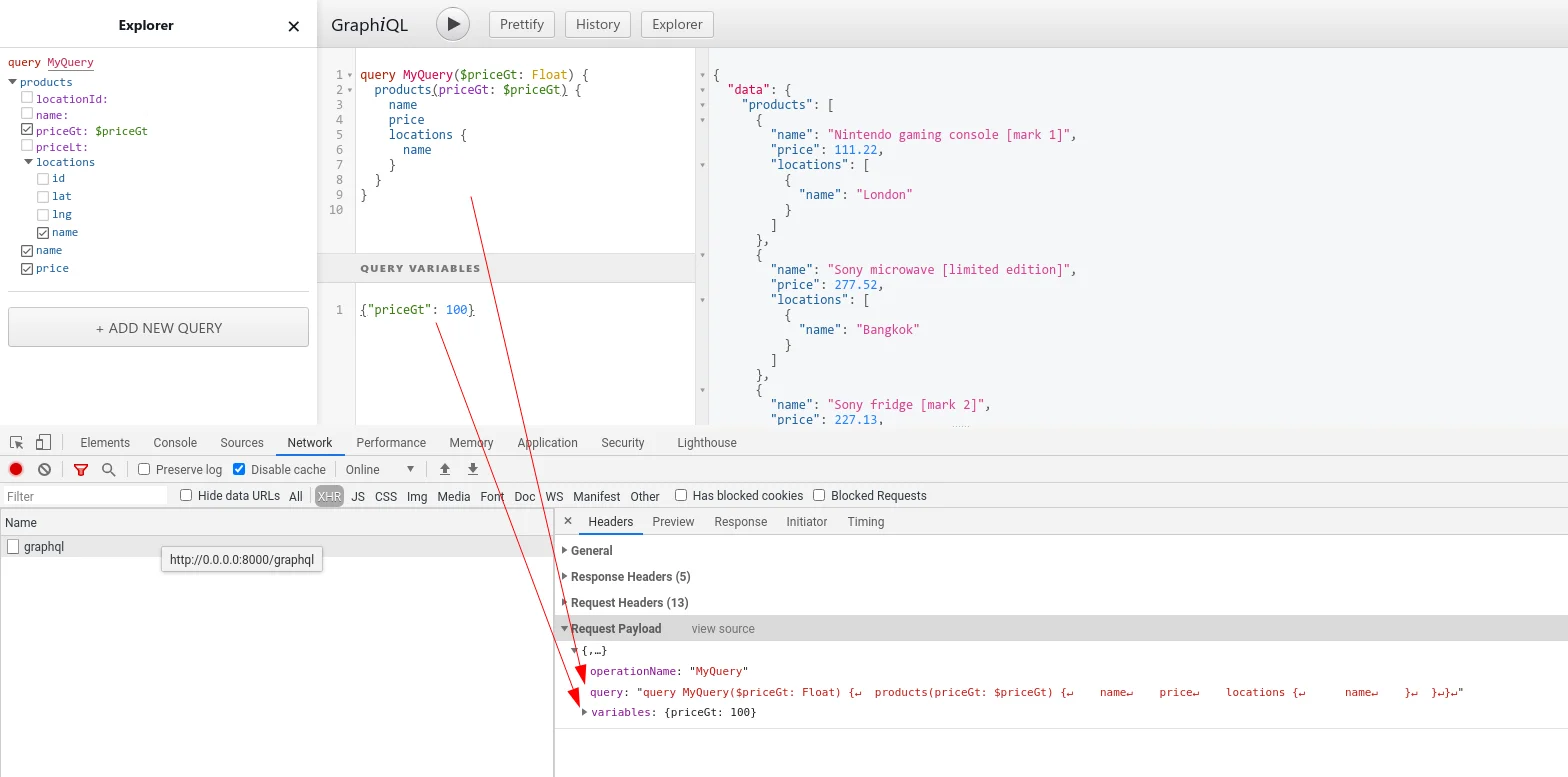

If we open up the debug studio link (http://0.0.0.0:8000/graphql) in our browser we can use the front end to help us build some queries for this demo dataset. Let's start with a products query which returns all products with a price greater than 100:

    # define query
    query MyQuery($priceGt: Float) {
      # we can only run queries the server supports; in this example the server
      # has a "products" query which can take many different filter parameters
      products(priceGt: $priceGt) {
        # here we define which fields we want returned
        name
        price
        # take note that "object" fields need to be further expanded to include which sub-fields to retrieve
        locations {
          name
        }
      }
    }

Here we built a very simple query to get each product's name, price and location details. If we open our browser's network inspector (F12 in Chrome, then select the Network tab) and take a look at what happens when we send the query, we can see that a simple JSON request is being made:

The browser client sends a request with our query and variables as a JSON document to the server, which returns a JSON document with query results or errors. Pretty straightforward!

Let's replicate this request in Python to fully grasp GraphQL client behavior (we'll be using the requests package):

    import json

    import requests

    # the same query we built in the debug studio
    query = """
    query MyQuery($priceGt: Float) {
      products(priceGt: $priceGt) {
        name
        price
        locations {
          name
        }
      }
    }
    """

    # request body: the query text, its variables and the operation name
    json_data = {
        'query': query,
        'variables': {
            'priceGt': 100,
        },
        'operationName': 'MyQuery',
    }

    response = requests.post('http://0.0.0.0:8000/graphql', json=json_data)
    print(json.dumps(response.json(), indent=2))

If we run this script, it'll print out the same JSON results we see in the debug front-end:

    {
      "data": {
        "products": [
          {
            "name": "Nintendo gaming console [mark 1]",
            "price": 111.22,
            "locations": [
              {
                "name": "London"
              }
            ]
          },
          {
            "name": "Sony microwave [limited edition]",
            "price": 277.52,
            "locations": [
              {
                "name": "Bangkok"
              }
            ]
          }
          ...
        ]
      }
    }

So to summarize, we can send GraphQL queries as JSON POST requests which must contain the query key in their payload.
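Since the server answers with either query results or errors, a scraper should check for both. GraphQL servers commonly report failures in a top-level `errors` list, often alongside an HTTP 200 status. Here is a minimal sketch of handling that. `GraphQLError` and `extract_data` are hypothetical names, and the failing response is a made-up sample rather than real output from the demo server:

```python
class GraphQLError(Exception):
    """Raised when a GraphQL response carries an "errors" list."""


def extract_data(response_json):
    """Return the "data" part of a parsed GraphQL response or raise on errors."""
    errors = response_json.get("errors")
    if errors:
        messages = "; ".join(e.get("message", "unknown error") for e in errors)
        raise GraphQLError(messages)
    return response_json.get("data")


# successful response, trimmed from the demo output shown earlier
ok = {"data": {"products": [{"name": "Nintendo gaming console [mark 1]", "price": 111.22}]}}
print(extract_data(ok)["products"][0]["name"])

# made-up failing response, shaped like a typical GraphQL error document
failed = {"data": None, "errors": [{"message": "Cannot query field 'nam' on type 'Product'."}]}
try:
    extract_data(failed)
except GraphQLError as exc:
    print(f"query failed: {exc}")
```

In a scraper this would wrap `response.json()` from the `requests.post` call, so a rejected query fails loudly instead of silently yielding empty results.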

For more on GraphQL query building see the extensive official tutorial; we recommend the Fields section in particular.

Scraping GraphQL

Now that we understand GraphQL and how to make simple GraphQL requests, let's take a look at how it all works in the context of web scraping.

Before we can send GraphQL queries to our scrape target we first need to find out which queries, fields and variables are available. There are two ways of doing this: introspection and reverse engineering.

Introspecting GraphQL

Introspection is a nifty GraphQL feature that tells the client about the available graph dataset: what queries are supported, what fields the dataset objects contain and what variables can be provided for data filtering.

Unfortunately, many public servers have GraphQL introspection disabled to prevent scraping or database attacks. However, we can still try!

There are a few simple ways to perform GraphQL introspection. The first is to run a GraphQL query that refers to the special __schema field:

    import json

    import requests

    query = """
    query IntrospectionQuery {
      __schema {
        queryType { name }
        mutationType { name }
        subscriptionType { name }
        types {
          ...FullType
        }
        directives {
          name
          description
          locations
          args {
            ...InputValue
          }
        }
      }
    }

    fragment FullType on __Type {
      kind
      name
      description
      fields(includeDeprecated: true) {
        name
        description
        args {
          ...InputValue
        }
        type {
          ...TypeRef
        }
        isDeprecated
        deprecationReason
      }
      inputFields {
        ...InputValue
      }
      interfaces {
        ...TypeRef
      }
      enumValues(includeDeprecated: true) {
        name
        description
        isDeprecated
        deprecationReason
      }
      possibleTypes {
        ...TypeRef
      }
    }

    fragment InputValue on __InputValue {
      name
      description
      type { ...TypeRef }
      defaultValue
    }

    fragment TypeRef on __Type {
      kind
      name
      ofType {
        kind
        name
        ofType {
          kind
          name
          ofType {
            kind
            name
            ofType {
              kind
              name
              ofType {
                kind
                name
                ofType {
                  kind
                  name
                  ofType {
                    kind
                    name
                  }
                }
              }
            }
          }
        }
      }
    }
    """

    json_data = {
        'query': query,
    }

    response = requests.post('http://0.0.0.0:8000/graphql', json=json_data)
    print(json.dumps(response.json(), indent=2))

This query will return the schema of the dataset in JSON format, which contains information about available queries, objects and fields.
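The schema JSON can be summarized with a few lines of code before reaching for any tooling. Here is a minimal sketch that lists the root queries and their argument names from a parsed introspection response. `list_queries` is a hypothetical helper, and `sample` is a trimmed, hand-written stand-in shaped like the response rather than real server output:

```python
def list_queries(introspection_json):
    """Map each root query name to its argument names."""
    schema = (introspection_json.get("data") or {}).get("__schema")
    if not schema:
        # no schema in the response usually means introspection is disabled
        return {}
    query_type_name = schema["queryType"]["name"]
    for type_ in schema["types"]:
        if type_["name"] == query_type_name:
            return {
                field["name"]: [arg["name"] for arg in field.get("args") or []]
                for field in type_.get("fields") or []
            }
    return {}


# hand-written sample shaped like an introspection response
sample = {
    "data": {
        "__schema": {
            "queryType": {"name": "Query"},
            "types": [
                {
                    "kind": "OBJECT",
                    "name": "Query",
                    "fields": [
                        {"name": "products", "args": [{"name": "priceGt"}]},
                    ],
                },
                {"kind": "SCALAR", "name": "Float", "fields": None},
            ],
        }
    }
}
print(list_queries(sample))
```

An empty result is a hint that the server refused introspection, in which case the queries have to be reverse engineered from browser traffic instead.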

However, reading JSON schemas can be a daunting task for humans, so instead we can use an introspection GUI tool which greatly simplifies this process. Let's take a look at how we can use a GraphQL development GUI to craft code for web scraping.

Introspecting With Apollo Studio

A popular introspection tool is provided by Apollo, a major GraphQL platform. This platform is often encountered in web scraping, so we can use its own studio tool to reverse engineer GraphQL queries.

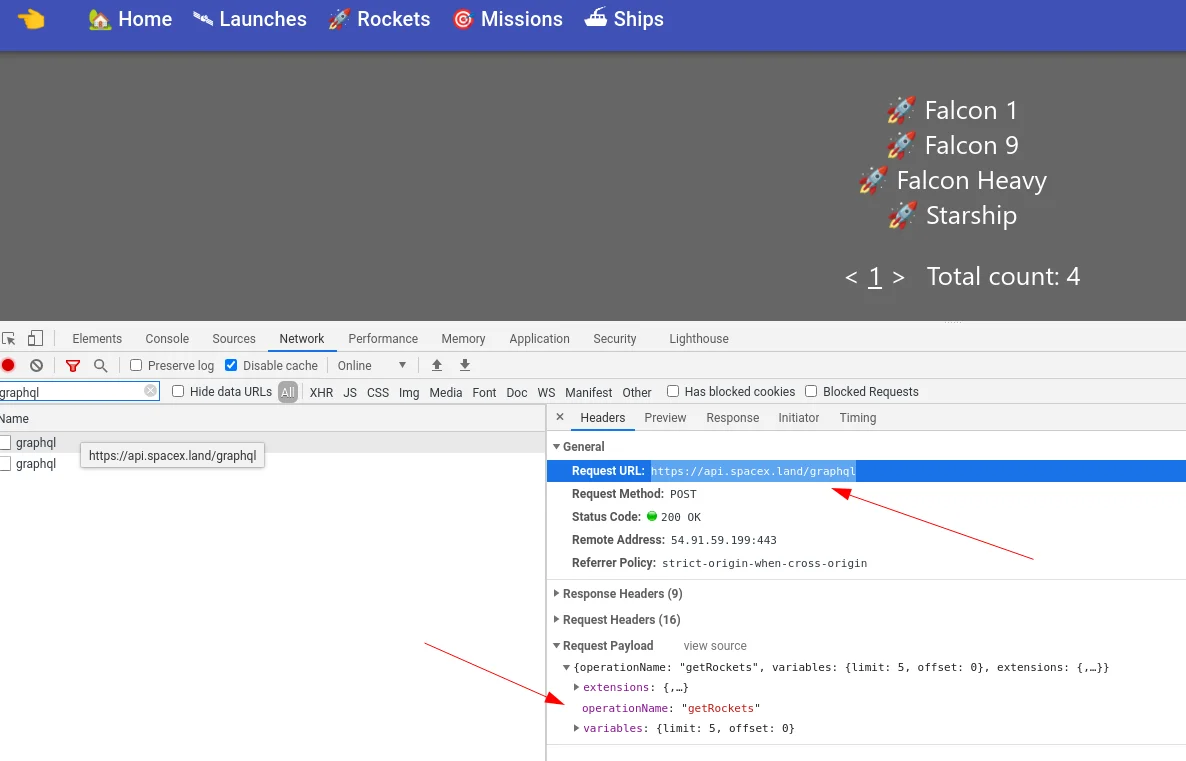

In this example we'll use SpaceX's open GraphQL database: https://spacex.land (the rockets section). Let's go to this page and see what happens in our web inspector:

Here, we see that a GraphQL request is being made to SpaceX's GraphQL server: https://api.spacex.land/graphql

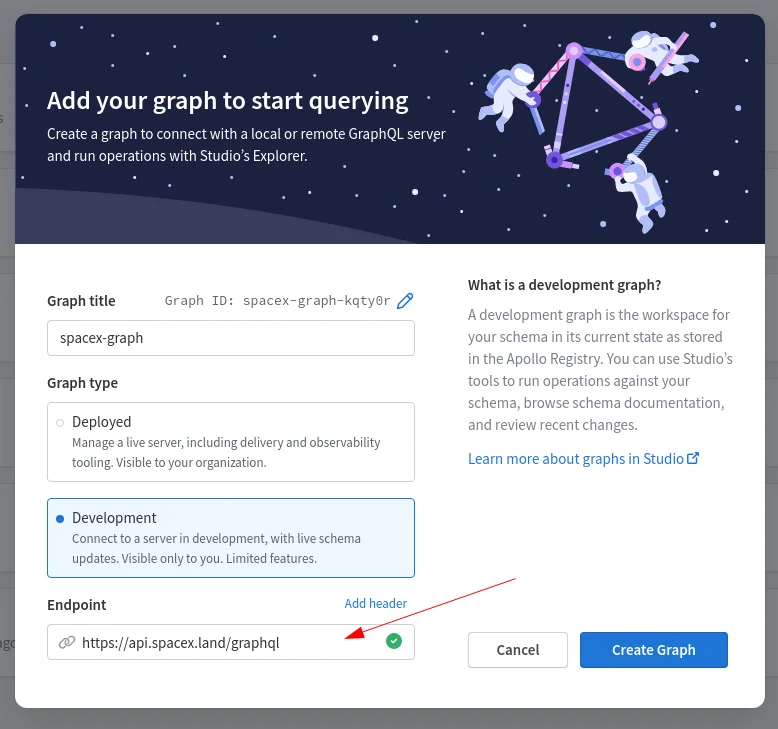

Let's try to introspect it with Apollo Studio by going to https://studio.apollographql.com/ and creating a new graph project:

Apollo Studio will only work with GraphQL servers that have introspection enabled, and often some extra headers like API tokens or secret keys might need to be added (using the 'Add header' button).

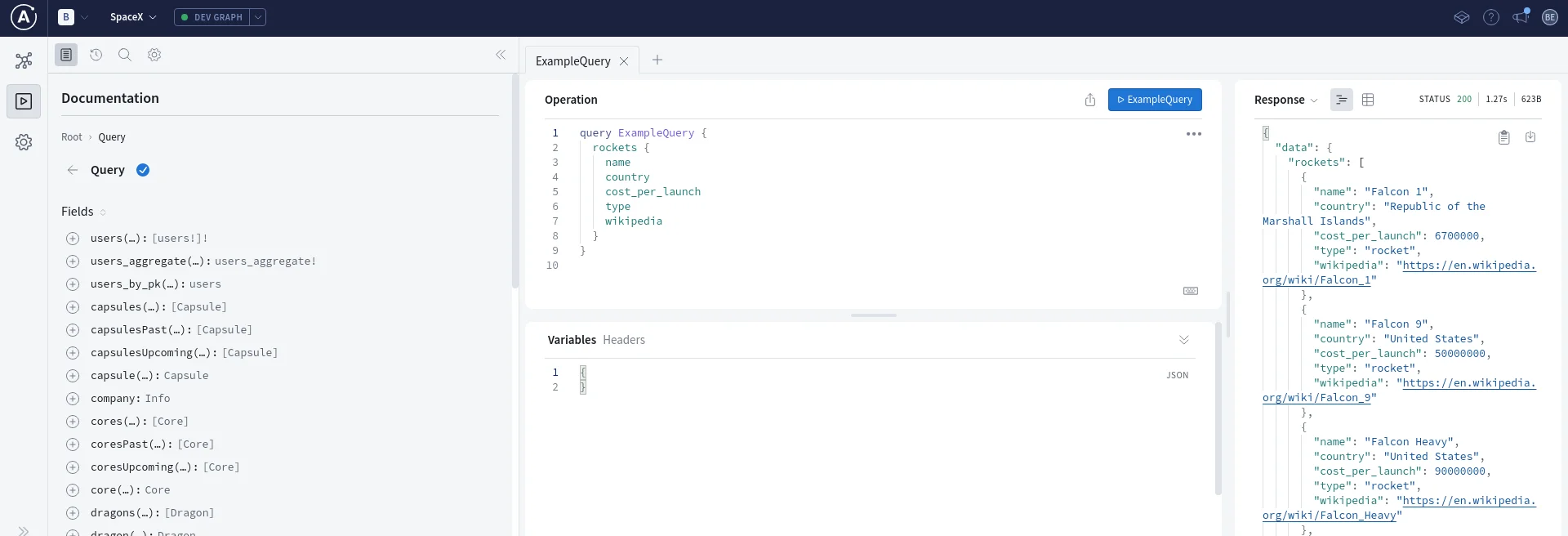

If introspection is possible, Apollo Studio will provide us with a convenient GraphQL debugging environment where we can build and test our queries:

Once we're happy with our queries we can replicate them in our web scraper just as we did in the previous section:

    # scrape all SpaceX launch details
    import json

    import requests

    query = """
    query Launches {
      launches {
        launch_date_utc
        mission_name
        mission_id
        rocket {
          rocket {
            id
            name
            company
          }
        }
        details
        links {
          article_link
        }
      }
    }
    """

    json_data = {
        'query': query,
    }

    response = requests.post('https://api.spacex.land/graphql', json=json_data)
    print(json.dumps(response.json(), indent=2))

With the code above we can retrieve all of the launch data in a single request! GraphQL servers are pretty powerful and often allow retrieving big chunks of the dataset without pagination, making web scraper logic much simpler and easier to maintain.
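Because the whole dataset arrives as one nested document, the remaining scraper logic is mostly reshaping. Here is a minimal sketch of flattening the Launches response into rows. `flatten_launches` is a hypothetical helper, and the sample record uses made-up placeholder values rather than real API data:

```python
def flatten_launches(response_json):
    """Turn the nested Launches response into a list of flat dictionaries."""
    launches = (response_json.get("data") or {}).get("launches") or []
    rows = []
    for launch in launches:
        # nested objects may be null in the response, so fall back to empty dicts
        rocket = (launch.get("rocket") or {}).get("rocket") or {}
        links = launch.get("links") or {}
        rows.append({
            "mission_name": launch.get("mission_name"),
            "launch_date_utc": launch.get("launch_date_utc"),
            "rocket_name": rocket.get("name"),
            "article_link": links.get("article_link"),
        })
    return rows


# placeholder record shaped like the query result above (values are made up)
sample = {
    "data": {
        "launches": [
            {
                "launch_date_utc": "2020-01-01T00:00:00.000Z",
                "mission_name": "Example Mission",
                "mission_id": [],
                "rocket": {"rocket": {"id": "example", "name": "Example Rocket", "company": "SpaceX"}},
                "details": None,
                "links": {"article_link": None},
            }
        ]
    }
}
for row in flatten_launches(sample):
    print(row)
```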

Challenges

Unfortunately, GraphQL introspection is rarely accessible publicly, so to build GraphQL queries for web scraping we need to employ a bit of reverse engineering. This often involves some common challenges:

- Locked Queries.

To protect themselves from web scraping, websites often only respond to known queries. In other words, a query hash is sometimes provided in the request header or body, or the query is checked by the server before evaluation. This means we can only send whitelisted queries, so it's important that our web scraper code replicates the queries we see in the web inspector when analyzing the website.

- Headers are Important.

Another form of protection is header locking. Websites often use various secret tokens (headers like Authorization and X- prefixed headers should be looked at first) to validate that the query is coming from the website's JavaScript client and not some web scraper. Fortunately, most of these keys usually reside in the HTML body of the web page and can be easily found and added to web scraper code.
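One widespread form of the locked queries described above is Apollo's persisted queries convention, where the request body carries a SHA-256 hash of the query in an `extensions.persistedQuery` object instead of the query text. The sketch below assumes the target follows that convention, which not every website does. The helper names are hypothetical, and in practice it is safest to copy the hash straight from the web inspector, since any whitespace difference changes it:

```python
import hashlib
import json


def sha256_of(query):
    """Hex SHA-256 digest of the exact query text."""
    return hashlib.sha256(query.encode("utf-8")).hexdigest()


def persisted_query_payload(operation_name, variables, sha256_hash):
    """Build a request body that references a query by hash instead of text."""
    return {
        "operationName": operation_name,
        "variables": variables,
        "extensions": {
            "persistedQuery": {"version": 1, "sha256Hash": sha256_hash},
        },
    }


# illustrative query text; a real scraper would reuse the hash seen in the browser
query = "query MyQuery($priceGt: Float) { products(priceGt: $priceGt) { name price } }"
payload = persisted_query_payload("MyQuery", {"priceGt": 100}, sha256_of(query))
print(json.dumps(payload, indent=2))
```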

To summarize, when web scraping GraphQL it's best practice to replicate the exact behavior of the web browser first; the queries can then be adjusted carefully to optimize web scraping.
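Replicating the browser often starts with pulling a secret token out of the page HTML and sending it back as a header. Here is a minimal sketch of that step. The `apiToken` key, the `X-Api-Token` header and the sample HTML are all hypothetical; the real names have to be read from the target's requests in the web inspector:

```python
import re


def find_token(html, key="apiToken"):
    """Find a quoted JSON-style value for `key` embedded in page HTML."""
    pattern = r'"' + re.escape(key) + r'"\s*:\s*"([^"]+)"'
    match = re.search(pattern, html)
    return match.group(1) if match else None


# made-up page fragment with a token embedded in an inline script
sample_html = '<script>window.__CONFIG__ = {"apiToken": "abc123", "env": "prod"};</script>'

token = find_token(sample_html)
# hypothetical header name; copy the actual one from the browser's request
headers = {"Content-Type": "application/json", "X-Api-Token": token}
print(headers)
```

The resulting dictionary can be passed as the `headers` argument of `requests.post` together with the GraphQL payload.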

GraphQL in ScrapFly

Despite being an open and efficient query protocol, GraphQL endpoints are often protected by various anti web scraping measures and this is where ScrapFly can lend a hand!

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

To replicate our SpaceX example in ScrapFly we can use any of these features via ScrapFly's Python SDK. For example, we can send GraphQL POST requests with the anti scraping protection solution enabled:

    import json

    from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse

    scrapfly = ScrapflyClient(key="YOUR API KEY")

    query = """
    query Launches {
      launches {
        launch_date_utc
        mission_name
        mission_id
        rocket {
          rocket {
            id
            name
            company
          }
        }
        details
        links {
          article_link
        }
      }
    }
    """

    json_data = {
        'query': query,
    }

    result: ScrapeApiResponse = scrapfly.scrape(
        ScrapeConfig(
            url="https://api.spacex.land/graphql",
            method="POST",
            data=json_data,
            headers={'content-type': 'application/json'},
            # we can enable anti bot protection solution:
            asp=True,
        ),
    )

    data = json.loads(result.scrape_result['content'])
    print(data)

With asp=True enabled we use ScrapFly to get around anti scraping protection blockers. However, we can go even further and ignore GraphQL entirely by using JavaScript rendering:

    from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse
    from parsel import Selector

    scrapfly = ScrapflyClient(key="YOUR API KEY")

    result: ScrapeApiResponse = scrapfly.scrape(
        ScrapeConfig(
            url="https://spacex.land/launches",
            render_js=True,
        ),
    )

    # parse the rendered HTML and print every launch link found on the page
    tree = Selector(text=result.scrape_result['content'])
    for launch in tree.xpath('//div/a[contains(@href, "launches/")]'):
        name = ''.join(launch.xpath('.//text()').getall())
        url = launch.xpath('@href').get()
        print(f"launch: {name} at {url}")

With the render_js=True argument we can scrape websites using browser automation, meaning GraphQL data is rendered just like it is in the browser. This allows us to focus on web scraping without needing to worry about reverse engineering the GraphQL functionality of the website!

Summary

In this introductory tutorial we familiarized ourselves with web scraping GraphQL. We took a basic dive into the query language itself and how we can execute it in Python. We also covered how to use various introspection tools to analyze a website's GraphQL capabilities, and some challenges GraphQL scrapers face.

GraphQL is becoming an increasingly popular option for dynamically loading data and with a bit of effort it's just as scrapable as any other dynamic data technology!