One of the key challenges in web scraping in 2024 is scraper blocking, and the most common way to address it is to route scraper traffic through proxies.

A web scraping proxy can be used to mask a web scraper's origin IP address to avoid IP-based blocking, or to access websites that are only available in specific countries.
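As a minimal sketch of the idea, the snippet below routes requests through a proxy using Python's standard-library `urllib.request.ProxyHandler`. The proxy URL, credentials and host are hypothetical placeholders; providers differ in how they expose endpoints and authentication, so check your provider's documentation for the real values.

```python
import urllib.request

# Hypothetical proxy endpoint: replace host, port and credentials with the
# values from your own proxy provider.
PROXY_URL = "http://user:password@proxy.example.com:8000"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends both HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)

# With a working proxy, the target site sees the proxy's IP instead of ours:
# with opener.open("https://httpbin.org/ip", timeout=10) as response:
#     print(response.read().decode())
```

The request itself is commented out so the sketch runs without a live proxy; uncommenting it against a real endpoint should print the proxy's exit IP rather than your own.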

In this article, we'll overview and compare several web scraping proxy providers from a scraper's point of view. We'll also cover how to pick the right provider for your scraper and what common challenges and issues to watch out for.


Quick Proxy Type Overview

Before we start evaluating popular web scraping proxy services, let's do a quick overview of proxy types used in web scraping:

Datacenter Proxies

This is the simplest type of proxy, usually hosted on servers in large data centers. Unfortunately, datacenter proxies are easy to detect, as real people rarely browse the web from data centers.

Residential Proxies (Rotating Residential Proxies)

These IP addresses are assigned to real households and are often sourced by renting them from real individuals. Unlike datacenter proxies, residential proxies blend in with regular traffic much more easily, especially when combined with rotation. However, it's difficult to keep the same IP address for a long session when using residential proxies.

ISP Proxies (Static Residential Proxies)

These proxies combine datacenter stability with residential proxy quality. ISP proxies are residential IP addresses issued to small data centers.

Mobile Proxies (Rotating Mobile Proxies)

These IP addresses are issued to mobile cell towers and the 3G/4G/5G phones that connect through them. Just like residential proxies, they are great for avoiding blocking, but they are even less stable.

What Makes Good Web Scraping Proxies?

To determine the best web scraping proxy service, we'll establish our evaluation methodology first.

Not all scraping proxies are equal. Even proxies with the same specifications, such as the same proxy type (datacenter, residential, or mobile), can perform very differently in real-life web scraping.

Besides raw performance tests, there are a few key points worth keeping an eye on when evaluating proxy quality. Let's take a brief look at each of them.

Proxy User Pool Sharing

Private proxies yield much better results than shared proxy pools, where several users often use the same IPs against the same targets. If your target is a popular web scraping target, you should avoid shared proxy pools.

Geographic Location of Proxies

In the context of web scraping blocking, US-based proxies tend to have the best quality rating. While some services claim to have thousands of IP addresses in their pool, most of them might be from low-quality regions with low success rates. So, when it comes to web scraping proxies, it's better to prioritize quality over quantity.

Real Specification

For peer-to-peer rotating residential and mobile proxies, a common issue is that the received proxies are not always actually residential or mobile. In our experience, the share of such mismatched IPs can range from 1% to 40%. So, it's essential to confirm the IP type before running your scraper, for example, by checking the "connection type" in ipleak.com results.

Concurrency Limit (aka Thread Limit)

In web scraping, this limit is a frequent source of stability issues. Well-written web scraping scripts can reach a proxy's concurrency limit pretty quickly, as it's often lower than advertised and hard to measure. So, check the concurrency limit before scaling up your scrapers.
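One way to stay under a provider's concurrency limit is to cap in-flight requests on the client side. The sketch below uses `asyncio.Semaphore` for this; `fetch` only simulates a proxied request with `asyncio.sleep`, and the limit of 10 is an assumed value you would replace with your plan's actual thread limit.

```python
import asyncio

# Assumed value: replace with the concurrency (thread) limit of your proxy plan.
CONCURRENCY_LIMIT = 10


async def fetch(url: str, slots: asyncio.Semaphore, stats: dict) -> str:
    # Wait for a free slot so no more than CONCURRENCY_LIMIT requests are in flight.
    async with slots:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        try:
            # Stand-in for a real HTTP request sent through the proxy.
            await asyncio.sleep(0.01)
            return url
        finally:
            stats["active"] -= 1


async def scrape_all(urls: list[str], limit: int) -> tuple[list[str], int]:
    slots = asyncio.Semaphore(limit)
    stats = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(fetch(url, slots, stats) for url in urls))
    return list(results), stats["peak"]


urls = [f"https://example.com/page/{i}" for i in range(50)]
results, peak = asyncio.run(scrape_all(urls, CONCURRENCY_LIMIT))
print(f"fetched {len(results)} pages, peak concurrency {peak}")
```

All 50 tasks are scheduled at once, but the semaphore keeps the number of simultaneous proxy connections at or below the limit, so the scraper degrades into queuing instead of tripping the provider's cap.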

Finally, since proxy providers usually serve proxies through a single backconnect proxy (a server that distributes proxies to clients), quality, speed, and stability can vary heavily between implementations. This can also make it harder for web scraping developers to implement custom, smarter proxy rotation logic, which can further reduce the chances of successful connections.
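When a provider does expose individual proxy endpoints, custom rotation logic can be layered on top of them. The following is a minimal sketch of one such strategy, failure-weighted random selection, in which proxies that fail more often are picked less often. The `ProxyPool` class and the proxy addresses are hypothetical, and this is only one of many possible rotation strategies.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ProxyPool:
    """Hypothetical pool that favors proxies with fewer recorded failures."""

    proxies: list[str]
    failures: dict[str, int] = field(default_factory=dict)
    rng: random.Random = field(default_factory=random.Random)

    def pick(self) -> str:
        # Weight each proxy by 1 / (1 + failures): a healthy proxy has weight 1.0,
        # while one with 9 recorded failures has weight 0.1.
        weights = [1.0 / (1 + self.failures.get(p, 0)) for p in self.proxies]
        return self.rng.choices(self.proxies, weights=weights, k=1)[0]

    def report_failure(self, proxy: str) -> None:
        self.failures[proxy] = self.failures.get(proxy, 0) + 1

    def report_success(self, proxy: str) -> None:
        # A successful request clears the proxy's failure history.
        self.failures.pop(proxy, None)


pool = ProxyPool(
    ["http://proxy-a.example:8000", "http://proxy-b.example:8000"],
    rng=random.Random(42),  # seeded only to make the demo reproducible
)
for _ in range(9):
    pool.report_failure("http://proxy-a.example:8000")

picks = [pool.pick() for _ in range(1_000)]
print(picks.count("http://proxy-a.example:8000"), picks.count("http://proxy-b.example:8000"))
```

Weighting rather than fully banning a failing proxy lets it recover naturally once it starts succeeding again, which suits pools where blocks are often temporary.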


Pricing Evaluation

Proxy services offer very different pricing options - some charge by proxy count, some by bandwidth usage, and some by combining both.

For web scraping, bandwidth-based proxies can grow the bill really quickly and should be avoided if possible. Let's take a look at some usage scenarios and how bandwidth-based pricing would scale:

| Target | Avg. document page size | Pages per 1GB (document) | Avg. browser page size | Pages per 1GB (browser) |
|---|---|---|---|---|
| Walmart.com | 16 KB | 1k - 60k | 1 - 4 MB | 200 - 2,000 |
| Indeed.com | 20 KB | 1k - 50k | 0.5 - 1 MB | 1,000 - 2,000 |
| LinkedIn.com | 35 KB | 300 - 30k | 1 - 2 MB | 500 - 1,000 |
| Airbnb.com | 35 KB | 30k | 0.5 - 4 MB | 250 - 2,000 |
| Target.com | 50 KB | 20k | 0.5 - 1 MB | 1,000 - 2,000 |
| Crunchbase.com | 50 KB | 20k | 0.5 - 1 MB | 1,000 - 2,000 |
| G2.com | 100 KB | 10k | 1 - 2 MB | 500 - 2,000 |
| Amazon.com | 200 KB | 5k | 2 - 4 MB | 250 - 500 |

The table above shows estimated bandwidth usage for several popular web scraping targets, both for plain document requests and for full browser page loads.

Note that the bandwidth used by web scrapers varies wildly based on the scraping target and the technique used. For example, reverse engineering website behavior and requesting only the necessary document details uses significantly less bandwidth. On the other hand, automated browser solutions like Puppeteer, Selenium or Playwright can consume much more bandwidth, so browser-based web scraping with bandwidth-based proxies can get very expensive.

Finally, all estimates should be at least doubled to account for retry logic and other usage overhead, such as session warm-up and request header preparation.

For example, let's say we have a $400/Mo plan that gives us 20GB of data. That would net us only ~50k Amazon product scrapes at best, and only a few thousand if we use a web browser with no special caching or optimization techniques.

Conversely, bandwidth proxies can work well with web scrapers that take advantage of AJAX/XHR requests.

For example, the same $400/Mo plan with 20GB of data would yield ~600k walmart.com product scrapes if we can reverse engineer Walmart's web page behavior, which is a much more reasonable approach!
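The arithmetic behind these estimates can be sketched as a small helper. It assumes decimal units (1GB = 1,000,000KB) and an overhead factor of 2 for retries and warm-up, as described above; `pages_per_plan` is a hypothetical name, and real usage will vary by target and technique.

```python
def pages_per_plan(plan_gb: float, page_kb: float, overhead_factor: float = 2.0) -> int:
    """Estimate how many pages a bandwidth-based proxy plan can fetch.

    Assumes decimal units (1GB = 1,000,000KB) and divides by overhead_factor
    to account for retries, session warm-up and other usage overhead.
    """
    total_kb = plan_gb * 1_000_000
    return int(total_kb / page_kb / overhead_factor)


# 20GB plan, Amazon product documents (~200KB each)
print(pages_per_plan(20, 200))  # 50000
# 20GB plan, Walmart product documents (~16KB each)
print(pages_per_plan(20, 16))  # 625000
# 20GB plan, Amazon pages loaded in a browser (~4MB and ~2MB each)
print(pages_per_plan(20, 4_000), pages_per_plan(20, 2_000))  # 2500 5000
```

Plugging in the page sizes from the table above reproduces the rough Amazon and Walmart figures and shows how much the scraping technique alone moves the cost.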

Bandwidth-based proxies usually give access to big proxy pools, but web scrapers rarely need more than 100-1,000 proxies per project. For example, if each proxy handles 30 req/minute and we want to scrape a website at 5,000 req/minute, we only need 167 rotating proxies!
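The proxy count estimate is a simple division rounded up to whole proxies. A minimal sketch, with `proxies_needed` as a hypothetical helper name and the per-proxy request rate assumed to be constant:

```python
import math


def proxies_needed(target_rpm: float, per_proxy_rpm: float) -> int:
    # Round up: any leftover fraction of traffic still needs one more whole proxy.
    return math.ceil(target_rpm / per_proxy_rpm)


print(proxies_needed(5000, 30))  # 167
```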

Proxies with a count-based pricing model are often much safer and easier to work with. Buying a starter pool of private proxies (only accessible to a single client or a very small pool of clients) is an easier and safer commitment for proxy scraper projects.

Evaluation Table

In this web scraping proxy providers comparison, we'll be evaluating the providers from the point of view of ScrapFly's service. We'll cover the most important features used in web scraping, so our full evaluation table will look like this:

| Feature | Example Service |
|---|---|
| Datacenter Proxies | ✅ |
| Residential Proxies | ✅ |
| Mobile Proxies | ✅ |
| Geo Targeting | ✅ |
| Anti Bot Bypass | ✅ |
| Javascript Rendering | ✅ |
| Log Monitoring | ✅ |
| Price per GB | $1-25 |
| 50GB Project Estimated cost | $350/Mo |

We evaluate the supported proxy types (datacenter, residential, and mobile) and proxy features such as geo-targeting and anti-bot bypass. We'll also evaluate pricing based on the estimated monthly cost of a 50GB web scraping project.

Since we're evaluating from the point of view of ScrapFly users, let's look at what makes ScrapFly's proxies so special!

Proxies with ScrapFly

At ScrapFly, we understand that using proxies for web scraping can be complex, especially when balancing speed, stability, and cost. That's why we’re committed to simplifying your scraping stack while delivering powerful tools that scale with you.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale. Each product is equipped with an automatic bypass for any anti-bot system and we achieve this by:

- Maintaining a fleet of real, reinforced web browsers with real fingerprint profiles.

- Millions of self-healing proxies of the highest possible trust score.

- Constantly evolving and adapting to new anti-bot systems.

- We've been doing this publicly since 2020 with the best bypass on the market!

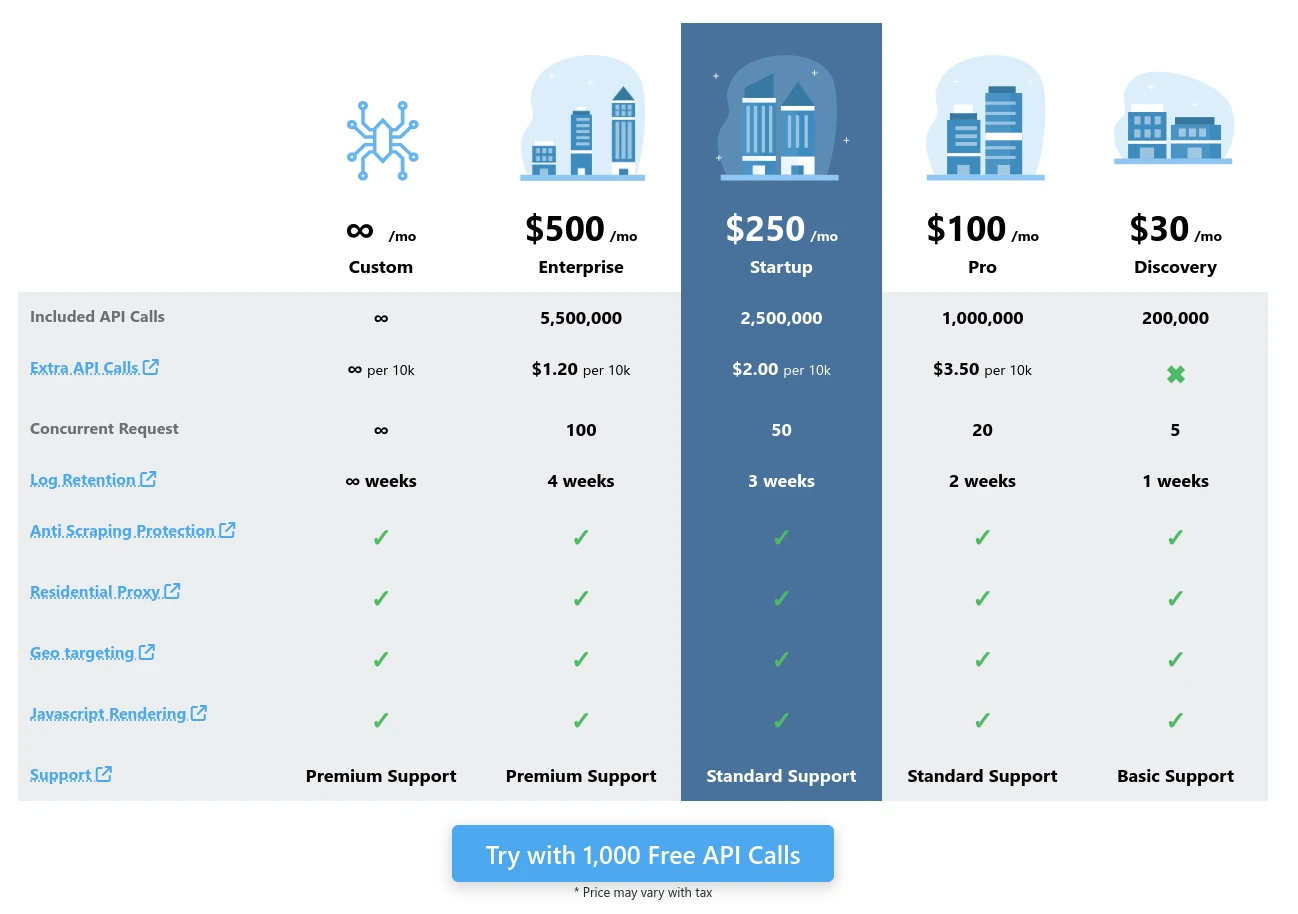

ScrapFly uses a credit-based pricing model that’s much more predictable than traditional bandwidth or proxy count billing. Our model scales with your usage, not your traffic volume, making it easy to adjust scrapers dynamically without worrying about cost spikes.

For instance, the popular $100/Mo tier can yield up to 1,000,000 target responses, depending on the features you enable:

- Choose between datacenter or residential proxies, including geo-targeting in 50+ countries.

- All HTTP/1.x requests are automatically upgraded to HTTP/2 for better performance and lower block rates.

- Built-in Anti Scraping Protection automatically handles CAPTCHAs and complex blocks, and is charged only when successful.

- Browser-based rendering mimics real users and returns what actual browsers see, simplifying scraping engineering efforts.

- Use Proxy Saver to boost the performance of your own proxies: lower bandwidth, fewer failures, and smarter delivery.

To explore these and other powerful capabilities, check out our full documentation!

Let’s see how ScrapFly with Proxy Saver stacks up in the evaluation table:

| Feature | ScrapFly (w/ Proxy Saver) |
|---|---|
| Datacenter Proxies | ✅ |
| Residential Proxies | ✅ |
| Mobile Proxies | ✅ |
| Geo Targeting | 54 countries |
| Anti Bot Bypass | ✅ |
| Javascript Rendering | ✅ |
| Log Monitoring | ✅ |
| Bandwidth Saver | ✅ (via Proxy Saver) |
| Smart Caching | ✅ (via Proxy Saver) |
| Protocol Support | HTTP, HTTPS, HTTP/2, SOCKS5 |
| Price per GB | per request |
| 50GB Project Estimated cost | $100/Mo |

Webshare Proxies

Webshare.io is one of the biggest general proxy providers. They offer a variety of services:

- Datacenter proxies

- Private datacenter proxies

- Residential proxies

- Static Residential Proxies (aka ISP proxies)

It is primarily known for offering unlimited-bandwidth datacenter proxies. These proxies can be great for bandwidth-intensive web scrapers that use headless browsers or download heavy files.

However, scrapers using datacenter proxies are very likely to get blocked, and Webshare's residential proxy plans are very much in line with the industry average, starting at $18.75/Mo per GB.

This puts our 50GB project scraper estimate at $480/Mo. Still, thanks to its unlimited-bandwidth datacenter proxies, Webshare remains a very attractive option for some web scraping niches.

Let's see how this would look on our evaluation table:

| Feature | Webshare |
|---|---|
| Datacenter Proxies | ✅ |
| Residential Proxies | ✅ |
| Mobile Proxies | ❌ |
| Geo Targeting | 1-25 countries |
| Anti Bot Bypass | ❌ |
| Javascript Rendering | ❌ |
| Log Monitoring | ❌ |
| Price per GB | $1-25 |
| 50GB Project Estimated cost | $480 |

Netnut Proxies

Netnut.io is another big proxy provider, which offers a variety of services:

- Datacenter proxies

- Rotating Residential Proxies

- Static Residential Proxies (aka ISP proxies)

- Mobile Proxies

It's primarily known for its mobile proxies, the best proxy type when it comes to avoiding blocking and catching time-limited internet deals (like sneaker sales). However, they are quite expensive, starting at $30/Mo per GB, and are not recommended for most web scraping projects.

Another popular feature is vast geo-targeting, as Netnut offers residential proxies from over 150 countries. This is great for broad web crawling projects that need to reach niche areas of the world.

However, Netnut's residential proxy offer is a bit more expensive than the industry average, starting at $20/Mo. This puts our 50GB project at $600/Mo.

Let's see how Netnut looks on our evaluation table:

| Feature | Netnut |
|---|---|
| Datacenter Proxies (50k) | ✅ |
| Residential Proxies (10-20M) | ✅ |
| Mobile Proxies | ✅ |
| Geo Targeting | 150 countries |
| Anti Bot Bypass | ❌ |
| Javascript Rendering | ❌ |
| Log Monitoring | ❌ |
| Price per GB | $1-30 |
| 50GB Project Estimated cost | $600 |

Soax Proxies

Soax.com is another big name in the proxy world. Soax offers a streamlined set of services:

- Residential proxies

- Mobile proxies

Soax is primarily known for its competitive prices. Its residential proxies are quite cheap, starting at $12/Mo per GB. This puts our 50GB project at $500/Mo, with 5GB to spare.

Soax's mobile proxies are in line with the industry average starting at $30/Mo per GB.

| Feature | Soax |
|---|---|
| Datacenter Proxies | ❌ |
| Residential Proxies (5M) | ✅ |
| Mobile Proxies | ✅ |
| Geo Targeting | 100+ countries |
| Anti Bot Bypass | ❌ |
| Javascript Rendering | ❌ |
| Log Monitoring | ❌ |
| Price per GB | $12 - 30 |
| 50GB Project Estimated cost | $500 |

Geosurf Proxies

Geosurf.com is another bandwidth tier-based residential proxy provider that has been in the proxy industry for over 10 years. It doesn't offer any particular breakthroughs.

Its offering is very similar to that of Soax.com; however, it is aimed more at enterprise-level users, with a higher minimum commitment but slightly better value. Let's see how it looks on our evaluation table:

| Feature | Geosurf |
|---|---|
| Datacenter Proxies | ❌ |
| Residential Proxies (2.5M) | ✅ |
| Mobile Proxies | ✅ |
| Geo Targeting | 135 countries + 1700 cities |
| Anti Bot Bypass | ❌ |
| Javascript Rendering | ❌ |
| Log Monitoring | ❌ |
| Price per GB | $8 - 15 |
| 50GB Project Estimated cost | $544 |

Unfortunately, Geosurf suffers from issues similar to those of Soax.com, making it a difficult choice for low- and mid-tier projects. However, Geosurf does offer unlimited* concurrency and proxy selection by city, which can come in handy for some niche web scrapers.

Web Scraping Proxies Summary

| Feature | ScrapFly | Webshare | Netnut | Soax | Geosurf |
|---|---|---|---|---|---|
| Datacenter Proxies | 3.4M | on demand | 50k shared | ❌ | ❌ |
| Residential Proxies | 190M | on demand | 10-20M | 5M | 2.5M |
| Geo Targeting (Countries) | 54 | 1-25 | 150 | 100 | 135 |
| Anti Bot Bypass | ✅ | ❌ | ❌ | ❌ | ❌ |
| Javascript Rendering | ✅ | ❌ | ❌ | ❌ | ❌ |
| Log Monitoring | ✅ | ❌ | ❌ | ❌ | ❌ |
| Price per GB | per request | $1-25 | $1-17.5 | $12 - 33 | $8 - 15 |
| Minimum Commitment (Monthly) | $15 | $15 | $20 | $99 | $300 |
| 50GB Project Estimated cost | $100 | $480 | $600 | $500 | $544 |

When it comes to web scraping proxies, classic proxy services are a tough sell. Even with recent advances in proxy quality, these services still fall short of dedicated web-scraping-as-a-service APIs, which can apply additional smart connection strategies to prevent CAPTCHAs, blocking, and throttling.

ScrapFly's combination of smart connection strategies and extra UX features like Javascript Rendering and Anti Bot Bypass can make even the hardest targets easily accessible while also simplifying the web scraping process!