Web Scraping with Scrapfly and Go

The Scrapfly Go SDK is powerful yet intuitive. On this onboarding page we'll take a look at how to install it and how to use it, along with some examples.

If you're not ready to code yet, check out Scrapfly's Visual API Player or the no-code Zapier integration.

SDK Setup

Install the SDK:

All SDK examples can be found in the SDK's GitHub repository:

github.com/scrapfly/go-scrapfly

Web Scraping API

In this section, we'll walk through the most important web scraping features step by step. After completing this walkthrough you should be proficient enough to scrape any website with Scrapfly, so let's dive in!

First Scrape

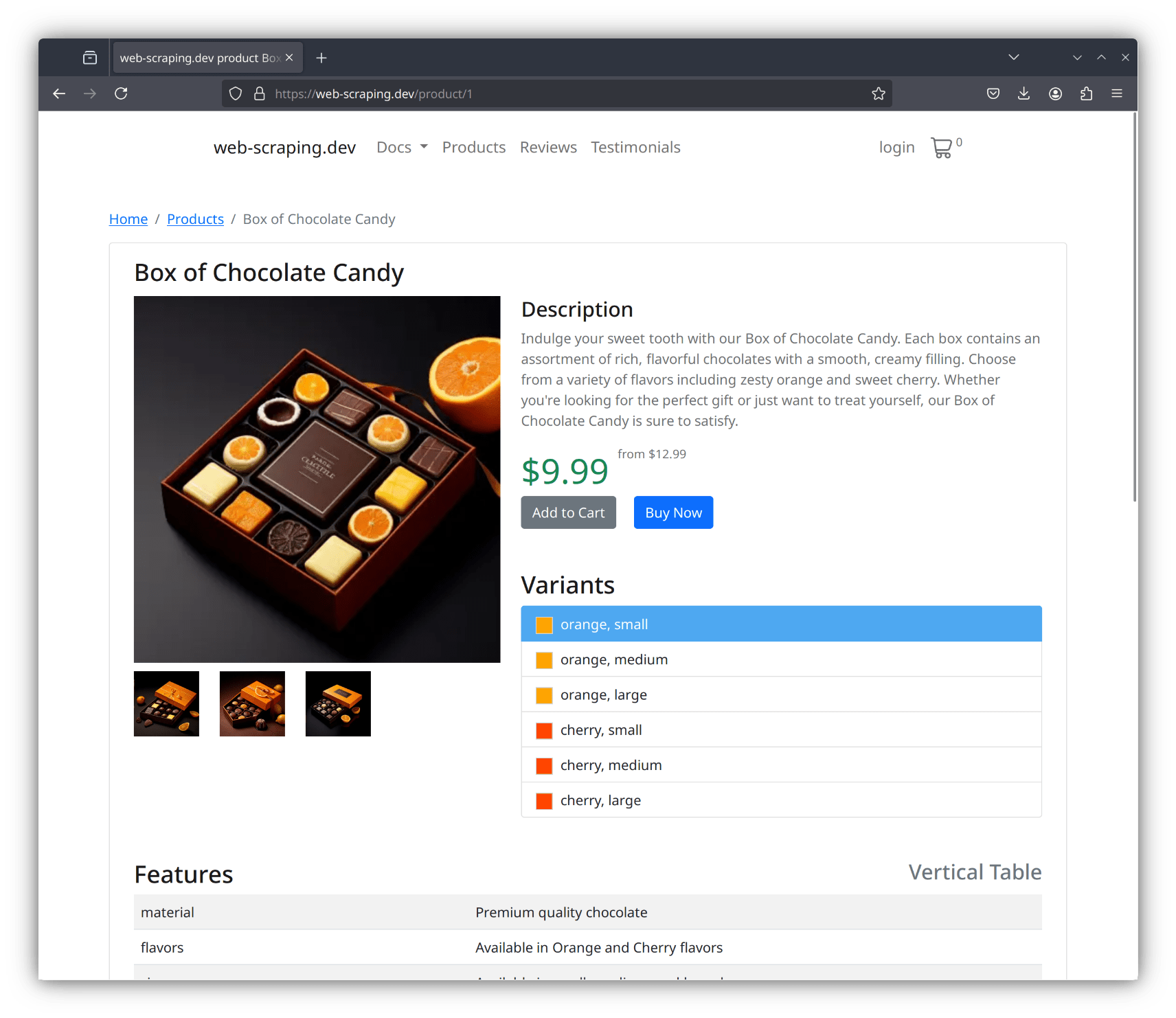

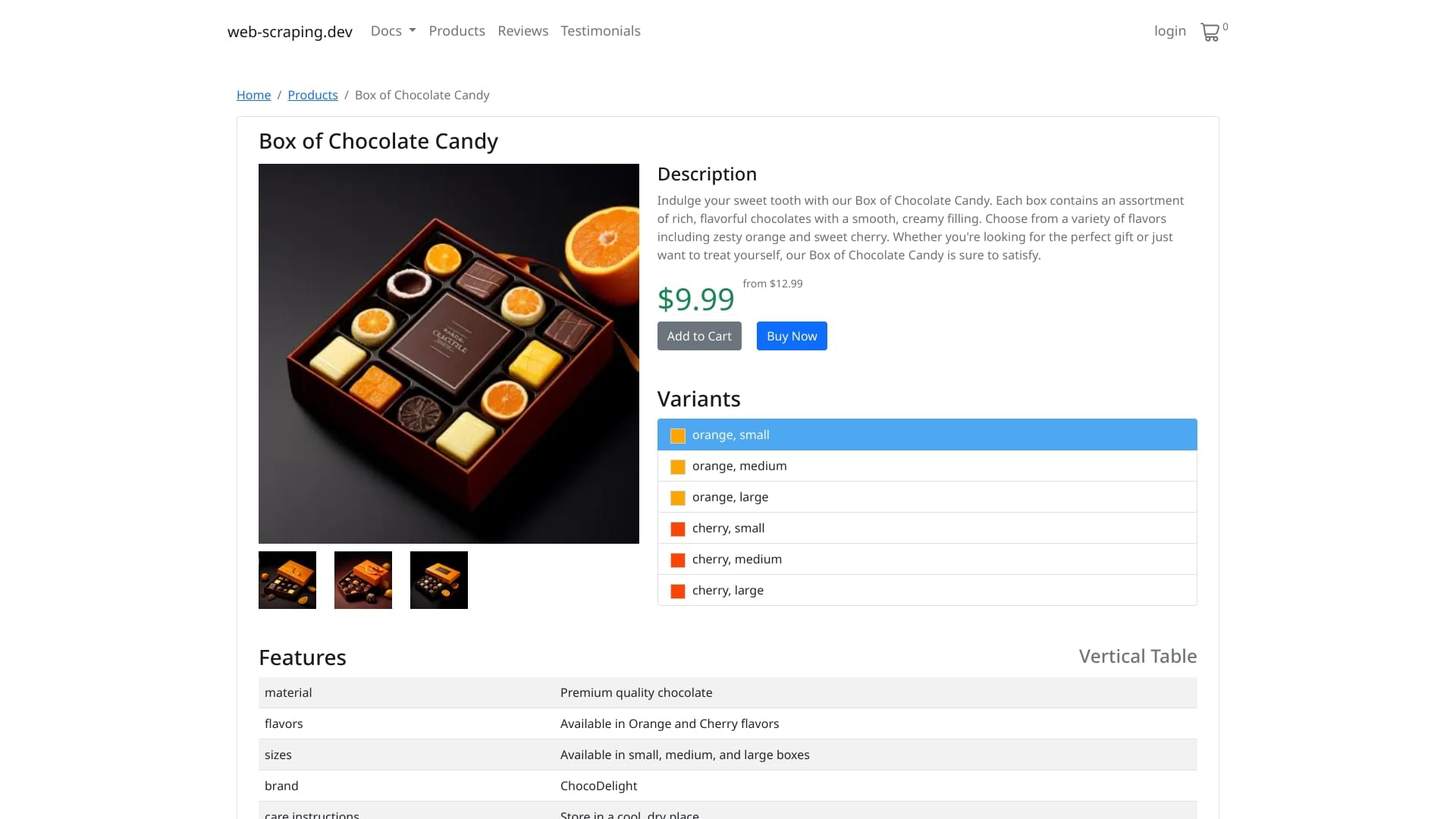

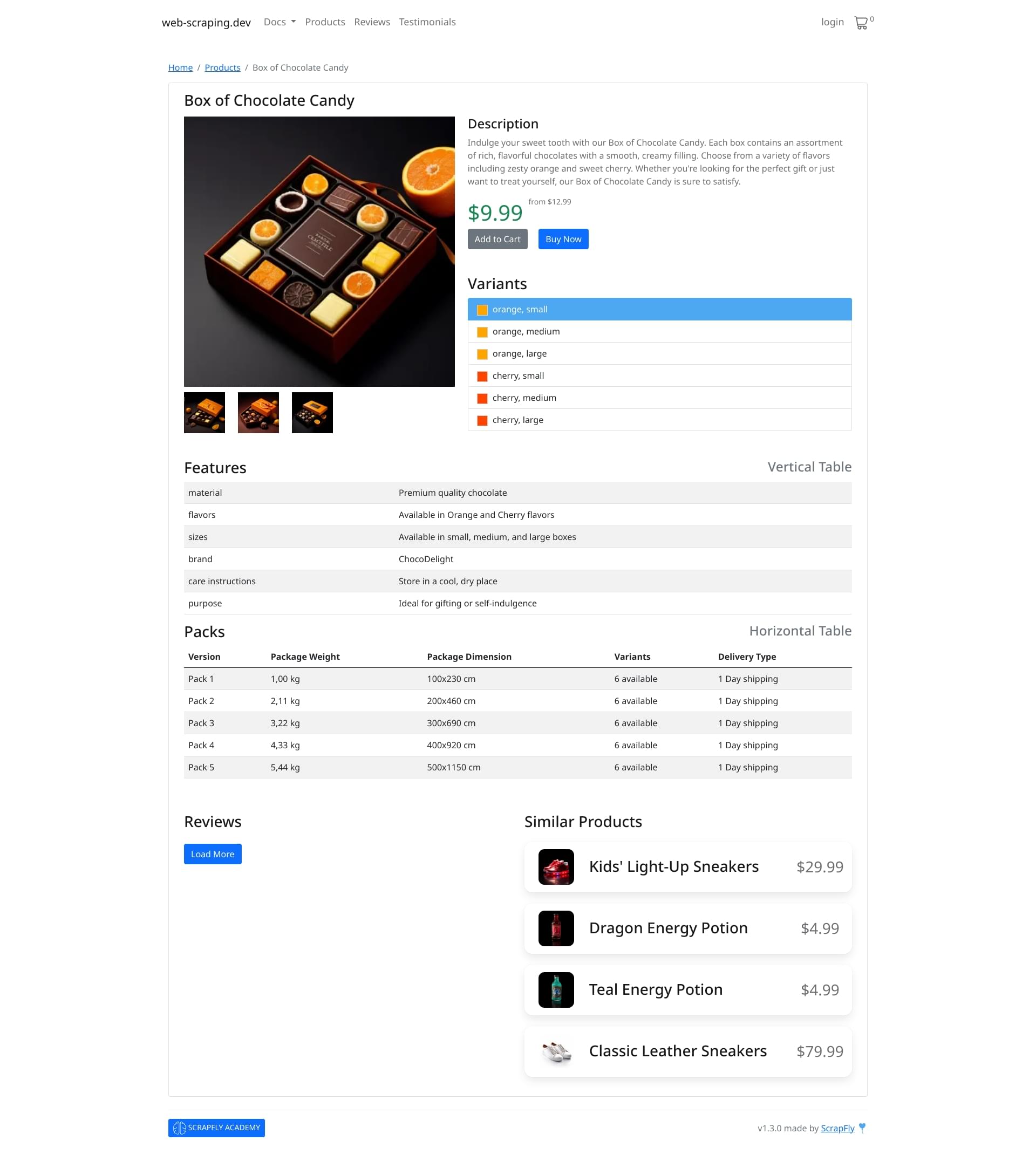

To start, let's take a look at a basic scrape of this simple product page: web-scraping.dev/product/1.

We'll scrape the page, see some optional parameters and then extract the product details using CSS selectors.

Here, we first asked the Scrapfly API to scrape the product page for us. Then, we used the Selector() helper to parse the product details using CSS selectors.

Request Customization

All SDK requests are configured through ScrapeConfig struct fields. Most fields mirror API parameters. For more information, see request customization.

Here's a quick demo example:

Using ScrapeConfig we can configure the outgoing scrape request and enable Scrapfly-specific features.
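To make the idea of fields mirroring API parameters more concrete, here is a minimal sketch that builds a scrape request URL using only Go's standard library. It does not use the SDK: the scrapeOptions struct and buildScrapeURL function are hypothetical names, and the https://api.scrapfly.io/scrape endpoint with its key, url, country and render_js query parameters is an assumption that should be checked against the API specification.

```go
package main

import (
	"fmt"
	"net/url"
)

// scrapeOptions is a hypothetical, local stand-in for a few request options.
// It is NOT the SDK's ScrapeConfig struct.
type scrapeOptions struct {
	URL      string // page to scrape
	Country  string // proxy country code; empty means "not set"
	RenderJS bool   // whether a real browser should render the page
}

// buildScrapeURL encodes the options as query parameters.
// The endpoint and parameter names are assumptions, not taken from the SDK.
func buildScrapeURL(apiKey string, opts scrapeOptions) string {
	q := url.Values{}
	q.Set("key", apiKey)
	q.Set("url", opts.URL)
	if opts.Country != "" {
		q.Set("country", opts.Country)
	}
	if opts.RenderJS {
		q.Set("render_js", "true")
	}
	// Encode sorts keys and escapes values, so the result is deterministic.
	return "https://api.scrapfly.io/scrape?" + q.Encode()
}

func main() {
	fmt.Println(buildScrapeURL("YOUR_API_KEY", scrapeOptions{
		URL:      "https://web-scraping.dev/product/1",
		Country:  "us",
		RenderJS: true,
	}))
}
```

Unset options are simply left out of the query string, which is one common way to let the server apply its own defaults.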

Developer Features

There are a few important developer features that can be enabled to make the onboarding process a bit easier.

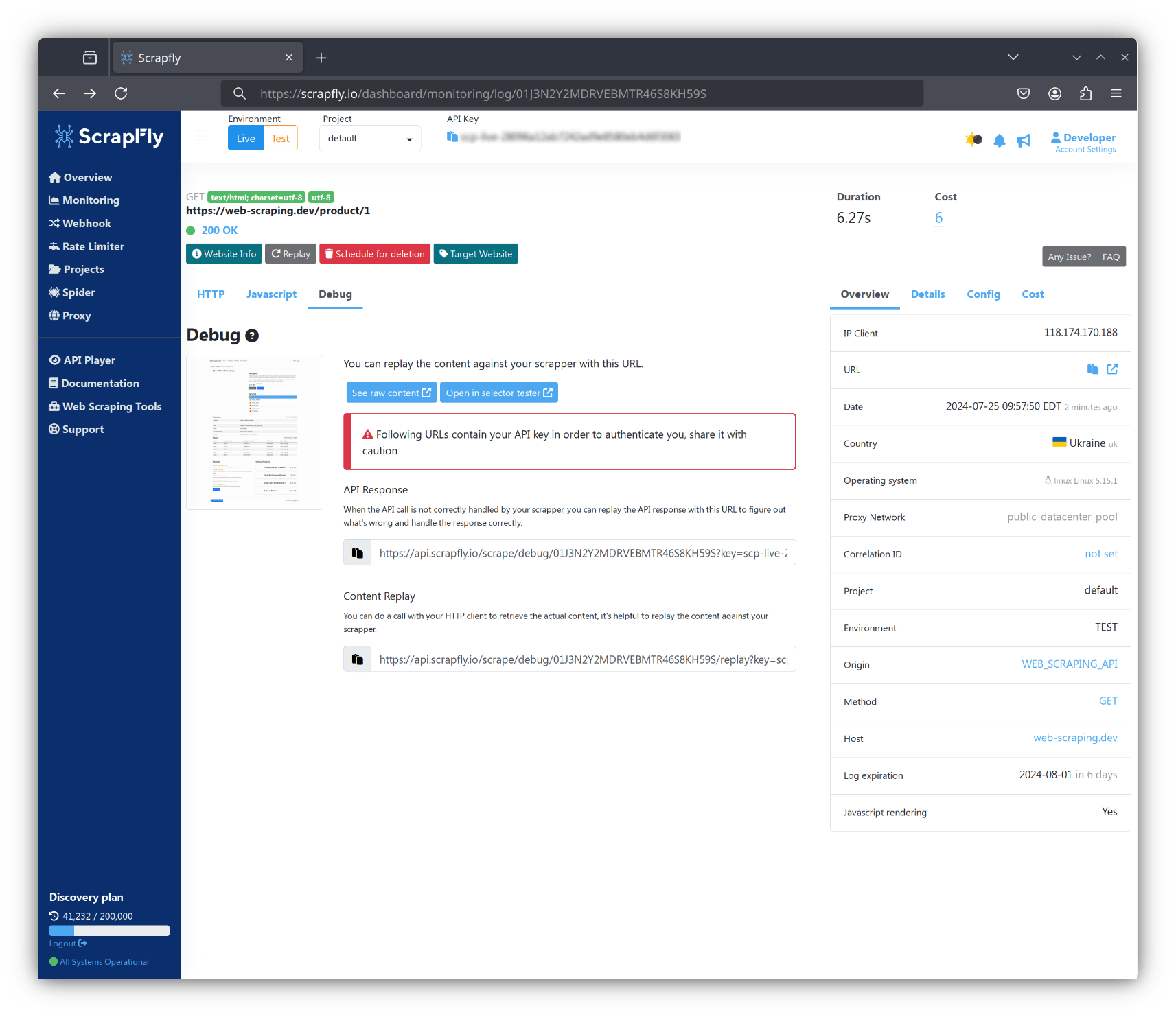

The debug parameter can be enabled to produce more details in the web log output and the cache parameters are great for exploring the APIs while onboarding:

By enabling debug you will see more details and even captured screenshots on the monitoring dashboard.

The next feature set allows us to supercharge our scrapers with web browsers. Let's take a look.

Using Web Browsers

Scrapfly can scrape using real web browsers, which is enabled through the render_js parameter. When enabled, instead of making a plain HTTP request, Scrapfly will:

- Start a real web browser

- Load the page

- Optionally wait for the page to load through the rendering_wait or wait_for_selector options

- Optionally execute custom Javascript code through js or javascript_scenario

- Return the rendered page content and browser data like captured background requests and storage contents.

This makes Scrapfly scrapers incredibly powerful and customizable! Let's take a look at some examples.

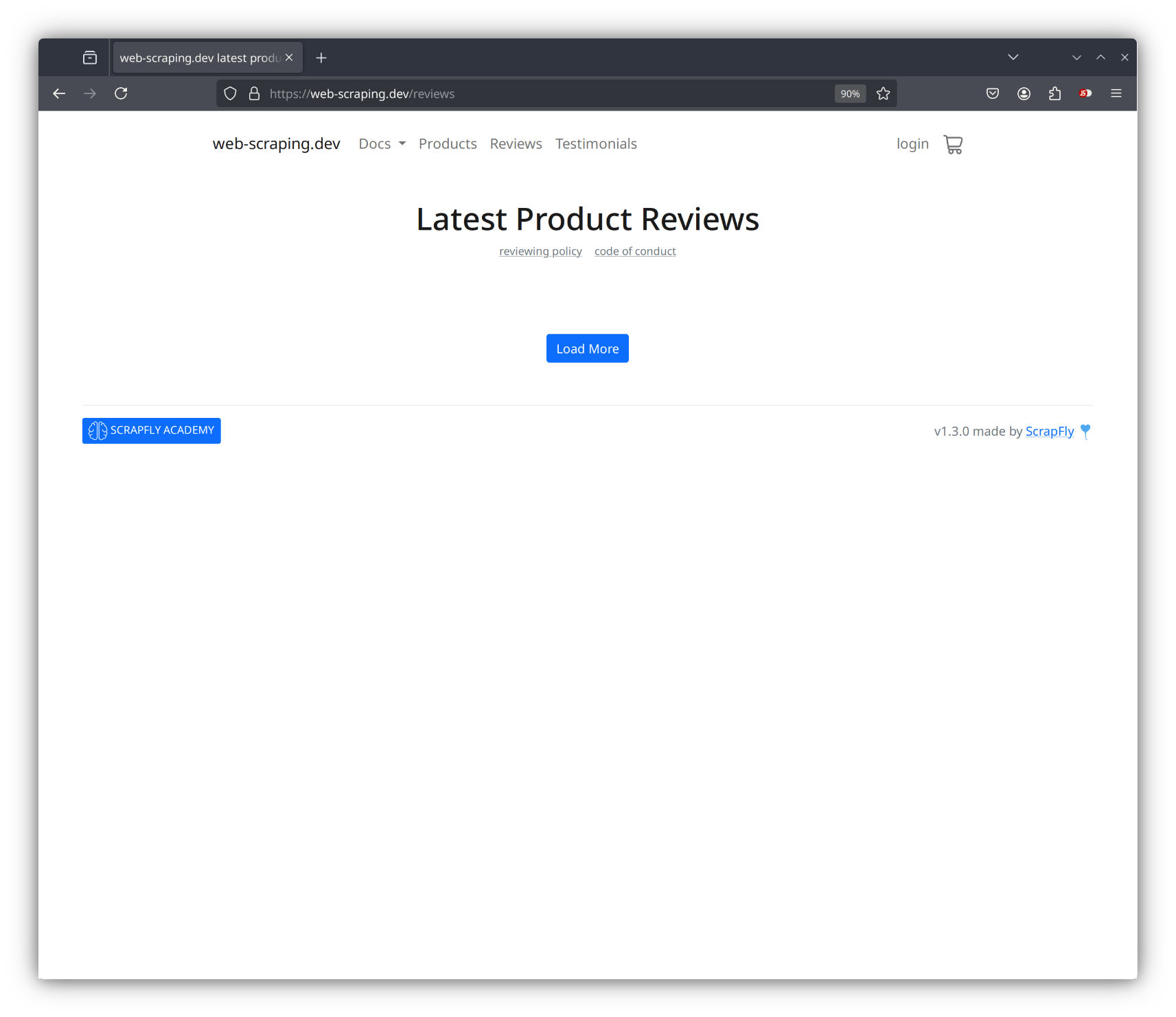

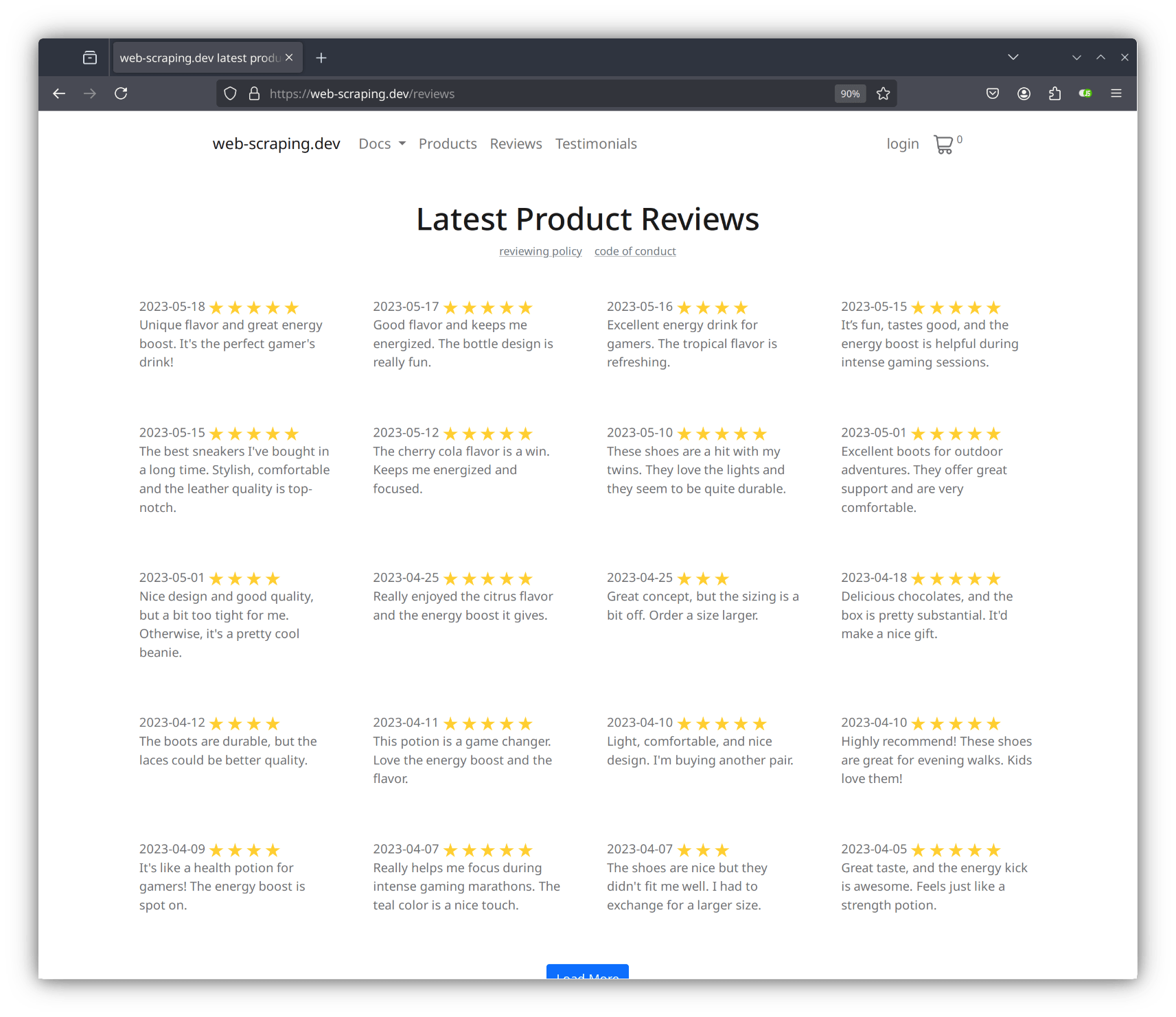

To illustrate this, let's take a look at this example page, web-scraping.dev/reviews, which requires Javascript to load:

To scrape this we can use Scrapfly's web browsers, and we can approach this in two ways:

Rendering Javascript

The first approach is to simply wait for the page to load and scrape the content:

This approach is quite simple as we get exactly what we see in our own web browser, which makes the development process easier.

XHR Capture

The second approach is to capture the background requests that generate this data on load directly:

The advantage of this approach is that we can capture direct JSON data and we don't need to parse anything! Though it is a bit more complex and requires some web development knowledge.
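As an illustration of what working with captured background requests can look like, the sketch below filters a list of captured calls by URL and returns their bodies. The JSON shape (a flat list of objects with url and body fields), the xhrCall type and the findCalls function are simplified, hypothetical stand-ins rather than the actual response format, and the sample data is made up.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// xhrCall is a simplified, hypothetical record of one captured background request.
type xhrCall struct {
	URL  string `json:"url"`
	Body string `json:"body"`
}

// findCalls decodes the captured calls and keeps those whose URL
// contains the given fragment.
func findCalls(raw []byte, fragment string) ([]xhrCall, error) {
	var calls []xhrCall
	if err := json.Unmarshal(raw, &calls); err != nil {
		return nil, err
	}
	var matched []xhrCall
	for _, c := range calls {
		if strings.Contains(c.URL, fragment) {
			matched = append(matched, c)
		}
	}
	return matched, nil
}

func main() {
	// Made-up sample data for illustration only.
	raw := []byte(`[
		{"url": "https://example.com/assets/app.js", "body": ""},
		{"url": "https://example.com/api/reviews", "body": "{\"reviews\": []}"}
	]`)
	calls, err := findCalls(raw, "/api/")
	if err != nil {
		panic(err)
	}
	for _, c := range calls {
		fmt.Println(c.URL, c.Body)
	}
}
```

Because the matched body is already JSON, it can be decoded directly with encoding/json instead of being parsed out of HTML.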

Browser Control

Finally, we can fully control the entire browser. For example, we can use Javascript Scenarios to click buttons, wait for navigation and extract data.

Javascript Execution

For more experienced web developers, full Javascript environment access is available through the js parameter.

For example, let's execute some Javascript parsing code using the querySelector() method:
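When a script is sent as a query parameter rather than through the SDK, it has to be encoded so that quotes and other special characters survive transport. The sketch below shows URL-safe base64 encoding with the standard library. Whether the js parameter expects exactly this encoding is an assumption to verify in the API specification, and encodeJS is a hypothetical helper name.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// encodeJS encodes a script with URL-safe base64 so it can travel in a query string.
// That the js parameter expects this encoding is an assumption.
func encodeJS(script string) string {
	return base64.URLEncoding.EncodeToString([]byte(script))
}

func main() {
	// Hypothetical parsing snippet using querySelector().
	script := `document.querySelector("h3").textContent`
	fmt.Println(encodeJS(script))
}
```

URL-safe base64 replaces the + and / characters of the standard alphabet with - and _, which avoids a second round of percent-escaping.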

With custom request options and cloud browsers you're really in control of every web scraping step! Next, let's see the features that allow us to access any web page without being blocked, using proxies and ASP.

Bypass Blocking

Scraper blocking can be very difficult to understand, so Scrapfly provides one setting that simplifies bypassing it. The Anti Scraping Protection (asp) bypass parameter will automatically configure requests and bypass most anti-scraping protection systems:

While ASP can bypass most anti-scraping protection systems like Cloudflare, Datadome etc., some blocking techniques are based on geographic location or proxy type.

Proxy Country

All Scrapfly requests go through a proxy drawn from a pool of millions of IPs available in 50+ countries. Some websites, however, are only available in specific regions or simply block fewer connections from specific countries.

For that, the country parameter can be used to define which country's proxies are used.

Here we can see what proxy country Scrapfly used when we query Scrapfly's IP analysis API tool.

Proxy Type

Further, Scrapfly offers two types of IPs: datacenter and residential. For targets that are harder to reach, residential proxies can perform much better. By setting the proxy_pool parameter to the residential pool type, we can switch to these stronger proxies:

Concurrency Helper

The Go SDK provides a concurrency helper, ConcurrentScrape, which yields results as they complete.

See this example implementation:

Here we used the helper to scrape multiple pages concurrently. If concurrency is omitted, your account's max concurrency limit will be used.
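For readers curious about the general pattern behind such a helper, here is a minimal sketch of bounded concurrency with results delivered as they complete, written with the standard library only. It is not the SDK's ConcurrentScrape implementation: scrapeAll, result and the fetch callback are hypothetical names, and fetch stands in for a real scrape call.

```go
package main

import (
	"fmt"
	"sync"
)

// result pairs a URL with the outcome of scraping it.
type result struct {
	URL  string
	Body string
	Err  error
}

// scrapeAll runs fetch for every URL with at most limit calls in flight and
// sends each result on the returned channel as soon as it completes.
func scrapeAll(urls []string, limit int, fetch func(string) (string, error)) <-chan result {
	if limit < 1 {
		limit = 1 // this sketch falls back to sequential work
	}
	out := make(chan result)
	sem := make(chan struct{}, limit) // counting semaphore
	var wg sync.WaitGroup
	for _, u := range urls {
		wg.Add(1)
		go func(u string) {
			defer wg.Done()
			sem <- struct{}{}        // acquire a slot
			defer func() { <-sem }() // release it when done
			body, err := fetch(u)
			out <- result{URL: u, Body: body, Err: err}
		}(u)
	}
	// Close the channel once every worker has reported.
	go func() {
		wg.Wait()
		close(out)
	}()
	return out
}

func main() {
	urls := []string{
		"https://web-scraping.dev/product/1",
		"https://web-scraping.dev/product/2",
		"https://web-scraping.dev/product/3",
	}
	// fake replaces a real scrape call so the sketch runs offline.
	fake := func(u string) (string, error) { return "content of " + u, nil }
	for r := range scrapeAll(urls, 2, fake) {
		fmt.Println(r.URL, r.Err == nil)
	}
}
```

Results arrive in completion order, not input order, so each result carries its URL to let the caller match it back to the request.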

This covers the core functionality of Scrapfly's Web Scraping API, though there are many more features available. For more, see the full API specification.

If you're having any issues see the FAQ and Troubleshoot pages.

Extraction API

Now that we know how to scrape data using Scrapfly's Web Scraping API, we can start parsing it for information, and for that Scrapfly's Extraction API is an ideal choice.

The Extraction API offers 3 ways to parse data: LLM prompts, Auto AI and custom extraction rules.

All of these are available through the Extract() method and the ExtractionConfig object of the Go SDK. Let's take a look at some examples.

LLM Prompts

The Extraction API allows prompting any text content with an LLM. The prompts can be used to summarize content, answer questions about the content or generate structured data like JSON or CSV.
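To show roughly what a prompt-based extraction request involves, the sketch below builds (but does not send) an HTTP request that carries the document as the body and the prompt as a query parameter. It uses only the standard library, not the SDK's Extract() method. The newPromptRequest function is a hypothetical name, and the https://api.scrapfly.io/extraction endpoint with its key, content_type and extraction_prompt parameters is an assumption to verify against the API specification.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
	"strings"
)

// newPromptRequest builds a POST request with the document as the body
// and the prompt in the query string. It does not send anything.
// Endpoint and parameter names are assumptions.
func newPromptRequest(apiKey, html, prompt string) (*http.Request, error) {
	q := url.Values{}
	q.Set("key", apiKey)
	q.Set("content_type", "text/html")
	q.Set("extraction_prompt", prompt)
	endpoint := "https://api.scrapfly.io/extraction?" + q.Encode()

	req, err := http.NewRequest(http.MethodPost, endpoint, strings.NewReader(html))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "text/html")
	return req, nil
}

func main() {
	// Made-up document and prompt for illustration.
	req, err := newPromptRequest("YOUR_API_KEY", "<h1>Box of Chocolate</h1>", "What is the product name?")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.Query().Get("extraction_prompt"))
}
```

Passing the request to http.DefaultClient.Do would send it; that step is left out so the sketch runs without an API key.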

Auto Extraction

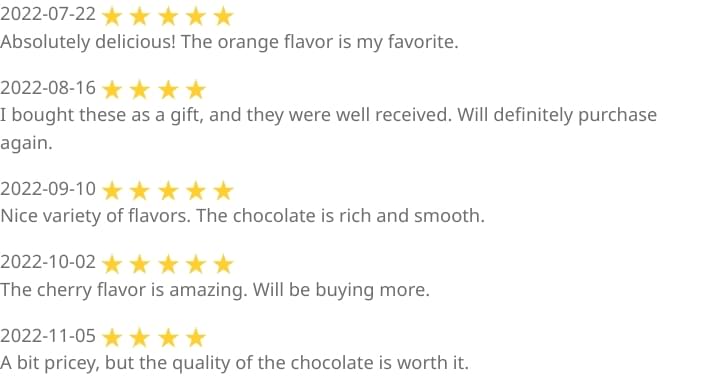

Scrapfly's Extraction API also includes a number of predefined models that can be used to automatically extract common objects like products, reviews, articles etc. without the need to write custom extraction rules.

The predefined models are available through the extraction_model parameter of the ExtractionConfig object. For example, let's use the product model:

Extraction Templates

For more specific data extraction, the Scrapfly Extraction API allows defining custom extraction rules.

This is done through a JSON schema that defines how data is selected through XPath or CSS selectors and how it is processed through predefined processors and formatters.

This is a great tool for developers who are already familiar with data parsing in web scraping. See this example:

With this we can now scrape any page and extract any data we need! To wrap this up, let's take a look at another data capture format next: the Screenshot API.

Screenshot API

While it's possible to capture screenshots using the Web Scraping API, Scrapfly also includes a dedicated Screenshot API that significantly streamlines the screenshot scraping process.

The Screenshot API can be accessed through the SDK's Screenshot() method and configured through the ScreenshotConfig configuration object.

Here's a basic example:

The Screenshot API also inherits many features from the Web Scraping API, like cache and webhook, that are fully functional.

Here, all we did was provide a URL to capture, and the API returned a screenshot.

Resolution

Next, we can heavily customize how the screenshot is captured. For example, we can change the viewport size from the default 1920x1080 to any other resolution like 540x1200 to simulate mobile views:

Further, we can tell Scrapfly to capture the entire page rather than just the viewport.

Full Page

Using the capture parameter we can tell Scrapfly to capture fullpage, which will capture everything that is visible on the page.

Here by setting the capture parameter to fullpage we've captured the entire page.

However, if the page requires scrolling to load more content, we can also capture that using another parameter.

Auto Scroll

Just like with the Web Scraping API we can force automatic scroll on the page to load dynamic elements that load on scrolling. In this example, we're capturing a screenshot of web-scraping.dev/testimonials which loads new testimonial entries when the user scrolls the page:

Here, auto scroll went to the very bottom of the page and loaded all of the testimonials before the screenshot was captured.

Next, we can capture only specific areas of the page. Let's take a look at how.

Capture Areas

To capture specific areas we can use CSS selectors to define what to capture.

For this, the capture parameter is used with the selector for an element to capture.

For example, we can capture only the reviews section of the web-scraping.dev/product/1 page:

Here using a CSS selector we can restrict our capturing only to areas that are relevant to us.

Capture Options

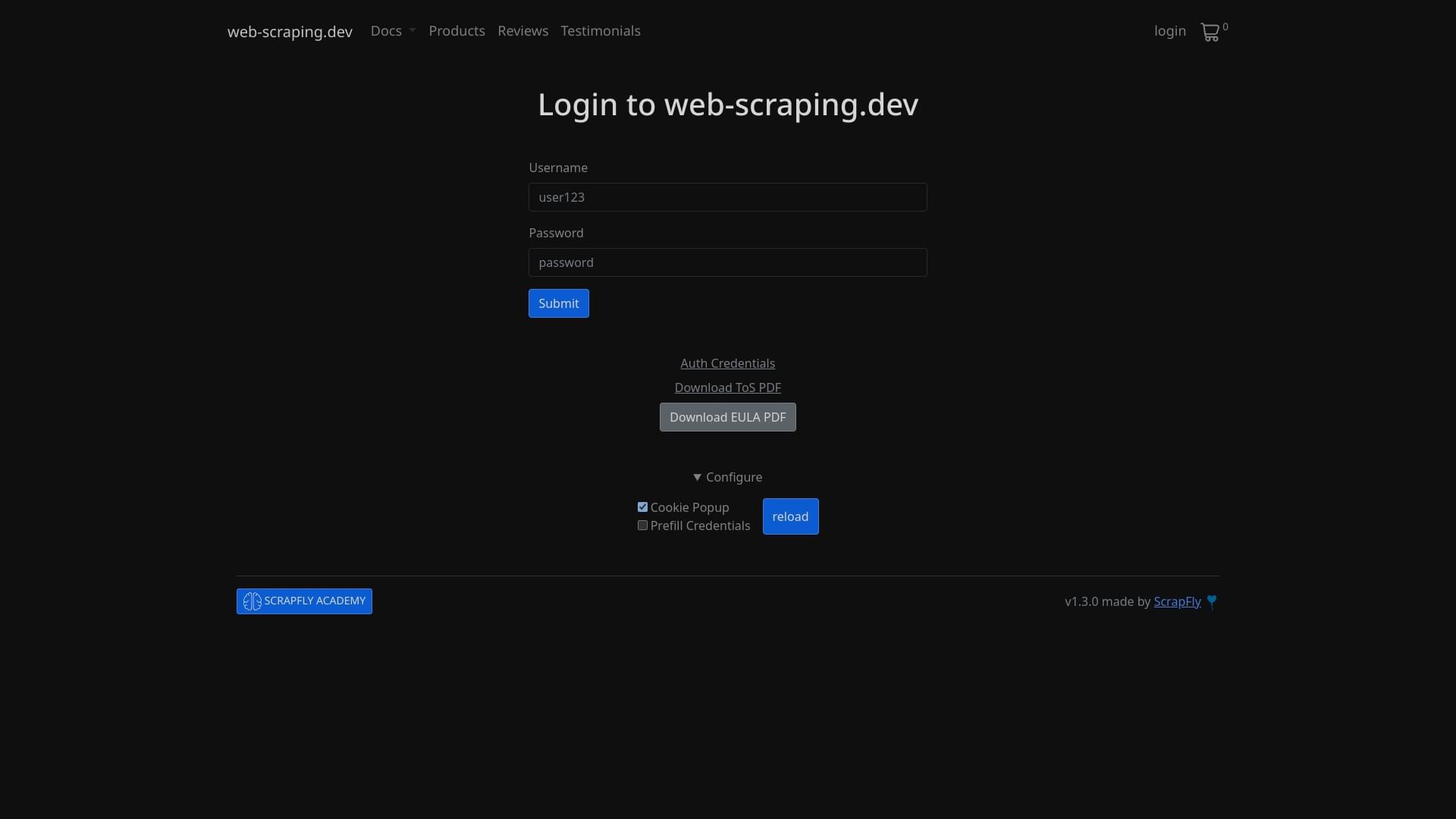

Capture options can apply various page modifications to capture the page in a specific way.

For example, using the block_banners option we can block cookie banners, and using the dark_mode option we can apply a custom dark theme to the scraped page.

In this example we capture the web-scraping.dev/login?cookies page and disable the cookie pop-up while also applying a dark theme.
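To tie the screenshot settings from this section together, here is a minimal sketch that assembles a screenshot request URL with the standard library. It does not use the SDK's Screenshot() method or ScreenshotConfig: screenshotParams and buildScreenshotURL are hypothetical names, and the https://api.scrapfly.io/screenshot endpoint, the resolution and options query parameter names, and the comma-separated options format are assumptions to verify against the API specification.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// screenshotParams is a hypothetical helper type, not the SDK's ScreenshotConfig.
type screenshotParams struct {
	URL        string   // page to capture
	Resolution string   // viewport size such as "540x1200"; empty keeps the default
	Capture    string   // "fullpage" or a CSS selector; empty captures the viewport
	Options    []string // capture options such as "block_banners" or "dark_mode"
}

// buildScreenshotURL encodes the parameters into a request URL.
// Endpoint and parameter names are assumptions.
func buildScreenshotURL(apiKey string, p screenshotParams) string {
	q := url.Values{}
	q.Set("key", apiKey)
	q.Set("url", p.URL)
	if p.Resolution != "" {
		q.Set("resolution", p.Resolution)
	}
	if p.Capture != "" {
		q.Set("capture", p.Capture)
	}
	if len(p.Options) > 0 {
		q.Set("options", strings.Join(p.Options, ","))
	}
	return "https://api.scrapfly.io/screenshot?" + q.Encode()
}

func main() {
	fmt.Println(buildScreenshotURL("YOUR_API_KEY", screenshotParams{
		URL:     "https://web-scraping.dev/login?cookies",
		Capture: "fullpage",
		Options: []string{"block_banners", "dark_mode"},
	}))
}
```

The response to such a request would be image bytes, which could be written to disk with os.WriteFile.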

What's next?

This concludes our onboarding tutorial, though Scrapfly has many more features and options available. To learn more, explore the getting started pages and the API specification of each API, as each of these features is available in all Scrapfly SDKs and packages!

For more on web scraping techniques and educational material, see the Scrapfly Web Scraping Academy.

For full documentation of the Go SDK, see the Go SDK documentation.