In today's data-driven world, the ability to efficiently parse data is essential for developers, data scientists, and businesses alike. Whether you're extracting information from web pages, processing JSON files, or analyzing natural language, understanding what parsing is and how to implement it can significantly enhance your data handling capabilities.

In this article, we'll delve into different aspects of data parsing and demonstrate practical examples using Python.

Key Takeaways

Master data parsing fundamentals across formats like JSON, XML, HTML, and PDFs using Python parsers and modern AI techniques for efficient data extraction.

- Use specialized parsers like BeautifulSoup for HTML and lxml for XML to handle structured document formats

- Parse JSON with native Python support for lightweight and fast data processing workflows

- Extract data from PDFs using libraries like PyPDF2 and pdfplumber for text and table extraction

- Implement AI-powered parsing with language models for unstructured data and natural language processing

- Handle different data formats with appropriate parsing strategies for optimal performance and accuracy

- Apply parsing techniques in web scraping for automated data collection and processing pipelines

What is Data Parsing?

Data parsing is the process of analyzing a string of symbols, either in natural language or in computer languages, and converting it into a structured format that a program can easily manipulate. Essentially, parsing transforms raw data into a more accessible and usable form, enabling efficient data processing and analysis.
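As a minimal sketch of this idea, the snippet below turns one raw log line into a dictionary using a regular expression with named groups. The log format, `LOG_PATTERN`, and `parse_log_line` are hypothetical names chosen for illustration and are not part of any library.

```python
import re

# Hypothetical log format used only for illustration: "<date> <LEVEL> <message>"
LOG_PATTERN = re.compile(
    r"(?P<date>\d{4}-\d{2}-\d{2}) (?P<level>[A-Z]+) (?P<message>.+)"
)


def parse_log_line(line: str) -> dict:
    """Turn one raw log line into a dictionary of named fields."""
    match = LOG_PATTERN.fullmatch(line.strip())
    if match is None:
        raise ValueError(f"unrecognized line: {line!r}")
    return match.groupdict()


record = parse_log_line("2024-05-01 ERROR disk quota exceeded")
print(record)
# {'date': '2024-05-01', 'level': 'ERROR', 'message': 'disk quota exceeded'}
```

The raw string carries the same information as the dictionary, but only the dictionary lets a program ask for `record["level"]` directly.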

Parsing is used in numerous applications, including:

- Web scraping: Extracting data from websites.

- Data interchange: Converting data between different formats like JSON and XML.

- Natural Language Processing (NLP): Understanding and interpreting human language.

- File conversion: Transforming data from one file type to another, such as PDFs to text.

Understanding what parsing is equips you with the tools to handle diverse data sources and formats effectively.
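The data interchange use case from the list above can be sketched with the standard library alone. The `json_to_xml` helper and the `record` root tag are illustrative assumptions, and the sketch only handles flat JSON objects whose keys are valid XML tag names.

```python
import json
import xml.etree.ElementTree as ET


def json_to_xml(json_text: str, root_tag: str = "record") -> str:
    """Convert a flat JSON object into a simple XML document."""
    data = json.loads(json_text)
    root = ET.Element(root_tag)
    for key, value in data.items():
        # Each JSON key becomes a child element holding the value as text
        child = ET.SubElement(root, key)
        child.text = str(value)
    return ET.tostring(root, encoding="unicode")


xml_text = json_to_xml('{"name": "Ziad", "city": "New York"}')
print(xml_text)
# <record><name>Ziad</name><city>New York</city></record>
```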

Types of Data Parsing

Data comes in various formats, each requiring specific parsing techniques. Below, we explore the most common types of data parsing:

XML and HTML Parsing

HTML and XML are hierarchical data formats that represent data as a tree structure. Parsing these formats involves traversing the tree to extract the desired information. Understanding how to use an HTML parser in Python is crucial for developers working with web data.

HTML Parsing: HTML documents are parsed to extract elements using CSS selectors or XPath expressions. For instance, libraries like BeautifulSoup serve as a robust Python parser for HTML, facilitating easy navigation and searching within the parse tree.

XML Parsing: Similar to HTML, XML documents are parsed using tools like lxml in Python. Parsing XML allows for the extraction of data from structured documents, making it essential for applications like configuration file management and data interchange.
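To illustrate the configuration-file use case, here is a small sketch that reads settings out of an XML document. It uses the standard library's `xml.etree.ElementTree` rather than lxml so that it runs without extra dependencies (lxml exposes a largely compatible interface). The `<config>` document and its element names are made up for the example.

```python
import xml.etree.ElementTree as ET

# Made-up configuration document used only for this example
xml_text = """
<config>
  <database host="localhost" port="5432"/>
  <feature name="cache" enabled="true"/>
  <feature name="debug" enabled="false"/>
</config>
""".strip()

root = ET.fromstring(xml_text)

# Attributes are read with .get(); child elements are located with find/findall
database = root.find("database")
settings = {
    "host": database.get("host"),
    "port": int(database.get("port")),
    "features": {
        feature.get("name"): feature.get("enabled") == "true"
        for feature in root.findall("feature")
    },
}
print(settings)
# {'host': 'localhost', 'port': 5432, 'features': {'cache': True, 'debug': False}}
```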

For more detailed guides, refer to our articles on parsing HTML with CSS, parsing HTML with XPath, and how to parse XML.

Parsing HTML with Python's BeautifulSoup:

html = """

<div class="product">

<h2>Product Title</h2>

<div class="price">

<span class="discount">12.99</span>

<span class="full">19.99</span>

</div>

</div>

"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, "html.parser")  # name the parser explicitly to avoid a warning

product = {

    "title": soup.find(class_="product").find("h2").text,

    "full_price": soup.find(class_="product").find(class_="full").text,

    "price": soup.select_one(".price .discount").text,

}

print(product)

{

"title": "Product Title",

"full_price": "19.99",

"price": "12.99",

}

This example illustrates how easily we can parse web pages for product data using a few key features of beautifulsoup4: find() for tag and class lookups and select_one() for CSS selectors.

JSON Parsing

JSON (JavaScript Object Notation) is a lightweight data-interchange format that's easy for humans to read and write and easy for machines to parse and generate.

Parsing JSON in Python can be done using the built-in json module or more advanced tools like jmespath and jsonpath for complex queries.

- Basic JSON Parsing: The standard way to parse JSON in Python is with the json module. It allows you to easily convert JSON strings into Python dictionaries or lists, making it simple to access or manipulate the data.

- Advanced JSON Parsing: When working with deeply nested or complex JSON objects, tools like jmespath and jsonpath come in handy. They provide powerful query languages to extract data based on patterns, without needing to manually traverse the JSON structure.

Here are some examples of how JSON parsing looks in Python:

Parsing JSON in Python:

# basic example how to load json data and navigate it

import json

json_data = '{"name": "Ziad", "age": 23, "city": "New York"}'

parsed_data = json.loads(json_data)

print(parsed_data['name']) # Output: Ziad

Parsing JSON with JMESPath:

import jmespath

data = {

"people": [

{"name": "Ziad", "age": 23},

{"name": "Mazen", "age": 30}

]

}

result = jmespath.search('people[?age > `25`].name', data)

print(result) # Output: ['Mazen']

Using a Python JSON parser like the built-in json module provides a straightforward way to handle simple JSON data. For more complex parsing needs, combining it with jmespath or jsonpath allows for sophisticated data querying and extraction.
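For comparison, the same "people older than 25" query can be written with only the json module by traversing the structure manually. This is a sketch, not a replacement for a query language: `load_json` is a hypothetical helper added here to show how malformed input can be handled.

```python
import json

raw = '{"people": [{"name": "Ziad", "age": 23}, {"name": "Mazen", "age": 30}]}'


def load_json(text):
    """Return the parsed JSON value, or None when the text is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError as exc:
        print(f"invalid JSON at line {exc.lineno}, column {exc.colno}")
        return None


data = load_json(raw)

# Manual traversal: .get() with defaults keeps missing keys from raising KeyError
names_over_25 = [
    person["name"]
    for person in data.get("people", [])
    if person.get("age", 0) > 25
]
print(names_over_25)  # ['Mazen']

# Truncated input is reported instead of crashing the program
broken = load_json('{"name": "Ziad",')
```

Manual traversal stays readable for shallow data; the deeper the nesting, the more a query language pays off.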

Text Parsing

Text parsing involves analyzing natural language text to extract meaningful information. Techniques in natural language processing (NLP) and the use of large language models (LLMs) play a crucial role in understanding and interpreting text data.

Simple Text Parsing with Python:

text = "Contact us at support@scrapfly.com or visit our office."

emails = [word for word in text.split() if "@" in word]

print(emails) # Output: ['support@scrapfly.com']
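The whitespace split above keeps any punctuation attached to a word, so an address followed by a comma or period would come back with that character included. A regular expression is one way around this. The pattern below is a deliberately simple assumption and does not cover every valid email address.

```python
import re

# Simplified pattern: local part, "@", then a dotted domain name
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

text = "Contact support@scrapfly.com, or write to sales@example.org."
emails = EMAIL_PATTERN.findall(text)
print(emails)  # ['support@scrapfly.com', 'sales@example.org']
```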

For basic text parsing, you can use simple string manipulation in Python. More advanced parsing techniques, such as natural language parsing, can be implemented using NLP libraries like NLTK and spaCy. For example, NLTK can easily tokenize text into words and analyze word frequency:

import nltk

from nltk.tokenize import word_tokenize

from nltk.probability import FreqDist

# Download necessary NLTK data (newer NLTK releases may require 'punkt_tab' instead)

nltk.download('punkt')

# Sample paragraph

text = """

Natural language processing is necessary for understanding web scraped content.

It can be used to evaluate web sentiment, extract web entities, and summarize web data.

"""

# Tokenize words

tokens = word_tokenize(text)

# Create frequency distribution of tokens

fdist = FreqDist(tokens)

# Print the 5 most common tokens

print("Most common tokens:")

for word, count in fdist.most_common(5):

    print(f"{word}: {count}")

Natural language parsing is essential for making sense of textual data, though lately it is increasingly being replaced by more advanced AI models like LLMs.
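The tokenize-and-count step from the NLTK example can also be approximated with the standard library when installing NLTK is not an option. This is a rough sketch: the regular expression is a naive tokenizer assumed for illustration and is far less capable than NLTK's `word_tokenize`.

```python
import re
from collections import Counter

text = """
Natural language processing is necessary for understanding web scraped content.
It can be used to evaluate web sentiment, extract web entities, and summarize web data.
"""

# Naive tokenizer: lowercase the text and keep runs of letters and apostrophes
tokens = re.findall(r"[a-z']+", text.lower())

# Counter plays the role of NLTK's FreqDist
counts = Counter(tokens)
print(counts.most_common(1))  # [('web', 4)]
```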

PDF Parsing

PDF parsing involves extracting text, images, and other data from PDF files. Python PDF parsers like PyPDF2 and pdfminer enable developers to programmatically access and manipulate PDF content.

Extracting Text from PDF with PyPDF2:

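import PyPDF2

with open('sample.pdf', 'rb') as file:

    # PdfReader replaces PdfFileReader, which was removed in PyPDF2 3.0

    reader = PyPDF2.PdfReader(file)

    # pages are exposed through the list-like .pages attribute

    page = reader.pages[0]

    text = page.extract_text()

    print(text)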

After exploring the various types of data parsing, we'll delve into common data objects that developers frequently parse.

Parsing Data Objects

When working with data parsing, various data objects are commonly parsed to extract relevant information. Below are some typical examples, complete with code snippets in Python.

Address Parsing

Address parsing involves breaking down a full address into its constituent parts, such as street, city, state, and zip code. This is particularly useful for applications in logistics, e-commerce, and customer relationship management.

Address parsing example with Python and regular expressions:

import re

address = "123 Main St, Springfield, IL 62704"

pattern = r'(\d+) (\w+) St, (\w+), (\w{2}) (\d{5})'

match = re.match(pattern, address)

if match:

    street_num, street_name, city, state, zip_code = match.groups()

    print(f"Street Number: {street_num}")

    print(f"Street Name: {street_name}")

    print(f"City: {city}")

    print(f"State: {state}")

    print(f"ZIP Code: {zip_code}")
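The pattern above only matches single-word street names ending in "St" and single-word cities. A slightly more tolerant sketch with named groups is shown below. The list of street types and the `parse_address` helper are assumptions made for illustration, and real-world addresses vary far more than any single regular expression can cover.

```python
import re

# Verbose mode lets the pattern be split across lines and commented
ADDRESS_PATTERN = re.compile(
    r"""
    (?P<street_num>\d+)\s+                   # house number
    (?P<street_name>.+?)\s+                  # one or more words
    (?P<street_type>St|Ave|Blvd|Rd|Dr)\.?,\s*  # small, assumed set of street types
    (?P<city>[A-Za-z .'-]+),\s*              # city names may contain spaces
    (?P<state>[A-Z]{2})\s+                   # two-letter state code
    (?P<zip_code>\d{5}(?:-\d{4})?)           # ZIP or ZIP+4
    """,
    re.VERBOSE,
)


def parse_address(address: str):
    """Return the address parts as a dict, or None if the format is not recognized."""
    match = ADDRESS_PATTERN.fullmatch(address.strip())
    return match.groupdict() if match else None


print(parse_address("123 Main St, Springfield, IL 62704"))
print(parse_address("456 Oak Hill Ave, San Jose, CA 95112-0042"))
```

Named groups make the result self-describing, so callers do not depend on the order of the capture groups.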

Email Parsing

Email parsing extracts components from an email address, such as the username and domain. This can be useful for validating email formats or categorizing users.

A Python parser can be used to extract the necessary components from an email string, ensuring proper validation and categorization.

Components of an Email Address

- Username: The part before the "@" symbol, which typically represents the user's identifier.

- Domain: The part after the "@", usually representing the mail server (e.g., gmail.com).

Simple Email Parsing with Python:

email = "user@gmail.com"

username, domain = email.split("@")

print("Username:", username) # Output: Username: user

print("Domain:", domain) # Output: Domain: gmail.com
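The one-line split works for clean input but raises an unpacking error when the string has no "@" or more than one. The sketch below adds basic validation and also accepts the "Name <address>" form by using `email.utils.parseaddr` from the standard library. The `split_email` helper and its validation rules are illustrative assumptions, not a full RFC-compliant validator.

```python
from email.utils import parseaddr


def split_email(raw: str) -> dict:
    """Split an email string into display name, username, and domain."""
    display_name, address = parseaddr(raw)
    # rpartition splits on the last "@", so the domain is always the final part
    username, separator, domain = address.rpartition("@")
    if not separator or not username or "." not in domain:
        raise ValueError(f"not a usable email address: {raw!r}")
    return {
        "display_name": display_name,
        "username": username,
        "domain": domain.lower(),  # domains are case-insensitive
    }


parsed = split_email("Jane Doe <jane.doe@Example.com>")
print(parsed)
# {'display_name': 'Jane Doe', 'username': 'jane.doe', 'domain': 'example.com'}
```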

You can learn more about scraping emails using Python in our dedicated article.

After understanding how to parse basic data objects like addresses and emails, we can extend these techniques to handle more complex data extraction tasks from the web.

Web Data Parsing

Web data parsing refers to extracting and processing data from web pages. This is a fundamental aspect of web scraping, allowing developers to gather information from various online sources. Here, we'll introduce two common methods and promote our Extraction API for streamlined data extraction.

Microformats

Microformats are a way of embedding structured data within HTML, making it easier to parse and extract information. They use standardized class names and attributes to represent data, facilitating consistent parsing across different web pages.

Most structured data types are defined on schema.org and can be easily extracted from any page using parsing libraries like extruct:

import requests

import extruct

response = requests.get("https://web-scraping.dev/product/1")

# find all 4 types of microdata:

data = extruct.extract(

response.text,

syntaxes=['json-ld', 'microdata', 'rdfa', 'opengraph']

)

print(data['json-ld'])
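To show roughly what happens for the JSON-LD syntax, here is a standard-library sketch that collects every `<script type="application/ld+json">` block from a page and decodes it. It is not how extruct is implemented; the `JsonLdParser` class and the sample HTML (including the product name and price) are made up for illustration.

```python
import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collect the decoded contents of JSON-LD script tags."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self.items.append(json.loads("".join(self._buffer)))
            self._in_json_ld = False


# Made-up page used only for this example
sample_html = """
<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product",
 "name": "Example Product", "offers": {"@type": "Offer", "price": "9.99"}}
</script>
</head><body><h1>Example Product</h1></body></html>
"""

parser = JsonLdParser()
parser.feed(sample_html)
product = parser.items[0]
print(product["name"], product["offers"]["price"])  # Example Product 9.99
```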

You can learn more about scraping microformats in our dedicated article.

Manual Parsing

Manual parsing involves writing custom code to navigate and extract specific elements from HTML using CSS selectors or XPath expressions. This method provides flexibility but can be time-consuming, especially for complex or inconsistent web structures.

The average CSS selector in web scraping often looks something like this:

Parsing HTML with CSS Selectors in Python:

from bs4 import BeautifulSoup

html = """

<head>

<title class="page-title">Hello World!</title>

</head>

<body>

<div id="content">

<h1>Title</h1>

<p>first paragraph</p>

<p>second paragraph</p>

<h2>Subtitle</h2>

<p>first paragraph of subtitle</p>

</div>

</body>

"""

soup = BeautifulSoup(html, 'lxml')

soup.select_one('title').text

"Hello World!"

# we can also search by attribute values such as class names

soup.select_one('.page-title').text

"Hello World!"

# We can also find _all_ matching values:

for paragraph in soup.select('#content p'):

    print(paragraph.text)

"first paragraph"

"second paragraph"

"first paragraph of subtitle"

# We can also combine CSS selectors with find functions:

import re

# select node with id=content and then find all paragraphs with text "first" that are under it:

soup.select_one('#content').find_all('p', string=re.compile('first'))

["<p>first paragraph</p>", "<p>first paragraph of subtitle</p>"]
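Since XPath is mentioned as the alternative to CSS selectors, here is a comparable sketch using path expressions. It relies on the standard library's `xml.etree.ElementTree`, which supports only a limited XPath subset and requires well-formed markup, so the sample page is wrapped in an `<html>` root here. For real-world HTML, a library with full XPath support such as lxml is the more usual choice.

```python
import xml.etree.ElementTree as ET

# Well-formed version of the sample page; ElementTree cannot parse broken HTML
page = """
<html>
  <head><title class="page-title">Hello World!</title></head>
  <body>
    <div id="content">
      <h1>Title</h1>
      <p>first paragraph</p>
      <p>second paragraph</p>
      <h2>Subtitle</h2>
      <p>first paragraph of subtitle</p>
    </div>
  </body>
</html>
""".strip()

root = ET.fromstring(page)

# .// searches all descendants, like a descendant combinator in CSS
title = root.findtext(".//title")

# [@attr='value'] filters by attribute, like .page-title or #content in CSS
by_class = root.find(".//*[@class='page-title']").text
paragraphs = [p.text for p in root.findall(".//div[@id='content']/p")]

# [1] selects the first matching child (XPath positions start at 1)
first_paragraph = root.find(".//div[@id='content']/p[1]").text

print(title)       # Hello World!
print(paragraphs)  # ['first paragraph', 'second paragraph', 'first paragraph of subtitle']
```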

This manual approach sets the stage for more advanced techniques, such as leveraging AI-powered tools for automatic data extraction.

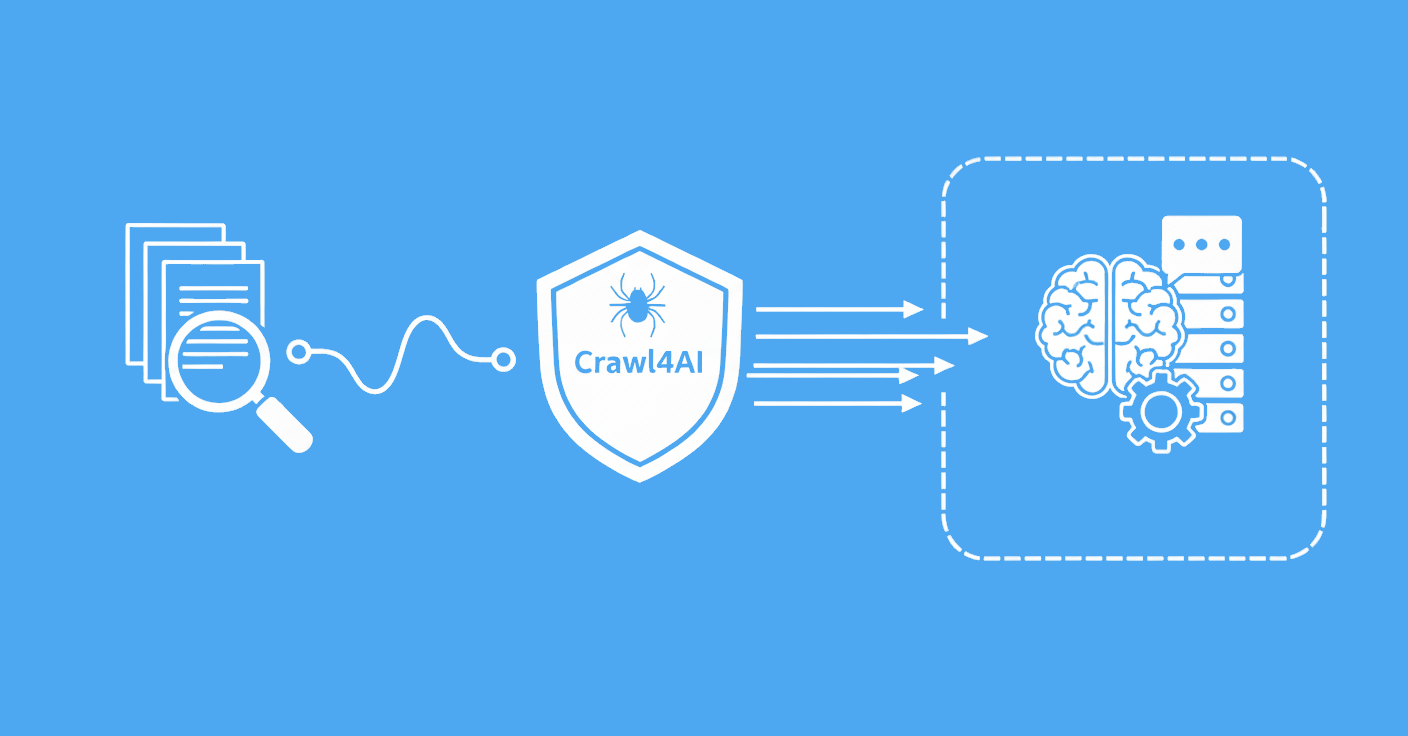

AI Parsing with Scrapfly

Our advanced extraction API simplifies the data parsing process by utilizing machine learning models to automatically extract common data objects. This eliminates the need for manual selector definitions and adapts to varying web structures seamlessly.

Scrapfly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - extract web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- LLM prompts - extract data or ask questions using LLMs

- Extraction models - automatically find objects like products, articles, jobs, and more.

- Extraction templates - extract data using your own specification.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

For more, explore the web scraping API and its documentation.

Scrapfly's Extraction API includes a number of predefined models that can automatically extract common objects like products, reviews, articles etc.

For example, let's use the product model:

from scrapfly import ScrapflyClient, ScrapeConfig, ExtractionConfig

client = ScrapflyClient(key="YOUR SCRAPFLY KEY")

# First retrieve your html or scrape it using web scraping API

html = client.scrape(ScrapeConfig(url="https://web-scraping.dev/product/1")).content

# Then, extract data using extraction_model parameter:

api_result = client.extract(

ExtractionConfig(

body=html,

content_type="text/html",

extraction_model="product",

)

)

print(api_result.result)

Auto Extraction is powerful but can be limited in unique, niche scenarios where manual extraction may be a better fit.

LLM Parsing with Scrapfly

Sometimes, AI auto extraction may not suffice for complex data analysis. This is where LLM parsing with Scrapfly comes into play.

The Extraction API lets you query any text content with LLM prompts through Scrapfly's LLM engine, which is optimized for document parsing.

The prompts can be used to summarize content, answer questions about the content, or generate structured data like JSON or CSV. As an example, see this freeform prompt used with the Python SDK:

from scrapfly import ScrapflyClient, ScrapeConfig, ExtractionConfig

client = ScrapflyClient(key="YOUR SCRAPFLY KEY")

# First retrieve your html or scrape it using web scraping API

html = client.scrape(ScrapeConfig(url="https://web-scraping.dev/product/1")).content

# Then, extract data using extraction_prompt parameter:

api_result = client.extract(ExtractionConfig(

body=html,

content_type="text/html",

extraction_prompt="extract main product price only",

))

print(api_result.result)

{

"content_type": "text/html",

"data": "9.99",

}

LLMs are great for freeform or creative questions, but for extracting known data types like products and reviews there's a better option: AI Auto Extraction.

You can learn more about how to power up LLMs with web scraping in our dedicated article.

FAQ

Before we conclude, let's address some frequently asked questions about data parsing:

What is resume parsing?

Resume parsing is the process of extracting relevant information from resumes, such as contact details, work experience, and education, into a structured format. This automation helps recruiters manage large volumes of applications efficiently. Resumes often come in various document formats like PDF, DOCX, or HTML, which makes Python parsers a good fit for handling all of them.
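As a rough sketch of the contact-details step, the snippet below pulls a name, email, and phone number out of resume text that has already been converted to plain text. The sample resume, the patterns, and the rule that the first non-empty line is the candidate's name are all simplifying assumptions; production resume parsers are considerably more involved.

```python
import re

# Made-up resume text used only for this example
resume_text = """
Jane Doe
Email: jane.doe@example.com | Phone: +1 (555) 010-1234
Experience
Data Engineer, Example Corp (2019-2023)
"""

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d ().-]{7,}\d")


def parse_resume(text: str) -> dict:
    """Extract basic contact details from plain-text resume content."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    email = EMAIL_RE.search(text)
    phone = PHONE_RE.search(text)
    return {
        # Assumption: the first non-empty line holds the candidate's name
        "name": lines[0] if lines else None,
        "email": email.group() if email else None,
        "phone": phone.group() if phone else None,
    }


contact = parse_resume(resume_text)
print(contact)
# {'name': 'Jane Doe', 'email': 'jane.doe@example.com', 'phone': '+1 (555) 010-1234'}
```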

How does a Python parser work?

A Python parser analyzes input data (like JSON, XML, or HTML) and converts it into a structured format that Python programs can manipulate. Libraries such as json, BeautifulSoup, and lxml are commonly used for parsing different data types.

What is the difference between JSONPath and JMESPath?

Both JSONPath and JMESPath are query languages for JSON data, allowing users to extract specific elements. While JSONPath is more akin to XPath for XML, JMESPath offers a more expressive and feature-rich syntax, making it suitable for complex queries.

Summary

Parsing is a fundamental aspect of data processing, transforming raw data into structured and actionable information. From XML and HTML parsing to AI-powered extraction and LLM insights, the methods and tools available cater to diverse data types and use cases. By mastering data parsing, you can efficiently handle and analyze data, driving informed decisions and enhancing your applications' functionality.

Whether you're leveraging traditional parsing techniques with Python or embracing advanced AI and LLM solutions with Scrapfly, understanding what parsing is empowers you to navigate the complexities of data with confidence and precision.