Web Scraping With Go

Learn web scraping with Golang, from native HTTP requests and HTML parsing to a step-by-step guide to using Colly, the Go web crawling package.

Every modern web browser comes with a special suite of tools for web developers called the Developer Tools (or devtools for short).

This suite contains many powerful tools that help web scraper developers debug their scrapers and understand how target websites work.

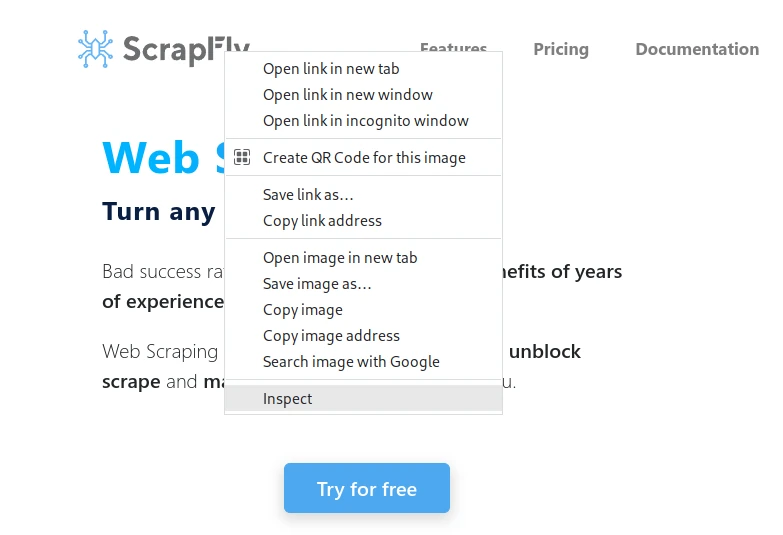

Devtools can be launched on any website by pressing the F12 key or by right-clicking anywhere on the page and selecting the "Inspect" option.

To start, the "Elements" tab allows for inspecting the final HTML structure of the page, that is, the document as it stands after the browser has loaded it and run its scripts. This can be used to create CSS and XPath selectors for scraping.

The "Network" tab (aka the Network Inspector) can be used to inspect the network traffic of the page: every request the browser sends and every response it receives. This can be used to understand how the website works and to discover its backend and hidden APIs.
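Once a backend endpoint has been spotted in the Network tab, a scraper can often call it directly instead of parsing HTML. The following is a minimal sketch in Go using only the standard library. The `/api/items` path, the `Item` fields, and the request headers are illustrative assumptions, and a local `httptest` server stands in for the real website so the example runs without network access.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// Item mirrors the JSON shape of the hypothetical hidden API response.
type Item struct {
	ID   int    `json:"id"`
	Name string `json:"name"`
}

// newDemoServer starts a local stand-in for a website's backend API.
// The /api/items path and its payload are made up for this sketch.
func newDemoServer() *httptest.Server {
	mux := http.NewServeMux()
	mux.HandleFunc("/api/items", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `[{"id":1,"name":"first"},{"id":2,"name":"second"}]`)
	})
	return httptest.NewServer(mux)
}

// fetchItems requests a JSON endpoint and decodes the response body.
func fetchItems(url string) ([]Item, error) {
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return nil, err
	}
	// Copy the headers observed on the request in the Network tab.
	// These two are assumed examples, not requirements of any real site.
	req.Header.Set("Accept", "application/json")
	req.Header.Set("X-Requested-With", "XMLHttpRequest")

	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status: %s", resp.Status)
	}

	var items []Item
	if err := json.NewDecoder(resp.Body).Decode(&items); err != nil {
		return nil, err
	}
	return items, nil
}

func main() {
	srv := newDemoServer()
	defer srv.Close()

	items, err := fetchItems(srv.URL + "/api/items")
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	for _, it := range items {
		fmt.Printf("%d: %s\n", it.ID, it.Name)
	}
}
```

Against a real target, `newDemoServer` would be dropped and `fetchItems` would receive the endpoint URL copied from the Network tab.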

One of the most popular features of the Network tab is the ability to export requests as cURL commands (right-click a request -> "Copy as cURL"). These commands can then be converted to scraping code using the cURL to Python tool.
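The same translation can be done by hand in any language. As a rough sketch, here is how an exported cURL command might map to Go's standard `net/http` package. The command shown in the comment, including the `example.com` URL and every header value, is a hypothetical export rather than one taken from a real site.

```go
package main

import (
	"fmt"
	"net/http"
)

// buildRequest mirrors this hypothetical "Copy as cURL" export:
//
//	curl 'https://example.com/api/search?q=shoes&page=2' \
//	  -H 'Accept: application/json' \
//	  -H 'User-Agent: Mozilla/5.0' \
//	  -H 'Referer: https://example.com/search'
func buildRequest() (*http.Request, error) {
	// curl defaults to GET when no -X flag or request body is given.
	req, err := http.NewRequest(http.MethodGet, "https://example.com/api/search?q=shoes&page=2", nil)
	if err != nil {
		return nil, err
	}
	// Each -H flag becomes one header on the request.
	req.Header.Set("Accept", "application/json")
	req.Header.Set("User-Agent", "Mozilla/5.0")
	req.Header.Set("Referer", "https://example.com/search")
	return req, nil
}

func main() {
	req, err := buildRequest()
	if err != nil {
		fmt.Println("could not build request:", err)
		return
	}
	// Print the request instead of sending it, so the sketch needs no network.
	fmt.Println(req.Method, req.URL.String())
	for name, values := range req.Header {
		fmt.Printf("%s: %s\n", name, values[0])
	}
	// To actually send it: resp, err := http.DefaultClient.Do(req)
}
```

Keeping request construction in its own function makes it easy to compare the Go request with the original cURL command before any traffic is sent.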

This knowledgebase is provided by Scrapfly, a web scraping API that allows you to scrape any website without getting blocked and implements dozens of other web scraping conveniences.