Web scraping services have changed how businesses and developers access web data. Whether you're building a price comparison tool, gathering market intelligence, or creating datasets for machine learning, understanding the landscape of web scraping services is crucial for making informed decisions about your data extraction strategy.

In this tutorial, we'll explore the different types of web scraping services available, examine their use cases and pricing models, and share best practices for choosing and implementing the right solution for your needs. We'll also discuss technical considerations, legal aspects, and how to scale your scraping operations effectively.

Key Takeaways

This guide covers how to select a web scraping service: evaluating platforms, comparing pricing models, and planning an implementation.

- Choose managed APIs like Scrapfly and ScrapingBee for reliable, scalable web scraping without infrastructure management

- Consider self-hosted solutions when you need full control and customization

- Compare pricing models, including pay-per-request and subscription plans, against your expected volume

- Plan for anti-detection measures and proxy management in large-scale scraping operations

- Use a full-featured service like Scrapfly to handle proxies, JavaScript rendering, and anti-bot protection in one place

- Account for compliance and legal considerations to keep your scraping ethical

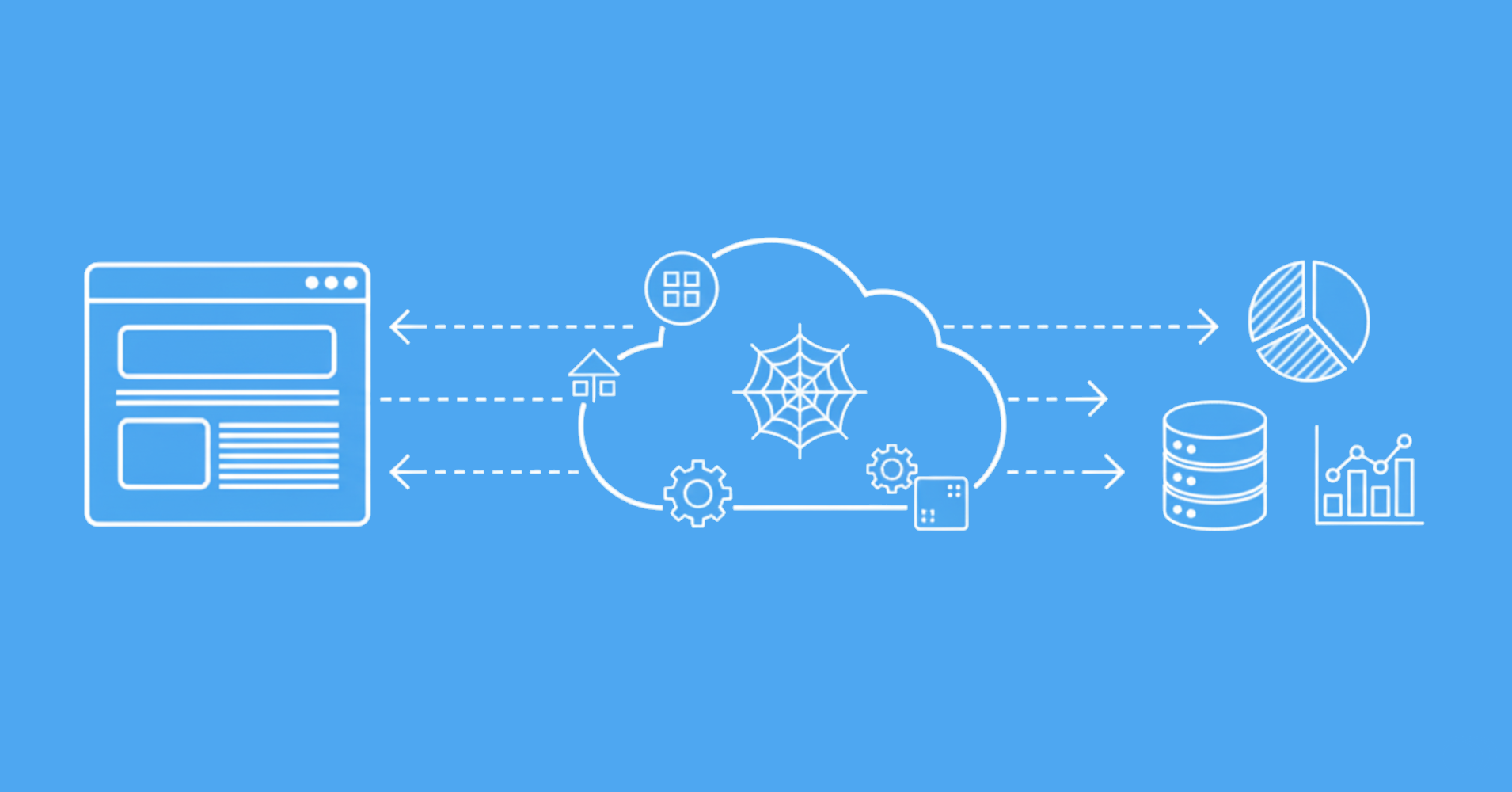

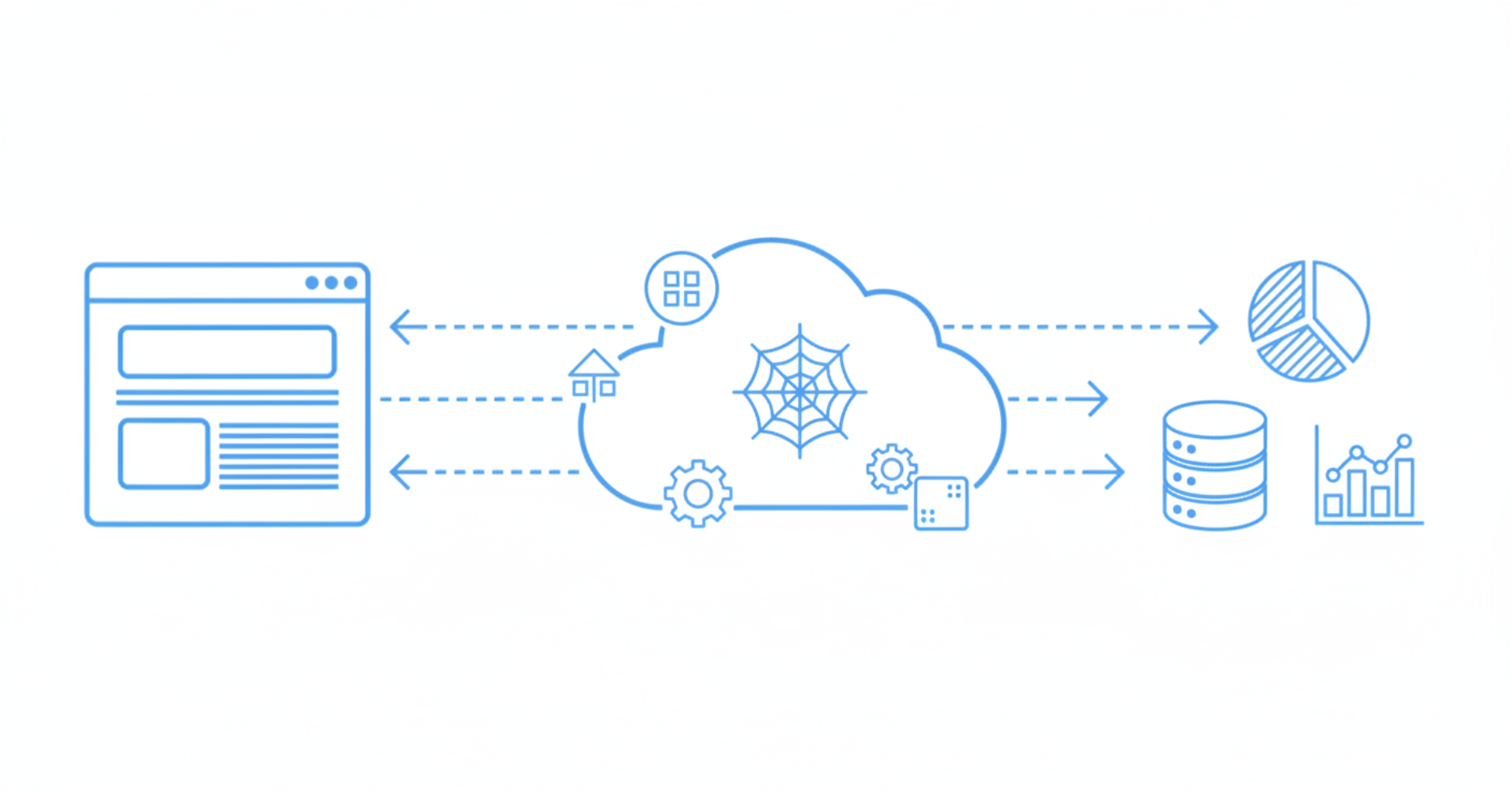

What are Web Scraping Services?

Web scraping services are platforms or APIs that provide infrastructure and tools for extracting data from websites at scale. Unlike building scrapers from scratch, these services handle the complex aspects of web scraping including proxy management, anti-bot detection, request handling, and data parsing.

These services typically offer:

- Managed Infrastructure - Servers, proxies, and scaling handled automatically

- Anti-Detection Measures - Built-in protection against blocking and rate limiting

- Data Processing - Built-in parsing, cleaning, and export capabilities

- Monitoring & Analytics - Request tracking, success rates, and performance metrics

- Compliance Tools - Robots.txt respect, rate limiting, and legal compliance features
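As a rough illustration of the monitoring point in the list above, the sketch below tallies response status codes and computes a success rate. The `ScrapeStats` class is a hypothetical helper written for this example, not part of any service's SDK.

```python
from collections import Counter


class ScrapeStats:
    """Hypothetical helper that tallies HTTP status codes for scrape requests."""

    def __init__(self):
        self.status_counts = Counter()

    def record(self, status_code):
        self.status_counts[status_code] += 1

    def success_rate(self):
        """Share of recorded requests that returned a 2xx status."""
        total = sum(self.status_counts.values())
        if total == 0:
            return 0.0
        succeeded = sum(
            count for code, count in self.status_counts.items() if 200 <= code < 300
        )
        return succeeded / total


stats = ScrapeStats()
for code in (200, 200, 200, 403):
    stats.record(code)

print(f"Success rate: {stats.success_rate():.0%}")
```

A falling success rate is often the first sign that a target site has started blocking requests.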

Choosing the right web scraping service can save you time, reduce costs, and help you avoid technical headaches. Now that you understand what these services offer, let's dive into the main types of web scraping solutions available and how they compare.

Types of Web Scraping Services

Web scraping services come in several forms, each suited to different needs and levels of technical expertise. The sections below break down the main types.

Managed Web Scraping APIs

Managed APIs are the most popular choice for businesses that need reliable, scalable web scraping without managing infrastructure. These services handle all the technical complexity while providing simple REST APIs.

Popular Managed Web Scraping APIs:

- Scrapfly: A powerful web scraping API offering advanced JavaScript rendering, anti-bot protection, and reliable proxy management.

- ScrapingBee: User-friendly API with built-in proxy rotation and anti-detection features, ideal for quick and reliable data extraction.

- Apify: A flexible platform for building, running, and scaling custom web scrapers and automation workflows.

- Bright Data: An enterprise-grade data collection platform with extensive proxy networks and advanced scraping capabilities.

These services simplify web data extraction by handling the technical challenges for you, making them a great choice for businesses and developers who want reliable results without managing infrastructure.

| Advantages | Disadvantages |
|---|---|
| No infrastructure management required | Higher cost per request |
| Built-in anti-detection measures | Less customization control |
| Automatic scaling and reliability | Vendor lock-in potential |
| Professional support and documentation | |

Managed web scraping APIs let you extract data quickly without handling proxies or anti-bot measures, making them a fast and reliable choice for most projects.
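To make the "simple REST API" idea concrete, here is a minimal sketch of how a request to a managed scraping API is typically assembled. The endpoint `https://api.scraper.example/scrape` and the parameter names `key`, `url`, `render_js`, and `country` are hypothetical placeholders, not any specific provider's API; check your provider's documentation for the real ones.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical endpoint, used only for illustration.
API_ENDPOINT = "https://api.scraper.example/scrape"


def build_scrape_request(api_key, target_url, render_js=False, country=None):
    """Return the full GET URL for a hypothetical managed scraping API."""
    params = {"key": api_key, "url": target_url}
    if render_js:
        params["render_js"] = "true"
    if country:
        params["country"] = country
    # urlencode percent-encodes the target URL so it travels as one parameter
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_scrape_request(
    "your-api-key", "https://example.com/products?page=2", country="US"
)
print(request_url)

# Round-trip check: the target URL's own query string survives the encoding.
decoded = parse_qs(urlsplit(request_url).query)
print(decoded["url"][0])
```

The important detail is encoding the target URL; without it, the target's own query parameters would be mixed up with the API's parameters.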

Self-Hosted Scraping Platforms

Self-hosted scraping platforms are ideal for organizations that need maximum control, custom compliance, or want to avoid ongoing per-request costs. With these solutions, you deploy and manage the infrastructure yourself, which gives you flexibility but also more responsibility.

Popular Self-Hosted Scraping Platforms:

- Scrapy with Scrapyd: Open-source Python scraping framework that you can deploy on your own servers to run and manage spiders at scale. (Scrapy Cloud is the hosted version of the same framework.)

- Portia: Visual web scraper builder for creating scrapers without coding.

- Nutch: Open-source web crawler built on Apache Hadoop, suitable for large-scale crawling.

These platforms are best for teams with technical expertise who want to customize every aspect of their scraping workflow, integrate with internal systems, or meet strict compliance requirements.

| Advantages | Disadvantages |
|---|---|
| Complete control over infrastructure | Requires technical expertise |
| No per-request costs | Infrastructure management overhead |
| Custom compliance and security measures | Higher upfront costs |
| Integration with existing systems | More maintenance responsibility |

Before choosing a web scraping service, it's important to understand your project's requirements, technical skills, and budget. Each type of service offers a different balance of convenience, control, and cost.

Browser Automation Services

Browser automation services are designed for scraping or testing websites that rely heavily on JavaScript, dynamic content, or complex user interactions. These services use real browsers in the cloud to render pages and execute scripts, making them ideal for modern web apps.

Popular Browser Automation Services:

- BrowserStack: Cross-browser testing and automation with real devices and browsers.

- LambdaTest: Cloud-based browser automation and testing platform.

- Sauce Labs: Enterprise-grade browser testing and automation.

These services are perfect for scraping JavaScript-heavy sites, running end-to-end tests, or automating complex workflows that require real browser behavior.

| Advantages | Disadvantages |
|---|---|
| Handles complex JavaScript applications | Higher resource usage |
| Real browser rendering | Slower performance |
| Cross-browser compatibility | More expensive than HTTP-based tools |
| Advanced automation capabilities | |

Browser automation services are best when you need to scrape dynamic or interactive sites, but they can be more expensive and slower than basic scrapers.

Key Features to Consider

When evaluating web scraping services, focus on key features like proxy management, JavaScript rendering, rate limiting, and data extraction capabilities to ensure reliable and efficient data collection.

Proxy Management

Quality proxy management is crucial for avoiding IP blocks and maintaining high success rates.

    # Example: Using proxies with Scrapfly
    from scrapfly import ScrapflyClient, ScrapeConfig

    client = ScrapflyClient(key='your-api-key')

    # Route the request through a proxy in a specific country
    result = client.scrape(ScrapeConfig(
        url="https://example.com",
        country="US"  # Automatically selects a US proxy
    ))

    # Use residential proxies for sensitive targets
    result = client.scrape(ScrapeConfig(
        url="https://sensitive-site.com",
        country="US",
        proxy_pool="public_residential_pool"  # Higher success rate, higher cost
    ))

For more advanced proxy techniques, check out our guides on Advanced Proxy Connection Optimization and Best Proxy Providers for Web Scraping.
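If you manage your own proxy list instead of relying on a managed service, the core of proxy management is rotation plus basic health tracking. The sketch below shows one simple way to do it, assuming a round-robin strategy; `ProxyRotator` and the proxy addresses are hypothetical names chosen for this example.

```python
from itertools import cycle


class ProxyRotator:
    """Round-robin proxy rotation that skips proxies with repeated failures."""

    def __init__(self, proxies, max_failures=3):
        self._failures = {proxy: 0 for proxy in proxies}
        self._max_failures = max_failures
        self._cycle = cycle(list(self._failures))

    def next_proxy(self):
        # Look at each proxy at most once per call before giving up
        for _ in range(len(self._failures)):
            proxy = next(self._cycle)
            if self._failures[proxy] < self._max_failures:
                return proxy
        raise RuntimeError("No healthy proxies left")

    def report_failure(self, proxy):
        self._failures[proxy] += 1

    def report_success(self, proxy):
        self._failures[proxy] = 0


# Placeholder proxy addresses for illustration only.
rotator = ProxyRotator(
    ["http://proxy-a.example:8000", "http://proxy-b.example:8000"],
    max_failures=2,
)
first = rotator.next_proxy()
rotator.report_failure(first)
rotator.report_failure(first)  # proxy-a is now considered unhealthy
print(rotator.next_proxy())
```

Managed services do this for you at a much larger scale, which is a large part of what the per-request price pays for.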

Pick a web scraping service that matches your project's needs, considering site complexity, data volume, and budget.

JavaScript Rendering

Modern websites rely heavily on JavaScript, so the ability to render and execute JavaScript is a must-have for any serious web scraping project. Without JavaScript rendering, your scraper may miss out on important data that only appears after scripts run in the browser.

    # Example: JavaScript rendering with Scrapfly
    # (reuses `client` and `ScrapeConfig` from the proxy example above)
    result = client.scrape(ScrapeConfig(
        url="https://dynamic-website.com",
        render_js=True,  # Enables JavaScript execution
        wait_for_selector=".content-loaded"  # Wait for a specific element
    ))

JavaScript rendering lets scrapers access content on dynamic sites that load data with scripts. Choose a service with this feature to ensure you can extract all needed information. For more on scraping dynamic websites, see Web Scraping Dynamic Web Pages with Selenium.
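Because rendered requests tend to be slower and more expensive than plain HTTP requests, it can be worth checking whether a page needs rendering at all. The sketch below is one rough heuristic, assuming you know a CSS class that marks the content you want: if that class is missing from the static HTML, the content is probably injected by JavaScript. `needs_js_rendering` and `ClassFinder` are hypothetical helpers.

```python
from html.parser import HTMLParser


class ClassFinder(HTMLParser):
    """Records whether any element in the document carries a given CSS class."""

    def __init__(self, class_name):
        super().__init__()
        self.class_name = class_name
        self.found = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.class_name in classes:
            self.found = True


def needs_js_rendering(static_html, expected_class):
    """Return True when the expected element is absent from the static HTML."""
    finder = ClassFinder(expected_class)
    finder.feed(static_html)
    return not finder.found


spa_shell = '<div id="app"></div><script src="/bundle.js"></script>'
server_rendered = '<div class="product content-loaded">Widget</div>'

print(needs_js_rendering(spa_shell, "content-loaded"))
print(needs_js_rendering(server_rendered, "content-loaded"))
```

This is only a first-pass check; a page can contain the element in its static HTML and still fill in its data later with scripts.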

Rate Limiting and Delays

Proper rate limiting prevents overwhelming target servers and reduces detection risk.

    # Example: Implementing rate limiting
    # (reuses `client` and `ScrapeConfig` from the proxy example above)
    import random
    import time

    def scrape_with_delays(urls):
        for url in urls:
            # Random delay of 1-3 seconds before each request
            time.sleep(random.uniform(1, 3))
            result = client.scrape(ScrapeConfig(
                url=url,
                country="US"
            ))
            # Process result...

Adding delays and rate limits helps avoid detection and keeps your scraping respectful to target sites.
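Fixed delays suit steady crawling, but when a site starts returning errors it helps to slow down progressively. Here is a minimal sketch of retrying with exponential backoff and jitter. `fetch_with_backoff` and its `fetch` argument are hypothetical names, and the `sleep` parameter exists only so the waiting can be swapped out when testing.

```python
import random
import time


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying failed attempts with exponentially growing delays."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except Exception:  # a real scraper should catch narrower error types
            if attempt == max_attempts - 1:
                raise
            # 1x, 2x, 4x... the base delay, plus jitter so retries don't align
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)


# Simulated fetcher that fails twice before succeeding.
attempts = {"count": 0}


def flaky_fetch(url):
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary failure")
    return f"fetched {url}"


recorded_delays = []
result = fetch_with_backoff(
    flaky_fetch, "https://example.com", sleep=recorded_delays.append
)
print(result)
print(recorded_delays)
```

The jitter term matters when many workers retry at once: without it, they would all hit the target again at the same moment.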

Use Cases and Applications

Web scraping services are used across a wide range of industries and applications. From tracking prices and monitoring inventory to gathering market intelligence and powering data-driven research, these services enable businesses to access and analyze web data efficiently. Below are some of the most common use cases where web scraping services provide significant value.

E-commerce and Price Monitoring

E-commerce businesses use web scraping services to monitor competitor prices, track inventory changes, and gather product information.

    # Example: Price monitoring scraper
    def monitor_prices(product_urls):
        prices = {}
        for url in product_urls:
            result = client.scrape(ScrapeConfig(
                url=url,
                render_js=True,
                country="US"
            ))
            # Extract the price text using a CSS selector
            price_text = result.selector.css('.price::text').get()
            if price_text:
                # Strip the currency symbol and thousands separators, e.g. "$1,299.00"
                prices[url] = float(price_text.replace('$', '').replace(',', '').strip())
        return prices

Web scraping services help organizations automate data collection tasks that would otherwise be tedious and time-consuming. By using these tools, businesses can stay competitive, make informed decisions, and discover new opportunities across various domains. For more on price tracking, see How to Build a Price Tracker.
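Price text on real pages is rarely as clean as a bare number, so the parsing step usually deserves its own function. The sketch below is one way to do it, assuming US-style formatting (comma as thousands separator, dot as decimal point); `parse_price` is a hypothetical helper, and `Decimal` is used to avoid floating-point rounding on currency values.

```python
import re
from decimal import Decimal

# First run of digits, with optional thousands separators and decimals
PRICE_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(text):
    """Return the first price found in text as a Decimal, or None if absent."""
    if not text:
        return None
    match = PRICE_PATTERN.search(text)
    if match is None:
        return None
    return Decimal(match.group().replace(",", ""))


for raw in (" $1,299.99 ", "USD 45", "Out of stock", None):
    print(repr(raw), "->", parse_price(raw))
```

Sites that use other conventions, such as `1.299,99`, would need a locale-aware variant of this pattern.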

Market Research and Intelligence

Businesses gather market intelligence by monitoring industry trends, competitor activities, and market sentiment.

    # Example: Market research scraper
    def gather_market_intelligence(competitor_urls):
        market_data = []
        for url in competitor_urls:
            result = client.scrape(ScrapeConfig(
                url=url,
                render_js=True,
                country="US"
            ))
            # Extract relevant market information
            company_info = {
                'name': result.selector.css('.company-name::text').get(),
                'products': result.selector.css('.product::text').getall(),
                'pricing': result.selector.css('.pricing-info::text').get()
            }
            market_data.append(company_info)
        return market_data

These examples illustrate just a few of the many ways web scraping services can be applied across industries.
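Monitoring competitors usually means comparing today's scrape with an earlier one. As a small sketch of that step, the hypothetical `diff_snapshot` function below reports which fields changed between two records shaped like the `company_info` dictionary above; the sample values are invented.

```python
def diff_snapshot(previous, current):
    """Return {field: (old_value, new_value)} for every field that changed."""
    changes = {}
    for field in previous.keys() | current.keys():
        old_value, new_value = previous.get(field), current.get(field)
        if old_value != new_value:
            changes[field] = (old_value, new_value)
    return changes


# Invented sample data for illustration.
last_week = {"name": "Acme", "products": ["A", "B"], "pricing": "$10/mo"}
today = {"name": "Acme", "products": ["A", "B", "C"], "pricing": "$12/mo"}

changes = diff_snapshot(last_week, today)
for field, (old_value, new_value) in sorted(changes.items()):
    print(f"{field}: {old_value!r} -> {new_value!r}")
```

Storing each snapshot with a timestamp lets you build a history of pricing and product changes over time.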

Lead Generation

Sales and marketing teams use web scraping to identify potential customers and gather contact information.

    # Example: Lead generation scraper
    def generate_leads(directory_urls):
        leads = []
        for url in directory_urls:
            result = client.scrape(ScrapeConfig(
                url=url,
                render_js=True,
                country="US"
            ))
            # Extract lead information from each listing
            lead_elements = result.selector.css('.business-listing')
            for element in lead_elements:
                lead = {
                    'name': element.css('.business-name::text').get(),
                    'email': element.css('.email::text').get(),
                    'phone': element.css('.phone::text').get(),
                    'website': element.css('.website::attr(href)').get()
                }
                leads.append(lead)
        return leads

Web scraping services are used in a wide range of industries and use cases, from market research and lead generation to content aggregation and price monitoring. By automating data collection from public websites, businesses can gain valuable insights, simplify workflows, and stay ahead of the competition.
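Scraped records usually need cleaning before they are useful: directories repeat listings, and fields are often empty or malformed. The sketch below normalizes and de-duplicates lead records by email address. `clean_leads` is a hypothetical helper, the sample records are invented, and the email pattern is a deliberately loose syntax check, not full validation.

```python
import re

# Loose syntactic check: something@something.something, no spaces
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def clean_leads(raw_leads):
    """Normalize emails, drop invalid ones, and keep the first lead per email."""
    seen = set()
    cleaned = []
    for lead in raw_leads:
        email = (lead.get("email") or "").strip().lower()
        if email.startswith("mailto:"):
            email = email[len("mailto:"):]
        if not EMAIL_PATTERN.match(email) or email in seen:
            continue
        seen.add(email)
        cleaned.append({**lead, "email": email})
    return cleaned


# Invented sample records for illustration.
raw_leads = [
    {"name": "Acme Ltd", "email": " Info@Example.com "},
    {"name": "Acme (duplicate)", "email": "info@example.com"},
    {"name": "No Email Inc", "email": None},
    {"name": "Broken Co", "email": "not-an-email"},
]

cleaned_leads = clean_leads(raw_leads)
print(cleaned_leads)
```

Contact details are personal data in many jurisdictions, so the cleaning step is also a natural place to drop fields you have no legal basis to keep.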

Pricing Models and Cost Considerations

Pay-per-Request Model

Most managed web scraping services operate on a pay-per-request pricing model, where you are charged based on the number of scraping requests you make. Pricing typically varies with the type of request (basic, premium, or residential proxy usage), with higher costs for more advanced features like residential proxies or JavaScript rendering.

This model is ideal for projects with variable or unpredictable scraping volumes, as you only pay for what you use and can easily scale up or down as needed.
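To see how request types affect a bill, here is a small cost estimate under assumed numbers. The credit multipliers and the price per credit below are hypothetical, chosen only to illustrate the arithmetic; real providers publish their own rates.

```python
from decimal import Decimal

# Hypothetical credit multipliers; real providers publish their own tables.
CREDITS_PER_REQUEST = {"basic": 1, "js_rendering": 5, "residential": 25}


def estimate_monthly_cost(request_counts, price_per_credit):
    """Total cost for a month, given request counts per request type."""
    total_credits = sum(
        CREDITS_PER_REQUEST[kind] * count for kind, count in request_counts.items()
    )
    return total_credits * price_per_credit


planned_requests = {"basic": 100_000, "js_rendering": 20_000, "residential": 2_000}
monthly_cost = estimate_monthly_cost(planned_requests, Decimal("0.0002"))
print(f"Estimated monthly cost: ${monthly_cost:.2f}")
```

In this made-up scenario the 2,000 residential requests cost half as much as the 100,000 basic ones, which is why it pays to reserve premium features for the targets that need them.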

Subscription Models

Alternatively, some providers offer subscription-based plans that include a set number of requests per month for a fixed fee, with higher tiers offering more requests and additional features. Subscription models are best suited for businesses with consistent, high-volume scraping needs, as they can provide cost savings and predictable budgeting.

Choosing between these models depends on your usage patterns: pay-per-request is flexible for occasional or small-scale projects, while subscriptions are more economical for ongoing, large-scale data collection.
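The comparison can be made concrete with a break-even calculation. The sketch below uses hypothetical prices (a per-request rate, a monthly fee, an included quota, and an overage rate) to show at what volume a subscription becomes the cheaper option; substitute real quotes from the providers you are comparing.

```python
import math
from decimal import Decimal


def pay_per_request_cost(requests, price_per_request):
    return requests * price_per_request


def subscription_cost(requests, monthly_fee, included_requests, overage_price):
    """Fixed fee plus a per-request charge for anything beyond the quota."""
    extra_requests = max(0, requests - included_requests)
    return monthly_fee + extra_requests * overage_price


def break_even_requests(monthly_fee, price_per_request):
    """Volume at which pay-per-request spending reaches the subscription fee.

    Only meaningful while the result stays within the plan's included quota.
    """
    return math.ceil(monthly_fee / price_per_request)


# Hypothetical prices for illustration only.
PRICE_PER_REQUEST = Decimal("0.001")
MONTHLY_FEE = Decimal("49")
INCLUDED_REQUESTS = 100_000
OVERAGE_PRICE = Decimal("0.0008")

threshold = break_even_requests(MONTHLY_FEE, PRICE_PER_REQUEST)
for volume in (20_000, 80_000):
    on_demand = pay_per_request_cost(volume, PRICE_PER_REQUEST)
    subscribed = subscription_cost(
        volume, MONTHLY_FEE, INCLUDED_REQUESTS, OVERAGE_PRICE
    )
    cheaper = "pay-per-request" if on_demand < subscribed else "subscription"
    print(f"{volume} requests/month: {cheaper} is cheaper")
print(f"Break-even at about {threshold} requests/month")
```

If your monthly volume swings above and below the break-even point, compare the two models over a full year of expected usage instead of a single month.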

Power Up with Scrapfly

When collecting web data at scale, you'll often run into anti-bot measures, rate limiting, and dynamic content, all of which make traditional web scraping challenging.

Scrapfly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

FAQ

Web scraping services can be complex, so here are answers to common questions developers and businesses ask.

What's the difference between managed services and building scrapers yourself?

Managed services handle infrastructure, proxy management, anti-detection measures, and scaling automatically. Building scrapers yourself gives you complete control but requires significant technical expertise and ongoing maintenance. Managed services are ideal for businesses that need reliable data extraction without the overhead of infrastructure management.

Can web scraping services handle JavaScript-heavy websites?

Yes, most modern web scraping services offer JavaScript rendering capabilities. Services like Scrapfly, ScrapingBee, and Apify can execute JavaScript and wait for dynamic content to load, making them suitable for modern web applications and single-page applications.

How do I ensure compliance with website terms of service?

Always read and respect robots.txt files, implement reasonable rate limits, and review website terms of service. Many services provide built-in compliance features like automatic robots.txt checking and configurable rate limiting. When in doubt, consider reaching out to website owners for permission.
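If your service does not check robots.txt for you, Python's standard library can. The sketch below parses an inline robots.txt so that it runs without network access; the rules and the `my-scraper` user agent are made up for illustration. In a real scraper you would typically point the parser at the live file with `set_url()` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Example rules, inlined so the sketch runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "my-scraper"  # hypothetical user agent name
for url in ("https://example.com/products", "https://example.com/private/data"):
    verdict = "allowed" if parser.can_fetch(USER_AGENT, url) else "disallowed"
    print(f"{url}: {verdict}")

# Crawl-delay, when present, is a sensible lower bound for your request delay
print("Crawl delay:", parser.crawl_delay(USER_AGENT))
```

Note that robots.txt expresses the site owner's crawling preferences; it does not replace reading the site's terms of service.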

Conclusion

Web scraping services have democratized access to web data, making it possible for businesses of all sizes to gather valuable intelligence without the technical complexity of building and maintaining scraping infrastructure. By understanding the different types of services available, their pricing models, and implementation best practices, you can make informed decisions about your data extraction strategy.

Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect. Here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose entire public datasets, which can be illegal in some countries.

Ultimately, successful web scraping goes beyond selecting the right service: it also means establishing sustainable, compliant data collection processes.