Ever notice how websites fight back when you try to collect data, throwing up CAPTCHAs, empty pages, or blocking your scraper after a few requests? It’s not just you. Modern sites are built to challenge bots, making list crawling (like grabbing product listings or job boards) both fascinating and surprisingly tough.

But that list-formatted data (catalogs, directories, search results) is pure gold for analysis, market research, and automation. This guide shows you how to break through anti-bot shields, assess what’s possible up front, and reliably extract valuable lists with battle-tested techniques.

In this guide, we'll dive deep into the world of list crawling: how to identify which sites are crawlable, and how to choose and implement the right extraction techniques. You'll see practical, production-ready Python examples for crawling lists from real-world sites.

What is List Crawling?

List crawling is the automated extraction of structured data from web pages that present information in list formats such as product catalogs, job boards, tables, or search result pages. For example, it can gather dozens of product listings from a paginated e-commerce catalog, pull job postings from multi-page boards, or extract entries from a directory table, all in a systematic way.

Unlike general web scraping, which may target any type of content or crawl complex site hierarchies, list crawling specifically focuses on repeated structures and patterns found in list-based layouts.

Examples of list crawling use cases:

- Extracting all product names and prices from every page of an online store's catalog.

- Collecting job titles and company names from a multi-page tech jobs site.

- Gathering hotel details from a travel site's search results, such as scraping Booking.com for accommodation listings.

List crawling makes it possible to turn long, paginated, or structured lists into ready-to-use data with speed and consistency.

What Types of Websites Are Best Suited for List Crawling?

Before attempting to crawl a website, it's essential to determine if the site is well-suited for automated list extraction. Certain website structures make list crawling straightforward and robust, while others may present unpredictable challenges due to inconsistent layouts or heavy use of JavaScript. Below are the most common types of sites where list crawling is especially effective, along with examples and key characteristics.

E-commerce & Product Catalogs

E-commerce sites are ideal for list crawling because they have uniform product listings and predictable pagination, making bulk data extraction straightforward and efficient.

Key Characteristics: Highly uniform product details, logical and predictable pagination such as page numbers or "next" buttons, and clearly structured HTML for items, prices, and attributes.

Example Sites: Leading online marketplaces and shop platforms like Amazon, eBay, and Shopify storefronts, all of which use standard layouts for product lists.

Typical Data Extracted: Product names, prices, images, specifications, user reviews, stock status, category tags, and promotional info.

Why List Crawling Excels Here: The consistency in HTML structures across listings and pages means you can build a single extraction pattern that works for entire catalogs, ensuring excellent data accuracy and scale.

If a site presents products through repeated, clearly defined HTML sections with obvious next-page navigation, it's a perfect match for fast, robust list crawling tools.

Business Directories & Service Listings

Business directories and service listing sites are especially well-suited for list crawling thanks to their standardized company information, consistent entry templates, and predictable layouts.

Key Characteristics: Highly uniform listing formats, standardized company details (name, address, contact info), and structured HTML segments for each business.

Example Sites: Yelp, Yellow Pages, industry-specific directories, and professional service platforms all present businesses in repeated, easily identified blocks.

Typical Data Extracted: Company names, addresses, contact numbers, business hours, service categories, locations, and ratings.

Why List Crawling Excels Here: These sites use consistent entry templates across pages, allowing you to apply a single extraction pattern to capture business data at scale and with minimal errors.

If you see clearly separated directory entries with repeated HTML structure and simple pagination, you’ve found an ideal candidate for robust, automated extraction.

Job Boards & Career Sites

Job boards and career sites are another top choice for list crawling due to their use of standardized job posting formats and structured information fields.

Key Characteristics: Uniform job post layouts, searchable structured fields (title, company, location), and paginated or infinite-scroll listings.

Example Sites: Indeed, LinkedIn Jobs, Glassdoor, and dedicated employer career sites, each presenting jobs in recurring, clearly labeled sections.

Typical Data Extracted: Job titles, company names, locations, salaries, job requirements, post dates, and application links.

Why List Crawling Excels Here: With predictable, structured job entries on each page, a single crawler logic can collect hundreds or thousands of postings with high consistency.

If job sites present lists of postings with repeated layout patterns and obvious navigation, they’re a strong fit for scalable list crawling initiatives.

Review & Content Platforms

Websites featuring user-generated reviews or aggregated content (like news or comments) offer another prime environment for list crawling, thanks to their repetitive data schemas and structured layout.

Key Characteristics: Consistent formats for reviews or articles, standardized rating/timestamp systems, and uniform containers for each entry.

Example Sites: Trustpilot, Google Reviews, news aggregators, and any review-centric or ratings-based platform.

Typical Data Extracted: Star/score ratings, timestamps, review text, reviewer profiles, product/service referenced.

Why List Crawling Excels Here: Reviews and content items are presented in repeated components, making it easy for an extraction script to systematically gather large sets of feedback and opinions.

If review or content sites maintain a strict card or block layout for each entry, list crawling is fast and reliable.

Social & Professional Data

Social media platforms and professional networks are increasingly useful targets for list crawling, as they offer rich, repeatable data structures for posts, profiles, or repositories.

Key Characteristics: Standardized post/profile layouts, consistent metadata like timestamps or engagement metrics, and list or feed-based navigation.

Example Sites: GitHub repositories, Twitter/X feeds, LinkedIn profiles and activity, and other social content feeds.

Typical Data Extracted: User profile data, post content, timestamps, engagement metrics (likes, replies, stars), follower counts, content metadata.

Why List Crawling Excels Here: The repeated designs of posts, feeds, or user cards mean you can extract large quantities of activity or profile data following a single, robust selector logic.

If a social or professional site displays posts or users in standard, predictable sections (e.g., feeds, timelines, cards), smart list crawling gives you structured, actionable datasets.

Choosing the Right Scraping Tool for Your Project

The choice of technology for list crawling depends on your target website’s complexity and your project’s requirements. Let’s break down where each tool excels so you can confidently select the right fit:

How to Match Scraping Tools to Site Complexity

Choosing the right scraping tool is essential for successful list crawling, and there's no one-size-fits-all answer. The website’s structure, whether it relies on JavaScript, and its anti-bot defenses will all affect which tool works best.

Here’s a quick rundown to help you decide which approach matches your target site's complexity, so you can crawl efficiently and avoid common pitfalls.

BeautifulSoup:

Use for static HTML pages with simple, repeatable structures (e.g., product lists, directory tables)

Perfect when pages don’t rely on JavaScript for content loading.

- Best for: Fast, lightweight projects or learning web scraping

- Limits: Won’t see content loaded by JavaScript

Playwright / Puppeteer:

Use for modern, dynamic websites where items load via JavaScript or infinite scroll (social feeds, SPAs).

These tools automate real browsers to capture content as a real user would see it.

- Best for: Handling JavaScript-driven content, mimicking full browser interactions

- Choose Playwright if you want Python or multi-browser support

- Choose Puppeteer if you’re focused on Node.js/JavaScript and Chromium

Scrapfly:

Use for any site, static or dynamic, requiring reliability, anti-bot bypass, or at-scale data extraction.

Scrapfly is a purpose-built scraping API that handles browser automation, JavaScript rendering, proxy rotation, CAPTCHA solving, and more with minimal setup.

- Best for: Production workloads, anti-bot defenses, complex requirements

- Limits: Paid service; may be overkill for tiny hobby projects

At-a-Glance: Which Tool for Which Job?

| Tool | Use It For |
|---|---|
| BeautifulSoup | Static HTML pages with simple, repeated content like product lists or directory tables. Best for sites where all data is visible in "View Page Source". Fast, lightweight, and easy to use for basic list and table extraction without JavaScript. |
| Playwright | Modern websites where lists or tables load via JavaScript (dynamic content), infinite scroll, or require button clicks to reveal items. Great for multi-step navigation, simulating user actions, and scraping data rendered in real browsers across Chrome, Firefox, and more (Python/Node.js). |
| Puppeteer | JavaScript-heavy or single-page applications (SPAs), especially if your projects are built with Node.js. Ideal when you need to interact with pages, fill forms, or handle dynamic list loading on Chromium browsers. Strong choice for developers comfortable with JavaScript or TypeScript. |
| Scrapfly | Any website, static or dynamic, protected by anti-bot systems, CAPTCHAs, rate limits, or requiring high-volume/cloud-native scraping. Includes built-in browser automation, proxy rotation, CAPTCHA bypass, AI-powered data extraction, and is designed for production workloads at scale. |

Start with static HTML parsers for simple sites. Use browser automation like Playwright if data is loaded dynamically. For complex or protected sites, a scraping API such as Scrapfly is best. Always pick the simplest tool that fits the job.
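A quick way to apply this rule is to fetch the raw HTML (what "View Page Source" shows) and check whether a few values you can see in the browser are already present in it. The sketch below is a heuristic, not a definitive test: `fetch_raw_html` and `looks_server_rendered` are hypothetical helpers, and it assumes you pick the sample strings by hand from the rendered page.

```python
import html
from urllib.request import Request, urlopen


def fetch_raw_html(url: str) -> str:
    """Fetch a page without running JavaScript, like "View Page Source" shows it."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=30) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def looks_server_rendered(raw_html: str, sample_texts: list[str]) -> bool:
    """Return True if every sample value is already present in the raw HTML.

    If values you can see in the browser are missing here, the list is most
    likely rendered by JavaScript and needs a browser or a scraping API.
    """
    # Decode entities such as &#39; so "Women's" also matches "Women&#39;s"
    text = html.unescape(raw_html)
    return all(sample in text for sample in sample_texts)
```

For example, `looks_server_rendered(fetch_raw_html("https://web-scraping.dev/products"), ["Box of Chocolate Candy"])` checks a product name against the raw listing page; a `False` result on a page you expected to be static is a hint to reach for Playwright instead.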

Crawler Setup

Setting up a basic list crawler requires a few essential components. Python, with its rich ecosystem of libraries, offers an excellent foundation for building effective crawlers.

For our list crawling examples we'll use Python with the following libraries:

- requests - as our HTTP client for retrieving pages.

- BeautifulSoup - for parsing HTML data using CSS Selectors.

- Playwright - for automating a real web browser for crawling tasks

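All of these can be installed with pip. The examples that use the `lxml` parser also need `lxml`, and Playwright needs a one-time browser download:

```shell
$ pip install beautifulsoup4 lxml requests playwright
$ playwright install chromium
```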

Once these libraries are installed, here's a simple list crawler that scrapes a single listing page:

```python
import requests
from bs4 import BeautifulSoup


def crawl_static_list(url):
    # Send HTTP request to the target URL
    response = requests.get(url, headers={"User-Agent": "Mozilla/5.0"})
    # Parse the HTML content
    soup = BeautifulSoup(response.text, "html.parser")
    # Find all product items
    items = soup.select("div.row.product")
    # Extract data from each item
    results = []
    for item in items:
        title = item.select_one("h3.mb-0 a").text.strip()
        price = item.select_one("div.price").text.strip()
        results.append({"title": title, "price": price})
    return results


url = "https://web-scraping.dev/products"
data = crawl_static_list(url)
print(f"Found {len(data)} items")
for item in data[:3]:  # Print first 3 items as example
    print(f"Title: {item['title']}, Price: {item['price']}")
```

Example Output

```text
Found 5 items
Title: Box of Chocolate Candy, Price: 24.99
Title: Dark Red Energy Potion, Price: 4.99
Title: Teal Energy Potion, Price: 4.99
```

In the above code, we're making an HTTP request to a target URL, parsing the HTML content using BeautifulSoup, and then extracting specific data points from each list item.

This approach works well for simple, static lists where all content is loaded immediately. For more complex scenarios like paginated or dynamically loaded lists, you'll need to extend this foundation with additional techniques we'll cover in subsequent sections.

Your crawler's effectiveness largely depends on how well you understand the structure of the target website. Taking time to inspect the HTML using browser developer tools will help you craft precise selectors that accurately target the desired elements.

Let's now see how we can enhance our basic crawler with more advanced capabilities and different list crawling scenarios.

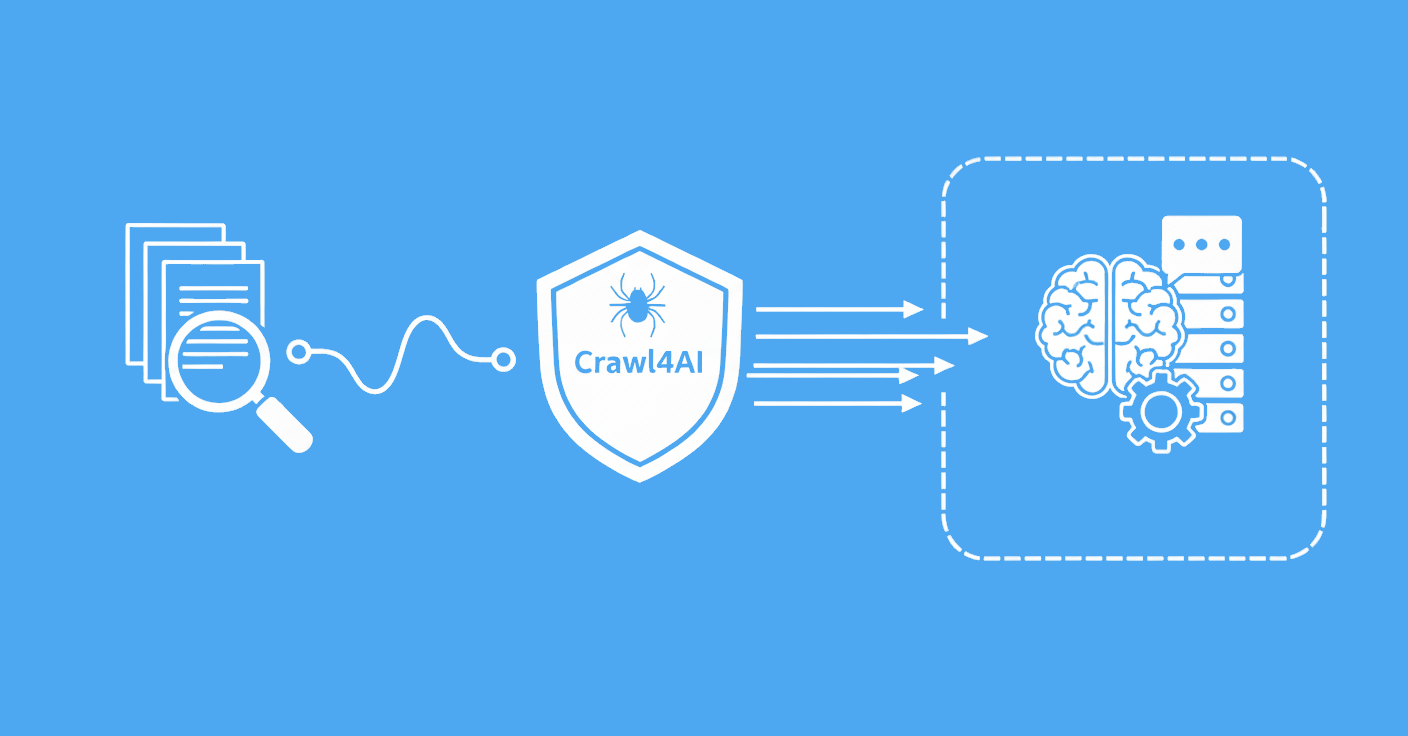

Power-Up with Scrapfly

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - extract web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- LLM prompts - extract data or ask questions using LLMs

- Extraction models - automatically find objects like products, articles, jobs, and more.

- Extraction templates - extract data using your own specification.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

Here's an example of how to scrape a product with the Scrapfly web scraping API:

```python
from scrapfly import ScrapflyClient, ScrapeConfig

# Create a ScrapflyClient instance
client = ScrapflyClient(key='YOUR-SCRAPFLY-KEY')

# Create scrape requests
api_result = client.scrape(ScrapeConfig(
    url="https://web-scraping.dev/product/1",
    # optional: set country to get localized results
    country="us",
    # optional: use cloud browsers
    render_js=True,
    # optional: scroll to the bottom of the page
    auto_scroll=True,
))

print(api_result.result["context"])  # metadata
print(api_result.result["config"])  # request data
print(api_result.scrape_result["content"])  # result html content

# parse data yourself
product = {
    "title": api_result.selector.css("h3.product-title::text").get(),
    "price": api_result.selector.css(".product-price::text").get(),
    "description": api_result.selector.css(".product-description::text").get(),
}
print(product)

# or let AI parser extract it for you!
api_result = client.scrape(ScrapeConfig(
    url="https://web-scraping.dev/product/1",
    # use AI models to find ALL product data available on the page
    extraction_model="product"
))
```

Example Output

```text
{
    "title": "Box of Chocolate Candy",
    "price": "$9.99 ",
    "description": "Indulge your sweet tooth with our Box of Chocolate Candy. Each box contains an assortment of rich, flavorful chocolates with a smooth, creamy filling. Choose from a variety of flavors including zesty orange and sweet cherry. Whether you're looking for the perfect gift or just want to treat yourself, our Box of Chocolate Candy is sure to satisfy.",
}
```

For more, explore the web scraping API and its documentation.

Paginated List Crawling

Paginated lists split the data across multiple pages with numbered navigation. This technique is common in e-commerce, search results, and data directories.

One example of paginated pages is web-scraping.dev/products which splits products through several pages.

Example Crawler

Here's how to build a product list crawler that handles traditional pagination:

```python
import requests
from bs4 import BeautifulSoup
from urllib.parse import urljoin

# Get first page and extract pagination URLs
url = "https://web-scraping.dev/products"
soup = BeautifulSoup(requests.get(url).text, 'html.parser')
# urljoin() resolves relative links; sorting gives a stable crawl order
other_page_urls = sorted(
    {urljoin(url, a.attrs["href"]) for a in soup.select(".paging>a") if a.attrs.get("href")}
)

# Product URL -> title. The paging links can point back to page 1, so keying
# by product URL stops the same product from being collected twice.
products = {}


def collect_titles(page_soup):
    for a in page_soup.select(".product h3 a"):
        if a.get("href"):
            products.setdefault(urljoin(url, a["href"]), a.text.strip())


# Extract product titles from first page
collect_titles(soup)

# Extract product titles from other pages
for page_url in other_page_urls:
    page_soup = BeautifulSoup(requests.get(page_url).text, 'html.parser')
    collect_titles(page_soup)

all_product_titles = list(products.values())

# Print results
print(f"Total products found: {len(all_product_titles)}")
print("\nProduct Titles:")
for i, title in enumerate(all_product_titles, 1):
    print(f"{i}. {title}")
```

Example Output

```text
Total products found: 25

Product Titles:
1. Box of Chocolate Candy
2. Dark Red Energy Potion
3. Teal Energy Potion
4. Red Energy Potion
5. Blue Energy Potion
6. Dragon Energy Potion
7. Hiking Boots for Outdoor Adventures
8. Women's High Heel Sandals
9. Running Shoes for Men
10. Kids' Light-Up Sneakers
11. Classic Leather Sneakers
12. Cat-Ear Beanie
13. Box of Chocolate Candy
14. Dark Red Energy Potion
15. Teal Energy Potion
16. Red Energy Potion
17. Blue Energy Potion
18. Dragon Energy Potion
19. Hiking Boots for Outdoor Adventures
20. Women's High Heel Sandals
21. Running Shoes for Men
22. Kids' Light-Up Sneakers
23. Classic Leather Sneakers
24. Cat-Ear Beanie
25. Box of Chocolate Candy
```

In the above code, we first get the first page and extract pagination URLs. Then, we extract product titles from the first page and other pages. Finally, we print the total number of products found and the product titles.

Crawling Challenges

While crawling product lists, you'll encounter several challenges:

Pagination Variations: Some sites use query parameters like `?page=2`, while others use path segments like `/page/2/` or completely different URL structures.

Paging Limiting: Many sites restrict the maximum number of viewable pages (typically 20-50), even with thousands of products. Overcome this by using filters like price ranges to access the complete dataset, as demonstrated in our paging limit bypass tutorial.

Changing Layouts: Product list layouts may vary across different categories or during site updates.

Missing Data: Not all products will have complete information, requiring robust error handling.

Effective product list crawling requires adapting to these challenges with techniques like request throttling, robust selectors, and comprehensive error handling.
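For the missing-data case, one option is to make field parsers return `None` instead of raising, so a single incomplete listing doesn't stop the crawl. A minimal sketch for price fields, assuming US-style formatting where "," is a thousands separator (European values such as "1,00" would need different handling); `parse_price` is a hypothetical helper:

```python
import re
from typing import Optional

# Digits with optional thousands separators and an optional decimal part
_PRICE_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(raw: Optional[str]) -> Optional[float]:
    """Turn scraped price text such as "$9.99 " or "1,299.00" into a float.

    Returns None when the field is missing or contains no number.
    """
    if not raw:
        return None
    match = _PRICE_RE.search(raw)
    if match is None:
        return None
    # Assumption: "," is a thousands separator, so it is simply removed
    return float(match.group().replace(",", ""))
```

In the basic crawler, the same idea applies one step earlier: look up `el = item.select_one("div.price")` and call `parse_price(el.text if el else None)`, so a listing without a price yields `None` instead of an `AttributeError`.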

Let's now explore how to handle more dynamic lists that load content as you scroll.

Endless List Crawling

Modern websites often implement infinite scrolling, a technique that continuously loads new content as the user scrolls down the page.

These "endless" lists present unique challenges for crawlers since the content isn't divided into distinct pages but is loaded dynamically via JavaScript.

One example of an infinite list is the web-scraping.dev/testimonials page.

Let's see how we can crawl it next.

Example Crawler

To tackle endless lists, the easiest method is to use a headless browser that can execute JavaScript and simulate scrolling. Here's an example using Playwright and Python:

```python
# This example uses Playwright, but Selenium works with a similar approach
from playwright.sync_api import sync_playwright

with sync_playwright() as p:
    browser = p.chromium.launch(headless=False)
    context = browser.new_context()
    page = context.new_page()
    page.goto("https://web-scraping.dev/testimonials/")

    # scroll to the bottom:
    _prev_height = -1
    _max_scrolls = 100
    _scroll_count = 0
    while _scroll_count < _max_scrolls:
        # Execute JavaScript to scroll to the bottom of the page
        page.evaluate("window.scrollTo(0, document.body.scrollHeight)")
        # Wait for new content to load (change this value as needed)
        page.wait_for_timeout(1000)
        # Check whether the scroll height changed: if so, more content was loaded
        new_height = page.evaluate("document.body.scrollHeight")
        if new_height == _prev_height:
            break
        _prev_height = new_height
        _scroll_count += 1

    # now we can collect all loaded data (as text, not HTML):
    results = []
    for element in page.locator(".testimonial").element_handles():
        text = element.query_selector(".text").inner_text()
        results.append(text)

    browser.close()

print(f"scraped {len(results)} results")
```

Example Output

```text
scraped 60 results
```

In the above code, we are using Playwright to control a browser and scroll to the bottom of the page to load all the testimonials. We are then collecting the text of each testimonial and printing the number of testimonials scraped. This approach effectively handles endless lists that load content dynamically.

Crawling Challenges

Endless list crawling comes with its own set of challenges:

Speed: Browser crawling is much slower than API-based approaches. When possible, reverse engineer the site's API endpoints to fetch the data directly, which is often dramatically faster (as shown in our reverse engineering of endless paging guide).

Resource Intensity: Running a headless browser consumes significantly more resources than simple HTTP requests.

Element Staleness: As the page updates, previously found elements may become "stale" and unusable, requiring refetching.

Scroll Triggers: Some sites use scroll-percentage triggers rather than scrolling to the bottom, requiring more nuanced scroll simulation.
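Following the Speed point above: once the browser's Network tab reveals the endpoint a page calls while scrolling, paging through it can be a plain loop. A minimal sketch, assuming the endpoint takes a page number and returns an empty list past the last page; `crawl_api_pages` and `fetch_page` are hypothetical names, and the HTTP call is injected so the loop logic stays independent of any particular site:

```python
from typing import Callable, Iterator


def crawl_api_pages(
    fetch_page: Callable[[int], list[dict]],
    start: int = 1,
    max_pages: int = 1000,
) -> Iterator[dict]:
    """Yield items from a paginated endpoint until a page comes back empty.

    fetch_page(page_number) should call the endpoint found in the browser's
    Network tab and return that page's items as a list.
    """
    for page in range(start, start + max_pages):
        items = fetch_page(page)
        if not items:
            # An empty page means the list is exhausted
            return
        yield from items
```

With requests, `fetch_page` might look like `lambda n: requests.get(API_URL, params={"page": n}).json()["items"]`, where `API_URL` and the `"items"` key are placeholders for whatever the real endpoint uses. The `max_pages` cap guards against endpoints that never return an empty page.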

Now that we've covered dynamic content loading, let's explore how to extract structured data from article-based lists, which present their own unique challenges.

List Article Crawling

Articles featuring lists (like "Top 10 Programming Languages" or "5 Best Travel Destinations") represent another valuable source of structured data. These lists are typically embedded within article content, organized under headings or with numbered sections.

Example Crawler

For this example, let's scrape Scrapfly's own top-10 listicle article using requests and BeautifulSoup:

```python
import requests
from bs4 import BeautifulSoup

response = requests.get("https://scrapfly.io/blog/top-10-web-scraping-libraries-in-python/")

# Check if the request was successful
if response.status_code != 200:
    print(f"Failed to retrieve the page. Status code: {response.status_code}")
    libraries = []
else:
    # Parse the HTML content with BeautifulSoup
    # Using 'lxml' parser for better performance and more robust parsing
    soup = BeautifulSoup(response.text, 'lxml')
    # Find all h2 headings which represent the list items
    headings = soup.find_all('h2')
    libraries = []
    # Headings that are page sections rather than list items
    skip_titles = {"summary", "related posts"}
    for heading in headings:
        # Get the heading text (library name)
        title = heading.text.strip()
        # Skip non-item sections like "Summary" and "Related Posts"
        if title.lower() in skip_titles:
            continue
        # Get the next paragraph for a brief description
        # In BeautifulSoup, we use .find_next() to get the next element
        next_paragraph = heading.find_next('p')
        description = next_paragraph.text.strip() if next_paragraph else ''
        libraries.append({
            "name": title,
            "description": description
        })

# Print the results
print("Top Web Scraping Libraries in Python:")
for i, lib in enumerate(libraries, 1):
    print(f"{i}. {lib['name']}")
    print(f"   {lib['description'][:100]}...")  # Print first 100 chars of description
```

Example Output

```text
Top Web Scraping Libraries in Python:
1. HTTPX
   HTTPX is by far the most complete and modern HTTP client package for Python. It is inspired by the p...
2. Parsel and LXML
   LXML is a fast and feature-rich HTML/XML parser for Python. It is a wrapper around the C library lib...
3. BeautifulSoup
   Beautifulsoup (aka bs4) is another HTML parser library in Python. Though it's much more than that....
4. JMESPath and JSONPath
   JMESPath and JSONPath are two libraries that allow you to query JSON data using a query language sim...
5. Playwright and Selenium
   Headless browsers are becoming very popular in web scraping as a way to deal with dynamic javascript...
6. Cerberus and Pydantic
   An often overlooked process of web scraping is the data quality assurance step. Web scraping is a un...
7. Scrapfly Python SDK
   ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale....
```

In this example, we used the requests library to make an HTTP GET request to a blog post about the top web scraping libraries in Python. We then used BeautifulSoup to parse the HTML content of the page and extract the list of libraries and their descriptions. Finally, we printed the results to the console.

Crawling Challenges

Extracting data from list articles requires understanding the content structure and accounting for variations in formatting. Some articles may use numbering in headings, while others rely solely on heading hierarchy. A robust crawler should handle these variations and clean the extracted text to remove extraneous content.
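To handle numbered versus plain headings and drop non-item sections, the heading text can be normalized before it is stored. A minimal sketch, assuming item headings start with numbering like "1.", "2)", "#3:" or "10 -"; `clean_list_heading` and the `NON_ITEM_HEADINGS` set are hypothetical and would need adjusting per site:

```python
import re
from typing import Optional

# Leading numbering followed by a separator or a space ("1.", "2)", "#3:", "10 -").
# A number glued to a word, like "3D Printing", is deliberately left alone.
_NUMBERING_RE = re.compile(r"^\s*#?\d+(?:\s*[.):\-]\s*|\s+)")

# Headings that are page sections rather than list entries (adjust per site)
NON_ITEM_HEADINGS = {"summary", "related posts", "faq", "conclusion"}


def clean_list_heading(text: str) -> Optional[str]:
    """Strip list numbering and extra whitespace; return None for non-item headings."""
    # Collapse runs of whitespace, including non-breaking spaces
    title = " ".join(text.split())
    title = _NUMBERING_RE.sub("", title)
    if not title or title.lower() in NON_ITEM_HEADINGS:
        return None
    return title
```

Checking the section names after stripping the numbering means a heading like "8. Related Posts" is filtered out as well as a plain "Related Posts".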

There are some tools that can assist you with listicle scraping:

- newspaper4k (previously newspaper3k) implements article parsing from HTML and various helper functions that can help to identify lists.

- goose3 is another library that can extract structured data from articles, including lists.

- trafilatura is another powerful HTML parser with many prebuilt functions to extract structured data from articles.

- parsel extracts data using powerful XPath and CSS selectors, allowing for very flexible and reliable extraction.

- LLMs with RAG can be an easy way to extract data from list articles.

Let's see tabular data next, which presents yet another structure for list information.

Table List Crawling

Tables represent another common format for presenting list data on the web. Whether implemented as HTML <table> elements or styled as tables using CSS grids or other layout techniques, they provide a structured way to display related data in rows and columns.

Example Crawler

For this example, let's look at the table data section on the web-scraping.dev/product/1 page.

Here's how to extract data from HTML tables using the BeautifulSoup HTML parsing library:

```python
from bs4 import BeautifulSoup
import requests

response = requests.get("https://web-scraping.dev/product/1")
html = response.text
soup = BeautifulSoup(html, "lxml")

# First, select the desired table element (the 2nd one on the page)
table = soup.find_all('table', {'class': 'table-product'})[1]

headers = []
rows = []
for i, row in enumerate(table.find_all('tr')):
    if i == 0:
        headers = [el.text.strip() for el in row.find_all('th')]
    else:
        rows.append([el.text.strip() for el in row.find_all('td')])

print(headers)
print(rows)
```

Example Output

```text
['Version', 'Package Weight', 'Package Dimension', 'Variants', 'Delivery Type']
[['Pack 1', '1,00 kg', '100x230 cm', '6 available', '1 Day shipping'], ['Pack 2', '2,11 kg', '200x460 cm', '6 available', '1 Day shipping'], ['Pack 3', '3,22 kg', '300x690 cm', '6 available', '1 Day shipping'], ['Pack 4', '4,33 kg', '400x920 cm', '6 available', '1 Day shipping'], ['Pack 5', '5,44 kg', '500x1150 cm', '6 available', '1 Day shipping']]
```

In the above code, we select the product table, treat its first row as the header row, and collect the cell text of every following row. This assumes the first `<tr>` holds `<th>` header cells; tables without an explicit header row need extra handling. The result is structured data that preserves the relationships between columns and rows.
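The `headers` and `rows` lists are easier to work with once each row is keyed by its column name. A minimal sketch; `rows_to_records` is a hypothetical helper, and it does not handle `rowspan`/`colspan`, which would need the cells expanded first:

```python
def rows_to_records(headers: list[str], rows: list[list[str]]) -> list[dict[str, str]]:
    """Pair each table row with the header row so every cell keeps its column name.

    Short rows are padded with empty strings and extra cells are dropped,
    so ragged tables don't raise errors.
    """
    records = []
    for row in rows:
        # Negative padding counts produce an empty list, so long rows are untouched
        padded = row + [""] * (len(headers) - len(row))
        # zip() stops at the shorter sequence, dropping cells beyond the headers
        records.append(dict(zip(headers, padded)))
    return records
```

Applied to the output above, `rows_to_records(headers, rows)[0]["Package Weight"]` would give `'1,00 kg'`.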

Crawling Challenges

When crawling tables, it's important to look beyond the obvious <table> elements. Many modern websites implement table-like layouts using CSS grid, flexbox, or other techniques. Identifying these structures requires careful inspection of the DOM and adapting your selectors accordingly.

Most table structures can be handled with BeautifulSoup, CSS selectors, or XPath-powered algorithms, while more generic solutions can use LLMs and AI. One commonly used technique is to use LLMs to convert HTML to Markdown format, which often produces accurate tables even from loosely structured HTML.

Now, let's explore how to crawl search engine results pages for list-type content.

SERP List Crawling

Search Engine Results Pages (SERPs) offer a treasure trove of list-based content, presenting curated links to pages relevant to specific keywords. Crawling SERPs can help you discover list articles and other structured content across the web.

Example Crawler

Here's a basic approach to crawling Google search results:

```python
import requests
from bs4 import BeautifulSoup
import urllib.parse


def crawl_google_serp(query, num_results=10):
    # Format the query for URL
    encoded_query = urllib.parse.quote(query)
    # Create Google search URL
    url = f"https://www.google.com/search?q={encoded_query}&num={num_results}"
    # Add headers to mimic a browser
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",
        "Accept-Language": "en-US,en;q=0.9"
    }
    response = requests.get(url, headers=headers)
    soup = BeautifulSoup(response.text, "html.parser")

    # Extract search results
    results = []
    # Target the organic search results
    for result in soup.select("div.g"):
        title_element = result.select_one("h3")
        if title_element:
            title = title_element.text
            # Extract URL
            link_element = result.select_one("a")
            link = link_element.get("href") if link_element else None
            # Extract snippet
            snippet_element = result.select_one("div.VwiC3b")
            snippet = snippet_element.text if snippet_element else None
            results.append({
                "title": title,
                "url": link,
                "snippet": snippet
            })
    return results


for r in crawl_google_serp("python"):
    print(r["title"], r["url"])
```

```python
# Alternative: run the same search through the Scrapfly SDK
from scrapfly import ScrapflyClient, ScrapeConfig

scrapfly = ScrapflyClient(key="YOUR-SCRAPFLY-KEY")
result = scrapfly.scrape(ScrapeConfig(
    url="https://www.google.com/search?q=python",
    # select country to get localized results
    country="us",
    # enable cloud browsers
    render_js=True,
    # scroll to the bottom of the page
    auto_scroll=True,
    # use AI to extract data
    extraction_model="search_engine_results",
))
print(result.content)
```

In the above code, we're constructing a Google search query URL, sending an HTTP request with browser-like headers, and then parsing the HTML to extract organic search results. Each result includes the title, URL, and snippet text, which can help you identify list-type content for further crawling.
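Depending on the response variant Google serves, result links may be direct URLs or redirect wrappers such as `/url?q=https://example.com/&sa=U`; this is an observed pattern, not a documented contract, and it changes over time. A minimal sketch that normalizes both forms, where `clean_result_url` is a hypothetical helper:

```python
from typing import Optional
from urllib.parse import parse_qs, urljoin, urlsplit


def clean_result_url(href: Optional[str], base: str = "https://www.google.com") -> Optional[str]:
    """Resolve a SERP link to its destination URL.

    Assumption: redirect wrappers use the /url path and carry the real
    destination in a "q" (or "url") query parameter.
    """
    if not href:
        return None
    # Make relative links like "/url?q=..." absolute
    absolute = urljoin(base, href)
    parts = urlsplit(absolute)
    if parts.path == "/url":
        params = parse_qs(parts.query)  # also percent-decodes the values
        for key in ("q", "url"):
            if params.get(key):
                return params[key][0]
    return absolute
```

Each `link` collected by `crawl_google_serp` could be passed through this function before deciding which result pages to crawl next.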

Crawling Challenges

It's worth noting that crawling search engines directly can be challenging due to their very strong anti-bot measures. For production applications, you may need more sophisticated techniques to avoid blocks; for that, see our blocking bypass introduction tutorial.

Scrapfly can bypass SERP blocking measures and return AI-extracted data for SERP pages using the AI Web Scraping API.

Discovering List Pages with Crawler API

When you need to find all list pages across a domain before scraping them, Scrapfly's Crawler API can automatically crawl entire websites, discovering product listings, search results, and other list-type pages without manually building URL discovery logic.

FAQs

Now let's take a look at some frequently asked questions about list crawling.

What's the difference between list crawling and general web scraping?

List crawling focuses on extracting structured data from lists, such as paginated content, infinite scrolls, and tables. General web scraping targets various elements across different pages, while list crawling requires specific techniques for handling pagination, scroll events, and nested structures.

How do I handle rate limiting when crawling large lists?

Use adaptive delays (1-3 seconds) and increase them if you get 429 errors. Implement exponential backoff for failed requests and rotate proxies to distribute traffic. A request queuing system helps maintain a steady and sustainable request rate.
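The backoff part of this answer can be sketched as a small retry wrapper. This is a minimal sketch, not a complete rate limiter (no proxy rotation or queue): `fetch_with_backoff` and `RetryableStatus` are hypothetical names, and `sleep` is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable


class RetryableStatus(Exception):
    """Raised by a fetch function for responses worth retrying, e.g. HTTP 429 or 503."""


def fetch_with_backoff(
    fetch: Callable[[], str],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(), retrying with exponential backoff plus jitter on RetryableStatus."""
    for attempt in range(max_retries + 1):
        try:
            return fetch()
        except RetryableStatus:
            if attempt == max_retries:
                raise  # out of retries: let the caller handle it
            # Exponential delay (1s, 2s, 4s, ...) capped at max_delay
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Random jitter so parallel workers don't retry in lockstep
            sleep(delay + random.uniform(0, base_delay))
    raise ValueError("max_retries must be >= 0")
```

For example, `fetch` could wrap `requests.get(url)` and raise `RetryableStatus` when `response.status_code in (429, 503)`, returning `response.text` otherwise.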

How can I extract structured data from deeply nested lists?

Identify nesting patterns using developer tools. Use a recursive function to process items and their children while preserving relationships. CSS selectors, XPath, and depth-first traversal help extract data while maintaining hierarchy.
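The recursive approach can be sketched with the standard library's ElementTree, which only accepts well-formed markup; on real pages you would apply the same recursion to BeautifulSoup or lxml elements. `parse_nested_list` is a hypothetical helper that walks `<ul>`/`<ol>` lists depth-first and keeps each item's children attached to it:

```python
import xml.etree.ElementTree as ET

LIST_TAGS = {"ul", "ol"}


def _own_text(li: ET.Element) -> str:
    """Text of a <li>, excluding any nested lists inside it."""
    parts = [li.text or ""]
    for child in li:
        if child.tag not in LIST_TAGS:
            parts.append("".join(child.itertext()))
        parts.append(child.tail or "")
    return " ".join("".join(parts).split())


def parse_nested_list(list_el: ET.Element, depth: int = 0) -> list[dict]:
    """Depth-first walk of a <ul>/<ol> element, preserving the item hierarchy."""
    items = []
    for li in list_el.findall("li"):
        children = []
        for child in li:
            if child.tag in LIST_TAGS:
                # Recurse into the sub-list one level deeper
                children.extend(parse_nested_list(child, depth + 1))
        items.append({"text": _own_text(li), "depth": depth, "children": children})
    return items
```

For example, `parse_nested_list(ET.fromstring("<ul><li>Fruit<ul><li>Apple</li></ul></li></ul>"))` returns a single "Fruit" item whose `children` list holds "Apple" at depth 1.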

What's the best approach for crawling infinite scroll lists?

Use headless browsers (Playwright, Selenium) to simulate scrolling and trigger content loading. Implement scroll detection to stop when no new content loads. For better performance, reverse engineer the site's API endpoints for direct data fetching.

How do I handle pagination limits when crawling product catalogs?

Use filters like price ranges, categories, or search terms to access different data subsets. Implement URL pattern recognition to handle various pagination formats. Consider using sitemaps or API endpoints when available.
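Both ideas in this answer can be combined: split the price range into buckets that each stay under the paging limit, then paginate within each bucket using the site's URL pattern. A minimal sketch: the `min_price`/`max_price` parameter names and the example.com URLs are hypothetical placeholders for whatever filters the target site actually exposes.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_params(url: str, **params) -> str:
    """Return url with the given query parameters added or replaced (?page=2 style)."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query.update({key: str(value) for key, value in params.items()})
    return urlunsplit(parts._replace(query=urlencode(query)))


def path_page_url(url: str, page: int) -> str:
    """Path-style pagination: /blog -> /blog/page/2/"""
    parts = urlsplit(url)
    return urlunsplit(parts._replace(path=parts.path.rstrip("/") + f"/page/{page}/"))


def price_buckets(low: float, high: float, parts: int) -> list[tuple[float, float]]:
    """Split [low, high] into `parts` consecutive, equal-width price ranges."""
    if parts < 1 or high <= low:
        raise ValueError("need parts >= 1 and high > low")
    step = (high - low) / parts
    edges = [low + i * step for i in range(parts)] + [high]
    return [(round(edges[i], 2), round(edges[i + 1], 2)) for i in range(parts)]
```

A crawler would then request `with_params(base_url, min_price=lo, max_price=hi, page=n)` for each bucket until a page comes back empty. Adjacent buckets share their edge prices, so if the site's filter is inclusive on both ends, deduplicate results by product URL; buckets that still exceed the paging limit can be split again.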

Can I use AI/LLMs for list crawling instead of traditional parsing?

Yes, LLMs can extract structured data from HTML using natural language instructions. This approach is flexible for varying list formats but may be slower and more expensive than traditional parsing methods.

Summary

List crawling is the process of extracting useful, structured data from web pages that display information in clear, repeated formats like product lists, job postings, business directories, or search results tables.

In this guide, you discovered:

- What list crawling is and how it compares to general web scraping.

- How to recognize websites that are good candidates for list crawling by checking their structure, layout consistency, and use of technologies like JavaScript.

- The main types of sites ideal for list crawling, including e-commerce catalogs, business directories, job boards, review platforms, and social or professional feeds.

- How to choose the right tool for the job (e.g., BeautifulSoup for static pages, Playwright for dynamic sites, or Scrapfly for advanced, anti-bot-defended targets).

- How to implement practical crawling techniques, from basic examples to advanced browser automation and AI-powered extraction.

- How to handle real-world challenges like pagination, infinite scrolling, rate limiting, and data cleaning.

By following this guide, you’ll be able to confidently assess any website, select the right extraction approach, and turn even complex web lists into reliable, well-structured data you can use anywhere.