Large Language Models (LLMs) have revolutionized natural language processing tasks, from chatbots to summarization tools. However, concerns about privacy, latency, and control have led many to explore local deployment of LLMs.

In this blog post, we'll explore what Local LLMs are, the best options available, their requirements, and how they integrate with modern tools like LangChain for advanced applications.

Key Takeaways

Learn what a local LLM is and how to deploy LLaMA, Qwen, and Mistral models locally with GPU acceleration and LangChain integration.

- Deploy LLaMA, Qwen, and Mistral models locally with GPU acceleration and memory optimization for production applications

- Configure model quantization and fine-tuning for specific use cases and hardware constraints

- Implement LangChain integration for advanced AI workflows and application development

- Configure privacy and security measures for sensitive data processing with local LLM deployment

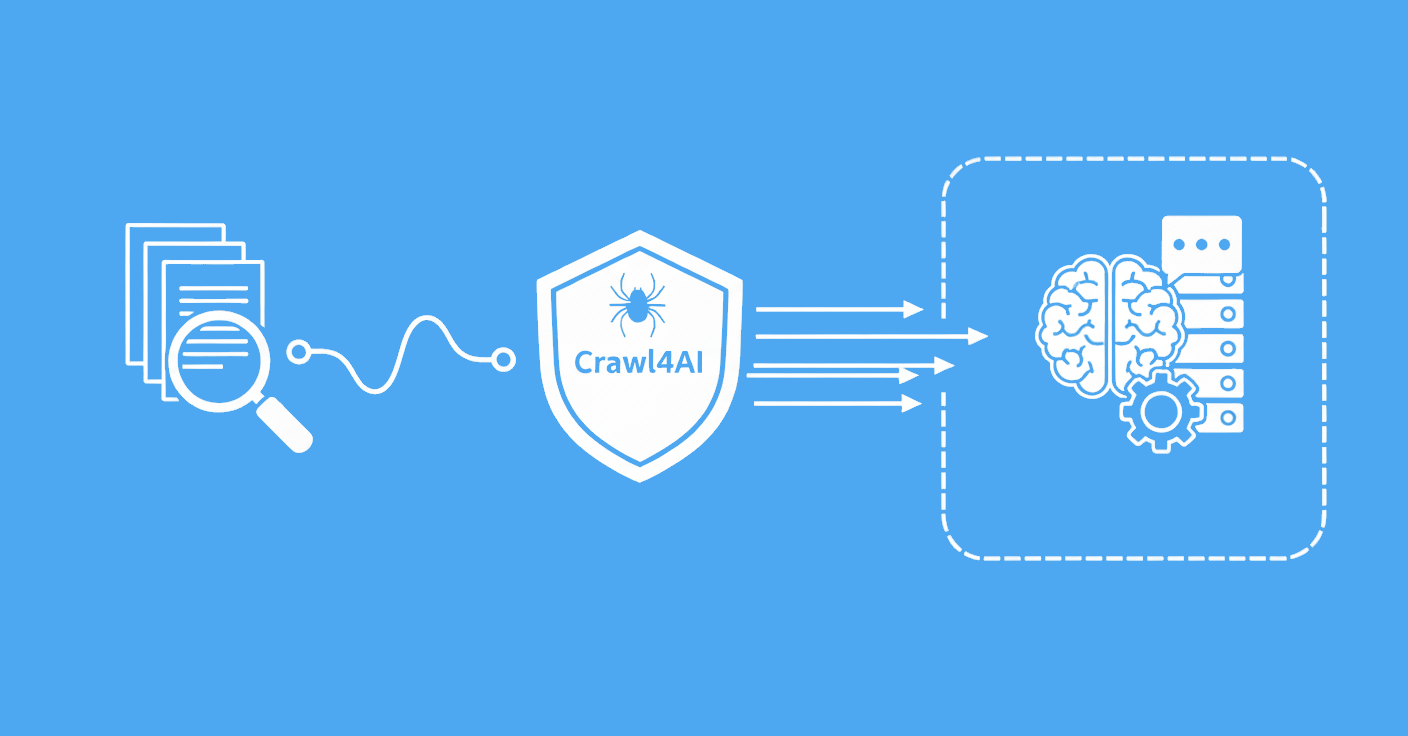

- Use specialized tools like ScrapFly for automated data collection and preprocessing for LLM training

- Implement model evaluation and performance monitoring for reliable AI application deployment

What is a Local LLM?

A Local LLM is a machine learning model deployed and executed on local hardware, rather than relying on external cloud services. Unlike cloud-based LLMs, Local LLMs enable organizations to process sensitive data securely while reducing reliance on external servers.

These models offer greater privacy, reduced latency, and enhanced control over customizations, making them ideal for use cases requiring high levels of confidentiality and adaptability.

Best Local LLM Options

Every day, new open-source LLMs are released, each claiming to be the best for a specific purpose. While this rapid development is beneficial for both AI advancement and the open-source community, it can be challenging to keep track of the latest and most effective models.

However, there are established mainstream open-source models that have been thoroughly tested and developed by large teams of machine learning experts.

LLaMA

The LLaMA (Large Language Model Meta AI) series, developed by Meta, offers a range of models designed for various natural language processing tasks. Here are some key options and features:

- LLaMA 3.1: This version includes models with parameters ranging from 8B to 405B. It's designed for a wide range of NLP tasks and supports multiple languages.

- LLaMA 3.2: Available in sizes like 3B and 1B, this version is optimized for efficient deployment on consumer hardware.

- LLaMA 3.3: The newest release in the LLaMA 3 series, a 70B-parameter instruction-tuned model designed for large-scale AI applications.

These models are trained on trillions of tokens and are capable of handling a variety of tasks, from text generation to language translation. They are also available on platforms like Hugging Face, making them accessible for research and development.

Qwen

Developed by Alibaba Cloud, the Qwen series has made significant strides in the open-source LLM arena:

- Qwen2.5-72B: A 72-billion-parameter dense, decoder-only language model that, in Alibaba's reported benchmarks, outperforms leading open-source models such as LLaMA 3.1-70B and Mistral-Large-V2.

- Qwen2.5-Coder: Specialized for coding tasks, this model was trained on 5.5 trillion tokens of code-related data, which lets even its smaller variants compete with larger language models on coding benchmarks.

The Qwen series is recognized for its robust performance across diverse tasks, including coding and mathematical problem-solving.

Mistral

Mistral AI, a Paris-based startup, has rapidly emerged as a key player in the European AI sector, developing several AI language models capable of performing various tasks:

- Mistral 7B: A 7-billion-parameter model that outperforms LLaMA 2 13B across all evaluated benchmarks and LLaMA 1 34B in reasoning, mathematics, and code generation. It leverages grouped-query attention (GQA) for faster inference and sliding window attention (SWA) to handle sequences of arbitrary length with reduced inference cost.
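Both techniques shrink the key/value (KV) cache, which matters when VRAM is limited. As a rough worked estimate, the sketch below assumes 16-bit cache entries and uses the Mistral 7B configuration reported in its paper (32 layers, 8 KV heads versus 32 query heads, head dimension 128, a 4,096-token window, and an 8,192-token context), with SWA paired with the paper's rolling buffer cache. The 32-KV-head, no-window baseline is hypothetical and only there for comparison.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Memory for cached keys and values: one K and one V vector per layer, KV head, and position."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def cached_positions(seq_len, window):
    """With sliding window attention and a rolling buffer, at most `window` positions are cached."""
    return min(seq_len, window)


# Mistral 7B settings as reported in the Mistral 7B paper.
MISTRAL_7B = {"n_layers": 32, "n_kv_heads": 8, "head_dim": 128}
WINDOW = 4096
seq_len = 8192  # the paper's context length

gqa_swa = kv_cache_bytes(**MISTRAL_7B, seq_len=cached_positions(seq_len, WINDOW))
# Hypothetical baseline: same depth, one KV head per query head (32), and no window.
mha_full = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, seq_len=seq_len)

print(f"GQA + SWA: {gqa_swa / 2**20:,.0f} MiB")
print(f"MHA, no window: {mha_full / 2**20:,.0f} MiB")
```

Under these assumptions the cache drops from about 4 GiB to 512 MiB: a 4x saving from sharing each KV head across four query heads, and a further 2x from capping the cache at the window size.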

Mistral AI has also partnered with Microsoft: Microsoft's Azure customers can access Mistral's models, and Mistral in turn gains access to Microsoft's computing resources.

These open-source LLMs are continually evolving, with new versions and specialized models being released regularly. Staying informed about the latest developments is essential to fully utilize their potential in various applications.

To get the most recent benchmarks on newly released open-source LLMs, check out the popular Open LLM Leaderboard on Hugging Face.

Local LLM Requirements

Running open-source LLMs locally can be a rewarding experience, but it does come with some hardware and software requirements. Here are the key components you'll need:

- GPU: A powerful GPU is essential for running large language models efficiently. For example, the NVIDIA GeForce RTX 4090 with 24 GB of VRAM is a popular choice. Smaller models might run on GPUs with less VRAM, but for larger models like LLaMA 3.1 405B, you'll need multiple high-end GPUs.

- CPU: A multi-core CPU can help with preprocessing and managing data. While the GPU handles most of the heavy lifting, a good CPU ensures smooth operation.

- RAM: Adequate RAM is crucial for managing the model’s weights and data. A minimum of 16GB is recommended for smaller models, while larger models may require 64GB or more.

- Storage: SSDs (Solid State Drives) are preferred for faster data access and model loading times. LLMs, especially larger versions, require significant storage space. Plan for 50GB to 300GB+, depending on the model size and additional resources.
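The memory figures above follow mostly from parameter count times bytes per weight. A back-of-the-envelope sketch, assuming a flat 20% overhead for runtime buffers and the KV cache; that overhead is an assumption, and real usage varies with the runtime, context length, and quantization format (4-bit formats typically spend slightly more than 4 bits per weight in practice).

```python
def weight_memory_gb(params_billion, bits_per_weight=16, overhead=1.2):
    """Rough memory (in GB, 1 GB = 1e9 bytes) needed to load a model's weights.

    `overhead` is an assumed multiplier for runtime buffers and the KV cache.
    """
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


models = [("LLaMA 3.2 1B", 1), ("LLaMA 3.1 8B", 8), ("LLaMA 3.3 70B", 70), ("LLaMA 3.1 405B", 405)]
for name, params in models:
    fp16 = weight_memory_gb(params, bits_per_weight=16)
    q4 = weight_memory_gb(params, bits_per_weight=4)
    print(f"{name:>15}: ~{fp16:,.0f} GB at 16-bit, ~{q4:,.0f} GB at 4-bit")
```

By this estimate, a 70B model needs roughly 42 GB even at 4 bits, more than a single 24 GB RTX 4090 offers, which is why such models are usually split across GPUs or partially offloaded to system RAM.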

Running Local LLMs with Python and LangChain

LangChain is an open-source Python framework designed to simplify the development of applications that leverage Large Language Models (LLMs). While LangChain is commonly used with cloud-based LLMs like OpenAI's GPT or Anthropic's Claude, it also supports local LLMs, making it an excellent tool for building applications that prioritize privacy, cost-effectiveness, or offline functionality.

Key Features of LangChain

LangChain offers several tools and features that make LLM development easier:

Chain Building

LangChain enables the creation of modular workflows with LLMs. These workflows can include pre-processing user inputs, querying the LLM, and post-processing outputs.

Integration with Local LLMs

LangChain supports popular local LLM frameworks like Hugging Face Transformers, GPT4All, and Ollama.

It can work with quantized and optimized builds of models such as LLaMA, Qwen, or Mistral, including variants fine-tuned for specific tasks.

LLM Memory

LangChain's memory components allow LLMs to retain conversational context, enabling applications like chatbots or virtual assistants to maintain continuity across user interactions.
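LangChain ships its own memory and message-history utilities; the plain-Python sketch below only illustrates the underlying idea and is not LangChain's API. It stores turns in the same `(role, content)` tuple format passed to `ChatOllama` in the chatbot example later in this guide, and keeps the system prompt plus the most recent messages so long conversations stay within a small local model's context window. The class name and window size are hypothetical.

```python
class ConversationMemory:
    """Hypothetical sliding-window chat memory using (role, content) tuples."""

    def __init__(self, system_prompt, max_messages=6):
        self.system_message = ("system", system_prompt)
        self.max_messages = max_messages
        self.turns = []

    def add(self, role, content):
        """Record one message, e.g. role "human" or "ai"."""
        self.turns.append((role, content))

    def messages(self):
        """System prompt plus the most recent `max_messages` turns."""
        recent = self.turns[-self.max_messages:] if self.max_messages > 0 else []
        return [self.system_message] + recent


memory = ConversationMemory("You are a helpful assistant.", max_messages=4)
memory.add("human", "Hi, I'm Sam.")
memory.add("ai", "Hello Sam!")
memory.add("human", "What is a local LLM?")
memory.add("ai", "A model that runs on your own hardware.")
memory.add("human", "What was my name?")
print(memory.messages())
```

Passing `memory.messages()` to `llm.invoke(...)` and then recording the answer with `memory.add("ai", reply.content)` keeps the conversation going. The trade-off is that anything older than the window, such as the user's first message here, is forgotten.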

External Tool Integration

LangChain allows LLMs to use external tools, such as search engines, calculators, or databases, for enhanced functionality. This bridges the gap between local computation and dynamic, real-world data.

Prompt Management

LangChain simplifies prompt engineering, making it easier to adapt local models to specific use cases by dynamically crafting effective prompts for tasks like text generation, summarization, or Q&A.

Agent Framework

With LangChain, you can build agent-based systems where LLMs make decisions or execute tasks based on specific goals. These agents can combine reasoning capabilities with tools or APIs.
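Under the hood, an agent is a loop: the model picks an action, the framework runs the matching tool, and the result is fed back until the model decides it is done. The plain-Python sketch below illustrates that loop only; `scripted_model` is a hypothetical stand-in for an LLM that returns its decision as JSON, and none of this is LangChain's agent API.

```python
import json

# Hypothetical tools the agent is allowed to call.
TOOLS = {
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}


def scripted_model(goal, observations):
    """Stand-in for an LLM: returns its next decision as a JSON string."""
    if not observations:
        return json.dumps({"action": "multiply", "args": {"a": 6, "b": 7}})
    return json.dumps({"action": "finish", "answer": f"The result is {observations[-1]}"})


def run_agent(goal, model, tools, max_steps=5):
    """Ask the model for an action, run the tool, feed the result back, repeat."""
    observations = []
    for _ in range(max_steps):
        decision = json.loads(model(goal, observations))
        if decision["action"] == "finish":
            return decision["answer"]
        tool = tools[decision["action"]]
        observations.append(tool(**decision["args"]))
    raise RuntimeError("Agent did not finish within max_steps")


print(run_agent("What is 6 times 7?", scripted_model, TOOLS))
```

The `max_steps` cap matters in practice: a small local model can keep choosing actions without ever finishing, and the loop should fail loudly rather than run forever.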

Steps to Use LangChain with Local LLMs

This guide uses the following versions:

- Python: 3.10+

- LangChain: 0.3.7

- LangChain-Ollama: 0.2.0

- Ollama: 0.1.48

Note: LLM libraries evolve rapidly. For the latest versions, check LangChain releases and Ollama releases.

LangChain's ability to integrate seamlessly with local LLMs makes it a powerful framework for developers looking to build robust, cost-effective, and privacy-conscious AI applications.

Here are the steps to build a simple chatbot in Python with LangChain and the LLaMA 3.2 model.

We will be using Ollama to download and run the model locally. Ollama is an open-source tool that runs LLMs directly on a local machine and creates an isolated environment which prevents any potential conflicts with other installed software.

Python Version Requirement: This guide requires Python 3.10 or higher. Check your version:

$ python --version

Download Ollama version 0.1.48 (or a compatible version) for your machine from the official Ollama download page.

Verify Ollama installation:

$ ollama --version

LLaMA 3.2 1B is the smallest, most lightweight LLM in the LLaMA 3 family. You can pull the LLaMA 3.2 1B model files to your local machine using the following command:

$ ollama pull llama3.2:1b

Install the langchain package using the following command:

$ pip install langchain==0.3.7

Install the LangChain Ollama integration package using the following command:

$ pip install langchain-ollama==0.2.0
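Before writing any LangChain code, a quick sanity check can save debugging time. The sketch below checks the Python version, looks up installed package versions with `importlib.metadata`, and asks the local Ollama server for its pulled models through its `/api/tags` endpoint. It assumes Ollama is listening on its default address, `http://localhost:11434`; the helper names are hypothetical.

```python
import json
import sys
import urllib.error
import urllib.request
from importlib import metadata

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address (assumed unchanged)


def python_ok(min_version=(3, 10)):
    """True if the running interpreter meets the guide's minimum Python version."""
    return sys.version_info >= min_version


def package_version(name):
    """Installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def local_models(base_url=OLLAMA_URL, timeout=2.0):
    """Names of locally pulled models from Ollama's /api/tags, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
            payload = json.load(response)
    except (urllib.error.URLError, OSError):
        return None
    return [model["name"] for model in payload.get("models", [])]


def report():
    print("Python >= 3.10:", python_ok())
    for package in ("langchain", "langchain-ollama"):
        print(f"{package}:", package_version(package) or "not installed")
    models = local_models()
    if models is None:
        print("Ollama server not reachable at", OLLAMA_URL)
    else:
        print("llama3.2:1b pulled:", "llama3.2:1b" in models)


if __name__ == "__main__":
    report()
```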

Now that everything is set up, building a simple chatbot can look something like this:

from langchain_ollama import ChatOllama

llm = ChatOllama(
    model="llama3.2:1b",
)

messages = [
    (
        "system",
        "You are a helpful assistant that translates English to French. Translate the user sentence.",
    ),
    ("human", "I love programming."),
]

ai_msg = llm.invoke(messages)
print(ai_msg.content)

# Example output:
# The translation of "I love programming" from English to French is:
# "J'adore programmer."

As you can see, with minimal code we were able to run a compact LLM locally and build a simple chatbot with it, thanks to Ollama and LangChain. LangChain opens up a wide range of possibilities for building more complex AI applications.
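For a chat-like experience, replies can be streamed piece by piece instead of waiting for the full answer. LangChain chat models, including `ChatOllama`, expose a `stream()` method that yields message chunks with a `.content` attribute; the helper below is a small sketch built on that, and `stream_reply` is a hypothetical name.

```python
def stream_reply(llm, messages):
    """Print a chat model's reply as it streams in and return the complete text."""
    parts = []
    for chunk in llm.stream(messages):
        # Each chunk carries the next piece of the reply in `.content`.
        print(chunk.content, end="", flush=True)
        parts.append(chunk.content)
    print()  # finish the line once the stream ends
    return "".join(parts)
```

With the `llm` and `messages` from the chatbot example, `full_text = stream_reply(llm, messages)` prints the translation as it is generated and still returns the whole reply for later use.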

Local LLM RAG for Data Parsing

Retrieval-Augmented Generation (RAG) enhances LLM capabilities by integrating external data sources. This approach allows Local LLMs to:

- Parse and analyze unstructured datasets.

- Extract actionable insights from large corpora.

- Perform context-aware prompting for specialized queries.
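The retrieve-then-prompt idea behind RAG can be sketched in a few lines. This toy version scores text chunks by bag-of-words cosine similarity and prepends the best matches to the question; it is an illustrative assumption only, since real RAG pipelines typically use an embedding model and a vector store instead of word counts. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens; a deliberately simple stand-in for real embeddings."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return up to k chunks most similar to the query, skipping chunks with no overlap."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(chunk))), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in scored[:k] if score > 0]


def build_prompt(query, chunks, k=2):
    """Augment the question with the retrieved context before sending it to the LLM."""
    context = "\n".join(retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


chunks = [
    "The Box of Chocolate Candy costs $9.99.",
    "Running shoes are available in many sizes.",
    "Store chocolate in a cool, dry place.",
]
print(build_prompt("How much does the chocolate candy cost?", chunks))
```

The returned string can be sent to a local model such as `ChatOllama` as the human message, so the model answers from the supplied context rather than from memory alone.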

There are many official and community integrations with LLM frameworks like LangChain and LlamaIndex that allow using third-party APIs as external data sources for RAG.

For example, Scrapfly provides integrations with both LangChain and LlamaIndex that connect data from Scrapfly's web scraping API to LLM applications built with either framework. To learn more about RAG, check out our comprehensive guide on extending LLMs with web scraping and RAG.

Here is a simple example of using Scrapfly's LangChain integration to feed external data to the LLaMA 3.2 1B model:

from langchain_community.document_loaders import ScrapflyLoader
from langchain_core.output_parsers import JsonOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_ollama import ChatOllama

# 1. load the llama 3.2 model using Ollama
llm = ChatOllama(
    model="llama3.2:1b",
)

# 2. prompt design
prompt = (
    "Given the data fetched from the specified product URLs, "
    "find the following product fields {fields} in the provided "
    "markdown and return a JSON"
)

prompt_template = ChatPromptTemplate.from_messages(
    [("system", prompt), ("user", "{markdown}")]
)

# 3. put together in a chain: form prompt -> LLaMA -> result parser
chain = (
    prompt_template
    | llm
    | JsonOutputParser()
)

# 4. Retrieve page content as markdown using Scrapfly
loader = ScrapflyLoader(
    ["https://web-scraping.dev/product/1"],
    api_key="YOUR SCRAPFLY KEY",
)
docs = loader.load()

# 5. execute RAG chain with your inputs:
print(chain.invoke({
    "fields": ["price", "title"],  # select product price and title
    # join the markdown of each scraped page into a single string
    "markdown": "\n\n".join(doc.page_content for doc in docs),
}))

{'price': '$9.99', 'title': 'Box of Chocolate Candy'}

For building local knowledge bases from entire websites, Scrapfly's Crawler API can automatically crawl domains and return LLM-ready markdown for your local RAG pipeline.
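Crawled pages are often too long to pass to a small local model in a single prompt, so RAG pipelines typically split them into chunks before indexing. A minimal sketch, assuming markdown input and a character budget (the 1,500-character default is an arbitrary choice); `chunk_markdown` is a hypothetical helper, and LangChain's text-splitter package also offers ready-made splitters for this job.

```python
import re


def chunk_markdown(markdown, max_chars=1500):
    """Split markdown at headings, then pack sections into chunks of at most max_chars."""
    # Zero-width split keeps each heading attached to the text that follows it.
    sections = [s.strip() for s in re.split(r"(?m)^(?=#{1,6} )", markdown)]
    chunks, current = [], ""
    for section in sections:
        if not section:
            continue
        # A single oversized section is cut into fixed-size pieces.
        while len(section) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(section[:max_chars])
            section = section[max_chars:]
        if not section:
            continue
        if current and len(current) + 2 + len(section) > max_chars:
            # Adding this section would overflow the budget: start a new chunk.
            chunks.append(current)
            current = section
        else:
            current = f"{current}\n\n{section}" if current else section
    if current:
        chunks.append(current)
    return chunks


page = "# Box of Chocolate Candy\nIndulge your sweet tooth.\n## Price\n$9.99\n## Care\nStore in a cool, dry place."
for i, chunk in enumerate(chunk_markdown(page, max_chars=60)):
    print(i, repr(chunk))
```

Splitting at headings first keeps related text together, while the character budget guarantees every chunk fits the model's context.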

Power-Up with Scrapfly

Running a sophisticated LLM locally to extract data from unstructured content can be very resource-intensive. Scrapfly's advanced Extraction API simplifies the data extraction process using state-of-the-art LLMs.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

Scrapfly's Extraction API includes a number of predefined models that can automatically extract common objects like products, reviews, articles etc.

For example, let's use the product model to extract the product data from the same page used in the example above:

from scrapfly import ScrapflyClient, ScrapeConfig, ExtractionConfig

client = ScrapflyClient(key="YOUR SCRAPFLY KEY")

# First retrieve your html or scrape it using web scraping API
html = client.scrape(ScrapeConfig(url="https://web-scraping.dev/product/1")).content

# Then, extract data using extraction_model parameter:
api_result = client.extract(
    ExtractionConfig(
        body=html,
        content_type="text/html",
        extraction_model="product",
    )
)

print(api_result.result)

Example Output

{
  "data": {
    "aggregate_rating": null,
    "brand": "ChocoDelight",
    "breadcrumbs": null,
    "canonical_url": null,
    "color": null,
    "description": "Indulge your sweet tooth with our Box of Chocolate Candy. Each box contains an assortment of rich, flavorful chocolates with a smooth, creamy filling. Choose from a variety of flavors including zesty orange and sweet cherry. Whether you're looking for the perfect gift or just want to treat yourself, our Box of Chocolate Candy is sure to satisfy.",
    "identifiers": {
      "ean13": null,
      "gtin14": null,
      "gtin8": null,
      "isbn10": null,
      "isbn13": null,
      "ismn": null,
      "issn": null,
      "mpn": null,
      "sku": null,
      "upc": null
    },
    "images": [
      "https://www.web-scraping.dev/assets/products/orange-chocolate-box-small-1.webp",
      "https://www.web-scraping.dev/assets/products/orange-chocolate-box-small-2.webp",
      "https://www.web-scraping.dev/assets/products/orange-chocolate-box-small-3.webp",
      "https://www.web-scraping.dev/assets/products/orange-chocolate-box-small-4.webp"
    ],
    "main_category": "Products",
    "main_image": "https://www.web-scraping.dev/assets/products/orange-chocolate-box-small-1.webp",
    "name": "Box of Chocolate Candy",
    "offers": [
      {
        "availability": "available",
        "currency": "$",
        "price": 9.99,
        "regular_price": 12.99
      }
    ],
    "related_products": [
      {
        "availability": "available",
        "description": null,
        "images": [
          {
            "url": "https://www.web-scraping.dev/assets/products/dragon-potion.webp"
          }
        ],
        "link": "https://web-scraping.dev/product/6",
        "name": "Dragon Energy Potion",
        "price": {
          "amount": 4.99,
          "currency": "$",
          "raw": "4.99"
        }
      },
      {
        "availability": "available",
        "description": null,
        "images": [
          {
            "url": "https://www.web-scraping.dev/assets/products/men-running-shoes.webp"
          }
        ],
        "link": "https://web-scraping.dev/product/9",
        "name": "Running Shoes for Men",
        "price": {
          "amount": 49.99,
          "currency": "$",
          "raw": "49.99"
        }
      },
      {
        "availability": "available",
        "description": null,
        "images": [
          {
            "url": "https://www.web-scraping.dev/assets/products/women-sandals-beige-1.webp"
          }
        ],
        "link": "https://web-scraping.dev/product/20",
        "name": "Women's High Heel Sandals",
        "price": {
          "amount": 59.99,
          "currency": "$",
          "raw": "59.99"
        }
      },
      {
        "availability": "available",
        "description": null,
        "images": [
          {
            "url": "https://www.web-scraping.dev/assets/products/cat-ear-beanie-grey.webp"
          }
        ],
        "link": "https://web-scraping.dev/product/12",
        "name": "Cat-Ear Beanie",
        "price": {
          "amount": 14.99,
          "currency": "$",
          "raw": "14.99"
        }
      }
    ],
    "secondary_category": null,
    "size": null,
    "specifications": [
      {
        "name": "material",
        "value": "Premium quality chocolate"
      },
      {
        "name": "flavors",
        "value": "Available in Orange and Cherry flavors"
      },
      {
        "name": "sizes",
        "value": "Available in small, medium, and large boxes"
      },
      {
        "name": "brand",
        "value": "ChocoDelight"
      },
      {
        "name": "care instructions",
        "value": "Store in a cool, dry place"
      },
      {
        "name": "purpose",
        "value": "Ideal for gifting or self-indulgence"
      }
    ],
    "style": null,
    "url": "https://web-scraping.dev/",
    "variants": [
      {
        "color": "orange",
        "offers": [
          {
            "availability": "available",
            "price": {
              "amount": null,
              "currency": null,
              "raw": null
            }
          }
        ],
        "sku": null,
        "url": "https://web-scraping.dev/product/1?variant=orange-small"
      },
      {
        "color": "orange",
        "offers": [
          {
            "availability": "available",
            "price": {
              "amount": null,
              "currency": null,
              "raw": null
            }
          }
        ],
        "sku": null,
        "url": "https://web-scraping.dev/product/1?variant=orange-medium"
      },
      {
        "color": "orange",
        "offers": [
          {
            "availability": "available",
            "price": {
              "amount": null,
              "currency": null,
              "raw": null
            }
          }
        ],
        "sku": null,
        "url": "https://web-scraping.dev/product/1?variant=orange-large"
      },
      {
        "color": "cherry",
        "offers": [
          {
            "availability": "available",
            "price": {
              "amount": null,
              "currency": null,
              "raw": null
            }
          }
        ],
        "sku": null,
        "url": "https://web-scraping.dev/product/1?variant=cherry-small"
      },
      {
        "color": "cherry",
        "offers": [
          {
            "availability": "available",
            "price": {
              "amount": null,
              "currency": null,
              "raw": null
            }
          }
        ],
        "sku": null,
        "url": "https://web-scraping.dev/product/1?variant=cherry-medium"
      },
      {
        "color": "cherry",
        "offers": [
          {
            "availability": "available",
            "price": {
              "amount": null,
              "currency": null,
              "raw": null
            }
          }
        ],
        "sku": null,
        "url": "https://web-scraping.dev/product/1?variant=cherry-large"
      }
    ]
  },
  "content_type": "application/json"
}
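Because the extraction result is plain JSON, picking out the fields an application needs is ordinary dictionary work. A sketch, assuming `api_result.result` is a dict shaped like the example output above; the `summarize_product` helper and the computed discount percentage are illustrative additions, not part of Scrapfly's SDK.

```python
def summarize_product(result):
    """Pull headline fields from a 'product' extraction result shaped like the output above."""
    data = result.get("data") or {}
    offers = data.get("offers") or []
    offer = offers[0] if offers else {}
    price = offer.get("price")
    regular = offer.get("regular_price")
    # Discount relative to the regular price, when both prices are present.
    discount = round((regular - price) / regular * 100, 1) if price is not None and regular else None
    return {
        "name": data.get("name"),
        "brand": data.get("brand"),
        "price": price,
        "currency": offer.get("currency"),
        "discount_pct": discount,
        "variant_urls": [v["url"] for v in data.get("variants") or []],
    }
```

Calling `summarize_product(api_result.result)` on the output above would report the 9.99 sale price against the 12.99 regular price, along with the six variant URLs.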

For data parsing, Scrapfly's Extraction API also offers a specially tuned LLM that can be prompted like any other model; according to Scrapfly, it produces significantly more accurate extraction results:

from scrapfly import ScrapflyClient, ScrapeConfig, ExtractionConfig

client = ScrapflyClient(key="YOUR SCRAPFLY KEY")

# First retrieve your html or scrape it using web scraping API
html = client.scrape(ScrapeConfig(url="https://web-scraping.dev/product/1")).content

# Then, extract data using extraction_prompt parameter:
api_result = client.extract(ExtractionConfig(
    body=html,
    content_type="text/html",
    extraction_prompt="extract main product price only",
))

print(api_result.result)

{
  "content_type": "text/html",
  "data": "9.99"
}

Check out Scrapfly's web scraping API for all the details.

Alternatives to Local LLMs

While Local LLMs offer privacy and control, proprietary models like OpenAI’s GPT-4o and Google’s Gemini are robust cloud-based alternatives. These models provide:

- Superior performance on a wide range of tasks.

- Lower computational overhead for end-users.

- Regular updates and maintenance from the providers.

However, they may pose privacy and cost concerns.

OpenAI's Latest Models: GPT-4o and o1

OpenAI continues to lead in AI development with models like GPT-4o and the recently introduced o1. The o1 model is designed for complex reasoning tasks, offering improved performance in areas such as mathematics and coding.

Anthropic's Claude

Anthropic has introduced the Claude 3 family of models, including variants like Haiku, Sonnet, and Opus, each offering different levels of performance and speed. Claude 3 models are designed to handle complex tasks across various domains, including coding, mathematics, and scientific analysis. They are accessible via cloud platforms such as AWS and GCP, and Anthropic emphasizes security and reliability in their deployment.

Google's Gemini

Google's Gemini 2.0 family, introduced with the Gemini 2.0 Flash model, represents a significant advancement in AI capabilities, integrating AI into a wide array of Google products. It offers improvements in efficiency, speed, and multimodal functionality, including native audio and image generation. Google is applying Gemini 2.0 to projects like Project Astra and Project Mariner, aiming to enhance AI features in Google Search and Workspace.

Amazon's Nova

Amazon has developed the Nova family of LLMs, with models like Nova Pro offering competitive performance at cost-effective rates. Nova Pro is priced similarly to Anthropic's Claude 3.5 Haiku, making it an attractive option for various applications. Amazon's investment in AI includes partnerships with companies like Anthropic, indicating a commitment to advancing AI capabilities.

These LLMs from Anthropic, Google, Amazon, and OpenAI provide robust cloud-based alternatives to local deployment, catering to diverse needs in AI applications.

Related Guides

For readers building local LLM applications, see our Guide to Understanding and Developing LLM Agents for next steps in agent-based systems.

FAQ

Before we wrap up our intro to local LLMs, let's take a look at some frequently asked questions:

Can I run a Local LLM on a standard laptop?

Lightweight models such as LLaMA 3.2 1B can run on many laptops, even without a dedicated GPU, but larger models generally need a GPU for good performance.

What are the benefits of using LangChain with Local LLMs?

LangChain simplifies complex workflows, enhances prompt management, and integrates seamlessly with various LLMs.

How does RAG improve Local LLM performance?

RAG supplements LLMs with external data, enabling them to answer complex, context-specific queries effectively.

Summary

Local LLMs offer several advantages over cloud-based models:

- Security and Privacy: Local deployment ensures sensitive data remains within organizational boundaries.

- Cost-Effectiveness: Avoid reliance on costly external servers.

- Tailored Solutions: Customize models to meet specific requirements.

Open-source options like LLaMA, Qwen, and Mistral provide robust performance across a range of tasks. Additionally:

- Frameworks like LangChain simplify the integration and deployment process, enabling developers to build innovative applications efficiently.

- Alternatives such as GPT-4o and Gemini provide unmatched convenience but may lack the confidentiality and adaptability Local LLMs offer.

By understanding the requirements, tools, and best practices, organizations can unlock the full potential of LLMs for diverse applications.