The world of AI development is evolving fast, and with that evolution comes the need for more structured, scalable communication between models and their tools or environments. Enter the Model Context Protocol (MCP), a modern, standardized way for large language models (LLMs) like GPT-4 to interact with external data, tools, and APIs within a secure and modular ecosystem. If you’ve heard about Copilot Studio or MCP servers, you’re already closer to understanding how this framework works in action.

If you're building your own AI assistant or developing context-aware applications, MCP ensures your models access the right information at the right time with built-in support for security, modularity, and scalability.

Key Takeaways

MCP (Model Context Protocol) standardizes AI-tool communication, letting LLMs interact with external data sources, APIs, and services through one unified framework. In practice, that means you can:

- Replace brittle plugin architectures with modular, scalable approaches that eliminate hardcoded prompts and ad-hoc integrations

- Enable real-time context awareness by allowing AI models to access live data instead of relying solely on pre-trained information

- Follow a three-step integration process (tool registration, context compilation, and model invocation) for predictable workflows

- Apply built-in security and permissions handling for authentication and authorization to reduce security vulnerabilities

- Use specialized tools like ScrapFly for automated MCP context management with anti-blocking features

What is MCP?

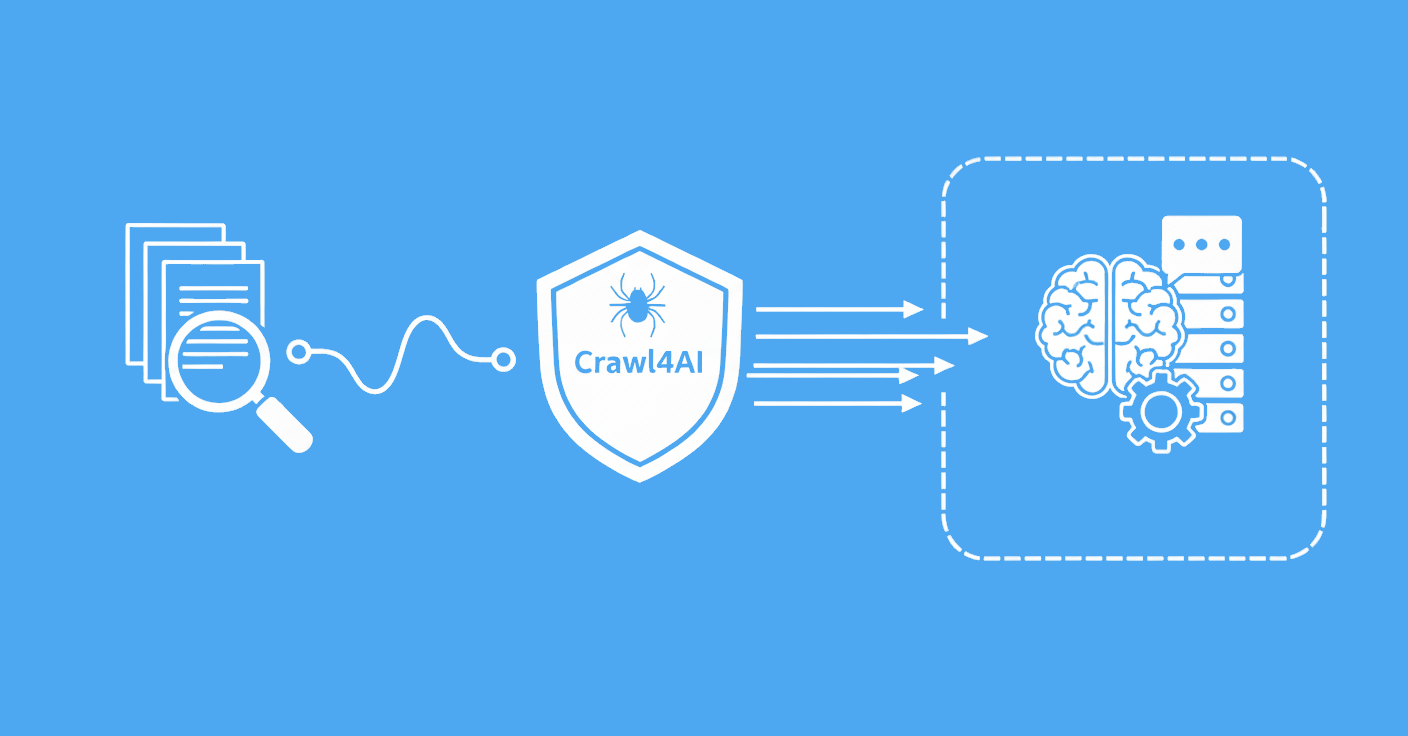

The Model Context Protocol (MCP) is a communication framework that allows large language models (LLMs) to dynamically interact with real-time, structured data. Instead of relying solely on static prompts or pre-trained information, MCP equips models with access to live tools, APIs, and services that are contextually relevant to a user’s query.

MCP separates the AI model from backend logic and data, making systems more modular and easier to scale. This allows developers to reuse tools across different models and platforms while simplifying the integration of real-time data into AI-driven workflows.

How Does MCP Work?

MCP operates by managing the lifecycle of tool registration, context gathering, and inference orchestration. Here's a breakdown of how it functions:

- Tool Registration: APIs and services register themselves with an MCP server, declaring what they can do and what inputs they need.

- Context Compilation: When a query is submitted, the MCP system gathers the necessary context from these registered tools, which might include user profiles, historical actions, or data from third-party systems.

- Model Invocation: The compiled context is passed into the model as part of the inference request, allowing the model to generate a response that’s highly contextual and personalized.

This architecture ensures that models remain lightweight and focused on reasoning, while the MCP infrastructure handles the complexities of data access, validation, and selection.
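The three stages above can be sketched in a few lines of plain Python. This is a toy illustration only: `ToyContextServer`, `Tool`, and `invoke_model` are hypothetical names invented for this example, not part of any MCP SDK, and the "model" is a stand-in function that simply echoes what it was given.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Tool:
    """A registered capability: its name, what it does, and how to run it."""
    name: str
    description: str
    handler: Callable[[Dict[str, Any]], Any]


class ToyContextServer:
    """Hypothetical in-memory registry; NOT the real MCP SDK."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        # Stage 1: tool registration
        self._tools[tool.name] = tool

    def compile_context(self, query: Dict[str, Any]) -> Dict[str, Any]:
        # Stage 2: gather context from every registered tool
        return {name: tool.handler(query) for name, tool in self._tools.items()}


def invoke_model(prompt: str, context: Dict[str, Any]) -> str:
    # Stage 3: stand-in for a real LLM call; shows the context reaching the model
    lines = [f"{key}: {value}" for key, value in sorted(context.items())]
    return f"PROMPT: {prompt}\nCONTEXT:\n" + "\n".join(lines)


server = ToyContextServer()
server.register(Tool(
    "user_profile",
    "Look up the user's profile",
    lambda q: {"name": "Ada", "plan": "pro"} if q["user_id"] == 42 else {},
))
server.register(Tool(
    "recent_orders",
    "List the user's recent orders",
    lambda q: ["order-1001", "order-1002"],
))

context = server.compile_context({"user_id": 42})
answer = invoke_model("What did I order last?", context)
print(answer)
```

The point of the separation is that the model function never needs to know where the profile or order data came from; swapping a data source only touches the registered handler.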

How Were AI Systems Handling Context Before MCP?

Before the advent of MCP, AI developers had to rely on homegrown solutions for tool orchestration and context injection. These were often brittle and difficult to scale, typically involving hardcoded prompts or ad hoc plugin-style architectures.

For instance:

- Tools were added to LLMs using manual function calling, often lacking dynamic discovery or selection.

- Context (like user profile or recent history) was flattened into prompts, leading to bloated, inefficient token usage.

- Security and permissions had to be custom coded, exposing vulnerabilities.

This approach created significant friction, especially for enterprises building conversational interfaces or automated workflows across multiple services.

How To Get Started with MCP

The easiest way to begin using the Model Context Protocol (MCP) is to explore the official MCP documentation and open-source repositories. Organizations like Anthropic have already laid the groundwork, providing detailed specifications and SDKs for languages like Python and Java. Whether you're building an agent from scratch or plugging into an existing system like Copilot Studio, the setup process is fairly straightforward.

Step 1: Set Up an MCP Server

Your first move is to deploy or install an MCP server connected to the tools or data sources you want your model to access. Anthropic and other contributors offer a library of pre-built MCP servers for popular services like:

- Google Drive, Gmail, and Calendar

- Slack (chat and file APIs)

- GitHub and Git repos

- SQL databases like Postgres

- Web browsers and automation tools like Puppeteer

You can usually get started by cloning the server’s repo, installing dependencies, and configuring credentials (like API keys or tokens). Many setups are as easy as running a single CLI command.
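To get a feel for what such a server does under the hood, here is a minimal sketch of the request handling at its core. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the methods used to advertise and run tools. Everything else here is an assumption for illustration: the `add` tool and the `handle` function are made up, and a real server would also implement the `initialize` handshake and a transport (such as stdio or HTTP), which the official SDKs handle for you.

```python
import json

# Hypothetical tool table: name -> description, JSON Schema for inputs, handler
TOOLS = {
    "add": {
        "description": "Add two integers",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request string with a JSON-RPC 2.0 response string."""
    req = json.loads(raw)
    req_id = req.get("id")
    method = req.get("method")
    params = req.get("params") or {}

    def reply(key: str, payload: dict) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req_id, key: payload})

    if method == "tools/list":
        # Advertise each tool's public metadata (never the handler itself)
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return reply("result", {"tools": tools})

    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return reply("error", {"code": -32602, "message": "Unknown tool"})
        value = tool["handler"](params.get("arguments") or {})
        return reply("result", {"content": [{"type": "text", "text": str(value)}],
                                "isError": False})

    return reply("error", {"code": -32601, "message": "Method not found"})


request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
print(handle(json.dumps(request)))
```

In practice you would rarely write this by hand; the sketch is only meant to show that a pre-built server is, at heart, a mapping from tool names to handlers behind a small JSON-RPC interface.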

Step 2: Connect the MCP Client

Once your MCP server is live, it’s time to wire it into your LLM or agent framework. If you’re using a hosted AI platform like Claude Desktop or Copilot Studio, this may involve entering the server address into a settings UI. For developers building their own tools, the MCP SDK allows you to instantiate a client, register your tool server’s endpoint, and start interacting with it programmatically.
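Some desktop clients, Claude Desktop among them, read local server definitions from a JSON configuration file with a top-level `mcpServers` key rather than from a settings form. The sketch below generates such an entry. The server name `my-tools`, the script `my_server.py`, and the `MY_API_KEY` variable are placeholders, and the file's location and accepted keys depend on the client, so check its documentation before relying on this shape.

```python
import json
from typing import Dict, List, Optional


def server_entry(command: str, args: List[str],
                 env: Optional[Dict[str, str]] = None) -> dict:
    """Describe how the client should launch one local MCP server."""
    entry: dict = {"command": command, "args": list(args)}
    if env:
        # Credentials are passed to the server process as environment variables
        entry["env"] = dict(env)
    return entry


config = {
    "mcpServers": {
        # Placeholder name, script path, and credential: replace with your own
        "my-tools": server_entry("python", ["my_server.py"],
                                 {"MY_API_KEY": "replace-me"}),
    }
}

print(json.dumps(config, indent=2))
```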

Step 3: Enable and Extend the Capabilities

After setup, your MCP-aware model client will automatically discover the registered tools and enhance its abilities, adding new function calls, prompt templates, or dynamic context inputs based on what the server provides. You don't need to hand-craft every interaction; the model knows how to interpret what's available and use it as needed.
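Discovery works because each tool in a `tools/list` response describes itself with a `name`, a `description`, and an `inputSchema` (a JSON Schema for its arguments). A client can translate that into whatever function-calling format its model expects. The sketch below assumes a made-up `search_emails` tool and a generic, hypothetical target shape with a `parameters` field; real LLM APIs each define their own format.

```python
from typing import Any, Dict, List

# Example of what a server might return for "tools/list" (tool is invented)
tools_list_result = {
    "tools": [
        {
            "name": "search_emails",
            "description": "Search the user's inbox",
            "inputSchema": {
                "type": "object",
                "properties": {"query": {"type": "string"}},
                "required": ["query"],
            },
        }
    ]
}


def to_function_specs(result: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Map discovered tools onto a generic function-calling shape (hypothetical)."""
    specs = []
    for tool in result.get("tools", []):
        specs.append({
            "name": tool["name"],
            "description": tool.get("description", ""),
            # The JSON Schema is reused as-is to describe the arguments
            "parameters": tool.get("inputSchema", {"type": "object"}),
        })
    return specs


function_specs = to_function_specs(tools_list_result)
print([spec["name"] for spec in function_specs])
```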

Step 4: Invoke and Iterate

Now you're ready to test it out. Ask your AI model to perform a task that requires tool usage (e.g., "Summarize the latest emails from my team" or "Find the last edited Google Doc"). Watch the MCP logs to verify that your requests are reaching the server and that responses are flowing back. You'll see real-time interaction between the LLM and your toolset, fully mediated by MCP.
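When reading those logs, it helps to know roughly what a tool invocation looks like on the wire. The sketch below builds a `tools/call` request and pulls the text out of a response. The helper names, the `search_emails` tool, and the canned response are assumptions for illustration; in a real setup the SDK or host application sends and parses these messages for you.

```python
import itertools
from typing import Any, Dict

_ids = itertools.count(1)  # JSON-RPC requests carry an id so replies can be matched


def build_tool_call(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 'tools/call' request as a dict."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def extract_text(response: Dict[str, Any]) -> str:
    """Return the text parts of a tool result, raising on either kind of failure."""
    if "error" in response:  # protocol-level failure
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    text = "\n".join(part["text"] for part in result.get("content", [])
                     if part.get("type") == "text")
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text or "tool reported an error")
    return text


request = build_tool_call("search_emails", {"query": "from:team"})
# Canned reply standing in for what a server might send back
canned_response = {
    "jsonrpc": "2.0",
    "id": request["id"],
    "result": {"content": [{"type": "text", "text": "3 new emails"}], "isError": False},
}
print(extract_text(canned_response))
```

Distinguishing the two failure paths is useful while iterating: a JSON-RPC `error` usually points at wiring problems, whereas `isError` in a result means the request arrived but the tool itself failed.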

Now that you know how to get started, let’s look at one practical application of the protocol: web scraping.

How MCP Can Be Used in Web Scraping

Web scraping is a valuable method for gathering real-time data, but integrating that data into AI systems often requires complex transformations. With the Model Context Protocol (MCP), scraping tools can act as context providers, delivering structured data directly to language models at inference time.

This approach simplifies how scraped content (like product listings, headlines, or reviews) is used by AI. Instead of embedding raw HTML into prompts, the MCP server formats scraped data into a standardized context format. When the model is prompted, it receives clean, relevant context without extra processing.
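As a rough illustration of that formatting step, the sketch below turns a scraped HTML fragment into a compact JSON list and wraps it in the `content` shape that MCP tool results use. The markup (`h2.title`, `span.price`), the sample products, and the `scraped_context` helper are assumptions for this example; a production scraper would need a more robust parser and real page fetching.

```python
import json
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect text from <h2 class="title"> and <span class="price"> (assumed markup)."""

    def __init__(self) -> None:
        super().__init__()
        self.products = []
        self._field = None  # which field the current text belongs to, if any

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "h2" and cls == "title":
            self.products.append({"title": "", "price": ""})
            self._field = "title"
        elif tag == "span" and cls == "price" and self.products:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field and self.products:
            self.products[-1][self._field] += data.strip()


def scraped_context(html: str) -> dict:
    """Return scraped products as a tool-result-style payload instead of raw HTML."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return {"content": [{"type": "text", "text": json.dumps(parser.products)}],
            "isError": False}


html = (
    '<div><h2 class="title">Red Mug</h2><span class="price">$12.00</span></div>'
    '<div><h2 class="title">Blue Mug</h2><span class="price">$14.50</span></div>'
)
context = scraped_context(html)
print(context["content"][0]["text"])
```

The model then sees a short list of titles and prices rather than the page's full markup, which keeps token usage down and makes the data easier to reason about.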

Power-up using Scrapfly

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

Popular MCP Servers You Should Know

MCP supports a growing list of tool integrations, making it easy to connect AI models with real-world services. Here are some of the most popular MCP servers:

- Google Drive, Slack, GitHub, and Postgres – Access files, chats, code, and databases directly from your AI agent.

- Fetch and Puppeteer – For scraping and reading web content in real-time.

- Stripe, Spotify, Todoist – Manage payments, playlists, and tasks through natural language.

- Docker and Kubernetes – Let your model interact with DevOps environments.

These tools show how flexible MCP is, whether you're building AI for research, automation, or everyday productivity. You can explore more at modelcontextprotocol.io/examples.

FAQ

Below are quick answers to common questions about the Model Context Protocol (MCP) and its uses.

Can I build my own MCP server?

Yes! MCP is designed to be modular and developer-friendly. You can build a custom server using the open-source SDKs, often by wrapping an existing tool or API in a standardized format.

What languages does MCP support?

Official MCP SDKs are available for several languages, including Python, TypeScript, Java, Kotlin, and C#, with more language support expected as the ecosystem grows.

Is MCP secure for enterprise use?

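It can be. MCP servers can enforce role-aware access control and data filtering, ensuring users only access the context they're authorized to see, and the specification defines an OAuth-based authorization flow for HTTP transports. How secure a deployment is still depends on how each server is implemented and configured.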
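One way a server might apply role-aware filtering is to check the caller's role both when listing tools and when executing them. The sketch below is a hypothetical server-side policy, not something defined by the protocol: the roles, tool names, and helper functions are all invented for illustration.

```python
from typing import Dict, List, Set

# Hypothetical policy: which tools each role may see and call
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"read_reports"},
    "admin": {"read_reports", "delete_records"},
}

TOOLS: List[dict] = [
    {"name": "read_reports", "description": "Read sales reports"},
    {"name": "delete_records", "description": "Delete customer records"},
]


def visible_tools(role: str) -> List[dict]:
    """Only advertise tools the role is allowed to use."""
    allowed = ROLE_PERMISSIONS.get(role, set())
    return [tool for tool in TOOLS if tool["name"] in allowed]


def authorize_call(role: str, tool_name: str) -> None:
    """Re-check at call time; hiding a tool from the list is not enough on its own."""
    if tool_name not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionError(f"role {role!r} may not call {tool_name!r}")


print([tool["name"] for tool in visible_tools("analyst")])
authorize_call("admin", "delete_records")  # allowed, so this returns silently
```

Checking twice is deliberate: filtering the list keeps the model from being offered tools it cannot use, while the call-time check guards against requests that name a tool directly.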

Summary

The Model Context Protocol (MCP) represents a major shift in how AI systems access and use external data. It enables tools, APIs, and documents to act as live context providers for large language models, replacing hardcoded prompts and brittle plugins with a standardized, scalable system. Whether you're integrating file systems, web scraping tools, or enterprise databases, MCP allows your AI to operate with richer, real-time awareness.

We explored how MCP works, how to get started, and how it's being used across fields like DevOps, productivity, and even scraping. With growing community support and a robust library of MCP servers, it's never been easier to build smarter, context-aware AI applications.