Large Language Models (LLMs) have evolved beyond text generation: they now act as decision-makers through LLM agents. With agents built on LLMs, applications can go beyond conversation and actually perform actions such as retrieving data, running calculations, or launching web automation tasks like web scraping.

In this blog, we'll explore how LLM agents turn language models into multistep decision-makers. We'll cover their key components, see how frameworks like LangChain streamline development, and look at practical applications along with the challenges and ethical issues involved.

Key Takeaways

Master LLM agent development with the LangChain and LlamaIndex frameworks for automated decision-making, tool integration, and intelligent workflow automation.

- Build LLM agents using LangChain and LlamaIndex frameworks for automated decision-making

- Implement agent memory systems and context management for complex multi-step workflows

- Configure tool integration for external APIs, databases, and web scraping operations

- Implement reasoning and decision-making capabilities for intelligent automation tasks

- Use specialized tools like ScrapFly for automated data collection and preprocessing

- Configure proper error handling and fallback strategies for reliable agent operations

What Are LLM Agents?

An LLM agent is an AI system that uses a language model as its "brain" to plan, execute, and refine actions. Unlike static LLMs that generate single responses, LLM agents dynamically interact with tools (APIs, databases, calculators) to achieve goals like data analysis or task automation.

LLM Agents vs LLMs

Traditional LLMs generate text results based on input prompts. In contrast, agents built with LLMs can “think out loud” to determine the appropriate next action and use tools to execute it, whether that's calling a calculator, searching the web, or querying a database.

LLM agents are designed to analyze an input, decide on a series of steps, and execute those steps using integrated tools. This dynamic decision-making sets them apart from static models and makes them well suited to automation tasks where the next step depends on earlier results.
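The analyze, decide, execute cycle described above can be sketched in a few lines of plain Python. This is only an illustrative sketch: `fake_llm`, `TOOLS`, and `run_agent` are hypothetical names, and the "model" is a hard-coded rule rather than a real LLM call.

```python
import operator

# Hypothetical tools: plain Python functions the agent is allowed to call.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculator(expression: str) -> str:
    """Evaluate a simple 'a op b' expression such as '12 * 4'."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))

def word_count(text: str) -> str:
    """Count whitespace-separated words."""
    return str(len(text.split()))

TOOLS = {"calculator": calculator, "word_count": word_count}

def fake_llm(task: str, observations: list[str]) -> dict:
    """Stand-in for a real model call: picks the next action with simple rules."""
    if observations:  # a tool already produced a result, so finish
        return {"action": "finish", "input": observations[-1]}
    if any(symbol in task for symbol in "+-*/"):
        return {"action": "calculator", "input": task}
    return {"action": "word_count", "input": task}

def run_agent(task: str, max_steps: int = 5) -> str:
    """Loop: ask the model for an action, run the tool, feed the result back."""
    observations: list[str] = []
    for _ in range(max_steps):
        decision = fake_llm(task, observations)
        if decision["action"] == "finish":
            return decision["input"]
        tool = TOOLS[decision["action"]]
        observations.append(tool(decision["input"]))
    return "Stopped: step limit reached"

print(run_agent("12 * 4"))                   # 48.0
print(run_agent("how many words are here"))  # 5
```

A real agent replaces `fake_llm` with a model call, but the loop around it keeps the same shape, including the step limit that stops the agent from running forever.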

LLM Agent Frameworks

Frameworks for LLM agents, such as LangChain and LlamaIndex, simplify the development process by providing pre-built components like:

- Tools for interacting with external systems

- Memory for storing context

- Helper functions for response processing

- Helper functions for reasoning and decision-making

LangChain lets developers focus on designing the agent’s behavior without worrying about low-level implementation details. This high-level abstraction is key for quickly prototyping advanced LLM applications.

Next, let's take a look at how an LLM agent can benefit automation tasks.

LLM Agent Use Cases

LLM agents are applied in various domains, from automating customer support to summarizing data and assisting in decision-making. Their integration with external systems enhances workflow efficiency and drives innovation across industries.

Here are some LLM agent examples:

Task Automation

LLM agents can automate repetitive and time-consuming tasks, freeing teams to focus on strategic initiatives. Key applications include:

- Data Retrieval: Fetch data from websites using web scraping, APIs, or databases, streamlining information gathering and analysis.

- Data Analysis: Process, summarize, and extract actionable insights from complex datasets, turning raw numbers into valuable business intelligence.

- Customer Support: Enhance customer service by generating personalized responses that combine pre-defined FAQs with real-time data, ensuring timely and context-aware support.

- Scheduling and Notifications: Optimize time management by coordinating calendars, sending reminders, and handling routine scheduling tasks, reducing administrative overhead and keeping teams on track.

In short, LLM agents can be set up to use tools and APIs to automate tasks that require data retrieval, analysis, and decision-making.

Examples of such tasks could be:

- Web scrape latest product reviews → Analyze sentiment → Generate product performance report

- Monitor social media mentions → Identify trends → Execute marketing or investment strategies

- Retrieve financial data → Calculate key metrics → Generate reports

- Analyze customer feedback → Identify common issues → Suggest improvements

By giving LLM agents access to tools for web automation and data generation, we can have them automate complex task chains.
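As a rough sketch of one such chain (reviews → sentiment → report), the steps can be wired together as ordinary functions. Everything here is a placeholder chosen for illustration: `fetch_reviews` returns hard-coded sample text instead of scraping, and the keyword-based scoring stands in for a real sentiment model.

```python
POSITIVE = {"great", "love", "excellent", "good"}
NEGATIVE = {"bad", "broken", "terrible", "poor"}

def fetch_reviews() -> list[str]:
    """Placeholder for a scraping step; returns made-up sample reviews."""
    return [
        "Great taste, love the packaging",
        "Arrived broken and the box was in bad shape",
        "Good value",
    ]

def score_sentiment(review: str) -> int:
    """Positive keyword hits minus negative keyword hits."""
    words = {word.strip(".,!").lower() for word in review.split()}
    return len(words & POSITIVE) - len(words & NEGATIVE)

def build_report(reviews: list[str]) -> dict:
    """Aggregate per-review scores into a small summary."""
    scores = [score_sentiment(review) for review in reviews]
    return {
        "reviews": len(scores),
        "positive": sum(score > 0 for score in scores),
        "negative": sum(score < 0 for score in scores),
        "average_score": sum(scores) / len(scores),
    }

report = build_report(fetch_reviews())
print(report)
```

In an agent, each of these functions would be exposed as a tool, and the model would decide when to call them and in what order.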

Tool use is not the only thing LLM agents can do. They can also make decisions based on the data they have, so let's take a look at that next.

Reasoning and Decision-Making

LLM agents can leverage their memory and reasoning capabilities to make informed decisions. By combining context-awareness with strategic planning, they can:

- Self-correct errors and inconsistencies in responses. This is already available in some reasoning models like OpenAI o3 and DeepSeek-R1 but can be extended further with custom tools.

- Plan multi-step actions based on user input and system state. For example, an agent could guide a user through a complex process like troubleshooting a device or setting up software.

- Analyze data and generate insights. LLM agents can process large datasets, identify patterns, and provide actionable recommendations, making them valuable tools for data-driven decision-making.

So, the ability to automate tasks combined with the ability to reason over data makes LLM agents a powerful tool for automation and decision-making.
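Self-correction, for example, often comes down to a validate-and-retry loop: check the model's output, and if it fails, send the error back as feedback. The sketch below assumes a stand-in `fake_llm` that only answers correctly once it sees feedback; the function names and the JSON format are illustrative choices, not part of any framework.

```python
import json
from typing import Optional

def fake_llm(prompt: str) -> str:
    """Stand-in for a model call: answers badly until it sees feedback."""
    if "Feedback:" in prompt:
        return '{"title": "Box of Chocolate Candy", "price": 9.99}'
    return "The price is 9.99"  # not JSON, so validation fails

def validate(raw: str, required: set[str]) -> Optional[str]:
    """Return an error message, or None when the output is acceptable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return "output is not valid JSON"
    if not isinstance(data, dict):
        return "output is not a JSON object"
    missing = required - set(data)
    if missing:
        return f"missing fields: {sorted(missing)}"
    return None

def answer_with_correction(task: str, required: set[str], max_attempts: int = 3):
    """Retry the model with validation feedback until the output passes."""
    prompt = task
    for attempt in range(1, max_attempts + 1):
        raw = fake_llm(prompt)
        error = validate(raw, required)
        if error is None:
            return json.loads(raw), attempt
        prompt = f"{task}\nFeedback: {error}. Return JSON only."
    raise ValueError("no valid answer within the attempt limit")

result, attempts = answer_with_correction("Extract title and price", {"title", "price"})
print(result, "after", attempts, "attempts")
```

The attempt limit matters: without it, a model that never produces valid output would keep the loop running indefinitely.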

Now, let's see what LLM agents are made of next.

LLM Agent Components

LLM agents are built with a combination of specialized components that work together to process information, maintain context, and perform complex tasks.

This component-based design enables agents to integrate memory, leverage external tools, and follow strategic planning modules to execute automation tasks or just deliver smarter responses.

The Anatomy of an LLM Agent

An effective LLM agent typically comprises several key components:

Memory allows agents to store conversation history and contextual information. As LLMs are stateless, memory is essential for maintaining context across interactions.

Tools give the agent access to external functions such as calculators, data analytics scripts, browser automation for web scraping or other web tasks, and APIs. These tools extend the agent’s capabilities beyond text generation.

Planning modules provide the logic that guides the agent's decision-making process and self-correction. These modules help agents determine the next steps based on the input and context.

To better understand this, let's take a look at these components in one of the most popular LLM agent frameworks, LangChain.

How LangChain Agents Unify These Components

LangChain acts as the glue that binds these components into a single, integrated LLM agent. Here’s how it streamlines the development and functionality of such agents:

LangChain provides an ecosystem where various tools can be seamlessly integrated. For instance, you can combine a calculator with a web search API to create an agent that handles both computations and data retrieval. LangChain has built-in official tools as well as community tools, such as Scrapfly's own LangChain integration, which gives LLM agents web scraping capabilities.

LangChain handles memory management by storing and updating context across interactions in your program's memory as LLMs themselves are stateless. This ensures that the agent can maintain a coherent conversation and make informed decisions based on past interactions.
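To make the idea of memory concrete, here is a minimal sketch of a sliding-window conversation buffer. It is not LangChain's implementation; `ConversationMemory` and its methods are hypothetical names used only to show how past messages can be replayed into each new prompt.

```python
from collections import deque

class ConversationMemory:
    """Keeps the most recent messages and renders them into a prompt."""

    def __init__(self, max_messages: int = 6):
        # deque with maxlen silently drops the oldest entry when full
        self.messages = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self.messages.append((role, content))

    def as_prompt(self, new_input: str) -> str:
        """Prepend the stored history to the new user input."""
        history = "\n".join(f"{role}: {content}" for role, content in self.messages)
        return f"{history}\nuser: {new_input}".strip()

memory = ConversationMemory(max_messages=2)
memory.add("user", "My name is Ada.")
memory.add("assistant", "Nice to meet you, Ada.")
memory.add("user", "I sell chocolate.")  # pushes the first message out

prompt = memory.as_prompt("What is my name?")
print(prompt)
```

Because the model itself keeps no state, whatever is not replayed in the prompt is effectively forgotten, which is why the window size is a real design decision.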

Let's take a look at some real LangChain examples next.

Building an LLM Agent with LangChain

Let's see how to set up an LLM agent environment using LangChain, define custom tools, and initialize an agent that uses both web search and a simple utility tool.

Setup Environment

Begin by installing LangChain and required dependencies. Open a terminal or Jupyter Notebook and run:

# Install LangChain and dependencies

$ pip install langchain langchain-community langchain-openai duckduckgo-search

This command installs LangChain, its community tools package (which provides the DuckDuckGo search tool), and the OpenAI integration for using OpenAI's models, though LangChain supports most other LLM providers as well. The duckduckgo-search package provides the web search backend used in this example.

Defining Tools for Your LangChain Agent

To demonstrate how agents use tools, we’ll create a simple LLM agent that can use the DuckDuckGo search tool and a simple custom tool for getting the length of a string:

import os

from langchain.agents import AgentType, initialize_agent, tool
from langchain_community.tools import DuckDuckGoSearchRun
from langchain_openai import OpenAI

# Step 1: Set up OpenAI API key
os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_KEY"

# Step 2: Create custom tools
@tool
def get_word_length(word: str) -> int:
    """Returns the length of a word."""
    return len(word)

# Step 3: Initialize predefined tools
search = DuckDuckGoSearchRun()
tools = [search, get_word_length]

This snippet demonstrates how we can attach two different kinds of tools to our LLM agent: a community tool for DuckDuckGo search and our own Python function for calculating string length.
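Conceptually, a tool decorator turns a function into something the model can read about: a name, a description, and an argument list. The following is a simplified illustration of that idea in plain Python, not LangChain's actual `@tool` implementation; `simple_tool`, `REGISTRY`, and `describe_tools` are hypothetical names.

```python
import inspect

REGISTRY: dict = {}

def simple_tool(func):
    """Hypothetical decorator: records name, description, and arguments."""
    REGISTRY[func.__name__] = {
        "description": inspect.getdoc(func) or "",
        "args": list(inspect.signature(func).parameters),
        "run": func,
    }
    return func

@simple_tool
def get_word_length(word: str) -> int:
    """Returns the length of a word."""
    return len(word)

def describe_tools() -> str:
    """Render the tool list as text that could be placed in a prompt."""
    return "\n".join(
        f"{name}({', '.join(spec['args'])}): {spec['description']}"
        for name, spec in REGISTRY.items()
    )

print(describe_tools())  # get_word_length(word): Returns the length of a word.
print(REGISTRY["get_word_length"]["run"]("agents"))  # 6
```

This is why a clear docstring matters for custom tools: the description is what the model sees when deciding whether a tool fits the task.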

Next, let's try these tools out.

Running the LangChain Agent

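Now that our tools are ready, we will initialize the language model and set up the agent. The agent uses the zero-shot ReAct strategy (AgentType.ZERO_SHOT_REACT_DESCRIPTION), which lets the model decide which tool to use based on each tool's description. Note that initialize_agent is a legacy API that newer LangChain releases deprecate, so this example assumes a version that still provides it.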

# Step 4: Initialize the language model
llm = OpenAI(temperature=0)  # temperature=0 for more deterministic responses

# Step 5: Create the agent
agent = initialize_agent(
    tools=tools,
    llm=llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,  # Show detailed reasoning process
    handle_parsing_errors=True,
)

# Step 6: Test the agent
if __name__ == "__main__":
    # Simple search
    print(agent.run("What's the latest news about AI advancements?"))

# will print

"""

Entering new AgentExecutor chain...

I should use duckduckgo_search to find the latest news

Action: duckduckgo_search

Action Input: "AI advancements"

Observation: Bullet points. This article summarizes Google's AI advancements in 2024, highlighting their commitment to responsible development. Google released Gemini 2.0, a powerful AI model designed for the "agentic era," and integrated it into various products. It covers trends such as technical advancements in AI and public perceptions of the technology. In an effort to alleviate concerns around AI governance, the World Economic Forum has spearheaded the AI Governance Alliance. Artificial intelligence's (AI) influence on society has never been more pronounced. Since ChatGPT became a ubiquitous ... Discover the 10 major AI trends set to reshape 2025: from augmented working and real-time decision-making to advanced AI legislation and sustainable AI initiatives. Subscribe To Newsletters. As we reflect on 2024's remarkable AI achievements, it's clear that we're witnessing not just technological advancement but a fundamental transformation in how AI integrates into our lives and work. Learn how generative AI will evolve in 2024, from multimodal models to more realistic expectations. Explore the challenges and opportunities of open source, GPU shortages, regulation and ethical AI.

Thought: I should read through the bullet points to get a better understanding of the latest AI advancements

Action: get_word_length

Action Input: "AI advancements"

Observation: 15

Thought: I now know the final answer

Final Answer: The latest news about AI advancements is that Google has released Gemini 2.0, a powerful AI model, and the World Economic Forum has spearheaded the AI Governance Alliance to address concerns around AI governance. There are also 10 major AI trends set to reshape 2025, including augmented working and real-time decision-making. The word length of "AI advancements" is 15.

Finished chain.

The latest news about AI advancements is that Google has released Gemini 2.0, a powerful AI model, and the World Economic Forum has spearheaded the AI Governance Alliance to address concerns around AI governance. There are also 10 major AI trends set to reshape 2025, including augmented working and real-time decision-making. The word length of "AI advancements" is 15.

"""

Here, we initialize an agent using LangChain’s built-in method. The agent leverages our custom get_word_length tool and the DuckDuckGo search tool to handle queries that require simple text analysis and external information retrieval. This example illustrates how LangChain combines modular tools into a cohesive system that dynamically selects the appropriate tool based on the task.
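The trace above follows a Thought / Action / Action Input / Observation text format, which the framework has to parse on every step. As a rough illustration of what that parsing involves (not LangChain's own parser), a regex-based sketch might look like this; `parse_step` and the returned dictionary shape are assumptions made for the example.

```python
import re

ACTION_RE = re.compile(
    r"Action:\s*(?P<tool>.+?)\s*\n+\s*Action Input:\s*(?P<arg>.+)"
)
FINAL_RE = re.compile(r"Final Answer:\s*(?P<answer>.+)", re.DOTALL)

def parse_step(text: str) -> dict:
    """Classify one chunk of model output as a tool call, a final answer, or an error."""
    final = FINAL_RE.search(text)
    if final:
        return {"type": "finish", "answer": final.group("answer").strip()}
    action = ACTION_RE.search(text)
    if action:
        return {
            "type": "action",
            "tool": action.group("tool"),
            "input": action.group("arg").strip().strip('"'),
        }
    # Malformed output: the caller can send this message back to the model
    return {"type": "error", "message": "could not parse model output"}

step = parse_step(
    'Thought: I should search\nAction: duckduckgo_search\nAction Input: "AI advancements"'
)
print(step)
print(parse_step("Thought: I now know the final answer\nFinal Answer: 15"))
print(parse_step("Sure, happy to help!"))
```

The error branch is the situation that an option like handle_parsing_errors is meant to cover: instead of crashing, the agent can report the problem to the model and let it try again.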

Challenges for LLM Agents

Despite their transformative potential, LLM agents face several challenges that developers and users must address. These challenges fall into two main categories: technical limitations and ethical considerations.

Technical Limitations

Despite rapid LLM advancements, there are still technical limitations to consider when developing any LLM agent:

- Hallucinations: Sometimes, LLMs might generate inaccurate or fabricated information. Ensuring that agents built with LLMs verify outputs using trusted tools is essential.

- Context Window Limitations: The amount of text an LLM can consider at once is finite. Managing and summarizing long conversations or datasets remains a challenge. Newer models like GPT-4 have very large context windows, so this matters less for small applications, but it can still be a problem for large datasets.
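One common way to work within a context window is to keep only the newest messages that fit a token budget. The sketch below assumes a crude word count as a stand-in for a real tokenizer, and `trim_history` is a hypothetical helper rather than a framework function.

```python
def count_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())

def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the newest messages whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order

history = [
    "user: summarize the quarterly sales report",
    "assistant: sales grew in every region",
    "user: which region grew fastest",
]
print(trim_history(history, budget=12))
```

With a budget of 12, only the last two messages survive. Dropping old messages is the simplest policy; summarizing them before dropping is a common refinement when early context still matters.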

Ethical and Alignment Considerations

Each LLM passes its training and alignment on to the agents built with it, so these are worth considering when developing LLM agents responsible for decision-making:

- Bias: Agents can inadvertently propagate biases present in training data. It is critical to implement fairness checks.

- Transparency: Users should be aware of how decisions are made. Providing clear logs of the agent’s thought process and actions helps build trust.
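For transparency in particular, a structured record of each step is easier to audit than free-form console output. Here is a minimal sketch of such a trace using dataclasses; `StepLog` and `AgentTrace` are hypothetical names, and the recorded step is an invented example.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class StepLog:
    thought: str
    tool: str
    tool_input: str
    observation: str

@dataclass
class AgentTrace:
    question: str
    steps: list = field(default_factory=list)

    def record(self, thought: str, tool: str, tool_input: str, observation: str) -> None:
        """Append one reasoning step to the trace."""
        self.steps.append(StepLog(thought, tool, tool_input, observation))

    def to_json(self) -> str:
        """Serialize the whole trace so it can be stored or shown to users."""
        return json.dumps(asdict(self), indent=2)

trace = AgentTrace("How long is the word 'agents'?")
trace.record("I need the string length", "get_word_length", "agents", "6")
print(trace.to_json())
```

Storing traces like this makes it possible to review after the fact which tool produced which piece of the final answer.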

Overcoming these challenges is key to safely deploying LLM agents. By implementing strong technical safeguards and ethical guidelines, developers can ensure AI agents respond in a safe and reliable manner.

Some LLM agent challenges are not directly related to LLMs themselves but to the tools they use. Let's take a look at how to power LLM agents with web scraping next.

Powering LLM Agents with Scrapfly

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

Scrapfly officially integrates with LLM frameworks like LangChain and LlamaIndex to provide powerful web automation tools for your LLM agents.

Here's an example of how LangChain can be used with the Scrapfly web scraping API to develop LLM agents capable of unrestricted web scraping:

import os

from langchain_community.document_loaders import ScrapflyLoader
from langchain_core.output_parsers import JsonOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

# 1. prompt design
prompt = """
Given the data fetched from product URLs,
find the following product fields {fields}
in the provided markdown and return a JSON
"""
prompt_template = ChatPromptTemplate.from_messages(
    [("system", prompt), ("user", "{markdown}")]
)

# 2. chain: form prompt -> execute with OpenAI -> parse result
os.environ["OPENAI_API_KEY"] = "YOUR OPENAI KEY"
chain = (
    prompt_template
    | ChatOpenAI(model="gpt-3.5-turbo-0125")
    | JsonOutputParser()
)

# 3. Retrieve page HTML as markdown using Scrapfly
loader = ScrapflyLoader(
    ["https://web-scraping.dev/product/1"],
    api_key="YOUR SCRAPFLY KEY",
)
docs = loader.load()

# 4. execute RAG chain with your inputs:
print(chain.invoke({
    "fields": ["price", "title"],
    "markdown": docs
}))

# will print:
{
    'price': '$9.99',
    'title': 'Box of Chocolate Candy'
}

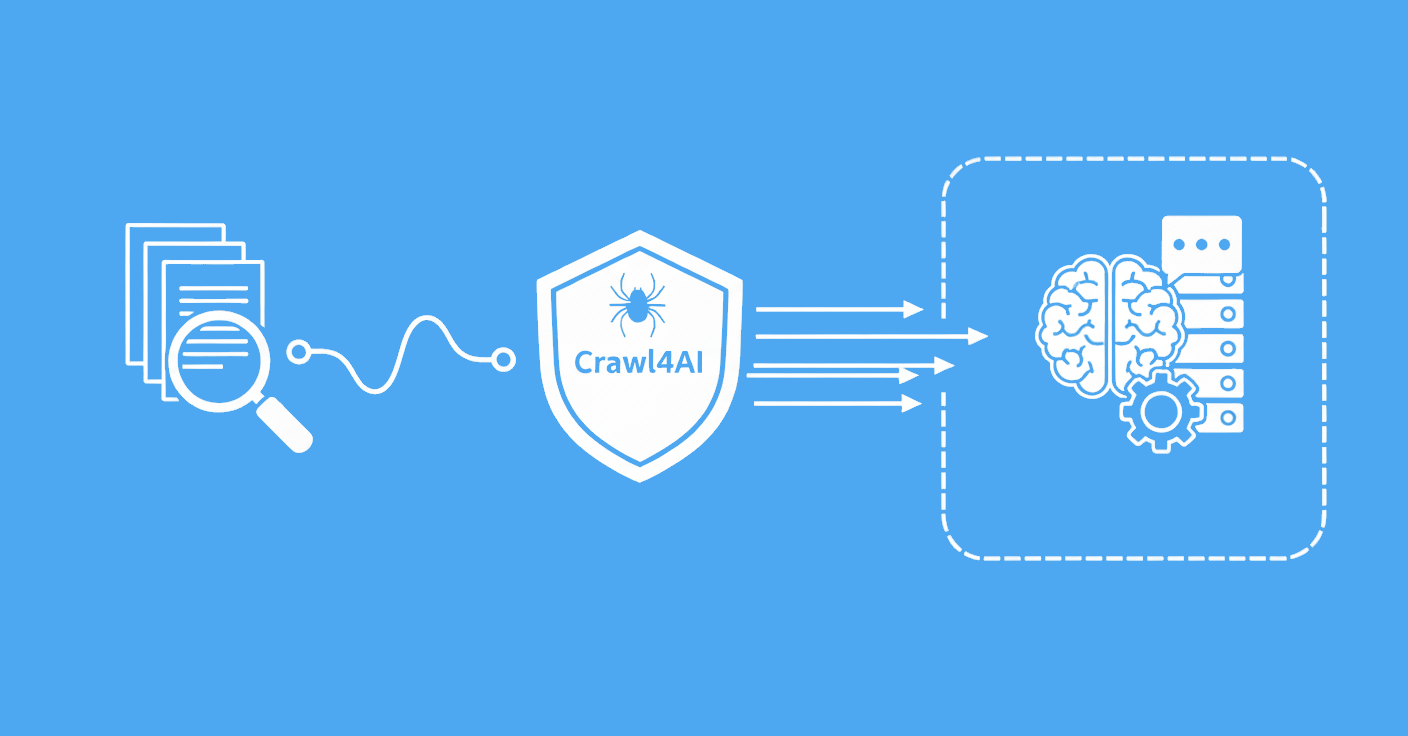

For agents that need to access entire websites or documentation sites, Scrapfly's Crawler API can crawl full domains and return LLM-ready markdown without building custom crawling logic.

FAQ

To wrap up this introduction, let's take a look at some frequently asked questions about LLM agents.

Can I build an LLM agent without any frameworks?

Yes, but it requires more manual effort. While LLM agent frameworks like LangChain simplify agent development, you can build an LLM agent from scratch by integrating APIs, managing memory, and designing reasoning mechanisms yourself. That being said, LLM frameworks provide a lot of value and community integrations that can save you a lot of time and debugging.

Do LLM agents make fewer mistakes?

As LLM agents are capable of reasoning loops and tool usage, they can make fewer mistakes than traditional LLMs. However, they are still prone to errors, especially when dealing with complex tasks or ambiguous inputs. It's important to validate and verify agent outputs to ensure accuracy and safety.

How can LLM agents be used in web scraping?

Access to web data is crucial for many LLM agent automation tasks and can be an easy way to provide data to your LLM agent. By integrating web scraping tools like Scrapfly Web Scraping API, LLM agents can retrieve real-time data from websites, analyze it, and generate insights or automate tasks based on the data.

Summary

LLM agents go beyond text generation: they can retrieve data, perform calculations, and automate tasks. With frameworks like LangChain, developers can easily build AI systems that connect with tools like APIs and databases. As industries adopt these agents, they will enhance efficiency, simplify workflows, and drive innovation.

To summarize, LLM agents in 2026 are:

- More than just text: LLM agents can search the web, perform calculations, and automate workflows.

- Easy to develop: LLM agent frameworks like LangChain make development much easier by providing pre-built and community-created components.

- Wide-reaching: LLM agents with access to web automation have a wide range of applications, from customer support to data analysis to smart decision-making.

As AI advances, LLM agents will become increasingly popular and play a key role in shaping the future of automation, so understanding them early can be a great advantage!