Goat.com is an up-and-coming global apparel platform covering both new and second-hand fashion items. Goat is known for having an enormous product dataset that is constantly updated and easy to scrape.

In this tutorial, we'll take a look at scraping goat.com using Python. We'll use the hidden web data scraping technique, which makes this an easy task. Let's dive in!

Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect, and here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose the entire public datasets which can be illegal in some countries.

Why Scrape Goat.com?

Goat.com is quickly becoming one of the biggest global fashion retailers. It contains a massive public fashion item dataset with product prices, images, features, description and performance. Scraping Goat.com can be a great way to understand the apparel fashion market and related trends. It's a great way to gain a competitive advantage in this data-driven market.

For more on web scraping uses see our web scraping use case hub.

Goat.com Scrape Preview

In this tutorial, we'll focus on scraping product data and product search. We'll use the hidden web data scraping technique, so we'll have access to the entire product dataset, which looks something like this:

Example Full Goat.com Product Dataset

{

"brandName": "Air Jordan",

"careInstructions": "",

"color": "White",

"composition": "",

"designer": "Tinker Hatfield",

"details": "Summit White/Fire Red/Black/Cement Grey",

"fit": "",

"forAuction": false,

"gender": [

"men"

],

"id": 1101598,

"internalShot": "taken",

"maximumOfferCents": 200000,

"midsole": "Air",

"minimumOfferCents": 2500,

"modelSizing": "",

"name": "Air Jordan 3 Retro 'White Cement Reimagined'",

"nickname": "White Cement Reimagined",

"productCategory": "shoes",

"productType": "sneakers",

"releaseDate": "2023-03-11T23:59:59.999Z",

"releaseDateName": "",

"silhouette": "Air Jordan 3",

"sizeBrand": "air_jordan",

"sizeRange": [

3.5,

4,

4.5,

5,

5.5,

6,

6.5,

7,

7.5,

8,

8.5,

9,

9.5,

10,

10.5,

11,

11.5,

12,

12.5,

13,

14,

15,

16,

17,

18

],

"sizeType": "numeric_sizes",

"sizeUnit": "us",

"sku": "DN3707 100",

"slug": "air-jordan-3-retro-white-cement-reimagined-dn3707-100",

"specialDisplayPriceCents": 21000,

"specialType": "standard",

"status": "active",

"upperMaterial": "Leather",

"availableSizesNew": [],

"availableSizesNewV2": [],

"availableSizesNewWithDefects": [],

"availableSizesUsed": [],

"lowestPriceCents": 0,

"newLowestPriceCents": 0,

"usedLowestPriceCents": 0,

"productTaxonomy": [],

"localizedSpecialDisplayPriceCents": {

"currency": "USD",

"amount": 21000,

"amountUsdCents": 21000

},

"category": [

"Lifestyle"

],

"micropostsCount": 0,

"sellingCount": 0,

"usedForSaleCount": 0,

"withDefectForSaleCount": 0,

"isWantable": true,

"isOwnable": true,

"isResellable": true,

"isOfferable": true,

"directShipping": false,

"isFashionProduct": false,

"isRaffleProduct": false,

"renderImagesInOrder": false,

"applePayOnlyPromo": false,

"singleGender": "men",

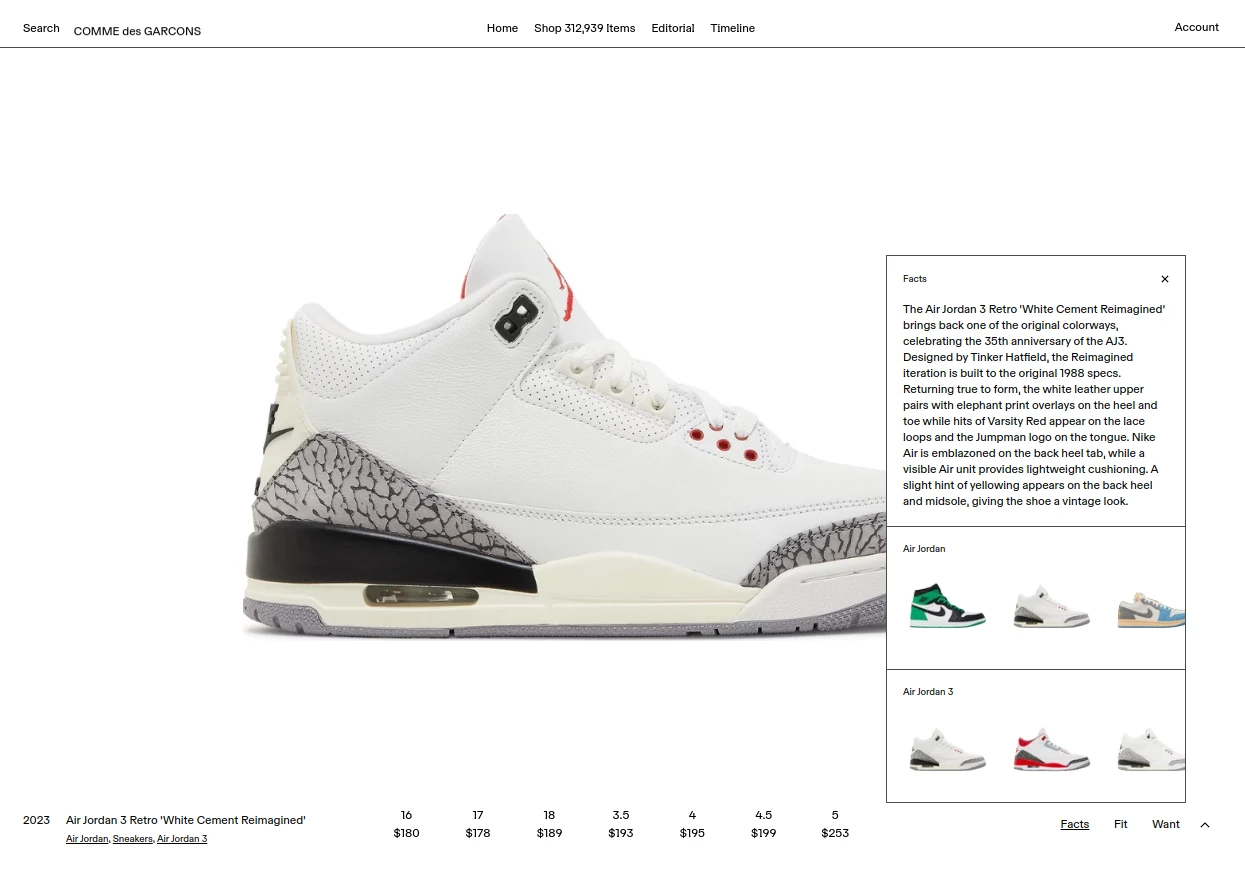

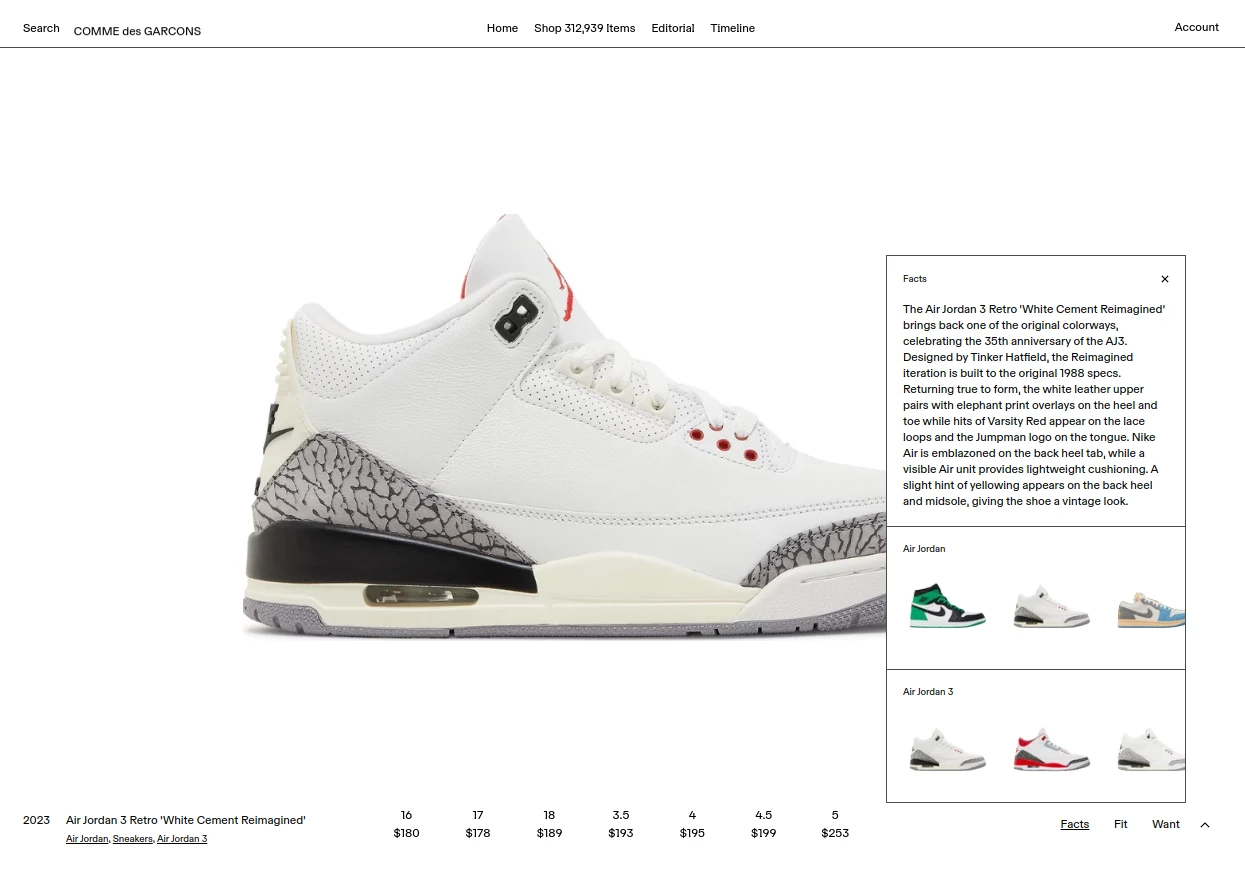

"storyHtml": "<p>The Air Jordan 3 Retro 'White Cement Reimagined' brings back one of the original colorways, celebrating the 35th anniversary of the AJ3. Designed by Tinker Hatfield, the Reimagined iteration is built to the original 1988 specs. Returning true to form, the white leather upper pairs with elephant print overlays on the heel and toe while hits of Varsity Red appear on the lace loops and the Jumpman logo on the tongue. Nike Air is emblazoned on the back heel tab, while a visible Air unit provides lightweight cushioning. A slight hint of yellowing appears on the back heel and midsole, giving the shoe a vintage look.</p>\n",

"story": "The Air Jordan 3 Retro 'White Cement Reimagined' brings back one of the original colorways, celebrating the 35th anniversary of the AJ3. Designed by Tinker Hatfield, the Reimagined iteration is built to the original 1988 specs. Returning true to form, the white leather upper pairs with elephant print overlays on the heel and toe while hits of Varsity Red appear on the lace loops and the Jumpman logo on the tongue. Nike Air is emblazoned on the back heel tab, while a visible Air unit provides lightweight cushioning. A slight hint of yellowing appears on the back heel and midsole, giving the shoe a vintage look.",

"pictureUrl": "https://image.goat.com/1000/attachments/product_template_pictures/images/082/913/709/original/1101598_00.png.png",

"mainGlowPictureUrl": "https://image.goat.com/glow-4-5-25/750/attachments/product_template_pictures/images/082/913/709/original/1101598_00.png.png",

"mainPictureUrl": "https://image.goat.com/750/attachments/product_template_pictures/images/082/913/709/original/1101598_00.png.png",

"gridGlowPictureUrl": "https://image.goat.com/glow-4-5-25/375/attachments/product_template_pictures/images/082/913/709/original/1101598_00.png.png",

"gridPictureUrl": "https://image.goat.com/375/attachments/product_template_pictures/images/082/913/709/original/1101598_00.png.png",

"sizeOptions": [

{

"presentation": "3.5",

"value": 3.5

},

{

"presentation": "4",

"value": 4

},

{

"presentation": "4.5",

"value": 4.5

},

{

"presentation": "5",

"value": 5

},

{

"presentation": "5.5",

"value": 5.5

},

{

"presentation": "6",

"value": 6

},

{

"presentation": "6.5",

"value": 6.5

},

{

"presentation": "7",

"value": 7

},

{

"presentation": "7.5",

"value": 7.5

},

{

"presentation": "8",

"value": 8

},

{

"presentation": "8.5",

"value": 8.5

},

{

"presentation": "9",

"value": 9

},

{

"presentation": "9.5",

"value": 9.5

},

{

"presentation": "10",

"value": 10

},

{

"presentation": "10.5",

"value": 10.5

},

{

"presentation": "11",

"value": 11

},

{

"presentation": "11.5",

"value": 11.5

},

{

"presentation": "12",

"value": 12

},

{

"presentation": "12.5",

"value": 12.5

},

{

"presentation": "13",

"value": 13

},

{

"presentation": "14",

"value": 14

},

{

"presentation": "15",

"value": 15

},

{

"presentation": "16",

"value": 16

},

{

"presentation": "17",

"value": 17

},

{

"presentation": "18",

"value": 18

}

],

"robotAssets": [],

"productTemplateExternalPictures": [

{

"mainPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/710/medium/1101598_01.jpg.jpeg?1672441264",

"gridPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/710/grid/1101598_01.jpg.jpeg?1672441264",

"dominantColor": "#000000",

"sourceUrl": "https://www.goat.com",

"attributionUrl": "GOAT",

"aspect": 1.5,

"order": 1

},

{

"mainPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/707/medium/1101598_02.jpg.jpeg?1672441263",

"gridPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/707/grid/1101598_02.jpg.jpeg?1672441263",

"dominantColor": "#000000",

"sourceUrl": "https://www.goat.com",

"attributionUrl": "GOAT",

"aspect": 1.5,

"order": 2

},

{

"mainPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/708/medium/1101598_03.jpg.jpeg?1672441263",

"gridPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/708/grid/1101598_03.jpg.jpeg?1672441263",

"dominantColor": "#000000",

"sourceUrl": "https://www.goat.com",

"attributionUrl": "GOAT",

"aspect": 1.5,

"order": 3

},

{

"mainPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/704/medium/1101598_04.jpg.jpeg?1672441261",

"gridPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/704/grid/1101598_04.jpg.jpeg?1672441261",

"dominantColor": "#000000",

"sourceUrl": "https://www.goat.com",

"attributionUrl": "GOAT",

"aspect": 1.5,

"order": 4

},

{

"mainPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/701/medium/1101598_05.jpg.jpeg?1672441261",

"gridPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/701/grid/1101598_05.jpg.jpeg?1672441261",

"dominantColor": "#000000",

"sourceUrl": "https://www.goat.com",

"attributionUrl": "GOAT",

"aspect": 1.5,

"order": 5

},

{

"mainPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/696/medium/1101598_06.jpg.jpeg?1672441260",

"gridPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/696/grid/1101598_06.jpg.jpeg?1672441260",

"dominantColor": "#000000",

"sourceUrl": "https://www.goat.com",

"attributionUrl": "GOAT",

"aspect": 1.5,

"order": 6

},

{

"mainPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/697/medium/1101598_07.jpg.jpeg?1672441260",

"gridPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/697/grid/1101598_07.jpg.jpeg?1672441260",

"dominantColor": "#000000",

"sourceUrl": "https://www.goat.com",

"attributionUrl": "GOAT",

"aspect": 1.5,

"order": 7

},

{

"mainPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/694/medium/1101598_08.jpg.jpeg?1672441260",

"gridPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/694/grid/1101598_08.jpg.jpeg?1672441260",

"dominantColor": "#000000",

"sourceUrl": "https://www.goat.com",

"attributionUrl": "GOAT",

"aspect": 1.5,

"order": 8

},

{

"mainPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/695/medium/1101598_11.jpg.jpeg?1672441260",

"gridPictureUrl": "https://image.goat.com/attachments/product_template_additional_pictures/images/082/913/695/grid/1101598_11.jpg.jpeg?1672441260",

"dominantColor": "#000000",

"sourceUrl": "https://www.goat.com",

"attributionUrl": "GOAT",

"aspect": 1.5,

"order": 11

}

],

"offers": [

{

"size": 11,

"gmcSku": "196153288270",

"price": "300.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 10.5,

"gmcSku": "196153288263",

"price": "301.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 12.5,

"gmcSku": "196153288300",

"price": "273.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 11.5,

"gmcSku": "196153288287",

"price": "304.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 12,

"gmcSku": "196153288294",

"price": "298.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 13,

"gmcSku": "196153288317",

"price": "298.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 15,

"gmcSku": "196153288331",

"price": "255.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 9,

"gmcSku": "196153288232",

"price": "273.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 8,

"gmcSku": "196153288218",

"price": "257.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 14,

"gmcSku": "196153288324",

"price": "275.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 5,

"gmcSku": "196155622850",

"price": "249.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 7,

"gmcSku": "196153288195",

"price": "225.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 5.5,

"gmcSku": "196155622867",

"price": "215.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 4,

"gmcSku": "196155622836",

"price": "194.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 3.5,

"gmcSku": "196155622829",

"price": "187.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 4.5,

"gmcSku": "196155622843",

"price": "188.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 8.5,

"gmcSku": "196153288225",

"price": "242.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 9.5,

"gmcSku": "196153288249",

"price": "276.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 10,

"gmcSku": "196153288256",

"price": "293.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 6,

"gmcSku": "196155622874",

"price": "240.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 6.5,

"gmcSku": "196155622881",

"price": "235.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 7.5,

"gmcSku": "196153288201",

"price": "256.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 16,

"gmcSku": "196153288348",

"price": "197.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 18,

"gmcSku": "196153288362",

"price": "189.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

},

{

"size": 17,

"gmcSku": "196153288355",

"price": "175.00",

"priceCurrency": "USD",

"itemCondition": "NewCondition",

"availability": "http://schema.org/InStock"

}

]

}

Project Setup

To scrape Goat.com we'll only need a few Python packages commonly used in web scraping. Since we'll be using the hidden web data scraping approach, all we need is an HTTP client and a CSS selector engine:

- httpx - an HTTP client for Python with HTTP/2 and async support, which we'll use to retrieve the HTML pages.

- parsel - an HTML parser which we can use to extract hidden JSON datasets using CSS selectors.

To install these packages we can use Python's pip console command:

$ pip install "httpx[http2]" parsel

For Scrapfly users there's also a Scrapfly SDK version of each code example. The SDK can be installed using pip as well:

$ pip install "scrapfly-sdk[all]"

Scrape Goat.com Product Data

To start scraping Goat.com let's take a look at a single product page first. For this, let's pick an example product:

goat.com/sneakers/air-jordan-3-retro-white-cement-reimagined-dn3707-100

We could use traditional scraping techniques and parse the page HTML for product data using XPath and CSS selectors, but since Goat.com uses the Next.js framework we can extract the product dataset directly.

If we take a look at the page source (right click -> view page source) we can see that the product dataset is hidden inside a <script id="__NEXT_DATA__"> tag:
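To make that structure concrete, here is a minimal offline sketch that pulls the `__NEXT_DATA__` JSON out of a hand-written HTML snippet. The sample page and the `NextDataParser` helper are made up for illustration, and the standard library's `html.parser` is used here as a stand-in for parsel so the example runs without any network request. The `props.pageProps.productTemplate` path mirrors the one used by the scraper in this tutorial.

```python
import json
from html.parser import HTMLParser

# Hand-written stand-in for a product page; real pages carry far more data.
SAMPLE_HTML = """
<html><body>
<div id="__next">rendered page</div>
<script id="__NEXT_DATA__" type="application/json">
{"props": {"pageProps": {"productTemplate": {"id": 1101598, "sku": "DN3707 100"}}}}
</script>
</body></html>
"""


class NextDataParser(HTMLParser):
    """Collects the text inside <script id="__NEXT_DATA__"> (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        # start collecting only for the script tag with the expected id
        if tag == "script" and dict(attrs).get("id") == "__NEXT_DATA__":
            self._inside = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.chunks.append(data)


def extract_next_data(html: str) -> dict:
    """Parse the HTML and decode the hidden JSON dataset."""
    parser = NextDataParser()
    parser.feed(html)
    parser.close()
    return json.loads("".join(parser.chunks))


data = extract_next_data(SAMPLE_HTML)
product = data["props"]["pageProps"]["productTemplate"]
print(product["sku"])
```

With parsel the same lookup is a single CSS selector, which is why the tutorial uses it for real pages.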

So, to scrape it all we have to do is:

- Retrieve the product HTML page.

- Load HTML using parsel.Selector.

- Use CSS selectors to find the <script id="__NEXT_DATA__"> dataset.

- Load JSON dataset as Python object using Python's json.loads() and find the product data.

In practical Python, this is as simple as:

# Python (httpx + parsel) version
import asyncio
import json

import httpx
from parsel import Selector

# create HTTP client with web-browser like headers and http2 support
# (http2=True needs the optional extra: pip install "httpx[http2]")
client = httpx.AsyncClient(
    follow_redirects=True,
    http2=True,
    headers={
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36",
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
        "Accept-Encoding": "gzip, deflate, br",
    },
)


def find_hidden_data(html) -> dict:
    """extract hidden web cache from page html"""
    # use CSS selectors to find script tag with data
    data = Selector(html).css("script#__NEXT_DATA__::text").get()
    return json.loads(data)


async def scrape_product(url: str):
    """scrape goat.com product page"""
    # retrieve page HTML
    response = await client.get(url)
    assert response.status_code == 200, "request was blocked, see blocking section"
    # find hidden web data
    data = find_hidden_data(response.text)
    # extract only product data from the page dataset
    product = data["props"]["pageProps"]["productTemplate"]
    if data["props"]["pageProps"]["offers"]:
        product["offers"] = data["props"]["pageProps"]["offers"]["offerData"]
    else:
        product["offers"] = None
    return product


# example scrape run:
print(asyncio.run(scrape_product("https://www.goat.com/sneakers/air-jordan-3-retro-white-cement-reimagined-dn3707-100")))

# Scrapfly SDK version
import asyncio
import json

from scrapfly import ScrapeApiResponse, ScrapeConfig, ScrapflyClient

scrapfly = ScrapflyClient(key="YOUR SCRAPFLY KEY")


def find_hidden_data(result: ScrapeApiResponse) -> dict:
    """extract hidden NEXT_DATA from page html"""
    data = result.selector.css("script#__NEXT_DATA__::text").get()
    data = json.loads(data)
    return data


async def scrape_product(url: str) -> dict:
    """scrape a single goat.com product page for product data"""
    result = await scrapfly.async_scrape(
        ScrapeConfig(
            url=url,
            cache=True,
            asp=True,  # enable Anti Scraping Protection bypass to get around Cloudflare
        )
    )
    data = find_hidden_data(result)
    product = data["props"]["pageProps"]["productTemplate"]
    if data["props"]["pageProps"]["offers"]:
        product["offers"] = data["props"]["pageProps"]["offers"]["offerData"]
    else:
        product["offers"] = None
    return product


# example scrape run:
product_scrape = scrape_product("https://www.goat.com/sneakers/air-jordan-3-retro-white-cement-reimagined-dn3707-100")
print(asyncio.run(product_scrape))

Above, in just a few lines of code, we managed to retrieve the entire product dataset with pricing, size and variant data, images and more. Next up, let's take a look at how to scale this up and scrape the entire product dataset.
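As a small illustration of working with the scraped result, the sketch below picks the cheapest listing from the `offers` field. The sample data is a trimmed copy of the example dataset shown earlier, and `cheapest_offer` is a hypothetical helper name, not part of any library.

```python
from typing import Dict, List, Optional

# Trimmed-down copy of the "offers" field from the example dataset.
sample_product = {
    "name": "Air Jordan 3 Retro 'White Cement Reimagined'",
    "offers": [
        {"size": 11, "price": "300.00", "priceCurrency": "USD"},
        {"size": 17, "price": "175.00", "priceCurrency": "USD"},
        {"size": 3.5, "price": "187.00", "priceCurrency": "USD"},
    ],
}


def cheapest_offer(product: Dict) -> Optional[Dict]:
    """Return the offer with the lowest price, or None when there are no offers."""
    # the scraper stores None when the page has no offers, so fall back to a list
    offers: List[Dict] = product.get("offers") or []
    if not offers:
        return None
    # prices arrive as strings such as "300.00", so convert before comparing
    return min(offers, key=lambda offer: float(offer["price"]))


best = cheapest_offer(sample_product)
print(best["size"], best["price"])
```

Handling the `None` case matters because the product scraper sets `offers` to `None` when a page carries no offer data.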

Scrape Goat.com Search

There are multiple ways to discover Goat.com products. One of the most popular ways is to use the search bar.

Goat.com uses dynamic search powered by a backend API. For example, if we open a search results page like goat.com/search?query=jordans and watch the Network tab of the browser's Developer Tools while scrolling to load the second page of results, we can see a backend request being made.

So, to scrape Goat.com search all we have to do is replicate these hidden search API requests in our Python scraper. To scrape search we'll approach our scraper like this:

- We'll create a search page URL for the first page of the search results.

- Scrape the first page of the search results.

- Find the total amount of pages.

- Scrape remaining pages concurrently.
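The page-count step is plain arithmetic and can be sketched on its own. The helper below is hypothetical; it assumes 24 results per page (the `num_results_per_page` value used in the search request of this tutorial) and a total result count like the API's `total_num_results` field.

```python
import math


def pages_to_scrape(total_results: int, per_page: int = 24, max_pages: int = 0) -> range:
    """Return the page numbers left to scrape after page 1 (hypothetical helper)."""
    # round up so a partially filled last page is still counted
    total_pages = math.ceil(total_results / per_page)
    # max_pages=0 means "no limit"
    if max_pages and max_pages < total_pages:
        total_pages = max_pages
    return range(2, total_pages + 1)


print(list(pages_to_scrape(100)))               # 100 results span 5 pages
print(list(pages_to_scrape(100, max_pages=3)))  # capped at 3 pages
print(list(pages_to_scrape(10)))                # everything fits on page 1
```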

This is a very common pagination scraping pattern and it's easy to implement in Python. Let's take a look at the code:

# Python (httpx) version
import asyncio
import json
import math
from datetime import datetime, timezone
from typing import Dict, List
from urllib.parse import quote, urlencode
from uuid import uuid4

import httpx

# create HTTP client with web-browser like headers and http2 support
client = httpx.AsyncClient(
    follow_redirects=True,
    http2=True,
    headers={
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36",
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
        "Accept-Encoding": "gzip, deflate, br",
    },
)


async def scrape_search(query: str, max_pages: int = 10) -> List[Dict]:
    def make_page_url(page: int = 1):
        params = {
            "c": "ciojs-client-2.29.12",  # this is hardcoded API version
            "key": "key_XT7bjdbvjgECO5d8",  # API key which is hardcoded in the client
            "i": str(uuid4()),  # unique id for each request, generated by UUID4
            "s": "2",
            "page": page,
            "num_results_per_page": "24",
            "sort_by": "relevance",
            "sort_order": "descending",
            # repeated parameters are given as lists: duplicate keys in a dict
            # literal would silently keep only the last value
            "fmt_options[hidden_fields]": ["gp_lowest_price_cents_3", "gp_instant_ship_lowest_price_cents_3"],
            "fmt_options[hidden_facets]": ["gp_lowest_price_cents_3", "gp_instant_ship_lowest_price_cents_3"],
            "_dt": int(datetime.now(timezone.utc).timestamp() * 1000),  # current timestamp in milliseconds
        }
        # doseq=True expands list values into repeated query parameters
        return f"https://ac.cnstrc.com/search/{quote(query)}?{urlencode(params, doseq=True)}"

    url_first_page = make_page_url(page=1)
    print(f"scraping product search paging {url_first_page}")
    # scrape first page
    result_first_page = await client.get(url_first_page)
    assert result_first_page.status_code == 200, "request was blocked, see blocking section"
    first_page = json.loads(result_first_page.content)["response"]
    results = [result["data"] for result in first_page["results"]]

    # find total page count
    total_pages = math.ceil(first_page["total_num_results"] / 24)
    if max_pages and max_pages < total_pages:
        total_pages = max_pages

    # scrape remaining pages
    print(f"scraping remaining total pages: {total_pages-1} concurrently")
    to_scrape = [make_page_url(page=page) for page in range(2, total_pages + 1)]
    to_scrape = [asyncio.create_task(client.get(url)) for url in to_scrape]
    # gather() has to be awaited to get the list of responses
    for response in await asyncio.gather(*to_scrape, return_exceptions=True):
        if isinstance(response, Exception) or response.status_code != 200:
            print(f"skipping page {response} - got blocked")
            continue
        data = json.loads(response.content)
        items = [result["data"] for result in data["response"]["results"]]
        results.extend(items)
    return results


# example scrape run:
search_scrape = scrape_search("puma dark", max_pages=3)
print(asyncio.run(search_scrape))

# Scrapfly SDK version
import asyncio
import json
import math
from datetime import datetime, timezone
from typing import Dict, List
from urllib.parse import quote, urlencode
from uuid import uuid4

from scrapfly import ScrapeConfig, ScrapflyClient

scrapfly = ScrapflyClient(key="YOUR SCRAPFLY KEY")


async def scrape_search(query: str, max_pages: int = 10) -> List[Dict]:
    def make_page_url(page: int = 1):
        params = {
            "c": "ciojs-client-2.29.12",  # this is hardcoded API version
            "key": "key_XT7bjdbvjgECO5d8",  # API key which is hardcoded in the client
            "i": str(uuid4()),  # unique id for each request, generated by UUID4
            "s": "2",
            "page": page,
            "num_results_per_page": "24",
            "sort_by": "relevance",
            "sort_order": "descending",
            # repeated parameters are given as lists: duplicate keys in a dict
            # literal would silently keep only the last value
            "fmt_options[hidden_fields]": ["gp_lowest_price_cents_3", "gp_instant_ship_lowest_price_cents_3"],
            "fmt_options[hidden_facets]": ["gp_lowest_price_cents_3", "gp_instant_ship_lowest_price_cents_3"],
            "_dt": int(datetime.now(timezone.utc).timestamp() * 1000),  # current timestamp in milliseconds
        }
        # doseq=True expands list values into repeated query parameters
        return f"https://ac.cnstrc.com/search/{quote(query)}?{urlencode(params, doseq=True)}"

    url_first_page = make_page_url(page=1)
    print(f"scraping product search paging {url_first_page}")
    # scrape first page
    result_first_page = await scrapfly.async_scrape(ScrapeConfig(url=url_first_page, asp=True))
    first_page = json.loads(result_first_page.content)["response"]
    results = [result["data"] for result in first_page["results"]]

    # find total page count
    total_pages = math.ceil(first_page["total_num_results"] / 24)
    if max_pages and max_pages < total_pages:
        total_pages = max_pages

    # scrape remaining pages
    print(f"scraping remaining total pages: {total_pages-1} concurrently")
    to_scrape = [ScrapeConfig(make_page_url(page=page), asp=True) for page in range(2, total_pages + 1)]
    async for result in scrapfly.concurrent_scrape(to_scrape):
        data = json.loads(result.content)
        items = [item["data"] for item in data["response"]["results"]]
        results.extend(items)
    return results


# example scrape run:
print(asyncio.run(scrape_search("puma dark", max_pages=3)))

Example Output

[

{

"id": "1095150",

"sku": "360248 58",

"slug": "epic-flip-v2-sandal-dark-slate-green-360248-58",

"color": "black",

"category": "shoes",

"image_url": "https://image.goat.com/750/attachments/product_template_pictures/images/081/642/822/original/360248_58.png.png",

"release_date": 20220520,

"product_type": "sneakers",

"release_date_year": 2022,

"retail_price_cents": 0,

"variation_id": "4afdc132-8b6d-4f59-8fc0-883f57be54a5",

"product_condition": "new_no_defects",

"lowest_price_cents": 10200,

"lowest_price_cents_krw": 13624300,

"lowest_price_cents_cny": 71000,

"lowest_price_cents_hkd": 81000,

"lowest_price_cents_eur": 9500,

"lowest_price_cents_twd": 314900,

"lowest_price_cents_cad": 14000,

"lowest_price_cents_jpy": 1377000,

"lowest_price_cents_sgd": 13800,

"lowest_price_cents_myr": 45500,

"lowest_price_cents_aud": 15600,

"lowest_price_cents_gbp": 8400,

"count_for_product_condition": 0

},

...

]

Above, we wrote a scraper that returns all goat.com search results with very little actual code! We can then use the product scraper we wrote earlier to collect all of the product data (see the slug field for the product URL).
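To connect the two scrapers, search results first need to be turned into product page URLs. The sketch below is one way to do that under a stated assumption: that product pages live under `/sneakers/<slug>`, as in the example product URL used in this tutorial. Other product types may use a different path, so treat `PRODUCT_URL_TEMPLATE` and `search_results_to_urls` as illustrative names rather than a confirmed URL scheme.

```python
from typing import Dict, List

# Assumption: product pages live under /sneakers/<slug>, as in the example
# product URL used in this tutorial; other product types may use another path.
PRODUCT_URL_TEMPLATE = "https://www.goat.com/sneakers/{slug}"


def search_results_to_urls(results: List[Dict]) -> List[str]:
    """Build product page URLs from search results, skipping duplicate slugs."""
    seen = set()
    urls = []
    for item in results:
        slug = item.get("slug")
        # ignore results without a slug and slugs we have already queued
        if not slug or slug in seen:
            continue
        seen.add(slug)
        urls.append(PRODUCT_URL_TEMPLATE.format(slug=slug))
    return urls


sample_results = [
    {"id": "1095150", "slug": "epic-flip-v2-sandal-dark-slate-green-360248-58"},
    {"id": "1095150", "slug": "epic-flip-v2-sandal-dark-slate-green-360248-58"},
    {"id": "0", "slug": None},
]
urls = search_results_to_urls(sample_results)
print(urls)
```

Each URL in the resulting list can then be passed to the `scrape_product()` function from the product section.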

For an alternative way to discover products on Goat.com, take a look at Goat's sitemap directory which contains all of the product pages.

Note that scraping backend APIs at scale can be difficult as the website can start blocking the scrape requests. For that, let's take a look at how to avoid blocking using Scrapfly SDK next.

Bypass Goat.com Blocking with ScrapFly

If we scale up our scraper beyond a few requests we can quickly notice that Goat.com is using Cloudflare Bot Management to block scrapers from accessing the website.

To get around Cloudflare's blocking we can use ScrapFly web scraping API which can retrieve page contents for us.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

For example, here's a basic goat.com scraper using the Python SDK:

from scrapfly import ScrapeConfig, ScrapflyClient

client = ScrapflyClient(key="YOUR SCRAPFLY KEY")
result = client.scrape(ScrapeConfig(
    url="https://www.goat.com/sneakers/air-jordan-3-retro-white-cement-reimagined-dn3707-100",
    # enable scraper blocking service bypass
    asp=True,
    # optional - render javascript using headless browsers:
    render_js=True,
))
# print the hidden JSON dataset found in the script tag
print(result.selector.css("script#__NEXT_DATA__::text").get())

FAQ

To wrap up this guide on web scraping Goat.com, let's take a look at some frequently asked questions.

Is it legal to scrape Goat.com?

Yes! All of goat.com's data is available publicly (it doesn't require a login), which is perfectly legal to scrape. As long as we don't harm the website by scraping too fast, scraping Goat.com is legal and ethical.
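One way to keep the request rate modest is to cap how many requests are in flight at once. The following is a minimal sketch of that idea using `asyncio.Semaphore`; `run_throttled` and `fake_fetch` are hypothetical names, and the fake fetcher stands in for a real HTTP call so the example runs offline. The limit of 2 is an arbitrary illustration, not a recommended value for Goat.com.

```python
import asyncio
from typing import Awaitable, Callable, List


async def run_throttled(
    urls: List[str],
    fetch: Callable[[str], Awaitable[str]],
    max_concurrency: int = 2,
    delay: float = 0.0,
) -> List[str]:
    """Call fetch(url) for every URL with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(url: str) -> str:
        async with semaphore:
            result = await fetch(url)
            # optional pause before releasing the slot, spacing out requests
            await asyncio.sleep(delay)
            return result

    # gather preserves the order of the input URLs
    return await asyncio.gather(*(worker(url) for url in urls))


# Fake fetcher that records peak concurrency instead of touching the network.
active = 0
peak = 0


async def fake_fetch(url: str) -> str:
    global active, peak
    active += 1
    peak = max(peak, active)
    await asyncio.sleep(0.01)
    active -= 1
    return f"fetched {url}"


pages = asyncio.run(run_throttled([f"page-{i}" for i in range(6)], fake_fetch, max_concurrency=2))
print(len(pages), "pages fetched, peak concurrency:", peak)
```

In a real scraper, `fetch` would wrap a call such as `client.get(url)`, and a non-zero `delay` spaces requests out further.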

Can Goat.com be crawled?

Yes. Crawling is a type of web scraping where the scraper program discovers the pages to scrape by itself through exploration. Goat.com offers many exploration facets (like related and recommended products and directories) which make crawling easy. However, crawling is not recommended here, as direct scraping is much more efficient, as shown in this tutorial.

Goat.com Scraping Summary

In this quick tutorial, we learned how to scrape Goat.com using Python and Scrapfly. We started by taking a look at how to scrape a single product page dataset using the hidden web data approach. Then, we expanded our scraper with search scraping functionality by scraping Goat's hidden search API.

Finally, we learned how to avoid blocking by using Scrapfly web scraping API. Try it out for free!