Web scraping has become an essential tool for accessing and organizing data from the web, but coding skills are often seen as a barrier. Thankfully, no-code automation tools allow anyone to collect data effortlessly, without writing any code.

By leveraging accessible automation tools like Zapier and Make.com, you can automate the scraping process in minutes. In this guide, we'll explore how these no-code solutions can be combined with tools like Google Sheets integrations and browser extensions to make data scraping easy and accessible.

Whether you're a beginner or just looking for an efficient way to gather web data, this guide will show you how to instantly scrape data without any technical headaches.

Key Takeaways

Build an instant data scraper with no-code automation tools, workflow integrations, and trigger-based data collection.

- Implement Google Sheets integration with Make.com and Zapier for automated data scraping workflows

- Configure browser extensions and no-code tools for instant data extraction without programming knowledge

- Use Scrapfly no-code integrations for automated web scraping with anti-blocking protection

- Implement workflow automation and data processing for efficient content monitoring and collection

- Configure change detection and trigger-based scraping for real-time data updates and monitoring

What is Instant Data Scraping?

Instant Data Scraping is a concept that simplifies the process of collecting data from websites by using accessible, no-code tools.

Instead of traditional coding methods, this approach focuses on leveraging user-friendly interfaces and automated workflows to perform web scraping. Think of it as a combination of tools like Zapier or Make.com that enable you to extract data as effortlessly as setting up a simple workflow.

With this concept, changes in documents (such as new entries in a Google Sheet or interactions through browser extensions) can trigger data scraping actions.

This makes data collection as easy as updating a spreadsheet or setting up an automation, allowing anyone to harness the power of web scraping without needing to learn how to code.

Instant Scraping with Google Sheets

One of the most accessible ways to start instant data scraping is by integrating it with Google Sheets. This method takes advantage of change detection, such as:

- Adding new rows

- Deleting rows

- Updating existing rows

- Creating new sheets

All of these actions can trigger web scraping automatically. Imagine adding a website URL to a Google Sheet and having the relevant data scraped instantly, without lifting a finger.
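To make the idea of change detection concrete, here is a minimal Python sketch that compares two snapshots of a sheet and reports which rows were added, deleted, or updated. The `Snapshot` type and the `diff_rows` function are hypothetical names used only for illustration; this is not how Google Sheets or the automation platforms implement it internally.

```python
from typing import Dict, List

# Hypothetical snapshot type: row number -> list of cell values.
Snapshot = Dict[int, List[str]]


def diff_rows(before: Snapshot, after: Snapshot) -> Dict[str, List[int]]:
    """Compare two snapshots of a sheet and report which rows changed."""
    added = sorted(row for row in after if row not in before)
    deleted = sorted(row for row in before if row not in after)
    updated = sorted(
        row for row in after if row in before and after[row] != before[row]
    )
    return {"added": added, "deleted": deleted, "updated": updated}


before = {1: ["https://example.com/a"], 2: ["https://example.com/b"]}
after = {1: ["https://example.com/a-v2"], 3: ["https://example.com/c"]}

changes = diff_rows(before, after)
print(changes)
```

Each of the three lists could then drive a different action, for example scraping only the URLs in added or updated rows.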

To streamline the scraping and data extraction process, we’ll also be using Scrapfly's no-code integrations.

Scrapfly provides web scraping, screenshot, and extraction APIs for data collection at scale:

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

While Scrapfly's APIs require coding knowledge to use, Scrapfly also offers no-code integrations with automation tools like Zapier and Make.com. These integrations let anyone use Scrapfly's features through simple and accessible UIs.
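For context, this is roughly the kind of request the no-code integrations build on your behalf. The sketch below only constructs a request URL and does not send anything. The endpoint and the parameter names (`key`, `url`, `render_js`, `asp`) are assumptions here and should be checked against Scrapfly's API documentation; `build_scrape_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed endpoint and parameter names; verify against Scrapfly's API docs.
SCRAPE_ENDPOINT = "https://api.scrapfly.io/scrape"


def build_scrape_url(
    api_key: str, target_url: str, render_js: bool = False, asp: bool = False
) -> str:
    """Build a scrape request URL without sending it."""
    params = {"key": api_key, "url": target_url}
    if render_js:
        params["render_js"] = "true"  # assumed flag for JavaScript rendering
    if asp:
        params["asp"] = "true"  # assumed flag for anti-bot protection bypass
    return f"{SCRAPE_ENDPOINT}?{urlencode(params)}"


request_url = build_scrape_url(
    "YOUR_API_KEY", "https://example.com/products", render_js=True, asp=True
)
print(request_url)
```

With the Make.com or Zapier integration, the same options are filled in through form fields instead.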

Connecting Google Sheets and Scrapfly with Make.com

Make.com is an automation platform that allows users to build complex workflows without writing code. It’s similar to Zapier, but with added flexibility for connecting apps and services in more detailed ways.

In this guide, we’ll use Make.com to automate the entire scraping process, from detecting changes in Google Sheets to extracting data via Scrapfly.

Create a new spreadsheet in Google Sheets. The spreadsheet should have two sheets: one for adding new URLs to scrape and the other for storing the data extracted from those URLs.

Create a new scenario from your Make.com dashboard using the "Create a new scenario" button:

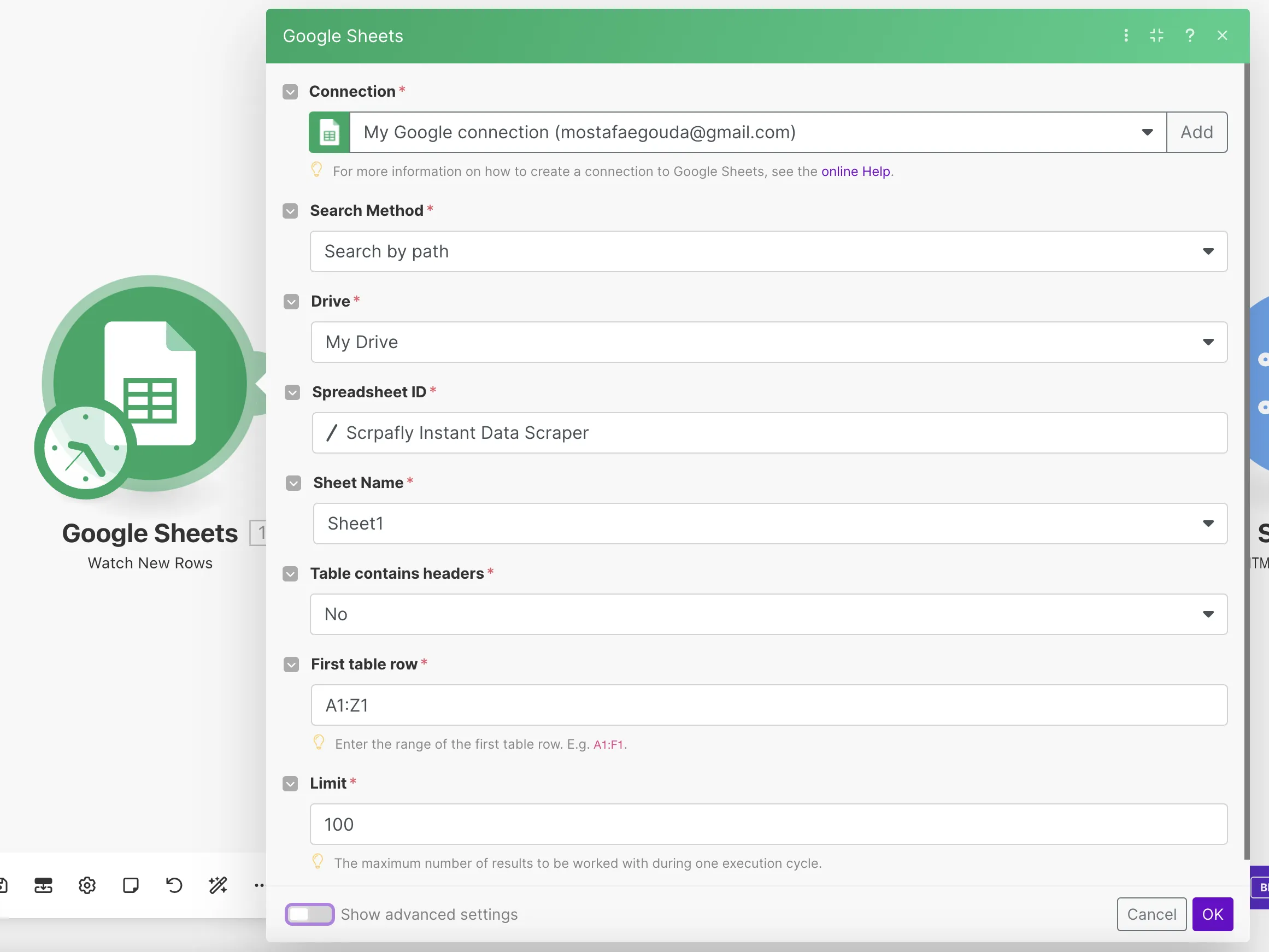

In the scenario builder, we will start with the input data node, which is the Google Sheets action "Watch New Rows". The action will prompt you to connect your Google account to get access to your sheets.

Once the account is connected, fill in the action options as shown in the image below. Note that the spreadsheet ID and the sheet name depend on the spreadsheet you created in Google Sheets.

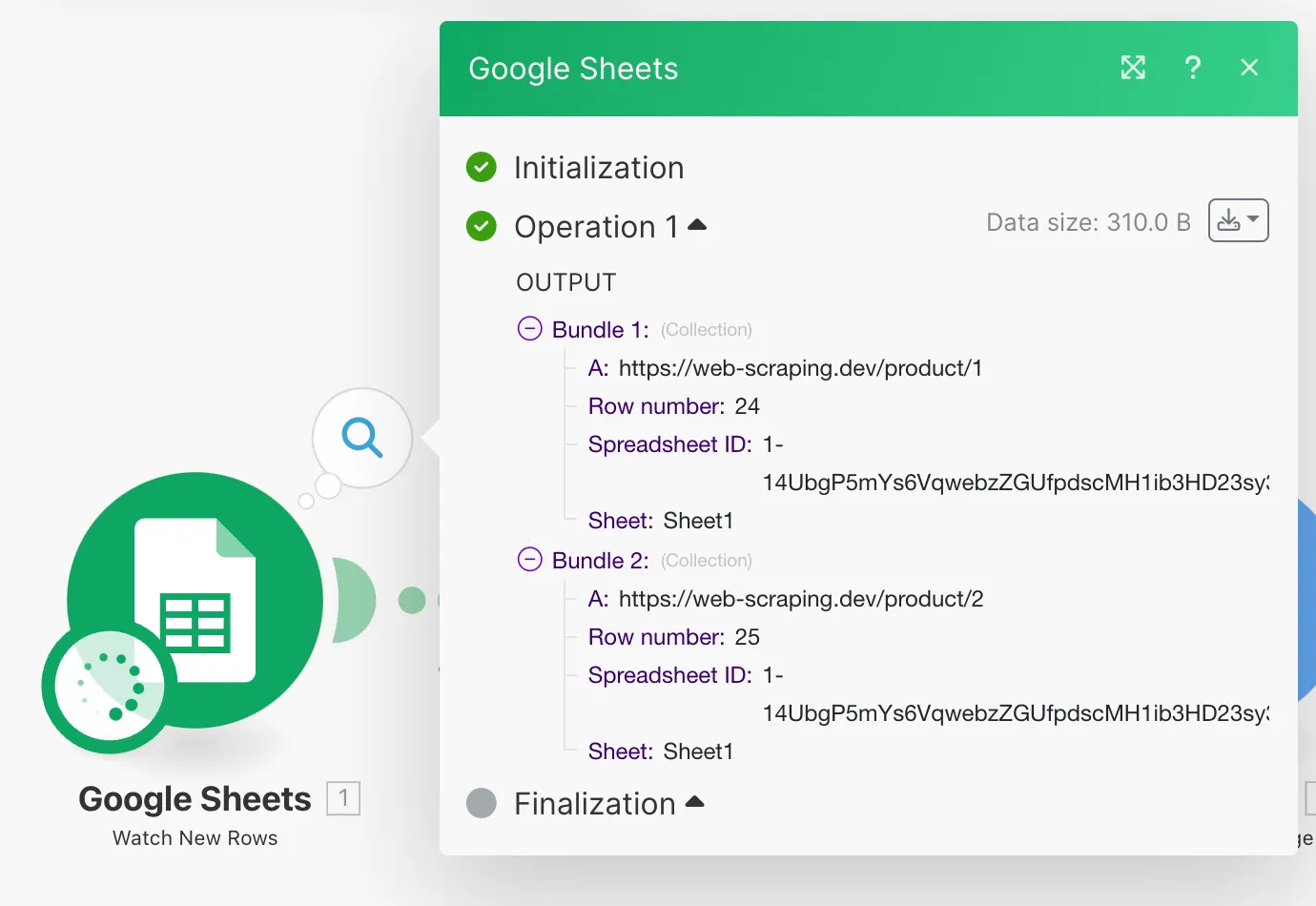

Now that all the action options are set, the action returns a list of new rows every time it runs.

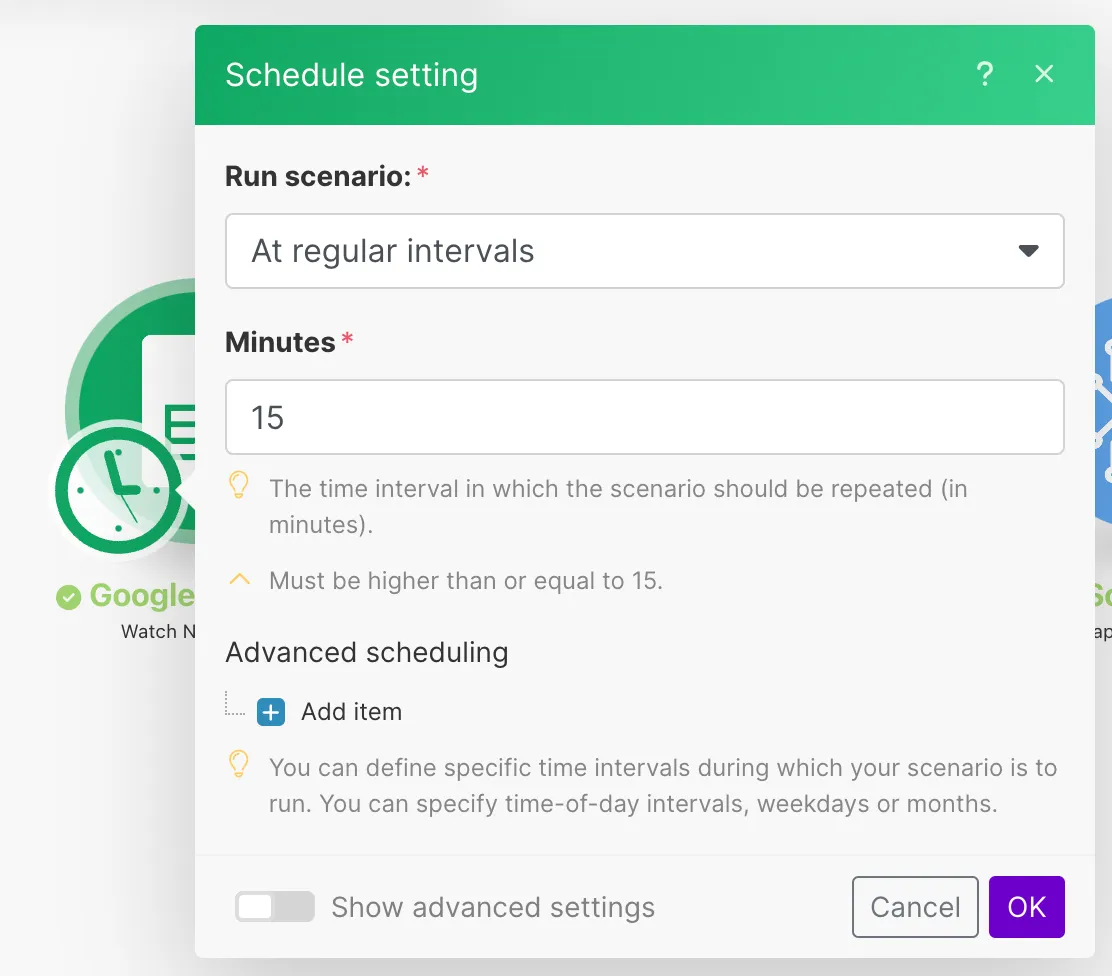

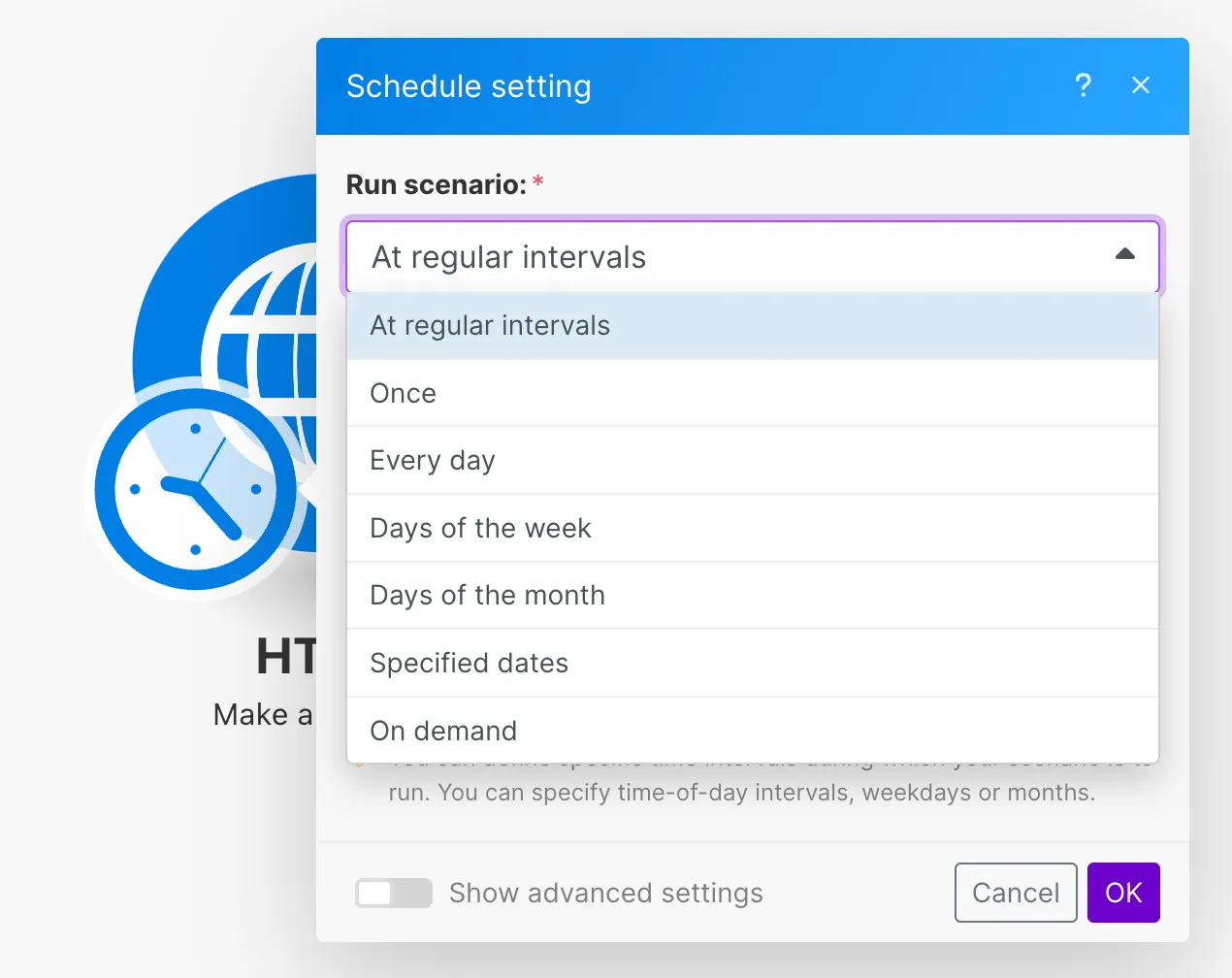

The action automatically comes with a trigger that runs it every 15 minutes. This means that once the scenario is finished and activated, it will check the Google Sheet for new changes every 15 minutes. Feel free to change this interval to suit your needs.

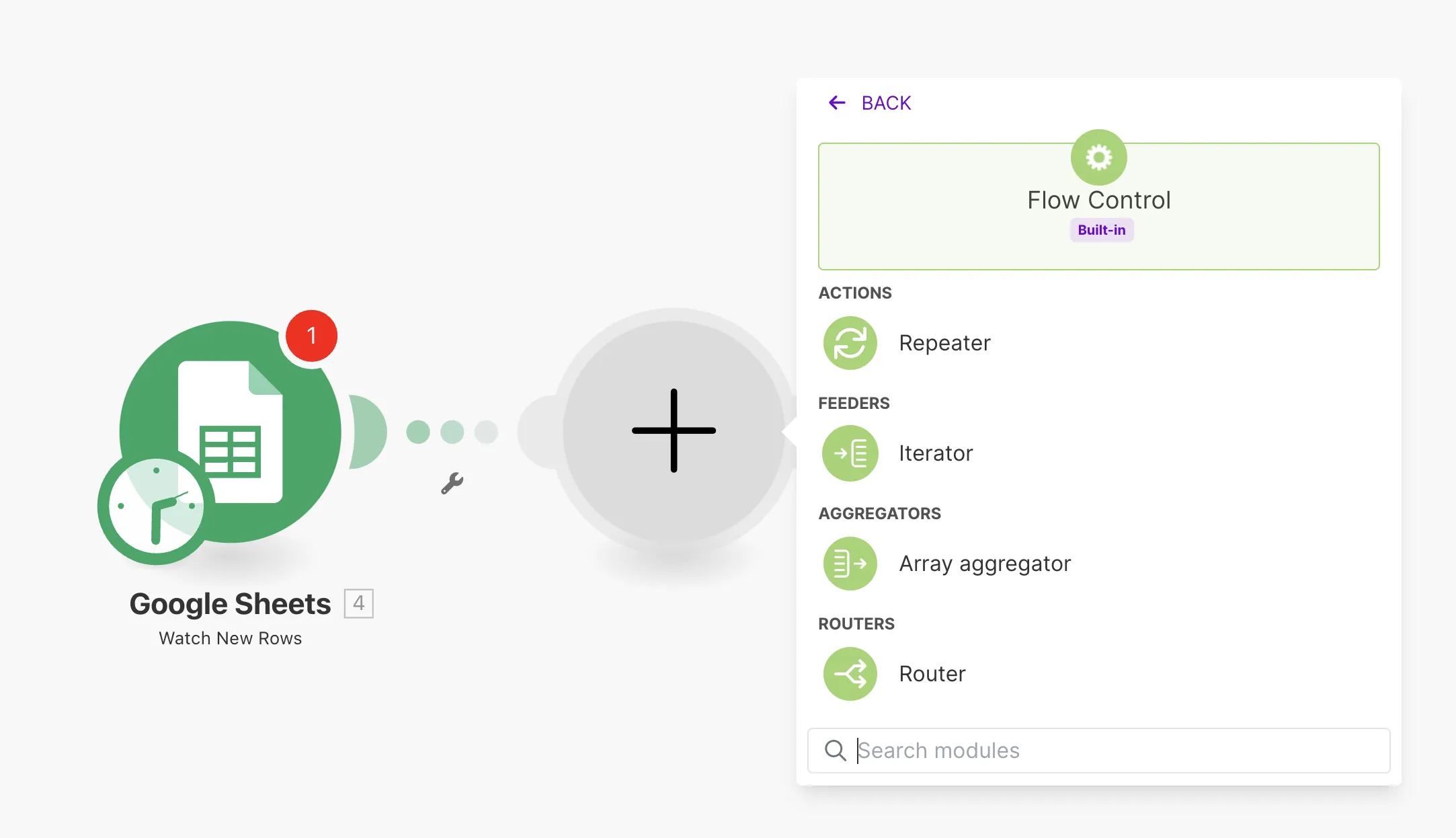

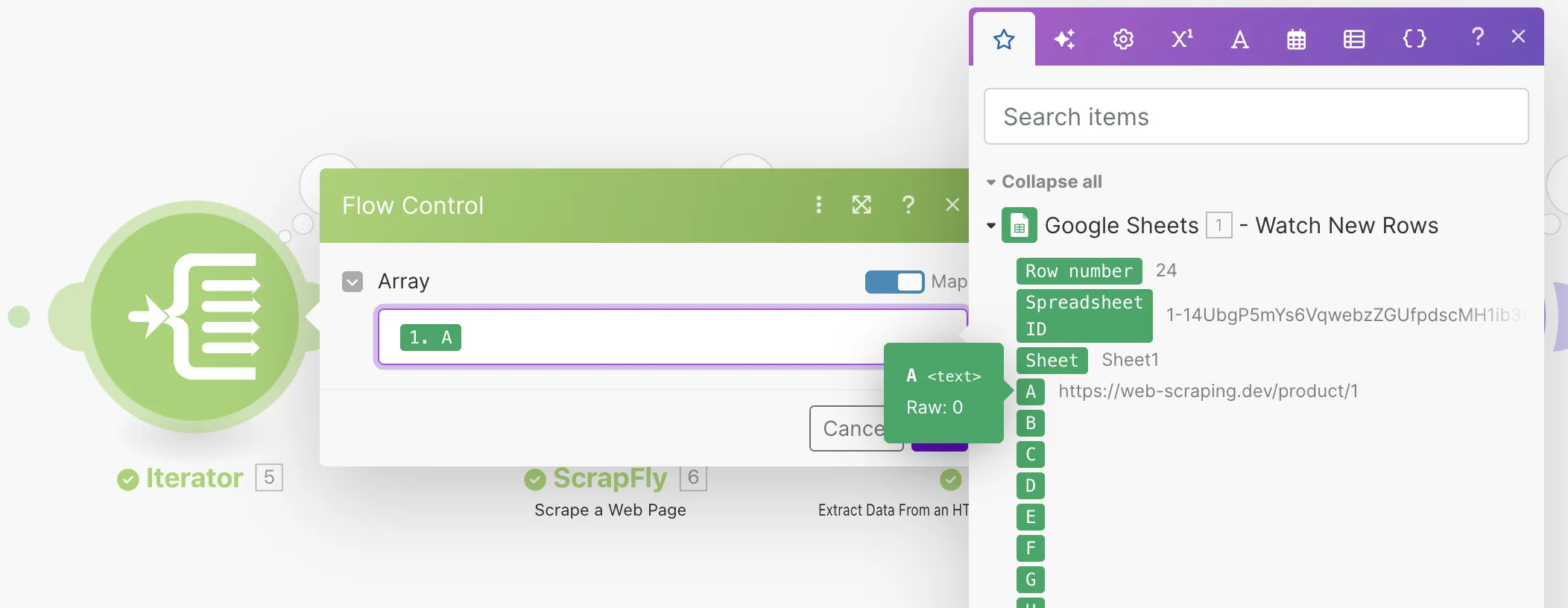

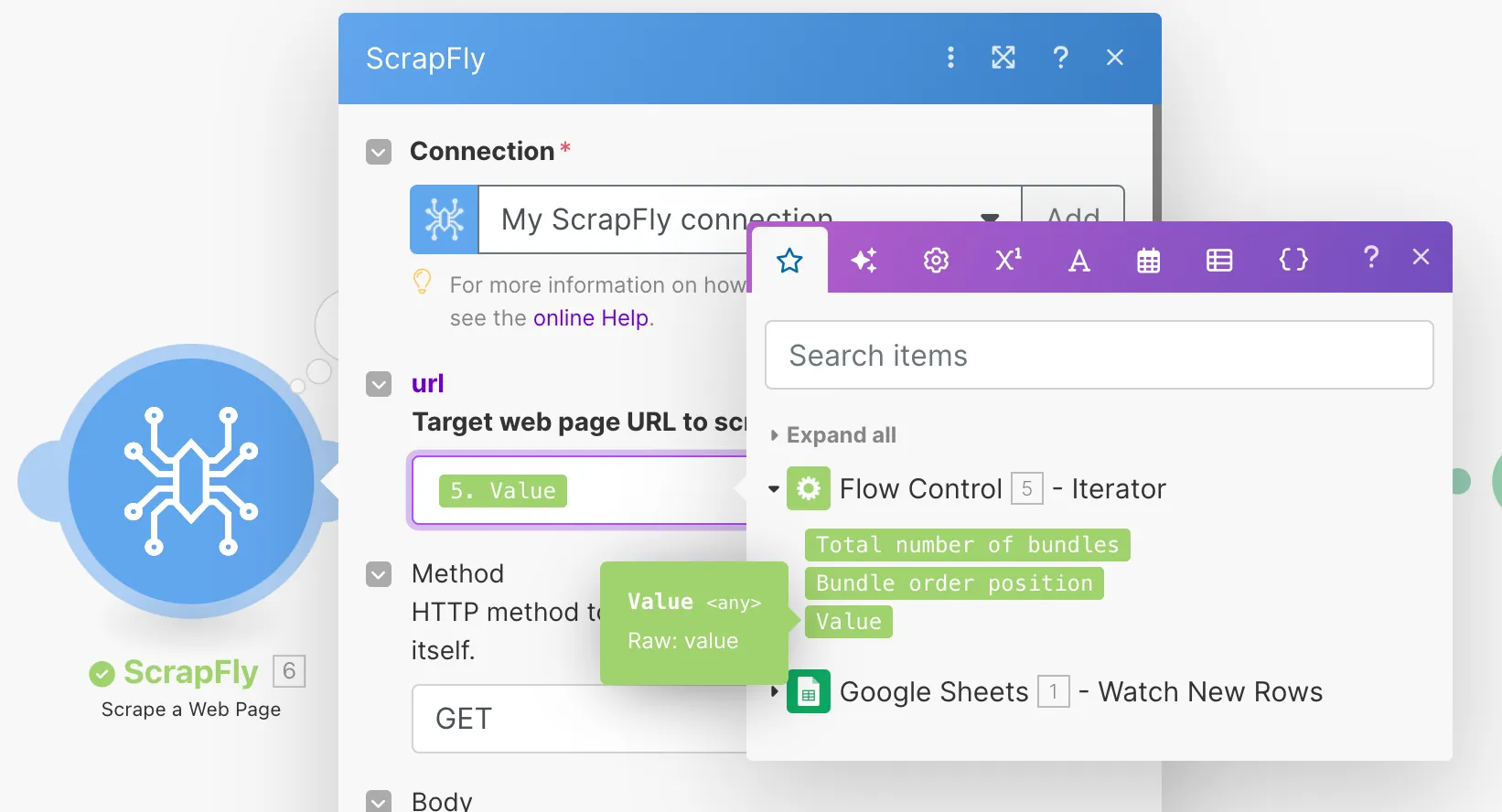
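Conceptually, a polling trigger like this remembers what it has already seen and returns only what is new on each run. The following Python sketch illustrates that idea under the assumption that rows are only ever appended. `NewRowWatcher` is a hypothetical class and not a description of Make.com's internals.

```python
from typing import List


class NewRowWatcher:
    """Remembers how many rows were seen so each poll returns only new ones."""

    def __init__(self) -> None:
        self.rows_seen = 0

    def poll(self, all_rows: List[List[str]]) -> List[List[str]]:
        # Assumes rows are only appended, never inserted or removed.
        new_rows = all_rows[self.rows_seen:]
        self.rows_seen = len(all_rows)
        return new_rows


watcher = NewRowWatcher()
sheet = [["https://example.com/a"]]
first_poll = watcher.poll(sheet)   # the single existing row

sheet.append(["https://example.com/b"])
sheet.append(["https://example.com/c"])
second_poll = watcher.poll(sheet)  # only the two rows added since the last poll
third_poll = watcher.poll(sheet)   # nothing new
```

In the scenario, the 15-minute interval decides how often this kind of check happens.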

Since the "Watch New Rows" action returns a list of new rows, we will need to add an "Iterator" node that iterates over this list and allows us to scrape each URL one by one.

The URLs will be located in column A of the sheet, so we will add this column as the iterator array.

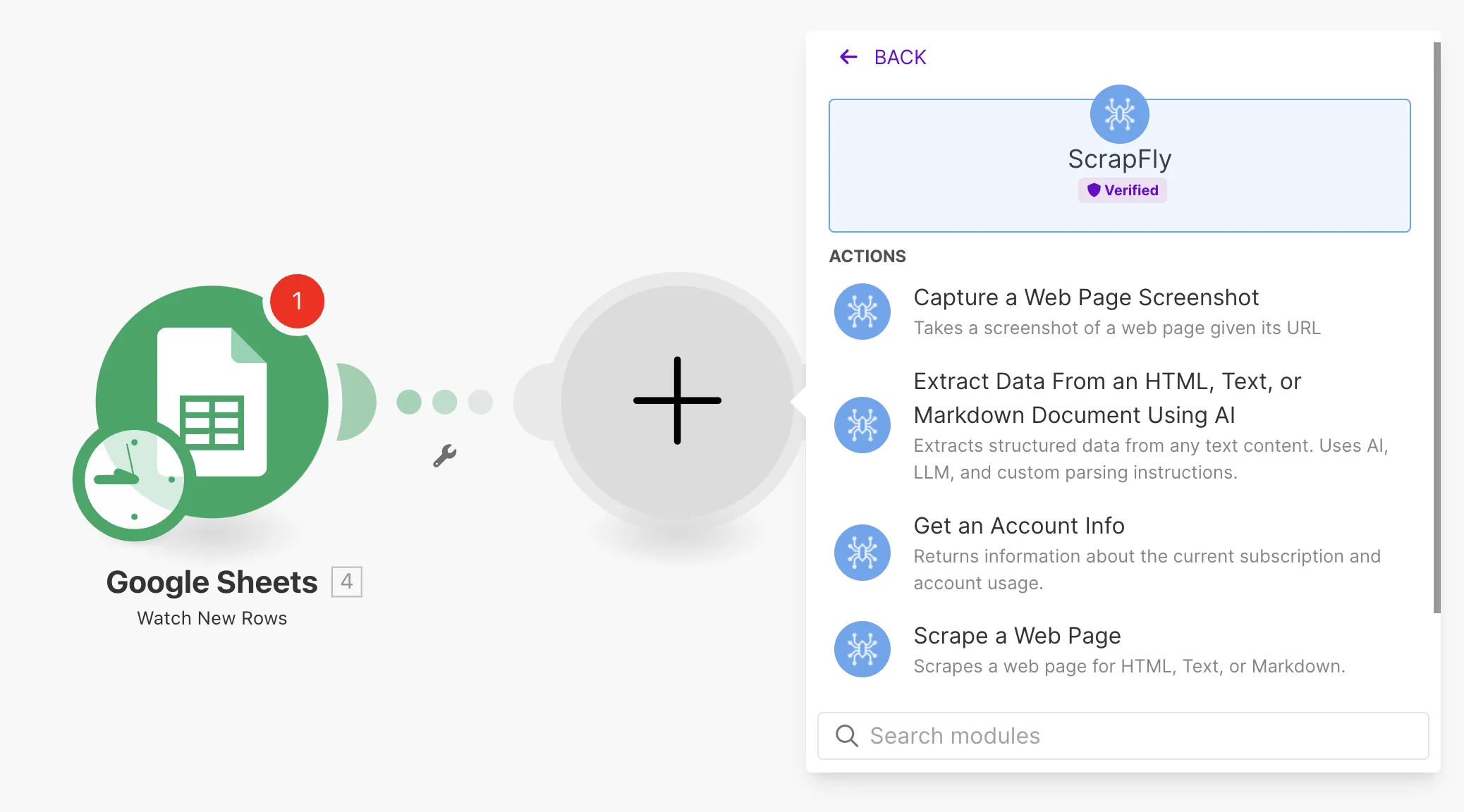

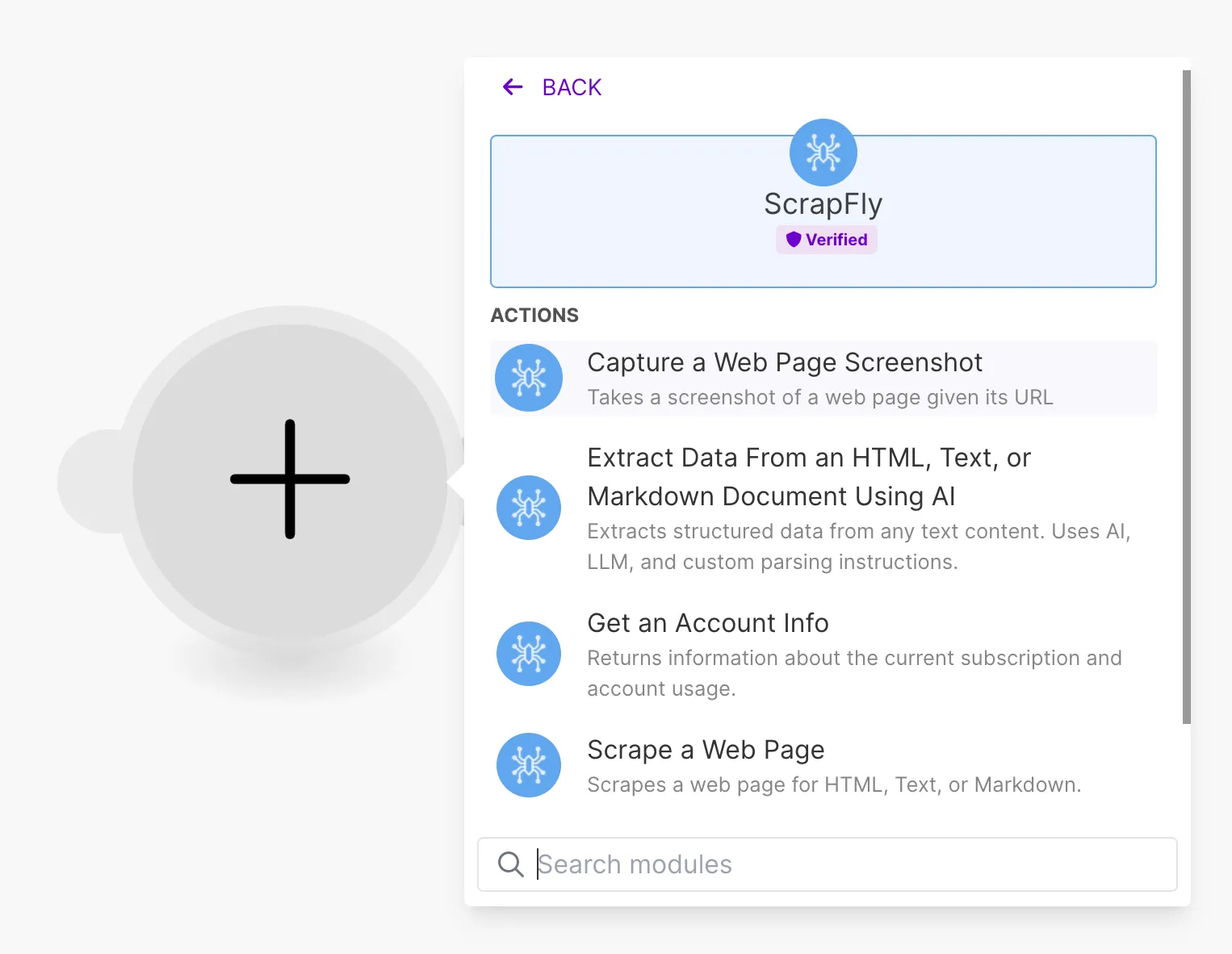

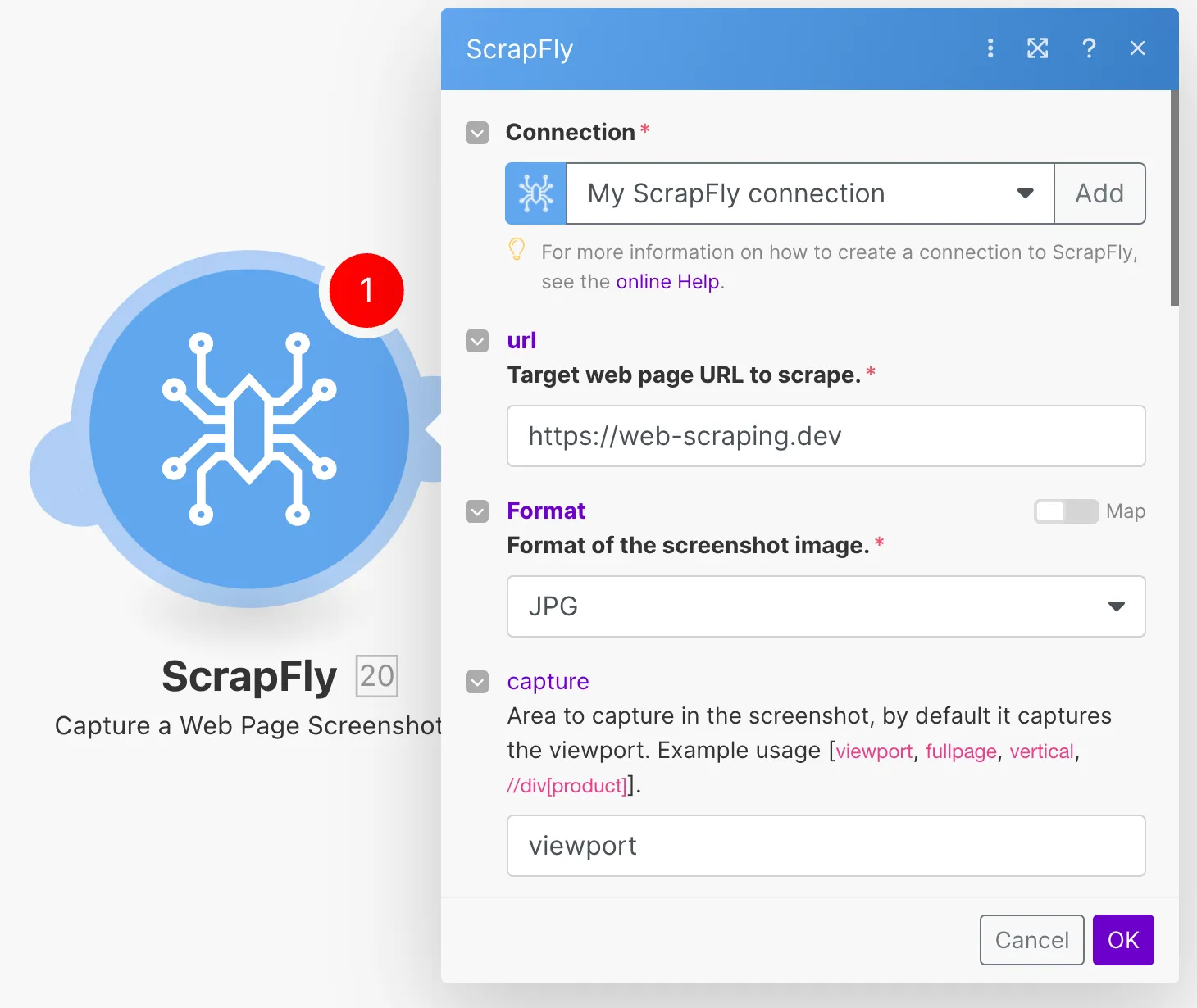
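In code terms, the iterator step is just a loop over the new rows that picks out the value in column A. Here is a small Python sketch of that idea. The `iter_urls` name and the choice to skip empty cells are assumptions made for illustration.

```python
from typing import Iterator, List


def iter_urls(rows: List[List[str]]) -> Iterator[str]:
    """Yield the column A value of each row, skipping empty cells."""
    for row in rows:
        if row and row[0].strip():
            yield row[0].strip()


new_rows = [
    ["https://example.com/a", "note"],
    [""],
    ["  https://example.com/b "],
]
urls = list(iter_urls(new_rows))
print(urls)
```

Each yielded URL corresponds to one run of the scraping step that follows.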

Now we will use Scrapfly's "Scrape a webpage" action.

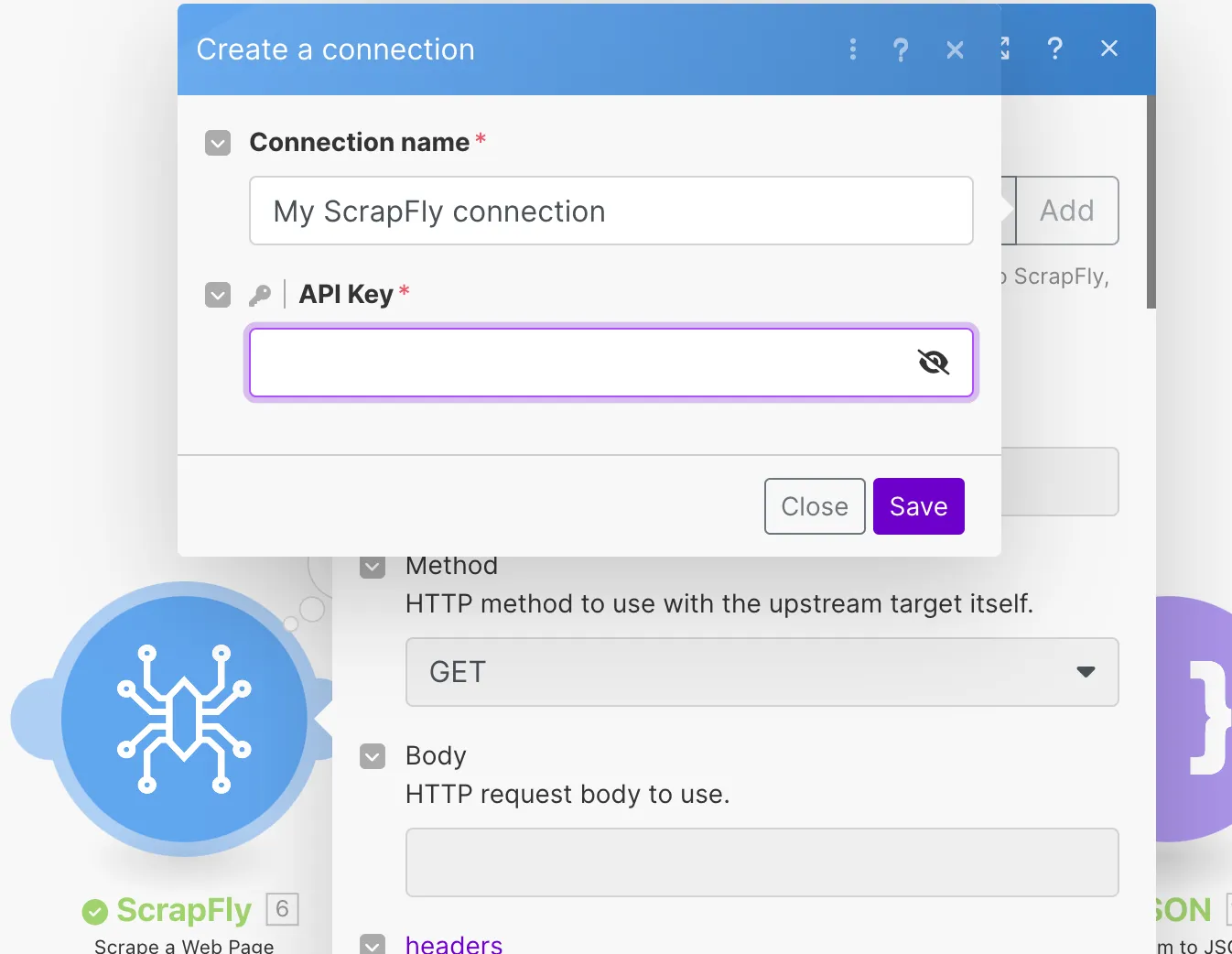

The action will prompt you to connect your Scrapfly account using your API key, which you can find at the top of your Scrapfly dashboard.

The URL value fed to the "Scrape a webpage" action will be the value coming from the "Iterator".

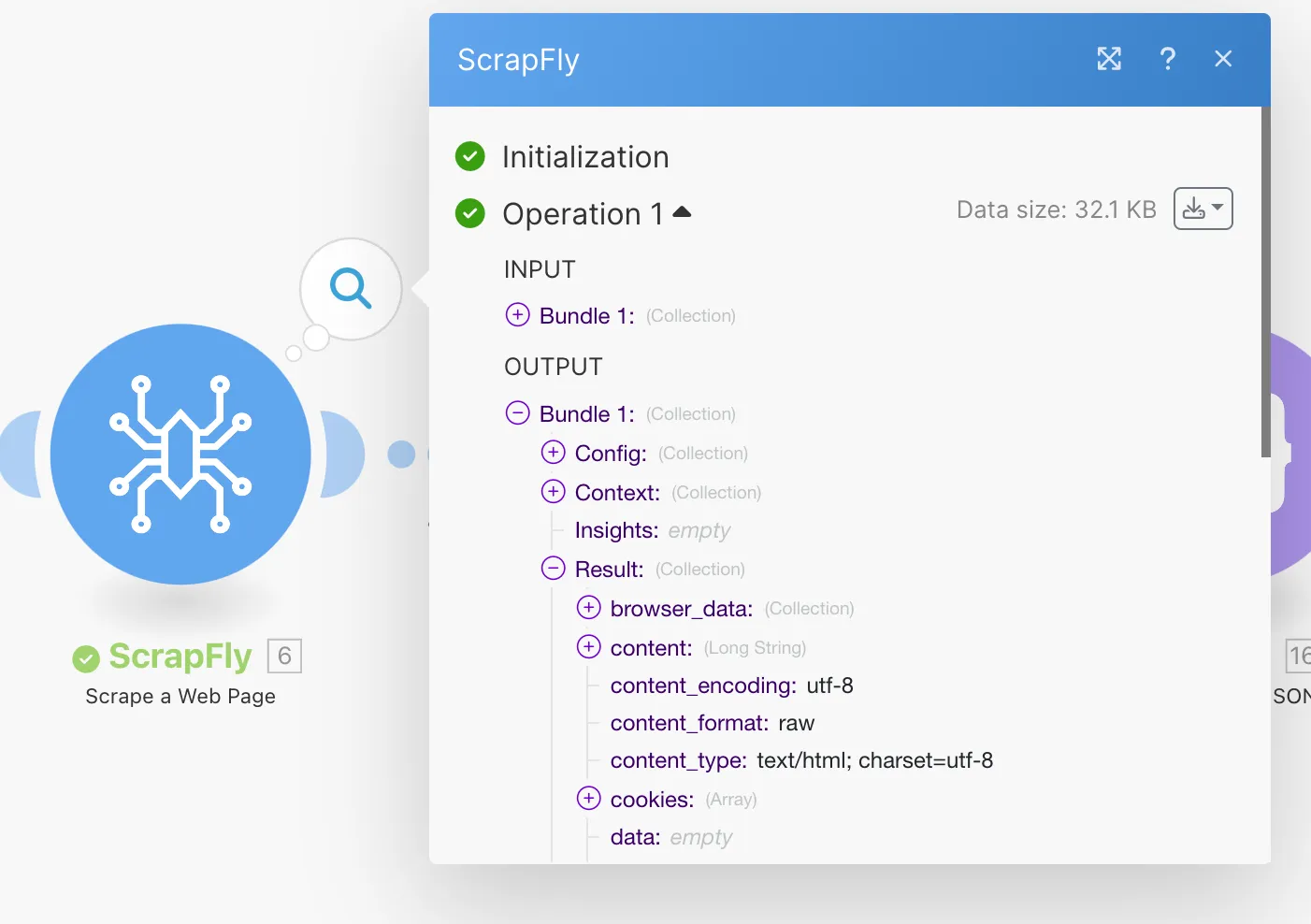

The "Scrape a webpage" action returns a collection with a "Result" object that contains the "Content" of the scraped webpage.

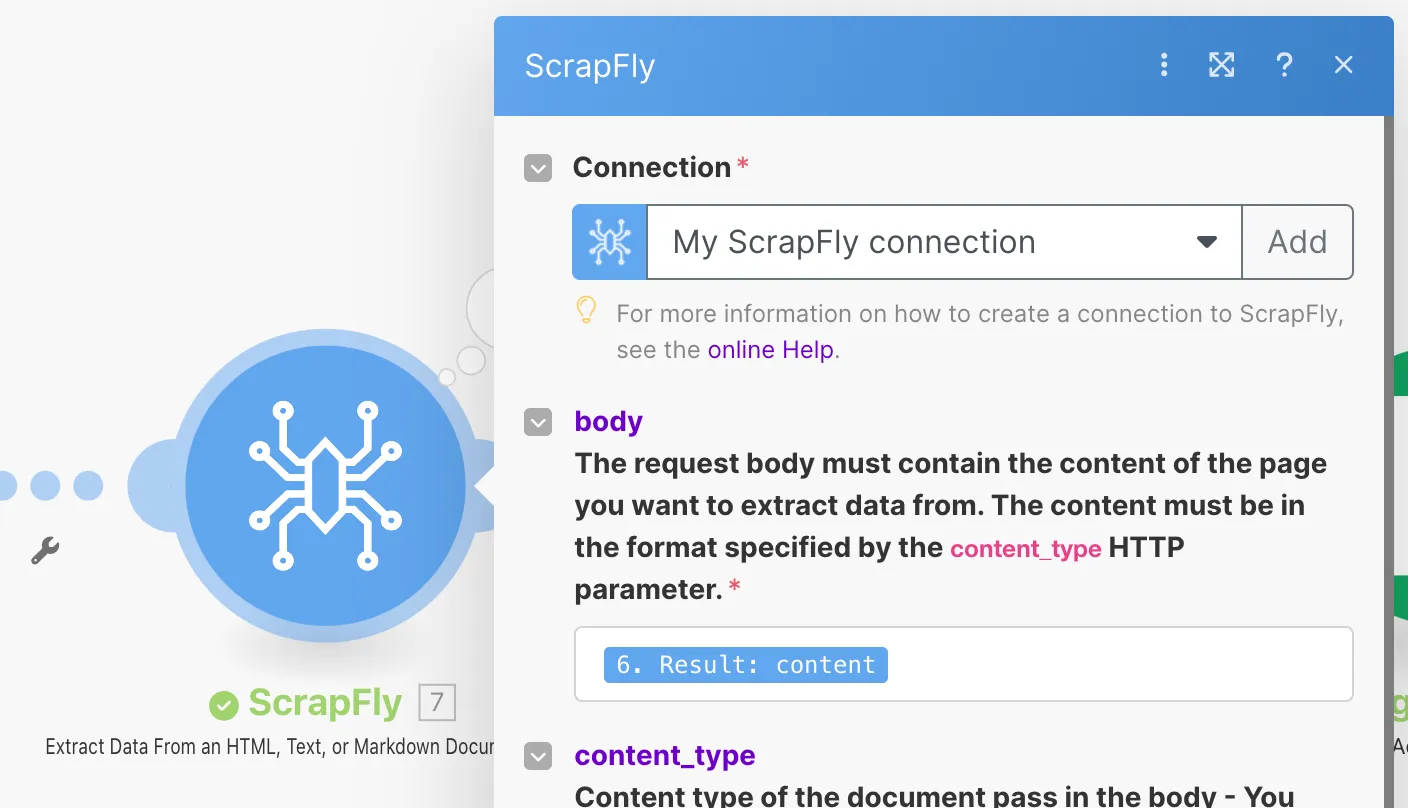

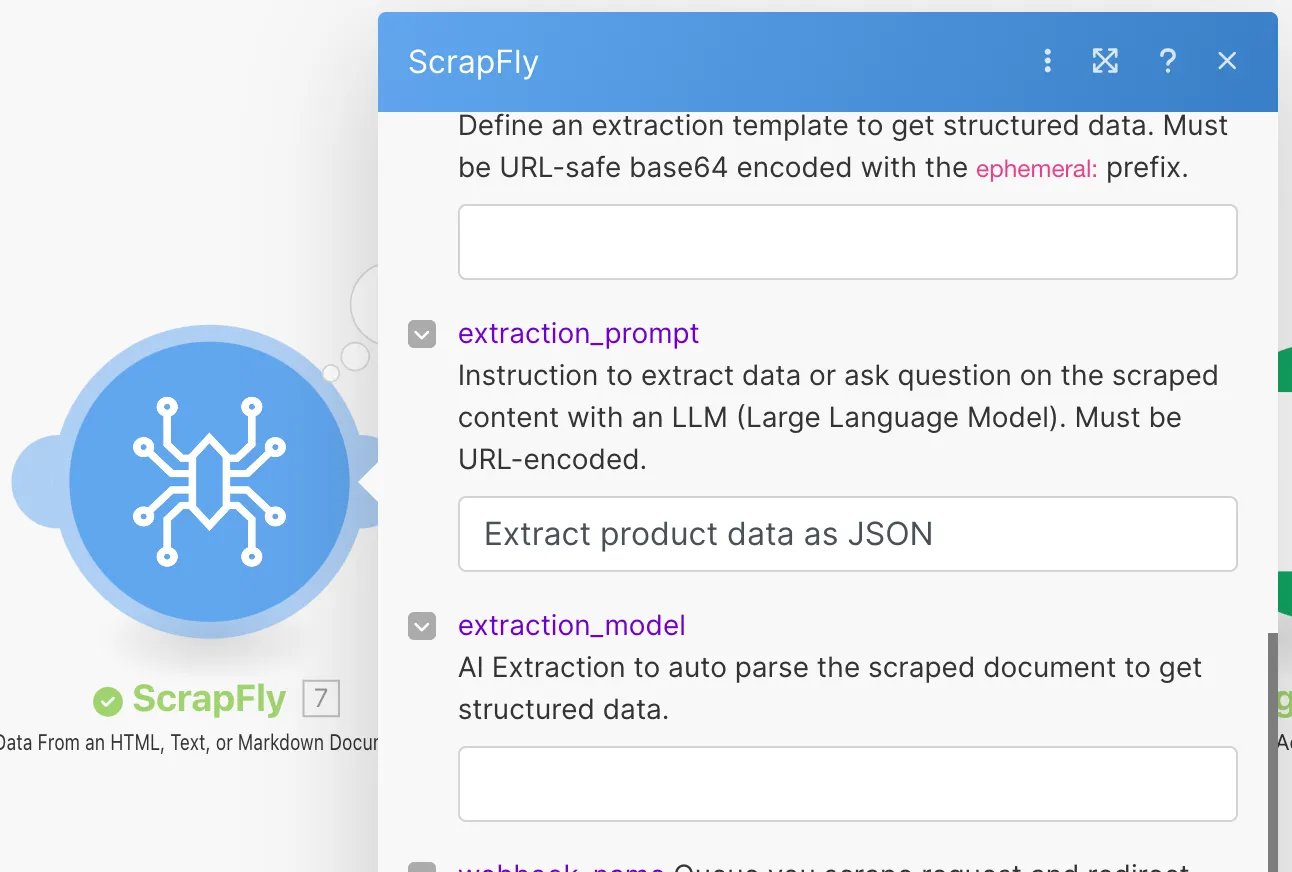
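If you are curious what this looks like outside the visual builder, the sketch below reads the page content out of a response shaped like the collection described above. The sample response is hypothetical and heavily trimmed, and the lowercase `result` and `content` keys are an assumption based on the labels shown in Make.com.

```python
import json

# Hypothetical, trimmed-down response; key names are assumed from the
# "Result" and "Content" labels shown in the Make.com action output.
raw_response = (
    '{"result": {"content": "<html><h1>Hello</h1></html>", "status_code": 200}}'
)


def get_content(response_text: str) -> str:
    """Return the scraped page content from a JSON response string."""
    payload = json.loads(response_text)
    return payload["result"]["content"]


content = get_content(raw_response)
print(content)
```

In Make.com you get the same value by mapping the "Content" field into the next action.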

Now connect the "Scrape a webpage" action to a new "Extract Data" action provided by Scrapfly. This action allows you to extract structured JSON data from unstructured data like HTML, raw text, or markdown using AI.

Inside the action options, set the body to the content returned by the scraping action.

Also set the extraction prompt, which is a simple AI prompt specifying what data you want extracted. You can keep this prompt static for all URLs, or make it dynamic by feeding it from the Google Sheet for more advanced uses.

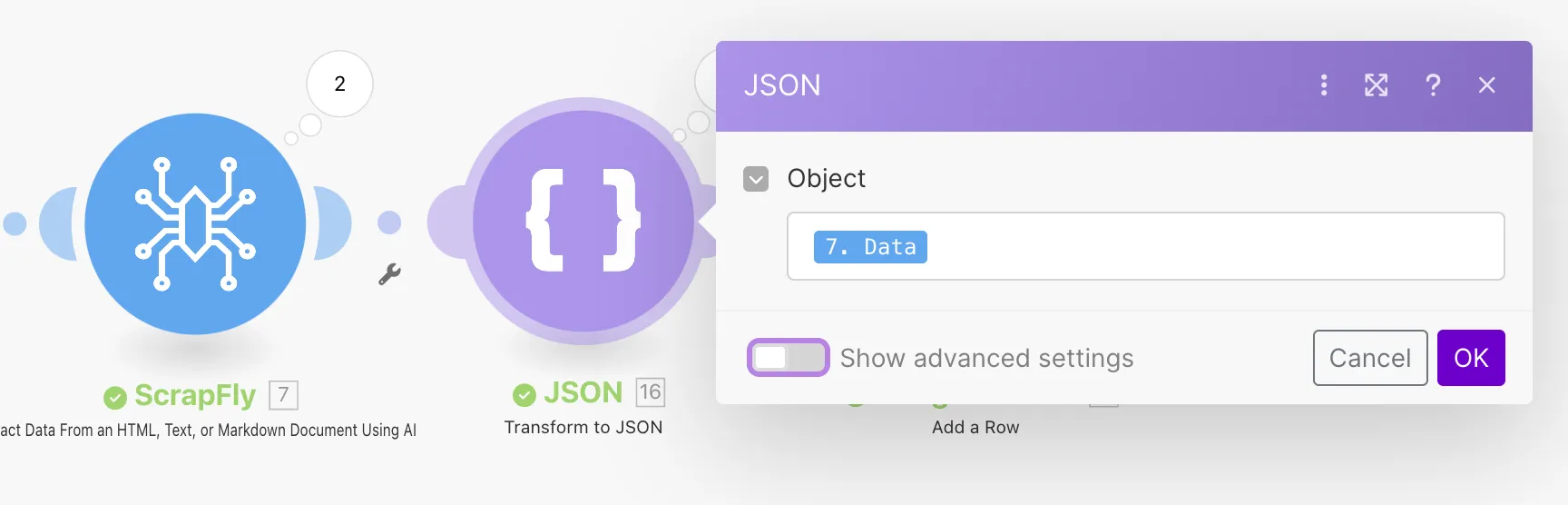
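The choice between a static and a dynamic prompt can be sketched as a simple fallback rule. In the Python example below, the prompt is assumed to live in column B of the same row as the URL. That layout, the `pick_prompt` name, and the default prompt text are all hypothetical.

```python
from typing import List

# Hypothetical static prompt used when the row does not provide its own.
DEFAULT_PROMPT = "Extract the product name and price."


def pick_prompt(row: List[str], prompt_column: int = 1) -> str:
    """Use the row's own prompt (assumed to be in column B) or the static one."""
    if len(row) > prompt_column and row[prompt_column].strip():
        return row[prompt_column].strip()
    return DEFAULT_PROMPT


print(pick_prompt(["https://example.com/a"]))
print(pick_prompt(["https://example.com/b", "Extract the article title"]))
```

In Make.com, the equivalent is mapping the prompt field to a sheet column instead of typing fixed text.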

Once we have the extracted data, we can transform it into a JSON string using the "Transform to JSON" action.

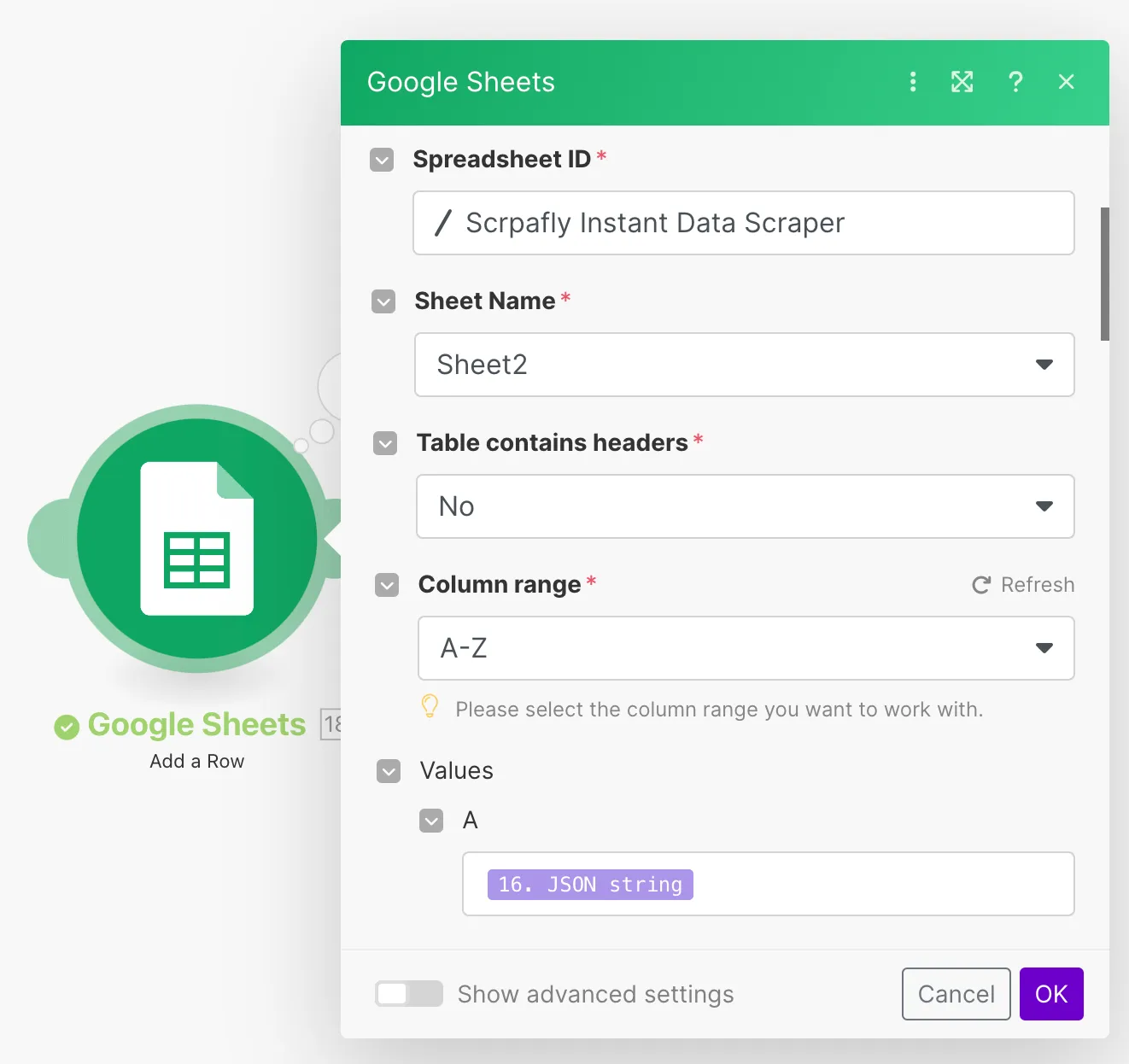

Now that we have the extracted data as a JSON string, we can store the results in the second sheet (Sheet 2) by sending the data to Google Sheets with the "Add a Row" action.
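The last two steps, turning the extracted data into a JSON string and adding it as a row, can be sketched in a few lines of Python. The sample data and the column layout (URL in column A, JSON in column B) are assumptions for illustration, and `build_result_row` is a hypothetical helper.

```python
import json
from typing import Any, Dict, List


def build_result_row(url: str, extracted: Dict[str, Any]) -> List[str]:
    """Return the cells for one results row: source URL and data as JSON text."""
    json_string = json.dumps(extracted, ensure_ascii=False)
    return [url, json_string]


# Hypothetical extraction result for illustration.
extracted = {"name": "Example Widget", "price": 19.99, "in_stock": True}
row = build_result_row("https://example.com/widget", extracted)
print(row)
```

Storing the data as a single JSON string keeps the results sheet layout stable even when different pages yield different fields.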

Finally, the scenario is done. Activate it from your Make.com dashboard and add a new URL to your spreadsheet to see it in action.

With this, we've built a no-code scraper using Google Sheets, Make.com, and Scrapfly in just a few minutes! This is a simple example of what you can do with these tools. You can make the scenario more sophisticated by adding more actions and conditions to suit your needs.

Scrapfly Screenshot API Action

Scrapfly also offers the "Capture Webpage Screenshot" action on Make.com. This action allows you to automate capturing screenshots of any website, which can be useful for web testing or price monitoring tools.

In its most basic form, the action expects the website URL for which the screenshot will be captured. You can also provide additional options to the action for more customization, like:

- Screenshot image format (png, jpg, webp...)

- Capture target (full viewport, or a specific element selected with a CSS selector or XPath)

- Screen Resolution (1920x1080, 2560x1440...)

- And more

You can add this action to the scenario we built above and store the image on Google Drive.
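As a rough picture of how these options translate into a request, the sketch below builds a screenshot request URL without sending it. The endpoint and the parameter names (`format`, `capture`, `resolution`) are assumptions that mirror the options listed above and should be verified against Scrapfly's Screenshot API documentation. `build_screenshot_url` is a hypothetical helper.

```python
from typing import Optional
from urllib.parse import urlencode

# Assumed endpoint and parameter names; verify against Scrapfly's docs.
SCREENSHOT_ENDPOINT = "https://api.scrapfly.io/screenshot"


def build_screenshot_url(
    api_key: str,
    target_url: str,
    image_format: str = "png",
    capture: Optional[str] = None,
    resolution: Optional[str] = None,
) -> str:
    """Build a screenshot request URL without sending it."""
    params = {"key": api_key, "url": target_url, "format": image_format}
    if capture:
        params["capture"] = capture  # e.g. a capture mode or a selector
    if resolution:
        params["resolution"] = resolution  # e.g. "1920x1080"
    return f"{SCREENSHOT_ENDPOINT}?{urlencode(params)}"


screenshot_url = build_screenshot_url(
    "YOUR_API_KEY",
    "https://example.com/pricing",
    image_format="webp",
    capture="fullpage",
    resolution="1920x1080",
)
print(screenshot_url)
```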

Instant Scraping Inputs

Beyond Google Sheets, there are many other ways to trigger instant data scraping using change detection. The beauty of Instant Data Scraping lies in its flexibility; you can set up automations to kick off data scraping whenever specific changes are detected in a variety of inputs. Here are some examples:

- Email Triggers: Automatically scrape data when specific types of emails are received, such as notifications or order confirmations.

- Slack Bots: Use a Slack bot to detect messages in a particular channel and trigger a scraping workflow.

- Webhook Triggers: Use webhooks to start scraping whenever data is pushed from another service or app.

- Cloud File Uploads: Detect new file uploads to cloud storage (like Google Drive or Dropbox) and initiate a scraping process to extract data from documents or links.

- Database Changes: Set up triggers based on changes in a database, such as new records being added or existing ones updated.

With these diverse input options, Instant Data Scraping provides a powerful, adaptable way to gather the information you need, right when you need it. This flexibility makes it ideal for anyone wanting to automate their data collection workflows without spending time on manual processes or coding.
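Whatever the input, the first step is usually the same: pull the URLs to scrape out of the incoming event. As one example, here is a Python sketch for a webhook trigger. The payload shape (`{"urls": [...]}`) and the `urls_from_webhook` name are hypothetical, since every service sends its own format.

```python
import json
from typing import List
from urllib.parse import urlparse


def urls_from_webhook(body: bytes) -> List[str]:
    """Pull scrapeable URLs out of a JSON body shaped like {"urls": [...]}."""
    payload = json.loads(body)
    urls = payload.get("urls", [])
    # Keep only http(s) links so the scraping step never sees other schemes.
    return [u for u in urls if urlparse(u).scheme in ("http", "https")]


body = b'{"urls": ["https://example.com/a", "ftp://example.com/b", "not a url"]}'
queued = urls_from_webhook(body)
print(queued)
```

The same filtering idea applies to links found in emails, Slack messages, or uploaded files.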

On-Demand Scraping

Another powerful feature of Instant Data Scraping is the ability to initiate scraping workflows on demand. This means you can start data collection whenever it suits you, rather than relying solely on change detection triggers. Here are a few options to consider:

- Google Forms Integration: Google Forms can be linked to Google Sheets to easily add new entries that trigger the scraping workflow. For example, users can submit a form with a URL, and that URL is instantly scraped using Scrapfly.

- Zapier Browser Extension: Zapier has a browser extension that allows you to manually trigger actions while browsing. With this, you can scrape data from a web page with a single click, making it convenient for quick, on-the-fly data collection.

- Make.com Mobile App: Make.com also has a mobile app that allows you to trigger workflows from your phone. This means you can initiate scraping actions from anywhere, making data collection as simple as tapping a button on your device.

These on-demand scraping options provide the flexibility to collect data exactly when you need it. Whether you’re at your desk or on the go, you can always get the data you need instantly and without any coding.

Scheduled Scraping

While Instant Data Scraping provides excellent flexibility with real-time and on-demand data collection, there are times when scheduling scraping tasks makes more sense.

Scheduled scraping is perfect for when you need consistent data at regular intervals without having to manually initiate the process. For example, you can set up scraping to happen daily, weekly, or even hourly, depending on your requirements.

Using tools like Scrapfly, you can easily set up these schedules to ensure your data is always up-to-date. You won't be locked into a single solution either; using platforms like Make.com or Zapier, you can schedule scraping workflows to pull information at your preferred times, giving you the freedom to maintain an automated yet highly controlled data collection process.

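In Make.com, scheduling is configured on the scenario's trigger, in the same place where the 15-minute polling interval was set in the Google Sheets scenario above.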

This alternative provides a balanced approach for users who require both instant access to data and regular updates, ensuring no opportunity is missed, and data accuracy is always maintained.
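To illustrate what an interval-based schedule amounts to, the Python sketch below computes the next few run times from a start time and an interval. The `next_runs` function is a hypothetical helper for illustration only. In practice, the automation platform handles this for you.

```python
from datetime import datetime, timedelta
from typing import List


def next_runs(start: datetime, interval: timedelta, count: int) -> List[datetime]:
    """Return the next `count` run times after `start`, spaced by `interval`."""
    return [start + interval * i for i in range(1, count + 1)]


start = datetime(2024, 1, 1, 9, 0)
hourly = next_runs(start, timedelta(hours=1), 3)
daily = next_runs(start, timedelta(days=1), 2)

for run in hourly:
    print(run.isoformat())
```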

Summary

To recap what we covered in this guide:

- Instant Data Scraping: A no-code concept for web scraping using accessible tools like Zapier, Make.com, and Scrapfly, enabling anyone to gather data without coding skills.

- What is Instant Data Scraping?: Explained how tools like browser extensions and Google Sheet integrations can automate web scraping.

- Google Sheets Integration: Demonstrated change detection powered scraping using Google Sheets, Scrapfly, and Make.com for an end-to-end automation workflow.

- Diverse Inputs for Scraping: Explored different trigger inputs, such as email notifications, Slack bots, database changes, webhooks, and cloud file uploads.

- On-Demand Scraping: Showed how scraping can be triggered manually using tools like Google Forms, the Zapier browser extension, or the Make.com mobile app.

- Scheduled Scraping: Discussed how scheduled scraping is a viable alternative for consistent data collection, allowing workflows to run automatically at specified intervals.

Whether you need real-time data, scheduled scraping, or on-demand access, Instant Data Scraping gives you multiple flexible options for gathering the data you need, without writing a single line of code.