Manufacturers and brands distribute their products across numerous retailers, but how do they ensure their pricing guidelines are followed? The answer is Minimum Advertised Price (MAP) monitoring.

In this article, we'll explain what MAP monitoring is and how to apply it using Python web scraping. Let's dive in!

Key Takeaways

- MAP monitoring ensures brand compliance - helps manufacturers maintain consistent pricing across retailers and protect brand reputation

- Web scraping is the core technology - automated price extraction from retailer websites enables efficient MAP compliance monitoring

- Python ecosystem provides excellent tools - httpx for HTTP requests, parsel for HTML parsing, loguru for logging, and asyncio for performance

- Asynchronous processing improves efficiency - concurrent requests significantly reduce monitoring time for large product catalogs

- Robust error handling is essential - network issues, site changes, and anti-bot measures require comprehensive error management

- Automation enables continuous monitoring - cron jobs and scheduling ensure regular compliance checks without manual intervention

- Professional services handle complex scenarios - services like Scrapfly provide anti-bot protection and proxy rotation for protected sites

- Data validation ensures accuracy - proper parsing and validation of price data prevents false compliance violations

What is MAP Monitoring?

Minimum Advertised Price monitoring (MAP monitoring) is the process of ensuring that all online listings of a vendor's products are advertised at or above the lowest price the vendor has defined. Meeting that price is known as MAP compliance.

There are different reasons for enforcing MAP pricing on online retailers, including:

- Maintaining the brand reputation and image.

- Ensuring fair competition among retailers.

- Preserving the desired profit margins.

- Maintaining consistent consumer perception.

MAP monitoring software does a fairly simple job: it requests the product page, parses the listing price, and compares it with the minimum advertised price. In other words, it resembles a web scraper for automated price monitoring!
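The request, parse, and compare steps can be sketched in a few lines. This is only an illustration: `check_listing`, `is_map_violation`, and the two stand-in helpers `fake_fetch` and `fake_parse` are hypothetical names, and the stand-ins replace a real HTTP client and HTML parser so the snippet runs offline.

```python
from typing import Callable, Optional


def is_map_violation(advertised_price: float, map_price: float) -> bool:
    """Return True when the advertised price is below the MAP."""
    return advertised_price < map_price


def check_listing(
    url: str,
    map_price: float,
    fetch_html: Callable[[str], str],
    parse_price: Callable[[str], Optional[float]],
) -> Optional[bool]:
    """Request a page, parse its price, and compare it with the MAP.

    Returns None when no price could be parsed from the page.
    """
    html = fetch_html(url)
    price = parse_price(html)
    if price is None:
        return None
    return is_map_violation(price, map_price)


# Stand-ins for illustration only (assumptions, not part of any library)
def fake_fetch(url: str) -> str:
    return '<div class="price">3.49</div>'


def fake_parse(html: str) -> Optional[float]:
    # naive extraction of the text between the first ">" and the next "<"
    start = html.find(">") + 1
    end = html.find("<", start)
    try:
        return float(html[start:end])
    except ValueError:
        return None


result = check_listing("https://example.com/product/1", 4.0, fake_fetch, fake_parse)
print(result)  # True, as 3.49 is below the MAP of 4.0
```

Passing the fetch and parse steps in as functions keeps the comparison logic independent of any particular HTTP client or HTML parser.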

Project Setup

In this guide, we'll build a minimum advertised price monitoring tool using Python with the following packages:

- httpx to request the product web pages and retrieve their HTML source.

- parsel to parse the product prices from the HTML using XPath and CSS selectors.

- loguru to log our MAP monitoring tool's activity to the terminal.

- asyncio to increase the web scraping speed using asynchronous execution.

Since asyncio is part of Python's standard library, we'll only have to install the other packages using the following pip command:

pip install httpx parsel loguru

Note that it's safe to replace httpx with any other HTTP client, such as requests. As for parsel, another alternative is BeautifulSoup.

Create a MAP Monitoring Tool

To monitor the minimum advertised pricing data, we'll split our logic into three parts:

- Web scraper to extract the product prices.

- Price comparator to check for MAP compliance violations.

- Scheduler to automate the above steps in specified intervals.

For a similar price monitoring tool, refer to our guide on tracking historical prices.

Web Scraping Prices

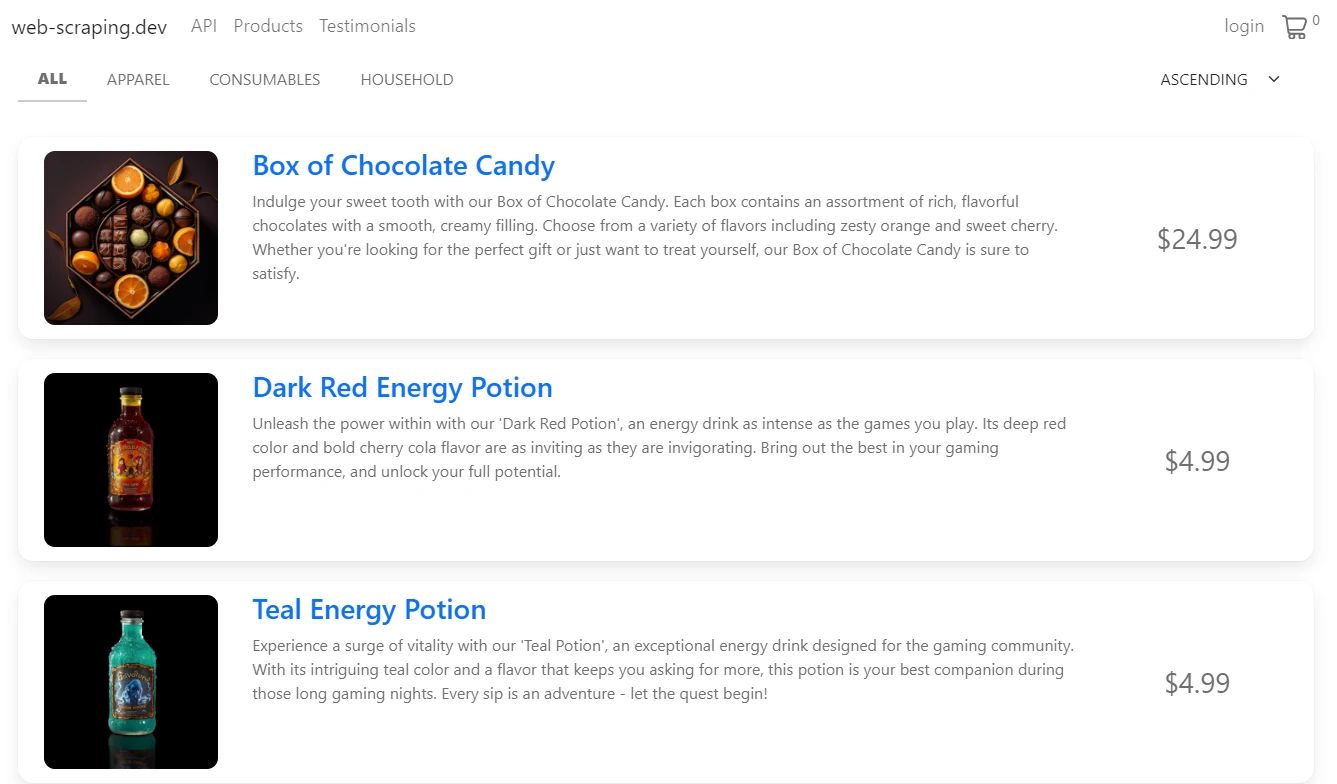

In this MAP monitoring guide, we'll use the products on web-scraping.dev/products as our scraping target:

To start, we'll create the parsing logic to parse the prices from the HTML:

import random
from typing import Dict, List

from httpx import Response
from parsel import Selector


def parse_products(response: Response) -> List[Dict]:
    """parse products from HTML"""
    selector = Selector(response.text)
    data = []
    for product in selector.xpath("//div[@class='row product']"):
        price = float(product.xpath(".//div[@class='price']/text()").get())
        # mimic a random price drop on roughly a quarter of the products
        price = round(random.uniform(1, 4), 2) if random.random() > 0.75 else price
        # the class name must be quoted to be matched as a string
        link = product.xpath(".//div[contains(@class, 'description')]/h3/a/@href").get()
        data.append({
            "id": link.split("/product/")[-1],
            "link": link,
            "price": price
        })
    return data

Here, we define the parse_products function to:

- Iterate over product boxes on the HTML and extract the ID, link, and price.

- Mimic random price changes, as our target web page data is static, and dynamic prices are required to check against MAP policy violations.
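The parsing above assumes the price text is always present and already a clean number. On real retailer pages, the text often carries currency symbols or is missing altogether, which would make `float()` raise an error. Below is a minimal sketch of a validation helper, assuming US-style formatting (comma as thousands separator, dot as decimal separator). The `clean_price` name is hypothetical and not part of parsel or httpx.

```python
import re
from typing import Optional


def clean_price(raw: Optional[str]) -> Optional[float]:
    """Extract a positive price from raw text, or return None if there isn't one.

    Assumes US-style numbers such as "1,299.99".
    """
    if not raw:
        return None
    # first run of digits, optional thousands commas, optional decimal part
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None
    value = float(match.group().replace(",", ""))
    # treat zero as "no valid price" to avoid false violations
    return value if value > 0 else None


print(clean_price("$1,299.99"))     # 1299.99
print(clean_price(" 24.99 USD "))   # 24.99
print(clean_price("Out of stock"))  # None
```

Products for which the helper returns None can be skipped or logged instead of being reported as MAP violations.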

Next, we'll utilize the parsing logic while requesting the web page to extract the product data:

import asyncio
import json
from typing import Dict, List

from httpx import AsyncClient, Response
from loguru import logger as log

# initialize an async httpx client with browser-like headers
client = AsyncClient(
    headers={
        "Accept-Language": "en-US,en;q=0.9",
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36",
    }
)


def parse_products(response: Response) -> List[Dict]:
    ...  # rest of the function code


async def scrape_prices(url: str) -> List[Dict]:
    """scrape product prices from a given URL"""
    # create a list of page requests to send concurrently
    to_scrape = [
        client.get(url + f"?page={page_number}")
        for page_number in range(1, 5 + 1)
    ]
    data = []
    log.info(f"scraping prices from {len(to_scrape)} pages")
    # parse each response as soon as it arrives
    for response in asyncio.as_completed(to_scrape):
        response = await response
        data.extend(parse_products(response))
    return data


# run the code
async def run():
    product_data = await scrape_prices(
        url="https://web-scraping.dev/products"
    )
    print(json.dumps(product_data, indent=2, ensure_ascii=False))


if __name__ == "__main__":
    asyncio.run(run())

Here, we initialize an httpx client with basic headers to mimic a normal browser and define a scrape_prices function. It adds the page URLs to a scraping list and then requests them concurrently, parsing each page response with parse_products.
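The concurrent requests above assume every page responds on the first attempt. As a rough sketch of the error handling mentioned in the key takeaways, the snippet below retries failed requests with exponential backoff and caps the number of simultaneous requests with a semaphore. The names `fetch_with_retries`, `fetch_all`, and `flaky_fetch` are hypothetical, and `flaky_fetch` simulates a network error in place of a real `client.get` call so the example runs offline.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List


async def fetch_with_retries(
    fetch: Callable[[str], Awaitable[str]],
    url: str,
    retries: int = 3,
    base_delay: float = 0.01,
) -> str:
    """Call fetch(url), retrying with exponential backoff when it raises."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    for attempt in range(1, retries + 1):
        try:
            return await fetch(url)
        except Exception:
            # in real code, catch a narrower error type (e.g. httpx.HTTPError)
            if attempt == retries:
                raise
            await asyncio.sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError("unreachable")


async def fetch_all(
    fetch: Callable[[str], Awaitable[str]],
    urls: List[str],
    max_concurrency: int = 2,
) -> List[str]:
    """Fetch all URLs, running at most max_concurrency requests at a time."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(url: str) -> str:
        async with semaphore:
            return await fetch_with_retries(fetch, url)

    # gather returns results in the same order as the input URLs
    return await asyncio.gather(*(guarded(url) for url in urls))


# Simulated fetcher for illustration: fails on the first attempt per URL
attempts: Dict[str, int] = {}


async def flaky_fetch(url: str) -> str:
    attempts[url] = attempts.get(url, 0) + 1
    if attempts[url] == 1:
        raise ConnectionError("simulated network error")
    return f"<html>{url}</html>"


urls = [f"https://web-scraping.dev/products?page={n}" for n in range(1, 4)]
pages = asyncio.run(fetch_all(flaky_fetch, urls))
print(len(pages))  # 3
```

With or without such a wrapper, scrape_prices returns the same list of product dictionaries.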

Here's a sample output of the results we got:

[
  {
    "id": "16",
    "link": "https://web-scraping.dev/product/16",
    "price": 1.87
  },
  {
    "id": "17",
    "link": "https://web-scraping.dev/product/17",
    "price": 4.99
  },
  {
    "id": "18",
    "link": "https://web-scraping.dev/product/18",
    "price": 4.99
  },
]

The above results include products that vary from the minimum advertised price. However, the price deviations aren't detected yet. Let's implement this logic!

Detecting Outlier Prices

The next step of the MAP monitoring process is detecting prices that fall below the minimum. This requires the minimum advertised price itself to be known, as it serves as the threshold to check against.

In our example project, we'll use a fixed price threshold and check the scraped product prices against it:

import json
from time import gmtime, strftime
from typing import Dict, List

from loguru import logger as log


def check_map_compliance(data: List[Dict], price_threshold: float):
    """compare scraped prices with a price threshold"""
    outliers = []
    for item in data:
        if item["price"] < price_threshold:
            # percentage by which the price falls below the threshold
            item["change"] = f"{round((price_threshold - item['price']) / price_threshold * 100, 2)}%"
            item["data"] = strftime("%Y-%m-%d %H:%M:%S", gmtime())
            log.debug(f"Item with ID {item['id']} and URL {item['link']} violates the MAP compliance")
            outliers.append(item)
    # save the violating products to a JSON file
    if outliers:
        with open("outliers.json", "w", encoding="utf-8") as file:
            json.dump(outliers, file, indent=2)

Here, we define a check_map_compliance function. It iterates over an array of data to compare the product's price against the minimum advertised price, determined by the price_threshold parameter.

The next step is utilizing the above function on the scraped data:

import asyncio
from typing import Dict, List

from loguru import logger as log


async def scrape_prices(url: str) -> List[Dict]:
    ...  # same as defined earlier


def check_map_compliance(data: List[Dict], price_threshold: float):
    ...  # same as defined earlier


async def map_monitor(url: str, price_threshold: float):
    log.info("======= MAP monitor has started =======")
    data = await scrape_prices(url=url)
    check_map_compliance(data, price_threshold)
    log.success("======= MAP monitor has finished =======")


# run the code
async def run():
    await map_monitor(
        url="https://web-scraping.dev/products",
        price_threshold=4
    )


if __name__ == "__main__":
    asyncio.run(run())

Running the above map_monitor function scrapes the products and compares their prices with the minimum price threshold. It also saves the products that violate the MAP policy to the outliers.json file, along with the change percentage and capture date:

[
  {
    "id": "10",
    "link": "https://web-scraping.dev/product/10",
    "price": 2.08,
    "change": "48.0%",
    "data": "2024-04-30 16:38:23"
  },
  ....
]
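The example applies a single threshold to every product. In practice, each product usually has its own minimum advertised price. A minimal sketch of that variation follows, assuming the MAP values are available as a dictionary keyed by product ID. The `find_violations` function and the `map_prices` values are made up for illustration.

```python
from typing import Dict, List


def find_violations(products: List[Dict], map_prices: Dict[str, float]) -> List[Dict]:
    """Return products advertised below their own MAP.

    Products without a known MAP are skipped rather than flagged.
    """
    violations = []
    for product in products:
        map_price = map_prices.get(product["id"])
        if map_price is None:
            continue
        if product["price"] < map_price:
            below_by = round((map_price - product["price"]) / map_price * 100, 2)
            violations.append({
                **product,
                "map_price": map_price,
                "below_map_percent": below_by,
            })
    return violations


# Hypothetical data for illustration
scraped = [
    {"id": "10", "price": 2.08},
    {"id": "16", "price": 4.99},
    {"id": "17", "price": 4.99},
]
map_prices = {"10": 4.0, "16": 4.99, "17": 5.5}

violations = find_violations(scraped, map_prices)
print([item["id"] for item in violations])  # ['10', '17']
```

A price exactly equal to the MAP, as with product 16 here, is compliant, since only prices strictly below the minimum count as violations.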

MAP Monitoring Scheduling

The last feature of our minimum advertised price monitoring tool is scheduling. We'll use asyncio to execute the final map_monitor function at a fixed interval, similar to a cron job:

# ....

async def map_monitor(url: str, price_threshold: int):
    log.info("======= MAP monitor has started =======")
    data = await scrape_prices(url=url)
    check_map_compliance(data, price_threshold)
    log.success("======= MAP monitor has finished =======")


async def main():
    while True:
        # Run the script every 3 hours
        await map_monitor(
            url="https://web-scraping.dev/products",
            price_threshold=4
        )
        await asyncio.sleep(3 * 60 * 60)


if __name__ == "__main__":
    asyncio.run(main())

The above snippet runs our MAP monitoring tool every three hours, logging the outlier products and saving them to a file.
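One caveat of a plain `while True` loop is that a single unhandled error, such as a network failure, stops all future runs. The sketch below shows one possible way to isolate failures between runs. `run_periodically` and its `max_runs` parameter are hypothetical additions (the latter mainly so the loop can be tested), and `demo_job` stands in for `map_monitor`.

```python
import asyncio
from typing import Awaitable, Callable, List, Optional


async def run_periodically(
    job: Callable[[], Awaitable[None]],
    interval_seconds: float,
    max_runs: Optional[int] = None,
) -> int:
    """Run job repeatedly, sleeping between runs; return the number of runs.

    A failing run is reported and does not stop the following runs.
    """
    runs = 0
    while max_runs is None or runs < max_runs:
        try:
            await job()
        except Exception as error:
            print(f"monitoring run failed: {error!r}")
        runs += 1
        # skip the sleep after the final run
        if max_runs is None or runs < max_runs:
            await asyncio.sleep(interval_seconds)
    return runs


# Stand-in job for illustration: the second run fails
calls: List[int] = []


async def demo_job() -> None:
    calls.append(len(calls))
    if len(calls) == 2:
        raise RuntimeError("simulated scrape failure")


completed = asyncio.run(run_periodically(demo_job, interval_seconds=0.01, max_runs=3))
print(completed, len(calls))  # 3 3
```

For the monitor itself, the job would wrap the map_monitor call and the interval would be `3 * 60 * 60` seconds with `max_runs` left as None.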

Full MAP Monitoring Code

Here is what the full minimum advertised price monitoring tool should look like:

import asyncio
import json
import random
from time import gmtime, strftime
from typing import Dict, List

from httpx import AsyncClient, Response
from loguru import logger as log
from parsel import Selector

# initialize an async httpx client with browser-like headers
client = AsyncClient(
    headers={
        "Accept-Language": "en-US,en;q=0.9",
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36",
    }
)


def parse_products(response: Response) -> List[Dict]:
    """parse products from HTML"""
    selector = Selector(response.text)
    data = []
    for product in selector.xpath("//div[@class='row product']"):
        price = float(product.xpath(".//div[@class='price']/text()").get())
        # mimic a random price drop on roughly a quarter of the products
        price = round(random.uniform(1, 4), 2) if random.random() > 0.75 else price
        # the class name must be quoted to be matched as a string
        link = product.xpath(".//div[contains(@class, 'description')]/h3/a/@href").get()
        data.append({
            "id": link.split("/product/")[-1],
            "link": link,
            "price": price
        })
    return data


async def scrape_prices(url: str) -> List[Dict]:
    """scrape product prices from a given URL"""
    # create a list of page requests to send concurrently
    to_scrape = [
        client.get(url + f"?page={page_number}")
        for page_number in range(1, 5 + 1)
    ]
    data = []
    log.info(f"scraping prices from {len(to_scrape)} pages")
    # parse each response as soon as it arrives
    for response in asyncio.as_completed(to_scrape):
        response = await response
        data.extend(parse_products(response))
    return data


def check_map_compliance(data: List[Dict], price_threshold: float):
    """compare scraped prices with a price threshold"""
    outliers = []
    for item in data:
        if item["price"] < price_threshold:
            # percentage by which the price falls below the threshold
            item["change"] = f"{round((price_threshold - item['price']) / price_threshold * 100, 2)}%"
            item["data"] = strftime("%Y-%m-%d %H:%M:%S", gmtime())
            log.debug(f"Item with ID {item['id']} and URL {item['link']} violates the MAP compliance")
            outliers.append(item)
    # save the violating products to a JSON file
    if outliers:
        with open("outliers.json", "w", encoding="utf-8") as file:
            json.dump(outliers, file, indent=2)


async def map_monitor(url: str, price_threshold: float):
    log.info("======= MAP monitor has started =======")
    data = await scrape_prices(url=url)
    check_map_compliance(data, price_threshold)
    log.success("======= MAP monitor has finished =======")


# run the code
async def main():
    while True:
        # Run the script every 3 hours
        await map_monitor(
            url="https://web-scraping.dev/products",
            price_threshold=4
        )
        await asyncio.sleep(3 * 60 * 60)


if __name__ == "__main__":
    asyncio.run(main())

The retrieved MAP monitoring data provides several insights that can be visualized as charts. Refer to our guide on observing e-commerce trends for a quick introduction to visualizing web scraping results.

Powering Up With ScrapFly

Monitoring minimum advertised prices can require requesting hundreds of product pages. This is where a common limitation is encountered: web scraping blocking.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

Here's how to scrape for MAP monitoring using ScrapFly without getting blocked. All we have to do is enable the asp parameter and choose a proxy pool and country:

# standard web scraping code
import httpx
from parsel import Selector

response = httpx.get("target web page URL")
selector = Selector(response.text)

# in ScrapFly becomes this 👇
from scrapfly import ScrapeConfig, ScrapflyClient

# replaces your HTTP client (httpx in this case)
scrapfly = ScrapflyClient(key="Your ScrapFly API key")

response = scrapfly.scrape(ScrapeConfig(
    url="target web page URL",
    asp=True,  # enable the anti scraping protection to bypass blocking
    country="US",  # set the proxy location to a specific country
    proxy_pool="public_residential_pool",  # select a proxy pool
    render_js=True  # enable rendering JavaScript (like headless browsers) to scrape dynamic content if needed
))

# use the built in Parsel selector
selector = response.selector

# access the HTML content
html = response.scrape_result['content']

FAQ

To wrap up this guide on MAP price monitoring, let's have a look at some frequently asked questions.

What is MAP compliance?

Minimum advertised price compliance is a practice in which manufacturers set the lowest price at which online retailers may advertise a product. Retailers remain free to sell at any price they choose, but they may not advertise a price below the specified minimum.

How does MAP monitoring work?

MAP price monitoring involves comparing retailer prices with the minimum advertised price to check for MAP enforcement violations. Such a process can be automated with web scraping by requesting listing pages and extracting their price data.

What is the difference between price tracking and MAP monitoring?

Price tracking means capturing and recording price changes over time. MAP monitoring, on the other hand, isn't concerned with the exact change but with whether the price has dropped below the minimum advertised price.

Summary

In this guide, we explored MAP monitoring. We started by defining what the minimum advertised price is and why it's monitored. Then, we went through a step-by-step tutorial on monitoring MAP violations using Python by:

- Scraping product listing pages for their price data.

- Comparing the product prices with the minimum advertised ones.

- Logging products that violate MAP compliance.

- Automating the MAP monitoring process as a cron job.