Large Language Models (LLMs) are revolutionizing how we interact with technology, powering everything from chatbots to content generation tools. But understanding how to make these models work for you requires knowing the difference between training, fine-tuning, and Retrieval-Augmented Generation (RAG).

This article will break down each concept, highlighting their strengths, weaknesses, and common use cases, providing a comprehensive guide to harnessing the power of LLMs.

Key Takeaways

Master how are llms trained with fine-tuning, RAG techniques, and model optimization for comprehensive AI application development.

- Use fine-tuning for customizing pre-trained models with domain-specific data and task optimization

- Implement RAG systems for real-time knowledge retrieval and context-aware response generation

- Configure model quantization and parameter-efficient fine-tuning for resource-constrained environments

- Apply advanced data preprocessing and training techniques for optimal model performance

- Use specialized tools like ScrapFly for automated data collection and preprocessing for LLM training

- Implement proper model evaluation and deployment strategies for production AI applications

What is LLM Training?

LLM training is the process of teaching a large language model to understand and generate human language. This process involves feeding the model massive amounts of text data and using algorithms to adjust the model's parameters so that it can accurately predict the next word in a sequence.

Key Components of LLM Training

Understanding the core elements of LLM training is crucial for grasping how these powerful models learn and function. Let's break down the key components:

Pretraining:

During pretraining, the model is exposed to large-scale datasets such as Common Crawl, Wikipedia, and other publicly available text sources. This phase helps the model learn grammar, facts, and reasoning abilities.Reinforcement Learning with Human Feedback (RLHF):

After pretraining, the model is fine-tuned using human feedback to align its outputs with desired behaviors. This step ensures the model generates more accurate and contextually appropriate responses.

Why Full LLM Training Is Not Feasible

Training an LLM from scratch provides control and customization but is highly impractical for most organizations due to:

- High Costs: Requires thousands of GPUs/TPUs, running for weeks, costing millions in compute power.

- Massive Data Needs: Training requires terabytes of high-quality text, posing challenges in collection, cleaning, and legal compliance.

- Infrastructure Demands: Needs specialized AI infrastructure with high-speed networking, scalable storage, and cloud resources.

- Expertise Gap: Requires AI researchers, ML engineers, and data scientists, making in-house development unrealistic for most companies.

Due to these barriers, most businesses opt for fine-tuning pre-trained models instead of full-scale training.

Now that you understand the fundamentals of full LLM training, let's explore the more practical approach of fine-tuning.

What is Fine-Tuning?

Fine-tuning LLM is the process of adapting a pretrained LLM to perform specific tasks or cater to particular domains. Unlike full training, fine-tuning requires significantly fewer resources and can be done with smaller, task-specific datasets.

Fine-Tuning: A Cost-Effective Alternative

Fine-tuning is a cost-effective way to customize large language models. However, it is only possible with open-source models like LLaMA and deepseek, as closed-source models generally do not allow fine-tuning. This makes it an attractive option, enabling organizations to tailor AI models to their needs without the high costs of building one from scratch.

Methods of Fine-Tuning

There are different techniques used for fine-tuning an LLM. Each has its own advantages and disadvantages.

LoRA (Low-Rank Adaptation):

LoRA is a lightweight fine-tuning method that modifies only a small subset of the model’s parameters. This approach is cost-effective and efficient, making it ideal for organizations with limited resources.Supervised Fine-Tuning:

In this method, the model is trained on custom datasets tailored to specific tasks. For example, a company might fine-tune an LLM using its internal documentation to create an AI assistant for employees.

Common Use Cases

Fine-tuning unlocks various practical applications across different industries. Let's explore some common use cases where fine-tuning proves to be particularly beneficial.

- AI Assistants: Fine-tuning LLMs with company-specific data to create personalized virtual assistants.

- Domain-Specific Chatbots: Adapting models for industries like legal, medical, or customer support to provide accurate and relevant responses.

Now that you understand fine-tuning, let’s see how Retrieval-Augmented Generation (RAG) can further enhance LLM capabilities.

What is RAG (Retrieval-Augmented Generation)?

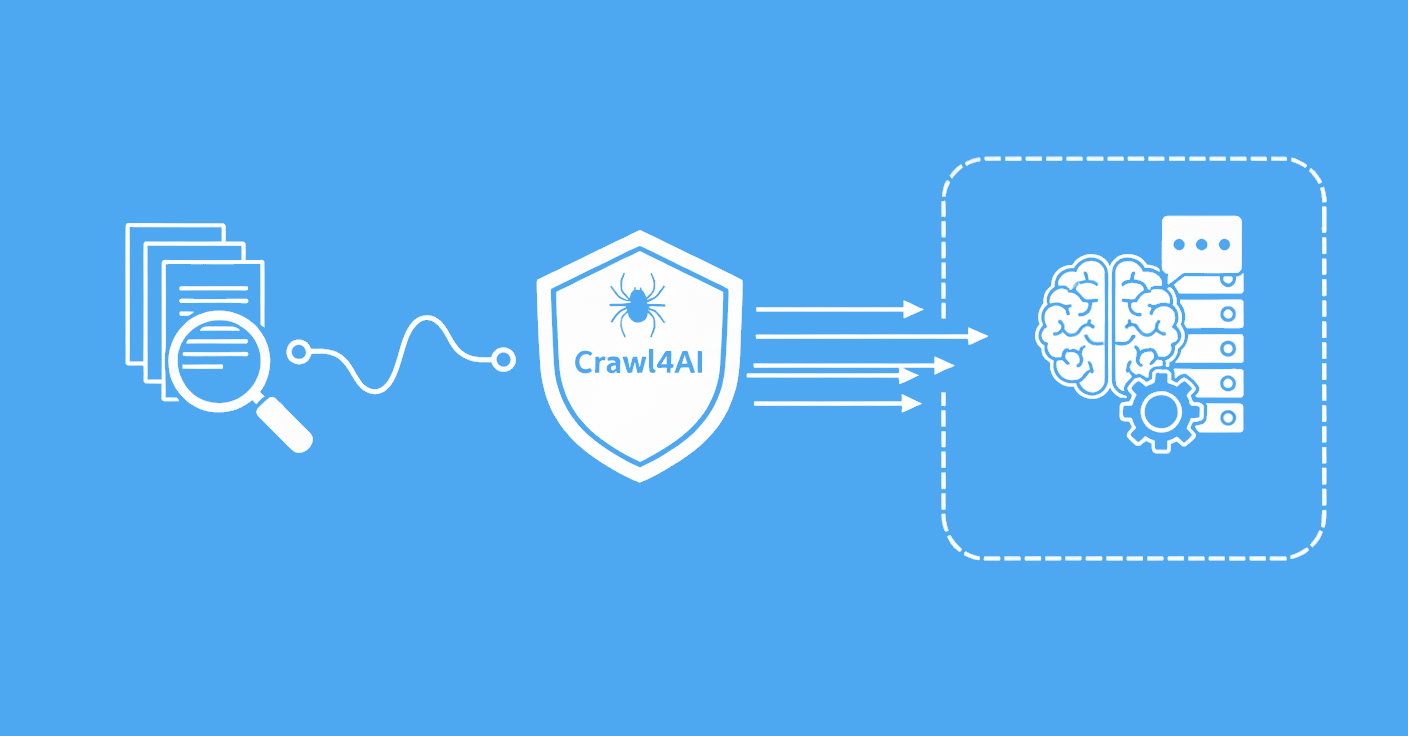

Retrieval-Augmented Generation (RAG) is an advanced technique that enhances Large Language Models (LLMs) by dynamically retrieving external data at runtime. Unlike fine-tuning, which modifies the model’s parameters, RAG keeps the model unchanged and instead augments prompts with relevant, real-time information.

How RAG Works

Instead of relying solely on pre-trained knowledge, RAG retrieves external information such as documents, web pages, or databases before generating a response. This process allows LLMs to stay up-to-date, context-aware, and factually accurate without requiring periodic model updates.

Why RAG is Different

RAG stands out from other LLM enhancement techniques due to its ability to dynamically fetch information rather than relying on static training data. Key advantages include:

- Real-Time Knowledge Updates: RAG enables LLMs to access up-to-date data without retraining, making it ideal for dynamic industries like news, finance, and healthcare.

- No Need for Extensive Fine-Tuning: Since RAG works by retrieving external data, it eliminates the need for resource-intensive fine-tuning processes.

- Flexible and Scalable: Businesses can connect RAG to various external data sources, such as APIs, document repositories, or web scrapers, to tailor responses to their needs.

- Overcomes Training Limitations: Traditional LLMs have a knowledge cutoff and token constraints; RAG mitigates these issues by fetching only the most relevant information when needed.

Example Use Cases

RAG is particularly useful in scenarios where real-time information retrieval is crucial. Some common applications include:

- AI Chatbots with Live Data: Chatbots enhanced with RAG can fetch the latest news, stock prices, or support documentation, ensuring users receive up-to-date responses.

- Legal and Medical Research Assistants: RAG-powered assistants can pull information from legal databases or medical journals to provide context-specific insights.

- Web-Scraped Data Integration: Businesses can combine web scraping with RAG to integrate external data into AI responses, ensuring the most relevant and recent information is always available.

Now, let’s explore how to seamlessly integrate web data into your LLM workflows using RAG and web scraping techniques.

Enhancing LLMs with RAG and Web Scraping Using ScrapFly

It's common for web scraping tools to send HTTP requests to web pages in order to retrieve their data as HTML. However, utilizing web scraping as the RAG data source, we have to extract the web data in a format that LLMs understand, either as Text or Markdown.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

Here's how to use web scraping for RAG models using OpenAI. First, get your OpenAI key and use the following code:

import os

from llama_index.readers.web import ScrapflyReader

from llama_index.core import VectorStoreIndex

scrapfly_reader = ScrapflyReader(

api_key="Your Scrapfly API key",

ignore_scrape_failures=True,

)

# Load documents from URLs as markdown

documents = scrapfly_reader.load_data(

urls=["https://web-scraping.dev/products"]

)

# Set the OpenAI key as a environment variable

os.environ['OPENAI_API_KEY'] = "Your OpenAI Key"

# Create an index store for the documents

index = VectorStoreIndex.from_documents(documents)

# Create the RAG engine with using the index store

query_engine = index.as_query_engine()

# Submit a query

response = query_engine.query("What is the flavor of the dark energy potion?")

print(response)

"The flavor of the dark energy potion is bold cherry cola."

Here, we start by creating a VectorStoreIndex, a component required by the RAG model. It splits the documents into a set of chunks, sets the relationship between their text, and saves them into memory.

You can learn more about How to Power-Up LLMs with Web Scraping and RAG in our dedicated article:

How to Power-Up LLMs with Web Scraping and RAG

In depth look at how to use LLM and web scraping for RAG applications using either LlamaIndex or LangChain.

FAQ

Below are quick answers to common questions about LLM training, fine-tuning, and RAG.

What is the difference between fine-tuning and RAG?

Fine-tuning modifies the LLM's parameters using custom data, while RAG enhances LLM responses with real-time, external data without changing the model itself. Fine-tuning is used to modify the LLM's behavior, while RAG modifies the knowledge.

When should I use LoRA for fine-tuning?

Use LoRA when you have limited computational resources, need to fine-tune quickly, and want to minimize the number of trainable parameters.

What are the limitations of RAG?

RAG cannot deeply modify the LLM's behavior. For knowledge modification, it's primarily limited by the token size constraints of the LLM model, which restricts the amount of external data that can be incorporated into a single prompt at any given time.

Summary

This article provided a comprehensive overview of three critical techniques for leveraging Large Language Models (LLMs): full training, fine-tuning, and Retrieval-Augmented Generation (RAG). Here's a recap of the key takeaways:

Full LLM Training: While powerful, it's often impractical for most organizations due to high costs, massive data requirements, and specialized infrastructure.

Fine-Tuning (with LoRA): A more accessible approach, enabling you to customize pre-trained LLMs for specific tasks with significantly fewer resources. LoRA, in particular, offers a lightweight and efficient method.

Retrieval-Augmented Generation (RAG): A dynamic technique that enhances LLM responses with real-time data, keeping knowledge current without the need for retraining.

Here's a handy table to illustrate this:

| Attribute | Fine-Tuning | Retrieval-Augmented Generation (RAG) | Training from Scratch |

|---|---|---|---|

| Cost | Moderate | Low | Very High |

| Resources | Requires access to GPUs/TPUs, but significantly fewer than full training. | Minimal computational resources needed. | Requires thousands of GPUs/TPUs, running for weeks. |

| Data | Needs task-specific datasets, which are smaller and more manageable. | Uses existing knowledge bases or documents, no need for extensive datasets. | Needs terabytes of high-quality text data. |

| Infrastructure | Requires some AI infrastructure but less demanding than full training. | Basic infrastructure suffices. | Requires specialized AI infrastructure with high-speed networking and scalable storage. |

| Expertise | Requires knowledge in machine learning and model fine-tuning. | Easier to implement with less specialized knowledge. | Requires a team of AI researchers, ML engineers, and data scientists. |

Understanding the nuances of each approach full training, fine-tuning, and RAG is crucial for effectively and efficiently harnessing the power of LLMs in your projects.