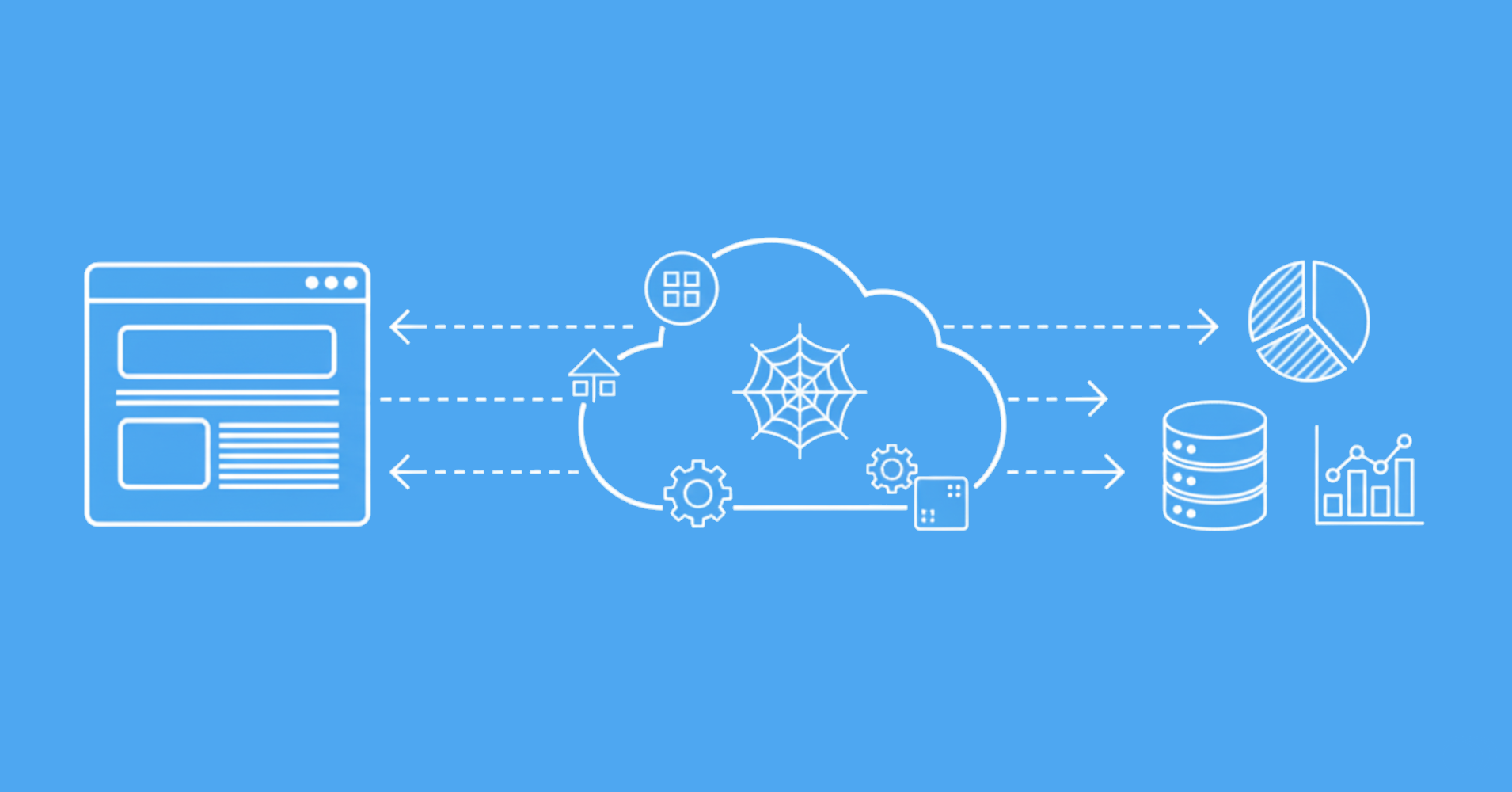

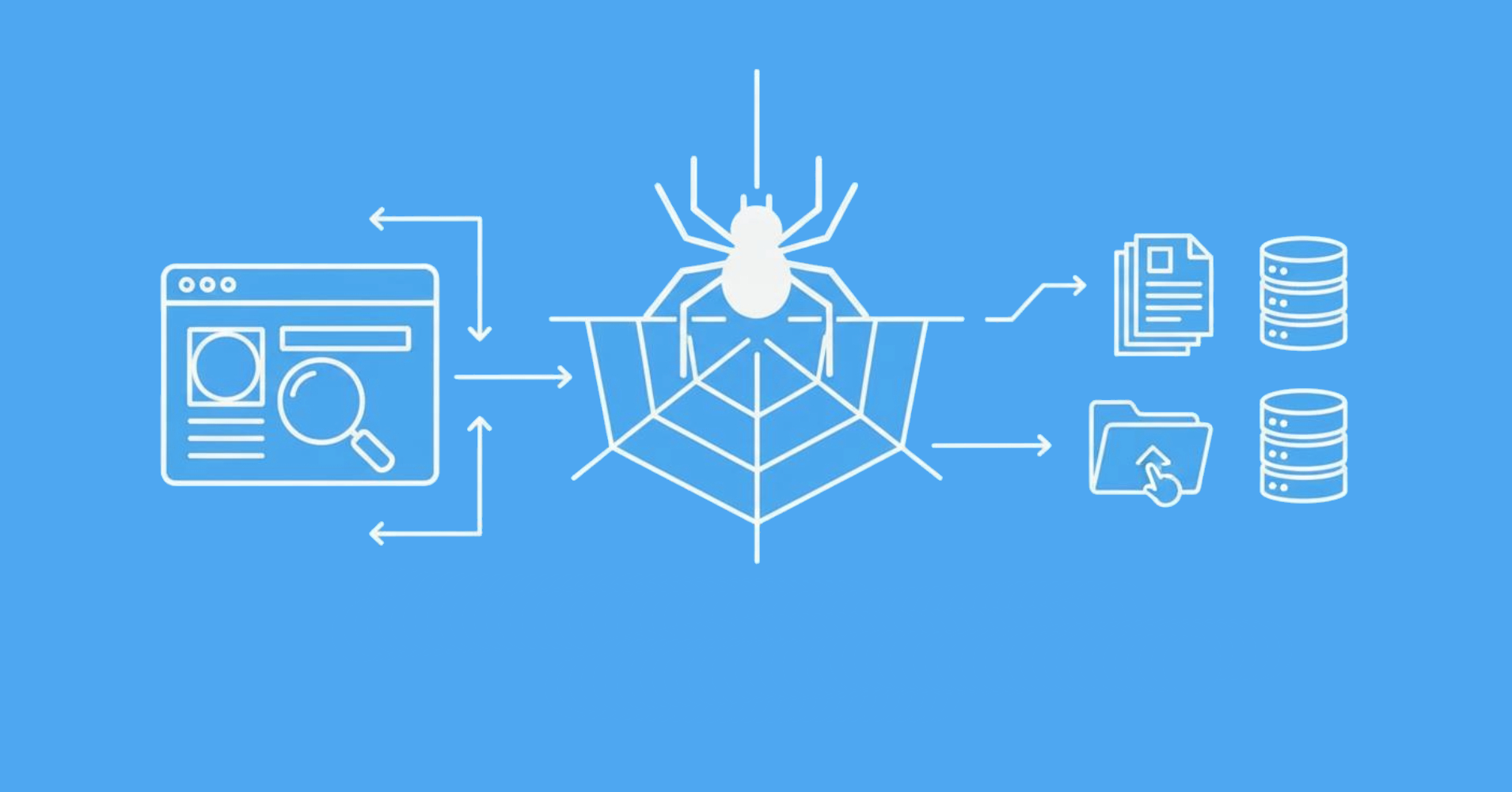

Web scraping tools are the building blocks of any web scraping project. They provide the functionality needed to send requests, parse HTML, extract data from websites, and store the results.

Because scraping is a complex and varied task, many tools are available, each with its own strengths and weaknesses. Some are designed for specific tasks, while others are more general-purpose, so the right choice depends on the requirements of the scraping project.

See our web scraping tool highlights below 👇