HTML tables are common across the web and present data in rows and columns, much like a data frame. In this guide, we'll explain how to scrape an HTML table using BeautifulSoup as the parsing library, working through a real-life example. Let's get started!

Setup

Before we start, let's ensure the required libraries are installed. First, let's install the BeautifulSoup package using the pip terminal command:

pip install beautifulsoup4

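As for the HTTP client, we'll be using the requests Python library. It isn't part of the standard library, so install it with pip install requests. The examples in this guide also use the lxml parser, which can be installed with pip install lxml. The HTTP client can be replaced with any other one, such as httpx.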
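To illustrate how the HTTP client can be swapped, here is a minimal sketch. The fetch_html helper is hypothetical (it isn't part of any library); it only assumes that the client's response object exposes .text and .raise_for_status(), which holds for both requests and httpx responses.

```python
from typing import Callable


def fetch_html(url: str, get: Callable) -> str:
    """Fetch a page with any client whose response has .text and .raise_for_status()."""
    response = get(url)
    # Fail early on 4xx/5xx responses instead of parsing an error page
    response.raise_for_status()
    return response.text


# Usage (needs network access and the chosen client installed):
#   import requests
#   html = fetch_html("https://web-scraping.dev/product/1", requests.get)
#
#   import httpx
#   html = fetch_html("https://web-scraping.dev/product/1", httpx.get)
```

Passing the client's get function as an argument keeps the rest of the scraping code independent of the client in use.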

Retrieve Table Data

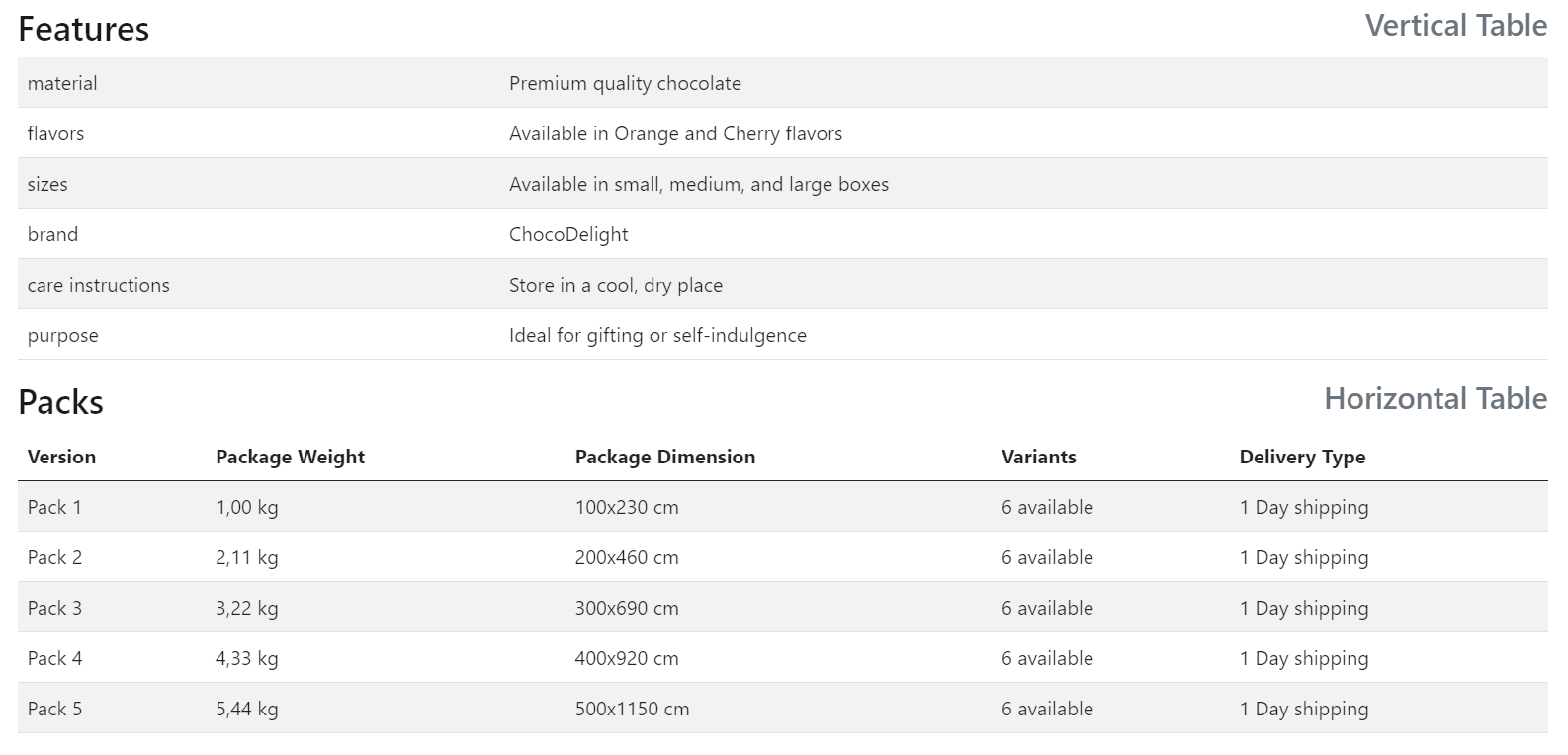

o start, let's have a look at our target table. We'll be using the target table classes web-scraping.dev/product/1:

We'll request the above page to retrieve the table data available in the HTML:

from bs4 import BeautifulSoup
import requests

response = requests.get("https://web-scraping.dev/product/1")
html = response.text

# Create the soup object
soup = BeautifulSoup(html, "lxml")

Above, we start by requesting the target web page to retrieve the HTML that contains the tables. Then, we use BeautifulSoup to create a parser object.
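The parser object can also be tried out without a network request. The sketch below is an illustration only: the SAMPLE_HTML snippet is a hypothetical stand-in for the real page, and it uses Python's built-in "html.parser" instead of "lxml" so that no extra install is needed.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the HTML returned by the real page
SAMPLE_HTML = """
<html><body>
  <table class="table-product">
    <tr><th>Version</th><th>Package Weight</th></tr>
    <tr><td>Pack 1</td><td>1,00 kg</td></tr>
  </table>
</body></html>
"""

# "html.parser" ships with Python; "lxml" is a separate install
soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# The soup object exposes the parsed document for searching
tables = soup.find_all("table")
print(len(tables))             # 1
print(tables[0].get("class"))  # ['table-product']
```

Note that the class attribute comes back as a list, since an element can carry several classes.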

Parse HTML Tables

The BeautifulSoup package can select HTML elements by tag name and attributes through its find methods, as well as through CSS selectors. Here, we'll use find_all to target the table by its class, and then iterate over its rows:

from bs4 import BeautifulSoup
import requests

response = requests.get("https://web-scraping.dev/product/1")
html = response.text
soup = BeautifulSoup(html, "lxml")

# First, select the desired table element (the 2nd one on the page)
table = soup.find_all('table', {'class': 'table-product'})[1]

headers = []
rows = []
for i, row in enumerate(table.find_all('tr')):
    if i == 0:
        # The first row holds the column headers (<th> cells)
        headers = [el.text.strip() for el in row.find_all('th')]
    else:
        # Every other row holds the data (<td> cells)
        rows.append([el.text.strip() for el in row.find_all('td')])

Above, we first use the find_all method to find all table elements with the table-product class and select the second one on the page. Then, we find all the table rows and iterate through them, extracting the text content of each cell. As for the i == 0 condition, we use it to extract the header cells, as the header is the first row of the table.
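The same extraction can be sketched with CSS selectors through BeautifulSoup's select method. This is an alternative to the find_all approach, not a replacement for it. The SAMPLE_HTML below is a hypothetical, trimmed-down stand-in for the real page (two tables sharing the table-product class), and "html.parser" is used so the snippet runs without lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in: two tables with the same class, as on the target page
SAMPLE_HTML = """
<table class="table-product">
  <tr><th>Feature</th><th>Value</th></tr>
  <tr><td>Example</td><td>Placeholder</td></tr>
</table>
<table class="table-product">
  <tr><th>Version</th><th>Package Weight</th></tr>
  <tr><td>Pack 1</td><td>1,00 kg</td></tr>
  <tr><td>Pack 2</td><td>2,11 kg</td></tr>
</table>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# CSS selector equivalent of find_all('table', {'class': 'table-product'})
table = soup.select("table.table-product")[1]

# Header cells are <th> elements; data rows are the rows containing <td> cells
headers = [th.get_text(strip=True) for th in table.select("tr th")]
rows = [
    [td.get_text(strip=True) for td in tr.select("td")]
    for tr in table.select("tr")
    if tr.select("td")
]

print(headers)  # ['Version', 'Package Weight']
print(rows)     # [['Pack 1', '1,00 kg'], ['Pack 2', '2,11 kg']]
```

Detecting data rows by the presence of td cells, rather than by row index, is a design choice that avoids relying on the header being the first row.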

Here is what the results should look like:

print(headers)
['Version', 'Package Weight', 'Package Dimension', 'Variants', 'Delivery Type']

for row in rows:
    print(row)
['Pack 1', '1,00 kg', '100x230 cm', '6 available', '1 Day shipping']
['Pack 2', '2,11 kg', '200x460 cm', '6 available', '1 Day shipping']
['Pack 3', '3,22 kg', '300x690 cm', '6 available', '1 Day shipping']
['Pack 4', '4,33 kg', '400x920 cm', '6 available', '1 Day shipping']
['Pack 5', '5,44 kg', '500x1150 cm', '6 available', '1 Day shipping']
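Once the headers and rows are extracted, they can be combined into records or exported. The following is a minimal sketch using only the standard library. It hard-codes the headers and the first two rows from the output above so it runs on its own, and the names records and csv_text are illustrative.

```python
import csv
import io

# Values copied from the scraped output above (first two rows only)
headers = ["Version", "Package Weight", "Package Dimension", "Variants", "Delivery Type"]
rows = [
    ["Pack 1", "1,00 kg", "100x230 cm", "6 available", "1 Day shipping"],
    ["Pack 2", "2,11 kg", "200x460 cm", "6 available", "1 Day shipping"],
]

# Pair each cell with its column header to get one dict per table row
records = [dict(zip(headers, row)) for row in rows]
print(records[0]["Package Weight"])  # 1,00 kg

# Serialize the records as CSV text; an in-memory buffer stands in for a file
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=headers)
writer.writeheader()
writer.writerows(records)
csv_text = buffer.getvalue()
```

Because values such as 1,00 kg contain a comma, the csv module quotes them automatically when writing.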

For more details on parsing with BeautifulSoup, refer to our dedicated guide.