The Google Jobs API was once a powerful tool for integrating job postings directly into websites, helping businesses and job boards streamline recruitment processes. However, in 2021, Google decided to discontinue this service, leaving developers and businesses searching for alternatives.

In this blog, we will cover everything you need to know about the Google Jobs API, why it was discontinued, and what options exist today. We'll also explore how to scrape Google Jobs listings, using tools like Scrapfly, and discuss alternative job data sources.

Key Takeaways

Learn practical Google Jobs API alternatives: web scraping, structured data extraction, and third-party API integration for aggregating job listings at scale.

- Scrape Google Jobs using anti-blocking techniques such as proxy rotation and fingerprint management

- Extract structured data using the JobPosting schema and JSON-LD markup for job listing integration

- Use third-party job APIs where available, and platforms such as Indeed, LinkedIn, and Glassdoor, for broader job data collection

- Use browser automation with Playwright or Selenium to extract dynamically loaded job listings

- Use specialized tools like Scrapfly for automated Google Jobs scraping with anti-blocking features

- Apply techniques for salary data extraction, location-based filtering, and real-time job market analysis

What is Google Jobs?

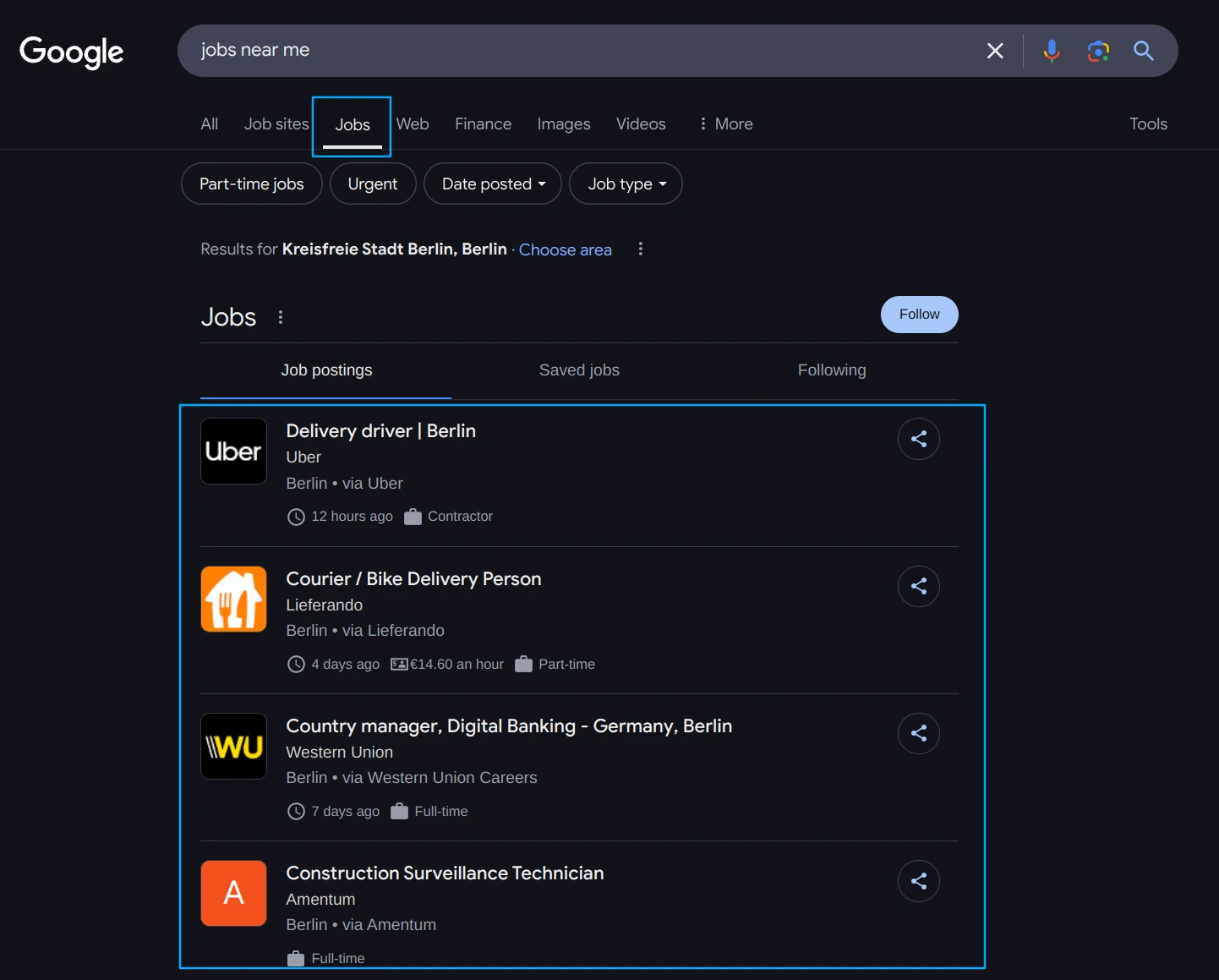

Google Jobs, also known as Google for Jobs, is a search engine feature that aggregates job listings from multiple job boards, company career pages, and recruitment platforms. It displays job postings directly in Google Search results, improving job visibility for employers and helping job seekers find opportunities more efficiently.

How Google for Jobs Works

Google for Jobs functions as a job listing aggregator, using structured data and AI-driven ranking to present relevant job openings. Here’s how it works:

- Structured Data Markup: Companies and job boards can mark up their job postings using Google's structured data (JobPosting schema).

- Job Indexing: Google automatically indexes job postings and displays them in a dedicated job search widget within Google Search.

- AI & Relevance Matching: Google uses machine learning to rank job listings based on the user’s search query, location, and preferences.

Example: Structuring Job Data for Google Jobs

To make your job listing eligible to appear in Google for Jobs, include it on the job page as a JSON-LD structured data element:

```html
<script type="application/ld+json">
{
  "@context": "https://schema.org/",
  "@type": "JobPosting",
  "title": "Software Engineer",
  "description": "Join our team as a Software Engineer. Responsibilities include backend development, API integration, and cloud computing.",
  "datePosted": "2025-02-01",
  "validThrough": "2025-03-01",
  "employmentType": "FULL_TIME",
  "hiringOrganization": {
    "@type": "Organization",
    "name": "Tech Innovators Inc.",
    "sameAs": "https://www.techinnovators.com",
    "logo": "https://www.techinnovators.com/logo.png"
  },
  "jobLocation": {
    "@type": "Place",
    "address": {
      "@type": "PostalAddress",
      "streetAddress": "1234 Innovation Drive",
      "addressLocality": "San Francisco",
      "addressRegion": "CA",
      "postalCode": "94107",
      "addressCountry": "US"
    }
  },
  "baseSalary": {
    "@type": "MonetaryAmount",
    "currency": "USD",
    "value": {
      "@type": "QuantitativeValue",
      "value": 120000,
      "unitText": "YEAR"
    }
  },
  "jobLocationType": "TELECOMMUTE",
  "applicantLocationRequirements": {
    "@type": "Country",
    "name": "US"
  }
}
</script>
```

This format helps search engines index job postings, boosting visibility in Google for Jobs. Converting scraped data into JSON-LD improves job discovery and streamlines recruitment.
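As a minimal sketch of that conversion, the helper below turns a scraped job record into a `JobPosting` script tag using only Python's standard library. The input keys (`title`, `description`, `date_posted`, `company`, `city`, `region`, `country`) and the function name `to_jobposting_jsonld` are hypothetical, and only a subset of the properties from the example above is filled in; check Google's JobPosting documentation for the required properties.

```python
import json


def to_jobposting_jsonld(job: dict) -> str:
    """Render a scraped job record as a JSON-LD <script> tag.

    The input keys used here are hypothetical; adapt them to your scraper's output.
    """
    posting = {
        "@context": "https://schema.org/",
        "@type": "JobPosting",
        "title": job["title"],
        "description": job["description"],
        "datePosted": job["date_posted"],
        "hiringOrganization": {"@type": "Organization", "name": job["company"]},
        "jobLocation": {
            "@type": "Place",
            "address": {
                "@type": "PostalAddress",
                "addressLocality": job["city"],
                "addressRegion": job["region"],
                "addressCountry": job["country"],
            },
        },
    }
    # Escape "</" as "<\/" (still valid JSON) so scraped text cannot close the <script> early.
    body = json.dumps(posting, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

Escaping `</` matters because scraped descriptions are untrusted text and could otherwise terminate the script element in the page that embeds it.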

Benefits of Google for Jobs

Google for Jobs provides multiple advantages for both employers and job seekers:

- Increased visibility for job postings in Google Search

- Mobile-friendly and easy-to-use interface

- Free job listing feature (no paid promotion needed)

- Improved job search engine optimization (SEO) for companies

Google for Jobs remains active, but its API has been discontinued, which has left many developers looking for ways to retrieve job data from Google Search.

Google Jobs API Discontinuation

In May 2021, Google officially shut down the Google Jobs API, a service that previously allowed businesses and job platforms to integrate job listings directly into their websites. This decision forced companies to find alternative ways to display and retrieve job postings, shifting the focus toward structured data and web scraping.

Why Was the Google Jobs API Discontinued?

Google has not provided a direct explanation for the shutdown, but a few likely reasons stand out:

- Shift to Structured Data: Instead of an API, Google has encouraged websites to use JobPosting structured data to ensure their job listings appear in search results.

- Limited Adoption: The API had a limited user base, primarily large job platforms, making it an unnecessary service for Google to maintain.

- Google for Jobs as a Direct Solution: Google prefers job listings to be indexed organically, rather than through API integration.

This discontinuation has left businesses and job platforms looking for other ways to retrieve job data from Google Search.

Google Jobs API Alternatives

While the Google Jobs API is no longer available, there are alternative ways to extract job data from Google Search and other job platforms. Here are some effective strategies for accessing job listings at scale:

1. Scraping Google Jobs

Web scraping allows businesses to extract job postings directly from Google for Jobs search results.

Scraping Google Jobs works around the lack of an official API and gives direct access to the listings shown in search results.

- A web scraper mimics a user searching on Google for Jobs.

- It extracts job details such as job titles, salaries, company names, and locations.

- The data is then processed and stored for analysis or integration.
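The last step, processing and storing, can start very simply. This sketch assumes each scraped job is a plain dictionary (for example `{"title": ..., "company": ..., "location": ...}`) and appends cleaned records to a JSON Lines file; the helper names `clean_job` and `store_jobs` are hypothetical.

```python
import json
from pathlib import Path
from typing import Optional


def clean_job(raw: dict) -> Optional[dict]:
    """Normalize one scraped job record; return None if it has no usable title."""
    # Drop missing fields and collapse whitespace/newlines left over from HTML text extraction.
    job = {key: " ".join(str(value).split()) for key, value in raw.items() if value is not None}
    return job if job.get("title") else None


def store_jobs(raw_jobs: list[dict], path: Path) -> int:
    """Append cleaned jobs to a JSON Lines file and return how many were written."""
    written = 0
    with path.open("a", encoding="utf-8") as f:
        for raw in raw_jobs:
            job = clean_job(raw)
            if job is None:
                continue  # skip records without a title
            f.write(json.dumps(job, ensure_ascii=False) + "\n")
            written += 1
    return written
```

JSON Lines (one JSON object per line) is a convenient choice here because each scraping run can append to the same file, and downstream tools can stream it record by record.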

2. Scraping Other Job Websites

Since many job platforms restrict API access or limit it to partners, scraping is often the most practical way to collect large-scale job data. Instead of relying on third-party job APIs with strict rate limits, paid plans, and access restrictions, job scrapers can collect listings directly from alternative sources.

Best Alternative Job Platforms for Scraping:

| Platform | Scraping Difficulty | Why Scrape It? |
|---|---|---|
| Indeed.com | Medium | High job volume, salary transparency. |
| LinkedIn Jobs | Hard | Verified professional job postings. |
| Glassdoor.com | Medium | Includes company reviews & salaries. |
| Wellfound.com | Medium | Focuses on startup jobs. |
| ZipRecruiter | Easy | Employer-submitted jobs. |
| CareerBuilder | Medium | Covers multiple industries & roles. |

Scraping job sites offers flexible job data collection without API restrictions but requires technical effort to maintain. Combining scraping with available APIs ensures broader coverage and reliability.
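When combining scraped listings with API results, the same posting often shows up in several sources. A minimal approach, assuming records share `title`, `company`, and `location` keys, is to deduplicate on a normalized version of those fields. `job_key` and `merge_sources` are hypothetical names, and matching on these three fields is a heuristic that can merge distinct postings that happen to share them.

```python
def job_key(job: dict) -> tuple[str, ...]:
    """Case- and whitespace-insensitive identity key (a heuristic, not a unique ID)."""
    return tuple(
        " ".join(str(job.get(field) or "").lower().split())
        for field in ("title", "company", "location")
    )


def merge_sources(*sources: list[dict]) -> list[dict]:
    """Merge job lists in priority order, keeping the first copy of each posting."""
    seen: set[tuple[str, ...]] = set()
    merged: list[dict] = []
    for source in sources:
        for job in source:
            key = job_key(job)
            if key in seen:
                continue  # already collected from a higher-priority source
            seen.add(key)
            merged.append(job)
    return merged
```

Passing sources in priority order (for example, API results before scraped results) decides which copy of a duplicate posting is kept.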

Web Scraping Google Jobs Using Selenium

While Google no longer offers an official API for job data, its public search interface remains a valuable resource. Selenium, a popular browser automation tool, can be used to scrape Google Jobs listings by simulating human interactions and rendering dynamic content. Here’s how to approach it:

Setup

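Install Selenium and download a compatible WebDriver (e.g., ChromeDriver):

```shell
pip install selenium
```

Download a ChromeDriver build that matches your installed Chrome version. Selenium 4.6 and newer also ship with Selenium Manager, which can download a matching driver automatically.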

Extract Job Listings

Use Selenium to load the Google Jobs page and parse dynamic content:

```python
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

# Initialize Chrome WebDriver with the local chromedriver executable
driver = webdriver.Chrome(service=Service('./chromedriver'))

try:
    # Navigate to Google Jobs search results page
    driver.get("https://www.google.com/search?q=developer+jobs&ibp=htl;jobs")

    # Create a WebDriverWait instance with 15 seconds timeout
    wait = WebDriverWait(driver, 15)

    # Wait for the parent div containing all job cards using XPath.
    # This absolute XPath mirrors Google's current markup and breaks when the layout changes.
    parent_div = wait.until(EC.presence_of_element_located((
        By.XPATH,
        "/html/body/div[3]/div/div[13]/div/div/div[2]/div[2]/div/div/div/div/div/div/div[3]/div/div/div/div/infinity-scrolling/div[1]/div[1]",
    )))

    # Find all job cards by locating anchor tags under divs with a jsslot attribute
    job_cards = parent_div.find_elements(By.XPATH, ".//div[@jsslot]//a")

    # Iterate through each job card and extract information
    for job in job_cards:
        try:
            title = job.find_element(By.XPATH, ".//span[2]/div[1]/div[1]/div[1]").text
            company = job.find_element(By.XPATH, ".//span[2]/div[1]/div[1]/div[2]").text
            location = job.find_element(By.XPATH, ".//span[2]/div[1]/div[1]/div[3]").text
            print(f"{title} | {company} | {location}")
        except NoSuchElementException:
            # Skip cards that don't match the expected structure
            continue
finally:
    # Close the browser and clean up, even if an error occurred
    driver.quit()
```

Example Output

```text
Full Stack Developer | proleaders | proleaders
Flutter Mobile Developer | proleaders | proleaders
Senior Backend Developer (.net @ Faainex) | Finiex Soft | Finiex Soft
Senior Android Developer | Madar Soft | Madar Soft
Senior Web Developer | Madar Soft | Madar Soft
Dot NET Developer | Tritecs | Tritecs
PHP Developer | Softxpert Incorporation | Softxpert Incorporation
Senior Front-End Developer | Tritecs | Tritecs
Senior Backend Developer | Finiex Soft | Finiex Soft
iOS Developer | 3rabapp | 3rabapp
```

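This script uses Selenium to scrape Google Jobs listings. It launches a Chrome browser, navigates to the search results, and waits for the dynamic job cards to load. It then locates the parent container holding all job cards and extracts individual job elements using XPath selectors. For each job listing, it retrieves the title, company name, and location; if any element is missing, the script skips that card and continues. These absolute XPath selectors are tied to Google's current page layout and will need updating when it changes. In the sample output above, the third column repeats the company name, which suggests the location selector should be re-checked against the live markup.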

Other Sources for Jobs Data

While Google Jobs is a powerful aggregator, numerous alternatives exist for accessing job postings at scale. Below are key platforms and strategies for sourcing job data effectively:

Major Job Boards

- Indeed: The world’s largest job board, offering millions of listings globally. Use its Partner API for structured data or scrape its public pages for roles, salaries, and company details.

- LinkedIn Jobs: A hub for professional networking and high-quality listings. While its API is restricted to enterprise partners, scraping public profiles and job posts can yield insights into hiring trends.

- Glassdoor: Combines job postings with company reviews and salary reports. Ideal for analyzing workplace culture alongside opportunities.

- Wellfound (formerly AngelList): A go-to platform for startup job listings and investor insights. Scraping Wellfound can uncover emerging trends in startup hiring, remote opportunities, and salary benchmarks.

Niche Platforms

- Dice: Specializes in tech roles, with detailed filters for programming languages and frameworks.

- RemoteOK: Focuses on remote jobs across industries, perfect for tracking distributed work trends.

Aggregators with APIs

The following aggregators offer APIs that provide direct access to job data, making it easier to gather comprehensive listings, salary trends, and other essential details.

| Platform | API Availability | Key Data Points |
|---|---|---|
| ZipRecruiter | Paid | SME-focused roles, quick apply links |
| Adzuna | Free tier | Salary trends, location analytics |
| CareerJet | Limited | Multilingual job search support |
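As an illustration of the API route, here is a sketch of a client for Adzuna's job search API using only the standard library. The endpoint pattern (`/v1/api/jobs/{country}/search/{page}`), the `app_id`, `app_key`, `what`, `results_per_page`, and `content-type` parameters, and the response fields (`results`, `company.display_name`, `location.display_name`, `salary_min`, `salary_max`) are assumptions based on our reading of Adzuna's public documentation; verify them against the current docs and your plan's limits before relying on this. The function names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint pattern from Adzuna's public API docs; verify before use.
ADZUNA_SEARCH_URL = "https://api.adzuna.com/v1/api/jobs/{country}/search/{page}"


def build_adzuna_url(app_id: str, app_key: str, what: str, country: str = "us",
                     page: int = 1, results_per_page: int = 20) -> str:
    """Build a search URL; `country` is a lowercase country code such as "us" or "gb"."""
    params = urlencode({
        "app_id": app_id,
        "app_key": app_key,
        "what": what,
        "results_per_page": results_per_page,
        "content-type": "application/json",
    })
    return f"{ADZUNA_SEARCH_URL.format(country=country, page=page)}?{params}"


def parse_adzuna_results(payload: dict) -> list[dict]:
    """Flatten the (assumed) response shape into simple job records."""
    jobs = []
    for item in payload.get("results", []):
        jobs.append({
            "title": item.get("title"),
            "company": (item.get("company") or {}).get("display_name"),
            "location": (item.get("location") or {}).get("display_name"),
            "salary_min": item.get("salary_min"),
            "salary_max": item.get("salary_max"),
        })
    return jobs


def search_adzuna(app_id: str, app_key: str, what: str, **kwargs) -> list[dict]:
    """Fetch one page of results over the network and parse it."""
    with urlopen(build_adzuna_url(app_id, app_key, what, **kwargs), timeout=30) as response:
        return parse_adzuna_results(json.load(response))
```

Usage would look like `search_adzuna("YOUR_APP_ID", "YOUR_APP_KEY", "python developer", country="gb")`. Keeping URL building and parsing separate from the network call makes both easy to test offline.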

Scraping Custom Sources

For unique needs, scrape:

- Company Career Pages: Target specific employers (e.g., Tesla, Amazon).

- Freelance Platforms: Upwork, Fiverr for gig economy trends.

- University Job Portals: Track entry-level and academic roles.

Many platforms limit API access, making web scraping essential for large-scale data collection. For step-by-step guides on extracting data from these sources, explore our Jobs Data Scraping Guides.

By diversifying your data sources, you can build richer datasets for competitive analysis, salary benchmarking, and market forecasting.

Broad Crawling the Web

Since most companies have their own website, many also host their own job listings section. For mass job data collection, web crawling can be a viable option.

Broad crawling is commonly used for this: a crawler visits many business websites looking for job listings. Microformat scraping is often part of that approach, since job data is frequently marked up with structured data for easy discovery. For more, see our Crawling with Python introduction.
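Since many career pages embed the same kind of `JobPosting` JSON-LD shown earlier in this article, a crawler can often skip site-specific selectors and read that markup directly. The sketch below uses only Python's standard library (`html.parser` and `json`); the class and function names are hypothetical, and it only looks at JSON-LD, not other structured data formats such as Microdata or RDFa.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def extract_job_postings(html: str) -> list[dict]:
    """Return every schema.org JobPosting object embedded as JSON-LD in a page."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    postings = []
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # skip malformed markup instead of failing the whole page
        # A JSON-LD block may hold one object, a list of objects, or an "@graph" container.
        if isinstance(data, list):
            items = data
        elif isinstance(data, dict):
            items = data.get("@graph", [data])
        else:
            continue
        for item in items:
            if not isinstance(item, dict):
                continue
            types = item.get("@type")
            if types == "JobPosting" or (isinstance(types, list) and "JobPosting" in types):
                postings.append(item)
    return postings
```

In a broad crawl, `extract_job_postings` would run on each fetched page, and pages that return an empty list can be skipped or queued for selector-based scraping instead.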

Why Scrape Jobs Data?

Job data is a valuable resource for recruitment platforms, market analysis, and competitive research. Businesses use it to track hiring trends, benchmark salaries, and forecast industry demand. Whether you're building a job board or analyzing workforce shifts, automated job data collection provides real-time insights at scale.

Job data is also increasingly ingested directly into retrieval-augmented generation (RAG) LLM applications for real-time labor market analysis.

Learn more about our Jobs Web Scraping service and how it can streamline your data needs.

Power-Up with Scrapfly

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and TypeScript SDKs, as well as Scrapy and no-code tool integrations.

For example, we could replace the Selenium scraper above with a version that uses Scrapfly's Python SDK:

```python
import asyncio
from urllib.parse import quote_plus

from scrapfly import ScrapflyClient, ScrapeConfig

# Initialize Scrapfly client with your API key
client = ScrapflyClient(key="YOUR_SCRAPFLY_KEY")


async def scrape_google_jobs(query: str, max_results: int = 50):
    """Scrape Google Jobs listings using Scrapfly's API"""
    result = await client.async_scrape(
        ScrapeConfig(
            # URL-encode the query so spaces and symbols don't break the URL
            url=f"https://www.google.com/search?q={quote_plus(query)}&ibp=htl;jobs",
            # Enable anti-blocking protection bypass
            asp=True,
            # Render JavaScript to load dynamic content
            render_js=True,
            # Use residential proxies to avoid blocks
            proxy_pool="public_residential_pool",
            # Set country for localized results
            country="US",
        )
    )

    jobs = []
    # Google's class names are obfuscated and change often; verify these selectors via browser inspection
    for job_card in result.selector.css(".MQUd2b"):
        jobs.append({
            "title": job_card.css(".PUpOsf::text").get(),
            "company": job_card.css(".a3jPc::text").get(),
            "location": job_card.css(".FqK3wc::text").get(),
        })
    return jobs[:max_results]


if __name__ == "__main__":
    # The scraper is a coroutine, so run it inside an event loop
    print(asyncio.run(scrape_google_jobs("developer jobs")))
```

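With this approach, the scraper can fetch Google Jobs results on demand while Scrapfly handles proxies, JavaScript rendering, and anti-bot protection, which greatly reduces the risk of being blocked or throttled.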

FAQ

Below are quick answers to common questions about scraping job data and alternative APIs following the Google Jobs API discontinuation.

How do I ensure real-time updates when scraping job postings?

Use automated scrapers with scheduling tools (e.g., cron jobs) to refresh data hourly or daily.

How do job posting frequencies vary by industry?

Tech and healthcare roles tend to be posted and updated daily, while niche fields (e.g., academia) may post only weekly. Record each listing's posting timestamp during scraping so you can analyze these patterns.
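If each scraped record keeps its `datePosted` value, posting frequency can be summarized with a few lines of standard-library Python. This sketch assumes ISO-formatted dates (`YYYY-MM-DD`, optionally followed by a time) and groups them by ISO calendar week; `postings_per_week` is a hypothetical helper name.

```python
from collections import Counter
from datetime import date


def postings_per_week(dates_posted: list[str]) -> Counter:
    """Count postings per (ISO year, ISO week) from ISO date or datetime strings."""
    counts: Counter = Counter()
    for value in dates_posted:
        # Keep only the YYYY-MM-DD part so values like "2025-02-10T09:00:00" also work.
        iso_year, iso_week, _ = date.fromisoformat(value[:10]).isocalendar()
        counts[(iso_year, iso_week)] += 1
    return counts
```

Running this separately per industry or job category gives weekly counts that can be compared side by side.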

Can I scrape salary data from job postings?

Yes, but only some listings include explicit salary ranges, and these often appear in the job description as natural language. To extract them, use NLP techniques or LLMs to parse phrases like “80k–120k” or “competitive compensation” into approximate values.
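Before reaching for an NLP model or LLM, a rule-based pass with regular expressions can catch explicitly stated ranges. This is a simpler, swapped-in technique rather than the NLP or LLM approach mentioned above: the `SALARY_RANGE` pattern and the `parse_salary_range` helper are hypothetical, handle only a few common formats (`80k–120k`, `$80,000 - $120,000`, `90K to 110K`), and return nothing for vague phrasing such as "competitive compensation", which still needs an NLP or LLM step.

```python
import re
from typing import Optional

# Hypothetical pattern: optional "$", a number like "80", "80.5" or "80,000", an optional "k",
# a separator ("-", en dash, em dash, or "to"), then a second number in the same format.
SALARY_RANGE = re.compile(
    r"\$?\s*(\d{1,3}(?:,\d{3})+|\d+(?:\.\d+)?)\s*([kK])?"
    r"\s*(?:[-\u2013\u2014]|to)\s*"
    r"\$?\s*(\d{1,3}(?:,\d{3})+|\d+(?:\.\d+)?)\s*([kK])?"
)


def parse_salary_range(text: str) -> Optional[tuple[float, float]]:
    """Return (low, high) for the first salary range found in text, or None."""
    match = SALARY_RANGE.search(text)
    if match is None:
        return None
    low, low_k, high, high_k = match.groups()
    # Assumption: a "k" on either bound means both bounds are in thousands,
    # so "80-120k" is read as 80,000-120,000.
    scale = 1000 if (low_k or high_k) else 1
    return (float(low.replace(",", "")) * scale, float(high.replace(",", "")) * scale)
```

A pattern like this can also match non-salary ranges such as "2024-2025", so it works best on text already narrowed to a compensation section, with unmatched listings passed on to an NLP or LLM step.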

Summary

The discontinuation of Google Jobs API in 2021 left a void in automated job data access, but it also ignited a wave of innovation. Businesses and developers pivoted to hybrid strategies, blending modern tools and creative approaches to maintain access to critical hiring insights. By leveraging evolving technologies like advanced scraping solutions and competitor APIs, organizations transformed a setback into an opportunity to build more resilient, flexible data pipelines.

- Alternative APIs: Tap into platforms like Indeed and LinkedIn for structured job data.

- Web Scraping: Tools like Scrapfly enable efficient extraction from search results, overcoming API limits.

- Real-Time Analytics: Monitor trends, salaries, and skill demands with automated data pipelines.

- Adaptability: Combine APIs, scraping, and niche boards (e.g., Dice, RemoteOK) for comprehensive coverage.

Stay ahead by blending technology and strategy to unlock actionable job market intelligence.