To start, what are sitemaps? Sitemaps are web files that list the locations of web objects, like product pages and articles, for web crawlers. They're mostly used to tell search engines what to index, though in web scraping we can use them to discover targets to scrape.

In this tutorial, we'll take a look at how to discover sitemap files and scrape them using Python and JavaScript. We'll cover:

- Finding sitemap location.

- How to understand and navigate sitemaps.

- How to parse sitemap files using XML parsing tools in Python and JavaScript.

For this, we'll be using Python or JavaScript with a few community packages and some real-life examples. Let's dive in!

Key Takeaways

Master sitemap scraping with Python and JavaScript to discover target URLs efficiently using XML parsing, robots.txt analysis, and sitemap index navigation.

- Use robots.txt files to discover sitemap locations and access comprehensive URL directories

- Parse XML sitemap files with Python's parsel (built on lxml) or JavaScript's cheerio for efficient data extraction

- Handle gzip-compressed sitemaps to reduce bandwidth costs while discovering thousands of URLs

- Navigate sitemap index files to access categorized URL collections across large websites

- Extract URL metadata including last modification dates and priority information from sitemap entries

- Use sitemaps as low-bandwidth alternatives to scraping search and category pages for target discovery

Why Scrape Sitemaps?

Scraping sitemaps is an efficient way to discover targets listed on a website, be it product pages, blog posts or any other web object. Sitemaps are usually XML documents that list URLs, often grouped by category, in batches of up to 50 000 per file. They are also often gzip-compressed, which makes them a low-bandwidth way to discover page URLs.

So, instead of scraping a website's search or directory pages, which can number in the thousands, we can scrape sitemap files and usually get the same URLs in a fraction of the time and bandwidth. Additionally, since sitemaps are created for web crawlers, the likelihood of getting blocked is usually much lower.
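Since compressed sitemaps are common, here is a minimal sketch of handling them in Python. It assumes we already have the raw response bytes (for example httpx's `response.content`) and checks for the two gzip magic bytes rather than trusting a `.gz` extension, because httpx already decompresses responses sent with `Content-Encoding: gzip`. The helper name `decode_sitemap` is hypothetical; decoding as UTF-8 follows the sitemaps protocol's requirement that sitemap files be UTF-8 encoded.

```python
import gzip

GZIP_MAGIC = b"\x1f\x8b"  # every gzip stream starts with these two bytes


def decode_sitemap(raw: bytes) -> str:
    """Return sitemap XML as text, decompressing it first if it is gzipped.

    `decode_sitemap` is a hypothetical helper used for illustration.
    """
    if raw[:2] == GZIP_MAGIC:
        raw = gzip.decompress(raw)
    # the sitemaps protocol requires UTF-8 encoded files
    return raw.decode("utf-8")
```

For example, `decode_sitemap(httpx.get("https://example.com/sitemap.xml.gz").content)` would return the XML text whether or not the server compressed it (the URL is a placeholder).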

Where to find Sitemaps?

Sitemaps are usually located at the root of the website in a /sitemap.xml file. For example, this blog's sitemap is at scrapfly.io/sitemap.xml.

However, there's no strict standard location, so another way to find sitemaps is to check the /robots.txt file, which provides instructions for web crawlers.

For example, Scrapfly's /robots.txt file contains the following line:

Sitemap: https://scrapfly.io/sitemap.xml

Many programming languages have robots.txt parsers built in, or we can simply retrieve the file and find the lines with the Sitemap: prefix:

```python
# built-in robots.txt parser (RobotFileParser.site_maps() needs Python 3.8+):
from urllib.robotparser import RobotFileParser

rp = RobotFileParser('http://scrapfly.io/robots.txt')
rp.read()
print(rp.site_maps())
# ['https://scrapfly.io/blog/sitemap-posts.xml', 'https://scrapfly.io/sitemap.xml']

# or fetch robots.txt with httpx and find the Sitemap: lines ourselves
import httpx

response = httpx.get('https://scrapfly.io/robots.txt')
for line in response.text.splitlines():
    if line.strip().startswith('Sitemap:'):
        print(line.split('Sitemap:')[1].strip())
```

```javascript
// npm install axios
// (top-level await requires an ES module, e.g. a .mjs file)
import axios from 'axios';

const response = await axios.get('https://scrapfly.io/robots.txt');
const robotsTxt = response.data;

const sitemaps = [];
for (const line of robotsTxt.split('\n')) {
  const trimmedLine = line.trim();
  if (trimmedLine.startsWith('Sitemap:')) {
    sitemaps.push(trimmedLine.split('Sitemap:')[1].trim());
  }
}
console.log(sitemaps);
```
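The manual httpx and axios snippets match only the exact `Sitemap:` spelling. As a hedged sketch, the hypothetical `find_sitemaps` function below works on already-downloaded robots.txt text, matches the field name case-insensitively (robots.txt field names are generally treated as case-insensitive), ignores `#` comments, and falls back to the conventional /sitemap.xml location when no directive is found. That fallback is only a guess and may not exist on a given site.

```python
from typing import List
from urllib.parse import urljoin


def find_sitemaps(robots_txt: str, base_url: str) -> List[str]:
    """Extract Sitemap: URLs from robots.txt text, else guess /sitemap.xml.

    `find_sitemaps` is a hypothetical helper used for illustration.
    """
    sitemaps = []
    for line in robots_txt.splitlines():
        # drop trailing comments and surrounding whitespace
        line = line.split("#", 1)[0].strip()
        # split on the first colon only, so the URL's own "https:" stays intact
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "sitemap" and value.strip():
            sitemaps.append(value.strip())
    if not sitemaps:
        # no directive found: fall back to the common root location
        sitemaps.append(urljoin(base_url, "/sitemap.xml"))
    return sitemaps
```

Keeping the download separate from the parsing means the same function works with text fetched by httpx, from a cache, or from a test fixture.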

Scraping Sitemaps

Sitemaps are XML documents with a specific, simple structure. For example:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="//scrapfly.io/blog/sitemap.xsl"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9" xmlns:image="http://www.google.com/schemas/sitemap-image/1.1">
  <url>
    <loc>https://scrapfly.io/blog/web-scraping-with-python/</loc>
    <lastmod>2023-04-04T06:20:35.000Z</lastmod>
    <image:image>
      <image:loc>https://scrapfly.io/blog/content/images/web-scraping-with-python_banner.svg</image:loc>
      <image:caption>web-scraping-with-python_banner.svg</image:caption>
    </image:image>
  </url>
</urlset>
```

When it comes to web scraping, we're mostly interested in the <url> entries of the <urlset>, where each <url> contains the page location and some optional metadata:

- <loc> - URL location of the resource.

- <lastmod> - Timestamp of when the resource was last modified.

- <changefreq> - How often the resource is expected to change (always, hourly, daily, weekly, monthly, yearly or never).

- <priority> - Resource indexing importance (0.0 - 1.0). This is mostly used by search engines.

- <image:image> - An image associated with the resource (a <url> can contain several), with these child fields:
  - <image:loc> - The URL of the image (e.g. main product image or article feature image).
  - <image:caption> - A short description of the image.
  - <image:geo_location> - The geographic location of the image.
  - <image:title> - The title of the image.
  - <image:license> - The URL of the license for the image.

- <video:video> - Similar to <image:image> but for videos.
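Because the image fields live in a separate XML namespace, a namespace-aware parser needs a prefix for each extension. As a minimal sketch using only Python's standard library (xml.etree.ElementTree, swapped in here instead of parsel), the code below turns each <url> into a record with the optional fields left as None. The `SitemapEntry` and `parse_urlset` names are hypothetical, and only the image extension is handled; <video:video> would need its own namespace entry.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import List, Optional

# prefixes for the core sitemap schema and Google's image extension
NS = {
    "sm": "http://www.sitemaps.org/schemas/sitemap/0.9",
    "image": "http://www.google.com/schemas/sitemap-image/1.1",
}


@dataclass
class SitemapEntry:
    """One <url> record (an illustrative class, not part of any library)."""
    loc: str
    lastmod: Optional[str] = None
    changefreq: Optional[str] = None
    priority: Optional[float] = None
    images: List[str] = field(default_factory=list)


def parse_urlset(xml_text: str) -> List[SitemapEntry]:
    """Parse a <urlset> sitemap, keeping absent optional fields as None."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", NS):
        priority = url.findtext("sm:priority", namespaces=NS)
        entries.append(
            SitemapEntry(
                loc=(url.findtext("sm:loc", namespaces=NS) or "").strip(),
                lastmod=url.findtext("sm:lastmod", namespaces=NS),
                changefreq=url.findtext("sm:changefreq", namespaces=NS),
                priority=float(priority) if priority else None,
                # each <image:image> wraps one <image:loc> holding the image URL
                images=[
                    img.text.strip()
                    for img in url.findall("image:image/image:loc", NS)
                    if img.text
                ],
            )
        )
    return entries
```

Returning typed records instead of printing makes it easier to filter by lastmod or priority later on.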

To parse sitemaps, we need an XML parser that can find all <url> elements and extract their values.

In Python, we can use the lxml or parsel packages to parse XML documents with XPath or CSS selectors.

```python
from parsel import Selector
import httpx

response = httpx.get("https://scrapfly.io/blog/sitemap-posts.xml")
# parsel's default HTML mode ignores XML namespaces, so a plain //url works
selector = Selector(response.text)
for url in selector.xpath('//url'):
    location = url.xpath('loc/text()').get()
    modified = url.xpath('lastmod/text()').get()
    print(location, modified)
# example output line:
# https://scrapfly.io/blog/how-to-scrape-nordstrom/ 2023-04-06T13:35:47.000Z
```
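One practical use of <lastmod> is re-scraping only pages that changed since a previous run. Here is a minimal sketch that takes (location, modified) pairs like the ones printed by the loop above and keeps the recent ones. The function names are hypothetical; parsing assumes the W3C Datetime forms seen in sitemaps (a full timestamp or a plain date), and a date without a timezone is assumed to be UTC. Keep in mind that <lastmod> is optional and not always kept accurate by websites.

```python
from datetime import datetime, timezone
from typing import Iterable, Iterator, Optional, Tuple


def parse_lastmod(value: str) -> datetime:
    """Parse a <lastmod> value into a timezone-aware datetime.

    Handles "2023-04-06T13:35:47.000Z" and "2023-04-06" style values;
    partial forms such as "2023-04" are not covered by this sketch.
    """
    parsed = datetime.fromisoformat(value.strip().replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        # date-only values carry no timezone; assume UTC
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed


def modified_since(
    entries: Iterable[Tuple[str, Optional[str]]], cutoff: datetime
) -> Iterator[str]:
    """Yield URLs whose lastmod is at or after `cutoff`; skip entries without one."""
    for location, modified in entries:
        if modified and parse_lastmod(modified) >= cutoff:
            yield location
```

The cutoff must itself be timezone-aware, e.g. `datetime(2023, 4, 1, tzinfo=timezone.utc)`, since Python refuses to compare aware and naive datetimes.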

In JavaScript (as well as Node.js and TypeScript), a popular library for parsing XML is cheerio, which can be used with CSS selectors:

```javascript
// npm install axios cheerio
import axios from 'axios';
import * as cheerio from 'cheerio';

const response = await axios.get('https://scrapfly.io/blog/sitemap-posts.xml');
// xmlMode makes cheerio parse the document as XML instead of HTML
const $ = cheerio.load(response.data, { xmlMode: true });

$('url').each((_, urlElem) => {
  const location = $(urlElem).find('loc').text();
  const modified = $(urlElem).find('lastmod').text();
  console.log(location, modified);
});
```

For bigger websites, the limit of 50 000 URLs per sitemap is often not enough, so the URLs are split across multiple sitemap files listed in a sitemap index (a sitemap hub). To scrape this, we need a bit of recursion.

For example, let's take a look at the StockX sitemap hub. To start, we can see that its robots.txt file contains the following line:

Sitemap: https://stockx.com/sitemap/sitemap-index.xml

This index file contains multiple sitemaps:

```xml
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://stockx.com/sitemap/sitemap-0.xml</loc>
  </sitemap>
  <sitemap>
    <loc>https://stockx.com/sitemap/sitemap-1.xml</loc>
  </sitemap>
  <sitemap>
    <loc>https://stockx.com/sitemap/sitemap-2.xml</loc>
  </sitemap>
  <sitemap>
    <loc>https://stockx.com/sitemap/sitemap-3.xml</loc>
  </sitemap>
  <sitemap>
    <loc>https://stockx.com/sitemap/sitemap-4.xml</loc>
  </sitemap>
  <sitemap>
    <loc>https://stockx.com/sitemap/sitemap-5.xml</loc>
  </sitemap>
</sitemapindex>
```

Sometimes, sitemap hubs split sitemaps by category or location, which can help us target specific types of pages. To scrape a hub, all we have to do is parse the index file and then scrape each sitemap file it lists:

```python
from parsel import Selector
import httpx

response = httpx.get("https://stockx.com/sitemap/sitemap-index.xml")
index_selector = Selector(response.text)
# the index only lists sitemap files, so fetch and parse each one in turn
for sitemap_url in index_selector.xpath("//sitemap/loc/text()").getall():
    sitemap_response = httpx.get(sitemap_url)
    selector = Selector(sitemap_response.text)
    for url in selector.xpath('//url'):
        location = url.xpath('loc/text()').get()
        change_frequency = url.xpath('changefreq/text()').get()  # an alternative field to the modification date
        print(location, change_frequency)
```

```javascript
import axios from 'axios';
import * as cheerio from 'cheerio';

const response = await axios.get('https://stockx.com/sitemap/sitemap-index.xml');
const sitemapIndex = cheerio.load(response.data, { xmlMode: true });
// collect the sitemap URLs first: .each() does not wait for async callbacks
const sitemapUrls = sitemapIndex('sitemap > loc')
  .map((_, elem) => sitemapIndex(elem).text())
  .get();

for (const sitemapUrl of sitemapUrls) {
  const sitemapResponse = await axios.get(sitemapUrl);
  const $ = cheerio.load(sitemapResponse.data, { xmlMode: true });
  $('url').each((_, urlElem) => {
    const location = $(urlElem).find('loc').text();
    const changefreq = $(urlElem).find('changefreq').text();
    console.log(location, changefreq);
  });
}
```
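The loops above only go one level deep. In practice, some sites nest index files or mix compressed and plain sitemaps, so here is a minimal recursive sketch in Python. The `collect_urls` name and its parameters are hypothetical: `fetch` is any function returning a URL's raw body (e.g. `lambda u: httpx.get(u).content`), and the optional `include` predicate picks which child sitemaps to follow, which is useful when a hub splits sitemaps by category. It uses the standard library's ElementTree rather than parsel.

```python
import gzip
import xml.etree.ElementTree as ET
from typing import Callable, List, Optional, Set

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
NS = {"sm": SM}


def collect_urls(
    sitemap_url: str,
    fetch: Callable[[str], bytes],
    include: Optional[Callable[[str], bool]] = None,
    seen: Optional[Set[str]] = None,
) -> List[str]:
    """Recursively walk a sitemap or sitemap index and return all page URLs."""
    seen = set() if seen is None else seen
    if sitemap_url in seen:  # avoid loops if index files reference each other
        return []
    seen.add(sitemap_url)

    raw = fetch(sitemap_url)
    if raw[:2] == b"\x1f\x8b":  # gzip magic bytes: decompress .xml.gz sitemaps
        raw = gzip.decompress(raw)
    root = ET.fromstring(raw)

    if root.tag == f"{{{SM}}}sitemapindex":
        urls: List[str] = []
        for loc in root.findall("sm:sitemap/sm:loc", NS):
            child = (loc.text or "").strip()
            if child and (include is None or include(child)):
                urls.extend(collect_urls(child, fetch, include, seen))
        return urls

    # anything else is treated as a <urlset> of page URLs
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]
```

For example, `collect_urls("https://stockx.com/sitemap/sitemap-index.xml", lambda u: httpx.get(u).content)` would walk the StockX hub. Injecting `fetch` keeps retries, proxies or caching outside the parsing logic and lets the function be tested without network access.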

When Sitemaps Aren't Available

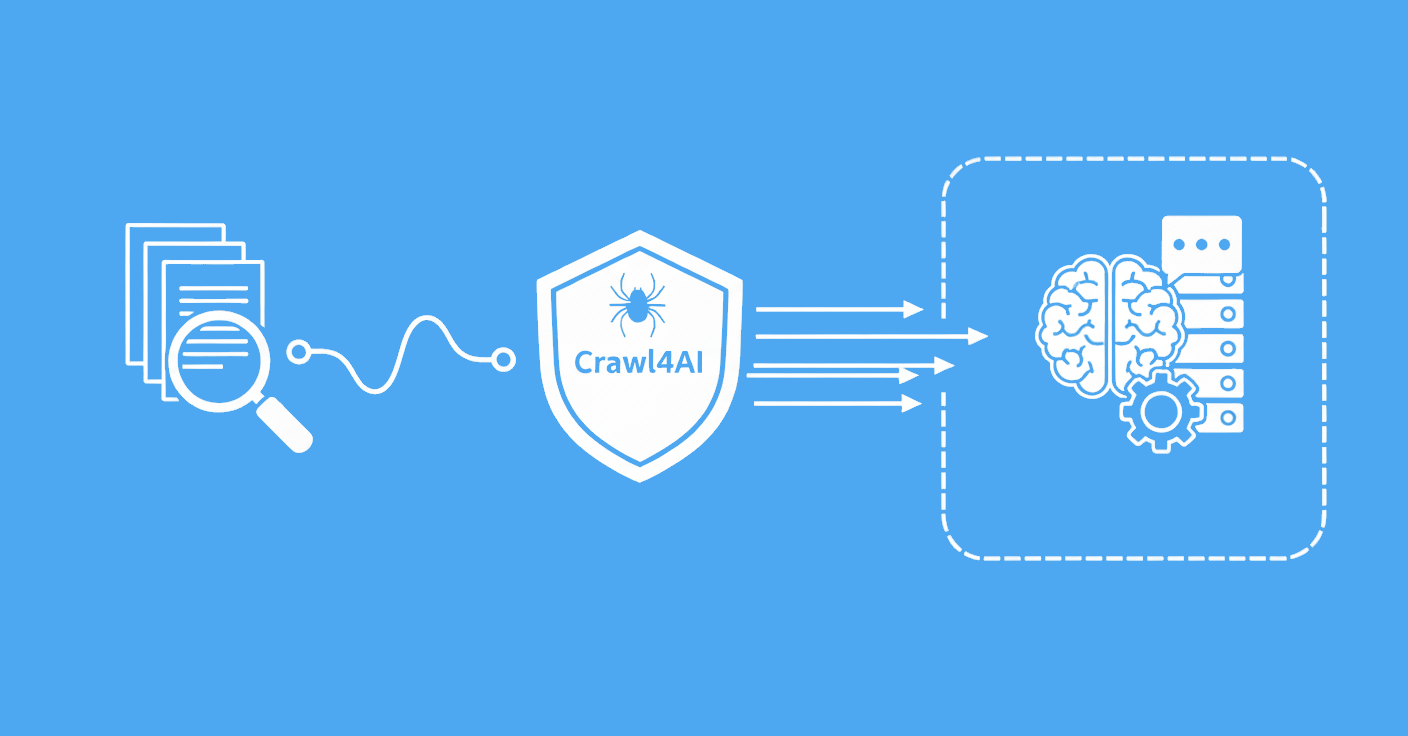

Not every website has sitemaps, and even when they exist, they may not include all URLs. For comprehensive URL discovery across entire domains, Scrapfly's Crawler API can automatically crawl websites by following links:

- Automatic URL Discovery: Recursively finds all pages by following internal links

- Anti-Bot Bypass: Handles protected sites where manual crawling would get blocked

- JavaScript Rendering: Discovers URLs in dynamic SPAs that don't appear in sitemaps

The Crawler API is a good complement to sitemap scraping when you need complete coverage of a domain.

Sitemap Scraping Summary

In this quick introduction, we've taken a look at one of the most popular ways to discover scraping targets: the sitemap system. To scrape it, we used an HTTP client (httpx in Python or axios in JavaScript) and an XML parser (parsel, which is built on lxml, or cheerio).

Sitemaps are a great way to discover new scraping targets and get a quick overview of a website's structure. However, not every website provides them, and sitemap data can be more outdated than the website itself.