Modern websites often localize web content like language, pricing, currency and other details for a particular audience. Website localization is a key factor in providing a better user experience but can be a difficult problem in web scraping.

In this article, we'll take a look at the most common web scraping localization techniques. We'll also go over a practical web scraping example by scraping an e-commerce website in a different language and currency using popular scraping tools like playwright, httpx and Scrapfly SDK. Let's dive in!

Key Takeaways

Learn how to scrape websites in different languages, currencies and regions with Python using headers, cookies, URL patterns and geo-targeted proxies.

- Implement language detection and currency conversion for international web scraping workflows

- Configure HTTP headers and cookies to specify target language and regional preferences

- Use proxy rotation with geo-targeted IP addresses to access region-specific content

- Implement data normalization and currency conversion for consistent international data processing

- Configure browser automation with locale settings for JavaScript-rendered localized content

- Use specialized tools like Scrapfly for automated localization with anti-blocking features

What is Website Localization?

Localization means changing the website's preferences to meet the local culture of a specific location. It's a design technique that optimizes the web content for better user experience. Here are parts of websites that are most commonly localized:

- Language

- Payment Options

- Currency

- E-commerce details like shipping and taxes.

- Text formatting like dates, times, numbers and units.

- Pricing - Websites can change pricing to suit the regional economy, e.g. lower prices in developing countries.

So, correct localization is an essential step in practical and clean data scraping.
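Number and currency formatting shows why this matters: the same price can be written as "90,09 €" on a German page and as "$90.09" on a US page. Below is a minimal sketch of normalizing such strings into a single numeric form. The helper name parse_price is hypothetical, and the heuristic assumes prices have at most two decimal places, so a separator followed by three digits is treated as a thousands separator.

```python
import re
from decimal import Decimal


def parse_price(text: str) -> Decimal:
    """Convert a localized price string like '90,09 €' or '$1,299.00' to a Decimal.

    Assumption: prices use at most two decimal places.
    """
    # Keep only digits and the two common separators, drop stray trailing ones
    number = re.sub(r"[^\d.,]", "", text).strip(".,")
    # A separator followed by 1-2 digits at the very end is the decimal separator
    match = re.search(r"[.,](\d{1,2})$", number)
    if match:
        integer_part = re.sub(r"[.,]", "", number[:match.start()])
        return Decimal(f"{integer_part or '0'}.{match.group(1)}")
    # No decimal part: every remaining separator is a thousands separator
    return Decimal(re.sub(r"[.,]", "", number))


print(parse_price("90,09 €"))     # German-style decimal comma
print(parse_price("$1,299.00"))   # US-style thousands comma and decimal point
print(parse_price("1.299,50 €"))  # German-style thousands point
```

A string without any digits raises decimal.InvalidOperation, which is usually a useful signal that the price selector matched the wrong element.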

How Does It Work?

Localization settings can be found in several places, including headers, URLs and even cookies. Websites retrieve these settings to determine which localization to apply.

By default, many websites rely on the IP address to determine the user's initial location. For example, if the IP address is located in the USA, the website will default to the US locale.
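To make this lookup concrete, here is a minimal sketch of how a website might pick a language on the server side. Everything in it is an assumption made for illustration: the function name resolve_language, the lang cookie and query parameter, the supported languages and the precedence order (URL, then cookie, then header, then IP country). Real websites each implement their own logic.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical configuration of an imaginary website
SUPPORTED_LANGUAGES = {"en", "de", "fr"}
COUNTRY_LANGUAGES = {"US": "en", "DE": "de", "FR": "fr"}


def resolve_language(url, cookies, headers, ip_country=None):
    """Return the language an imaginary site would serve for this request."""
    parts = urlsplit(url)
    # 1. explicit URL signals: /de/... path prefix or ?lang=de parameter
    path_lang = parts.path.strip("/").split("/")[0].lower()
    query_lang = parse_qs(parts.query).get("lang", [""])[0].lower()
    # 2. a stored preference cookie
    cookie_lang = cookies.get("lang", "").lower()
    # 3. first entry of the Accept-Language header, e.g. "de-DE,de;q=0.9" -> "de"
    accept = headers.get("Accept-Language", "")
    header_lang = accept.split(",")[0].split(";")[0].split("-")[0].strip().lower()
    # 4. fallback: country of the connecting IP address
    ip_lang = COUNTRY_LANGUAGES.get((ip_country or "").upper(), "")

    for candidate in (path_lang, query_lang, cookie_lang, header_lang, ip_lang):
        if candidate in SUPPORTED_LANGUAGES:
            return candidate
    return "en"  # site default


print(resolve_language("https://www.example.com/listing/1", {}, {}, "DE"))
print(resolve_language("https://www.example.com/de/listing/1", {}, {}, "US"))
```

The takeaway for scraping is that an explicit signal such as a URL prefix or cookie typically overrides the IP-based default, which is why the techniques below can work without changing the scraper's location.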

Setup

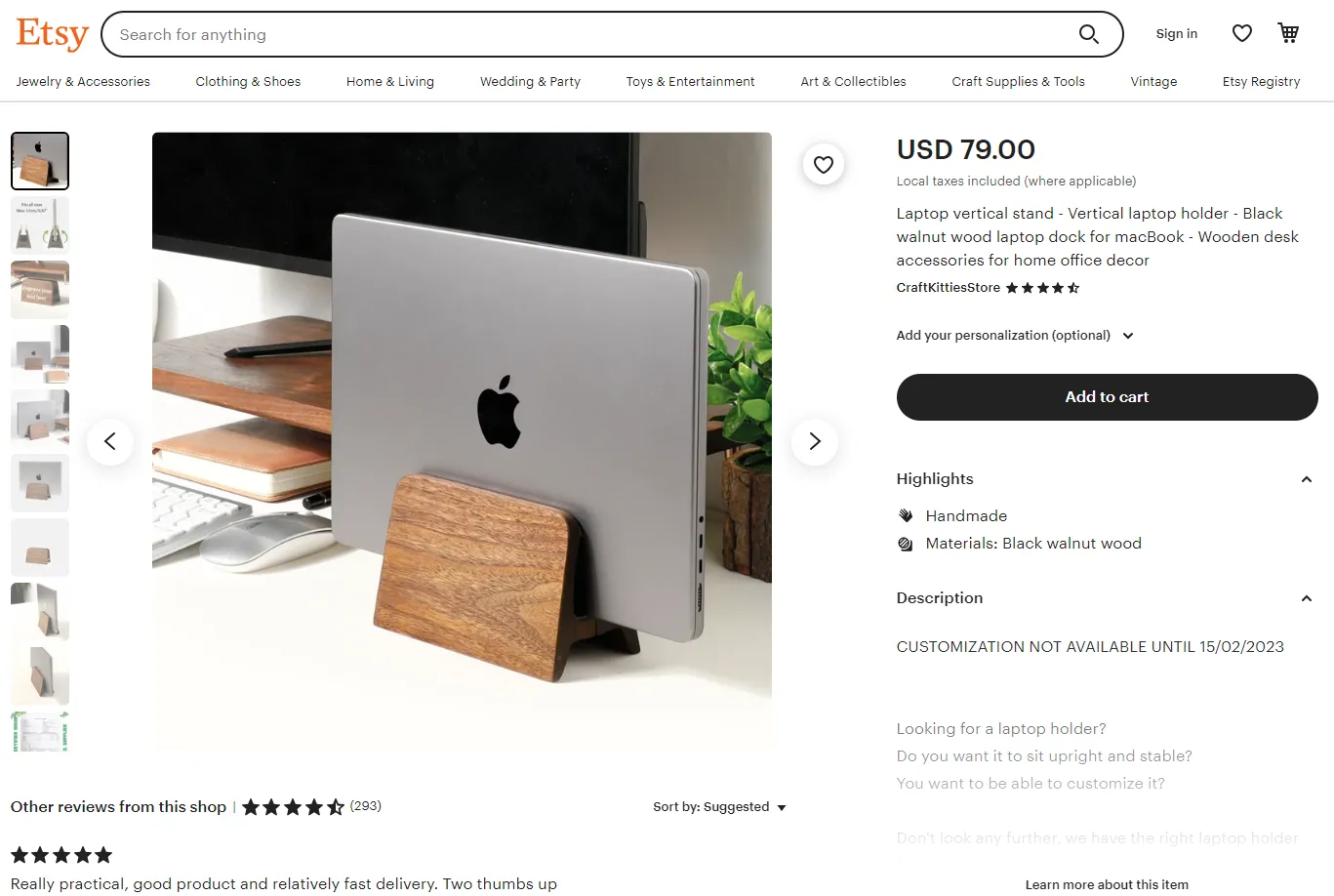

In this example, we'll scrape a product page on Etsy:

We'll cover examples in 3 popular web scraping tools:

- Playwright - a browser automation library used for web scraping with headless browsers.

- HTTPX - a popular HTTP client for Python.

- Scrapfly SDK - for Scrapfly web scraping API users.

Finally, to parse the scraped data and confirm our localization changes, we'll use parsel, which supports HTML parsing with XPath and CSS selectors.

All of these tools can be installed using the pip console command:

$ pip install playwright httpx parsel "scrapfly-sdk[all]"

Note: first-time Playwright users must also install the headless browser binaries using the playwright install console command.

How to Change Web Scraper Localization

As we have discussed earlier, we can approach web scraping localization in different ways - changing headers, cookies, URLs, and IP location. Let's go over each of them in detail.

Changing Headers

HTTP headers are key-value pairs that allow for passing information between a client and a web server. There are several types of headers, including request, response and payload headers. We'll be using the request headers, which provide information about the request sender. These include headers like User-Agent, Accept-Language, Host and many others.

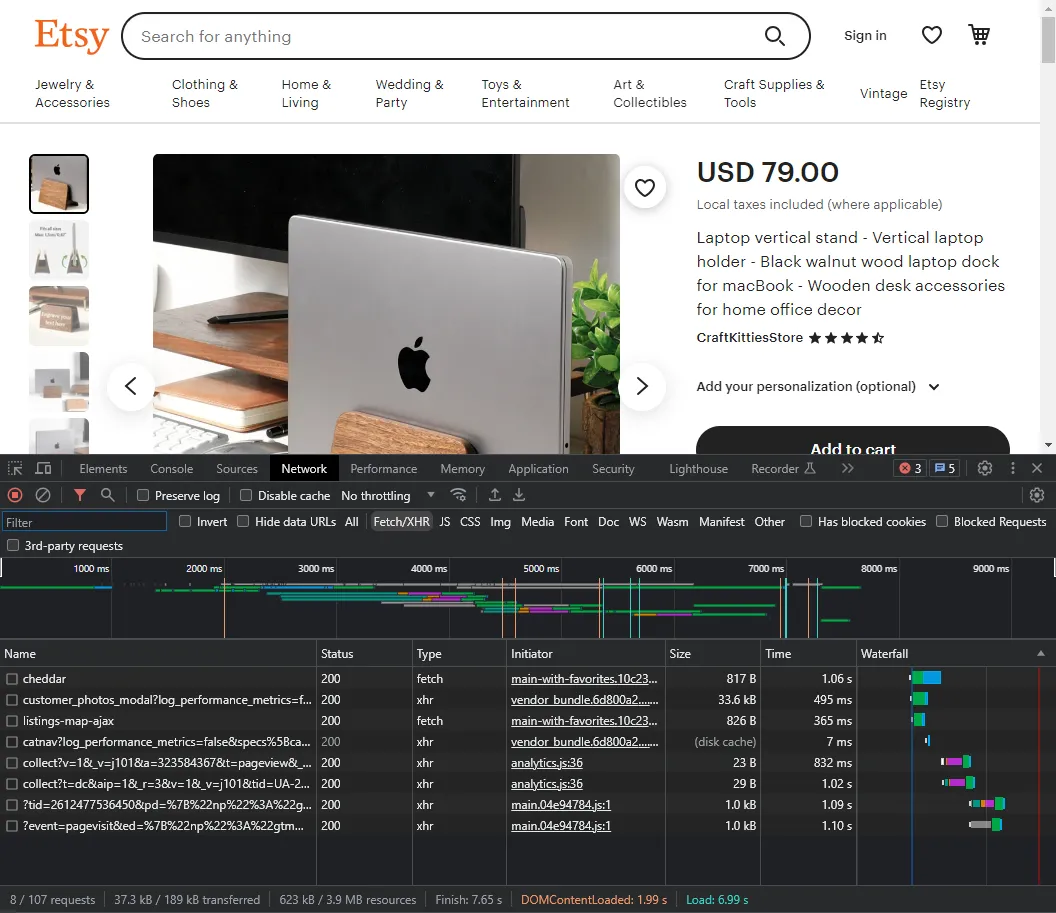

For example, let's inspect the target Etsy website page and take a look at the request headers. To do that, hit the (F12) key to open the browser Browser Developer Tools. Then select the Network tab and refresh the page to save the requests' data:

Here, we can see all of the requests the web browser is making on page load. We can use this to figure out how Etsy is localizing the page. In this example, let's take a look at the first request it makes:

Here we can see that the request uses the Accept-Language and X-Detected-Locale headers to define locality. The Accept-Language header defines the browser's preferred content language. X-Detected-Locale is an Etsy-specific header used for choosing the currency, language and location.
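Browsers usually send more than one language in Accept-Language, ranked by quality values (q) between 0 and 1. The sketch below builds such a header value from an ordered list of preferences. The helper names build_accept_language and etsy_locale_value are hypothetical, and the currency|language|country layout of X-Detected-Locale is only inferred from the value observed in the request above, not from any Etsy documentation.

```python
def build_accept_language(languages):
    """Build an Accept-Language value from language tags ordered by preference."""
    parts = []
    for index, tag in enumerate(languages):
        if index == 0:
            # the first language gets the implicit default weight of q=1
            parts.append(tag)
        else:
            # each following language gets a slightly lower weight, floor at 0.1
            weight = max(1.0 - 0.1 * index, 0.1)
            parts.append(f"{tag};q={weight:.1f}")
    return ",".join(parts)


def etsy_locale_value(currency, language, country):
    """Join the three parts in the order seen in the observed header value."""
    return f"{currency}|{language}|{country}"


headers = {
    "Accept-Language": build_accept_language(["de-DE", "de", "en"]),
    "X-Detected-Locale": etsy_locale_value("EUR", "de", "DE"),
}
print(headers)
```

Including a fallback language such as en mirrors what real browsers send, which can make the request look less unusual than a bare single-language value.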

Let's try changing these headers in our web scraper. We'll do this by modifying the default headers that are being sent:

# Playwright
from playwright.sync_api import sync_playwright
from parsel import Selector

with sync_playwright() as playwright:
    # Launch a headless Chromium browser
    browser = playwright.chromium.launch(headless=True)
    context = browser.new_context()
    # Add HTTP headers
    context.set_extra_http_headers({
        # Modify language, currency and location values
        "Accept-Language": "de",
        "X-Detected-Locale": "EUR|de|DE"
    })
    page = context.new_page()
    page.goto('https://www.etsy.com/listing/1282382525/laptop-vertical-stand-vertical-laptop')
    page_content = page.content()

# Parse the rendered HTML with CSS selectors
selector = Selector(text=page_content)
data = {
    "specs": selector.css('#listing-page-cart h1::text').get().strip(),
    "description": ' '.join(selector.css('#wt-content-toggle-product-details-read-more p::text').getall()).strip(),
    "price": ''.join(selector.css('[data-selector=price-only] p::text').getall()).strip(),
}
print(data)

# HTTPX
from httpx import Client
from parsel import Selector

headers = {
    "Accept-Language": "de",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36",
    "X-Detected-Locale": "EUR|de|DE"
}

with Client(headers=headers, follow_redirects=True) as client:
    response = client.get('https://www.etsy.com/listing/1282382525/laptop-vertical-stand-vertical-laptop')
    assert response.status_code == 200, "request has been blocked"

# Parse the returned HTML with CSS selectors
selector = Selector(text=response.text)
data = {
    "specs": selector.css('#listing-page-cart h1::text').get().strip(),
    "description": ' '.join(selector.css('#wt-content-toggle-product-details-read-more p::text').getall()).strip(),
    "price": ''.join(selector.css('[data-selector=price-only] p::text').getall()).strip(),
}
print(data)

# Scrapfly SDK
from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse

scrapfly = ScrapflyClient(key="YOUR SCRAPFLY KEY")
api_response: ScrapeApiResponse = scrapfly.scrape(scrape_config=ScrapeConfig(
    url='https://www.etsy.com/listing/1282382525/laptop-vertical-stand-vertical-laptop',
    headers={
        "Accept-Language": "de",
        "X-Detected-Locale": "EUR|de|DE"
    },
    render_js=True,  # enable headless browsers (like playwright)
    asp=True,  # enable scraper blocking bypass
))

# Parse the returned HTML with CSS selectors
selector = api_response.selector
data = {
    "specs": selector.css('#listing-page-cart h1::text').get().strip(),
    "description": ' '.join(selector.css('#wt-content-toggle-product-details-read-more p::text').getall()).strip(),
    "price": ''.join(selector.css('[data-selector=price-only] p::text').getall()).strip(),
}
print(data)

Example Output

[

{

"specs": "Laptop St\u00e4nder - Vertikale Laptop Halter - Schwarze Nussbaum Holz Laptop Dock f\u00fcr MacBook - Holz Schreibtisch Zubeh\u00f6r f\u00fcr Home Office Dekor",

"description": "INDIVIDUALISIERUNG BIS 15.02.2023 NICHT VERF\u00dcGBAR Auf der Suche nach einer Laptophalterung? Soll er aufrecht und stabil sitzen? Du m\u00f6chtest es individualisieren k\u00f6nnen? Suchen Sie nicht weiter, wir haben die passende Laptophalterung f\u00fcr Sie! Wir m\u00fcssen Ihnen nicht sagen, dass das Dock Ihren Laptop f\u00fcr einen saubereren, unordnungsfreien Arbeitsplatz oder Schreibtischeinrichtung mit Monitor erh\u00f6ht. Oder dass es eine innovative Mikro-Saugleiste zum bequemen An- und Ausdocken gibt. Was Sie wissen m\u00fcssen, ist, dass es genial entwickelt ist, um alle Laptopbreiten (1,7cm / 0,67\" max) zu akzeptieren und deshalb wird Ihr Laptop stabil und perfekt gerade positioniert! Nat\u00fcrlich haben wir amerikanisches schwarzes Walnussholz verwendet, eines der wertvollsten und gesch\u00e4tzten H\u00f6lzer der Welt, um ein Dock zu schaffen, das Ihren Laptop halten wird! Wenn Sie Ihren Laptop also zu 100% stabil tragen m\u00f6chten, bestellen Sie jetzt Ihren anpassbaren Laptophalter! PS: Die personalisierte Gravur ist eine tolle Idee f\u00fcr ein ganz pers\u00f6nliches Geschenk!",

"price": "90,09 \u20ac"

}

]

The code above uses CSS selectors to scrape basic product data from the HTML, which should generate results similar to the example output above.

Success - changing these two headers allowed for web scraping in a different language and currency!

So, localizing using headers is a simple and effective method. However, it's not always available. Next, let's take a look at a different strategy - using cookies.

Changing Cookies

As with headers, many websites use cookies to store localization settings.

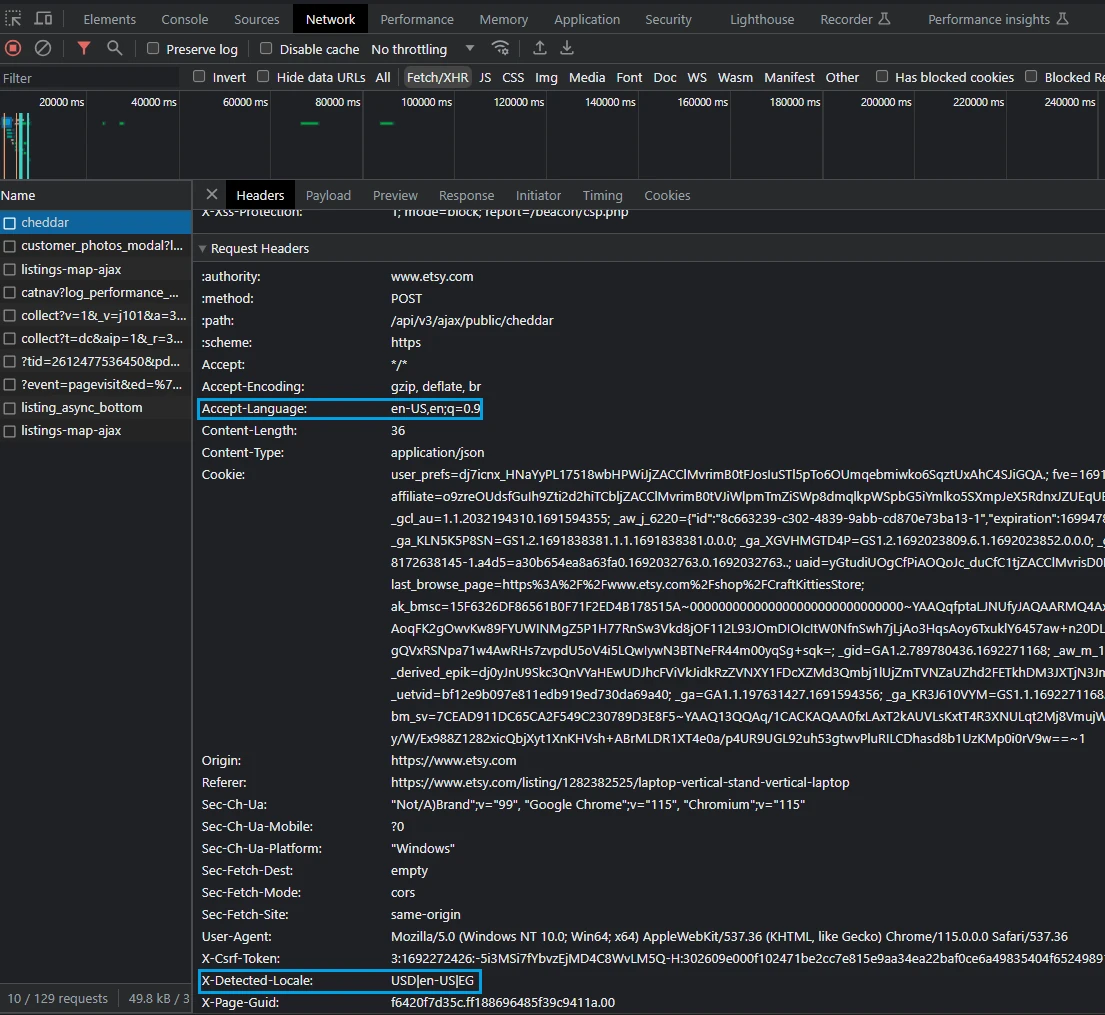

Our Etsy example doesn't store these settings in cookies, so let's take a look at a new example: the AWS homepage, which does use cookies for localization.

Unlike headers which are standard across many websites, cookies are implemented uniquely on each website. For that, we need to investigate cookie behavior ourselves.
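Part of this investigation can be scripted. The sketch below scans Set-Cookie header values for cookie names that look locale-related. The function name find_locale_cookies, the list of name hints and the sample header values are all assumptions made for illustration, and a name-based heuristic like this can easily miss cookies with opaque names.

```python
from http.cookies import SimpleCookie

# Hypothetical substrings that often appear in locale-related cookie names
LOCALE_HINTS = ("lang", "locale", "currency", "country", "region")


def find_locale_cookies(set_cookie_headers):
    """Return {name: value} for cookies whose name hints at localization."""
    found = {}
    for header in set_cookie_headers:
        cookie = SimpleCookie()
        cookie.load(header)  # parse a single Set-Cookie header value
        for name, morsel in cookie.items():
            if any(hint in name.lower() for hint in LOCALE_HINTS):
                found[name] = morsel.value
    return found


# Made-up sample values in the shape of real Set-Cookie headers
sample_headers = [
    "aws_lang=en; Domain=.amazon.com; Path=/",
    "session-id=abc123; Path=/; Secure",
    "currency=USD; Path=/",
]
print(find_locale_cookies(sample_headers))
```

With httpx, the raw values can be collected from a response using response.headers.get_list("set-cookie") and passed to this function.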

We'll be using the Browser Developer Tools again. First, open them by pressing the (F12) key and select the Application tab. The Cookies section can be found in the left sidebar:

It seems quite obvious that the aws_lang cookie is responsible for setting the website language. Let's try changing it by double-clicking the value and changing it to something else, like fr for French. If we reload the page with this new cookie value, we can see success - the website is being served in French now!

So, to replicate this in our web scraper, all we have to do is add the aws_lang cookie with the desired language value. Here's how we can do it in Playwright, httpx and Scrapfly:

# Playwright
from playwright.sync_api import sync_playwright

with sync_playwright() as playwright:
    browser = playwright.chromium.launch(headless=True)
    context = browser.new_context()
    # Modify the aws_lang cookie
    context.add_cookies(
        [{"name": "aws_lang", "value": "de", "domain": ".amazon.com", "path": "/"}]
    )
    page = context.new_page()
    page.goto('https://aws.amazon.com')
    title = page.query_selector("h1").inner_text()
    print(title)
    # 'Beginnen Sie heute die Erstellung mit AWS'

# HTTPX
from httpx import Client
from parsel import Selector

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36",
}
# Modify the aws_lang cookie
cookies = {'aws_lang': 'de'}

with Client(headers=headers, cookies=cookies, follow_redirects=True) as client:
    response = client.get('https://aws.amazon.com/')
    assert response.status_code == 200, "request has been blocked"

selector = Selector(text=response.text)
title = selector.css('h1::text').get()
print(title)
# 'Beginnen Sie heute die Erstellung mit AWS'

# Scrapfly SDK
from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse

scrapfly = ScrapflyClient(key='YOUR SCRAPFLY KEY')
api_response: ScrapeApiResponse = scrapfly.scrape(scrape_config=ScrapeConfig(
    url='https://aws.amazon.com',
    # Modify aws_lang cookie value
    cookies={'aws_lang': 'de'},
    # extra: save screenshots
    render_js=True,
    screenshots={
        'screenshot': 'fullpage'
    }
))

# api_response.selector is a parsel Selector, so we query it with CSS selectors
title = api_response.selector.css("h1::text").get()
print(title)
# 'Beginnen Sie heute die Erstellung mit AWS'

# Extra: Save screenshot
scrapfly.save_screenshot(api_response=api_response, name='screenshot')

Above we use cookies to configure locality of the web page through aws_lang value.

So far we've taken a look at headers and cookies for changing website locality but there's another option - using the URL itself. Let's take a look at it next.

Changing URLs

Many websites allow for changing localization settings using URLs in different ways. The most common way works by adding the language or country code to the url itself. For example, a website can support German localization by adding the German country code to the URL path (e.g. www.example.com/de) or URL parameters (e.g. www.example.com/?lang=de).
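Both URL variants can be generated programmatically instead of editing strings by hand. Here is a minimal sketch using only the standard library. The helper names with_path_prefix and with_query_param are hypothetical, and whether a given website honors either pattern has to be checked per site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_path_prefix(url, code):
    """Insert a language or country code as the first URL path segment."""
    parts = urlsplit(url)
    path = f"/{code}/{parts.path.lstrip('/')}"
    return urlunsplit(parts._replace(path=path))


def with_query_param(url, name, value):
    """Set a query parameter (e.g. lang=de) while keeping existing ones."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query[name] = value
    return urlunsplit(parts._replace(query=urlencode(query)))


print(with_path_prefix("https://www.example.com/products/42", "de"))
# https://www.example.com/de/products/42
print(with_query_param("https://www.example.com/?page=2", "lang", "de"))
# https://www.example.com/?page=2&lang=de
```

Parsing the URL first avoids common mistakes such as doubled slashes or clobbering an existing query string.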

Let's try to apply this method to our Etsy target. All we have to do is add the language code as the first segment of the URL path, right after the domain name:

# Playwright
from playwright.sync_api import sync_playwright
from parsel import Selector

with sync_playwright() as playwright:
    # Launch a headless Chromium browser
    browser = playwright.chromium.launch(headless=True)
    context = browser.new_context()
    page = context.new_page()
    # instead of the old localization-unaware URL:
    # page.goto('https://www.etsy.com/listing/1282382525/laptop-vertical-stand-vertical-laptop')
    # let's use the localized URL (note the /de/ prefix in the path):
    page.goto('https://www.etsy.com/de/listing/1282382525/laptop-vertical-stand-vertical-laptop')
    page_content = page.content()

selector = Selector(text=page_content)
title = selector.css('h1::text').get()
print(title)
# 'Laptop Ständer - Vertikale Laptop Halter - Schwarze Nussbaum Holz Laptop Dock für MacBook - Holz Schreibtisch Zubehör für Home Office Dekor'

# HTTPX
from httpx import Client
from parsel import Selector

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36",
}

with Client(headers=headers, follow_redirects=True) as client:
    # note the /de/ prefix in the URL path
    response = client.get('https://www.etsy.com/de/listing/1282382525/laptop-vertical-stand-vertical-laptop')
    assert response.status_code == 200, "request has been blocked"

selector = Selector(text=response.text)
title = selector.css('h1::text').get()
print(title)
# 'Laptop Ständer - Vertikale Laptop Halter - Schwarze Nussbaum Holz Laptop Dock für MacBook - Holz Schreibtisch Zubehör für Home Office Dekor'

# Scrapfly SDK
from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse

scrapfly = ScrapflyClient(key='YOUR SCRAPFLY KEY')
api_response: ScrapeApiResponse = scrapfly.scrape(scrape_config=ScrapeConfig(
    # note the /de/ prefix in the URL path
    url='https://www.etsy.com/de/listing/1282382525/laptop-vertical-stand-vertical-laptop',
))

title = api_response.selector.css("h1::text").get()
print(title)
# 'Laptop Ständer - Vertikale Laptop Halter - Schwarze Nussbaum Holz Laptop Dock für MacBook - Holz Schreibtisch Zubehör für Home Office Dekor'

With this approach, all we've done is change the global Etsy URL to a localized one by prefixing /de/ to the initial URL path: etsy.com/listing... -> etsy.com/de/listing...

Although this web scraping localization method is straightforward, websites don't always support it. Let's move on to the last and easiest strategy - IP address location.

Changing Location

Every web request is associated with an IP address, which represents the location address of the request sender. Websites use this location to determine which localization to apply. For example, if the IP address is located in Germany, the website will assume the connecting user requires German localization.

To apply this method, we need to use a proxy to change the IP address location. Scrapfly is a web scraping API solution that uses millions of residential proxies from 50+ countries.

With Scrapfly, we can set proxies to a specific country, which is the easiest way to apply localization settings. It also supports JavaScript rendering, which allows for scraping dynamic websites like Etsy.

To apply web scraping localization using Scrapfly, all we need to do is select an appropriate proxy:

from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse
from parsel import Selector

scrapfly = ScrapflyClient(key='Your Scrapfly API key')

# Scrape the page content
api_response: ScrapeApiResponse = scrapfly.scrape(scrape_config=ScrapeConfig(
    url='https://www.etsy.com/listing/1282382525/laptop-vertical-stand-vertical-laptop',
    # Enable the JavaScript rendering feature
    render_js=True,
    # Set the proxies to a specific country:
    country="DE"  # or US, FR, GB, etc.

))
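The same idea can be applied with self-managed proxies. As a rough sketch, the snippet below picks a proxy by country and builds a client that routes traffic through it. It deliberately uses the standard library's urllib instead of the tools above so that it does not depend on any particular client version. The proxy URLs, the PROXIES_BY_COUNTRY mapping and the opener_for_country helper are all hypothetical placeholders.

```python
import urllib.request

# Hypothetical proxy endpoints keyed by country code - replace with real ones
PROXIES_BY_COUNTRY = {
    "DE": "http://user:pass@de.proxy.example.com:8000",
    "FR": "http://user:pass@fr.proxy.example.com:8000",
}


def opener_for_country(country):
    """Build a urllib opener that sends requests through the country's proxy."""
    proxy_url = PROXIES_BY_COUNTRY[country.upper()]  # KeyError if unsupported
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = opener_for_country("de")
# opener.open(url) would now send the request through the German proxy,
# so an IP-based website would apply German localization
```

Keeping the country-to-proxy mapping in one place makes it easy to scrape the same URL from several regions and compare the localized results.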

FAQs

Is web scraping localization available on all websites?

Unfortunately, there's no single standard for localization and not every website supports it. Each website has to be explored individually to determine which localization settings are available.

How can I scrape in another language, currency or location?

As this article covers, localization is configured through request headers, cookies or URL parameters. These are the most commonly used configuration methods, though local storage can also be used in JavaScript web applications.

What is the Accept-Language header?

The Accept-Language header represents the locale language of a user. This allows websites to change their language based on this header value, though this header is not always supported or respected.
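Because the header is not always respected, it helps to verify which language the server actually returned. A minimal sketch, using only the standard library, is to check the Content-Language response header and fall back to the lang attribute of the html tag. The function name detect_page_language is hypothetical, and not every website sets either of these signals.

```python
from html.parser import HTMLParser


class _HtmlLangParser(HTMLParser):
    """Records the lang attribute of the first <html> tag."""

    def __init__(self):
        super().__init__()
        self.lang = None

    def handle_starttag(self, tag, attrs):
        if tag == "html" and self.lang is None:
            self.lang = dict(attrs).get("lang")


def detect_page_language(html, headers=None):
    """Return the declared page language in lowercase, or None if undeclared."""
    normalized = {key.lower(): value for key, value in (headers or {}).items()}
    if "content-language" in normalized:
        # the header may list several languages; take the first one
        return normalized["content-language"].split(",")[0].strip().lower()
    parser = _HtmlLangParser()
    parser.feed(html)
    return parser.lang.lower() if parser.lang else None


print(detect_page_language('<html lang="de-DE"><body></body></html>'))
```

In a scraper, this check could run on every response, for example as detect_page_language(response.text, dict(response.headers)) with httpx, to flag pages that fell back to the default language.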

What is website localization?

Website localization means adapting the website content to meet the preferences and culture of local users. It searches for these preferences in different places to change factors like language and currency for specific groups of users.

How do I determine which localization method a website uses?

Use browser developer tools (F12) to inspect network requests, cookies, and URL patterns. Check for headers like Accept-Language, cookies with language/locale values, or URL parameters like ?lang=en or country codes in the path.

Can I use proxies to change website localization automatically?

Yes, using proxies from specific countries can automatically trigger localization based on IP geolocation. This is often the easiest method as websites automatically detect the proxy's country and apply appropriate language, currency, and regional settings.

What's the difference between localization headers, cookies, and URL parameters?

Headers (like Accept-Language) are standard HTTP mechanisms, cookies store user preferences persistently, and URL parameters provide immediate control. Each method has different levels of support and persistence across websites.

Localization in Web Scraping - Summary

Web scraping localization is usually achieved by applying different website preferences through cookies, headers or the URL itself. This allows for scraping content in different languages, currencies, pricing and other location-based data points.

In this tutorial, we've explored examples of how localization is applied through request headers, cookies and URL patterns. We covered each strategy using Etsy.com and AWS examples in Playwright, HTTPX and Scrapfly SDK.

The easiest strategy is to use proxy IP addresses to set the locality. For this, we used the Scrapfly web scraping API, which offers IP addresses from 50+ countries.