One of the sneakiest and least-known ways of detecting and fingerprinting web scraper traffic is Transport Layer Security (TLS) analysis. Every HTTPS connection has to establish a secure handshake, and the way this handshake is performed can be used to fingerprint clients and block web scrapers.

In this article, we'll take a look at how TLS can leak the fact that the connecting client is a web scraper, and how it can be used to establish a fingerprint that tracks the client across the web.

Key Takeaways

Bypass TLS fingerprint detection by using browser automation tools and custom TLS configurations that mimic real browser handshakes to avoid scraper identification.

- Use browser automation tools like Selenium, Playwright, and Puppeteer for authentic TLS fingerprints

- Implement JA3 fingerprinting analysis to understand and modify TLS handshake characteristics

- Configure custom TLS settings including cipher suites, extensions, and protocol versions

- Upgrade to TLS 1.3 for better protection against fingerprinting compared to TLS 1.2

- Use specialized libraries like utls, CycleTLS, and curl-impersonate for TLS fingerprint spoofing

- Leverage proxy services with TLS fingerprint rotation and spoofing capabilities

What Is TLS?

Transport Layer Security is what powers all HTTPS connections. It's what allows end-to-end encrypted communication between the client and the server.

In the context of web scraping, we rarely care whether the website uses HTTP or HTTPS connections, as that doesn't affect our data collection logic. However, an emerging fingerprinting technology is targeting this connection step, not only to fingerprint users for tracking but also to block web scrapers.

TLS Fingerprinting

TLS is a rather complicated protocol, and we don't need to understand all of it to identify our problem, though some basics will help. Let's take a quick TLS overview so we can understand how it can be used in fingerprinting.

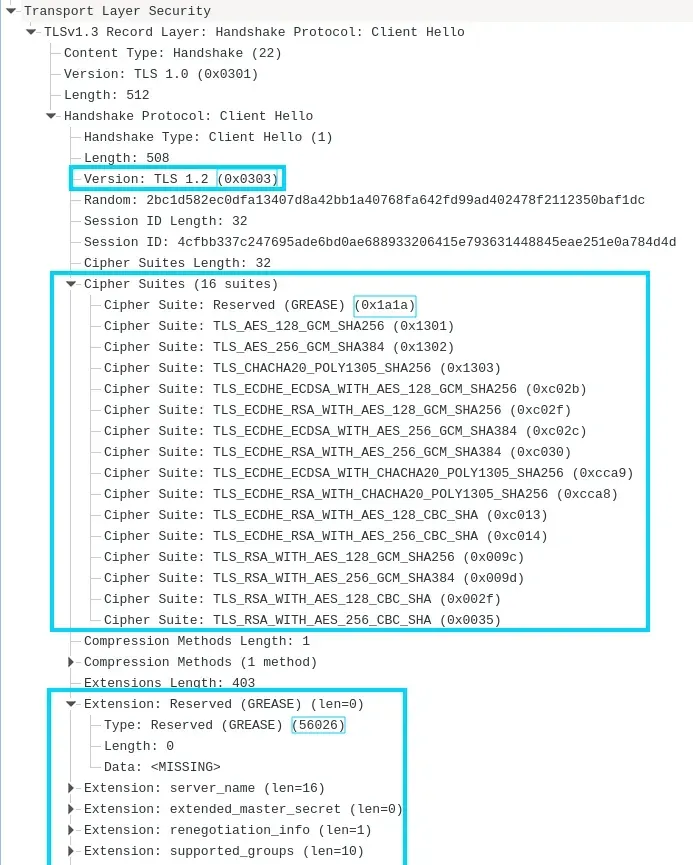

At the beginning of every HTTPS connection, the client and the server need to greet each other and negotiate how the connection will be secured. This first greeting from the client is called the "Client Hello". Data-wise, it looks something like this:

There's a lot of data here, and this is where the spot-the-difference game begins: which values of this handshake can vary between HTTP clients such as web browsers or programming libraries?

The first thing to note is that there are multiple TLS Versions: usually it's either 1.2 or 1.3 (the latest one).

This version determines the rest of the data used in the handshake. TLS 1.3 provides extra optimizations and sends less data, so it's easier to fortify. But whether it's 1.2 or 1.3, our goal remains the same: make the handshake look like a real web browser's. In reality, we have to fortify multiple versions, as some websites do not support TLS 1.3 yet.
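On the wire, the version is a two-byte code, and fingerprints such as JA3 (covered below) record it in decimal. The small lookup below makes that mapping explicit. One detail worth knowing: per RFC 8446, a TLS 1.3 Client Hello still sets its legacy version field to 0x0303 (TLS 1.2) and announces TLS 1.3 in the supported_versions extension instead. The table and the describe_version helper are our own illustration.

```python
# Two-byte protocol version codes as they appear in the handshake
TLS_VERSIONS = {
    0x0300: "SSL 3.0",
    0x0301: "TLS 1.0",
    0x0302: "TLS 1.1",
    0x0303: "TLS 1.2",
    0x0304: "TLS 1.3",
}


def describe_version(code: int) -> str:
    """Return 'name (decimal)' for a version code, e.g. 0x0303 -> 'TLS 1.2 (771)'."""
    name = TLS_VERSIONS.get(code, "unknown")
    return f"{name} ({code})"


# A TLS 1.3 capable client still sends 0x0303 in the legacy version field,
# which is why fingerprints of modern browsers usually begin with 771.
print(describe_version(0x0303))  # TLS 1.2 (771)
print(describe_version(0x0304))  # TLS 1.3 (772)
```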

Further, we have the most important field: Cipher Suites.

This field is a list of the encryption algorithms the client supports. The list is ordered by priority, and both parties settle on the first matching value.

So, we must ensure that our HTTP client's list matches that of a common web browser, including the order.

Similarly to the list of Cipher Suites, we have the list of Enabled Extensions.

These extensions signify features the client supports, plus some metadata like the server domain name. Just like with Cipher Suites, we need to ensure these values and their order match those of a common web browser.
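To see why order matters and not just membership, here is a minimal sketch that serializes a cipher list as dash-separated decimal IDs (the form JA3, described below, uses) for two clients offering the same three TLS 1.3 suites. The two orders are taken from the Chrome and requests handshakes shown later in this article; the ja3_field helper is our own.

```python
def ja3_field(values: list[int]) -> str:
    """Join values as decimal numbers separated by '-'."""
    return "-".join(str(v) for v in values)


# Same three suites (0x1301, 0x1302, 0x1303), different priority order
browser_like = [0x1301, 0x1302, 0x1303]  # order seen in Chrome
library_like = [0x1302, 0x1303, 0x1301]  # order seen in Python requests

print(ja3_field(browser_like))  # 4865-4866-4867
print(ja3_field(library_like))  # 4866-4867-4865
print(set(browser_like) == set(library_like))  # True: same suites...
print(ja3_field(browser_like) == ja3_field(library_like))  # False: ...different fingerprint
```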

JA3 Fingerprint

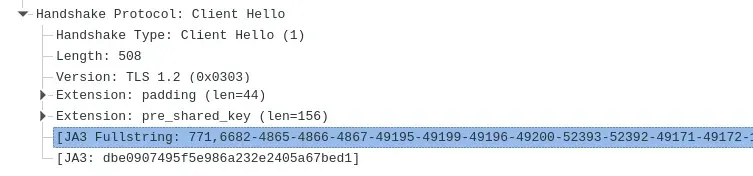

As we can see, several of these values can vary vastly across clients. To capture them, the JA3 fingerprinting technique is often used, which essentially joins the varying values into a single string:

TLSVersion,Ciphers,Extensions,SupportedGroups,EllipticCurvePointFormats

Here SupportedGroups was previously called EllipticCurves. Fields are separated by a comma (,) and the values inside a list field are separated by a dash (-).
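The layout above can be sketched as a small Python function that assembles the five fields and MD5-hashes the result (hashing is discussed further below). This is a minimal illustration of the string format, not the reference JA3 implementation. The function and parameter names are our own assumptions.

```python
import hashlib


def build_ja3(version: int, ciphers: list[int], extensions: list[int],
              groups: list[int], point_formats: list[int]) -> str:
    """Assemble a JA3 string: fields joined by ',', list items joined by '-'."""
    fields = [
        str(version),
        "-".join(map(str, ciphers)),
        "-".join(map(str, extensions)),
        "-".join(map(str, groups)),
        "-".join(map(str, point_formats)),
    ]
    return ",".join(fields)


def ja3_digest(ja3: str) -> str:
    """The short form of a JA3 fingerprint is the MD5 hex digest of the full string."""
    return hashlib.md5(ja3.encode()).hexdigest()


# Illustrative values: TLS 1.2 version code, two suites, three extensions, two groups
ja3 = build_ja3(0x0303, [0x1301, 0x1302], [0, 23, 65281], [0x001d, 0x0017], [0])
print(ja3)              # 771,4865-4866,0-23-65281,29-23,0
print(ja3_digest(ja3))  # 32-character hex digest
```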

For example, this Client Hello profile of the Chrome web browser on Linux:

```
Handshake Type: Client Hello (1)
Length: 508
Version: TLS 1.2 (0x0303)                        <- JA3 field 1 (0x0303 is hex for 771)
Cipher Suites Length: 32
Cipher Suites (16 suites)                        <- JA3 field 2
    Cipher Suite: Reserved (GREASE) (0x1a1a)
    Cipher Suite: TLS_AES_128_GCM_SHA256 (0x1301)
    Cipher Suite: TLS_AES_256_GCM_SHA384 (0x1302)
    Cipher Suite: TLS_CHACHA20_POLY1305_SHA256 (0x1303)
    Cipher Suite: TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256 (0xc02b)
    Cipher Suite: TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256 (0xc02f)
    Cipher Suite: TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384 (0xc02c)
    Cipher Suite: TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 (0xc030)
    Cipher Suite: TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305_SHA256 (0xcca9)
    Cipher Suite: TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305_SHA256 (0xcca8)
    Cipher Suite: TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA (0xc013)
    Cipher Suite: TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA (0xc014)
    Cipher Suite: TLS_RSA_WITH_AES_128_GCM_SHA256 (0x009c)
    Cipher Suite: TLS_RSA_WITH_AES_256_GCM_SHA384 (0x009d)
    Cipher Suite: TLS_RSA_WITH_AES_128_CBC_SHA (0x002f)
    Cipher Suite: TLS_RSA_WITH_AES_256_CBC_SHA (0x0035)
Extensions Length: 403                           <- JA3 field 3 (extension types, in order)
    Extension: Reserved (GREASE) (len=0)
        Type: Reserved (GREASE) (56026)
    Extension: server_name (len=16)
        Type: server_name (0)
    Extension: extended_master_secret (len=0)
        Type: extended_master_secret (23)
    Extension: renegotiation_info (len=1)
        Type: renegotiation_info (65281)
    Extension: supported_groups (len=10)
        Type: supported_groups (10)
        Supported Groups (4 groups)              <- JA3 field 4
            Supported Group: Reserved (GREASE) (0x7a7a)
            Supported Group: x25519 (0x001d)
            Supported Group: secp256r1 (0x0017)
            Supported Group: secp384r1 (0x0018)
    Extension: ec_point_formats (len=2)
        Type: ec_point_formats (11)
        Elliptic curves point formats (1)        <- JA3 field 5
            EC point format: uncompressed (0)
    Extension: session_ticket (len=0)
        Type: session_ticket (35)
    Extension: application_layer_protocol_negotiation (len=14)
        Type: application_layer_protocol_negotiation (16)
    Extension: status_request (len=5)
        Type: status_request (5)
    Extension: signature_algorithms (len=18)
        Type: signature_algorithms (13)
    Extension: signed_certificate_timestamp (len=0)
        Type: signed_certificate_timestamp (18)
    Extension: key_share (len=43)
        Type: key_share (51)
    Extension: psk_key_exchange_modes (len=2)
        Type: psk_key_exchange_modes (45)
    Extension: supported_versions (len=7)
        Type: supported_versions (43)
    Extension: compress_certificate (len=3)
        Type: compress_certificate (27)
    Extension: application_settings (len=5)
        Type: application_settings (17513)
    Extension: Reserved (GREASE) (len=1)
        Type: Reserved (GREASE) (27242)
    Extension: padding (len=44)
        Type: padding (21)
    Extension: pre_shared_key (len=156)
        Type: pre_shared_key (41)
```

Would yield a fingerprint of:

771,6682-4865-4866-4867-49195-49199-49196-49200-52393-52392-49171-49172-156-157-47-53,56026-0-23-65281-10-11-35-16-5-13-18-51-45-43-27-17513-27242-21-41,31354-29-23-24,0

JA3 fingerprints are often further MD5-hashed to reduce the fingerprint length:

dbe0907495f5e986a232e2405a67bed1
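One caveat about the Chrome string above: it keeps the GREASE values (6682 = 0x1a1a, 56026, 27242 and 31354 = 0x7a7a). GREASE values (RFC 8701) are reserved placeholders that Chrome can vary between connections, and the reference JA3 implementation skips them so the fingerprint stays stable. A minimal sketch of that filtering, applied to the Chrome string from this article, might look like this (the helper names are our own):

```python
import hashlib


def is_grease(value: int) -> bool:
    """RFC 8701 GREASE values have both bytes equal and of the form 0x?A (0x0A0A ... 0xFAFA)."""
    return (value >> 8) == (value & 0xFF) and (value & 0x0F) == 0x0A


def strip_grease(ja3: str) -> str:
    """Drop GREASE values from every list field of a JA3 string."""
    fields = ja3.split(",")
    cleaned = [fields[0]]  # the version field is a single number
    for field in fields[1:]:
        kept = [v for v in field.split("-") if v and not is_grease(int(v))]
        cleaned.append("-".join(kept))
    return ",".join(cleaned)


CHROME_JA3 = (
    "771,"
    "6682-4865-4866-4867-49195-49199-49196-49200-52393-52392-49171-49172-156-157-47-53,"
    "56026-0-23-65281-10-11-35-16-5-13-18-51-45-43-27-17513-27242-21-41,"
    "31354-29-23-24,"
    "0"
)

stable = strip_grease(CHROME_JA3)
print(stable)
print(hashlib.md5(stable.encode()).hexdigest())  # digest of the GREASE-free string
```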

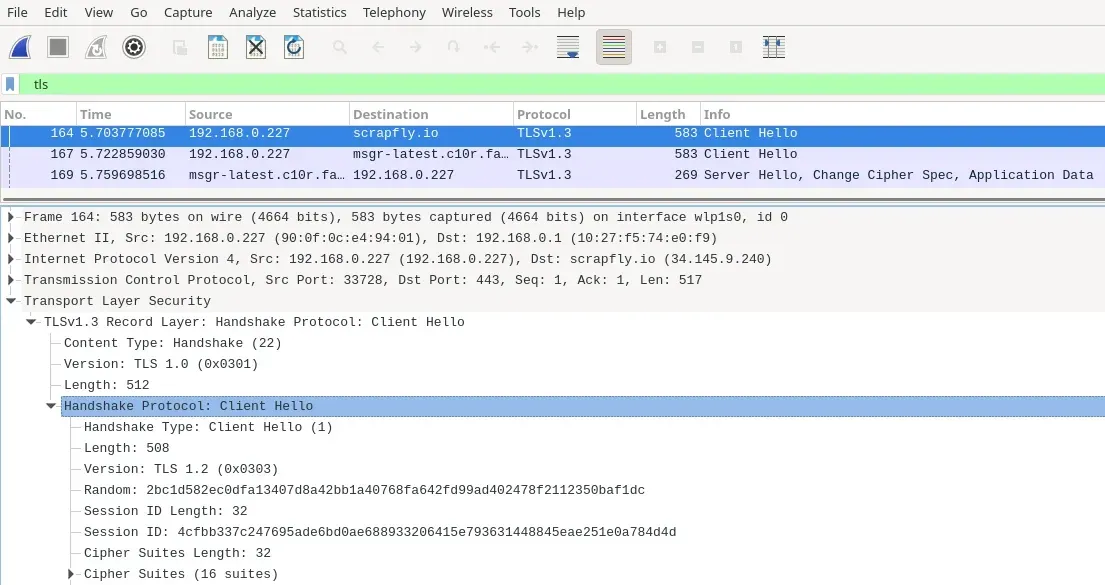

How To Read TLS Data?

The most common way to observe TLS handshakes is the Wireshark packet analyzer.

Using the "tls" display filter, we can easily observe the TLS handshake when we submit a request from a web browser or a web scraper script. Look for the "Client Hello" message, which is the first step of the handshake.

Wireshark even calculates the JA3 fingerprint for you.
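Under the hood, the calculation only needs a handful of Client Hello fields. The sketch below parses raw Client Hello bytes following the layout in RFC 8446 and builds the JA3 string, keeping GREASE values like the Chrome example above. It assumes it receives one complete TLS record containing the whole Client Hello (no fragmentation or reassembly) and does only minimal validation. The function names and the synthetic sample are our own illustration.

```python
import struct


def ja3_from_client_hello(record: bytes) -> str:
    """Compute a JA3 string from one complete TLS record carrying a Client Hello."""
    if record[0] != 0x16:  # content type 22 = handshake
        raise ValueError("not a TLS handshake record")
    pos = 5  # skip content type (1), record version (2), record length (2)
    if record[pos] != 0x01:  # handshake type 1 = client_hello
        raise ValueError("not a Client Hello")
    pos += 4  # skip handshake type (1) and 3-byte handshake length

    (version,) = struct.unpack_from("!H", record, pos)  # legacy client version
    pos += 2 + 32  # version + 32-byte random
    pos += 1 + record[pos]  # session id length + session id

    (cipher_len,) = struct.unpack_from("!H", record, pos)
    ciphers = list(struct.unpack_from(f"!{cipher_len // 2}H", record, pos + 2))
    pos += 2 + cipher_len
    pos += 1 + record[pos]  # compression methods length + methods

    extensions, groups, point_formats = [], [], []
    if pos < len(record):  # the extensions block is optional
        (ext_total,) = struct.unpack_from("!H", record, pos)
        pos += 2
        end = pos + ext_total
        while pos < end:
            ext_type, ext_len = struct.unpack_from("!HH", record, pos)
            pos += 4
            data = record[pos:pos + ext_len]
            extensions.append(ext_type)
            if ext_type == 10 and ext_len >= 2:  # supported_groups: 2-byte length, 2-byte ids
                (list_len,) = struct.unpack_from("!H", data, 0)
                groups = list(struct.unpack_from(f"!{list_len // 2}H", data, 2))
            elif ext_type == 11 and ext_len >= 1:  # ec_point_formats: 1-byte length, 1-byte ids
                point_formats = list(data[1:1 + data[0]])
            pos += ext_len

    def join(values):
        return "-".join(str(v) for v in values)

    return ",".join([str(version), join(ciphers), join(extensions), join(groups), join(point_formats)])


# Usage: a tiny synthetic Client Hello (hypothetical values, not a real browser)
def make_client_hello(ciphers, groups, point_formats):
    body = struct.pack("!H", 0x0303) + bytes(32)  # legacy version + zeroed random
    body += b"\x00"  # empty session id
    body += struct.pack(f"!H{len(ciphers)}H", 2 * len(ciphers), *ciphers)
    body += b"\x01\x00"  # one compression method: null
    group_data = struct.pack(f"!H{len(groups)}H", 2 * len(groups), *groups)
    point_data = bytes([len(point_formats), *point_formats])
    exts = struct.pack("!HH", 10, len(group_data)) + group_data
    exts += struct.pack("!HH", 11, len(point_data)) + point_data
    body += struct.pack("!H", len(exts)) + exts
    handshake = b"\x01" + len(body).to_bytes(3, "big") + body
    return b"\x16\x03\x01" + struct.pack("!H", len(handshake)) + handshake


sample = make_client_hello([0x1301, 0xc02b], [0x001d, 0x0017], [0])
print(ja3_from_client_hello(sample))  # 771,4865-49195,10-11,29-23,0
```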

To test JA3 fingerprints, we made the publicly available ScrapFly JA3 tool, which makes it easy to test HTTP client fingerprints.

For example, these are the results for Python's requests library:

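```python
import json

import requests

# Ask the fingerprint endpoint what our TLS handshake looked like
response = requests.get("https://tools.scrapfly.io/api/fp/ja3?extended=1")
print(json.dumps(response.json(), indent=2))
```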

```json
{
  "digest": "8d9f7747675e24454cd9b7ed35c58707",
  "ja3": "771,4866-4867-4865-49196-49200-49195-49199-52393-52392-159-158-52394-49327-49325-49326-49324-49188-49192-49187-49191-49162-49172-49161-49171-49315-49311-49314-49310-107-103-57-51-157-156-49313-49309-49312-49308-61-60-53-47-255,0-11-10-16-22-23-49-13-43-45-51-21,29-23-30-25-24,0-1-2",
  "tls": {
    "version": "0x303 - TLS 1.2",
    "ciphers": [
      "TLS_AES_256_GCM_SHA384",
      "TLS_CHACHA20_POLY1305_SHA256",
      "TLS_AES_128_GCM_SHA256",
      "TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384",
      "..."
    ],
    "curves": [
      "X25519 (29)",
      "secp256r1 (23)",
      "X448 (30)",
      "secp521r1 (25)",
      "secp384r1 (24)"
    ],
    "extensions": [
      "server_name (0) (IANA)",
      "ec_point_formats (11) (IANA)",
      "supported_groups (10) (IANA)",
      "application_layer_protocol_negotiation (16) (IANA)",
      "encrypt_then_mac (22) (IANA)",
      "extended_master_secret (23) (IANA)",
      "post_handshake_auth (49) (IANA)",
      "signature_algorithms (13) (IANA)",
      "supported_versions (43) (IANA)",
      "psk_key_exchange_modes (45) (IANA)",
      "key_share (51) (IANA)",
      "padding (21) (IANA)"
    ],
    "points": [
      "0",
      "1",
      "2"
    ],
    "protocols": [
      "http/1.1"
    ],
    "versions": [
      "0x303 - TLS 1.2",
      "0x302 - TLS 1.1",
      "0x301 - TLS 1.0",
      "0x300 - SSL 3.0"
    ]
  }
}
```

This doesn't look much like Chrome or Firefox: for example, there are no GREASE values and ALPN only offers http/1.1. This means Python web scrapers like this one would be quite easy to identify! Let's take a look at how we could remedy this.

How Does TLS Fingerprinting Lead To Blocking?

When it comes to blocking web scrapers, the main goal is spotting differences: is this client different from a general web browser?
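Spotting the difference can be automated by comparing two JA3 strings field by field. The sketch below compares the Chrome fingerprint and the Python requests fingerprint shown earlier in this article. The field labels and the diff_ja3 helper are our own naming.

```python
JA3_FIELDS = ["version", "ciphers", "extensions", "groups", "point_formats"]

CHROME_JA3 = (
    "771,"
    "6682-4865-4866-4867-49195-49199-49196-49200-52393-52392-49171-49172-156-157-47-53,"
    "56026-0-23-65281-10-11-35-16-5-13-18-51-45-43-27-17513-27242-21-41,"
    "31354-29-23-24,"
    "0"
)
REQUESTS_JA3 = (
    "771,"
    "4866-4867-4865-49196-49200-49195-49199-52393-52392-159-158-52394-49327-49325-49326-"
    "49324-49188-49192-49187-49191-49162-49172-49161-49171-49315-49311-49314-49310-107-"
    "103-57-51-157-156-49313-49309-49312-49308-61-60-53-47-255,"
    "0-11-10-16-22-23-49-13-43-45-51-21,"
    "29-23-30-25-24,"
    "0-1-2"
)


def diff_ja3(a: str, b: str) -> dict:
    """Report, per JA3 field, values unique to each side and whether only the order differs."""
    report = {}
    for name, fa, fb in zip(JA3_FIELDS, a.split(","), b.split(",")):
        va, vb = fa.split("-"), fb.split("-")
        if va == vb:
            continue  # identical field, including order
        report[name] = {
            "only_in_a": [v for v in va if v not in vb],
            "only_in_b": [v for v in vb if v not in va],
            "same_values_reordered": sorted(va) == sorted(vb),
        }
    return report


for field, details in diff_ja3(CHROME_JA3, REQUESTS_JA3).items():
    print(field, details)
```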

We can see that the JA3 fingerprint algorithm considers very few variables, meaning there are relatively few unique fingerprint possibilities, which makes it easy to create whitelist and blacklist databases.

There are many public JA3 fingerprint databases, such as ja3er.com and ja3.zone. These databases let you look up fingerprints and see counts by user agent string, which is useful for gathering some context before committing to fingerprint faking.

Anti-scraping services collect massive JA3 fingerprint databases, which are used to whitelist browser-like fingerprints and blacklist those of common web scraping tools. This means that to avoid blocking, we must ensure that our JA3 fingerprint is either whitelisted (matches a common web browser) or unique enough to stay off the blacklists.
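In its simplest form, such a lookup is a set membership test on the JA3 digest. The sketch below is a hypothetical illustration of the idea, not how any particular anti-bot service works: the whitelist and blacklist are tiny in-memory sets seeded with the two digests reported earlier in this article (Chrome on Linux and Python requests), whereas real services rely on far larger databases.

```python
import hashlib

# Hypothetical databases seeded with the two digests shown in this article
WHITELIST = {"dbe0907495f5e986a232e2405a67bed1"}  # Chrome on Linux
BLACKLIST = {"8d9f7747675e24454cd9b7ed35c58707"}  # Python requests


def ja3_digest(ja3: str) -> str:
    """MD5 hex digest of a full JA3 string."""
    return hashlib.md5(ja3.encode()).hexdigest()


def classify(digest: str) -> str:
    """Toy lookup policy: known browser, known scraper, or unseen fingerprint."""
    if digest in WHITELIST:
        return "allow"
    if digest in BLACKLIST:
        return "block"
    return "unknown"  # how unseen fingerprints are treated is policy-dependent


print(classify("dbe0907495f5e986a232e2405a67bed1"))  # allow
print(classify("8d9f7747675e24454cd9b7ed35c58707"))  # block
print(classify(ja3_digest("771,4865-4866,0-23,29-23,0")))  # unknown
```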

How To Fake TLS Fingerprint?

Unfortunately, configuring TLS spoofing is pretty complicated and not easily achievable in many scenarios. Nevertheless, let's take a look at some common cases.

TLS Fortification in Python

In Python, the standard ssl module only lets us configure a few handshake variables, mainly "Cipher Suite" and "TLS version", meaning every Python HTTP client built on it is vulnerable to TLS extension fingerprinting. We cannot achieve whitelisted fingerprints this way, but by spoofing these two variables we can at least avoid the blacklists:

Change Cipher Suite and TLS version in requests

```python
import ssl

import requests
from requests.adapters import HTTPAdapter
from urllib3.poolmanager import PoolManager
from urllib3.util.ssl_ import create_urllib3_context

# see "openssl ciphers" command for cipher names
CIPHERS = "ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384"


class TlsAdapter(HTTPAdapter):
    """Transport adapter that connects with a custom cipher list and TLS options."""

    def __init__(self, ssl_options=0, **kwargs):
        # must be set before super().__init__(), which calls init_poolmanager()
        self.ssl_options = ssl_options
        super().__init__(**kwargs)

    def init_poolmanager(self, *pool_args, **pool_kwargs):
        # SSL context with our cipher list; certificates are still verified
        ctx = create_urllib3_context(ciphers=CIPHERS, cert_reqs=ssl.CERT_REQUIRED, options=self.ssl_options)
        self.poolmanager = PoolManager(*pool_args, ssl_context=ctx, **pool_kwargs)


adapter = TlsAdapter(ssl.OP_NO_TLSv1 | ssl.OP_NO_TLSv1_1)  # disable TLS 1.0 and 1.1

default = requests.get("https://tools.scrapfly.io/api/fp/ja3?extended=1").json()

session = requests.Session()
session.mount("https://", adapter)
fixed = session.get("https://tools.scrapfly.io/api/fp/ja3?extended=1").json()

print('Default:')
print(default['tls']['ciphers'])
print(default['ja3'])

print('Patched:')
print(fixed['tls']['ciphers'])
print(fixed['ja3'])
```

Change Cipher Suite and TLS version in httpx

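```python
import ssl

import httpx

# Start from a default context so certificate verification stays enabled
ssl_ctx = ssl.create_default_context()
ssl_ctx.minimum_version = ssl.TLSVersion.TLSv1_2
ssl_ctx.maximum_version = ssl.TLSVersion.TLSv1_2  # restrict to TLS 1.2
# note: httpx configures ALPN on the context itself, so it is not set here

# see "openssl ciphers" command for cipher names; set_ciphers() only affects
# TLS 1.2 and older suites (TLS 1.3 suites cannot be changed this way).
# Both ECDSA and RSA variants are listed so servers with either certificate type work.
CIPHERS = "ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384"
ssl_ctx.set_ciphers(CIPHERS)

default = httpx.get("https://tools.scrapfly.io/api/fp/ja3?extended=1").json()
fixed = httpx.get("https://tools.scrapfly.io/api/fp/ja3?extended=1", verify=ssl_ctx).json()

print('Default:')
print(default['tls']['ciphers'])
print(default['ja3'])

print('Patched:')
print(fixed['tls']['ciphers'])
print(fixed['ja3'])
```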
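Before sending any traffic, it can help to check what a configured context will actually offer. Python's ssl.SSLContext.get_ciphers() returns the enabled suites in priority order, so the sketch below shows the effect of set_ciphers() offline. Since the Python documentation notes that TLS 1.3 suites cannot be disabled with set_ciphers(), we only look at the older suites. The cipher string is the one from the requests example. The configurable_ciphers helper is our own, and the exact default list depends on the local OpenSSL build.

```python
import ssl
from typing import List, Optional

# Same cipher string as the requests example above (OpenSSL names)
CIPHERS = "ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384"


def configurable_ciphers(cipher_string: Optional[str] = None) -> List[str]:
    """Names of pre-TLS 1.3 suites a default client context would offer, in priority order."""
    ctx = ssl.create_default_context()
    if cipher_string is not None:
        ctx.set_ciphers(cipher_string)
    # get_ciphers() also reports TLS 1.3 suites, which set_ciphers() cannot remove,
    # so filter them out to see only the part of the list we control
    return [c["name"] for c in ctx.get_ciphers() if c["protocol"] != "TLSv1.3"]


print("default:", configurable_ciphers())
print("patched:", configurable_ciphers(CIPHERS))
```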

TLS Fortification in Go

Go is one of the few languages with accessible TLS spoofing support, via libraries such as Refraction Networking's utls, CycleTLS and ja3transport.

LibCurl Based HTTP Clients

HTTP clients based on libcurl can be updated to use curl-impersonate, a modified version of the libcurl library that patches its TLS fingerprint to resemble that of a common web browser.

Here are a few libraries that use libcurl and can be patched this way:

- Typhoeus library for Ruby

- curl (default) and crul (community) libraries for R

- Guzzle library for PHP (when using its curl handler)

- PycURL library for Python

Headless Browser Based Scrapers

When we're scraping with Playwright, Puppeteer or Selenium, we're using real browsers, so we get genuine TLS fingerprints, which is great! That being said, when scraping at scale, using a diverse collection of browser and operating system versions can help spread connections across multiple fingerprints rather than one.

Do Headless Browsers Have Different Fingerprints?

No. Generally, running browsers in headless mode (be it Selenium, Playwright or Puppeteer) should not change the TLS fingerprint. This means JA3 and other TLS fingerprinting techniques cannot tell whether the connecting browser is headless or not.

ScrapFly - Making it Easy

TLS fingerprinting is a really powerful way of identifying bots and unusual HTTPS clients in general. We've taken a look at how JA3 fingerprinting works and how to handle and spoof it in web scraping.

As you can see, TLS is a really complex security protocol, which can be a huge time sink when it comes to fortifying web scrapers.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale. Each product is equipped with an automatic bypass for any anti-bot system and we achieve this by:

- Maintaining a fleet of real, reinforced web browsers with real fingerprint profiles.

- Millions of self-healing proxies of the highest possible trust score.

- Constantly evolving and adapting to new anti-bot systems.

- We've been doing this publicly since 2020 with the best bypass on the market!

ScrapFly supports JavaScript rendering via a fast and smart automated browser pool, anti-scraping protection bypass for accessing even the most well-guarded targets, residential proxies for accessing geographically restricted content and much more!