Webhook

Scrapfly's webhook feature is ideal for managing long-running scrape tasks asynchronously.

When a webhook is specified through the webhook_name parameter, Scrapfly calls your HTTP endpoint with the scrape response as soon as the scrape is done.

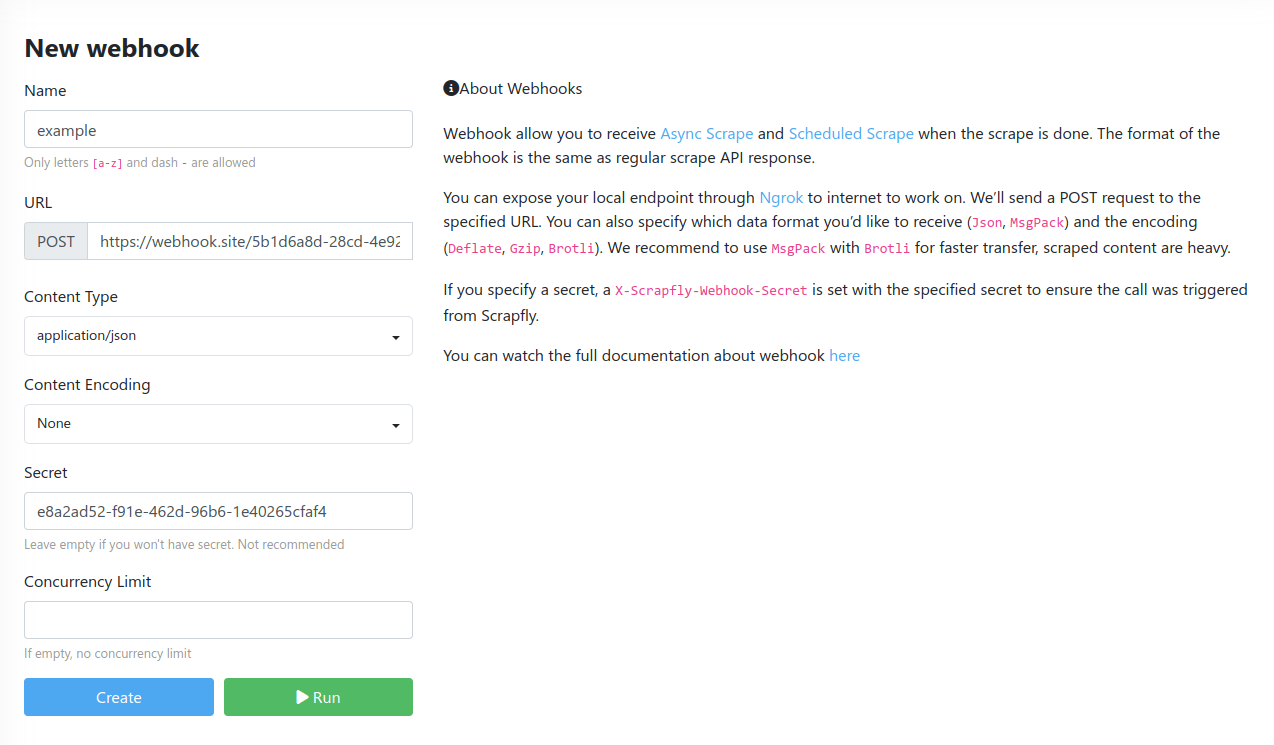

To start using webhooks, first create one in the webhook web interface.

Then pass the created webhook's name in the webhook_name scrape parameter (e.g. webhook_name=example) to enable webhook callbacks on a per-request basis.

The body sent to your endpoint is the same as a regular API scrape response, plus webhook information in the context part.

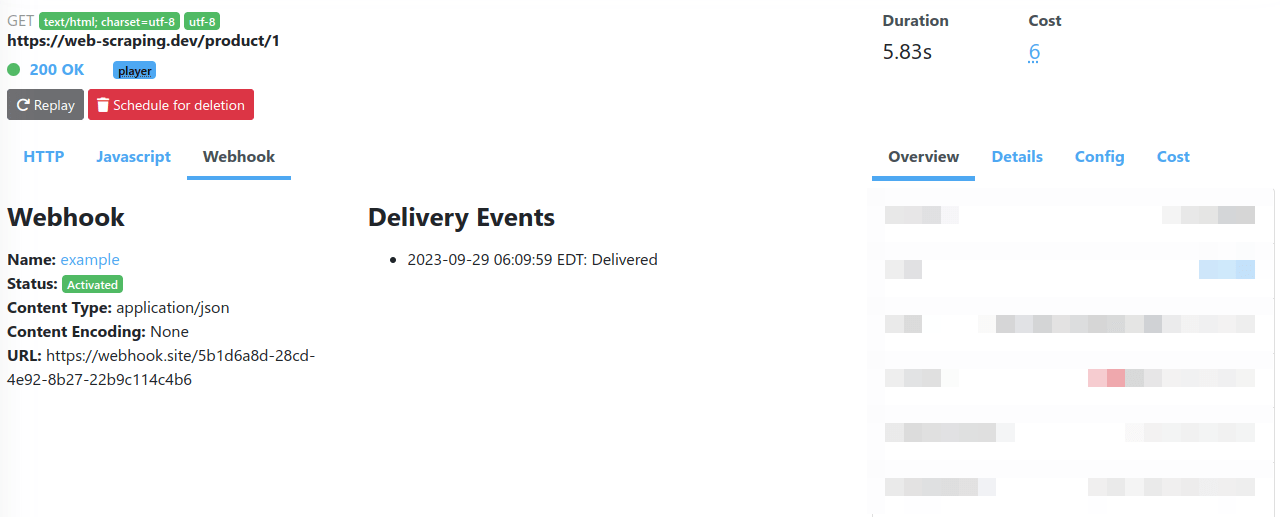

The webhook execution information can be found in the webhook tab of each scrape log page in the monitoring dashboard.

Webhook Queue Size

The webhook queue size is the maximum number of webhooks that can be queued at once. Once a scrape completes and your application has been notified, its slot in the queue is freed. This lets you schedule more scrapes than your subscription's concurrency limit allows; the scheduler runs them while keeping within that limit.

| Plan | Price | Webhook queue size |
|---|---|---|
| FREE | $0.00/mo | 0 |
| DISCOVERY | $30.00/mo | 500 |
| PRO | $100.00/mo | 2,000 |
| STARTUP | $250.00/mo | 5,000 |
| ENTERPRISE | $500.00/mo | 10,000 |

Scope

Webhooks are scoped per Scrapfly project and environment. Make sure to create a webhook for each of your projects and environments (test/live).

Usage

Webhooks can be used for multiple purposes. In the context of the Web Scraping API, to confirm that a callback carries a scrape, check that the X-Scrapfly-Webhook-Resource-Type header has the value scrape.

To enable webhook callbacks, all you need to do is specify the webhook_name parameter in your scrape requests.

Then, Scrapfly will immediately return a promise response and call your webhook endpoint as soon as the scrape is done.

Note that your webhook endpoint must respond with a 2xx status code for the callback to be considered a success.

3xx redirect responses are followed, while 4xx and 5xx response codes are considered failures and are retried according to the retry policy.
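The acknowledgement rules above can be sketched as a small receiving endpoint built on Python's standard library. This is only an illustration under stated assumptions: the handler name, host, port, and the choice of 204 and 400 replies are hypothetical, and it assumes the callback arrives as a POST with a JSON body. Only the header name and the scrape value come from this page.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

RESOURCE_TYPE_HEADER = "X-Scrapfly-Webhook-Resource-Type"


def is_scrape_callback(headers) -> bool:
    """Return True when the callback carries a Web Scraping API result."""
    return headers.get(RESOURCE_TYPE_HEADER) == "scrape"


class WebhookHandler(BaseHTTPRequestHandler):  # hypothetical handler name
    def do_POST(self):  # assumption: callbacks are delivered as POST requests
        length = int(self.headers.get("Content-Length", 0))
        raw_body = self.rfile.read(length)

        if not is_scrape_callback(self.headers):
            # Another resource type: acknowledge with a 2xx and ignore it.
            self.send_response(204)
            self.end_headers()
            return

        try:
            payload = json.loads(raw_body)
        except json.JSONDecodeError:
            # A 4xx reply is treated as a failure and will be retried.
            self.send_response(400)
            self.end_headers()
            return

        # Hand the payload to your own processing here.
        print("scrape callback received, payload type:", type(payload).__name__)

        # Any 2xx status marks the callback as successful.
        self.send_response(200)
        self.end_headers()


def run(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Serve forever; host and port are placeholders."""
    HTTPServer((host, port), WebhookHandler).serve_forever()
```

Calling run() starts the server locally. Replying quickly with a 2xx and deferring heavy processing keeps the callback from being counted as a failure.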

The examples below assume you have a webhook named example registered. You can create it via the web dashboard.

    import requests

    url = "https://api.scrapfly.io/scrape"
    params = {
        "webhook_name": "example",
        "key": "__API_KEY__",
        "url": "https://httpbin.dev/html",
    }

    response = requests.request("GET", url, params=params)

    # Raise exception for HTTP errors (4xx, 5xx)
    response.raise_for_status()

    data = response.json()
    print(data)

    # Access the scrape result
    if "result" in data:
        print(data["result"])

https://api.scrapfly.io/scrape?webhook_name=example&key=&url=https%3A%2F%2Fhttpbin.dev%2Fhtml

Tracking

When you enqueue a scrape, you receive a unique job UUID in response.context.job.uuid. When your webhook is notified, the processed job ID is available in the X-Scrapfly-Webhook-Job-Id header or inside the payload (same structure as the enqueue response: response.context.job.uuid), so you can reconcile and track it in your system.
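The reconciliation described above might look like the following sketch. It assumes that response refers to the decoded JSON body, so the UUID sits at context.job.uuid inside it. The in-memory dictionary and both function names are hypothetical stand-ins for your own storage layer.

```python
from typing import Optional

# Hypothetical in-memory job store; use a database or cache in practice.
pending_jobs: dict[str, dict] = {}


def register_enqueued_scrape(enqueue_response: dict, metadata: dict) -> str:
    """Remember the job UUID returned when the scrape was enqueued."""
    job_uuid = enqueue_response["context"]["job"]["uuid"]
    pending_jobs[job_uuid] = metadata
    return job_uuid


def reconcile_callback(headers: dict, body: dict) -> Optional[dict]:
    """Match a webhook callback to a previously enqueued job.

    Prefers the X-Scrapfly-Webhook-Job-Id header and falls back to the
    UUID inside the payload. Returns the stored metadata, or None when
    the job is unknown or was already reconciled.
    """
    job_uuid = headers.get("X-Scrapfly-Webhook-Job-Id")
    if job_uuid is None:
        job_uuid = body.get("context", {}).get("job", {}).get("uuid")
    return pending_jobs.pop(job_uuid, None)
```

Removing the entry on the first match means a repeated delivery of the same job returns None, which gives you a simple way to skip work you have already done.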

Retry Policy

Webhook callbacks are retried if Scrapfly can't notify the endpoint specified in your webhook settings, following this retry policy:

- 30 seconds

- 1 minute

- 5 minutes

- 30 minutes

- 1 hour

- 1 day

If we fail to reach your application more than 100 times in a row, the system automatically disables the webhook and notifies you. You can re-enable it from the UI at any time.
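To get a feel for how long the retry delays listed above span, here is a small sketch that turns them into timestamps. It assumes each delay starts when the previous attempt failed; this page does not state that, so treat the result as an estimate. The function name is hypothetical.

```python
from datetime import datetime, timedelta

# Delays from the retry policy, in order.
RETRY_DELAYS = [
    timedelta(seconds=30),
    timedelta(minutes=1),
    timedelta(minutes=5),
    timedelta(minutes=30),
    timedelta(hours=1),
    timedelta(days=1),
]


def retry_times(first_failure: datetime) -> list[datetime]:
    """Estimate when each retry happens after the first failed delivery.

    Assumption: every delay is counted from the previous failed attempt.
    """
    times = []
    current = first_failure
    for delay in RETRY_DELAYS:
        current += delay
        times.append(current)
    return times
```

Under that assumption the last retry lands about 25 hours and 36 minutes after the first failure, which is a rough bound on how long an endpoint outage can last before a callback's retries run out.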

Development

Useful tools for local development:

- https://webhook.site Collect and display webhooks

- https://ngrok.com Expose your local application to the internet through a secure tunnel

Security

Webhooks are signed using HMAC (Hash-based Message Authentication Code) with the SHA-256 algorithm to ensure the integrity of the webhook content and verify its authenticity. This mechanism helps prevent tampering and ensures that webhook payloads are from trusted sources.

HMAC Overview

HMAC is a cryptographic technique that combines a secret key with a hash function (in this case, SHA-256) to produce a fixed-size hash value known as the HMAC digest. This digest is unique to both the original message and the secret key, providing a secure way to verify the integrity and authenticity of the message.

Signature in HTTP Header

When sending a webhook request, a signature is generated using HMAC-SHA256 and included in the HTTP header X-Scrapfly-Webhook-Signature. This signature is computed based on the webhook payload and a secret key known only to the sender and receiver.

Verification Process

Upon receiving a webhook request, the receiver extracts the payload and computes its own HMAC-SHA256 signature using the same secret key. It then compares this computed signature with the signature provided in the X-Scrapfly-Webhook-Signature header. If the two signatures match, it indicates that the payload has not been tampered with and originates from the expected sender.
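The verification step can be sketched with Python's standard hmac module. Two details are assumptions rather than documented facts: that the signature is the hex-encoded digest, and that it is computed over the raw request body bytes. Check both against your webhook settings before relying on this. The function names are hypothetical.

```python
import hashlib
import hmac


def compute_signature(secret: bytes, payload: bytes) -> str:
    """Hex-encoded HMAC-SHA256 digest of the raw payload (encoding assumed)."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def is_valid_signature(secret: bytes, payload: bytes, received: str) -> bool:
    """Check the X-Scrapfly-Webhook-Signature value against our own digest."""
    expected = compute_signature(secret, payload)
    # Lower-casing tolerates upper-case hex (assumption about the format).
    candidate = received.strip().lower()
    # compare_digest runs in constant time to avoid leaking timing information.
    return hmac.compare_digest(expected.encode(), candidate.encode())
```

Verify against the bytes exactly as received, before any JSON parsing or re-serialization, since even a whitespace change produces a different digest.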

Security Considerations

- Keep Secret Key Secure: The secret key used for HMAC computation should be kept confidential and not exposed publicly.

- Use HTTPS: Webhook communication should be conducted over HTTPS to ensure data privacy and integrity during transit.

- Regular Key Rotation: Periodically rotate the secret key used for HMAC computation to enhance security.

Headers

The following headers are added:

- X-Scrapfly-Webhook-Env: Related environment where the webhook is triggered

- X-Scrapfly-Webhook-Project: Related project name

- X-Scrapfly-Webhook-Signature: HMAC SHA-256 integrity signature

- X-Scrapfly-Webhook-Name: Name of the webhook

- X-Scrapfly-Webhook-Resource-Type: Resource type

- X-Scrapfly-Webhook-Job-Id: Unique job identifier given in the enqueue call
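As a convenience, these headers can be gathered into one typed object on the receiving side. The sketch below is illustrative only: the WebhookHeaders class and parse_webhook_headers function are hypothetical names, and missing headers are simply left as None.

```python
from dataclasses import dataclass
from typing import Mapping, Optional

HEADER_PREFIX = "x-scrapfly-webhook-"


@dataclass(frozen=True)
class WebhookHeaders:  # hypothetical container, not part of any SDK
    env: Optional[str]
    project: Optional[str]
    signature: Optional[str]
    name: Optional[str]
    resource_type: Optional[str]
    job_id: Optional[str]


def parse_webhook_headers(headers: Mapping[str, str]) -> WebhookHeaders:
    """Collect the webhook headers, ignoring case in header names."""
    # HTTP header names are case-insensitive, so normalise before lookup.
    lowered = {key.lower(): value for key, value in headers.items()}
    return WebhookHeaders(
        env=lowered.get(HEADER_PREFIX + "env"),
        project=lowered.get(HEADER_PREFIX + "project"),
        signature=lowered.get(HEADER_PREFIX + "signature"),
        name=lowered.get(HEADER_PREFIX + "name"),
        resource_type=lowered.get(HEADER_PREFIX + "resource-type"),
        job_id=lowered.get(HEADER_PREFIX + "job-id"),
    )
```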

Related Errors

All related errors are listed below. You can see the full description and an example of each error response in the Errors section of the documentation.

- ERR::WEBHOOK::DISABLED - The given webhook is disabled; please check your webhook configuration for the current project / env

- ERR::WEBHOOK::ENDPOINT_UNREACHABLE - We were not able to contact your endpoint

- ERR::WEBHOOK::QUEUE_FULL - You reached the maximum concurrency limit

- ERR::WEBHOOK::MAX_RETRY - Maximum retry exceeded on your webhook

- ERR::WEBHOOK::NOT_FOUND - Unable to find the given webhook for the current project / env

- ERR::WEBHOOK::QUEUE_FULL - You reached the limit of scheduled webhooks; you must wait until pending webhooks are processed

Pricing

Webhook usage incurs no additional fee.