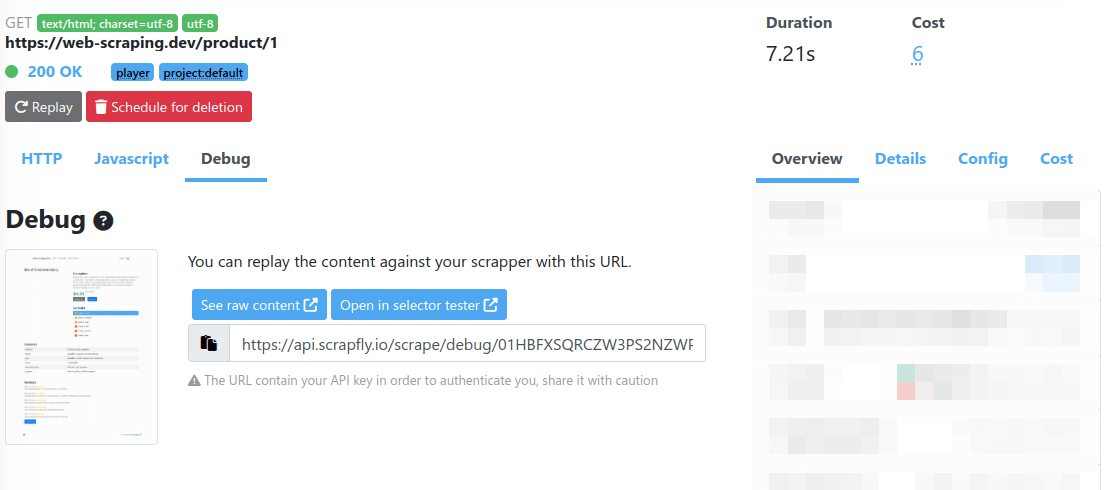

Debug

Scrapfly's debug feature allows debugging each API call by:

- Storing the scraped result for 1+ weeks (based on log retention policy)

- Replaying any debug API call through the web dashboard

- Capturing screenshots for API calls with JavaScript rendering enabled

We recommend using the debug feature during scraper development, as it makes scraper issues easier to investigate.

The stored debug content is deleted when the log retention period expires.

The debug details can be found in the Debug tab of each monitoring log page.

Usage

Debug must be enabled on a per-request basis using the debug parameter.

When combined with the render_js=true parameter, the debugger also attempts to capture screenshots:

    import requests

    url = "https://api.scrapfly.io/scrape"

    params = {
        "debug": True,
        "render_js": True,
        "key": "__API_KEY__",
        "url": "https://web-scraping.dev/product/1",
    }

    response = requests.request("GET", url, params=params)

    # Raise exception for HTTP errors (4xx, 5xx)
    response.raise_for_status()

    data = response.json()
    print(data)

    # Access the scrape result
    if 'result' in data:
        print(data['result'])

https://api.scrapfly.io/scrape?debug=true&render_js=true&key=&url=https%3A%2F%2Fweb-scraping.dev%2Fproduct%2F1

Example of API Response

To access the debug results via the provided URLs, add the ?key parameter with your API key. For example:

https://api.scrapfly.io/scrape/debug/ee8484c6-ee5f-4775-a665-0a2b57631c1c?key={{ YOUR_API_KEY }}
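Appending the key by hand is easy to get wrong when a debug URL already carries a query string. The sketch below shows one way to do it with the Python standard library. The helper name with_api_key is hypothetical and not part of any Scrapfly SDK, and the debug URL is the sample one from this page.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def with_api_key(debug_url: str, api_key: str) -> str:
    """Return debug_url with a key=<api_key> query parameter set.

    Hypothetical helper for illustration; not part of a Scrapfly SDK.
    """
    parts = urlparse(debug_url)
    # Keep existing query parameters, dropping any previous "key" value.
    query = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name != "key"
    ]
    query.append(("key", api_key))
    return urlunparse(parts._replace(query=urlencode(query)))


# Sample debug URL from this page, without the key parameter.
debug_url = "https://api.scrapfly.io/scrape/debug/ee8484c6-ee5f-4775-a665-0a2b57631c1c"
print(with_api_key(debug_url, "__API_KEY__"))
```

The resulting URL can then be fetched with requests.get in the same way as the scrape call.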

Pricing

Running debug in the TEST environment is free. In production (LIVE environment) we charge 2 additional API Credits.

The goal is to keep this feature accessible while discouraging debug mode on full-scale production workloads. We store the API response, screenshots, and a full execution trace for account manager and technical review, so this avoids a large amount of wasted resources.
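To get a rough sense of what leaving debug on would cost, the pricing rule can be turned into a small calculation. This sketch assumes the 2-credit surcharge applies once per debug-enabled API call, which the text above implies but does not state outright. The function and constant names are hypothetical.

```python
# Extra API Credits for a debug-enabled call, by environment.
# Assumption: the surcharge applies once per debug-enabled call.
DEBUG_EXTRA_CREDITS = {"TEST": 0, "LIVE": 2}


def debug_extra_credits(debug_calls: int, env: str) -> int:
    """Estimate the extra credits spent on debug for a number of calls."""
    if debug_calls < 0:
        raise ValueError("debug_calls must be non-negative")
    return debug_calls * DEBUG_EXTRA_CREDITS[env.upper()]


print(debug_extra_credits(500, "TEST"))  # free in TEST
print(debug_extra_credits(500, "LIVE"))  # 2 extra credits per call in LIVE
```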