Rate limiting is a vital concept in APIs, web services, and application development. It controls how many requests a user or system can make to a resource within a set time frame, helping ensure system stability, fair access, and protection against abuse like spam or denial-of-service attacks.

Whether you are a beginner or an experienced developer, understanding rate limiting is key to building secure and scalable systems. In this guide, we'll cover what rate limiting is, why it matters, how it works, common algorithms, practical examples, and tips for implementing it effectively.

Key Takeaways

Implement rate limiting to control request frequency and prevent system overload by limiting how many requests users can make within specific time windows, protecting your APIs and web services from abuse.

- Rate limiting controls request frequency - prevents system overload by limiting how many requests users can make within a specific time window

- Essential for system stability - protects against abuse, ensures fair resource usage, and helps mitigate DDoS attacks

- Multiple implementation layers - can be applied at application, API gateway, or network levels depending on requirements

- Common algorithms serve different needs - Token Bucket for burst handling, Leaky Bucket for smooth output, Fixed Window for simplicity, Sliding Window for accuracy

- Industry-specific applications - e-commerce protects checkout systems, financial services secure transactions, social media prevents bot abuse

- Implementation challenges exist - false positives, scaling complexity, and user experience degradation require careful planning

- Best practices improve effectiveness - clear error messages, user-specific limits, monitoring, and graceful degradation

- Tools and libraries simplify deployment - Redis for counters, NGINX for HTTP limits, and language-specific libraries reduce development time

What is an IP Address?

Before diving deeper into rate limiting, it is essential to understand what an IP (Internet Protocol) address is, as rate limiting often involves tracking IPs. An IP address is a unique identifier assigned to each device connected to a network that uses the Internet Protocol for communication. Think of it like a mailing address for your computer or smartphone.

There are two main types of IP addresses:

- IPv4: The most commonly used format, consisting of four groups of numbers separated by dots (e.g., 192.168.1.1).

- IPv6: A newer format designed to accommodate the growing number of internet devices, using eight groups of hexadecimal numbers separated by colons (e.g., 2001:0db8:85a3:0000:0000:8a2e:0370:7334).


IP addresses allow devices to find and communicate with each other across networks. In rate limiting, systems often monitor requests based on IP addresses to identify and control the source of traffic.
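Because rate limiters usually count requests per IP, the choice of key matters. The sketch below is a minimal illustration that uses Python's standard `ipaddress` module and a hypothetical `rate_limit_key` helper: IPv4 addresses are used as-is, while IPv6 addresses are grouped by their /64 prefix. That grouping is an assumption based on the common practice of assigning a whole /64 to one subscriber, who could otherwise rotate through addresses to dodge per-address limits.

```python
import ipaddress


def rate_limit_key(client_ip):
    """Map a client IP string to the key its requests are counted under (hypothetical helper)."""
    addr = ipaddress.ip_address(client_ip)
    if addr.version == 6:
        # Assumption: treat every address in the same /64 as one client.
        network = ipaddress.ip_network(f"{addr}/64", strict=False)
        return f"ipv6:{network}"
    # IPv4: count each address separately.
    return f"ipv4:{addr}"
```

Grouping by /64 is a judgment call: it treats every device in that prefix as a single client, which is usually one household or server, but not always.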

Why is Rate Limiting Important?

Without rate limiting, systems are vulnerable to overwhelming traffic that can slow down or crash services. Here are some essential reasons why rate limiting is important:

- Prevents Abuse: Stops malicious users from spamming or overloading systems.

- Ensures Fair Use: Guarantees that no single user can monopolize resources.

- Protects System Stability: Maintains predictable and reliable service.

- Enhances Security: Acts as a defensive mechanism against DDoS attacks.

Now that you understand its importance, let’s dive into how rate limiting actually works.

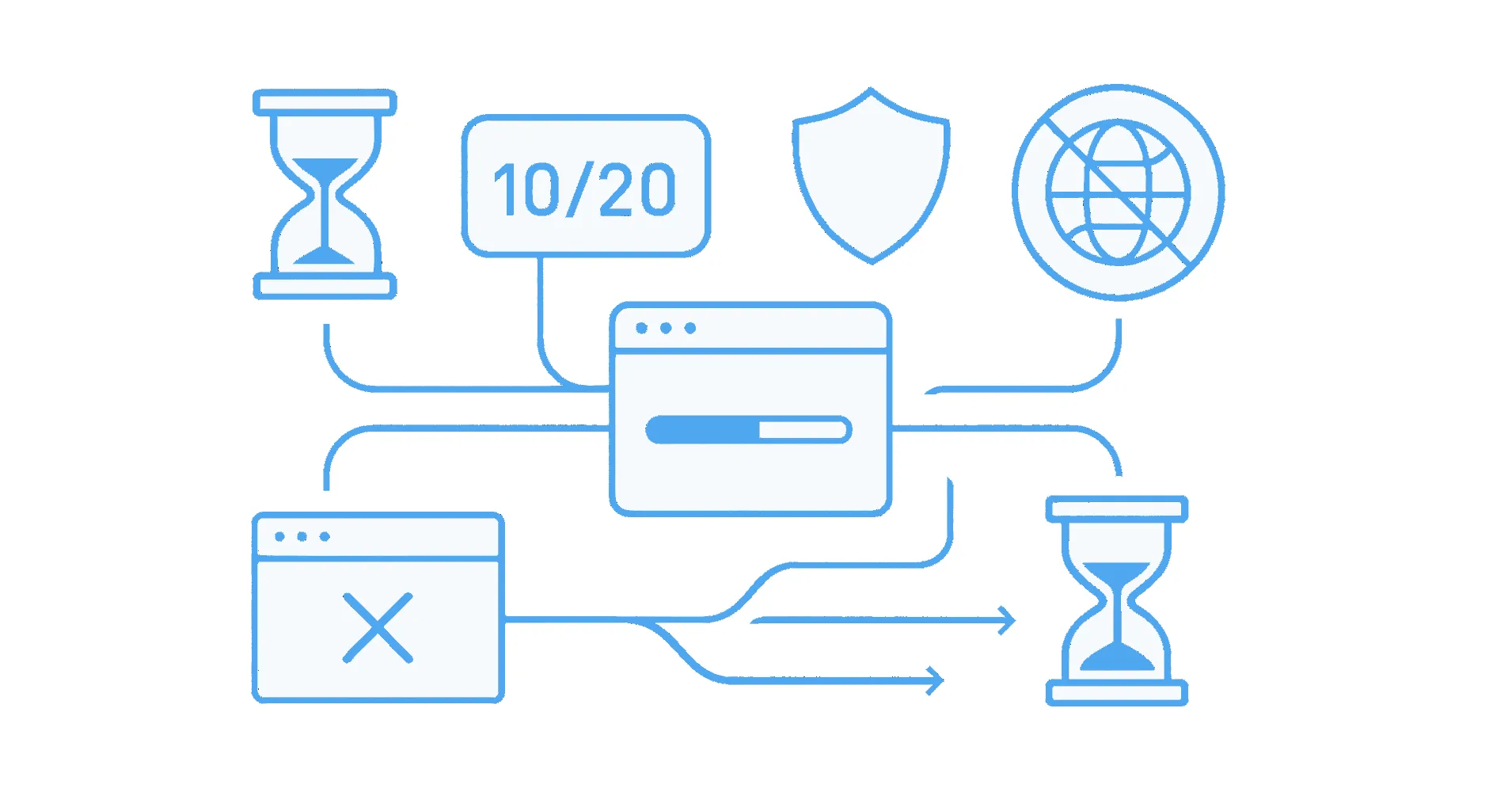

How Does Rate Limiting Work?

Rate limiting monitors the number of requests from a user, IP address, or API key over a given time window (e.g., 100 requests per minute). If the threshold is exceeded, the system responds with an error code, often HTTP 429 (Too Many Requests).

Rate limiters can be implemented at different layers:

- Application Layer: Code-level checks within the application.

- API Gateway Layer: Dedicated gateways like Kong, Apigee, or AWS API Gateway.

- Network Layer: Firewalls and load balancers limiting by IP addresses.

Common Rate Limiting Algorithms

Understanding different rate limiting algorithms helps developers choose the best strategy for their application. Here are a few common ones:

Token Bucket

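The token bucket algorithm adds tokens to a bucket at a fixed rate, up to a maximum capacity. Each request "spends" one token: if a token is available, the request is allowed; if the bucket is empty, the request is rejected. Because unused tokens accumulate up to the capacity, the algorithm tolerates short bursts while still enforcing an average rate.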

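```python
# Simple Python simulation of Token Bucket
import time


class TokenBucket:
    def __init__(self, capacity, refill_rate):
        self.capacity = capacity          # maximum tokens the bucket can hold (burst size)
        self.tokens = capacity            # start with a full bucket
        self.refill_rate = refill_rate    # tokens added per second
        self.last_refill = time.monotonic()

    def allow_request(self):
        self.refill()
        if self.tokens >= 1:
            self.tokens -= 1              # spend one token on this request
            return True
        return False                      # bucket is empty: reject the request

    def refill(self):
        # Regenerate tokens in proportion to the time elapsed since the last
        # refill, never exceeding the bucket's capacity.
        now = time.monotonic()
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
```

In the above code, allow_request first calls refill, which adds tokens based on the time elapsed since the previous call (capped at capacity), and then spends a token if one is available. Because the bucket starts full, a client can burst up to capacity requests at once before being throttled to refill_rate requests per second.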

Leaky Bucket

The leaky bucket algorithm treats incoming requests like water poured into a bucket with a small hole at the bottom. Water (requests) leaks at a constant rate, regardless of the inflow rate. If too much water is poured at once and the bucket overflows, incoming requests are discarded. This method ensures a consistent, controlled output rate, smoothing traffic bursts and preventing system overload.
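The leaky bucket can be sketched as a bounded queue that is drained at a constant rate. In this minimal Python sketch, `LeakyBucket`, `capacity` (how many requests may wait), `leak_rate` (requests released per second) and the injectable `clock` are illustrative names, not a standard API. A real server would call `leak()` from a scheduler or worker loop and process the requests it returns.

```python
import time
from collections import deque


class LeakyBucket:
    """Hypothetical leaky bucket: a bounded queue drained at a constant rate."""

    def __init__(self, capacity, leak_rate, clock=time.monotonic):
        self.capacity = capacity      # maximum number of queued requests
        self.leak_rate = leak_rate    # requests released per second
        self.clock = clock
        self.queue = deque()
        self.last_leak = clock()
        self.leak_credit = 0.0        # fractional releases carried between calls

    def leak(self):
        """Release queued requests at the constant leak rate and return them."""
        now = self.clock()
        self.leak_credit += (now - self.last_leak) * self.leak_rate
        self.last_leak = now
        released = []
        while self.queue and self.leak_credit >= 1:
            released.append(self.queue.popleft())
            self.leak_credit -= 1
        if not self.queue:
            self.leak_credit = 0.0    # an idle bucket does not bank extra output
        return released

    def add(self, request):
        """Pour a request into the bucket; False means it overflowed and is discarded."""
        self.leak()
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(request)
        return True
```

However fast requests arrive, `leak()` never releases more than `leak_rate` per second, which is the smoothing effect described above. A common alternative treats the bucket as a meter (a counter that drains over time) and rejects excess requests instead of queueing them.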

Fixed Window

Fixed window rate limiting divides time into equal segments (such as 1-minute windows), counts the requests in the current window, and blocks any that exceed the limit. For instance, a limit of 1000 requests per minute resets at the start of every minute. Although simple to implement, it allows bursts at window boundaries: a client can send 1000 requests in the last second of one window and another 1000 in the first second of the next, briefly reaching twice the intended rate.
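A fixed window limiter only needs one counter per client, reset whenever a new window begins. This sketch keeps counters in a plain dictionary and assumes a single process; `FixedWindowLimiter` and its parameters are hypothetical names chosen for illustration.

```python
import time


class FixedWindowLimiter:
    """Allow at most `limit` requests per key in each fixed window of `window` seconds."""

    def __init__(self, limit, window, clock=time.time):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.counters = {}  # key -> (window_index, count)

    def allow_request(self, key):
        window_index = int(self.clock() // self.window)  # which window "now" falls in
        index, count = self.counters.get(key, (window_index, 0))
        if index != window_index:
            index, count = window_index, 0  # a new window has started: reset the counter
        if count >= self.limit:
            return False
        self.counters[key] = (index, count + 1)
        return True
```

With `limit=2` and `window=60`, two requests at second 59 and two more at second 60 are all accepted, which is exactly the boundary burst described above.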

Sliding Window Log

Sliding window log is a more accurate but resource-intensive method. It keeps a timestamped log of every request and continuously checks how many requests occurred within a moving time frame (e.g., the last 60 seconds). When a new request arrives, the system purges old timestamps and decides based on the updated log. This provides smoother traffic management and avoids sudden spikes seen in fixed windows.
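The log can be kept as a deque of timestamps per client, purging entries older than the window before each decision. This is a single-process sketch with hypothetical names; it records only accepted requests, whereas some implementations also log rejected ones so that clients who keep retrying stay blocked longer.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLog:
    """Allow at most `limit` requests per key in any rolling `window`-second period."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.logs = defaultdict(deque)  # key -> timestamps of accepted requests

    def allow_request(self, key):
        now = self.clock()
        log = self.logs[key]
        # Purge timestamps that have fallen out of the moving window.
        while log and log[0] <= now - self.window:
            log.popleft()
        if len(log) >= self.limit:
            return False
        log.append(now)
        return True
```

Memory grows with the limit, since every accepted request inside the window is stored; that is the resource cost mentioned above. In exchange, the boundary burst is blocked: two requests at second 59 still count against a request at second 60.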

Practical Examples of Rate Limiting

Example 1: API Usage

Public APIs such as GitHub's REST API use rate limiting to prevent abuse. For instance, unauthenticated requests might be limited to 60 per hour, while authenticated users receive higher limits.
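On the client side, a well-behaved consumer can read the quota information an API returns instead of waiting for a 429. GitHub's REST API, for example, reports the remaining quota in an `x-ratelimit-remaining` header and the reset time, in UTC epoch seconds, in `x-ratelimit-reset`. The helper below is a hypothetical sketch that turns those headers into a pause duration; fetching the response itself is left out.

```python
import time


def seconds_until_reset(headers, now=None):
    """Return how many seconds to pause before the next API call.

    `headers` is any mapping of response header names to string values;
    names are compared case-insensitively.
    """
    lowered = {name.lower(): value for name, value in headers.items()}
    # Assumption: if the header is missing, act as if quota remains.
    remaining = int(lowered.get("x-ratelimit-remaining", "1"))
    if remaining > 0:
        return 0.0  # quota left: no need to wait
    reset_at = int(lowered.get("x-ratelimit-reset", "0"))  # UTC epoch seconds
    now = time.time() if now is None else now
    return max(0.0, reset_at - now)
```

A client loop would call `time.sleep(seconds_until_reset(response.headers))` between requests, spreading its calls out instead of hammering the API until it is blocked.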

Example 2: Login Systems

Login endpoints implement rate limiting to prevent brute-force attacks. For instance, a system might allow 5 login attempts per IP address every 10 minutes.
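The login rule above (5 attempts per IP every 10 minutes) can be sketched with the same timestamp-log idea, returning how long the caller should wait so a handler can send it back in a `Retry-After` header. `check_login_allowed`, the module-level `attempts` store and the constants are assumptions for illustration; a deployment with several servers would need a shared store instead of an in-memory dictionary.

```python
import time
from collections import defaultdict, deque

MAX_ATTEMPTS = 5             # login attempts allowed per IP...
WINDOW_SECONDS = 10 * 60     # ...in any rolling 10-minute period

attempts = defaultdict(deque)  # ip -> timestamps of recent login attempts


def check_login_allowed(ip, now=None):
    """Return (allowed, retry_after_seconds) for a login attempt from `ip`."""
    now = time.monotonic() if now is None else now
    log = attempts[ip]
    # Forget attempts older than the window.
    while log and log[0] <= now - WINDOW_SECONDS:
        log.popleft()
    if len(log) >= MAX_ATTEMPTS:
        # The next slot frees up when the oldest logged attempt expires.
        return False, log[0] + WINDOW_SECONDS - now
    log.append(now)
    return True, 0.0
```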

Best Practices for Implementing Rate Limiting

Implementing rate limiting effectively requires thoughtful planning to balance user experience, system performance, and security. Below are some best practices to guide you.

- Return Clear Error Messages: Respond with HTTP 429, a short explanation, and a “Retry-After” header telling the client when it may try again.

- Different Limits for Different Users: Offer higher limits for authenticated or premium users.

- Monitoring and Alerts: Track rate limit events and trigger alerts if thresholds are consistently exceeded.

- Graceful Degradation: Allow limited access instead of outright blocking whenever possible.
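Several of these practices can be combined in one place. The following sketch is a hypothetical WSGI middleware, using only the Python standard library, that gives requests carrying an `Authorization` header a higher quota than anonymous requests keyed by IP, and answers over-limit requests with HTTP 429, a JSON explanation and a `Retry-After` header. The tier limits, the fixed-window counting and the error format are illustrative assumptions.

```python
import json
import math
import time


class RateLimitMiddleware:
    """Hypothetical WSGI middleware applying per-client fixed-window limits."""

    def __init__(self, app, anon_limit=60, auth_limit=600, window=60, clock=time.time):
        self.app = app
        self.anon_limit = anon_limit    # requests per window for anonymous clients (keyed by IP)
        self.auth_limit = auth_limit    # higher quota for authenticated clients (keyed by token)
        self.window = window            # window length in seconds
        self.clock = clock
        self.counters = {}              # client key -> (window_index, count)

    def __call__(self, environ, start_response):
        token = environ.get("HTTP_AUTHORIZATION")
        if token:
            key, limit = "token:" + token, self.auth_limit
        else:
            key, limit = "ip:" + environ.get("REMOTE_ADDR", "unknown"), self.anon_limit

        now = self.clock()
        window_index = int(now // self.window)
        index, count = self.counters.get(key, (window_index, 0))
        if index != window_index:
            index, count = window_index, 0  # a new window has started

        if count >= limit:
            # Whole seconds remaining until the current window resets.
            retry_after = max(1, math.ceil((index + 1) * self.window - now))
            body = json.dumps({
                "error": "rate_limit_exceeded",
                "message": f"Limit of {limit} requests per {self.window}s reached.",
                "retry_after": retry_after,
            }).encode("utf-8")
            start_response("429 Too Many Requests", [
                ("Content-Type", "application/json"),
                ("Retry-After", str(retry_after)),
                ("Content-Length", str(len(body))),
            ])
            return [body]

        self.counters[key] = (index, count + 1)
        return self.app(environ, start_response)
```

Wrapping an existing WSGI application is a single line, such as `application = RateLimitMiddleware(application)`. The in-memory counters only work within one process.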

Rate Limiting in Different Industries

Rate limiting plays a critical role across many industries, ensuring that applications remain stable, secure, and efficient under varying loads. Different industries apply rate limiting strategies based on their unique operational needs.

E-commerce: In e-commerce, rate limiting protects checkout and payment APIs to prevent fraud and service degradation during major sales events like Black Friday.

Financial Services: Banks and financial institutions use rate limiting to secure sensitive transaction endpoints, prevent fraud, and comply with regulatory requirements such as PSD2 or PCI-DSS.

Social Media Platforms: Social media networks like Twitter and Instagram aggressively apply rate limiting to curb bots, reduce scraping activities, and maintain platform health.

Gaming Industry: Online games use rate limiting to ensure fairness in gameplay and protect their servers from bot attacks and spam requests.

Healthcare Applications: Healthcare systems implement rate limiting to control access to sensitive patient data, ensuring compliance with standards like HIPAA and minimizing risks of system overload.

Challenges of Rate Limiting

While rate limiting is powerful, it can introduce challenges:

- False Positives: Legitimate users might get blocked.

- Scaling Issues: Managing rate limits across distributed systems can be complex.

- User Frustration: Overly aggressive limits can degrade the user experience.

Solutions include adaptive rate limits, user-specific thresholds, and clear communication through error messages.

Tools and Libraries for Rate Limiting

- Redis: Often used for storing counters and implementing rate limits efficiently.

- NGINX: Built-in modules for HTTP rate limiting.

- Envoy Proxy: Offers dynamic rate limiting via external services.

- Libraries: express-rate-limit for Node.js or django-ratelimit for Django.
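For the scaling issue mentioned earlier, a common approach is to keep counters in a shared store so every application server sees the same count. The sketch below assumes a redis-py client (`redis.Redis`) and uses its `pipeline()`, `incr()` and `expire()` calls to implement a fixed-window counter; the function name and key format are hypothetical. It accepts any object with the same methods, which keeps it testable without a running Redis server.

```python
import time


def allow_request(client, key, limit, window, now=None):
    """Fixed-window check shared by all servers through Redis.

    `client` is assumed to be a redis-py client, e.g. redis.Redis(host="localhost").
    """
    now = time.time() if now is None else now
    # One Redis key per client per window: "ratelimit:<client key>:<window index>".
    redis_key = f"ratelimit:{key}:{int(now // window)}"
    pipe = client.pipeline()          # redis-py wraps pipelines in MULTI/EXEC by default
    pipe.incr(redis_key)              # atomically bump this window's counter
    pipe.expire(redis_key, window)    # let Redis delete the key after the window ends
    count, _ = pipe.execute()
    return count <= limit
```

Because `INCR` runs inside Redis, two servers handling the same client at the same moment cannot both read a stale count.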

Proxies at ScrapFly

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale. Each product is equipped with an automatic bypass for any anti-bot system and we achieve this by:

- Maintaining a fleet of real, reinforced web browsers with real fingerprint profiles.

- Millions of self-healing proxies of the highest possible trust score.

- Constantly evolving and adapting to new anti-bot systems.

- We've been doing this publicly since 2020 with the best bypass on the market!

FAQs

Below are quick answers to common questions about rate limiting.

What is a 429 Error?

A 429 Error means "Too Many Requests." It indicates that the user has sent too many requests in a given amount of time and has hit the rate limit.

How Can I Bypass Rate Limits?

Bypassing rate limits is generally unethical and discouraged. Instead, consider applying for higher usage quotas or optimizing your application's request patterns.

Can Rate Limiting Be Dynamic?

Yes, dynamic rate limiting adjusts thresholds based on server load, user tiers, or other runtime parameters to offer flexible control.
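As a rough illustration, a dynamic limit can be computed per request from the caller's tier and a load signal. Everything here is an assumption: the tier names and multipliers, the 70% load threshold and the linear scale-down to 20% of the quota are made-up values chosen only to show the shape of the idea.

```python
# Hypothetical tier multipliers applied to a base quota.
TIER_MULTIPLIERS = {"free": 1, "pro": 5, "enterprise": 20}


def dynamic_limit(base_limit, tier, load):
    """Return a per-window request limit for `tier` given server `load` (0.0 to 1.0)."""
    limit = base_limit * TIER_MULTIPLIERS[tier]
    if load > 0.7:
        # Under heavy load, shrink the quota linearly: 100% at 70% load,
        # 20% at full load, and never below 20%.
        scale = 1.0 - (load - 0.7) / 0.3 * 0.8
        limit *= max(0.2, scale)
    return max(1, int(limit))
```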

Summary

Rate limiting is an essential tool for any developer working with APIs, web services, or scalable applications. It ensures system stability, fairness, and security. By understanding the different algorithms, real-world applications, challenges, and best practices, you can implement effective rate-limiting strategies in your projects.

Now that you have a clear understanding of what rate limiting is and how to implement it, you can build more reliable and secure systems.