OpenAI's ChatGPT has just launched a new feature called code interpreter which can run Python code right there in the chat. While chatgpt can't scrape the web directly yet the interpreter can be a great help with web scraper development, especially when it comes to HTML parsing.

Using this feature the LLM can take in HTML documents and find all of the data by itself and output code that can be used in web scraping.

In this tutorial, we'll take a look at how to use chatgpt for AI web scraper development. In particular, how to use it to create Python and BeautifulSoup HTML parsing code. We'll craft some prompts and use GPT's code interpreter feature to write the web scraping code for us.

We'll also take a look at some common pitfalls and tips for writing prompts that will get the best results for web scraping tasks. Let's dive in!

Key Takeaways

Master ChatGPT Code Interpreter for web scraper development, automating HTML parsing and BeautifulSoup code generation with AI assistance.

- Use ChatGPT Code Interpreter to automatically generate Python and BeautifulSoup parsing code from HTML samples

- Upload HTML files directly to ChatGPT for automated data extraction and selector generation

- Leverage AI's self-correction capabilities to iteratively improve parsing code accuracy and handle errors

- Apply effective prompting techniques to get optimal web scraping code generation results

- Use ChatGPT for CSS selector and XPath expression generation based on HTML structure analysis

- Apply advanced techniques for hidden data extraction, dynamic content parsing, and error handling in production scrapers

Enable ChatGPT Code Interpreter

Note that currently Code Interpreter is only available to Premium users and needs to be enabled in openAI settings:

- Login to chatgpt and select the

...menu to access settings panel. - Select

Beta Featurestab and enableCode Interpreter. - Start a new GPT4 chat with

Code Interpreterfeature.

Now files can be uploaded to each chat using the + button and chatgpt can start reading your data and coding with it.

Can ChatGPT be used for scraper development without Code Interpreter?

Yes though it's not nearly as effective. The key value of the code interpreter is that GPT executes the code and self-corrects if there are any errors.

For example, if it guesses that product price appears to be under <div class="product-price"> element and it's not there it'll ingest the error message and make a new, better guess.

Without the code interpreter, we have to take the position of code validation ourselves which can take several attempts and corrections to get the working code from GPT. This time-consuming process defeats the purpose of using GPT in the first place.

HTML parsing with ChatGPT Interpreter

Extracting data from scraped pages is one of the most time-consuming tasks in web scraping and now we can use GPT to help us with that!

When scraping we usually use HTML parsing tools like CSS Selectors, XPath or BeautifulSoup - all of which can be used with ChatGPT Interpreter.

That being said, currently, GPT works best with beautilfulsoup4. We'll also be using httpx to download the HTML files. To follow along install these Python packages using the pip install command:

$ pip install beautifulsoup4 httpx

Getting a Sample Page

To start, we need to provide an example page file to ChatGPT Interpreter. This can be saved from the browser directly (ctrl+s) or downloaded with wget or curl commands.

For this project we'll be using an example product page from the web-scraping.dev website:

$ wget https://web-scraping.dev/product/1 -O product.html

For Headless Browsers

When scraping using headless browsers (like Puppeteer, Playwright or Selenium) we get rendered HTML files that tools like wget or Python requests/httpx cannot retrieve.

Rendered HTML can contain dynamic data that otherwise would not be present in the source HTML so if your scraper can handle that use it in your GTP prompts as well.

To get rendered HTML of a web page the easiest approach is to use the Browser Developer Tools console:

copy(document.body.innerHTML);

This will copy the fully rendered HTML of the current page to the clipboard.

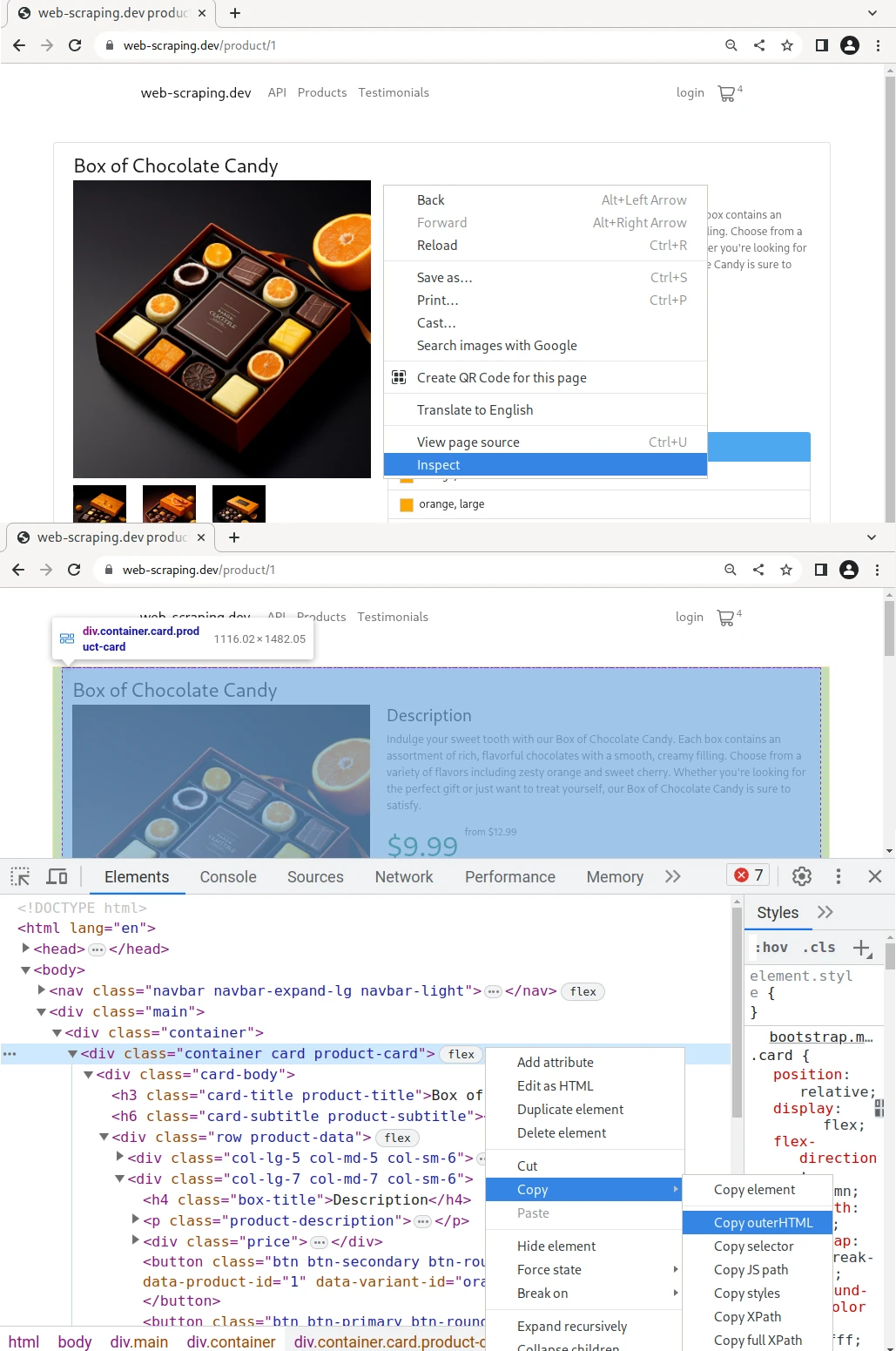

Snippet Extraction

Some pages can contain a lot of HTML which can reduce the quality of GPT responses. GPT needs to take in all of that data and the more data there is the harder it'll be for it to find the right results.

In cases like that, save only the HTML part that contains the data you want to extract. Here's an example using Chrome Devtools to extract an HTML snippet:

Parsing HTML with ChatGPT and BeautifulSoup

With the HTML file ready we can start to query chatGPT with parsing assistance.

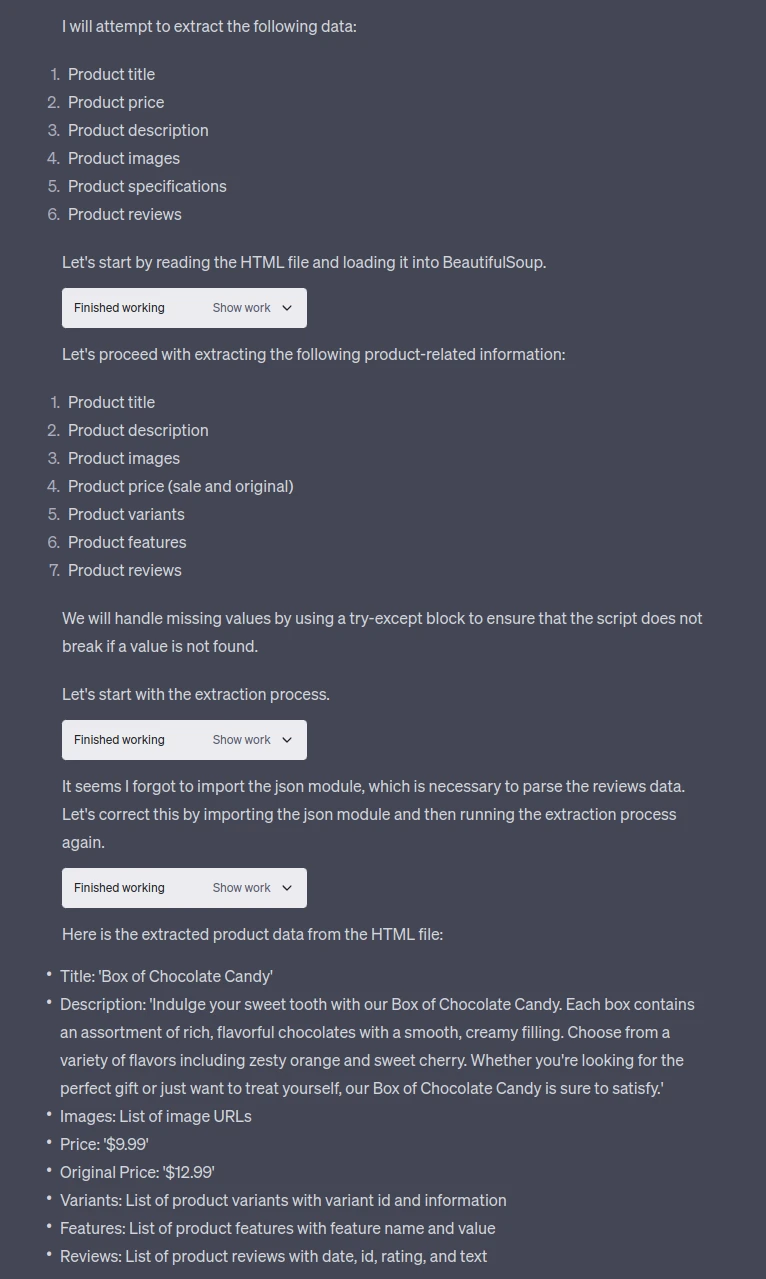

For that, we'll start off with this prompt with our example HTML file uploaded (use the + button in the chat to upload files):

Using Python and Beautifulsoup can you parse all product data from the this HTML. Ingest ALL of the HTML file before performing any parsing operations. In the parser assume that the datafields present on this example page could be missing in others so the parser should not crash when encountering missing values.

Some key prompt suggestions:

- Be explicit about parsing all of the HTML. GPT is optimized to be lazy and will try to avoid doing work which can result in a faulty parser.

- Be explicit about what tools to use. Currently, GPT code interpreter works best with BeautifulSoup and its

findandfind_allmethods. - Be explicit about what data to extract if possible. If you know what data your target contains (product name, price etc.) list it out.

Let's give this prompt a shot:

Output Data

{'title': 'Box of Chocolate Candy',

'description': "Indulge your sweet tooth with our Box of Chocolate Candy. Each box contains an assortment of rich, flavorful chocolates with a smooth, creamy filling. Choose from a variety of flavors including zesty orange and sweet cherry. Whether you're looking for the perfect gift or just want to treat yourself, our Box of Chocolate Candy is sure to satisfy.",

'images': ['https://web-scraping.dev/assets/products/orange-chocolate-box-small-2.png',

'https://web-scraping.dev/assets/products/orange-chocolate-box-small-3.png',

'https://web-scraping.dev/assets/products/orange-chocolate-box-small-4.png'],

'price': '$9.99',

'price_full': '$12.99',

'variants': [{'variant_id': 'orange-medium',

'variant_info': 'orange, medium'},

{'variant_id': 'orange-large', 'variant_info': 'orange, large'},

{'variant_id': 'cherry-small', 'variant_info': 'cherry, small'},

{'variant_id': 'cherry-medium', 'variant_info': 'cherry, medium'},

{'variant_id': 'cherry-large', 'variant_info': 'cherry, large'}],

'features': [{'feature': 'material', 'value': 'Premium quality chocolate'},

{'feature': 'flavors', 'value': 'Available in Orange and Cherry flavors'},

{'feature': 'sizes', 'value': 'Available in small, medium, and large boxes'},

{'feature': 'brand', 'value': 'ChocoDelight'},

{'feature': 'care instructions', 'value': 'Store in a cool, dry place'},

{'feature': 'purpose', 'value': 'Ideal for gifting or self-indulgence'}],

'reviews': [{'date': '2022-07-22',

'id': 'chocolate-candy-box-1',

'rating': 5,

'text': 'Absolutely delicious! The orange flavor is my favorite.'},

{'date': '2022-08-16',

'id': 'chocolate-candy-box-2',

'rating': 4,

'text': 'I bought these as a gift, and they were well received. Will definitely purchase again.'},

{'date': '2022-09-10',

'id': 'chocolate-candy-box-3',

'rating': 5,

'text': 'Nice variety of flavors. The chocolate is rich and smooth.'},

{'date': '2022-10-02',

'id': 'chocolate-candy-box-4',

'rating': 5,

'text': 'The cherry flavor is amazing. Will be buying more.'},

{'date': '2022-11-05',

'id': 'chocolate-candy-box-5',

'rating': 4,

'text': 'A bit pricey, but the quality of the chocolate is worth it.'}]}

Here, we uploaded our example product HTML file and asked GPT to use BeautifulSoup and parse it. This is the parsing code it has produced:

import json

product_data = {}

# Product title

try:

product_data["title"] = soup.find("h3", class_="card-title product-title").text.strip()

except AttributeError:

product_data["title"] = None

# Product description

try:

product_data["description"] = soup.find("p", class_="product-description").text.strip()

except AttributeError:

product_data["description"] = None

# Product images

try:

product_images = soup.find_all("img", class_="img-responsive product-img")

product_data["images"] = [img["src"] for img in product_images]

except AttributeError:

product_data["images"] = None

# Product price

try:

product_data["price"] = soup.find("span", class_="product-price mt-5 fs-1 text-success").text.strip()

product_data["price_full"] = soup.find("span", class_="product-price-full").text.strip()

except AttributeError:

product_data["price"] = None

product_data["price_full"] = None

# Product variants

try:

product_variants = soup.find_all("a", class_="variant list-group-item list-group-item-action")

product_data["variants"] = [{"variant_id": variant["data-variant-id"], "variant_info": variant.text.strip()} for variant in product_variants]

except AttributeError:

product_data["variants"] = None

# Product features

try:

product_features = soup.find_all("tr", class_="feature")

product_data["features"] = [{"feature": feature.find("td", class_="feature-label").text.strip(), "value": feature.find("td", class_="feature-value").text.strip()} for feature in product_features]

except AttributeError:

product_data["features"] = None

# Product reviews

try:

reviews_script = soup.find("script", {"id": "reviews-data"}).string

reviews_json = json.loads(reviews_script)

product_data["reviews"] = reviews_json

except AttributeError:

product_data["reviews"] = None

This code isn't amazing but it's very readable and it works. We're mostly interested in the soup.find() methods which makes this a great starting point for any scraping project.

Though if you'd like more polished results from the GPT itself try asking it:

Can you make this parse code more idiomatic and abstract it to avoid repetition

import httpx

from bs4 import BeautifulSoup

def extract_single_item(soup, tag, attr=None, class_=None, process_func=lambda x: x):

try:

if attr is not None:

element = soup.find(tag, {attr: class_})

else:

element = soup.find(tag, class_=class_)

return process_func(element.text.strip())

except AttributeError:

return None

def extract_multiple_items(soup, tag, attr=None, class_=None, process_func=lambda x: x):

try:

if attr is not None:

elements = soup.find_all(tag, {attr: class_})

else:

elements = soup.find_all(tag, class_=class_)

return [process_func(element) for element in elements]

except AttributeError:

return None

# Product data extraction

def scrape_product(url):

response = httpx.get(url)

assert response.status_code == 200

soup = BeautifulSoup(response.text)

return {

"title": extract_single_item(soup, "h3", class_="card-title product-title"),

"description": extract_single_item(soup, "p", class_="product-description"),

"images": extract_multiple_items(soup, "img", class_="img-responsive product-img", process_func=lambda x: x["src"]),

"price": extract_single_item(soup, "span", class_="product-price mt-5 fs-1 text-success"),

"price_full": extract_single_item(soup, "span", class_="product-price-full"),

"variants": extract_multiple_items(

soup,

"a",

class_="variant list-group-item list-group-item-action",

process_func=lambda x: {"variant_id": x["data-variant-id"], "variant_info": x.text.strip()}

),

"features": extract_multiple_items(

soup,

"tr",

class_="feature",

process_func=lambda x: {"feature": x.find("td", class_="feature-label").text.strip(), "value": x.find("td", class_="feature-value").text.strip()}

),

"reviews": extract_single_item(

soup,

"script",

attr="id",

class_="reviews-data",

process_func=lambda x: json.loads(x.string)

),

}

# example use:

scrape_product("https://web-scraping.dev/product/1")

So, we can see that with a bit of nudging GPT is capable of producing readable and sustainable HTML parsing code that uses Beautifulsoup for web scraping. The key to high-quality results is to provide a good HTML sample and a narrow enough prompt that GPT can focus on the task at hand.

Prompt Follow Ups

We got great results from our current prompt but every web page is different and your results can vary. Here are some follow-up prompts that can help you get better results:

Some data can be in hidden web data instead of HTML. Try asking it to explore invisible elements:

Is there any product data available in script tags or other invisible elements?

Some HTML pages use dynamic CSS classes which can be difficult to parse using beautifulsoup. Try requesting it to avoid using dynamic class names:

Avoid using dynamic CSS classes for parsing

Pages with a lot of information can be too complex for GPT to parse. Try splitting up parsing tasks into smaller chunks:

- Parse product data

- Parse product reviews

Keep in mind that chatGPT is quite good at understanding follow-up instructions so you can guide it to produce better results. Later the working additions can be incorporated into the original prompt.

FAQ

To wrap this guide on scraping using chatgpt code interpreter let's take a look at some relevant frequently asked questions:

Is it legal to use chatgpt for web scraping?

Yes, it's perfectly legal and allowed by openAI to use chatgpt for AI website scraper development. It's worth noting that chatgpt can complain about assisting with automation tasks when using dark terms like "bots" so it's best to be as descriptive as possible using industry terms like web scraping.

Can chatgpt scrape the web directly?

Currently chatGPT code interpreter has no access to the web so it can't retrieve sample pages by itself. This will likely be a feature available in the future but for now, you'll need to provide it with HTML samples through the file upload function.

Can chatgpt code interpreter help with NodeJS or Javascript scraping?

No. Currently, chatGPT code interpreter doesn't support any javascript execution environments. However, this will likely be a feature in the future and for that, we recommend paying attention to tools like NodeJS and Cheerio.

Can chatgpt code interpreter help with scraping JSON data?

Yes, it can. JSON is increasingly prevelant in web scraping through techniques like hidden web data - chatGPT code interpreter can help with finding and parsing JSON data using the same prompting techniques described in this guide.

Summary

In this quick tutorial, we've taken a look at how the new ChatGPT's code interpreter feature can be used to generate HTML parsing code. We've seen that it's possible to get good results with a narrow prompt and a good HTML sample.

While ChatGPT can't scrape the web convincingly yet the new code interpreter feature allows it to self-correct and improve making it a great tool for bootstrapping new AI-powered web scrapers.