Aliexpress contains millions of products and product reviews that can be used in market analytics, business intelligence and dropshipping.

In this tutorial, we'll take a look at how to scrape Aliexpress. We'll start by finding products by scraping the search system. Then we'll scrape the found product data, pricing and customer reviews.

This will be a relatively easy scraper in just a few lines of Python code. Let's dive in!

Key Takeaways

Learn to scrape AliExpress product data and reviews using Python with httpx and parsel, handling dynamic content and anti-bot measures for e-commerce data extraction.

- Reverse engineer AliExpress's search API endpoints by intercepting browser network requests

- Parse dynamic JSON data embedded in HTML using XPath selectors for product details and variants

- Bypass AliExpress's anti-scraping measures with realistic headers, user agents, and request spacing

- Extract structured product data including titles, prices, descriptions, and review information

- Implement exponential backoff retry logic with 403 status code detection for rate limiting

- Use specialized tools like ScrapFly for automated AliExpress scraping with anti-blocking features


Why Scrape Aliexpress?

There are many reasons to scrape Aliexpress data. For starters, Aliexpress is one of the biggest e-commerce platforms in the world, which makes it a prime target for business intelligence and market analytics. Awareness of top products and their meta-information on Aliexpress can be a great advantage in business and market analysis.

Another common use is e-commerce, primarily via dropshipping: one of the biggest emergent markets of this century is curating a list of products and reselling them directly rather than managing a warehouse. In this case, many shop curators scrape Aliexpress products to generate curated product lists for their dropshipping shops.

Project Setup

In this tutorial we'll be using Python with Scrapfly's Python SDK.

It can be easily installed via the below pip command:

$ pip install scrapfly-sdk

This guide covers core web scraping topics, including HTML parsing, crawling, and concurrent scraping. We recommend visiting the below article if you are new to these topics:

Finding Aliexpress Products

There are many ways to discover products on Aliexpress.

We could use the search system to find the products we want to scrape, or explore the many product categories. Whichever approach we take, our key target is the same: scrape product previews and pagination.

Let's take a look at the Aliexpress listing page that is used in the search and category views:

If we take a look at the page source of either a search or a category page, we can see that all the product previews are stored in a JavaScript variable window.runParams, tucked away in a <script> tag in the HTML source of the page:

This is a common web development pattern, which enables dynamic data management using JavaScript.

It's good news for us though, as we can pick this data up with a simple regex pattern and parse it like a Python dictionary! This is generally called hidden web data scraping and it's a common pattern in modern web scraping.

With this, we can write the first piece of our Aliexpress scraper code - the product category scraper. We'll be using it to extract product data from category pages:

Above, we request Aliexpress category pages and extract the script tag containing product preview data as JSON. Then, we use regex to extract a clean JSON dataset.
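The extraction step described above can be sketched as follows. This is a minimal offline illustration, not the full scraper: the sample HTML, the `items` key and the `extract_run_params` helper are made up for the example, and the live page layout may differ. A regex locates the `window.runParams` assignment and the standard-library JSON decoder reads the object that follows it.

```python
import json
import re

# Made-up page source that mimics the pattern described above (assumption).
SAMPLE_HTML = """
<html><body>
<script>
window.runParams = {"items": [{"productId": "1"}, {"productId": "2"}]};
</script>
</body></html>
"""


def extract_run_params(html: str) -> dict:
    """Return the object assigned to window.runParams in the page source."""
    match = re.search(r"window\.runParams\s*=\s*", html)
    if match is None:
        raise ValueError("window.runParams not found in page source")
    # raw_decode parses one JSON value starting at the given index and
    # ignores whatever follows it (here, the trailing semicolon).
    data, _end = json.JSONDecoder().raw_decode(html, match.end())
    return data


if __name__ == "__main__":
    params = extract_run_params(SAMPLE_HTML)
    print([item["productId"] for item in params["items"]])
```

Decoding from the match position instead of capturing the object with a regex avoids cutting the data short when a string value happens to contain `};`.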

Below is an example output of the data extracted by the above Aliexpress scraping code:

Example output

[

{

"redirectedId": "3256809991664337",

"itemType": "productV3",

"productType": "natural",

"nativeCardType": "nt_srp_cell_g",

"itemCardType": "app_us_local_card",

"transitionaryExpFrame": false,

"productId": "3256809991664337",

"lunchTime": "2025-10-17 00:00:00",

"image": {

"imgUrl": "//ae-pic-a1.aliexpress-media.com/kf/S83644cf0dd944bb684ef745c1f7ac0f5b.png",

"imgWidth": 350,

"imgHeight": 350,

"imgType": "0"

},

"title": {

"displayTitle": "Ulefone Armor 29 Pro 5G AI Rugged Phone 6.67\" 120Hz Up to 32GB RAM 512GB ROM 21200mAh 120W 64MP Night Vision Mobile Phone"

},

"prices": {

"skuId": "12000051417710108",

"pricesStyle": "default",

"builderType": "skuCoupon",

"currencySymbol": "$",

"prefix": "Sale price:",

"originalPrice": {

"priceType": "original_price",

"currencyCode": "USD",

"minPrice": 804.98,

"formattedPrice": "US $804.98",

"cent": 80498

},

"salePrice": {

"discount": 34,

"minPriceDiscount": 34,

"priceType": "sale_price",

"currencyCode": "USD",

"minPrice": 524.79,

"formattedPrice": "US $524.79",

"cent": 52479

},

"taxRate": "0"

},

"sellingPoints": [

{

"sellingPointTagId": "m0000026",

"position": 1,

"tagStyleType": "default",

"tagContent": {

"displayTagType": "image",

"tagImgUrl": "https://ae01.alicdn.com/kf/Sb6a0486896c44dd8b19b117646c39e36J/116x64.png",

"tagImgWidth": 116,

"tagImgHeight": 64,

"tagStyle": {

"position": "1"

}

},

"source": "bigSale_atm",

"resourceCode": "searchItemCard"

},

{

"sellingPointTagId": "m0000430",

"position": 1,

"tagStyleType": "default",

"tagContent": {

"displayTagType": "image",

"tagImgUrl": "https://ae01.alicdn.com/kf/Sbd36ca6043d0446dbe96b6263eded7fcC.png",

"tagImgWidth": 167,

"tagImgHeight": 32,

"tagStyle": {

"position": "1"

}

},

"source": "local_flag",

"resourceCode": "searchItemCard"

},

{

"sellingPointTagId": "m0000598",

"position": 4,

"tagStyleType": "default",

"tagContent": {

"displayTagType": "image_text",

"tagText": "$55 off on $405",

"tagImgUrl": "https://ae-pic-a1.aliexpress-media.com/kf/Sa6dfa4ec1ae34f9aad15fc579d3c8bf2T.png",

"tagImgWidth": 28,

"tagImgHeight": 28,

"tagStyle": {

"color": "#F00633",

"isPricePower": "false",

"position": "4"

}

},

"source": "common_big_sale_coupon_sale_atm",

"resourceCode": "searchItemCard"

},

{

"sellingPointTagId": "m0000469",

"position": 4,

"tagStyleType": "default",

"tagContent": {

"displayTagType": "image_text",

"tagText": "New shoppers save $280.19",

"tagImgUrl": "https://ae-pic-a1.aliexpress-media.com/kf/S9957aad5196c404bbae29db0392ef0b24/45x60.png",

"tagImgWidth": 45,

"tagImgHeight": 60,

"tagStyle": {

"color": "#D3031C",

"position": "4"

}

},

"source": "welcomedeal_test",

"resourceCode": "searchItemCard"

},

{

"sellingPointTagId": "m0000609",

"position": 10,

"tagStyleType": "default",

"tagContent": {

"displayTagType": "image",

"tagImgUrl": "https://ae-pic-a1.aliexpress-media.com/kf/S6850bb93a1684a93bb80c65cc797e929F/527x54.png",

"tagImgWidth": 527,

"tagImgHeight": 54,

"tagStyle": {

"isPricePower": "false",

"position": "10"

}

},

"source": "common_brand_plus_picture_atm",

"resourceCode": "searchItemCard"

}

],

"trade": {

"tradeDesc": "1 sold"

},

"moreAction": {

"actions": [

{

"actionText": "Add to cart",

"actionType": "shopCart"

},

{

"actionText": "See preview",

"actionType": "quickView"

},

{

"actionText": "Similar items",

"actionType": "similarItems"

}

]

},

"trace": {

"pdpParams": {

"pdp_cdi": "%7B%22traceId%22%3A%22210328db17661615275527290ea1f0%22%2C%22itemId%22%3A%223256809991664337%22%2C%22fromPage%22%3A%22search%22%2C%22skuId%22%3A%2212000051417710108%22%2C%22shipFrom%22%3A%22US%22%2C%22order%22%3A%221%22%2C%22star%22%3A%22%22%7D",

"channel": "direct",

"pdp_npi": "6%40dis%21USD%21804.98%21524.79%21%21%21804.98%21524.79%21%40210328db17661615275527290ea1f0%2112000051417710108%21sea%21US%210%21ABX%211%210%21n_tag%3A-29910%3Bd%3Ac2baf3fa%3Bm03_new_user%3A-29895%3BpisId%3A5000000187461912",

"pdp_perf": "main_img=%2F%2Fae-pic-a1.aliexpress-media.com%2Fkf%2FS83644cf0dd944bb684ef745c1f7ac0f5b.png",

"pdp_ext_f": "%7B%22order%22%3A%221%22%2C%22eval%22%3A%221%22%2C%22fromPage%22%3A%22search%22%7D"

},

"exposure": {

"selling_point": "m0000026,m0000430,m0000598,m0000469,m0000609",

"displayCategoryId": "",

"postCategoryId": "5090301",

"algo_exp_id": "009449f0-85c4-4d13-83bb-4acc82320a4d-0"

},

"p4pExposure": {},

"click": {

"algo_pvid": "009449f0-85c4-4d13-83bb-4acc82320a4d",

"haveSellingPoint": "true"

},

"detailPage": {

"algo_pvid": "009449f0-85c4-4d13-83bb-4acc82320a4d",

"algo_exp_id": "009449f0-85c4-4d13-83bb-4acc82320a4d-0"

},

"custom": {},

"utLogMap": {

"formatted_price": "US $524.79",

"csp": "524.79,1",

"x_object_type": "productV3",

"is_detail_next": "1",

"real_trade_count": "1",

"dress_plan_log_info": "",

"salePriceAmount": "52479",

"originPriceAmount": "80498",

"sellerOfferPriceAmount": "80498",

"mixrank_success": "false",

"title_type": "origin_title",

"hitNasaStrategy": "3#2000125006#1;1#2000126003#0",

"tr_source_scene": "-1",

"spu_best_flags": "0,0,0",

"oip": "804.98,1",

"spu_type": "group",

"if_store_enter": "0",

"adjustRatio": "1",

"image_type": "0",

"dress_txt_flag": "",

"isChoice": "false",

"pro_tool_code": "platformItemSubsidy,proEngine",

"algo_pvid": "009449f0-85c4-4d13-83bb-4acc82320a4d",

"hit_19_forbidden": false,

"promotionResultDetails": "5000000187461912:1:650;5000000196272186:1:27369",

"model_ctr": "0.053742967545986176",

"all_platform_value": "0",

"sku_id": "12000051417710108",

"quotaCurrencyCode": "USD",

"custom_group": 1,

"is_all_platform": "0",

"tr_promotion_stage": "-1",

"sku_ic_tags": "[721998,721997]",

"pic_group_id": "1005009513007131",

"dress_creative_flag": "",

"img_url_trace": "S83644cf0dd944bb684ef745c1f7ac0f5b.png",

"is_adult_certified": false,

"mixrank_enable": "false",

"enable_dress": false,

"ump_atmospheres": "new_user_platform_allowance,none",

"BlackCardBizType": "app_us_local_card",

"spBizType": "normal",

"selling_point": "m0000026,m0000430,m0000598,m0000469,m0000609",

"nasaCode": "3#2000125006#-101150002",

"nasa_tag_ids": [],

"categoryId": "509,5090301",

"x_object_id": "1005010177979089"

}

},

"config": {

"evaluation": {

"showSingleRating": "false"

},

"action": {

"closeAnimation": "false"

},

"prices": {

"isPricePower": "false"

}

},

"images": [

{

"imgUrl": "//ae-pic-a1.aliexpress-media.com/kf/S83644cf0dd944bb684ef745c1f7ac0f5b.png",

"imgWidth": 350,

"imgHeight": 350,

"imgType": "0"

},

{

"imgUrl": "//ae-pic-a1.aliexpress-media.com/kf/Se4f52dd200054c48aa4d82ece36075a9i.jpeg",

"imgWidth": 350,

"imgHeight": 350,

"imgType": "0"

},

{

"imgUrl": "//ae-pic-a1.aliexpress-media.com/kf/S3d6f7c6be9ec462da2b9127d8037c404a.jpeg",

"imgWidth": 350,

"imgHeight": 350,

"imgType": "0"

},

{

"imgUrl": "//ae-pic-a1.aliexpress-media.com/kf/Sbaeae579959d4d168e8aca65fdb9d0e2t.jpeg",

"imgWidth": 350,

"imgHeight": 350,

"imgType": "0"

},

{

"imgUrl": "//ae-pic-a1.aliexpress-media.com/kf/Se74e7666d41242efac0c3bc649320e42z.jpeg",

"imgWidth": 350,

"imgHeight": 350,

"imgType": "0"

},

{

"imgUrl": "//ae-pic-a1.aliexpress-media.com/kf/Sef4351d44cf54d4bab031876da9934a78.jpeg",

"imgWidth": 350,

"imgHeight": 350,

"imgType": "0"

}

],

"extraParams": {

"sku_images": "H4sIAAAAAAAAADM0sTKytAq2SLYwNElOMjZLNUs1Mba0SExMTbEwtbRMTkkyTUpKNg/WK8hLBwBqAbU9LAAAAA=="

},

"seoWhite": "true"

},

....

]

There's a lot of useful information, but we've limited our parser to the bare essentials to keep things brief. Next, let's scrape Aliexpress search pages.

Scraping Aliexpress Search

To scrape Aliexpress search pages, we'll use code very similar to the above scraper:

Above, we defined our scrape_search function, which uses a common web scraping idiom for known-length pagination:

We scrape the first page to extract the total number of pages and scrape the remaining pages concurrently. We also add a max_pages parameter to control the number of pagination pages.
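The pagination idiom can be sketched in isolation like this. The network call is replaced by a hypothetical `fetch_search_page` stand-in that returns fake data, and the `total_results` and `page_size` field names are assumptions made for the example, so only the control flow mirrors the description: fetch page one, work out the page count, cap it with `max_pages`, then fetch the rest concurrently.

```python
import asyncio
import math
from typing import List, Optional

# Fake dataset size and page size used by the stand-in below (assumptions).
FAKE_TOTAL_RESULTS = 130
FAKE_PAGE_SIZE = 60


async def fetch_search_page(query: str, page: int) -> dict:
    """Hypothetical stand-in for a real search request; returns fake items."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    start = (page - 1) * FAKE_PAGE_SIZE
    end = min(start + FAKE_PAGE_SIZE, FAKE_TOTAL_RESULTS)
    return {
        "total_results": FAKE_TOTAL_RESULTS,
        "page_size": FAKE_PAGE_SIZE,
        "items": [f"{query}-{i}" for i in range(start, end)],
    }


async def scrape_search(query: str, max_pages: Optional[int] = None) -> List[str]:
    """Scrape page 1, derive the page count, then scrape the rest concurrently."""
    first = await fetch_search_page(query, 1)
    total_pages = math.ceil(first["total_results"] / first["page_size"])
    if max_pages is not None:
        total_pages = min(total_pages, max_pages)
    # Pages 2..total_pages are independent, so they can run concurrently.
    rest = await asyncio.gather(
        *(fetch_search_page(query, page) for page in range(2, total_pages + 1))
    )
    items = list(first["items"])
    for page_data in rest:
        items.extend(page_data["items"])
    return items


if __name__ == "__main__":
    print(len(asyncio.run(scrape_search("drill", max_pages=2))))
```

`asyncio.gather` returns results in the order the coroutines were passed, so the combined list keeps page order even though the requests overlap.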

Below is an example output of the data extracted:

Example search results:

{

"redirectedId": "3256809260449850",

"itemType": "productV3",

"productType": "natural",

"nativeCardType": "nt_srp_cell_g",

"itemCardType": "app_us_local_card",

"transitionaryExpFrame": false,

"productId": "3256809260449850",

"lunchTime": "2025-07-08 00:00:00",

"image": {

"imgUrl": "//ae-pic-a1.aliexpress-media.com/kf/S7eb0b80475ca40e691cfd371ac97ed024.jpg",

"imgWidth": 350,

"imgHeight": 350,

"imgType": "0"

},

"title": {

"displayTitle": "Multifunctional 21V rechargeable impact drill lithium battery cross-border flashlight gun drill electric screwdriver electric dr"

},

"prices": {

"skuId": "12000049127951486",

"pricesStyle": "default",

"builderType": "skuCoupon",

"currencySymbol": "$",

"prefix": "Sale price:",

"originalPrice": {

"priceType": "original_price",

"currencyCode": "USD",

"minPrice": 65.85,

"formattedPrice": "US $65.85",

"cent": 6585

},

"salePrice": {

"discount": 64,

"minPriceDiscount": 64,

"priceType": "sale_price",

"currencyCode": "USD",

"minPrice": 23.29,

"formattedPrice": "US $23.29",

"cent": 2329

},

"taxRate": "0"

},

"sellingPoints": [

{

"sellingPointTagId": "m0000430",

"position": 1,

"tagStyleType": "default",

"tagContent": {

"displayTagType": "image",

"tagImgUrl": "https://ae01.alicdn.com/kf/Sbd36ca6043d0446dbe96b6263eded7fcC.png",

"tagImgWidth": 167,

"tagImgHeight": 32,

"tagStyle": {

"position": "1"

}

},

"source": "local_flag",

"resourceCode": "searchItemCard"

},

{

"sellingPointTagId": "m0000405",

"position": 1,

"tagStyleType": "default",

"tagContent": {

"displayTagType": "image",

"tagImgUrl": "https://ae-pic-a1.aliexpress-media.com/kf/Sc4739506e72e48c69b7714f1bb6b7c75F.png",

"tagImgWidth": 228,

"tagImgHeight": 64,

"tagStyle": {

"position": "1"

}

},

"source": "card_ten_billion_subsidies_common",

"resourceCode": "searchItemCard"

},

{

"sellingPointTagId": "m0000469",

"position": 4,

"tagStyleType": "default",

"tagContent": {

"displayTagType": "image_text",

"tagText": "New shoppers save $42.56",

"tagImgUrl": "https://ae-pic-a1.aliexpress-media.com/kf/S9957aad5196c404bbae29db0392ef0b24/45x60.png",

"tagImgWidth": 45,

"tagImgHeight": 60,

"tagStyle": {

"color": "#D3031C",

"position": "4"

}

},

"source": "welcomedeal_test",

"resourceCode": "searchItemCard"

},

{

"sellingPointTagId": "m0000412",

"position": 4,

"tagStyleType": "default",

"tagContent": {

"displayTagType": "text",

"tagText": "Save $42.56",

"tagStyle": {

"color": "#D3031C",

"position": "4"

}

},

"source": "card_ten_billion_subsidies_common",

"resourceCode": "searchItemCard"

}

],

"evaluation": {

"starRating": 5

},

"trade": {

"tradeDesc": "84 sold"

},

"moreAction": {

"actions": [

{

"actionText": "Add to cart",

"actionType": "shopCart"

},

{

"actionText": "See preview",

"actionType": "quickView"

},

{

"actionText": "Similar items",

"actionType": "similarItems"

}

]

},

"trace": {

"pdpParams": {

"pdp_cdi": "%7B%22traceId%22%3A%222103212517664307590001993e1932%22%2C%22itemId%22%3A%223256809260449850%22%2C%22fromPage%22%3A%22search%22%2C%22skuId%22%3A%2212000049127951486%22%2C%22shipFrom%22%3A%22US%22%2C%22order%22%3A%2284%22%2C%22star%22%3A%225.0%22%7D",

"channel": "direct",

"pdp_npi": "6%40dis%21USD%2165.85%2123.29%21%21%21460.83%21163.00%21%402103212517664307590001993e1932%2112000049127951486%21sea%21US%210%21ABX%211%210%21n_tag%3A-29910%3Bd%3Aa5064ca3%3Bm03_new_user%3A-29895%3BpisId%3A5000000187461913",

"pdp_perf": "main_img=%2F%2Fae-pic-a1.aliexpress-media.com%2Fkf%2FS7eb0b80475ca40e691cfd371ac97ed024.jpg",

"pdp_ext_f": "%7B%22order%22%3A%2284%22%2C%22eval%22%3A%221%22%2C%22fromPage%22%3A%22search%22%7D"

},

"exposure": {

"selling_point": "m0000430,m0000405,m0000469,m0000412",

"displayCategoryId": "",

"postCategoryId": "141705",

"algo_exp_id": "dd34797a-c2ea-4b0d-8cea-d0c03e9a2607-0"

},

"p4pExposure": {},

"click": {

"algo_pvid": "dd34797a-c2ea-4b0d-8cea-d0c03e9a2607",

"haveSellingPoint": "true"

},

"detailPage": {

"algo_pvid": "dd34797a-c2ea-4b0d-8cea-d0c03e9a2607",

"algo_exp_id": "dd34797a-c2ea-4b0d-8cea-d0c03e9a2607-0"

},

"custom": {},

"utLogMap": {

"formatted_price": "US $23.29",

"csp": "23.29,1",

"x_object_type": "productV3",

"is_detail_next": "1",

"real_trade_count": "84",

"dress_plan_log_info": "",

"salePriceAmount": "2329",

"originPriceAmount": "6585",

"sellerOfferPriceAmount": "6393",

"mixrank_success": "false",

"title_type": "origin_title",

"hitNasaStrategy": "3#2000125006#1;1#2000126003#0",

"spu_best_flags": "0,0,0",

"oip": "65.85,1",

"star_rating": "5.0",

"spu_type": "group",

"if_store_enter": "0",

"adjustRatio": "1.03",

"spu_id": "1005008677244713",

"image_type": "0",

"dress_txt_flag": "",

"isChoice": "false",

"pro_tool_code": "platformItemSubsidy,superDeals",

"algo_pvid": "dd34797a-c2ea-4b0d-8cea-d0c03e9a2607",

"hit_19_forbidden": false,

"promotionResultDetails": "5000000187461913:1:700;5000000187548630:1:3556",

"model_ctr": "0.18391621112823486",

"spu_replace_id": "-1",

"sku_id": "12000049127951486",

"quotaCurrencyCode": "CNY",

"custom_group": 3,

"is_all_platform": "0",

"sku_ic_tags": "[721998,721997]",

"pic_group_id": "1005008677244713",

"dress_creative_flag": "",

"img_url_trace": "S7eb0b80475ca40e691cfd371ac97ed024.jpg",

"is_adult_certified": false,

"mixrank_enable": "false",

"enable_dress": false,

"ump_atmospheres": "new_user_platform_allowance,fd_discount",

"BlackCardBizType": "app_us_local_card",

"spBizType": "normal",

"selling_point": "m0000430,m0000405,m0000469,m0000412",

"nasaCode": "3#2000125006#-101150002",

"nasa_tag_ids": [],

"categoryId": "1420,1417,141705",

"x_object_id": "1005009446764602"

}

},

"config": {

"evaluation": {

"showSingleRating": "false"

},

"action": {

"closeAnimation": "false"

},

"prices": {

"isPricePower": "false"

}

},

"images": [

{

"imgUrl": "//ae-pic-a1.aliexpress-media.com/kf/S7eb0b80475ca40e691cfd371ac97ed024.jpg",

"imgWidth": 350,

"imgHeight": 350,

"imgType": "0"

},

....

],

"extraParams": {

"sku_images": "H4sIAAAAAAAAABXKuQ2AMAwAwI2QfyehZIRMkM+RqNi/AqprDqWgc6mDP5IjgmZh576cJgUg2prNLI772SeblWoqPfoAdSBp4QlkjhwrR2Ik0uuvL9Vu3KhYAAAA"

},

"seoWhite": "true"

},

Now that we can find products, let's take a look at how we can scrape product data, pricing info and reviews!

Scraping Aliexpress Products

Aliexpress product pages are protected by CAPTCHA challenges and load their data using JavaScript. Hence, scraping them requires JavaScript rendering to be enabled. For this, we'll use ScrapFly's JavaScript rendering feature.

To scrape Aliexpress products, all we need is a numeric product ID, which we already found in the previous section by scraping product previews from Aliexpress search. For example, this hand drill product has the numeric ID 4000927436411, which appears in its URL as 4000927436411.html.
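As a small illustration, the mapping between product IDs and product page URLs can be sketched as below. The URL pattern is taken from the `link` field in the example product output of this guide; the helper names are hypothetical.

```python
import re

# URL pattern as it appears in the example product output of this guide.
PRODUCT_URL_TEMPLATE = "https://www.aliexpress.com/item/{product_id}.html"


def product_url(product_id) -> str:
    """Build a product page URL from a numeric product ID."""
    return PRODUCT_URL_TEMPLATE.format(product_id=product_id)


def product_id_from_url(url: str) -> str:
    """Extract the numeric product ID from a product page URL."""
    match = re.search(r"/item/(\d+)\.html", url)
    if match is None:
        raise ValueError(f"no product ID found in {url!r}")
    return match.group(1)


if __name__ == "__main__":
    url = product_url("4000927436411")
    print(url, product_id_from_url(url))
```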

We'll request the product pages and parse their data using XPath selectors:

Here, we wait for the full page to load and click the button responsible for loading the full product specifications. Then, we use XPath selectors to parse the full product details. Here's what the retrieved results should look like:

Example output:

{

"info": {

"name": "10mm Electric Brushless Drill 2-Speed Self-locking Cordless Drill Screwdriver 60-100Nm Torque Power Tools For Makita 18V Battery",

"productId": 1005006717259012,

"link": "https://www.aliexpress.com/item/1005006717259012.html",

"media": [

"https://ae-pic-a1.aliexpress-media.com/kf/Sab4cf830d63149b7acf4b95773a75fe2k.png_80x80.png_.webp",

"https://ae-pic-a1.aliexpress-media.com/kf/S8129eebce8fd466993f36afd1e874563Z.jpg_80x80.jpg_.webp",

"https://ae-pic-a1.aliexpress-media.com/kf/Sa5c6d11d7bf54b98b756438067f08c25S.png_80x80.png_.webp",

"https://ae-pic-a1.aliexpress-media.com/kf/Sf865a7763bda4c1c9366b6c91763df922.png_80x80.png_.webp",

"https://ae-pic-a1.aliexpress-media.com/kf/Sb0f9bd88a59e4c859acb21e1b48e821e4.png_80x80.png_.webp",

"https://ae-pic-a1.aliexpress-media.com/kf/S03f54a829e464bfbafc5df0741d5007d4.jpg_80x80.jpg_.webp",

"https://ae-pic-a1.aliexpress-media.com/kf/S41bbac0e2a2a4bfd87bba0307e69a040G.jpg_120x120.jpg_.webp",

"https://ae-pic-a1.aliexpress-media.com/kf/S0cb3b457c92d4e82825365d4b1bfc66ac.png_120x120.png_.webp"

],

"rate": 5,

"reviews": 494,

"soldCount": 2000,

"availableCount": null

},

"pricing": {

"priceCurrency": "USD $",

"price": 40.04,

"originalPrice": 42.8,

"discount": "6% off"

},

"specifications": [

{

"name": "Hign-concerned Chemical",

"value": "None"

},

{

"name": "Max. Drilling Diameter",

"value": "10mm"

},

{

"name": "Origin",

"value": "Mainland China"

},

{

"name": "Brand Name",

"value": "PATUOPRO"

},

{

"name": "Motor type",

"value": "Brushless"

},

{

"name": "Power Source",

"value": "Battery"

},

{

"name": "Drill Type",

"value": "Cordless Drill"

},

{

"name": "No Load Speed",

"value": "0-450/0-2000r/min"

},

{

"name": "Torque Setting",

"value": "21+1"

},

{

"name": "Chuck Size",

"value": "3/8\" (0.8-10mm)"

}

],

"shipping": {

"cost": null,

"currency": "$",

"delivery": "Oct 04"

},

"faqs": [

{

"question": "Is the device as powerful as the observed force on the chord known by DTD",

"answer": null

},

{

"question": "Comes with charger",

"answer": null

},

{

"question": "Bought a patuopro screwdriver jammed the engine. I can't find the same on the site. 50 cm long",

"answer": null

}

],

"seller": {

"name": "PATUOPRO Official Store",

"link": "//www.aliexpress.com/store/1102818328",

"id": 1102818328,

"info": {

"positiveFeedback": "97.2%",

"followers": "2292"

}

}

}
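The parsing step that produces a structure like the one above can be sketched with XPath expressions. To stay dependency-free, this sketch uses the standard library's ElementTree, which supports only a subset of XPath, on a well-formed, made-up snippet; the class names are hypothetical and do not reflect the real AliExpress markup, which would normally be parsed from the rendered page with a full XPath engine.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed markup; class names are hypothetical (assumption).
SAMPLE_MARKUP = """
<div>
  <h1 class="title">Cordless Drill</h1>
  <span class="price-current">$40.04</span>
  <ul class="specs">
    <li><span class="name">Brand Name</span><span class="value">PATUOPRO</span></li>
    <li><span class="name">Power Source</span><span class="value">Battery</span></li>
  </ul>
</div>
"""


def parse_product(markup: str) -> dict:
    """Parse name, price and specifications out of the sample markup."""
    root = ET.fromstring(markup.strip())
    name = root.findtext(".//h1[@class='title']")
    price_text = root.findtext(".//span[@class='price-current']") or ""
    specifications = [
        {
            "name": li.findtext("span[@class='name']"),
            "value": li.findtext("span[@class='value']"),
        }
        for li in root.findall(".//ul[@class='specs']/li")
    ]
    return {
        "info": {"name": name},
        # Strip the currency symbol before converting; None if no price found.
        "pricing": {"price": float(price_text.lstrip("$")) if price_text else None},
        "specifications": specifications,
    }


if __name__ == "__main__":
    print(parse_product(SAMPLE_MARKUP))
```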

Above, we extracted the full product details. However, we are still missing the review data. Let's take a look at how to scrape Aliexpress review data.

Scraping Aliexpress Reviews

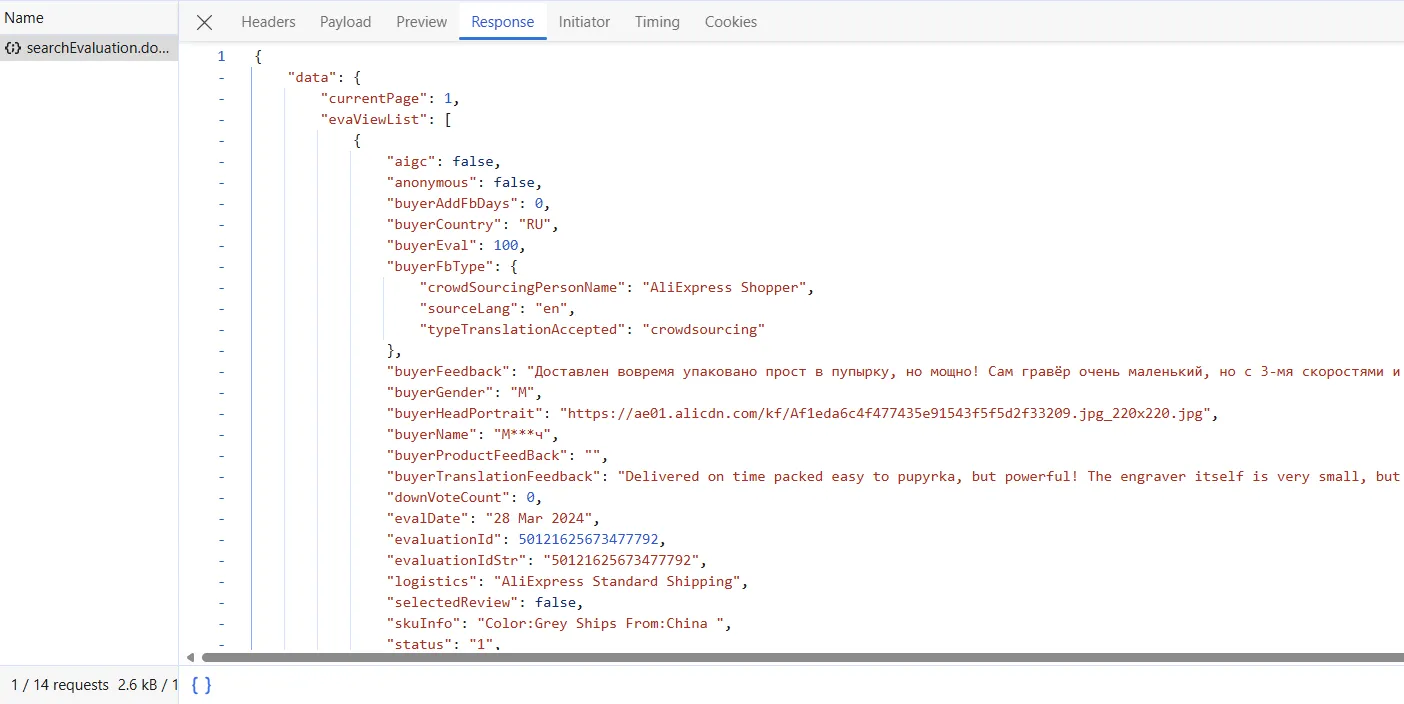

Aliexpress' product reviews are dynamically retrieved through background API requests. To view this API, follow the steps below:

- Open the browser developer tools by pressing the F12 key

- Select the Network tab and filter by Fetch/XHR calls

- Trigger the review API by clicking the "View More" button to fetch all reviews

After following the above steps, you will see the below XHR request captured:

Above, we can see the review data retrieved directly as JSON, which is later rendered into HTML. To scrape Aliexpress reviews, we'll request the API endpoint directly. This approach is commonly known as hidden API scraping.

Let's request the hidden Aliexpress reviews API within our scraper. We'll also utilize its URL parameters for pagination:

Above, we create a review crawler. It starts by requesting the review API for the first page to retrieve the total number of pages available. Then, the remaining review pages are scraped concurrently. Here's an example output of the above Aliexpress scraper:

Example Output:

{

"reviews": [

{

"aigc": false,

"anonymous": false,

"buyerAddFbDays": 0,

"buyerCountry": "US",

"buyerEval": 80,

"buyerFbType": {

"crowdSourcingPersonName": "AliExpress Shopper",

"sourceLang": "en",

"typeTranslationAccepted": "crowdsourcing"

},

"buyerFeedback": "Патрон меьалевий, все інше пластик, дуже легкий, патрон не швидкознімний - для дому піде, дуууже легкий.",

"buyerName": "a***n",

"buyerProductFeedBack": "",

"buyerTranslationFeedback": "The cartridge is mealevy, everything is plastic, it is light, the cartridge is not shvidkoznym-for the House, the duuguge is light.",

"downVoteCount": 0,

"evalDate": "25 Apr 2024",

"evaluationId": 30072011607750988,

"evaluationIdStr": "30072011607750988",

"logistics": "Aliexpress Selection Standard",

"selectedReview": false,

"skuInfo": "Color:1PC 2.0Ah Battery Plug Type:EU Ships From:CHINA ",

"status": "1",

"trendyol": false,

"upVoteCount": 0

},

....

],

"evaluation_stats": {

"evarageStar": 4.8,

"evarageStarRage": 96.8,

"fiveStarNum": 447,

"fiveStarRate": 89.0,

"fourStarNum": 31,

"fourStarRate": 6.2,

"negativeNum": 15,

"negativeRate": 3.0,

"neutralNum": 9,

"neutralRate": 1.8,

"oneStarNum": 11,

"oneStarRate": 2.2,

"positiveNum": 478,

"positiveRate": 95.2,

"threeStarNum": 9,

"threeStarRate": 1.8,

"totalNum": 502,

"twoStarNum": 4,

"twoStarRate": 0.8

}

}
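The pagination logic of the review crawler can be sketched as follows. The endpoint below is a placeholder, not the real review API URL, and the `productId`, `page` and `pageSize` parameter names are assumptions; substitute the URL and parameters captured in the browser's Network tab. Given the total review count from the first response, the sketch builds the URLs of the remaining pages.

```python
import math
from typing import List, Optional
from urllib.parse import urlencode

# Placeholder endpoint (assumption): replace it with the XHR URL captured
# in the browser developer tools.
REVIEW_API_URL = "https://example.com/review-api"


def review_page_url(product_id: str, page: int, page_size: int = 10) -> str:
    """Build the URL of one review page; parameter names are assumptions."""
    params = {"productId": product_id, "page": page, "pageSize": page_size}
    return f"{REVIEW_API_URL}?{urlencode(params)}"


def remaining_review_urls(
    product_id: str,
    total_reviews: int,
    page_size: int = 10,
    max_pages: Optional[int] = None,
) -> List[str]:
    """URLs for pages 2..N, where N is derived from the total review count."""
    total_pages = math.ceil(total_reviews / page_size)
    if max_pages is not None:
        total_pages = min(total_pages, max_pages)
    return [
        review_page_url(product_id, page, page_size)
        for page in range(2, total_pages + 1)
    ]


if __name__ == "__main__":
    # 502 is the totalNum value from the example output above.
    urls = remaining_review_urls("1005006717259012", total_reviews=502)
    print(len(urls), urls[0])
```

These URLs can then be requested concurrently, in the same way as the remaining search pages.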

With this last feature, we've covered the main scrape targets of Aliexpress: we scraped search to find products, product pages to find product data and product reviews to gather feedback intelligence. Finally, to scrape at scale, let's take a look at how we can avoid blocking and captchas.

Bypass Aliexpress Blocking and Captchas

Scraping product data from Aliexpress.com seems easy. Unfortunately, when scraping at scale we might get blocked or asked to solve captchas, which will hinder our web scraping process.
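One basic mitigation, listed in the key takeaways, is to retry blocked requests with exponential backoff when a 403 status code comes back. A minimal sketch is shown below. The `fetch` callable, the `make_flaky_fetch` test double and the default delays are assumptions made for illustration; a real scraper would pass in a function that performs the HTTP request and returns the status code and body.

```python
import time
from typing import Callable, Tuple

FetchResult = Tuple[int, str]  # (status code, response body)


def fetch_with_backoff(
    fetch: Callable[[str], FetchResult],
    url: str,
    max_retries: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> FetchResult:
    """Call fetch(url), retrying on HTTP 403 with exponentially growing delays."""
    status, body = fetch(url)
    for attempt in range(max_retries):
        if status != 403:
            break
        # Delay doubles on every retry: base, 2*base, 4*base, ...
        sleep(base_delay * 2 ** attempt)
        status, body = fetch(url)
    return status, body


def make_flaky_fetch(failures: int) -> Callable[[str], FetchResult]:
    """Test double: answers 403 for the first `failures` calls, then 200."""
    calls = {"count": 0}

    def fetch(url: str) -> FetchResult:
        calls["count"] += 1
        if calls["count"] <= failures:
            return 403, "blocked"
        return 200, f"content of {url}"

    return fetch


if __name__ == "__main__":
    delays = []
    fetch = make_flaky_fetch(failures=2)
    # Record the delays instead of actually sleeping.
    print(fetch_with_backoff(fetch, "https://www.aliexpress.com/", sleep=delays.append))
    print(delays)
```

Injecting `sleep` keeps the function easy to test; in production the default `time.sleep` applies, and adding random jitter to each delay is a common refinement.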

To get around blocking and captchas, let's take advantage of the ScrapFly API, which can avoid these blocks for us!

It offers several powerful features that help get around AliExpress's blocking.

For this, we'll be using scrapfly-sdk python package and ScrapFly's anti scraping protection bypass feature. First, let's install scrapfly-sdk using pip:

$ pip install scrapfly-sdk

To take advantage of ScrapFly's API in our AliExpress product scraper, all we need to do is replace our httpx session code with scrapfly-sdk requests.

FAQ

To wrap this guide up, let's take a look at some frequently asked questions about web scraping aliexpress.com:

Is web scraping aliexpress.com legal?

Yes. Aliexpress product data is publicly available, and we're not extracting anything personal or private. Scraping aliexpress.com at slow, respectful rates would fall under the ethical scraping definition. See our Is Web Scraping Legal? article for more.

Is there an Aliexpress API?

No. Currently there's no public API for retrieving product data from Aliexpress.com. Fortunately, as covered in this tutorial, web scraping Aliexpress is easy and can be done with a few lines of Python code!

Scraped Aliexpress data is not accurate, what can I do?

The main cause of data differences is geolocation. Aliexpress.com shows different prices and products based on the user's location, so the scraper needs to match the location of the desired data. See our previous guide on web scraping localization for more details on changing the web scraping language, price or location.

Aliexpress Scraping Summary

In this tutorial, we built an Aliexpress data scraper capable of using the search system to discover products and scraping product data and product reviews.

We used Python with the httpx and parsel packages and, to avoid being blocked, ScrapFly's API, which smartly configures every web scraper connection. For more on ScrapFly, see our documentation and try it out for free!

Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect, and here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose entire public datasets, which can be illegal in some countries.