How to Scrape With Headless Firefox

Discover how to use headless Firefox with Selenium, Playwright, and Puppeteer for web scraping, including practical examples for each library.

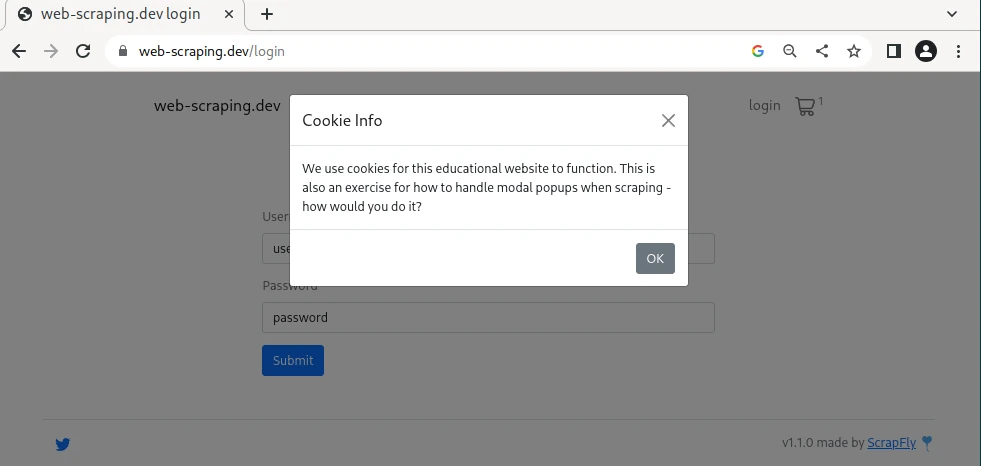

Modal pop-ups are most commonly encountered as cookie consent or login-request pop-ups. They are created with custom JavaScript that hides the page content on load and displays a message instead, like the one shown above.

There are two common ways to handle modal pop-ups: click the button that dismisses them, or remove them from the page's DOM entirely.

For example, let's look at the web-scraping.dev/login page, which shows a cookie pop-up on page load:

```python
from playwright.sync_api import sync_playwright, TimeoutError

with sync_playwright() as p:
    # headless=False opens a visible window so you can watch the pop-up
    browser = p.chromium.launch(headless=False)
    page = browser.new_page()
    page.goto("https://web-scraping.dev/login")

    # Option #1 - use page.click() to click on the dismiss button
    try:
        page.click("#cookie-ok", timeout=2_000)
    except TimeoutError:
        print("no cookie popup")

    # Option #2 - delete the pop-up HTML
    # remove the pop-up itself
    cookie_modal = page.query_selector("#cookieModal")
    if cookie_modal:
        cookie_modal.evaluate("el => el.remove()")

    # remove the grey backdrop which covers the screen
    modal_backdrop = page.query_selector(".modal-backdrop")
    if modal_backdrop:
        modal_backdrop.evaluate("el => el.remove()")

    browser.close()
```

Above, we explore two ways to handle modal pop-ups: clicking the button that dismisses them and removing them from the DOM outright. Generally, the first approach is more reliable, because a real button click can trigger functionality attached to it, such as setting a cookie so the pop-up doesn't appear again. When the pop-up is a login wall or an advertisement with no dismiss button, the second approach is better suited.

This knowledgebase is provided by Scrapfly, a web scraping API that lets you scrape any website without getting blocked and implements dozens of other web scraping conveniences.