PerimeterX is one of the most popular anti-bot protection services on the market, offering a wide range of protection against bots and scrapers. PerimeterX has different products, such as bot defender, page defender, and API defender, which are all used as anti-bot systems to block web scrapers.

In this article, we'll explain how to bypass PerimeterX bot protection. We'll first look at how PerimeterX detects scrapers using various web technologies.

We'll also cover common PerimeterX errors and signs that indicate PerimeterX detection and blocking.

Finally, we'll follow up with how to modify scraper code to avoid being detected by PerimeterX during data extraction. Let's dive in!

Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect, and here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose entire public datasets, which can be illegal in some countries.

What is PerimeterX?

PerimeterX (now part of HUMAN) is a web service that protects websites and apps from automated scripts, such as web scrapers. It uses a combination of web technologies and behavior analysis to determine whether the request sender is a human or a bot.

It is used for bot protection on popular websites like Zillow.com, Fiverr, and many others. So, by understanding how to bypass the PerimeterX bot detection system, we can open the door to scraping many target websites.

Next, let's take a look at some popular PerimeterX errors.

Popular PerimeterX Errors

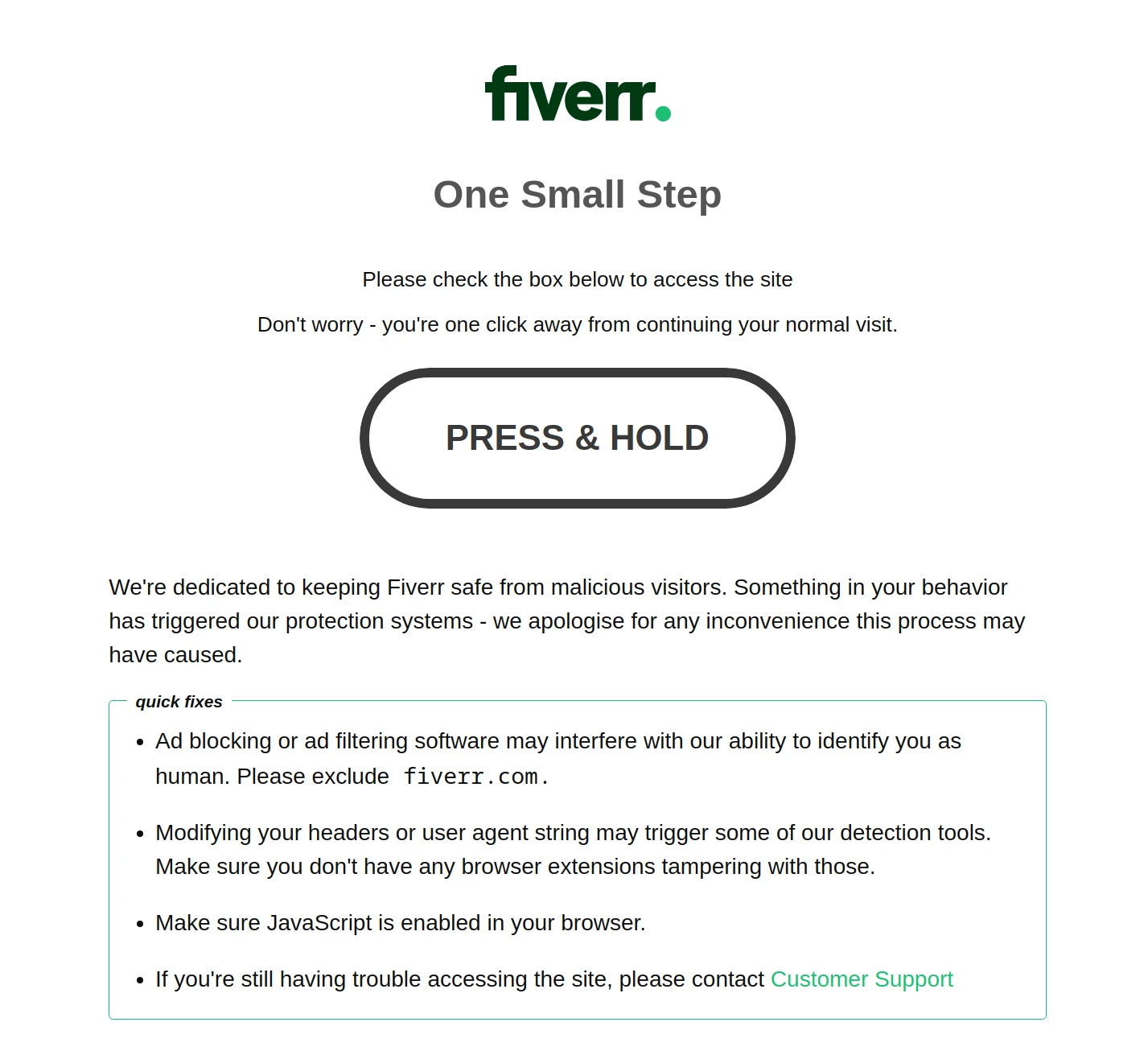

Most of the PerimeterX bot blocks result in HTTP status codes 400-500, 403 being the most common one. The response body usually contains a request to "enable JavaScript" or "press and hold" button:

This error is mostly encountered on the first request to the target website. However, PerimeterX also uses behavioral analysis techniques, so it can block HTTP requests at any point during the web scraping process.
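
The block signs described above can be checked programmatically. Below is a minimal sketch, assuming the block page contains one of the two phrases mentioned earlier; the function name and marker list are illustrative, and real block pages may use different wording:

```python
# Phrases taken from the block pages described above; real pages may differ.
BLOCK_MARKERS = ("press and hold", "enable javascript")


def looks_like_perimeterx_block(status_code: int, body: str) -> bool:
    """Heuristically flag a response as a likely PerimeterX block page."""
    # Blocks are reported with 4xx/5xx status codes, most commonly 403.
    if not 400 <= status_code < 600:
        return False
    text = body.lower()
    return any(marker in text for marker in BLOCK_MARKERS)


if __name__ == "__main__":
    print(looks_like_perimeterx_block(403, "<h1>Press and Hold to confirm</h1>"))
    print(looks_like_perimeterx_block(200, "<h1>Product page</h1>"))
```

A check like this is best run on every response, not only the first one, since blocking can start at any point during scraping.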

Let's take a look at how exactly PerimeterX is detecting bots and web scrapers, which results in the "press and hold" CAPTCHA challenge.

How Does PerimeterX Detect Web Scrapers?

To detect bots and web scraping attempts, PerimeterX uses several technologies to estimate whether traffic comes from bots or genuine users.

PerimeterX uses a combination of various fingerprinting methods and connection analysis to calculate a trust score for each client. This score determines whether the user can access the website or not.

Based on the final trust score, the user is either allowed to access the website or blocked with a PerimeterX block page, which may require solving a JavaScript challenge (i.e. the "press and hold" button).

This process makes it challenging to scrape a PerimeterX-protected website, as many factors play a role in the detection process. However, if we study each factor individually, we can see that bypassing PerimeterX is very much possible!
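
To make the idea of a trust score more concrete, here is a toy model. PerimeterX does not publish how it scores clients, so the signal names, weights and threshold below are all hypothetical and only illustrate how several independent checks could add up to one allow-or-block decision:

```python
from dataclasses import dataclass

# Hypothetical weights: PerimeterX does not publish its scoring model.
WEIGHTS = {
    "browser_like_tls": 25,
    "residential_or_mobile_ip": 25,
    "browser_like_http": 20,
    "javascript_fingerprint_ok": 30,
}
BLOCK_THRESHOLD = 60  # assumed cut-off, for illustration only


@dataclass
class ClientSignals:
    browser_like_tls: bool
    residential_or_mobile_ip: bool
    browser_like_http: bool
    javascript_fingerprint_ok: bool


def trust_score(signals: ClientSignals) -> int:
    """Sum the weights of every check the client passes (0 to 100)."""
    return sum(weight for name, weight in WEIGHTS.items() if getattr(signals, name))


def is_allowed(signals: ClientSignals) -> bool:
    """Allow the client only if its score reaches the assumed threshold."""
    return trust_score(signals) >= BLOCK_THRESHOLD
```

In this toy model a plain HTTP script on a datacenter IP fails every check and is blocked, while a real browser can still pass even with one weak signal. That is why each factor is worth improving individually.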

TLS Fingerprinting

TLS (or SSL) is the first step in establishing an HTTPS connection when a request is sent to a web page. It is used to encrypt the data sent between the client and the server over the secure HTTPS channel.

The first step of this process is called the TLS handshake, where the client and the server negotiate the encryption methods. This is where TLS fingerprinting comes into play: since different computers, programs and even programming libraries have different TLS capabilities, the handshake produces a unique fingerprint.

So, if a web scraper uses a library with different TLS capabilities compared to a regular web browser, it can be identified quite easily. This is generally referred to as JA3 fingerprinting.

For example, some libraries and tools used in web scraping have unique TLS negotiation patterns that can be recognized instantly. On the other hand, other clients can use the same TLS techniques as a regular web browser, making it difficult to detect them as bots.
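
As a rough illustration of how a JA3 fingerprint is derived, the sketch below joins the numeric values a client advertises in its TLS handshake into the JA3 string layout (TLS version, ciphers, extensions, curves and point formats, separated by commas and dashes) and hashes it with MD5. The handshake values used here are made up for the example and do not belong to any real browser or library:

```python
import hashlib
from typing import Sequence


def ja3_string(version: int, ciphers: Sequence[int], extensions: Sequence[int],
               curves: Sequence[int], point_formats: Sequence[int]) -> str:
    """Build the comma-separated JA3 string; values inside a field use dashes."""
    fields = [ciphers, extensions, curves, point_formats]
    return ",".join([str(version)] + ["-".join(map(str, f)) for f in fields])


def ja3_hash(ja3: str) -> str:
    """The JA3 fingerprint is the MD5 hex digest of the JA3 string."""
    return hashlib.md5(ja3.encode("ascii")).hexdigest()


if __name__ == "__main__":
    # Two made-up clients that differ only in cipher order.
    client_a = ja3_string(771, [4865, 4866, 4867], [0, 23, 10, 11], [29, 23, 24], [0])
    client_b = ja3_string(771, [4867, 4866, 4865], [0, 23, 10, 11], [29, 23, 24], [0])
    print(client_a, ja3_hash(client_a))
    print(client_b, ja3_hash(client_b))
```

Even a small difference, such as the order of the offered ciphers, yields a completely different hash, which is why an HTTP library is so easy to tell apart from a browser at this level.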

To validate your TLS fingerprint, see ScrapFly's JA3 fingerprint web tool, which shows the JA3 fingerprint of the connecting client.

So, use web scraping tools and libraries that are resistant to JA3 fingerprinting to have a PerimeterX bypass with a high success rate.

For further details, refer to our dedicated guide on TLS fingerprinting.

How TLS Fingerprint is Used to Block Web Scrapers?

TLS fingerprinting is a popular way to identify web scrapers that not many developers are aware of. What is it and how can we fortify our scrapers to avoid being detected?

IP Address Fingerprinting

The next step of the PerimeterX anti-bot identification process is the IP address analysis. Since IP addresses come in different types, they can reveal a lot of information about the client.

PerimeterX uses the IP address details to determine whether traffic is coming from bots or human users.

There are different types of IP addresses that are offered by most proxy providers:

- Residential proxies - home addresses assigned by internet providers to retail individuals. So, residential IP addresses provide a positive trust score as these are mostly used by humans and are expensive to acquire.

- Mobile proxies - addresses assigned by mobile phone towers to mobile users. Mobile IPs also provide a positive trust score as these are mostly used by humans. In addition, since mobile towers might share and recycle IP addresses, it makes it more difficult for anti-bot solutions to rely on IP addresses for bot identification.

- Datacenter proxies - addresses assigned to various data centers and server platforms like Amazon's AWS, Google Cloud etc. Datacenter IPs provide a significant negative trust score, as they are likely to be used by bots and scripts.

Using IP monitoring, the PerimeterX anti-bot system can estimate how likely the connecting client is to be a human, as most people browse from residential or mobile IPs, while datacenter IPs are mostly used by bots and scripts.
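
The classification above can be pictured as a lookup from IP address to address type. The sketch below is only an illustration: a real detector would rely on ASN and IP intelligence databases, whereas here the ranges are placeholder documentation networks chosen for the example:

```python
import ipaddress

# Placeholder ranges from the reserved documentation blocks (RFC 5737);
# a real detector would use ASN / IP intelligence databases instead.
KNOWN_RANGES = {
    "datacenter": [ipaddress.ip_network("192.0.2.0/24")],
    "mobile": [ipaddress.ip_network("198.51.100.0/24")],
    "residential": [ipaddress.ip_network("203.0.113.0/24")],
}


def classify_ip(address: str) -> str:
    """Return the assumed address type, or 'unknown' if no range matches."""
    ip = ipaddress.ip_address(address)
    for ip_type, networks in KNOWN_RANGES.items():
        if any(ip in network for network in networks):
            return ip_type
    return "unknown"


if __name__ == "__main__":
    for candidate in ("192.0.2.10", "203.0.113.5", "8.8.8.8"):
        print(candidate, classify_ip(candidate))
```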

Furthermore, PerimeterX can detect a high amount of traffic coming from the same IP address, leading to IP throttling or even blocking. Therefore, hiding the IP address and distributing the traffic across multiple IP addresses can prevent PerimeterX from detecting the IP origin.
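
Distributing traffic across IP addresses can be as simple as cycling through a proxy pool. Here is a minimal round-robin sketch; the proxy URLs are placeholders, and how the chosen proxy is passed to the HTTP client depends on the library in use:

```python
import itertools
from typing import Iterator, List


def proxy_rotator(proxies: List[str]) -> Iterator[str]:
    """Return an endless iterator that yields proxies in round-robin order."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    return itertools.cycle(proxies)


if __name__ == "__main__":
    # Hypothetical proxy endpoints, for illustration only.
    pool = proxy_rotator([
        "http://user:pass@proxy1.example.com:8000",
        "http://user:pass@proxy2.example.com:8000",
        "http://user:pass@proxy3.example.com:8000",
    ])
    for url in ("/page/1", "/page/2", "/page/3", "/page/4"):
        print(url, "via", next(pool))
```

Round-robin keeps the request count per IP even. Weighted or random selection are reasonable alternatives when proxies differ in quality.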

For a more in-depth look, refer to our guide on IP address blocking.

How to Avoid Web Scraper IP Blocking?

How IP addresses are used in web scraping blocking. Understanding IP metadata and fingerprinting techniques to avoid web scraper blocks.

HTTP Details

The next phase of PerimeterX fingerprinting is the HTTP connection itself, starting with the protocol version.

Most of the web runs on HTTP2, while many web scraping tools still use HTTP1.1, which is a dead giveaway that the client is a bot. Many newer HTTP clients like httpx or cURL support HTTP2, but it's not always enabled by default (httpx, for example, requires it to be switched on explicitly).

HTTP2 can also be susceptible to fingerprinting. So, check ScrapFly's http2 fingerprint test page for more info.

Then HTTP header values and their order can play a major role:

- Header Values: pay attention to X-prefixed headers and the usual suspects like User-Agent, Origin and Referer, as they can be used to identify web scrapers.

- Header Order: web browsers have a specific way of ordering request headers. So, if the headers are not ordered in the same way as a web browser, the request can be identified as coming from a bot. Moreover, some HTTP libraries, such as requests in Python, do not respect the header order and can be easily identified.

So, make sure the headers used in web scraper requests match those of a real web browser, including their order, to bypass PerimeterX-protected websites while scraping.
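
One way to keep header order consistent is to sort outgoing headers against a reference order captured from a real browser. The sketch below assumes such a reference list; the order shown is illustrative only and should be replaced with one observed in your own browser's network inspector, since it varies by browser and version:

```python
from typing import Dict

# Assumed ordering for illustration; capture the real order from your own
# browser, as it differs between browsers and versions.
BROWSER_HEADER_ORDER = [
    "Host",
    "Connection",
    "Upgrade-Insecure-Requests",
    "User-Agent",
    "Accept",
    "Accept-Encoding",
    "Accept-Language",
    "Cookie",
]


def order_like_browser(headers: Dict[str, str]) -> Dict[str, str]:
    """Return a new dict with headers sorted by the reference browser order."""
    rank = {name.lower(): i for i, name in enumerate(BROWSER_HEADER_ORDER)}
    # Headers missing from the reference go last; sorted() is stable, so they
    # keep their original relative order.
    ordered = sorted(headers.items(), key=lambda kv: rank.get(kv[0].lower(), len(rank)))
    return dict(ordered)


if __name__ == "__main__":
    scraper_headers = {
        "Accept-Language": "en-US,en;q=0.9",
        "User-Agent": "Mozilla/5.0 (example)",
        "X-Custom": "1",
        "Accept": "text/html",
    }
    print(list(order_like_browser(scraper_headers)))
```

Note that this only fixes the order of the dictionary. Whether the order survives on the wire still depends on the HTTP client being used.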

For more details, refer to our guide on the role of HTTP headers in web scraping.

How Headers Are Used to Block Web Scrapers and How to Fix It

Introduction to web scraping headers - what do they mean, how to configure them in web scrapers and how to avoid being blocked.

Javascript Fingerprinting

Finally, the most powerful tool in PerimeterX's arsenal is the JavaScript fingerprinting.

Since the server can execute arbitrary JavaScript code on the client's side, it can extract various details about the connecting client, such as:

- Javascript runtime details.

- Hardware details and capabilities.

- Operating system details.

- Web browser details.

That's loads of data that can be used while calculating the trust score.
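
To show how such details can add up to an identifier, the sketch below hashes a set of collected attributes into a single fingerprint ID. This is not PerimeterX's actual algorithm, which is not public; the attribute names are examples of values a script could read in a browser:

```python
import hashlib
import json
from typing import Any, Dict


def fingerprint_id(attributes: Dict[str, Any]) -> str:
    """Hash attributes in a canonical (key-sorted) form into a stable ID."""
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    # Example attribute set, for illustration only.
    regular_browser = {
        "platform": "Win32",
        "hardwareConcurrency": 8,
        "screen": [1920, 1080],
        "webdriver": False,
    }
    automated_browser = dict(regular_browser, webdriver=True)
    print(fingerprint_id(regular_browser))
    print(fingerprint_id(automated_browser))
```

A single differing attribute, such as an automation flag, changes the whole ID, and identical IDs showing up across many sessions are just as suspicious.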

Fortunately, JavaScript takes time to execute and is prone to false positives, which limits the practical application of JavaScript fingerprinting. In other words, few users will wait 3 seconds for a page to load or tolerate false positives.

For an in-depth look, refer to our article on JavaScript use in web scraping detection.

Bypassing the JavaScript fingerprinting is the most difficult task here. In theory, it's possible to reverse engineer and simulate all of the JavaScript tasks PerimeterX is performing and feed it fake results, but it's not practical.

A more practical approach is to use a real web browser for web scraping.

This can be done using browser automation libraries like Selenium, Puppeteer or Playwright that can start a real headless browser and navigate it for web scraping.

So, introducing browser automation to your scraping pipeline can drastically raise the trust score for bypassing PerimeterX.

Tip: many advanced scraping tools can even combine browser and HTTP scraping capabilities for optimal performance: use resource-heavy browsers to establish a trust score, then continue scraping with fast HTTP clients like httpx in Python (this feature is also available using Scrapfly sessions).
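
The handoff from browser to HTTP client mostly comes down to reusing the browser's cookies. Here is a minimal sketch that turns a list of cookie dictionaries (the shape browser automation libraries commonly return, with `name`, `value` and `domain` keys) into a `Cookie` header value; the helper names and the sample cookies are illustrative:

```python
from typing import Any, Iterable, Mapping


def _domain_matches(host: str, cookie_domain: str) -> bool:
    """True if the cookie domain equals the host or is a parent domain of it."""
    cookie_domain = cookie_domain.lstrip(".")
    return host == cookie_domain or host.endswith("." + cookie_domain)


def cookie_header(browser_cookies: Iterable[Mapping[str, Any]], host: str) -> str:
    """Build a Cookie header value from browser cookies that apply to host."""
    pairs = [
        f"{cookie['name']}={cookie['value']}"
        for cookie in browser_cookies
        if _domain_matches(host, cookie.get("domain", ""))
    ]
    return "; ".join(pairs)


if __name__ == "__main__":
    # Sample cookies, as they might be exported from an automated browser.
    cookies = [
        {"name": "session_id", "value": "abc123", "domain": ".example.com"},
        {"name": "tracker", "value": "zzz", "domain": "ads.other.com"},
    ]
    print(cookie_header(cookies, "www.example.com"))
```

The follow-up HTTP requests should also reuse the browser's User-Agent and IP address, as a mismatch between the cookies and the rest of the fingerprint can itself be a detection signal.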

Behavior Analysis

Even when a scraper's initial connection looks the same as a real web browser's, PerimeterX can still detect it through behavior analysis using machine learning algorithms.

This is done by monitoring the connection and analyzing the behavior of the client, including:

- Pages that are being visited: humans browse in more chaotic patterns.

- Connection speed and rate: humans are slower and more random than bots.

- Loading of resources like images, scripts, stylesheets etc.

This means that the trust score is not a constant number and will be constantly adjusted based on the requests' behavior.

So, it's important to distribute web scraper traffic through multiple agents using proxies and different fingerprint configurations to avoid being flagged by behavior analysis. For example, if browser automation tools are used, each agent should use a different browser configuration, such as screen size, operating system, web browser version, IP address etc.
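
As a rough sketch of this idea, the function below spreads a list of URLs across several agents and assigns each request a randomized pause. The agent names and delay bounds are assumptions for the example; suitable values depend on the target website:

```python
import random
from typing import List, Optional, Sequence, Tuple


def plan_requests(urls: Sequence[str], agents: Sequence[str],
                  min_delay: float = 2.0, max_delay: float = 8.0,
                  seed: Optional[int] = None) -> List[Tuple[str, str, float]]:
    """Assign each URL to an agent (round-robin) with a random delay in seconds."""
    if not agents:
        raise ValueError("at least one agent is required")
    rng = random.Random(seed)
    plan = []
    for index, url in enumerate(urls):
        agent = agents[index % len(agents)]
        delay = rng.uniform(min_delay, max_delay)
        plan.append((agent, url, delay))
    return plan


if __name__ == "__main__":
    # Hypothetical agents, each standing for its own proxy + browser profile.
    for agent, url, delay in plan_requests(
        ["/p/1", "/p/2", "/p/3", "/p/4"], ["agent-1", "agent-2"], seed=42
    ):
        print(f"{agent} waits {delay:.1f}s, then requests {url}")
```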

How to Bypass PerimeterX Anti Bot?

Now that we're familiar with all of the ways PerimeterX can detect web scrapers, let's see how to bypass it.

To bypass PerimeterX at scale, we need strong technical knowledge of how to reverse engineer and fortify our scraper against detection, as well as a real web browser to solve JavaScript challenges.

Let's take a look at existing options and how they play a role in bypassing PerimeterX.

Start with Headless Browsers

As PerimeterX uses JavaScript fingerprinting and challenges to detect web scrapers, using headless browsers is often a requirement.

Scraping using headless browsers is a common web scraping technique which uses tools like Selenium, Puppeteer or Playwright to automate a real browser without its GUI elements.

Headless browsers can execute PerimeterX's JavaScript challenges and fingerprinting code, which helps bypass the anti-bot system. Alternatively, each JavaScript challenge and fingerprint has to be reverse engineered and solved manually, which is a very tough task even for seasoned developers.

Use High Quality Residential Proxies

As PerimeterX uses IP address analysis to determine the trust score, using high-quality residential proxies can help bypass the IP address fingerprinting.

Residential proxies are real IP addresses assigned by internet providers to retail individuals, making them look like real users.

The Complete Guide To Using Proxies For Web Scraping

Introduction to proxy usage in web scraping. What types of proxies are there? How to evaluate proxy providers and avoid common issues.

Web scraping APIs like Scrapfly already use high quality proxies by default as that's often the best way to bypass anti-scraping protection at scale.

Try undetected-chromedriver

To bypass TLS, HTTP and JavaScript fingerprinting, we can use a real web browser like Chrome or Firefox through Selenium, Playwright or Puppeteer automation libraries. However, these browsers are easily detected by PerimeterX when they're being run in headless mode.

Headless browsers behave slightly differently from regular ones, and this is where the undetected-chromedriver community patch can be helpful.

undetected-chromedriver patches Selenium chromedriver with various fixes that prevent headless browser identification by PerimeterX. This includes fixing the TLS, HTTP and Javascript fingerprints.

Try Puppeteer Stealth Plugin

Puppeteer for NodeJS is another popular headless browser automation library, like Selenium, that can be used to bypass PerimeterX.

Just like undetected-chromedriver for Selenium, Puppeteer Stealth Plugin is a community patch for Puppeteer that fixes the headless browser detection issues. It patches the TLS, HTTP and Javascript fingerprints to make the headless browser look like a real web browser.

Try curl-impersonate

curl-impersonate is a community tool that fortifies the libcurl HTTP client library to mimic the behavior of a real web browser. It patches the TLS and HTTP fingerprints to make the HTTP requests look like they're coming from a real web browser.

However, this only works with curl-powered web scrapers, which can be more difficult to use than contemporary HTTP clients like fetch or requests. For more, see our intro on how to scrape with curl.

Try Warming Up Scrapers

To bypass behavior analysis, adjusting scraper behavior to appear more natural can drastically increase PerimeterX trust scores.

In reality, most human users don't visit product URLs directly. They often explore websites in steps like:

- Start with homepage

- Browse product categories

- Search for product

- View product page

Prefixing scraping logic with this warmup behavior can make the scraper appear more human-like and reduce the chance of detection by behavior analysis.
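
The warmup steps above can be sketched as a function that builds the list of URLs to visit before the target product page. The paths and the `q` search parameter are assumptions for the example, as every website structures its URLs differently:

```python
from typing import List
from urllib.parse import urlencode, urljoin


def warmup_sequence(base_url: str, category_path: str, search_path: str,
                    query: str, product_path: str) -> List[str]:
    """Return URLs in a human-like order: home, category, search, product."""
    return [
        urljoin(base_url, "/"),
        urljoin(base_url, category_path),
        urljoin(base_url, search_path) + "?" + urlencode({"q": query}),
        urljoin(base_url, product_path),
    ]


if __name__ == "__main__":
    # Hypothetical shop layout, for illustration only.
    for url in warmup_sequence(
        "https://shop.example.com", "/category/shoes", "/search",
        "red shoes", "/product/123",
    ):
        print(url)
```

Each step should be requested within the same session, so that cookies and trust earned on the earlier pages carry over to the product page.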

Rotate Real User Fingerprints

For sustained web scraping and PerimeterX bypass in 2024, headless browsers should always be rotated through different, realistic fingerprint profiles: screen resolution, operating system and browser type all play an important role in PerimeterX's trust score.

Each headless browser library can be configured to use different resolution and rendering capabilities. Distributing scraping through multiple realistic browser configurations can prevent PerimeterX from detecting the scraper.
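
As a sketch of such rotation, the code below keeps a small pool of browser profiles and converts a chosen profile into Chrome command-line switches (`--window-size`, `--lang` and `--user-agent`), which can be passed to a Chrome-based automation library at launch. The profiles themselves are examples; a real pool should be collected from real browsers and kept internally consistent:

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class BrowserProfile:
    width: int
    height: int
    language: str
    user_agent: str


# Example profiles for illustration; collect real ones from real browsers.
PROFILES = [
    BrowserProfile(1920, 1080, "en-US",
                   "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                   "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"),
    BrowserProfile(1440, 900, "en-GB",
                   "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
                   "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"),
]


def pick_profile(seed: Optional[int] = None) -> BrowserProfile:
    """Pick a random profile; pass a seed for reproducible choices."""
    return random.Random(seed).choice(PROFILES)


def chrome_args(profile: BrowserProfile) -> List[str]:
    """Translate a profile into Chrome command-line switches."""
    return [
        f"--window-size={profile.width},{profile.height}",
        f"--lang={profile.language}",
        f"--user-agent={profile.user_agent}",
    ]


if __name__ == "__main__":
    print(chrome_args(pick_profile()))
```

Command-line switches only cover part of a fingerprint. Values read through JavaScript, such as the reported platform, need to agree with the chosen user agent, or the mismatch becomes a signal of its own.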

For more, see ScrapFly's browser fingerprint tool to check what your browser looks like to PerimeterX. This tool can also be used to collect fingerprints from real web browsers, which can then be used in scraping.

Keep an Eye on New Tools

Open source web scraping is tough, as each newly discovered technique is quickly patched by anti-bot services like PerimeterX, which results in a cat-and-mouse game.

For best results, tracking web scraping news and popular GitHub repositories can help you stay ahead of the curve:

- Scrapfly Blog for latest web scraping news and tutorials.

- GitHub issue and network pages for tools like curl-impersonate and undetected-chromedriver, which often contain new bypass techniques and patches that are not available on the main branch.

If all that seems like too much trouble let Scrapfly handle it for you! 👇

Bypass PerimeterX with ScrapFly

Bypassing the PerimeterX anti-bot, while possible, is very difficult - let Scrapfly do it for you!

ScrapFly is a web scraping API with an automatic PerimeterX bypass and we achieve this by:

- Maintaining a fleet of real, reinforced web browsers.

- Collecting a database of thousands of real fingerprint profiles.

- Millions of self-healing proxies of the highest possible trust score.

- Constantly evolving and adapting to new anti-bot systems.

- We've been doing this publicly since 2020 with the best bypass on the market!

It takes Scrapfly several full-time engineers to maintain this system, so you don't have to!

For example, to scrape web pages protected by PerimeterX using ScrapFly's Python SDK all we need to do is enable the Anti Scraping Protection bypass feature:

    from scrapfly import ScrapflyClient, ScrapeConfig

    scrapfly = ScrapflyClient(key="YOUR API KEY")
    result = scrapfly.scrape(ScrapeConfig(
        url="https://fiverr.com/",
        asp=True,
        # we can also enable headless browsers to render web apps and javascript powered pages
        render_js=True,
        # and set proxies by country like Japan
        country="JP",
        # and proxy type like residential:
        proxy_pool=ScrapeConfig.PUBLIC_RESIDENTIAL_POOL,
    ))
    print(result.scrape_result)

FAQ

To wrap up this article, let's take a look at some frequently asked questions regarding web scraping PerimeterX pages:

Is it legal to scrape PerimeterX protected pages?

Yes. Web scraping publicly available data is generally legal in most countries as long as the scrapers do not cause damage to the website.

Is it possible to bypass PerimeterX using cache services?

Yes, public page caching services like Google Cache or Archive.org can be used to bypass PerimeterX-protected pages, as Google and Archive.org tend to be whitelisted. However, since caching takes time, the cached page data is often outdated and not suitable for web scraping. Cached pages can also be missing parts of content that are loaded dynamically.
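
For Archive.org, cached copies are addressed by prefixing the original URL with a Wayback Machine path and a timestamp. The helpers below are a small sketch that only builds the URLs, one for a snapshot and one for the availability lookup endpoint; the function names are illustrative and no request is made:

```python
from urllib.parse import urlencode


def wayback_snapshot_url(url: str, timestamp: str) -> str:
    """Build a Wayback Machine snapshot URL (timestamp as YYYYMMDDhhmmss)."""
    return f"https://web.archive.org/web/{timestamp}/{url}"


def wayback_availability_url(url: str) -> str:
    """Build the availability lookup URL, which reports the closest snapshot."""
    return "https://archive.org/wayback/available?" + urlencode({"url": url})


if __name__ == "__main__":
    page = "https://example.com/page"
    print(wayback_snapshot_url(page, "20240101000000"))
    print(wayback_availability_url(page))
```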

Is it possible to bypass PerimeterX entirely and scrape the website directly?

No. PerimeterX integrates directly with the server software, so it is very difficult to reach the server without going through it. It is possible that some servers could have PerimeterX misconfigured but it's very unlikely.

What are some other anti-bot services?

There are many other anti-bot WAF services like Cloudflare, Akamai, Datadome, Imperva Incapsula and Kasada. However, they function very similarly to PerimeterX. So, all the technical aspects in this tutorial can be applied to them as well.

Summary

In this article, we took a deep dive into how to bypass PerimeterX anti-bot system when web scraping.

To start, we've taken a look at how PerimeterX identifies web scrapers through TLS, IP, HTTP and JavaScript client fingerprint analysis. We saw that using residential proxies and fingerprint-resistant libraries is a good start. Further, using real web browsers and rotating their fingerprint data can make web scrapers much more difficult to detect.

Finally, we've taken a look at some frequently asked questions like alternative bypass methods and the legality of it all.

For an easier way to handle web scraper blocking and power up your web scrapers check out ScrapFly for free!