Imperva (aka Incapsula) is a popular WAF service used by many websites like Glassdoor, Udemy, Zillow, and wix.com.

This service is used to block bots, such as web scrapers, from accessing the website. So, to scrape public data from these websites, scrapers need to bypass Imperva Incapsula bot protection.

In this article, we'll explain how to bypass Imperva's anti-scraping protection in 2026. We'll start by taking a quick look at what Imperva is, how to identify it, and how it identifies web scrapers. Then, we'll take a look at existing techniques and tools for bypassing Imperva bot protection. Let's dive in!

Key Takeaways

Learn to bypass Imperva Incapsula anti-scraping protection using Python with proper headers, proxy rotation, and specialized tools for accessing protected websites and avoiding bot detection.

- Identify Incapsula protection through specific error messages and HTTP status codes

- Bypass Incapsula's fingerprinting by mimicking real browser headers and TLS signatures

- Use residential proxies with proper IP rotation to avoid geographical blocking

- Handle Incapsula's JavaScript challenges with browser automation tools like Playwright or Puppeteer

- Implement exponential backoff retry logic with 403 status code detection for rate limiting

- Use specialized tools like ScrapFly for automated Incapsula bypass with anti-blocking features

What is Imperva (aka Incapsula)?

Imperva (previously known as Incapsula) is a WAF service suite that is used to protect websites from unwanted connections. In the context of web scraping, it is used to block web scrapers from accessing public data.

Imperva/Incapsula is one of the first WAF services to be used by websites to block web scraping attempts and is generally well understood by the web scraping community. Despite being around for years, Imperva continues to evolve with new detection methods in 2025-2026. So, let's explain how to identify it and how it identifies web scrapers.

Imperva Bot Protection & WAF Features

Imperva offers a comprehensive suite of bot protection and web application firewall features designed to stop automated threats. Understanding these features helps us better prepare our scrapers to handle them. Here are the main protection mechanisms:

Advanced Bot Protection: Imperva's advanced bot protection uses machine learning and behavioral analysis to distinguish between legitimate users and automated bots. In 2025, this system has become more sophisticated, analyzing hundreds of signals in real-time to make blocking decisions.

Web Scraping Defense: Specifically designed to detect and block web scraping attempts, this feature monitors for patterns typical of data extraction activities such as rapid page navigation, consistent timing patterns, and unusual request volumes.

Bot Management Features: Imperva provides granular control over bot traffic, allowing websites to:

- Block malicious bots completely

- Rate-limit suspicious traffic

- Challenge borderline cases with CAPTCHAs

- Allow verified good bots (like search engine crawlers)

API Abuse Protection: As APIs become prime targets for data extraction, Imperva includes specialized protection for API endpoints. This monitors for credential stuffing, account takeover attempts, and automated API scraping.

DDoS Protection: While primarily for preventing denial-of-service attacks, the DDoS protection also helps identify distributed scraping operations that might look like attack traffic patterns.

The combination of these features creates a multi-layered defense system. This is why bypassing Imperva requires a comprehensive approach addressing multiple detection vectors rather than a single technique.

Imperva Block Page Examples

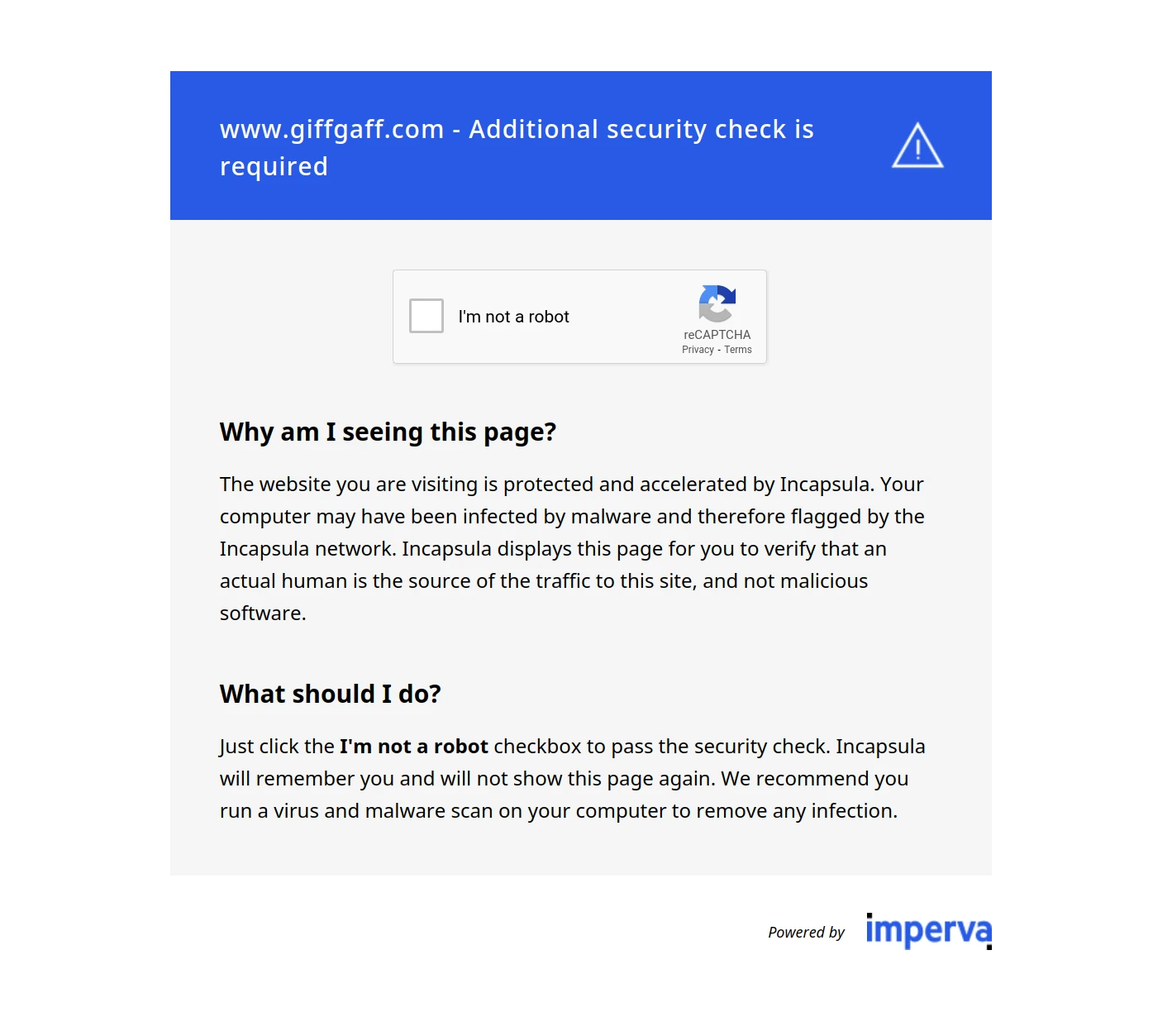

Most Imperva bot blocks result in HTTP status codes in the 400-500 range, with 403 being the most common. Moreover, block pages can be served with status code 200 to confuse web scrapers.

The HTML content often indicates the block is powered by Imperva:

These errors are mostly encountered on the first request to the website. However, Imperva can block requests at any point during the scraping process as it constantly tracks connections.

Here's the full list of Incapsula block fragments:

- "Powered By Incapsula" text snippet in HTML.

- "Incapsula incident ID" keyword in HTML.

- "_Incapsula_Resource" keyword in HTML.

- "subject=WAF Block Page" keyword in HTML.

- "visid_incap" value in request headers.

- "X-Iinfo" response header.

- "Set-Cookie" header has cookie fields "incap_ses" and "visid_incap".

- "X-CDN" header containing "Incapsula" or "Imperva" values.
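The fragments listed above can be checked programmatically. The following is a minimal sketch, not an official detection API: the function names `imperva_markers` and `looks_blocked` are hypothetical, and the rule that body markers alone signal a block (even on status 200) is an assumption based on the list above.

```python
# Markers taken from the fragment list above; matching is a heuristic.
BODY_MARKERS = (
    "Powered By Incapsula",
    "Incapsula incident ID",
    "_Incapsula_Resource",
    "subject=WAF Block Page",
)
COOKIE_MARKERS = ("incap_ses", "visid_incap")


def imperva_markers(headers, body):
    """Return the Imperva/Incapsula markers found in a response."""
    body_lower = body.lower()
    found = [m for m in BODY_MARKERS if m.lower() in body_lower]
    # Header names are case-insensitive, so normalize them first.
    lowered = {k.lower(): v for k, v in headers.items()}
    if "x-iinfo" in lowered:
        found.append("X-Iinfo header")
    cdn = lowered.get("x-cdn", "").lower()
    if "incapsula" in cdn or "imperva" in cdn:
        found.append("X-CDN header")
    cookies = lowered.get("set-cookie", "")
    found += [f"{c} cookie" for c in COOKIE_MARKERS if c in cookies]
    return found


def looks_blocked(status, headers, body):
    """Assumption: body markers mean a block page; header/cookie markers
    only mean the site uses Imperva, so they count only with an error status."""
    found = imperva_markers(headers, body)
    body_hit = any(m in found for m in BODY_MARKERS)
    return body_hit or (status >= 400 and bool(found))
```

A scraper could call `looks_blocked(response.status_code, dict(response.headers), response.text)` after every request, since a 200 status alone does not guarantee real content.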

How does Imperva identify web scrapers?

To detect web scrapers, Imperva takes advantage of many different analysis and fingerprinting techniques.

Imperva uses a combination of these techniques to calculate a trust score for each connecting client.

Based on the final trust score, Imperva decides whether to block the client, let it through or request an additional verification, such as CAPTCHA.
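The score-based decision can be pictured with a toy model. Everything in this sketch is invented for illustration: the signal names, the weights, and the thresholds are assumptions, as Imperva's real scoring is not public. It only shows why a score-based system does not require every signal to be perfect.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    tls_matches_browser: bool
    residential_ip: bool
    http2: bool
    browser_like_headers: bool
    javascript_executed: bool


# Hypothetical weights (they sum to 100); not Imperva's real values.
WEIGHTS = {
    "tls_matches_browser": 25,
    "residential_ip": 25,
    "http2": 10,
    "browser_like_headers": 15,
    "javascript_executed": 25,
}


def trust_score(signals):
    """Sum the weights of every signal that looks human."""
    return sum(w for name, w in WEIGHTS.items() if getattr(signals, name))


def decide(score, allow_at=70, challenge_at=40):
    """Map a score to one of the three outcomes described above."""
    if score >= allow_at:
        return "allow"
    if score >= challenge_at:
        return "captcha"
    return "block"
```

In this toy model, a client that gets everything right except HTTP/2 still scores 90 and passes, while a client with only good headers and HTTP/2 scores 25 and is blocked.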

This process may seem complex and daunting. However, if we study each component individually, we'll find that bypassing Imperva is possible. Let's take a look at each of these components.

TLS Fingerprinting

TLS (or SSL) fingerprinting is a modern technique for identifying a client based on the way the client and server negotiate an encrypted connection. The resulting fingerprint is known as JA3 (and its successor, JA4).

For a secure connection (i.e., https), the encryption method needs to be negotiated between the client and server, as there are many different cipher and encryption options. So, if a connecting client has unusual capabilities, it can be easily identified as a bot.

Libraries used in web scraping can have different encryption capabilities compared to a web browser. So, web scrapers can be easily identified by their TLS fingerprint even before the actual HTTP request is made.

To avoid this, use libraries and tools whose TLS handshake matches that of a real web browser, making them resistant to JA3/JA4 fingerprinting. To validate this, see ScrapFly's JA3 fingerprint web tool, which shows your connection fingerprint.

For more, see our full introduction to TLS fingerprinting, which covers the topic in greater detail.
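To make the fingerprint concrete, here is a minimal sketch of how a JA3 hash is derived from the values a client offers in its TLS ClientHello: five fields (TLS version, ciphers, extensions, elliptic curves, point formats) are joined into a string and hashed with MD5. The example values in the usage note are illustrative and are not taken from any real browser.

```python
import hashlib


def ja3_string(version, ciphers, extensions, curves, point_formats):
    """Build the JA3 string: fields joined by ',', values within a field by '-'."""
    fields = [[version], ciphers, extensions, curves, point_formats]
    return ",".join("-".join(str(v) for v in field) for field in fields)


def ja3_hash(ja3):
    """The JA3 fingerprint is the MD5 hex digest of the JA3 string."""
    return hashlib.md5(ja3.encode("ascii")).hexdigest()
```

For example, `ja3_string(771, [4865, 4866, 4867], [0, 10, 11, 13], [29, 23, 24], [0])` yields `771,4865-4866-4867,0-10-11-13,29-23-24,0`. Changing the order of the ciphers or extensions changes the hash, which is why an HTTP library with a different TLS stack is distinguishable from a browser even when its headers are identical.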

IP Address Fingerprinting

The next step is IP address analysis. Imperva has access to IP meta information databases that can be used to identify a client's intentions and capabilities.

For example, if the IP address belongs to a known proxy or datacenter service, it can be easily identified as a bot and get blocked. If the IP address is from a residential ISP, it is much more likely to be a human. The same goes for mobile networks.

As of 2025, Imperva has significantly expanded its IP reputation databases, making datacenter proxy detection more sophisticated. So, use high-quality residential or mobile proxies to avoid being detected.

For a more in-depth look, see our full introduction to IP blocking and what IP metadata fields are used in bot detection.

HTTP Details

With the connection established, the next step is HTTP connection analysis.

Most of the natural web runs on HTTP/2 and increasingly HTTP/3 protocols (that's what modern web browsers prefer). This makes any HTTP/1.1 connection suspicious. Moreover, some HTTP libraries still use or default to HTTP/1.1, which is a dead giveaway that the request is automated. More modern and feature-rich clients like Python's httpx or cURL support HTTP/2 (and cURL supports HTTP/3 in newer builds), though it's not always enabled by default.

Then, request header values and ordering can be used to identify the client. Web browser header generation is well understood and reliable, so it's on web scrapers to match it. For example, web browsers send headers like User-Agent, Sec-CH-UA, Sec-Fetch-Site, Origin and Referer in a specific order.

So, make sure to use HTTP/2 (or HTTP/3) and match header values and ordering of a real web browser.

For more, see our full introduction to the role of request headers in blocking.
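As a rough sketch of the advice above, the snippet below builds a Chrome-like header set and sends it over HTTP/2 with httpx. The header values and their order are illustrative assumptions rather than a capture from a real browser, and `browser_headers` and `fetch` are hypothetical helper names. Note that httpx adds its own default headers and may not preserve the exact order on the wire, so it is worth verifying what is actually sent.

```python
def browser_headers(chrome_major=120):
    """Chrome-like headers for a top-level navigation (illustrative values)."""
    v = chrome_major
    return {
        "sec-ch-ua": f'"Not_A Brand";v="8", "Chromium";v="{v}", "Google Chrome";v="{v}"',
        "sec-ch-ua-mobile": "?0",
        "sec-ch-ua-platform": '"Windows"',
        "upgrade-insecure-requests": "1",
        "user-agent": (
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
            f"(KHTML, like Gecko) Chrome/{v}.0.0.0 Safari/537.36"
        ),
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "sec-fetch-site": "none",
        "sec-fetch-mode": "navigate",
        "sec-fetch-user": "?1",
        "sec-fetch-dest": "document",
        # Browsers also advertise br/zstd; add them only if your client can decode them.
        "accept-encoding": "gzip, deflate",
        "accept-language": "en-US,en;q=0.9",
    }


def fetch(url):
    """Send one request over HTTP/2 and report the negotiated protocol."""
    import httpx  # third-party; HTTP/2 support needs: pip install "httpx[http2]"

    with httpx.Client(http2=True, headers=browser_headers()) as client:
        response = client.get(url)
        return response.http_version, response.status_code
```

If `fetch` returns `"HTTP/1.1"` as the protocol, the server or an intermediate proxy did not negotiate HTTP/2, which is worth fixing before tuning anything else.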

JavaScript Fingerprinting

The final step is JavaScript fingerprinting. This is a very powerful technique that can be used to identify a client based on the way it executes JavaScript code.

Since the server can execute almost any JavaScript code on the client's machine, it can extract a lot of information about the client, like:

- JavaScript engine details

- Hardware and operating system information

- Web browser data and rendering capabilities

- Canvas and WebGL fingerprinting

- Audio context fingerprinting

This combination of data can be used by Imperva to identify web scrapers. To get around this, we have two approaches:

The first one is to intercept the JavaScript fingerprinting and feed Imperva with fake data. However, this requires a lot of effort and is not very reliable as any updates to the fingerprinting code will break our logic.

Alternatively, we can use a headless browser to execute the JavaScript code. This is a much more reliable approach, as a real browser produces genuine values no matter how the fingerprinting code changes.

Headless browsers can be controlled by web scraping libraries like Puppeteer, Selenium or Playwright. Community stealth plugins for these tools patch common automation leaks and make detection even harder. These tools can be used to control a real web browser to establish a trustworthy connection with Imperva.

So, using headless browser automation with Selenium, Puppeteer or Playwright is an easy way to handle JavaScript fingerprinting.

Many advanced web scraping tools can jump between headless browsers and raw HTTP connections. So, the trust score can be established using headless browser-based scraping, and then the scraper can switch to fast HTTP requests - this feature is also available in ScrapFly.
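The browser-then-HTTP handoff can be sketched with Playwright's sync API: load the page once in a real browser, then reuse its cookies and user agent in a plain HTTP client. This is a minimal sketch under assumptions: `warm_up_session` and `cookie_header` are hypothetical helper names, and whether the cookies stay valid for an HTTP client with a different TLS fingerprint depends on the target site.

```python
def cookie_header(cookies):
    """Join browser cookies (dicts with 'name' and 'value') into a Cookie header."""
    return "; ".join(f"{c['name']}={c['value']}" for c in cookies)


def warm_up_session(url):
    """Load the page in a real browser and return (cookie header, user agent)."""
    # third-party: pip install playwright && playwright install chromium
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        context = browser.new_context()
        page = context.new_page()
        # Wait for network activity to settle so challenge scripts can finish.
        page.goto(url, wait_until="networkidle")
        cookies = context.cookies()
        user_agent = page.evaluate("navigator.userAgent")
        browser.close()
    return cookie_header(cookies), user_agent
```

The returned values can be sent as the `Cookie` and `User-Agent` headers of follow-up HTTP requests. Keeping the same proxy IP for both phases is a reasonable precaution, since the session was established from that address.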

Behavioral Analysis

Even if we address all of these detection methods, Imperva can still identify scrapers due to continuous behavioral analysis.

As Imperva tracks all connection details and patterns, it can use this information to adjust the trust score constantly, which can lead to blocking or CAPTCHA challenges. In 2025-2026, Imperva's machine learning models have become more sophisticated at detecting unnatural browsing patterns.

So, it's important to distribute the scraping load through multiple agents using proxies and different fingerprint configurations. For example, when scraping using browser automation tools, it's important to use a collection of different profiles like screen size, operating system, rendering capabilities together with IP proxies.
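One way to picture this distribution is a pool of scraping profiles, each combining a viewport, a platform, and a proxy. The sketch below is illustrative only: the viewport sizes, platform names, and proxy URLs are placeholder assumptions, and `build_profiles` is a hypothetical helper.

```python
import itertools
import random

VIEWPORTS = [(1920, 1080), (1536, 864), (1366, 768)]
PLATFORMS = ["Windows", "macOS", "Linux"]
# Placeholder proxy URLs; replace with real residential or mobile proxies.
PROXIES = ["http://proxy-1.example:8000", "http://proxy-2.example:8000"]


def build_profiles(seed=None):
    """Return every viewport/platform combination in random order,
    assigning proxies round-robin so the load is spread evenly."""
    rng = random.Random(seed)
    combos = list(itertools.product(VIEWPORTS, PLATFORMS))
    rng.shuffle(combos)
    return [
        {
            "viewport": {"width": w, "height": h},
            "platform": platform,
            "proxy": PROXIES[i % len(PROXIES)],
        }
        for i, ((w, h), platform) in enumerate(combos)
    ]
```

Each profile can then be passed to a separate browser context. The platform should stay consistent with the user agent and other fingerprint details of that context, as mismatched values are themselves a detection signal.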

How to Bypass Imperva?

We can see that there's a lot going on when it comes to Imperva's anti-bot technology. Since it uses a score-based approach, we don't necessarily need to bypass all of the detection methods perfectly. To quickly summarize, here's where scrapers can be improved to avoid detection:

- Use high quality residential or mobile proxies (datacenter proxies are easily detected in 2026)

- Use HTTP/2 or HTTP/3 version for all requests

- Match request header values and ordering of a real web browser (including Sec-CH-UA headers)

- Use headless browser automation to generate JavaScript fingerprints (Playwright recommended)

- Distribute web scraper traffic through multiple agents

- Implement realistic timing delays between requests

- Rotate user agents and browser profiles

Note that as Imperva is developing and improving its methods, it's important to stay in touch with web scraping tool and library updates. For example, see the Puppeteer stealth plugin, which keeps track of new fingerprinting techniques.

Troubleshooting Common Imperva Blocks

Even with proper bypass techniques in place, you may still encounter blocks from Imperva. Here are the most common issues and how to resolve them:

403 Forbidden Errors

The most common Imperva block manifests as a 403 HTTP status code. This indicates your request was received but rejected by the WAF. Common causes include:

- Datacenter IP detected: Switch to residential or mobile proxies

- Bad TLS fingerprint: Ensure your HTTP client's TLS handshake (JA3/JA4 fingerprint) resembles that of a real web browser

- Missing or incorrect headers: Verify you're sending complete browser-like headers including Sec-CH-UA and Sec-Fetch-* headers

- Rate limiting triggered: Slow down your request rate and add random delays between requests

To debug 403 errors, check the response headers for X-Iinfo which may contain clues about why the request was blocked.

CAPTCHA Challenges

When Imperva's trust score is borderline, it may present a CAPTCHA challenge instead of an outright block:

- JavaScript challenges: Use headless browsers like Playwright or Puppeteer to automatically solve these

- reCAPTCHA/hCaptcha: Consider CAPTCHA solving services or human verification for critical requests

- Persistent CAPTCHAs: This usually indicates your proxy IP is already flagged - rotate to a fresh IP

Pro tip: If you're consistently getting CAPTCHAs, your fingerprint or behavioral patterns are suspicious. Review your scraper's timing, navigation patterns, and browser fingerprint.

Rate Limiting and 429 Errors

Imperva implements sophisticated rate limiting that goes beyond simple request-per-second counting:

- Implement exponential backoff: Start with 1 second delay, double after each 429 response

- Distribute across multiple IPs: Use a proxy pool to spread requests across different IP addresses

- Respect retry-after headers: Some Imperva configurations include Retry-After headers - honor these

- Session-based rate limits: Imperva may track requests per session cookie, so rotate sessions periodically
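The backoff rules above can be sketched in a few lines. This is a minimal sketch under assumptions: `send` is a hypothetical callable that performs one request and returns `(status, headers)`, the 25% random jitter and the 60 second cap are additions not prescribed above, and only the numeric form of `Retry-After` is parsed.

```python
import random
import time


def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0):
    """Seconds to wait before the next try (attempt starts at 0)."""
    if retry_after is not None:
        try:
            return max(0.0, float(retry_after))  # honor the server's value
        except ValueError:
            pass  # the HTTP-date form is not parsed in this sketch
    delay = min(base * 2 ** attempt, cap)  # 1s, 2s, 4s, ... up to the cap
    return delay + random.uniform(0, delay * 0.25)  # jitter avoids a fixed rhythm


def fetch_with_retries(send, max_attempts=5, sleep=time.sleep):
    """Call send() until it returns a status other than 403/429."""
    status = None
    for attempt in range(max_attempts):
        status, headers = send()
        if status not in (403, 429):
            break
        sleep(backoff_delay(attempt, headers.get("Retry-After")))
    return status
```

Passing `sleep` as a parameter keeps the function easy to test and lets a scraper substitute its own scheduler. In practice, a 403 would usually also trigger a proxy or session rotation inside `send` rather than a plain retry.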

Cookie and Session Issues

Imperva uses cookies like incap_ses and visid_incap to track sessions:

- Cookie persistence: Always maintain cookies across requests in the same session

- Session expiry: Imperva sessions can expire - be prepared to establish a new session when you get blocks

- Cookie tampering detection: Never manually modify Imperva cookies as this triggers immediate blocks
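The cookie handling described above can be sketched as a thin wrapper that keeps one client (and its cookie jar) alive across requests and starts a fresh session when a block appears. The class name `RecoveringSession` is hypothetical, and `new_client` is assumed to be any factory returning an object with `get(url)` and `close()`, such as `lambda: httpx.Client(http2=True)`.

```python
class RecoveringSession:
    """Reuse one client so Imperva cookies persist; rebuild it on a 403."""

    def __init__(self, new_client):
        self._new_client = new_client
        self.client = new_client()
        self.resets = 0

    def get(self, url):
        response = self.client.get(url)
        if response.status_code == 403:
            # Drop the old cookie jar entirely instead of editing cookies.
            self.client.close()
            self.client = self._new_client()
            self.resets += 1
            response = self.client.get(url)  # one retry with a clean session
        return response
```

A fresh session from the same flagged IP may be blocked again, so the factory is a natural place to also pick a new proxy.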

Intermittent Blocks

Sometimes requests work initially but fail later:

- Trust score degradation: Your behavioral patterns became suspicious over time - introduce more randomness

- IP reputation changed: Your proxy IP may have been recently flagged - rotate to fresh IPs

- Dynamic rules: Websites can adjust Imperva sensitivity in real-time during high traffic or attacks

The key to troubleshooting Imperva blocks is systematic testing. Change one variable at a time (IP, headers, timing, browser) to identify which factor is causing the block.
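Changing one variable at a time can be organized as a small test plan. In this sketch the configuration keys and values are illustrative labels, not settings of any particular library, and `single_variable_trials` is a hypothetical helper.

```python
# A known-failing baseline and one candidate fix per variable (illustrative labels).
BASELINE = {
    "proxy": "datacenter",
    "http_version": "1.1",
    "headers": "library default",
    "client": "http",
}
ALTERNATIVES = {
    "proxy": "residential",
    "http_version": "2",
    "headers": "browser-like",
    "client": "headless browser",
}


def single_variable_trials(baseline, alternatives):
    """Yield (changed_key, config) pairs that differ from the baseline
    in exactly one setting, so any change in outcome has a single cause."""
    for key, value in alternatives.items():
        yield key, {**baseline, key: value}
```

Running the scraper once per yielded configuration and recording which ones stop the block points to the factor that matters most for a given website.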

Bypass Imperva with ScrapFly

Bypassing Imperva anti-bot, while possible, is very difficult - let Scrapfly do it for you!

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale. Each product is equipped with an automatic bypass for any anti-bot system, and we achieve this by:

- Maintaining a fleet of real, reinforced web browsers with real fingerprint profiles.

- Operating millions of self-healing proxies with the highest possible trust score.

- Constantly evolving and adapting to new anti-bot systems.

- Doing this publicly since 2020 with the best bypass on the market!

It takes Scrapfly several full-time engineers to maintain this system and keep up with Imperva's latest updates, so you don't have to!

For example, to scrape pages protected by Imperva using the ScrapFly SDK, all we need to do is enable the Anti Scraping Protection bypass feature:

```python
from scrapfly import ScrapflyClient, ScrapeConfig

scrapfly = ScrapflyClient(key="YOUR API KEY")
result = scrapfly.scrape(ScrapeConfig(
    url="https://www.glassdoor.com/",
    # enable the Anti Scraping Protection bypass
    asp=True,
    # we can also enable headless browsers to render web apps and javascript powered pages
    render_js=True,
    # and set proxies by country like France
    country="FR",
    # and proxy type like residential:
    proxy_pool=ScrapeConfig.PUBLIC_RESIDENTIAL_POOL,
))
print(result.scrape_result)
```

FAQs

How do I identify if a website is using Imperva protection?

Look for these indicators: "Powered By Incapsula" text in HTML, "Incapsula incident ID" keywords, "_Incapsula_Resource" in HTML, "subject=WAF Block Page" text, "visid_incap" in request headers, "X-Iinfo" response header, "X-CDN" header with Imperva values, or "incap_ses" and "visid_incap" cookies.

What's the most effective way to bypass Imperva's JavaScript fingerprinting?

Use headless browsers (Playwright, Selenium, Puppeteer) to execute JavaScript naturally. Playwright is a popular choice in 2026, especially when paired with community stealth plugins. This provides authentic browser fingerprints that Imperva expects, making detection much harder than trying to spoof fingerprinting data.

Can I bypass Imperva using only HTTP requests without browsers?

It's possible but very difficult in 2026. You'd need to match TLS fingerprints (JA3/JA4), use HTTP/2 or HTTP/3, perfect header ordering, residential proxies, and handle behavioral analysis. Headless browsers are much more reliable for consistent bypassing.

Summary

In this guide, we've taken a look at how to bypass Incapsula (now known as Imperva) when web scraping in 2026.

To start, we've taken a look at the detection methods Imperva is using and how we can address each one of them in our scraper code. We saw that using residential proxies and patching common fingerprinting techniques can vastly improve trust scores when it comes to Imperva's bot blocking.

We also covered the latest updates to Imperva's detection systems, including improved machine learning models for behavioral analysis and expanded IP reputation databases.

Finally, we've taken a look at some frequently asked questions, like how to identify Imperva and whether it can be bypassed using HTTP requests alone.

For an easier way to handle web scraper blocking and power up your web scrapers, check out ScrapFly for free!