
Web scraping is a powerful data retrieval method that can extract vast amounts of text data from reviews, tweets and blogs. In turn, this data can be used for sentiment analysis to classify text and derive useful social insights.

In this article, we'll explore using web scraping for sentiment analysis. We'll start by defining sentiment analysis and then walk through a practical example of performing sentiment analysis on web-scraped data with community Python libraries.

Sentiment analysis is the process of using NLP techniques to determine emotions in text. It analyzes text data to determine the opinions and emotional tone on a given subject, news story or product.

Sentiment analysis commonly uses machine learning models, such as neural networks, trained on labeled data. These models are then used to classify live data as positive, neutral or negative based on a calculated score.
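To make the score-to-label step concrete, here is a toy sketch. Note that it swaps the trained model for a much simpler lexicon-based scorer: the LEXICON word list, the averaging rule and the helper names are illustrative assumptions, not how any particular library works. The 0.2 cut-off mirrors the one used later in this guide. It only shows how a numeric score becomes a positive, neutral or negative label.

```python
import re

# Hypothetical, hand-made lexicon: word -> sentiment score in [-1, 1].
# Real analyzers use far larger lexicons or learn weights from labeled data.
LEXICON = {
    "awesome": 1.0,
    "great": 0.8,
    "recommended": 0.5,
    "issues": -0.4,
    "disappointing": -0.8,
}


def polarity_score(text: str) -> float:
    """Average the scores of known words in the text (0.0 if none match)."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = [LEXICON[word] for word in words if word in LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


def classify(score: float, threshold: float = 0.2) -> str:
    """Map a score in [-1, 1] to a positive/neutral/negative label."""
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"


for review in [
    "We've been using this utility for years - awesome service!",
    "I'm not satisfied with the app's performance. Disappointing.",
    "The interface could be a little more user-friendly.",
]:
    score = polarity_score(review)
    print(f"{score:+.2f} {classify(score):8} {review}")
```

The toy ignores negation ("not satisfied"), word order and intensifiers, which is why practical tools rely on richer lexicons with extra rules or on trained models.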

Sentiment analysis needs large amounts of data to support its conclusions without biases or skews. That's why it's commonly paired with web scraping, either to gather training data or to collect the text being researched.

User-generated text data is everywhere on the internet, from forums, blogs and social media to review websites. Sentiment analysis can be applied to this vast realm of data for various use cases.

Businesses can use sentiment analysis on their customers' reviews, messages and social media posts to evaluate how their products and services perform and how users perceive them. This enables data-driven decision-making and better business evaluation.

Furthermore, manually researching a specific topic or trend can be tedious due to the overwhelming amount of available data. However, sentiment analysis can analyze thousands of data points in no time.

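In this guide, we'll apply sentiment analysis to data extracted through web scraping. For this, we'll use a few Python libraries:

- httpx: an HTTP client for sending the scraping requests.
- parsel: an HTML parser that supports XPath and CSS selectors.
- TextBlob: a text-processing library with built-in sentiment analysis.
- NLTK: the natural language toolkit that TextBlob relies on.
- pandas: for loading the results into data frames.
- Matplotlib and Seaborn: for visualizing the results.

The above packages can be installed using pip:

```shell
pip install httpx parsel textblob nltk pandas matplotlib seaborn
```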

Sentiment analysis requires models built from labeled data. However, training such models is complicated and requires attention to many details. Therefore, we'll use a ready-to-use sentiment analysis model instead.

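In this project on web scraping with sentiment analysis, we'll follow these steps:

- Scrape review text data from web-scraping.dev.
- Perform sentiment analysis on the scraped reviews using TextBlob.

Let's start with scraping the text data!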

Sentiment analysis requires text to determine the underlying emotions. Therefore, we'll scrape review data from the testimonials page on web-scraping.dev.

The reviews on this page are loaded from a hidden API, so we'll use the hidden API web scraping approach to scrape them.


We'll request the review pages using httpx and then parse the actual review text from the HTML using parsel:

```python
import httpx
from parsel import Selector


def scrape_reviews(url: str):
    """scrape review data from web-scraping.dev"""

    def parse_reviews(response):
        """parse review data from the HTML"""
        selector = Selector(response.text)
        data = []
        # each review sits in its own <div class="testimonial"> box
        for review_box in selector.xpath("//div[@class='testimonial']"):
            data.append(review_box.xpath(".//p/text()").get())
        return data

    reviews = []
    # crawl over the total review pages (6 pages)
    for page_number in range(1, 7):
        # send the testimonials page as the referer, like a browser would
        response = httpx.get(url + f"?page={page_number}", headers={"referer": "https://web-scraping.dev/testimonials"})
        data = parse_reviews(response)
        # add this page's reviews to the full list
        reviews.extend(data)
    print(f"scraped {len(reviews)} reviews")
    return reviews


reviews = scrape_reviews(
    url="https://web-scraping.dev/api/testimonials"
)
print(reviews)
```

In the above code, we define two functions. Let's break them down:

- scrape_reviews(), which requests the review pages to get the data as HTML.
- parse_reviews(), which parses the HTML for the review text by iterating over the review boxes on the page.

The result is 60 reviews with different emotions:

```
[
    "We've been using this utility for years - awesome service!",
    "This Python app simplified my workflow significantly. Highly recommended.",
    "Had a few issues at first, but their support team is top-notch!",
    "A fantastic tool - it has everything you need and more.",
    "The interface could be a little more user-friendly.",
    ...
]
```
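Because parse_reviews() boils down to XPath selection, its logic can be checked offline without sending requests. The sketch below is a stand-in rather than the scraper itself: it swaps parsel for Python's built-in xml.etree.ElementTree, which understands a subset of XPath, and runs it on a hypothetical, simplified HTML fragment (SAMPLE_HTML and parse_reviews_offline() are made up for illustration). ElementTree only accepts well-formed markup, so real pages should still be parsed with parsel.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified fragment shaped like the testimonials markup
# the scraper targets: a <div class="testimonial"> containing a <p>.
SAMPLE_HTML = """
<div>
  <div class="testimonial"><p>We've been using this utility for years - awesome service!</p></div>
  <div class="testimonial"><p>The interface could be a little more user-friendly.</p></div>
  <div class="footer"><p>Not a review</p></div>
</div>
"""


def parse_reviews_offline(html: str) -> list:
    """Return the <p> text of each div whose class is exactly 'testimonial'."""
    root = ET.fromstring(html.strip())
    # ElementTree supports [@attr='value'] predicates in its XPath subset
    return [
        box.findtext(".//p")
        for box in root.iterfind(".//div[@class='testimonial']")
    ]


print(parse_reviews_offline(SAMPLE_HTML))
```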

Next, let's perform sentiment analysis on the scraped data using TextBlob!

TextBlob is a Python library that provides a simple API for text processing, including sentiment analysis. It relies on NLTK for underlying tasks such as tokenization and part-of-speech tagging. Let's download the required NLTK data and explore TextBlob's features:

```python
import nltk
from textblob import TextBlob

# NLTK data used by TextBlob's tokenizers and part-of-speech tagger
# (newer NLTK releases may ask for 'punkt_tab' and 'averaged_perceptron_tagger_eng' instead)
nltk.download('punkt')
nltk.download('averaged_perceptron_tagger')
nltk.download('vader_lexicon')

# "utilety" is misspelled on purpose to demonstrate spelling correction
text = "We've been using this utilety for years - awesome service!"
blob = TextBlob(text)

# get the text blob tags (part-of-speech tags)
print(blob.tags)
# [('We', 'PRP'), ("'ve", 'VBP'), ('been', 'VBN'), ('using', 'VBG'), ('this', 'DT'), ('utilety', 'NN'), ('for', 'IN'), ('years', 'NNS'), ('awesome', 'JJ'), ('service', 'NN')]

# get the text blob words
print(blob.words)
# ['We', "'ve", 'been', 'using', 'this', 'utilety', 'for', 'years', 'awesome', 'service']

# get the text blob sentences
print(blob.sentences)
# [Sentence("We've been using this utilety for years - awesome service!")]

# correct the blob text (note: it fixes "utilety" but also changes "We" to "He")
print(blob.correct())
# He've been using this utility for years - awesome service!

# get the blob sentiment analysis
print(blob.sentiment)
# Sentiment(polarity=1.0, subjectivity=1.0)
```

Here, we start by downloading a few NLTK data packages:

- punkt: a tokenizer that divides text into a list of sentences.
- averaged_perceptron_tagger: a pre-trained part-of-speech model that assigns words to grammatical categories.
- vader_lexicon: the lexicon of VADER, a rule-based sentiment analysis model for scoring the sentiment of social media text.

Then, we convert the text into a TextBlob object and use a few of its text-processing utilities. However, we are only interested in the sentiment analysis, for which TextBlob provides two values:

- polarity: a score between -1 and 1. Higher values indicate positive text, while lower values indicate negative text.
- subjectivity: a score between 0 and 1. Higher values indicate opinionated text, while lower values indicate factual information.

Let's apply sentiment analysis to the data we got earlier from web scraping using TextBlob:

```python
import json
import httpx
from textblob import TextBlob
from parsel import Selector


def scrape_reviews(url: str):
    """scrape review data from web-scraping.dev"""
    # the rest of the function logic


def sentiment_analysis(review_data):
    """perform sentiment analysis on text"""
    # make sure to download the NLTK data in the previous snippet
    data = []
    for review in review_data:
        blob = TextBlob(review)
        data.append({
            "text": review,
            "polarity_value": round(blob.polarity, 3),
            # polarity ranges from -1 to 1; values within (-0.2, 0.2) count as neutral
            "polarity": "Positive" if blob.polarity >= 0.2 else ("Negative" if blob.polarity <= -0.2 else "Neutral"),
            "subjectivity_value": round(blob.subjectivity, 3),
            # subjectivity ranges from 0 (factual) to 1 (opinionated)
            "subjectivity": "Opinionated" if blob.subjectivity >= 0.5 else "Factual"
        })
    return data


# scrape the review data
reviews = scrape_reviews(url="https://web-scraping.dev/api/testimonials")

# perform the sentiment analysis
sentiment_data = sentiment_analysis(reviews)

# save the results to a JSON file
with open("sentiment_data.json", "w", encoding="utf-8") as file:
    json.dump(sentiment_data, file, indent=2, ensure_ascii=False)
```

Here, we start by scraping the review data like we did earlier. Then, we use the sentiment_analysis function to perform sentiment analysis on the scraped reviews. It iterates over each review, converts it into a TextBlob and saves its raw polarity and subjectivity values along with human-readable labels. Finally, we save the sentiment analysis results into a JSON file.

Here is a sample output of the result we got:

```json
[
  {
    "text": "We've been using this utility for years - awesome service!",
    "polarity_value": 1.0,
    "polarity": "Positive",
    "subjectivity_value": 1.0,
    "subjectivity": "Opinionated"
  },
  {
    "text": "This Python app simplified my workflow significantly. Highly recommended.",
    "polarity_value": 0.16,
    "polarity": "Neutral",
    "subjectivity_value": 0.54,
    "subjectivity": "Opinionated"
  },
  {
    "text": "Had a few issues at first, but their support team is top-notch!",
    "polarity_value": 0.35,
    "polarity": "Positive",
    "subjectivity_value": 0.478,
    "subjectivity": "Factual"
  },
  ...
]
```
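The Positive/Neutral/Negative labels come from fixed ±0.2 cut-offs applied to the polarity score, so they are a design choice of this guide rather than something TextBlob outputs. As a minimal sketch, the rule can be pulled into a hypothetical polarity_label() helper with an adjustable threshold and applied to the three polarity values from the sample output:

```python
def polarity_label(polarity: float, threshold: float = 0.2) -> str:
    """Label a TextBlob polarity; the default matches the 0.2 cut-off used above."""
    if polarity >= threshold:
        return "Positive"
    if polarity <= -threshold:
        return "Negative"
    return "Neutral"


# polarity values taken from the sample output
sample_polarities = [1.0, 0.16, 0.35]

for threshold in (0.2, 0.1):
    labels = [polarity_label(p, threshold) for p in sample_polarities]
    print(threshold, labels)
```

With the 0.2 cut-off, the second review (0.16) lands in Neutral; lowering the threshold to 0.1 labels all three as Positive. Tuning the cut-off changes the label counts, but not the underlying scores.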

TextBlob has successfully analyzed the text for its sentiment. That's not bad for a generic model without any explicit configuration! However, it can be less accurate for some text: "Highly recommended" is clearly praise, yet that review only scored 0.16 and was labeled Neutral. Let's visualize the results for clearer insights!

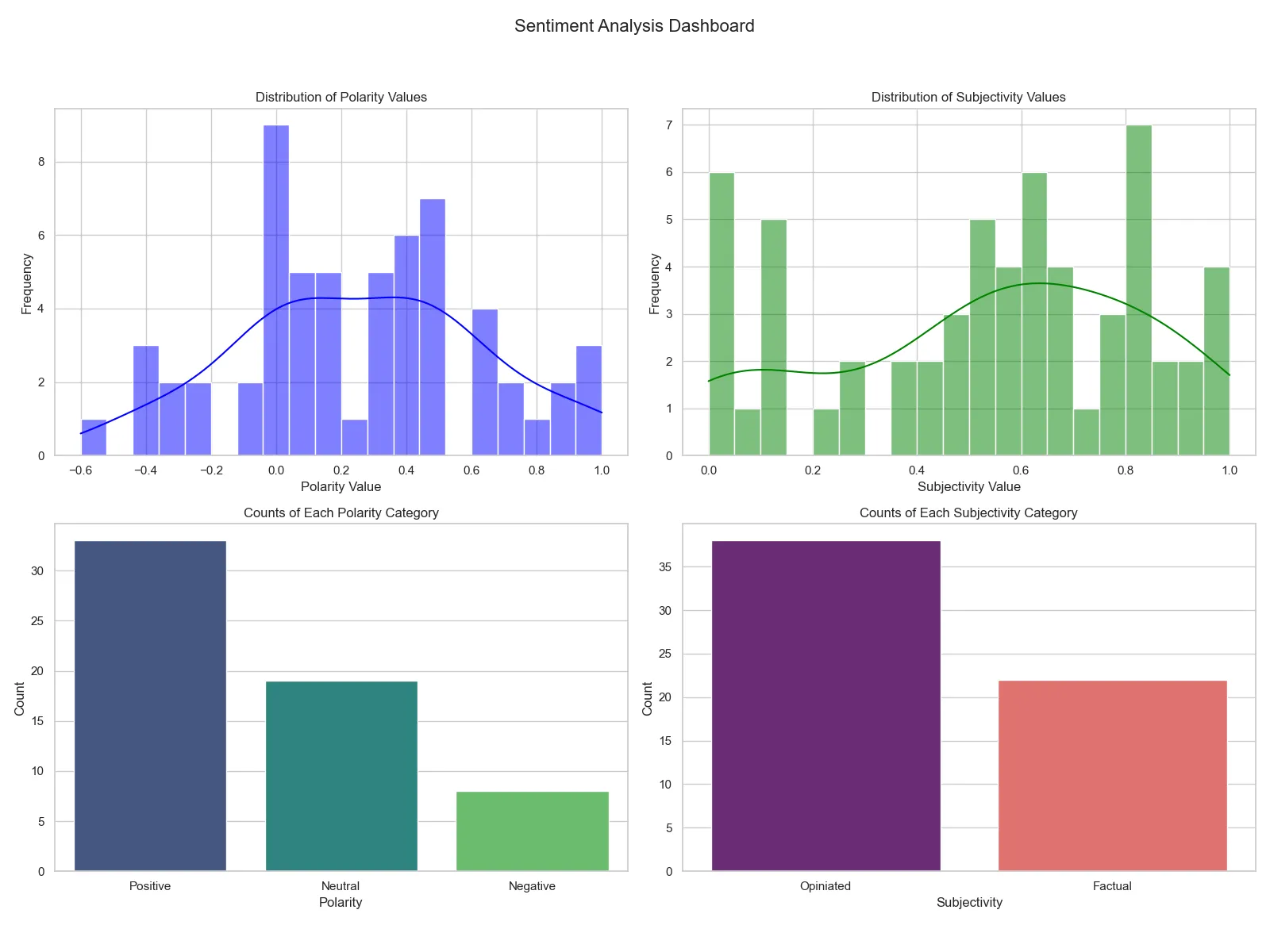

Visualizing sentiment analysis results is common practice, since the analysis is usually run on large amounts of data that are hard to interpret record by record.

We'll convert the JSON data into a Pandas data frame to calculate statistics about each text classification. Then, we'll use Matplotlib and Seaborn to visualize the statistics:

```python
import json
import httpx
import matplotlib.pyplot as plt
import seaborn as sns
import pandas as pd
from textblob import TextBlob
from parsel import Selector


def scrape_reviews(url: str):
    """scrape review data from web-scraping.dev"""
    # the rest of the function logic from the previous snippet


def sentiment_analysis(review_data):
    """perform sentiment analysis on text"""
    # make sure to download the NLTK data in the previous snippet
    # the rest of the function logic from the previous snippet


def create_insights(data):
    """create sentiment analysis insights"""
    # create a pandas dataframe
    df = pd.DataFrame(data)
    # set a visualization theme
    sns.set_theme(style="whitegrid")
    # set the figure layout: a 2x2 grid of charts
    fig, axes = plt.subplots(2, 2, figsize=(16, 12))
    fig.suptitle('Sentiment Analysis Dashboard', fontsize=16)

    # 1. distribution of polarity values
    sns.histplot(df['polarity_value'], kde=True, ax=axes[0, 0], bins=20, color='blue')
    axes[0, 0].set_title('Distribution of Polarity Values')
    axes[0, 0].set_xlabel('Polarity Value')
    axes[0, 0].set_ylabel('Frequency')

    # 2. distribution of subjectivity values
    sns.histplot(df['subjectivity_value'], kde=True, ax=axes[0, 1], bins=20, color='green')
    axes[0, 1].set_title('Distribution of Subjectivity Values')
    axes[0, 1].set_xlabel('Subjectivity Value')
    axes[0, 1].set_ylabel('Frequency')

    # 3. counts of each polarity category
    sns.countplot(x='polarity', order=['Positive', 'Neutral', 'Negative'], data=df, ax=axes[1, 0], palette='viridis')
    axes[1, 0].set_title('Counts of Each Polarity Category')
    axes[1, 0].set_xlabel('Polarity')
    axes[1, 0].set_ylabel('Count')

    # 4. counts of each subjectivity category
    sns.countplot(x='subjectivity', data=df, ax=axes[1, 1], palette='magma')
    axes[1, 1].set_title('Counts of Each Subjectivity Category')
    axes[1, 1].set_xlabel('Subjectivity')
    axes[1, 1].set_ylabel('Count')

    # save the fig to a file
    plt.tight_layout(rect=[0, 0.03, 1, 0.95])
    plt.savefig("./sentiment_analysis_insights.png")


# scrape the review data
reviews = scrape_reviews(url="https://web-scraping.dev/api/testimonials")

# perform the sentiment analysis
sentiment_data = sentiment_analysis(reviews)

# create the visualization charts
create_insights(sentiment_data)
```

Here, we define a new create_insights function. It converts the data into a pandas DataFrame, which Seaborn then plots. Next, we create four charts with Seaborn and save them together as a single dashboard figure.
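The bottom two panels are plain category counts, so they can also be double-checked numerically. The sketch below uses only the standard library and assumes records shaped like the sentiment_analysis() output; the summarize() helper is hypothetical, and the sample records are the three reviews shown earlier, trimmed to the fields it reads. With pandas, df["polarity"].value_counts() gives the same polarity counts.

```python
from collections import Counter
from statistics import mean


def summarize(records: list) -> dict:
    """Count polarity labels and average the raw scores of sentiment records."""
    return {
        "polarity_counts": Counter(r["polarity"] for r in records),
        "mean_polarity": round(mean(r["polarity_value"] for r in records), 3),
        "mean_subjectivity": round(mean(r["subjectivity_value"] for r in records), 3),
    }


# the three sample records shown earlier, trimmed to the fields summarize() reads
sample_records = [
    {"polarity": "Positive", "polarity_value": 1.0, "subjectivity_value": 1.0},
    {"polarity": "Neutral", "polarity_value": 0.16, "subjectivity_value": 0.54},
    {"polarity": "Positive", "polarity_value": 0.35, "subjectivity_value": 0.478},
]

print(summarize(sample_records))

# for the full results, load the JSON file written earlier instead:
# import json
# with open("sentiment_data.json", encoding="utf-8") as file:
#     print(summarize(json.load(file)))
```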

Here is the figure created:

From the above insights, the reviews tend to be positive and mostly opinionated. However, the insights could be biased, since TextBlob is a simple, general-purpose sentiment analysis model. Let's have a look at more advanced sentiment analysis techniques.

At its core, every sentiment analysis model depends on the data it was trained on. Therefore, a model trained on one type of text and applied to a different one can produce biased or skewed results.

That being said, building and training machine learning models from scratch can be complicated and resource-intensive, so it's more convenient to use open-source models. One popular source is Hugging Face, which hosts machine learning models for many tasks, including sentiment analysis.

Sentiment analysis with Hugging Face models can be performed in Python through the transformers library. Here is an example of loading a generic sentiment analysis model into Python using transformers:

```python
# pip install transformers torch
# (transformers also needs a deep learning backend such as PyTorch)
from transformers import pipeline

# load a model into the pipeline; with no model name given, transformers
# falls back to a default English sentiment model and logs a warning
sentiment_pipeline = pipeline("sentiment-analysis")

data = [
    "We've been using this utility for years - awesome service!",
    "I'm not satisfied with the app's performance. Disappointing.",
]

for text in data:
    print(sentiment_pipeline(text))

# [{'label': 'POSITIVE', 'score': 0.9990767240524292}]
# [{'label': 'NEGATIVE', 'score': 0.9998065829277039}]
```

For other sentiment analysis models, browse the Hugging Face model hub.
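The pipeline returns a label with a confidence score rather than TextBlob's signed polarity. To place both on a similar -1 to 1 scale, one common heuristic (an assumption here, not a transformers feature) is to use the confidence as the magnitude and the label as the sign. The to_signed_score() helper below is hypothetical and assumes the POSITIVE/NEGATIVE labels shown in the output above; models with other labels, such as a neutral class, need their own mapping.

```python
def to_signed_score(result: dict) -> float:
    """Turn a {'label': ..., 'score': ...} prediction into a value in [-1, 1].

    Heuristic assumption: confidence becomes the magnitude, the label the sign.
    """
    label = result["label"].upper()
    if label == "POSITIVE":
        return result["score"]
    if label == "NEGATIVE":
        return -result["score"]
    raise ValueError(f"unexpected label: {result['label']}")


# predictions copied from the pipeline output above
predictions = [
    {"label": "POSITIVE", "score": 0.9990767240524292},
    {"label": "NEGATIVE", "score": 0.9998065829277039},
]
print([round(to_signed_score(p), 3) for p in predictions])
```

Keep in mind that a confidence of 0.999 means the model is sure of the label, not that the text is extremely positive, so these values are only loosely comparable to TextBlob's polarity.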

Sentiment analysis requires a lot of text data for accurate results, and web scraping (for example, scraping Twitter) is an efficient way to retrieve it. However, there is a catch: scaling scrapers can be challenging, and this is where Scrapfly can lend a hand!

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

Here is how we can scale web scraping for sentiment analysis. All we have to do is replace the HTTP client with the ScrapFly client and enable the anti-scraping protection using the asp parameter:

```python
# standard web scraping code
import httpx

response = httpx.get("page URL")

# in ScrapFly, it becomes this 👇
from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse

scrapfly = ScrapflyClient(key="Your ScrapFly API key")

api_response: ScrapeApiResponse = scrapfly.scrape(
    ScrapeConfig(
        # target website URL
        url="page URL",
        # bypass web scraping blocking
        asp=True,
        # select a proxy pool (residential or datacenter)
        proxy_pool="public_residential_pool",
        # set the proxy location to a specific country
        country="US",
        # enable JavaScript rendering if needed, similar to headless browsers
        render_js=True,
    )
)

# get the HTML from the response
html = api_response.scrape_result['content']

# use the built-in Parsel selector
selector = api_response.selector
```

To wrap up this guide on web scraping and sentiment analysis, let's have a look at a few frequently asked questions.

Web scraping can be utilized for sentiment analysis to gather the text data required for the analysis process. This web-scraped data can then be used as training data for the sentiment analysis models or as an evaluation dataset for performing the analysis.

Twitter data can be scraped based on specific domains and keywords related to the research topic and then used with the sentiment analysis model. We have explained how to scrape Twitter in a previous guide.

Sentiment analysis can be performed using different methods. The easiest is the TextBlob Python library together with the NLTK toolkit for NLP. A more advanced approach is using Hugging Face sentiment analysis models.

In this article, we explained sentiment analysis, an NLP technique used to determine the underlying emotions of a specific text.

We started by scraping review text data and then performed sentiment analysis on it using TextBlob, before exploring Hugging Face models. We also used Matplotlib and Seaborn to visualize the sentiment analysis results as dashboards for clear data insights.