How to Scrape Instagram in 2025

Learn how to scrape Instagram profile, post, and comment data using Python directly in JSON.

In this Python web scraping tutorial we'll explain how to scrape Instagram, one of the most popular social media websites out there. We'll look at how to create an Instagram scraper to extract data from Instagram profiles and post pages.

We'll focus on using the unofficial Instagram API for scraping Instagram data. Furthermore, we'll cover how to deal with Instagram scraping blocking and how to extract data without logging in. Let's dive in!

This tutorial covers popular web scraping techniques for educational purposes. Interacting with public servers requires diligence and respect, and here's a good summary of what not to do:

Scrapfly does not offer legal advice, but these are good general rules to follow in web scraping; for more, you should consult a lawyer.

The amount of public data on Instagram is significant, allowing for various insights. Businesses can scrape Instagram data for lead generation, where popular Instagram profiles with similar interests can be reached to attract new customers.

Moreover, scraped Instagram data is a viable resource for performing sentiment analysis research. This data is found in posts and comments, which can be used to gather public opinions on specific trends and news.

For further details on scraping Instagram data use cases, refer to our dedicated guide.

To create an Instagram scraping tool, we'll be using Python with a few community packages: httpx for sending HTTP requests and jmespath for parsing JSON datasets.

For Scrapfly users, we'll also be including a version of each code snippet using the ScrapFly Python SDK.

Many Instagram endpoints require login, though not all. Our Instagram scraper will only cover endpoints that don't require login and are publicly accessible to everyone.

Scraping Instagram while logged in can have many unintended consequences, from your account being blocked to Instagram taking legal action for explicitly breaking their Terms of Service. As noted in this tutorial, login is often unnecessary, so let's look at how to scrape Instagram without having to log in and risk suspension.

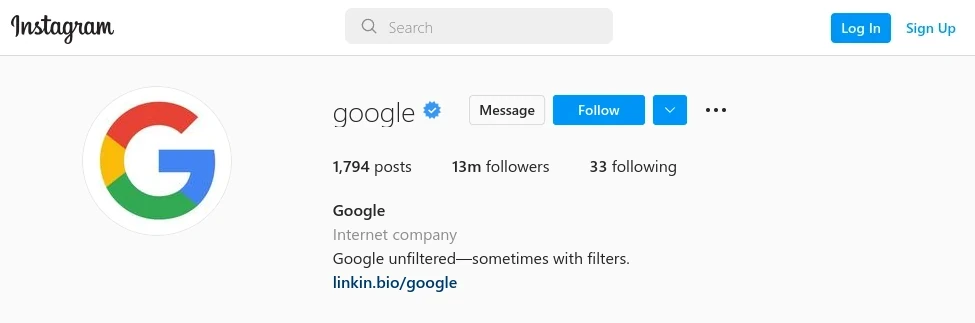

Let's start with scraping Instagram user profiles. For this, we'll use Instagram's backend API endpoint, which gets triggered when browsing the profile URL. For example, here's Google's Instagram profile page:

This endpoint is called on page load and returns a JSON dataset with the profile's data. We can use this endpoint to scrape Instagram profile data without having to login to Instagram:

import json

import httpx

client = httpx.Client(
    headers={
        # this is the internal ID of an Instagram backend app. It doesn't change often.
        "x-ig-app-id": "936619743392459",
        # use browser-like headers
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.94 Safari/537.36",
        "Accept-Language": "en-US,en;q=0.9,ru;q=0.8",
        "Accept-Encoding": "gzip, deflate, br",
        "Accept": "*/*",
    }
)


def scrape_user(username: str):
    """Scrape Instagram user's data"""
    result = client.get(
        f"https://i.instagram.com/api/v1/users/web_profile_info/?username={username}",
    )
    data = json.loads(result.content)
    return data["data"]["user"]


print(scrape_user("google"))

import asyncio
import json
from typing import Dict

from scrapfly import ScrapflyClient, ScrapeConfig

SCRAPFLY = ScrapflyClient(key="YOUR SCRAPFLY KEY")

BASE_CONFIG = {
    # Instagram.com requires Anti Scraping Protection bypass feature:
    # for more: https://scrapfly.io/docs/scrape-api/anti-scraping-protection
    "asp": True,
    "country": "CA",
}

INSTAGRAM_APP_ID = "936619743392459"  # this is the public app id for instagram.com


async def scrape_user(username: str) -> Dict:
    """Scrape Instagram user's data"""
    result = await SCRAPFLY.async_scrape(
        ScrapeConfig(
            url=f"https://i.instagram.com/api/v1/users/web_profile_info/?username={username}",
            headers={"x-ig-app-id": INSTAGRAM_APP_ID},
            **BASE_CONFIG,
        )
    )
    data = json.loads(result.content)
    # parse_user() is the JMESPath parser defined later in this tutorial
    return parse_user(data["data"]["user"])


print(asyncio.run(scrape_user("google")))

The above snippet is able to scrape Instagram profiles. It starts by initializing an httpx client with basic headers to reduce the chances of getting blocked. Then, it uses this client to request the Instagram API for profiles and get the scraped data as JSON.

The above code can extract Instagram data such as bio description, follower counts, profile pictures, etc:

{

"biography": "Google unfiltered—sometimes with filters.",

"external_url": "https://linkin.bio/google",

"external_url_linkshimmed": "https://l.instagram.com/?u=https%3A%2F%2Flinkin.bio%2Fgoogle&e=ATOaH1Vrx_TkkMUhpCCh1_PM-C1k5t35gAtJ0eBjTPE84RItj-cCFdqRoRHwlbiCSrB5G_v6MgjePl1SQN4vTw&s=1",

"edge_followed_by": {

"count": 13015078

},

"fbid": "17841401778116675",

"edge_follow": {

"count": 33

},

"full_name": "Google",

"highlight_reel_count": 5,

"id": "1067259270",

"is_business_account": true,

"is_professional_account": true,

"is_supervision_enabled": false,

"is_guardian_of_viewer": false,

"is_supervised_by_viewer": false,

"is_embeds_disabled": false,

"is_joined_recently": false,

"guardian_id": null,

"is_verified": true,

"profile_pic_url": "https://instagram.furt1-1.fna.fbcdn.net/v/t51.2885-19/126151620_3420222801423283_6498777152086077438_n.jpg?stp=dst-jpg_s150x150&_nc_ht=instagram.furt1-1.fna.fbcdn.net&_nc_cat=1&_nc_ohc=bmDCZ2Q8wTkAX-Ilbqq&edm=ABfd0MgBAAAA&ccb=7-4&oh=00_AT9pRKzLtnysPjhclN6TprCd9FBWo2ABbn9cRICPhbQZcA&oe=62882D44&_nc_sid=7bff83",

"username": "google",

...

}

Great! Our Instagram data scraper can extract profile data - it even includes the details of the first 12 posts including photos and videos!
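As a rough illustration of that last point, the sketch below pulls the shortcodes of the embedded posts out of a profile dataset. The `sample_profile` dict is a made-up, heavily trimmed stand-in for the real response, and `first_post_shortcodes` is a hypothetical helper name; only the `edge_owner_to_timeline_media` and `shortcode` field names are taken from the dataset used in this tutorial.

```python
from typing import Dict, List


def first_post_shortcodes(user: Dict) -> List[str]:
    """Collect the shortcodes of the posts embedded in a profile dataset."""
    timeline = user.get("edge_owner_to_timeline_media") or {}
    return [
        edge["node"]["shortcode"]
        for edge in timeline.get("edges", [])
        if "shortcode" in edge.get("node", {})
    ]


# made-up sample that only mimics the shape of the profile dataset
sample_profile = {
    "username": "google",
    "edge_owner_to_timeline_media": {
        "count": 2,
        "edges": [
            {"node": {"shortcode": "AAA111", "is_video": False}},
            {"node": {"shortcode": "BBB222", "is_video": True}},
        ],
    },
}

print(first_post_shortcodes(sample_profile))
```

Each shortcode collected this way can be passed to a post scraper to fetch the full post details.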

The profile dataset we scraped is quite comprehensive, and it contains many useless details. To reduce it to the most important bits, we can use JMESPath:

from typing import Dict

import jmespath
from loguru import logger as log


def parse_user(data: Dict) -> Dict:
    """Parse instagram user's hidden web dataset for user's data"""
    log.debug("parsing user data {}", data['username'])
    result = jmespath.search(
        """{
        name: full_name,
        username: username,
        id: id,
        category: category_name,
        business_category: business_category_name,
        phone: business_phone_number,
        email: business_email,
        bio: biography,
        bio_links: bio_links[].url,
        homepage: external_url,
        followers: edge_followed_by.count,
        follows: edge_follow.count,
        facebook_id: fbid,
        is_private: is_private,
        is_verified: is_verified,
        profile_image: profile_pic_url_hd,
        video_count: edge_felix_video_timeline.count,
        videos: edge_felix_video_timeline.edges[].node.{
            id: id,
            title: title,
            shortcode: shortcode,
            thumb: display_url,
            url: video_url,
            views: video_view_count,
            tagged: edge_media_to_tagged_user.edges[].node.user.username,
            captions: edge_media_to_caption.edges[].node.text,
            comments_count: edge_media_to_comment.count,
            comments_disabled: comments_disabled,
            taken_at: taken_at_timestamp,
            likes: edge_liked_by.count,
            location: location.name,
            duration: video_duration
        },
        image_count: edge_owner_to_timeline_media.count,
        images: edge_owner_to_timeline_media.edges[].node.{
            id: id,
            title: title,
            shortcode: shortcode,
            src: display_url,
            url: video_url,
            views: video_view_count,
            tagged: edge_media_to_tagged_user.edges[].node.user.username,
            captions: edge_media_to_caption.edges[].node.text,
            comments_count: edge_media_to_comment.count,
            comments_disabled: comments_disabled,
            taken_at: taken_at_timestamp,
            likes: edge_liked_by.count,
            location: location.name,
            accessibility_caption: accessibility_caption,
            duration: video_duration
        },
        saved_count: edge_saved_media.count,
        collections_count: edge_saved_media.count,
        related_profiles: edge_related_profiles.edges[].node.username
    }""",
        data,
    )
    return result

The above Instagram parsing logic will take in the full dataset and reduce it to a flatter structure that contains only the important fields. We use JMESPath to reshape the raw scraped Instagram data into refined, structured data.
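To make the reshaping concrete without installing anything, here is a small sketch that performs a similar reduction in plain Python rather than JMESPath. The `dig` and `flatten_user` helpers are illustrative assumptions, not part of the scraper, and `raw_user` is a trimmed sample built from the profile values shown earlier.

```python
from typing import Any, Dict


def dig(data: Any, *keys: str, default: Any = None) -> Any:
    """Follow a chain of dict keys, returning `default` when any key is missing."""
    for key in keys:
        if not isinstance(data, dict) or key not in data:
            return default
        data = data[key]
    return data


def flatten_user(raw: Dict) -> Dict:
    """Reduce a nested profile dataset to a handful of flat fields."""
    return {
        "name": dig(raw, "full_name"),
        "username": dig(raw, "username"),
        "followers": dig(raw, "edge_followed_by", "count"),
        "follows": dig(raw, "edge_follow", "count"),
        "is_verified": dig(raw, "is_verified"),
        "category": dig(raw, "category_name"),  # missing in the sample, stays None
    }


# trimmed sample of the profile dataset
raw_user = {
    "full_name": "Google",
    "username": "google",
    "edge_followed_by": {"count": 13015078},
    "edge_follow": {"count": 33},
    "is_verified": True,
    "fbid": "17841401778116675",
}

print(flatten_user(raw_user))
```

Like a JMESPath expression, this approach yields `None` for missing fields instead of raising an error, which matters because not every profile exposes every field.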

For further details on JMESPath, refer to our dedicated guide.


Let's extend our Instagram scraper to collect data from post pages. For this, we'll use the Instagram post endpoint.

Instagram uses GraphQL to generate post views dynamically using a backend query. This endpoint returns the post data, including comments, likes, and other details. Hence, we'll use this GraphQL endpoint to scrape Instagram post data.

Below is the GraphQL endpoint for retrieving post data:

https://www.instagram.com/graphql/query

A GraphQL request needs a body that describes the query. For scraping post pages, only the below values are required:

import json
from urllib.parse import quote

INSTAGRAM_DOCUMENT_ID = "8845758582119845"  # constant id for post documents on instagram.com
shortcode = "CJ9KxZ2l8jT"  # the post id

variables = {
    'shortcode': shortcode, 'fetch_tagged_user_count': None,
    'hoisted_comment_id': None, 'hoisted_reply_id': None
}
# compact JSON, URL-encoded for the form body
variables = quote(json.dumps(variables, separators=(',', ':')))
body = f"variables={variables}&doc_id={INSTAGRAM_DOCUMENT_ID}"
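To check what such a body actually contains, the following sketch builds it in a helper and then decodes it again with the standard library. `build_post_body` is a hypothetical helper name introduced here for illustration; the variables and document ID are the ones listed above.

```python
import json
from urllib.parse import parse_qs, quote

INSTAGRAM_DOCUMENT_ID = "8845758582119845"


def build_post_body(shortcode: str, doc_id: str = INSTAGRAM_DOCUMENT_ID) -> str:
    """Build the URL-encoded form body for a post query."""
    variables = {
        "shortcode": shortcode,
        "fetch_tagged_user_count": None,
        "hoisted_comment_id": None,
        "hoisted_reply_id": None,
    }
    # compact separators keep the JSON free of extra whitespace before encoding
    encoded = quote(json.dumps(variables, separators=(",", ":")))
    return f"variables={encoded}&doc_id={doc_id}"


body = build_post_body("CJ9KxZ2l8jT")

# decode the form body back to verify the round trip
decoded = parse_qs(body)
print(decoded["doc_id"][0])
print(json.loads(decoded["variables"][0]))
```

The round trip shows that the `None` values are serialized as JSON `null` and that the braces and quotes of the JSON document are percent-encoded in the final body.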

From the above details, we can conclude that the post ID (shortcode) is the only variable required to scrape Instagram post pages through POST requests to the GraphQL endpoint. Let's add this functionality to our Instagram scraper:

import json
from typing import Dict
from urllib.parse import quote

import httpx

INSTAGRAM_DOCUMENT_ID = "8845758582119845"  # constant id for post documents on instagram.com


def scrape_post(url_or_shortcode: str) -> Dict:
    """Scrape single Instagram post data"""
    if "http" in url_or_shortcode:
        shortcode = url_or_shortcode.split("/p/")[-1].split("/")[0]
    else:
        shortcode = url_or_shortcode
    print(f"scraping instagram post: {shortcode}")

    variables = quote(json.dumps({
        'shortcode': shortcode, 'fetch_tagged_user_count': None,
        'hoisted_comment_id': None, 'hoisted_reply_id': None
    }, separators=(',', ':')))
    body = f"variables={variables}&doc_id={INSTAGRAM_DOCUMENT_ID}"
    url = "https://www.instagram.com/graphql/query"

    result = httpx.post(
        url=url,
        headers={"content-type": "application/x-www-form-urlencoded"},
        content=body,  # the body is already URL-encoded, so send it as raw content
    )
    data = json.loads(result.content)
    return data["data"]["xdt_shortcode_media"]


# Example usage:
post = scrape_post("https://www.instagram.com/p/CuE2WNQs6vH/")

# save a JSON file
with open("result.json", "w", encoding="utf-8") as f:
    json.dump(post, f, indent=2, ensure_ascii=False)

import json
from typing import Dict
from urllib.parse import quote

from scrapfly import ScrapeConfig, ScrapflyClient

SCRAPFLY = ScrapflyClient("Your ScrapFly API Key")

INSTAGRAM_DOCUMENT_ID = "8845758582119845"  # constant id for post documents on instagram.com

BASE_CONFIG = {
    "asp": True,  # bypass anti-bots
}


def scrape_post(url_or_shortcode: str) -> Dict:
    """Scrape single Instagram post data"""
    if "http" in url_or_shortcode:
        shortcode = url_or_shortcode.split("/p/")[-1].split("/")[0]
    else:
        shortcode = url_or_shortcode
    print(f"scraping instagram post: {shortcode}")

    variables = quote(json.dumps({
        'shortcode': shortcode, 'fetch_tagged_user_count': None,
        'hoisted_comment_id': None, 'hoisted_reply_id': None
    }, separators=(',', ':')))
    body = f"variables={variables}&doc_id={INSTAGRAM_DOCUMENT_ID}"
    url = "https://www.instagram.com/graphql/query"

    result = SCRAPFLY.scrape(
        ScrapeConfig(
            url=url,
            method="POST",
            body=body,
            headers={"content-type": "application/x-www-form-urlencoded"},
            **BASE_CONFIG
        )
    )
    data = json.loads(result.content)
    return data["data"]["xdt_shortcode_media"]


# Example usage:
post = scrape_post("https://www.instagram.com/p/CuE2WNQs6vH/")

# save a JSON file
with open("result.json", "w", encoding="utf-8") as f:
    json.dump(post, f, indent=2, ensure_ascii=False)

The above Instagram scraper code will return the entire post dataset, including various fields, such as post captions, comments, likes, and other information. However, it also includes many flags and unnecessary fields, which aren't very useful.

To reduce the size of the collected data, we'll parse it with JMESPath.

Instagram post data is even more complex than the user profile data. Therefore, we'll use JMESPath to create an even more comprehensive Instagram parser to reduce its size:

from typing import Dict

import jmespath


def parse_post(data: Dict) -> Dict:
    """Reduce the post dataset returned by scrape_post() to the most important fields"""
    print(f"parsing post data {data['shortcode']}")
    result = jmespath.search("""{
        id: id,
        shortcode: shortcode,
        dimensions: dimensions,
        src: display_url,
        src_attached: edge_sidecar_to_children.edges[].node.display_url,
        has_audio: has_audio,
        video_url: video_url,
        views: video_view_count,
        plays: video_play_count,
        likes: edge_media_preview_like.count,
        location: location.name,
        taken_at: taken_at_timestamp,
        related: edge_web_media_to_related_media.edges[].node.shortcode,
        type: product_type,
        video_duration: video_duration,
        music: clips_music_attribution_info,
        is_video: is_video,
        tagged_users: edge_media_to_tagged_user.edges[].node.user.username,
        captions: edge_media_to_caption.edges[].node.text,
        related_profiles: edge_related_profiles.edges[].node.username,
        comments_count: edge_media_to_parent_comment.count,
        comments_disabled: comments_disabled,
        comments_next_page: edge_media_to_parent_comment.page_info.end_cursor,
        comments: edge_media_to_parent_comment.edges[].node.{
            id: id,
            text: text,
            created_at: created_at,
            owner: owner.username,
            owner_verified: owner.is_verified,
            viewer_has_liked: viewer_has_liked,
            likes: edge_liked_by.count
        }
    }""", data)
    return result

Similar to the previous parse_user function, we define the desired fields within our parsing logic to only extract the valuable data from our Instagram scraper. Note that different post types (reels, images, videos, etc.) have different fields available.
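The scrape_post function above only recognizes links containing `/p/`. As a hedged sketch, the helper below also accepts links of other post types; it assumes that reels and videos are shared under `/reel/`, `/reels/`, or `/tv/` paths followed by the same kind of shortcode. `extract_shortcode` is a hypothetical name, not part of the original scraper.

```python
import re

# assumption: post, reel and video links all follow /<kind>/<shortcode>/
SHORTCODE_PATTERN = re.compile(r"/(?:p|reel|reels|tv)/([A-Za-z0-9_-]+)")


def extract_shortcode(url_or_shortcode: str) -> str:
    """Return the post shortcode from a post URL, or the input itself if it is not a URL."""
    match = SHORTCODE_PATTERN.search(url_or_shortcode)
    if match:
        return match.group(1)
    # no known path segment found: treat the input as a bare shortcode
    return url_or_shortcode.strip("/")


print(extract_shortcode("https://www.instagram.com/p/CuE2WNQs6vH/"))
print(extract_shortcode("https://www.instagram.com/reel/CuE2WNQs6vH/?igsh=abc"))
print(extract_shortcode("CuE2WNQs6vH"))
```

Using a regular expression instead of chained `split` calls also ignores query strings and trailing path segments.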

In this section, we'll extract post and comment data from user profiles. For this, we'll utilize another GraphQL endpoint, which requires three mandatory variables: the user's username, page size, and page offset cursor:

{

"after": "CURSOR ID FOR PAGING",

"before": null,

"data": {

"count": 12,

"include_reel_media_seen_timestamp": true,

"include_relationship_info": true,

"latest_besties_reel_media": true,

"latest_reel_media": true

},

"first": 12,

"last": null,

"username": "PROFILE USERNAME"

}

As an example, let's scrape Instagram for all the post data created by the Google profile. Since this endpoint accepts the username directly, all we have to do is compile our GraphQL request.

Requesting the endpoint for user posts will return the JSON data from Instagram. It includes the first 12 posts' data, with the following details:

However, to extract all the posts, our Instagram scraper needs pagination logic to request multiple pages. Here's how to create such logic:

import json
from typing import Optional
from urllib.parse import quote

import httpx

INSTAGRAM_ACCOUNT_DOCUMENT_ID = "9310670392322965"


async def scrape_user_posts(username: str, page_size=12, max_pages: Optional[int] = None):
    """Scrape all posts of an Instagram user given the username."""
    base_url = "https://www.instagram.com/graphql/query"
    variables = {
        "after": None,
        "before": None,
        "data": {
            "count": page_size,
            "include_reel_media_seen_timestamp": True,
            "include_relationship_info": True,
            "latest_besties_reel_media": True,
            "latest_reel_media": True
        },
        "first": page_size,
        "last": None,
        "username": username,
        "__relay_internal__pv__PolarisIsLoggedInrelayprovider": True,
        "__relay_internal__pv__PolarisShareSheetV3relayprovider": True
    }
    prev_cursor = None
    _page_number = 1

    async with httpx.AsyncClient(timeout=httpx.Timeout(20.0)) as session:
        while True:
            print(f"scraping posts page {_page_number}")
            body = f"variables={quote(json.dumps(variables, separators=(',', ':')))}&doc_id={INSTAGRAM_ACCOUNT_DOCUMENT_ID}"
            response = await session.post(
                base_url,
                content=body,  # the body is already URL-encoded, so send it as raw content
                headers={"content-type": "application/x-www-form-urlencoded"}
            )
            response.raise_for_status()
            data = response.json()
            posts = data["data"]["xdt_api__v1__feed__user_timeline_graphql_connection"]
            for post in posts["edges"]:
                yield post["node"]

            page_info = posts["page_info"]
            if not page_info["has_next_page"]:
                break
            # stop if the cursor did not advance, to avoid an endless loop
            if page_info["end_cursor"] == prev_cursor:
                print("found no new posts, breaking")
                break
            prev_cursor = page_info["end_cursor"]
            variables["after"] = page_info["end_cursor"]
            _page_number += 1
            if max_pages and _page_number > max_pages:
                break


# Example run:
if __name__ == "__main__":
    import asyncio

    async def main():
        posts = [post async for post in scrape_user_posts("google", max_pages=3)]
        print(json.dumps(posts, indent=2, ensure_ascii=False))

    asyncio.run(main())

import json
from typing import Optional
from urllib.parse import quote

from loguru import logger as log
from scrapfly import ScrapeConfig, ScrapflyClient

SCRAPFLY = ScrapflyClient("SCRAPFLY_KEY")

BASE_CONFIG = {
    "asp": True,  # bypass web scraping blocking
    "country": "CA",  # proxy country location
}

INSTAGRAM_ACCOUNT_DOCUMENT_ID = "9310670392322965"


async def scrape_user_posts(username: str, page_size=12, max_pages: Optional[int] = None):
    """Scrape all posts of an Instagram user given the username"""
    base_url = "https://www.instagram.com/graphql/query"
    variables = {
        "after": None,
        "before": None,
        "data": {
            "count": page_size,
            "include_reel_media_seen_timestamp": True,
            "include_relationship_info": True,
            "latest_besties_reel_media": True,
            "latest_reel_media": True
        },
        "first": page_size,
        "last": None,
        "username": username,
        "__relay_internal__pv__PolarisIsLoggedInrelayprovider": True,
        "__relay_internal__pv__PolarisShareSheetV3relayprovider": True
    }
    prev_cursor = None
    _page_number = 1

    while True:
        log.info(f"scraping posts page {_page_number}")
        body = f"variables={quote(json.dumps(variables, separators=(',', ':')))}&doc_id={INSTAGRAM_ACCOUNT_DOCUMENT_ID}"
        result = await SCRAPFLY.async_scrape(ScrapeConfig(
            base_url, **BASE_CONFIG, method="POST", body=body,
            headers={"content-type": "application/x-www-form-urlencoded"},
        ))
        data = json.loads(result.content)
        posts = data["data"]["xdt_api__v1__feed__user_timeline_graphql_connection"]
        for post in posts["edges"]:
            yield post["node"]

        page_info = posts["page_info"]
        if not page_info["has_next_page"]:
            break
        # stop if the cursor did not advance, to avoid an endless loop
        if page_info["end_cursor"] == prev_cursor:
            log.warning("found no new posts, breaking")
            break
        prev_cursor = page_info["end_cursor"]
        variables["after"] = page_info["end_cursor"]
        _page_number += 1
        if max_pages and _page_number > max_pages:
            break

[

{

"__typename": "GraphImage",

"id": "2890253001563912589",

"dimensions": {

"height": 1080,

"width": 1080

},

"display_url": "https://scontent-atl3-2.cdninstagram.com/v/t51.2885-15/295343605_719605135806241_7849792612912420873_n.webp?stp=dst-jpg_e35&_nc_ht=scontent-atl3-2.cdninstagram.com&_nc_cat=101&_nc_ohc=cbVYU-YGD04AX9-DGya&edm=APU89FABAAAA&ccb=7-5&oh=00_AT-C93CjLzMapgPHOinoltBXypU_wi7s6zzLj1th-s9p-Q&oe=62E80627&_nc_sid=86f79a",

"display_resources": [

{

"src": "https://scontent-atl3-2.cdninstagram.com/v/t51.2885-15/295343605_719605135806241_7849792612912420873_n.webp?stp=dst-jpg_e35_s640x640_sh0.08&_nc_ht=scontent-atl3-2.cdninstagram.com&_nc_cat=101&_nc_ohc=cbVYU-YGD04AX9-DGya&edm=APU89FABAAAA&ccb=7-5&oh=00_AT8aF_4X2Ix9neTg1obSzOBgZW83oMFSNb-i5uqZqRqLLg&oe=62E80627&_nc_sid=86f79a",

"config_width": 640,

"config_height": 640

},

"..."

],

"is_video": false,

"tracking_token": "eyJ2ZXJzaW9uIjo1LCJwYXlsb2FkIjp7ImlzX2FuYWx5dGljc190cmFja2VkIjp0cnVlLCJ1dWlkIjoiOWJiNzUyMjljMjU2NDExMTliOGI4NzM5MTE2Mjk4MTYyODkwMjUzMDAxNTYzOTEyNTg5In0sInNpZ25hdHVyZSI6IiJ9",

"edge_media_to_tagged_user": {

"edges": [

{

"node": {

"user": {

"full_name": "Jahmar Gale | Data Analyst",

"id": "51661809026",

"is_verified": false,

"profile_pic_url": "https://scontent-atl3-2.cdninstagram.com/v/t51.2885-19/284007837_5070066053047326_6283083692098566083_n.jpg?stp=dst-jpg_s150x150&_nc_ht=scontent-atl3-2.cdninstagram.com&_nc_cat=106&_nc_ohc=KXI8oOdZRb4AX8w28nr&edm=APU89FABAAAA&ccb=7-5&oh=00_AT-4iYsawdTCHI5a2zD_PF9F-WCyKnTIPuvYwVAQo82l_w&oe=62E7609B&_nc_sid=86f79a",

"username": "datajayintech"

},

"x": 0.68611115,

"y": 0.32222223

}

},

"..."

]

},

"accessibility_caption": "A screenshot of a tweet from @DataJayInTech, which says: \"A recruiter just called me and said The Google Data Analytics Certificate is a good look. This post is to encourage YOU to finish the course.\" The background of the image is red with white, yellow, and blue geometric shapes.",

"edge_media_to_caption": {

"edges": [

{

"node": {

"text": "Ring, ring — opportunity is calling📱\nStart your Google Career Certificate journey at the link in bio. #GrowWithGoogle"

}

},

"..."

]

},

"shortcode": "CgcPcqtOTmN",

"edge_media_to_comment": {

"count": 139,

"page_info": {

"has_next_page": true,

"end_cursor": "QVFCaU1FNGZiNktBOWFiTERJdU80dDVwMlNjTE5DWTkwZ0E5NENLU2xLZnFLemw3eTJtcU54ZkVVS2dzYTBKVEppeVpZbkd4dWhQdktubW1QVzJrZXNHbg=="

},

"edges": [

{

"node": {

"id": "18209382946080093",

"text": "@google your company is garbage for meddling with supposedly fair elections...you have been exposed",

"created_at": 1658867672,

"did_report_as_spam": false,

"owner": {

"id": "39246725285",

"is_verified": false,

"profile_pic_url": "https://scontent-atl3-2.cdninstagram.com/v/t51.2885-19/115823005_750712482350308_4191423925707982372_n.jpg?stp=dst-jpg_s150x150&_nc_ht=scontent-atl3-2.cdninstagram.com&_nc_cat=104&_nc_ohc=4iOCWDHJLFAAX-JFPh7&edm=APU89FABAAAA&ccb=7-5&oh=00_AT9sH7npBTmHN01BndUhYVreHOk63OqZ5ISJlzNou3QD8A&oe=62E87360&_nc_sid=86f79a",

"username": "bud_mcgrowin"

},

"viewer_has_liked": false

}

},

"..."

]

},

"edge_media_to_sponsor_user": {

"edges": []

},

"comments_disabled": false,

"taken_at_timestamp": 1658765028,

"edge_media_preview_like": {

"count": 9251,

"edges": []

},

"gating_info": null,

"fact_check_overall_rating": null,

"fact_check_information": null,

"media_preview": "ACoqbj8KkijDnBOfpU1tAkis8mcL2H0zU8EMEqh1Dc56H0/KublclpoejKoo3WtylMgQ4HeohW0LKJ+u7PueaX+z4v8Aa/OmoNJJ6kqtG3UxT0pta9xZRxxswzkDjJrIoatuawkpq6NXTvuN9f6VdDFeAMAdsf8A16oWDKFYMQMnuR6e9Xd8f94fmtax2OGqnzsk3n/I/wDsqN7f5H/2VR74/wC8PzWlEkY7g/iv+NVcys+wy5JML59P89zWDW3dSx+UwGMnjjH9KxKynud1BWi79wpQM+g+tJRUHQO2+4pCuO4pKKAFFHP+RSUUgP/Z",

"owner": {

"id": "1067259270",

"username": "google"

},

"location": null,

"viewer_has_liked": false,

"viewer_has_saved": false,

"viewer_has_saved_to_collection": false,

"viewer_in_photo_of_you": false,

"viewer_can_reshare": true,

"thumbnail_src": "https://scontent-atl3-2.cdninstagram.com/v/t51.2885-15/295343605_719605135806241_7849792612912420873_n.webp?stp=dst-jpg_e35_s640x640_sh0.08&_nc_ht=scontent-atl3-2.cdninstagram.com&_nc_cat=101&_nc_ohc=cbVYU-YGD04AX9-DGya&edm=APU89FABAAAA&ccb=7-5&oh=00_AT8aF_4X2Ix9neTg1obSzOBgZW83oMFSNb-i5uqZqRqLLg&oe=62E80627&_nc_sid=86f79a",

"thumbnail_resources": [

{

"src": "https://scontent-atl3-2.cdninstagram.com/v/t51.2885-15/295343605_719605135806241_7849792612912420873_n.webp?stp=dst-jpg_e35_s150x150&_nc_ht=scontent-atl3-2.cdninstagram.com&_nc_cat=101&_nc_ohc=cbVYU-YGD04AX9-DGya&edm=APU89FABAAAA&ccb=7-5&oh=00_AT9nmASHsbmNWUQnwOdkGE4PvE8b27MqK-gbj5z0YLu8qg&oe=62E80627&_nc_sid=86f79a",

"config_width": 150,

"config_height": 150

},

"..."

]

},

...

]
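The cursor pagination used by the posts scraper can also be exercised offline. The sketch below isolates the same loop behind a `fetch_page` callable and feeds it hand-made pages; `paginate`, `fake_fetch`, and `FAKE_PAGES` are hypothetical names, and the fake pages only mimic the `edges` / `page_info` shape of the real response.

```python
from typing import Callable, Dict, Iterator, Optional


def paginate(
    fetch_page: Callable[[Optional[str]], Dict],
    max_pages: Optional[int] = None,
) -> Iterator[Dict]:
    """Yield nodes page by page, following end_cursor until no pages remain."""
    cursor = None
    page_number = 1
    while True:
        page = fetch_page(cursor)
        for edge in page["edges"]:
            yield edge["node"]
        page_info = page["page_info"]
        if not page_info["has_next_page"]:
            break
        if page_info["end_cursor"] == cursor:
            break  # the cursor did not advance, avoid looping forever
        cursor = page_info["end_cursor"]
        page_number += 1
        if max_pages and page_number > max_pages:
            break


# hand-made pages keyed by the cursor that requests them
FAKE_PAGES = {
    None: {
        "edges": [{"node": {"id": 1}}, {"node": {"id": 2}}],
        "page_info": {"has_next_page": True, "end_cursor": "a"},
    },
    "a": {
        "edges": [{"node": {"id": 3}}],
        "page_info": {"has_next_page": True, "end_cursor": "b"},
    },
    "b": {
        "edges": [{"node": {"id": 4}}],
        "page_info": {"has_next_page": False, "end_cursor": None},
    },
}


def fake_fetch(cursor: Optional[str]) -> Dict:
    return FAKE_PAGES[cursor]


print([post["id"] for post in paginate(fake_fetch)])
print([post["id"] for post in paginate(fake_fetch, max_pages=2)])
```

Separating the loop from the HTTP call this way makes the stop conditions (last page, stalled cursor, page limit) easy to test without sending any requests.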

Our Instagram scraper can successfully retrieve all the profile posts. Let's use it to extract hashtag mentions of a profile.

For this, we'll scrape Instagram post data, extract each mentioned hashtag, and then group the results:

import asyncio
import json
import re
from collections import Counter
from typing import Dict, Optional

HASHTAG_PATTERN = re.compile(r"#(\w+)")


def get_caption_text(post: Dict) -> str:
    """Get the caption text of a raw post node"""
    # layout shown in the sample output above
    edges = (post.get("edge_media_to_caption") or {}).get("edges") or []
    if edges:
        return "\n".join(edge["node"]["text"] for edge in edges if isinstance(edge, dict))
    # assumption: other response layouts store the caption under caption.text
    return (post.get("caption") or {}).get("text") or ""


async def scrape_hashtag_mentions(username: str, max_pages: Optional[int] = None) -> Counter:
    """find all hashtags user mentioned in their posts"""
    hashtags = Counter()
    async for post in scrape_user_posts(username, max_pages=max_pages):
        hashtags.update(HASHTAG_PATTERN.findall(get_caption_text(post)))
    return hashtags


if __name__ == "__main__":
    # scrape_user_posts() takes the username directly, so no user ID lookup is needed
    hashtags = asyncio.run(scrape_hashtag_mentions("google", max_pages=5))
    # order results and print them as JSON:
    print(json.dumps(dict(hashtags.most_common()), indent=2, ensure_ascii=False))

{

"MadeByGoogle": 10,

"TeamPixel": 5,

"GrowWithGoogle": 4,

"Pixel7": 3,

"LifeAtGoogle": 3,

"SaferWithGoogle": 3,

"Pixel6a": 3,

"DoodleForGoogle": 2,

"MySuperG": 2,

"ShotOnPixel": 1,

"DayInTheLife": 1,

"DITL": 1,

"GoogleAustin": 1,

"Austin": 1,

"NestWifi": 1,

"NestDoorbell": 1,

"GoogleATAPAmbientExperiments": 1,

"GoogleATAPxKOCHE": 1,

"SoliATAP": 1,

"GooglePixelWatch": 1,

"Chromecast": 1,

"DooglersAroundTheWorld": 1,

"GoogleSearch": 1,

"GoogleSingapore": 1,

"InternationalDogDay": 1,

"Doogler": 1,

"BlackBusinessMonth": 1,

"PixelBuds": 1,

"HowTo": 1,

"Privacy": 1,

"Settings": 1,

"GoogleDoodle": 1,

"NationalInternDay": 1,

"GoogleInterns": 1,

"Sushi": 1,

"StopMotion": 1,

"LetsInternetBetter": 1

}

With this simple analytics script, we've scraped profile hashtags, which can be used to determine the interests of a public Instagram account.
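One refinement worth considering is case-insensitive grouping, so that `#Pixel7` and `#pixel7` are counted as the same tag. The sketch below shows this on a few made-up captions; `count_hashtags` and `sample_captions` are illustrative names and data, not scraped results.

```python
import re
from collections import Counter
from typing import Iterable

HASHTAG_PATTERN = re.compile(r"#(\w+)")


def count_hashtags(captions: Iterable[str]) -> Counter:
    """Count hashtags across captions, ignoring letter case."""
    counts = Counter()
    for caption in captions:
        counts.update(tag.lower() for tag in HASHTAG_PATTERN.findall(caption))
    return counts


# made-up captions for illustration only
sample_captions = [
    "Start your journey at the link in bio. #GrowWithGoogle",
    "New phone day #TeamPixel #MadeByGoogle",
    "Behind the scenes #madebygoogle",
]

print(count_hashtags(sample_captions).most_common())
```

Lowercasing loses the original capitalization, so keep the raw tags as well if they are needed for display.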

Web scraping Instagram can be straightforward. However, Instagram restricts access to its publicly available data. It only allows a few requests per day for non-logged-in users, and requests exceeding this limit are redirected to a login page.
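A scraper can at least detect this situation and slow down instead of parsing a login page as if it were data. The following is a minimal sketch under two assumptions: that the block shows up as an HTTP redirect, and that the login page lives under the `/accounts/login` path. `is_login_redirect` and `backoff_delays` are hypothetical helpers.

```python
from typing import List
from urllib.parse import urlparse

REDIRECT_CODES = {301, 302, 303, 307, 308}


def is_login_redirect(status_code: int, location: str = "") -> bool:
    """Return True when a response redirects to a login page."""
    if status_code not in REDIRECT_CODES:
        return False
    # assumption: the login page lives under /accounts/login
    return urlparse(location).path.startswith("/accounts/login")


def backoff_delays(retries: int, base: float = 2.0) -> List[float]:
    """Exponentially growing wait times (in seconds) between retries."""
    return [base * (2 ** attempt) for attempt in range(retries)]


print(is_login_redirect(302, "https://www.instagram.com/accounts/login/?next=/google/"))
print(is_login_redirect(200))
print(backoff_delays(3))
```

With httpx, the status code is available as `response.status_code` and the redirect target in the `Location` response header; waiting between retries only helps with short-term limits and does not lift a daily quota.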

To avoid Instagram scraper blocking, we'll take advantage of the ScrapFly API, which handles the blocking workarounds for us!

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

Here is how we can empower our Instagram scraper with the ScrapFly API. All we have to do is replace our HTTP client with the ScrapFly client, enable the asp parameter, and select a proxy country:

# standard web scraping code
import httpx
from parsel import Selector

response = httpx.get("some instagram.com URL")
selector = Selector(response.text)

# in ScrapFly becomes this 👇
from scrapfly import ScrapeConfig, ScrapflyClient

# replaces your HTTP client (httpx in this case)
scrapfly = ScrapflyClient(key="Your ScrapFly API key")

response = scrapfly.scrape(ScrapeConfig(
    url="website URL",
    asp=True,  # enable the anti scraping protection to bypass blocking
    country="US",  # set the proxy location to a specific country
    render_js=True  # enable rendering JavaScript (like headless browsers) to scrape dynamic content if needed
))

# use the built in Parsel selector
selector = response.selector
# access the HTML content
html = response.scrape_result['content']

To wrap this guide up let's take a look at some frequently asked questions about web scraping instagram.com:

At the time of writing, Instagram doesn't provide APIs for public usage. However, we have seen that we can utilize hidden Instagram APIs for a fast and efficient Instagram scraper.

Yes. Instagram's data is publicly available, so scraping instagram.com at slow, respectful rates would fall under the ethical scraping definition. However, when working with personal data we need to be aware of local copyright and user data laws like GDPR in the EU. For more details, refer to our guide on web scraping legality.
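One simple way to keep request rates slow is to enforce a minimum interval between requests. The sketch below is an illustrative throttle, not part of the scraper above; the `Throttle` class is a hypothetical helper, and the interval used in the usage example is an arbitrary value chosen only to keep the example fast.

```python
import time
from typing import Callable, Optional


class Throttle:
    """Enforce a minimum interval (in seconds) between consecutive calls to wait()."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ):
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None  # time of the previous request

    def wait(self) -> float:
        """Sleep if the previous call was too recent; return the delay applied."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self._min_interval - (now - self._last))
        if delay:
            self._sleep(delay)
        self._last = now + delay
        return delay


if __name__ == "__main__":
    throttle = Throttle(min_interval=0.01)  # arbitrary small value for the demo
    for username in ["google", "instagram"]:
        throttle.wait()  # call this before every request
        print(f"would request profile: {username}")
```

The clock and sleep functions are injectable so the behavior can be checked without actually waiting.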

To get the private user ID from the public username, we can scrape the user profile using our scrape_user function; the private ID will be located in the id field:

user_id = scrape_user('google')['id']
print(user_id)

To get the public username from Instagram's private user ID, we can take advantage of the public iPhone API https://i.instagram.com/api/v1/users/<USER_ID>/info/:

import httpx

iphone_api = "https://i.instagram.com/api/v1/users/{}/info/"
iphone_user_agent = "Mozilla/5.0 (iPhone; CPU iPhone OS 10_3_3 like Mac OS X) AppleWebKit/603.3.8 (KHTML, like Gecko) Mobile/14G60 Instagram 12.0.0.16.90 (iPhone9,4; iOS 10_3_3; en_US; en-US; scale=2.61; gamut=wide; 1080x1920)"

resp = httpx.get(iphone_api.format("1067259270"), headers={"User-Agent": iphone_user_agent})
print(resp.json()['user']['username'])

Instagram has been rolling out new changes and slowly retiring the ?__a=1 feature. However, in this article we've covered two alternatives to it: the /v1/ API endpoints and the GraphQL endpoints, which perform even better!

In this Instagram scraping tutorial, we've taken a look at how to easily scrape Instagram using Python and hidden API endpoints.

We've scraped user profile pages containing user details, posts, meta information, and each individual post data. To reduce scraped datasets, we used the JMESPath parsing library.

Finally, we have explored scaling our Instagram web scraper using the ScrapFly web scraping API, which bypasses Instagram scraping blocking.