Domain.com.au is a popular website for real estate listings in Australia, with thousands of properties available for sale or rent.

In this article, we will explain how to scrape real estate data from domain.com.au using only a few lines of Python. We will be extracting property listing data from both property and search pages. Let's get started!


Legal Disclaimer and Precautions

This tutorial covers popular web scraping techniques for education. Interacting with public servers requires diligence and respect, and here's a good summary of what not to do:

- Do not scrape at rates that could damage the website.

- Do not scrape data that's not available publicly.

- Do not store PII of EU citizens who are protected by GDPR.

- Do not repurpose entire public datasets, which can be illegal in some countries.
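The first rule can be enforced in code. Below is a minimal sketch, assuming you want a fixed minimum gap between request start times. The `Throttle` class and `polite_fetch()` helper are hypothetical names (not part of httpx or the scraper in this guide), and the example.com URLs are placeholders.

```python
import asyncio
import time


class Throttle:
    """Hypothetical helper: allow at most one request start every `interval` seconds."""

    def __init__(self, interval: float):
        self.interval = interval
        self._lock = asyncio.Lock()
        self._last = 0.0

    async def wait(self) -> None:
        # the lock serializes callers so their start times are spaced by `interval`
        async with self._lock:
            delay = self._last + self.interval - time.monotonic()
            if delay > 0:
                await asyncio.sleep(delay)
            self._last = time.monotonic()


async def polite_fetch(throttle: Throttle, url: str) -> str:
    await throttle.wait()
    # a real scraper would send the request here, e.g. `await client.get(url)`
    return url


async def main() -> list:
    throttle = Throttle(interval=0.1)
    urls = [f"https://example.com/page/{i}" for i in range(5)]
    return await asyncio.gather(*(polite_fetch(throttle, u) for u in urls))


if __name__ == "__main__":
    start = time.monotonic()
    results = asyncio.run(main())
    print(len(results), "requests in", round(time.monotonic() - start, 2), "s")
```

With `interval=0.1`, five requests need at least about 0.4 seconds to start. In a real scraper, you would call `await throttle.wait()` right before each `client.get(url)`.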

Why Scrape Domain.com.au?

Scraping domain.com.au opens the door to a broad range of property listing data, allowing businesses and traders to track not only market trends but also changes in prices, demand and supply over time.

Real estate data from scraping domain.com.au can help investors identify underpriced properties or areas with potential growth, leading to better decision-making and higher profit margins.

Moreover, manually exploring real estate data on websites takes time and effort. Web scraping domain.com.au saves much of that manual work by retrieving thousands of listings quickly.

For more details, refer to our previous article on web scraping real estate use cases.

Project Setup

In this guide, we'll be scraping domain.com.au using a few Python packages:

- httpx: for sending HTTP requests to the website and retrieving HTML pages.
- parsel: for parsing the HTML using XPath and CSS selectors.
- JMESPath: for refining and parsing JSON datasets.
- scrapfly-sdk: a Python SDK for ScrapFly, a web scraping API that bypasses web scraping blocking.
- asyncio: for running our code asynchronously to increase our web scraping speed.

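asyncio is part of Python's standard library, so it doesn't need to be installed. You can install the other packages using the following pip command (the http2 and brotli extras add the HTTP/2 and Brotli decoding support that the httpx client below relies on):

pip install "httpx[http2,brotli]" parsel jmespath scrapfly-sdk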

Scrape Domain.com.au Property Pages

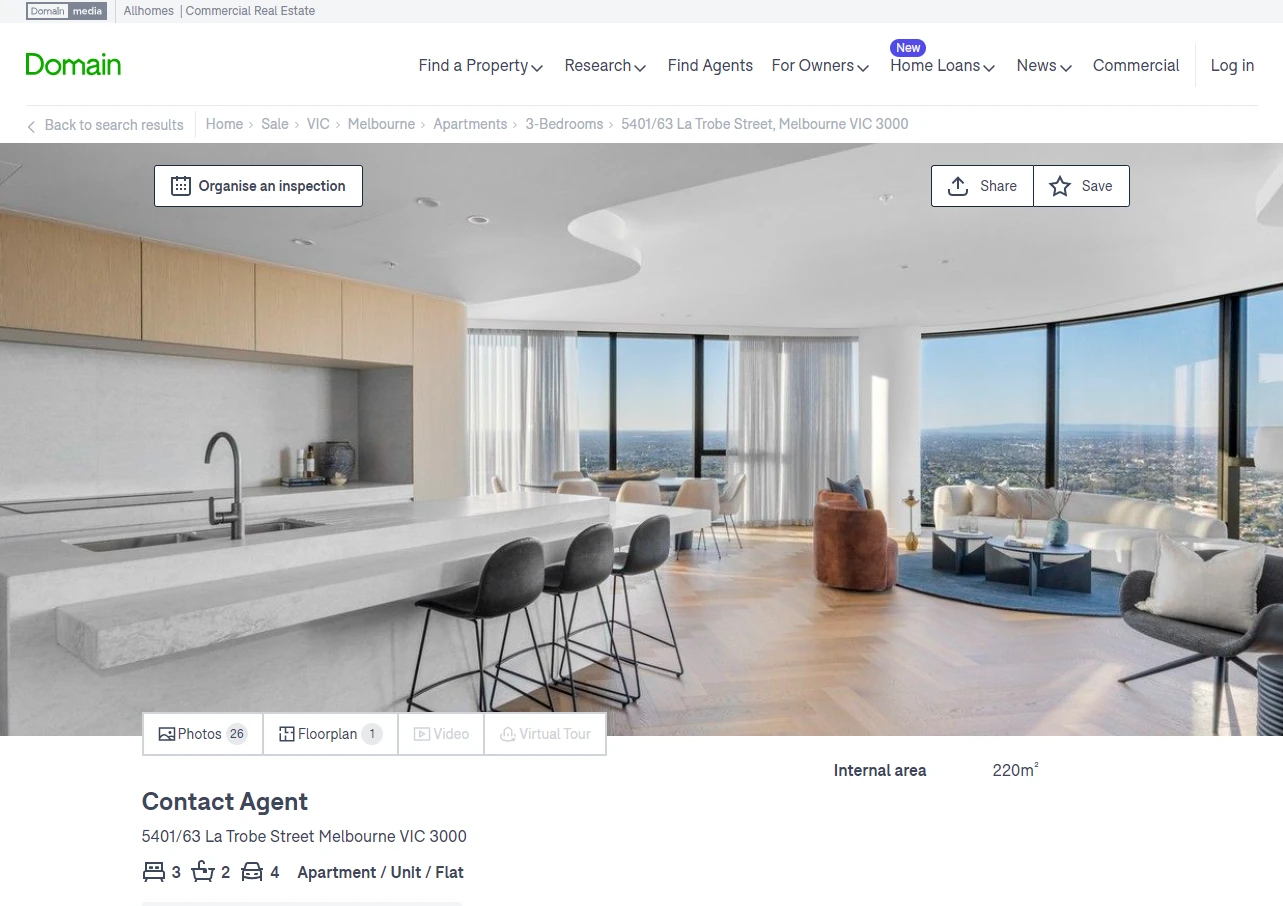

Let's start by scraping property pages on domain.com.au. Go to any property page on the website like this one and you will get a page similar to this:

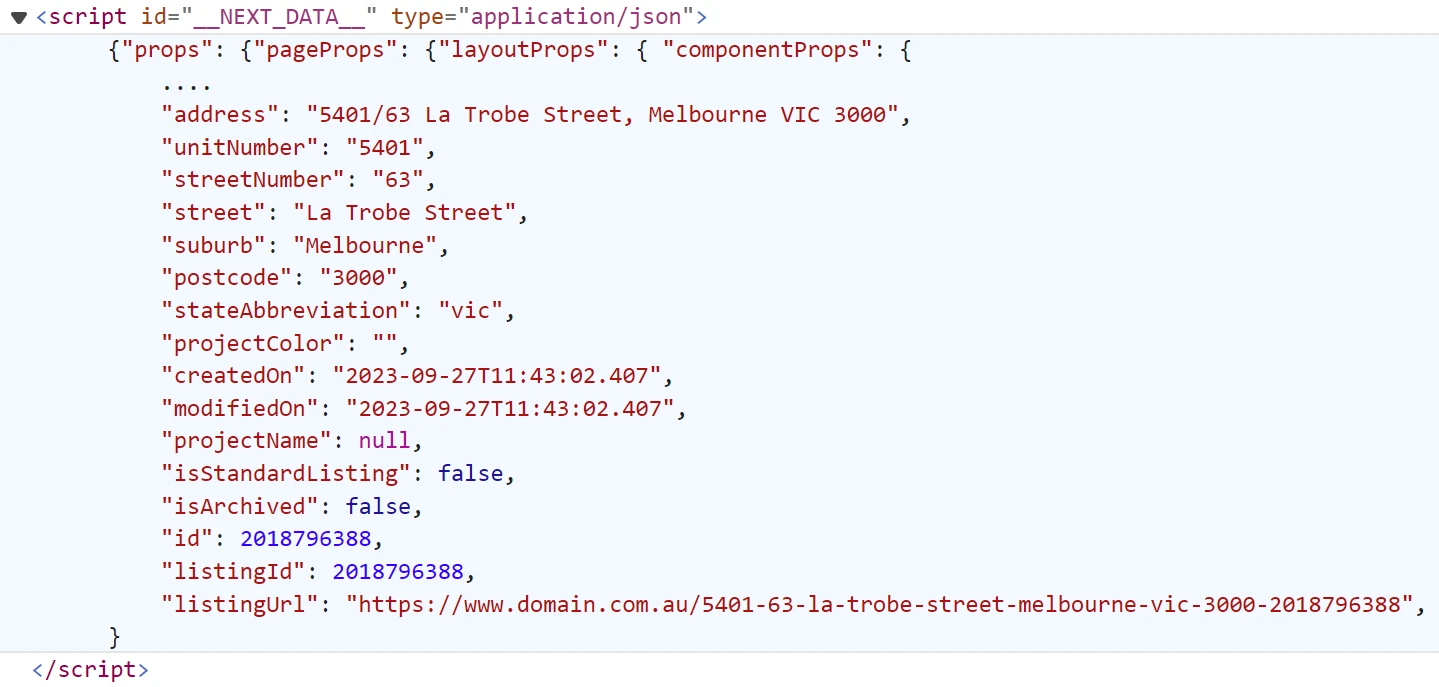

Rather than parsing the rendered HTML, we'll extract the property details directly from JSON stored in a script tag, since this page keeps its data as hidden web data.

To view this data, open the browser developer tools by pressing F12. Then, find the script tag with the id __NEXT_DATA__, which contains the property listing data.

This is the same data displayed on the web page, but in its raw JSON form before it gets rendered into HTML. Data embedded this way is commonly known as hidden web data.
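To see how little is needed to pull this JSON out of a page, here is a dependency-free sketch that uses Python's built-in `html.parser` in place of the parsel XPath used in the scraper below. `NextDataParser`, `extract_next_data()` and the sample HTML are made up for illustration; the sample only mimics the `props.pageProps.componentProps` nesting the scraper indexes into.

```python
import json
from html.parser import HTMLParser
from typing import Optional


class NextDataParser(HTMLParser):
    """Collect the text inside <script id="__NEXT_DATA__">."""

    def __init__(self):
        super().__init__()
        self._in_target = False
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("id") == "__NEXT_DATA__":
            self._in_target = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_target = False

    def handle_data(self, data):
        # script content may arrive in several chunks, so keep them all
        if self._in_target:
            self.chunks.append(data)


def extract_next_data(html: str) -> Optional[dict]:
    """Return the parsed __NEXT_DATA__ JSON, or None if the tag is missing."""
    parser = NextDataParser()
    parser.feed(html)
    parser.close()
    if not parser.chunks:
        return None
    return json.loads("".join(parser.chunks))


sample_html = """
<html><body>
<script id="__NEXT_DATA__" type="application/json">
{"props": {"pageProps": {"componentProps": {"listingId": 123, "suburb": "Melbourne"}}}}
</script>
</body></html>
"""

print(extract_next_data(sample_html)["props"]["pageProps"]["componentProps"]["suburb"])  # Melbourne
```

parsel remains the more convenient choice for the real scraper; this version only shows that the hidden data is ordinary JSON text inside a script tag.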


To scrape the property pages' data, we'll extract the JSON data inside the script tag. We'll also refine it using JMESPath to exclude the unnecessary details:

import json
import asyncio
import jmespath
from httpx import AsyncClient, Response
from parsel import Selector
from typing import List, Dict

client = AsyncClient(
    # enable http2 (requires the h2 package)
    http2=True,
    # add basic browser headers to minimize blocking chances
    headers={
        "accept-language": "en-US,en;q=0.9",
        "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36",
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
        # decoding "br" responses requires the brotli package
        "accept-encoding": "gzip, deflate, br",
    },
)


def parse_property_page(data: Dict) -> Dict:
    """refine property pages data"""
    if not data:
        return
    result = jmespath.search(
        """{
        listingId: listingId,
        listingUrl: listingUrl,
        unitNumber: unitNumber,
        streetNumber: streetNumber,
        street: street,
        suburb: suburb,
        postcode: postcode,
        createdOn: createdOn,
        propertyType: propertyType,
        beds: beds,
        phone: phone,
        agencyName: agencyName,
        propertyDeveloperName: propertyDeveloperName,
        agencyProfileUrl: agencyProfileUrl,
        propertyDeveloperUrl: propertyDeveloperUrl,
        description: description,
        loanfinder: loanfinder,
        schools: schoolCatchment.schools,
        suburbInsights: suburbInsights,
        gallery: gallery,
        listingSummary: listingSummary,
        agents: agents,
        features: features,
        structuredFeatures: structuredFeatures,
        faqs: faqs
        }""",
        data,
    )
    return result


def parse_hidden_data(response: Response):
    """parse json data from script tags"""
    selector = Selector(response.text)
    script = selector.xpath("//script[@id='__NEXT_DATA__']/text()").get()
    data = json.loads(script)
    return data["props"]["pageProps"]["componentProps"]


async def scrape_properties(urls: List[str]) -> List[Dict]:
    """scrape listing data from property pages"""
    # add the property page URLs to a scraping list
    to_scrape = [client.get(url) for url in urls]
    properties = []
    # scrape all the property pages concurrently
    for response in asyncio.as_completed(to_scrape):
        response = await response
        assert response.status_code == 200, "request has been blocked"
        data = parse_hidden_data(response)
        data = parse_property_page(data)
        properties.append(data)
    print(f"scraped {len(properties)} property listings")
    return properties

import json
import asyncio
import jmespath
from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse
from typing import Dict, List

SCRAPFLY = ScrapflyClient(key="Your ScrapFly API key")


def parse_property_page(data: Dict) -> Dict:
    """refine property pages data"""
    if not data:
        return
    result = jmespath.search(
        """{
        listingId: listingId,
        listingUrl: listingUrl,
        unitNumber: unitNumber,
        streetNumber: streetNumber,
        street: street,
        suburb: suburb,
        postcode: postcode,
        createdOn: createdOn,
        propertyType: propertyType,
        beds: beds,
        phone: phone,
        agencyName: agencyName,
        propertyDeveloperName: propertyDeveloperName,
        agencyProfileUrl: agencyProfileUrl,
        propertyDeveloperUrl: propertyDeveloperUrl,
        description: description,
        loanfinder: loanfinder,
        schools: schoolCatchment.schools,
        suburbInsights: suburbInsights,
        gallery: gallery,
        listingSummary: listingSummary,
        agents: agents,
        features: features,
        structuredFeatures: structuredFeatures,
        faqs: faqs
        }""",
        data,
    )
    return result


def parse_hidden_data(response: ScrapeApiResponse):
    """parse json data from script tags"""
    selector = response.selector
    script = selector.xpath("//script[@id='__NEXT_DATA__']/text()").get()
    data = json.loads(script)
    return data["props"]["pageProps"]["componentProps"]


async def scrape_properties(urls: List[str]) -> List[Dict]:
    """scrape listing data from property pages"""
    # add the property page URLs to a scraping list
    to_scrape = [ScrapeConfig(url, country="AU", asp=True) for url in urls]
    properties = []
    # scrape all the property pages concurrently
    async for response in SCRAPFLY.concurrent_scrape(to_scrape):
        # parse the data from the script tag
        data = parse_hidden_data(response)
        # append the data to the list after refining
        properties.append(parse_property_page(data))
    print(f"scraped {len(properties)} property listings")
    return properties

Run the code

async def run():
    data = await scrape_properties(
        urls=[
            "https://www.domain.com.au/610-399-bourke-street-melbourne-vic-3000-2018835548"
        ]
    )
    # print the data in JSON format
    print(json.dumps(data, indent=2))


if __name__ == "__main__":
    asyncio.run(run())

Note that different property pages on our target website can differ in HTML structure, which can lead to parsing errors. Check out our domain.com.au scraper source code for the full parsing logic.
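One way to make the parsing step more forgiving is to look up nested keys defensively. A minimal sketch follows; `get_path()` is a hypothetical helper, not part of the scraper's source code, and `next_data` is made-up sample data. JMESPath already returns `null` (None) for missing fields, so this mainly helps with the plain `data["props"]["pageProps"]["componentProps"]` indexing in `parse_hidden_data()`.

```python
from typing import Any, Optional


def get_path(data: Any, *keys: str) -> Optional[Any]:
    """Follow `keys` through nested dicts; return None if any step is missing."""
    current = data
    for key in keys:
        # stop early instead of raising KeyError/TypeError on an unexpected shape
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


# made-up hidden data with the same nesting that parse_hidden_data() indexes into
next_data = {"props": {"pageProps": {"componentProps": {"listingId": 2018835548}}}}

print(get_path(next_data, "props", "pageProps", "componentProps", "listingId"))  # 2018835548
print(get_path(next_data, "props", "pageProps", "searchResults"))  # None
```

The caller can then skip a page whose data comes back as None instead of letting one unusual page crash the whole concurrent scrape.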

In the above code, we define three functions. Let's break them down:

- parse_property_page(): refines the property data extracted from the script tag, keeping only the fields we need with JMESPath.
- parse_hidden_data(): extracts the hidden JSON data from the __NEXT_DATA__ script tag.
- scrape_properties(): adds the property page URLs to a scraping list and scrapes them concurrently.

Here is what the result should look like:

Sample output

[

{

"listingId": 2018799963,

"listingUrl": "https://www.domain.com.au/101-29-31-market-street-melbourne-vic-3000-2018799963",

"unitNumber": "101",

"streetNumber": "29-31",

"street": "Market Street",

"suburb": "Melbourne",

"postcode": "3000",

"createdOn": "2023-09-28T13:15:16.053",

"propertyType": "Apartment / Unit / Flat",

"beds": 3,

"phone": "03 98201111",

"agencyName": "Kay & Burton Stonnington",

"propertyDeveloperName": "Kay & Burton Stonnington",

"agencyProfileUrl": "https://www.domain.com.au/real-estate-agencies/kayburtonstonnington-88/",

"propertyDeveloperUrl": "https://www.domain.com.au/real-estate-agencies/kayburtonstonnington-88/",

"description": [

"Inspect by Private Appointment",

"",

"A statement of grandeur from the city's maritime age, this majestic board room apartment is a landmark property in terms of stateliness, luxury and amenity, beautifully positioned in the city's west end on the corner of Flinders Lane.",

"",

"An award-winning landmark since its inception, the apartment is privately cocooned within the iconic heritage surroundings of the Port Authority Building designed in flamboyant Beaux Arts style by Sydney Smith Ogg & Serpell in 1929. Today a magnificent place of residence, this three bedroom, two bathroom apartment with secure parking for 2.5 cars is one of Melbourne's most noteworthy town residences.",

"",

"Featured in Vogue Living, the 325 sqm (approx.) interiors have been renovated by SJB Interiors to reflect the richness of the building but also Melbourne in the 21st century offering flexibility, liveability and purity of detail.",

"",

"Highly decorated, the heritage interiors are backdropped by regal ornate ceilings, handsome blackwood paneling, beautiful Jarrah, Ash and Blackwood parquetry flooring, bronze light fittings, soaring imperial marble fireplace mantels and banks of majestic bronze fanlight windows taking in the surrounding plane tree lined streetscape with private views towards Southbank.",

"",

"The outstanding craftmanship is testament to the power, wealth and extravagance of the Harbour commissioners presiding over maritime Melbourne, most notably in the board room which is the keystone of this illustrious home. A remarkable space, a flagship for entertaining yet intimate for daily living with the softening inclusions of a marble kitchen, ethanol fireplace and moveable bar balancing the classical architecture. A second marble kitchen adds additional flexibility to the internal footprint that also hosts a refined sitting room and handsome study.",

"",

"Luxurious natural materials respond elegantly to the buildings original design including the three bedroom accommodation that features two bedrooms with silk faced wardrobes and marble ensuites, one with a freestanding bath. Practical modern appointments include two Gaggenau ovens, central heating and a large storage cage.",

"",

"Crafted from granite with a grand foyer and internal lift, the exclusive Port Authority Building is situated within walking distance of Melbourne's financial and Law Court districts, a stroll to Southbank restaurants, the Yarra River and Crown Entertainment complex and enjoys transport options in every direction including trams and trains from Flinders Street and Spencer Street stations.",

"",

"In conjunction with Cushman & Wakefield - Oliver Hay 0419 528 540"

],

"loanfinder": {

"homeLoansApiUrl": "https://home-loans-api.domainloanfinder.com.au",

"configName": "dhl-domain-web",

"calculators": [

{

"name": "repayments",

"propertyPrice": "1080000",

"priceSource": "median",

"ingressApi": "https://www.domain.com.au/transactions-users-ingress-api/v1/dlf",

"usersApi": "https://www.domain.com.au/transactions-users-api/v1/users/loan-tools/repayments",

"loanTerm": "30",

"formActionUrl": "https://www.domain.com.au/loanfinder/basics/new-home?ltm_source=domain&ltm_medium=referral&ltm_campaign=domain_r_calculator_buy-listing_web&ltm_content=newloan_compare-home-loans",

"displayLogo": false,

"submitText": "Compare Home Loans",

"queryParams": {

"ltm_source": "domain",

"ltm_medium": "referral",

"ltm_campaign": "domain_r_calculator_buy-listing_web",

"ltm_content": "newloan_compare-home-loans"

},

"experimentName": "buy_listing_page_calculator_repayments",

"groupStats": {

"listingId": "2018799963",

"deviceType": "desktop"

},

"hasDeviceSessionId": false

},

{

"name": "upfront-costs",

"propertyPrice": "1080000",

"ingressApi": "https://www.domain.com.au/transactions-users-ingress-api/v1/dlf",

"usersApi": "https://www.domain.com.au/transactions-users-api/v1/users/loan-tools/ufc",

"formActionUrl": "https://www.domain.com.au/loanfinder/basics/new-home?ltm_source=domain&ltm_medium=referral&ltm_campaign=domain_ufc_calculator_buy-listing_web&ltm_content=newloan_check-options",

"submitText": "Compare Home Loans",

"firstHomeBuyer": "firstHomeBuyer",

"queryParams": {

"ltm_source": "domain",

"ltm_medium": "referral",

"ltm_campaign": "domain_ufc_calculator_buy-listing_web",

"ltm_content": "newloan_check-options"

},

"experimentName": "buy_listing_page_calculator_stamp_duty",

"groupStats": {

"listingId": "2018799963",

"deviceType": "desktop"

},

"hasDeviceSessionId": false

}

],

"productReviewConfig": {

"brandId": "ba7f14c4-3f43-3018-b06c-93d6657e7602",

"widgetId": "04115d3d-113e-3cb1-8103-ba0bb5e8d765",

"widgetUrl": "https://cdn.productreview.com.au/assets/widgets/loader.js"

}

},

"schools": [

{

"id": "",

"educationLevel": "secondary",

"name": "Hester Hornbrook Academy - City Campus",

"distance": 332.25763189076645,

"state": "VIC",

"postCode": "3000",

"year": "",

"gender": "",

"type": "Private",

"address": "Melbourne, VIC 3000",

"url": "",

"domainSeoUrlSlug": "hester-hornbrook-academy-city-campus-vic-3000-52519"

},

.....

],

"suburbInsights": {

"beds": 3,

"propertyType": "Unit",

"suburb": "Melbourne",

"suburbProfileUrl": "/suburb-profile/melbourne-vic-3000",

"medianPrice": 1080000,

"medianRentPrice": 1000,

"avgDaysOnMarket": 110,

"auctionClearance": 64,

"nrSoldThisYear": 102,

"entryLevelPrice": 600000,

"luxuryLevelPrice": 3065000,

"renterPercentage": 0.6970133677881032,

"singlePercentage": 0.7628455892048701,

"demographics": {

"population": 47279,

"avgAge": "20 to 39",

"owners": 0.3029866322118968,

"renters": 0.6970133677881032,

"families": 0.2371544107951299,

"singles": 0.7628455892048701,

"clarification": true

},

"salesGrowthList": [

{

"medianSoldPrice": 1053000,

"annualGrowth": 0,

"numberSold": 205,

"year": "2018",

"daysOnMarket": 108

},

.....

],

"mostRecentSale": null

},

"gallery": {

"slides": [

{

"thumbnail": "https://rimh2.domainstatic.com.au/liH61Q-Xq2U3NVz8_CuS7s4WhQk=/fit-in/144x106/filters:format(jpeg):quality(80):no_upscale()/2018799963_1_1_230928_031516-w7000-h4667",

"images": {

"original": {

"url": "https://rimh2.domainstatic.com.au/P_chzZfBOvp4O1uRKgJ1YaGcVyg=/fit-in/1920x1080/filters:format(jpeg):quality(80):no_upscale()/2018799963_1_1_230928_031516-w7000-h4667",

"width": 1620,

"height": 1080

},

"tablet": {

"url": "https://rimh2.domainstatic.com.au/GZAXgEYb2R39uL25FZBNJwtIvxI=/fit-in/1020x1020/filters:format(jpeg):quality(80):no_upscale()/2018799963_1_1_230928_031516-w7000-h4667",

"width": 1020,

"height": 680

},

"mobile": {

"url": "https://rimh2.domainstatic.com.au/0hmZIfTO914hdD_tdBgAPcAQHew=/fit-in/600x800/filters:format(jpeg):quality(80):no_upscale()/2018799963_1_1_230928_031516-w7000-h4667",

"width": 600,

"height": 400

}

},

"embedUrl": null,

"mediaType": "image"

},

.....

],

"features": [],

"structuredFeatures": [],

"faqs": [

{

"question": "How many bedrooms does 101/29-31 Market Street, Melbourne have?",

"answer": [

{

"text": "101/29-31 Market Street, Melbourne is a 3 bedroom apartment."

}

]

},

.....

]

}

]

Our domain.com.au scraper can now extract real estate data from property pages. Next, let's apply the same approach to search pages so we can discover property listings to feed into our property scraper.

Scrape Domain.com.au Search Pages

Just like property pages, search pages store their data in the __NEXT_DATA__ script tag.

Domain.com.au search supports pagination through a ?page parameter appended to the search page URL:

https://www.domain.com.au/rent/melbourne-vic-3000/?page=page_number
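The scrapers below build these URLs by appending f"?page={page}" to the search URL, which works for a plain search URL like the one above. If the search URL already carries query parameters, plain string concatenation would add a second `?`. Here is a sketch using Python's standard urllib.parse that sets the page parameter while keeping any existing ones; `page_url()` is a hypothetical helper, and the `bedrooms` parameter in the second example is made up only to show that existing parameters survive.

```python
from urllib.parse import parse_qs, urlencode, urlparse, urlunparse


def page_url(url: str, page: int) -> str:
    """Return `url` with its `page` query parameter set, keeping other parameters."""
    parts = urlparse(url)
    query = parse_qs(parts.query, keep_blank_values=True)
    # overwrite (or add) the page parameter
    query["page"] = [str(page)]
    return urlunparse(parts._replace(query=urlencode(query, doseq=True)))


print(page_url("https://www.domain.com.au/rent/melbourne-vic-3000/", 2))
# https://www.domain.com.au/rent/melbourne-vic-3000/?page=2
print(page_url("https://www.domain.com.au/rent/melbourne-vic-3000/?bedrooms=2", 3))
# https://www.domain.com.au/rent/melbourne-vic-3000/?bedrooms=2&page=3
```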

We'll extract the data directly from the script tag in JSON and use the pagination parameter to crawl over search pages:

import json
import asyncio
import jmespath
from httpx import AsyncClient, Response
from parsel import Selector
from typing import List, Dict, Optional

client = AsyncClient(
    # enable http2 (requires the h2 package)
    http2=True,
    # add basic browser headers to minimize blocking chances
    headers={
        "accept-language": "en-US,en;q=0.9",
        "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36",
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
        # decoding "br" responses requires the brotli package
        "accept-encoding": "gzip, deflate, br",
    },
)


def parse_search_page(data: Dict) -> List[Dict]:
    """refine search pages data"""
    if not data:
        return []
    data = data["listingsMap"]
    result = []
    # iterate over card items in the search data
    for key in data.keys():
        item = data[key]
        parsed_data = jmespath.search(
            """{
            id: id,
            listingType: listingType,
            listingModel: listingModel
            }""",
            item,
        )
        # exclude the skeletonImages key from the data, if present
        if parsed_data["listingModel"]:
            parsed_data["listingModel"].pop("skeletonImages", None)
        result.append(parsed_data)
    return result


def parse_hidden_data(response: Response):
    """parse json data from script tags"""
    selector = Selector(response.text)
    script = selector.xpath("//script[@id='__NEXT_DATA__']/text()").get()
    data = json.loads(script)
    return data["props"]["pageProps"]["componentProps"]


async def scrape_search(url: str, max_scrape_pages: Optional[int] = None) -> List[Dict]:
    """scrape property listings from search pages"""
    first_page = await client.get(url)
    assert first_page.status_code == 200, "request has been blocked"
    print(f"scraping search page {url}")
    data = parse_hidden_data(first_page)
    search_data = parse_search_page(data)
    # get the number of maximum search pages
    max_search_pages = data["totalPages"]
    # scrape all available pages if not max_scrape_pages or max_scrape_pages >= max_search_pages
    if not max_scrape_pages or max_scrape_pages > max_search_pages:
        max_scrape_pages = max_search_pages
    print(f"scraping search pagination, remaining ({max_scrape_pages - 1} more pages)")
    # add the remaining search pages to a scraping list
    other_pages = [
        client.get(str(first_page.url) + f"?page={page}")
        for page in range(2, max_scrape_pages + 1)
    ]
    # scrape the remaining search pages concurrently
    for response in asyncio.as_completed(other_pages):
        response = await response
        assert response.status_code == 200, "request has been blocked"
        # parse the data from the script tag
        data = parse_hidden_data(response)
        # append the data to the list after refining
        search_data.extend(parse_search_page(data))
    print(f"scraped ({len(search_data)}) from {url}")
    return search_data

import json
import asyncio
import jmespath
from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse
from typing import Dict, List, Optional

SCRAPFLY = ScrapflyClient(key="Your ScrapFly API key")
# shared scrape settings: Australian proxies and anti-scraping protection bypass
BASE_CONFIG = {"country": "AU", "asp": True}


def parse_search_page(data: Dict) -> List[Dict]:
    """refine search pages data"""
    if not data:
        return []
    data = data["listingsMap"]
    result = []
    # iterate over card items in the search data
    for key in data.keys():
        item = data[key]
        parsed_data = jmespath.search(
            """{
            id: id,
            listingType: listingType,
            listingModel: listingModel
            }""",
            item,
        )
        # exclude the skeletonImages key from the data, if present
        if parsed_data["listingModel"]:
            parsed_data["listingModel"].pop("skeletonImages", None)
        result.append(parsed_data)
    return result


def parse_hidden_data(response: ScrapeApiResponse):
    """parse json data from script tags"""
    selector = response.selector
    script = selector.xpath("//script[@id='__NEXT_DATA__']/text()").get()
    data = json.loads(script)
    return data["props"]["pageProps"]["componentProps"]


async def scrape_search(url: str, max_scrape_pages: Optional[int] = None) -> List[Dict]:
    """scrape property listings from search pages"""
    first_page = await SCRAPFLY.async_scrape(ScrapeConfig(url, **BASE_CONFIG))
    print(f"scraping search page {url}")
    data = parse_hidden_data(first_page)
    search_data = parse_search_page(data)
    # get the number of maximum search pages
    max_search_pages = data["totalPages"]
    # scrape all available pages if not max_scrape_pages or max_scrape_pages >= max_search_pages
    if not max_scrape_pages or max_scrape_pages > max_search_pages:
        max_scrape_pages = max_search_pages
    print(f"scraping search pagination, remaining ({max_scrape_pages - 1} more pages)")
    # add the remaining search pages to a scraping list
    other_pages = [
        ScrapeConfig(
            # paginate the search pages by adding a "?page" parameter at the end of the URL
            str(first_page.context["url"]) + f"?page={page}",
            **BASE_CONFIG,
        )
        for page in range(2, max_scrape_pages + 1)
    ]
    # scrape the remaining search pages concurrently
    async for response in SCRAPFLY.concurrent_scrape(other_pages):
        # parse the data from the script tag
        data = parse_hidden_data(response)
        # append the data to the list after refining
        search_data.extend(parse_search_page(data))
    print(f"scraped ({len(search_data)}) from {url}")
    return search_data

Here, we reuse the parse_hidden_data() function from earlier to extract the JSON data from the script tag. Then, the parse_search_page() function removes the unnecessary details using JMESPath. Next, we crawl over the search pages: we scrape the first search page and read the total number of available pages from it. Finally, we add the remaining search pages to a scraping list and scrape them concurrently.
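Before looking at the real output, the crawl logic itself can be checked offline. In this minimal sketch, `FAKE_PAGES` and `fetch_page()` are made-up stand-ins for the real requests plus parse_hidden_data(), and the hypothetical `pages_to_scrape()` mirrors the max_scrape_pages check in scrape_search(). It uses asyncio.gather() rather than as_completed(), which keeps the results in page order.

```python
import asyncio
from typing import Dict, List, Optional

# made-up "site": page number -> hidden data for that page (5 pages, 2 listings each)
FAKE_PAGES: Dict[int, dict] = {
    n: {"totalPages": 5, "listingsMap": {f"{n}-{i}": {"id": n * 100 + i} for i in range(2)}}
    for n in range(1, 6)
}


async def fetch_page(page: int) -> dict:
    """Stand-in for requesting a search page and running parse_hidden_data()."""
    await asyncio.sleep(0)
    return FAKE_PAGES[page]


def pages_to_scrape(total_pages: int, max_scrape_pages: Optional[int] = None) -> range:
    """Page numbers left after page 1, capped by max_scrape_pages when it is set."""
    last = min(total_pages, max_scrape_pages) if max_scrape_pages else total_pages
    return range(2, last + 1)


async def crawl(max_scrape_pages: Optional[int] = None) -> List[dict]:
    first = await fetch_page(1)
    listings = list(first["listingsMap"].values())
    # the first page tells us how many pages exist in total
    remaining = pages_to_scrape(first["totalPages"], max_scrape_pages)
    # fetch the rest concurrently; gather returns results in page order
    for data in await asyncio.gather(*(fetch_page(p) for p in remaining)):
        listings.extend(data["listingsMap"].values())
    return listings


if __name__ == "__main__":
    print(len(asyncio.run(crawl(max_scrape_pages=3))))  # 6
```

With max_scrape_pages=3, only pages 2 and 3 are requested after the first page, so 6 of the 10 fake listings are collected.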

The result is a list of property listing data from three search pages, similar to this:

Sample output

[

{

"id": 16754956,

"listingType": "listing",

"listingModel": {

"promoType": "premiumplus",

"url": "/317-55-queens-road-melbourne-vic-3000-16754956",

"images": [

"https://rimh2.domainstatic.com.au/SXfU4fEx8JoWy2uvdFvIaAI-eYk=/660x440/filters:format(jpeg):quality(80)/16754956_1_1_231120_072357-w3000-h2002",

"https://rimh2.domainstatic.com.au/NkkQtevg-5Ru_feyL39QmtO1gfA=/660x440/filters:format(jpeg):quality(80)/13013890_2_1_190321_053622-w3000-h2002",

"https://rimh2.domainstatic.com.au/BQlwroGo_nBdi_cwTJ9OBDwkSxg=/660x440/filters:format(jpeg):quality(80)/16754956_3_1_231120_072357-w2878-h1921",

"https://rimh2.domainstatic.com.au/wF6PD7BvXuR9KiPulNOS9YRFdpg=/660x440/filters:format(jpeg):quality(80)/13013890_3_1_190321_053622-w2878-h1921",

"https://rimh2.domainstatic.com.au/kNRsjFXUDI5n_IcNubVGGNxYhXM=/660x440/filters:format(jpeg):quality(80)/13013890_4_1_190321_053650-w3000-h2002",

"https://rimh2.domainstatic.com.au/hlbd5TKcUerkqDOJNnbWI0fOLzA=/660x440/filters:format(jpeg):quality(80)/13013890_5_1_190321_053650-w3000-h2002",

"https://rimh2.domainstatic.com.au/ucF8iRTfyXnZiNhwznxd7F92VTw=/660x440/filters:format(jpeg):quality(80)/13013890_6_1_190321_053650-w4134-h2756",

"https://rimh2.domainstatic.com.au/Yt8IctotQzRMzmBkCARqFl12DfY=/660x440/filters:format(jpeg):quality(80)/12823878_3_1_190103_043521-w440-h330",

"https://rimh2.domainstatic.com.au/LQrslg4tyTUafVXHV7I0D2OXHSo=/660x440/filters:format(jpeg):quality(80)/13013890_8_1_190321_053650-w4134-h2756"

],

"brandingAppearance": "light",

"price": "$620 pw",

"hasVideo": false,

"branding": {

"agencyId": 345,

"agents": [

{

"agentName": "Simon Koulouris",

"agentPhoto": null

}

],

"agentNames": "Simon Koulouris",

"brandLogo": "https://rimh2.domainstatic.com.au/1zCcz7Jq9n-5IG0HlindcwPAGkI=/170x60/filters:format(jpeg):quality(80)/https://images.domain.com.au/img/Agencys/345/logo_345.png?buster=2023-11-23",

"skeletonBrandLogo": "https://rimh2.domainstatic.com.au/PeWP7T-vRHfYKqkZ5q_1SG5eVuE=/200x70/filters:format(jpeg):quality(80):no_upscale()/https://images.domain.com.au/img/Agencys/345/logo_345.png?buster=2023-11-23",

"brandName": "Dingle Partners",

"brandColor": "#fff",

"agentPhoto": null,

"agentName": "Simon Koulouris"

},

"address": {

"street": "317/55 Queens Road",

"suburb": "MELBOURNE",

"state": "VIC",

"postcode": "3000",

"lat": -37.8478546,

"lng": 144.977615

},

"features": {

"beds": 2,

"baths": 1,

"parking": 1,

"propertyType": "ApartmentUnitFlat",

"propertyTypeFormatted": "Apartment / Unit / Flat",

"isRural": false,

"landSize": 0,

"landUnit": "m\u00b2",

"isRetirement": false

},

"inspection": {

"openTime": "2023-11-25T12:45:00",

"closeTime": "2023-11-25T13:00:00"

},

"auction": null,

"tags": {

"tagText": "New",

"tagClassName": "is-new"

},

"displaySearchPriceRange": null,

"enableSingleLineAddress": false

}

}

]
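The search output is deeply nested. If you want to track prices per suburb, as mentioned at the start of this guide, you may prefer one flat row per listing. Below is a minimal sketch; `flatten_listing()` is a hypothetical helper, the selected fields are our own choice, and prefixing the relative `listingModel.url` with https://www.domain.com.au is an assumption based on the property URLs used earlier. The sample item is a trimmed copy of the output above.

```python
from typing import Any, Dict, Optional

BASE_URL = "https://www.domain.com.au"  # assumption: listing URLs are relative to this host


def flatten_listing(item: Dict[str, Any]) -> Dict[str, Optional[Any]]:
    """Pick a few fields from one parse_search_page() item into a flat dict."""
    model = item.get("listingModel") or {}
    address = model.get("address") or {}
    features = model.get("features") or {}
    return {
        "id": item.get("id"),
        "url": BASE_URL + model["url"] if model.get("url") else None,
        "price": model.get("price"),
        "suburb": address.get("suburb"),
        "postcode": address.get("postcode"),
        "beds": features.get("beds"),
        "baths": features.get("baths"),
        "propertyType": features.get("propertyTypeFormatted"),
    }


# trimmed copy of the sample search item above
sample_item = {
    "id": 16754956,
    "listingType": "listing",
    "listingModel": {
        "url": "/317-55-queens-road-melbourne-vic-3000-16754956",
        "price": "$620 pw",
        "address": {"street": "317/55 Queens Road", "suburb": "MELBOURNE", "state": "VIC", "postcode": "3000"},
        "features": {"beds": 2, "baths": 1, "parking": 1, "propertyTypeFormatted": "Apartment / Unit / Flat"},
    },
}

print(flatten_listing(sample_item))
```

Rows in this shape can be passed directly to csv.DictWriter. Note that price is kept as the displayed text (for example "$620 pw"), since its format varies between listings.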

Awesome! We can successfully scrape real estate data from domain.com.au property and search pages. However, our scraper is very likely to get blocked after sending additional requests. Let's take a look at a solution!

Bypass Domain.com.au Blocking with Scrapfly

To scrape domain.com.au without getting blocked, we'll use ScrapFly.

ScrapFly provides web scraping, screenshot, and extraction APIs for data collection at scale.

- Anti-bot protection bypass - scrape web pages without blocking!

- Rotating residential proxies - prevent IP address and geographic blocks.

- JavaScript rendering - scrape dynamic web pages through cloud browsers.

- Full browser automation - control browsers to scroll, input and click on objects.

- Format conversion - scrape as HTML, JSON, Text, or Markdown.

- Python and Typescript SDKs, as well as Scrapy and no-code tool integrations.

We can use the asp feature to scrape domain.com.au without getting blocked:

import httpx
from parsel import Selector

response = httpx.get("some domain.com.au url")
selector = Selector(response.text)

# in ScrapFly SDK becomes
from scrapfly import ScrapeConfig, ScrapflyClient, ScrapeApiResponse

scrapfly_client = ScrapflyClient("Your ScrapFly API key")
result: ScrapeApiResponse = scrapfly_client.scrape(ScrapeConfig(
    # some domain.com.au url
    "https://www.domain.com.au/610-399-bourke-street-melbourne-vic-3000-2018835548",
    # we can select specific proxy country
    country="AU",
    # and enable anti scraping protection bypass
    asp=True,
    # allows JavaScript rendering similar to headless browsers
    render_js=True,
))
# use the built-in parsel selector
selector = result.selector

FAQ

To wrap up this guide, let's take a look at some frequently asked questions about domain.com.au web scraping.

Is it legal to scrape domain.com.au?

Yes, the data on domain.com.au is publicly available, and it's legal to scrape it as long as the scraper doesn't damage the website. For more details, refer to our is web scraping legal guide.

Is there an API for domain.com.au?

Currently, there is no public API for domain.com.au. However, scraping domain.com.au is straightforward, and you can build your own API with Python and FastAPI; for that, see our guide on turning web scrapers into APIs.

Are there alternatives for domain.com.au?

Yes, realestate.com.au is another popular website for real estate ads in Australia. Refer to our #realestate blog tag for its scraping guide and more real estate target websites.

Web Scraping Domain.com.au Summary

In this guide, we explained how to scrape domain.com.au, a popular Australian website for real estate ads.

We went through a step-by-step guide on scraping domain.com.au listings from property and search pages using Python, extracting the JSON data directly from script tags.